Version: 2.3.0

Preparation

Make sure the Hive packages are available on every Spark node.

Copy Hive's configuration file, hive-site.xml, into Spark's conf directory.
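The copy step can be sketched as a small shell helper. This is only an illustration: the function name copy_hive_site is hypothetical, and both directory arguments are assumptions that depend on where Hive and Spark are installed on your nodes.

```shell
# Sketch: copy hive-site.xml from a Hive conf directory into a Spark conf directory.
# Both directory arguments are assumptions; pass the paths used by your installation.
copy_hive_site() {
  # $1 = Hive conf directory, $2 = Spark conf directory
  if [ ! -f "$1/hive-site.xml" ]; then
    echo "hive-site.xml not found in $1" >&2
    return 1
  fi
  # Create the target directory if needed, then copy the file.
  mkdir -p "$2" && cp "$1/hive-site.xml" "$2/hive-site.xml"
}
```

A typical call might be copy_hive_site "$HIVE_HOME/conf" "$SPARK_HOME/conf", assuming both environment variables are set. Repeat it on every node that launches Spark SQL.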

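Configuration

The hive.metastore.warehouse.dir property in hive-site.xml has been deprecated since Spark 2.0.0. Use spark.sql.warehouse.dir instead to specify the default warehouse directory. The user running Spark needs read and write permission on that directory.

(In practice, though, hive.metastore.warehouse.dir still seems to take effect: tables created through spark-sql also appear under the directory it points to.)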
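One way to set spark.sql.warehouse.dir is through spark-defaults.conf. The helper below is a minimal sketch, not the only approach: the function name set_warehouse_dir is hypothetical, and the value hdfs:///user/hive/warehouse used in the usage note is an assumption based on the /user/hive/warehouse path that appears in the startup log.

```shell
# Sketch: add spark.sql.warehouse.dir to a spark-defaults.conf file unless it is already set.
# $1 = path to spark-defaults.conf, $2 = warehouse directory (assumed value, adjust as needed)
set_warehouse_dir() {
  touch "$1" || return 1
  # spark-defaults.conf accepts "key value" or "key=value"; match either form.
  if grep -q '^spark\.sql\.warehouse\.dir[[:space:]=]' "$1"; then
    echo "spark.sql.warehouse.dir is already set in $1" >&2
    return 0
  fi
  printf 'spark.sql.warehouse.dir %s\n' "$2" >> "$1"
}
```

For example: set_warehouse_dir "$SPARK_HOME/conf/spark-defaults.conf" hdfs:///user/hive/warehouse. The same property can also be passed per session with --conf spark.sql.warehouse.dir=... on the spark-sql command line.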

Start Hive

hive --service metastore &

hiveserver2 &

Start Spark

start-all.sh

start-history-server.sh

Start the Spark SQL client and verify

./spark-sql --master spark://node202.hmbank.com:7077

18/05/31 12:00:31 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

18/05/31 12:00:31 WARN HiveConf: HiveConf of name hive.server2.thrift.client.user does not exist

18/05/31 12:00:31 WARN HiveConf: HiveConf of name hive.server2.webui.port does not exist

18/05/31 12:00:31 WARN HiveConf: HiveConf of name hive.server2.webui.host does not exist

18/05/31 12:00:31 WARN HiveConf: HiveConf of name hive.server2.thrift.client.password does not exist

18/05/31 12:00:32 INFO metastore: Trying to connect to metastore with URI thrift://node203.hmbank.com:9083

18/05/31 12:00:32 INFO metastore: Connected to metastore.

18/05/31 12:00:32 INFO SessionState: Created local directory: /var/hive/iotmp/0d80c963-6383-42b5-89c6-9c82cbd4e15c_resources

18/05/31 12:00:32 INFO SessionState: Created HDFS directory: /tmp/hive/root/0d80c963-6383-42b5-89c6-9c82cbd4e15c

18/05/31 12:00:32 INFO SessionState: Created local directory: /var/hive/iotmp/hive/0d80c963-6383-42b5-89c6-9c82cbd4e15c

18/05/31 12:00:32 INFO SessionState: Created HDFS directory: /tmp/hive/root/0d80c963-6383-42b5-89c6-9c82cbd4e15c/_tmp_space.db

18/05/31 12:00:32 INFO SparkContext: Running Spark version 2.3.0

18/05/31 12:00:32 INFO SparkContext: Submitted application: SparkSQL::10.30.16.204

18/05/31 12:00:32 INFO SecurityManager: Changing view acls to: root

18/05/31 12:00:32 INFO SecurityManager: Changing modify acls to: root

18/05/31 12:00:32 INFO SecurityManager: Changing view acls groups to:

18/05/31 12:00:32 INFO SecurityManager: Changing modify acls groups to:

18/05/31 12:00:32 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); groups with view permissions: Set(); users with modify permissions: Set(root); groups with modify permissions: Set()

18/05/31 12:00:33 INFO Utils: Successfully started service 'sparkDriver' on port 33733.

18/05/31 12:00:33 INFO SparkEnv: Registering MapOutputTracker

18/05/31 12:00:33 INFO SparkEnv: Registering BlockManagerMaster

18/05/31 12:00:33 INFO BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information

18/05/31 12:00:33 INFO BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up

18/05/31 12:00:33 INFO DiskBlockManager: Created local directory at /tmp/blockmgr-f425c261-2aa0-4063-8fa2-2ff4f106d948

18/05/31 12:00:33 INFO MemoryStore: MemoryStore started with capacity 366.3 MB

18/05/31 12:00:33 INFO SparkEnv: Registering OutputCommitCoordinator

18/05/31 12:00:33 INFO Utils: Successfully started service 'SparkUI' on port 4040.

18/05/31 12:00:33 INFO SparkUI: Bound SparkUI to 0.0.0.0, and started at http://node204.hmbank.com:4040

18/05/31 12:00:33 INFO StandaloneAppClient$ClientEndpoint: Connecting to master spark://node202.hmbank.com:7077...

18/05/31 12:00:33 INFO TransportClientFactory: Successfully created connection to node202.hmbank.com/10.30.16.202:7077 after 30 ms (0 ms spent in bootstraps)

18/05/31 12:00:33 INFO StandaloneSchedulerBackend: Connected to Spark cluster with app ID app-20180531120033-0000

18/05/31 12:00:33 INFO Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 37444.

18/05/31 12:00:33 INFO NettyBlockTransferService: Server created on node204.hmbank.com:37444

18/05/31 12:00:33 INFO BlockManager: Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy

18/05/31 12:00:33 INFO BlockManagerMaster: Registering BlockManager BlockManagerId(driver, node204.hmbank.com, 37444, None)

18/05/31 12:00:33 INFO BlockManagerMasterEndpoint: Registering block manager node204.hmbank.com:37444 with 366.3 MB RAM, BlockManagerId(driver, node204.hmbank.com, 37444, None)

18/05/31 12:00:33 INFO BlockManagerMaster: Registered BlockManager BlockManagerId(driver, node204.hmbank.com, 37444, None)

18/05/31 12:00:33 INFO BlockManager: external shuffle service port = 7338

18/05/31 12:00:33 INFO BlockManager: Initialized BlockManager: BlockManagerId(driver, node204.hmbank.com, 37444, None)

18/05/31 12:00:33 INFO EventLoggingListener: Logging events to hdfs://hmcluster/user/spark/eventLog/app-20180531120033-0000

18/05/31 12:00:33 INFO Utils: Using initial executors = 0, max of spark.dynamicAllocation.initialExecutors, spark.dynamicAllocation.minExecutors and spark.executor.instances

18/05/31 12:00:34 INFO StandaloneSchedulerBackend: SchedulerBackend is ready for scheduling beginning after reached minRegisteredResourcesRatio: 0.0

18/05/31 12:00:34 INFO SharedState: loading hive config file: file:/usr/lib/apacheori/spark-2.3.0-bin-hadoop2.6/conf/hive-site.xml

18/05/31 12:00:34 INFO SharedState: Setting hive.metastore.warehouse.dir ('null') to the value of spark.sql.warehouse.dir ('file:/usr/lib/apacheori/spark-2.3.0-bin-hadoop2.6/bin/spark-warehouse').

18/05/31 12:00:34 INFO SharedState: Warehouse path is 'file:/usr/lib/apacheori/spark-2.3.0-bin-hadoop2.6/bin/spark-warehouse'.

18/05/31 12:00:34 INFO HiveUtils: Initializing HiveMetastoreConnection version 1.2.1 using Spark classes.

18/05/31 12:00:34 INFO HiveClientImpl: Warehouse location for Hive client (version 1.2.2) is file:/usr/lib/apacheori/spark-2.3.0-bin-hadoop2.6/bin/spark-warehouse

18/05/31 12:00:34 INFO metastore: Mestastore configuration hive.metastore.warehouse.dir changed from /user/hive/warehouse to file:/usr/lib/apacheori/spark-2.3.0-bin-hadoop2.6/bin/spark-warehouse

18/05/31 12:00:34 INFO metastore: Trying to connect to metastore with URI thrift://node203.hmbank.com:9083

18/05/31 12:00:34 INFO metastore: Connected to metastore.

18/05/31 12:00:34 INFO StateStoreCoordinatorRef: Registered StateStoreCoordinator endpoint

spark-sql> show databases;

18/05/31 12:02:22 INFO CodeGenerator: Code generated in 171.318399 ms

default

hivecluster

Time taken: 1.947 seconds, Fetched 2 row(s)

18/05/31 12:02:22 INFO SparkSQLCLIDriver: Time taken: 1.947 seconds, Fetched 2 row(s)

It works as expected.

Create a table and insert data

> create table spark2 (id int , seq int ,name string) using hive options(fileFormat 'parquet');

Time taken: 0.358 seconds

18/05/31 14:10:47 INFO SparkSQLCLIDriver: Time taken: 0.358 seconds

spark-sql>

> desc spark2;

id int NULL

seq int NULL

name string NULL

Time taken: 0.061 seconds, Fetched 3 row(s)

18/05/31 14:10:54 INFO SparkSQLCLIDriver: Time taken: 0.061 seconds, Fetched 3 row(s)

spark-sql> insert into spark2 values( 1,1, 'nn');

Query the table

spark-sql> select * from spark2;

18/05/31 14:12:08 INFO FileSourceStrategy: Pruning directories with:

18/05/31 14:12:08 INFO FileSourceStrategy: Post-Scan Filters:

18/05/31 14:12:08 INFO FileSourceStrategy: Output Data Schema: struct

18/05/31 14:12:08 INFO FileSourceScanExec: Pushed Filters:

18/05/31 14:12:08 INFO CodeGenerator: Code generated in 33.608151 ms

18/05/31 14:12:08 INFO MemoryStore: Block broadcast_6 stored as values in memory (estimated size 249.2 KB, free 365.9 MB)

18/05/31 14:12:08 INFO MemoryStore: Block broadcast_6_piece0 stored as bytes in memory (estimated size 24.6 KB, free 365.8 MB)

18/05/31 14:12:08 INFO BlockManagerInfo: Added broadcast_6_piece0 in memory on node204.hmbank.com:37444 (size: 24.6 KB, free: 366.2 MB)

18/05/31 14:12:08 INFO SparkContext: Created broadcast 6 from processCmd at CliDriver.java:376

18/05/31 14:12:08 INFO FileSourceScanExec: Planning scan with bin packing, max size: 4194304 bytes, open cost is considered as scanning 4194304 bytes.

18/05/31 14:12:09 INFO SparkContext: Starting job: processCmd at CliDriver.java:376

18/05/31 14:12:09 INFO DAGScheduler: Got job 5 (processCmd at CliDriver.java:376) with 1 output partitions

18/05/31 14:12:09 INFO DAGScheduler: Final stage: ResultStage 3 (processCmd at CliDriver.java:376)

18/05/31 14:12:09 INFO DAGScheduler: Parents of final stage: List()

18/05/31 14:12:09 INFO DAGScheduler: Missing parents: List()

18/05/31 14:12:09 INFO DAGScheduler: Submitting ResultStage 3 (MapPartitionsRDD[21] at processCmd at CliDriver.java:376), which has no missing parents

18/05/31 14:12:09 INFO MemoryStore: Block broadcast_7 stored as values in memory (estimated size 10.1 KB, free 365.8 MB)

18/05/31 14:12:09 INFO MemoryStore: Block broadcast_7_piece0 stored as bytes in memory (estimated size 4.6 KB, free 365.8 MB)

18/05/31 14:12:09 INFO BlockManagerInfo: Added broadcast_7_piece0 in memory on node204.hmbank.com:37444 (size: 4.6 KB, free: 366.2 MB)

18/05/31 14:12:09 INFO SparkContext: Created broadcast 7 from broadcast at DAGScheduler.scala:1039

18/05/31 14:12:09 INFO DAGScheduler: Submitting 1 missing tasks from ResultStage 3 (MapPartitionsRDD[21] at processCmd at CliDriver.java:376) (first 15 tasks are for partitions Vector(0))

18/05/31 14:12:09 INFO TaskSchedulerImpl: Adding task set 3.0 with 1 tasks

18/05/31 14:12:09 INFO TaskSetManager: Starting task 0.0 in stage 3.0 (TID 4, 10.30.16.202, executor 1, partition 0, ANY, 8395 bytes)

18/05/31 14:12:09 INFO BlockManagerInfo: Added broadcast_7_piece0 in memory on 10.30.16.202:36243 (size: 4.6 KB, free: 366.2 MB)

18/05/31 14:12:09 INFO BlockManagerInfo: Added broadcast_6_piece0 in memory on 10.30.16.202:36243 (size: 24.6 KB, free: 366.2 MB)

18/05/31 14:12:09 INFO TaskSetManager: Finished task 0.0 in stage 3.0 (TID 4) in 595 ms on 10.30.16.202 (executor 1) (1/1)

18/05/31 14:12:09 INFO TaskSchedulerImpl: Removed TaskSet 3.0, whose tasks have all completed, from pool

18/05/31 14:12:09 INFO DAGScheduler: ResultStage 3 (processCmd at CliDriver.java:376) finished in 0.603 s

18/05/31 14:12:09 INFO DAGScheduler: Job 5 finished: processCmd at CliDriver.java:376, took 0.607284 s

1 1 nn

Time taken: 0.793 seconds, Fetched 1 row(s)

18/05/31 14:12:09 INFO SparkSQLCLIDriver: Time taken: 0.793 seconds, Fetched 1 row(s)
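The interactive session above can also be replayed non-interactively by putting the statements in a file and passing it to spark-sql with -f. The following is a sketch under stated assumptions: the file path /tmp/verify_spark2.sql and the SQL_FILE override are hypothetical, and "if not exists" was added to the create statement so the script can be re-run (each run still inserts one more row).

```shell
# Write the statements from this walkthrough to a script file.
# /tmp/verify_spark2.sql is a hypothetical path chosen for illustration.
sql_file="${SQL_FILE:-/tmp/verify_spark2.sql}"
cat > "$sql_file" <<'EOF'
show databases;
create table if not exists spark2 (id int, seq int, name string) using hive options(fileFormat 'parquet');
insert into spark2 values (1, 1, 'nn');
select * from spark2;
EOF

# Run the file only when spark-sql is on PATH; the master URL is the one used above.
if command -v spark-sql >/dev/null 2>&1; then
  spark-sql --master spark://node202.hmbank.com:7077 -f "$sql_file"
else
  echo "spark-sql not found on PATH; wrote $sql_file only" >&2
fi
```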

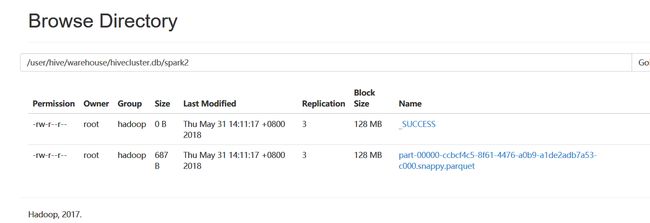

Check Hive's warehouse directory on HDFS:

The data has been written to Hive correctly.

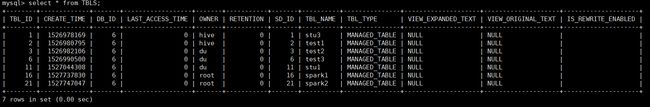
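To repeat the HDFS check from the command line, it helps to know where a table's files should be. The sketch below follows Hive's usual layout, where tables in the default database sit directly under the warehouse directory and tables in other databases sit under a name.db subdirectory. The function name table_location is hypothetical, and /user/hive/warehouse is taken from the startup log as an assumed warehouse root.

```shell
# Sketch: print the expected directory of a managed table under the Hive warehouse.
# $1 = warehouse root, $2 = database name, $3 = table name
table_location() {
  # Strip one trailing slash from the warehouse root, if present.
  if [ "$2" = "default" ]; then
    printf '%s/%s\n' "${1%/}" "$3"
  else
    printf '%s/%s.db/%s\n' "${1%/}" "$2" "$3"
  fi
}
```

For the table created above, hdfs dfs -ls "$(table_location /user/hive/warehouse default spark2)" should list the Parquet files, provided the warehouse root matches your configuration.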

Check how the table is stored in the Hive metastore database:

Done!