Categorical Variables: Machine-Translated Notes on the Kaggle Machine Learning Tutorial

Introduction

A categorical variable takes only a limited number of values.

Consider a survey that asks how often you eat breakfast and provides four options: “Never”, “Rarely”, “Most days”, or “Every day”. In this case, the data is categorical, because responses fall into a fixed set of categories.

If people responded to a survey about what brand of car they owned, the responses would fall into categories like “Honda”, “Toyota”, and “Ford”. In this case, the data is also categorical.

You will get an error if you try to plug these variables into most machine learning models in Python without preprocessing them first. In this tutorial, we’ll compare three approaches that you can use to prepare your categorical data.

Three Approaches

1) Drop Categorical Variables

The easiest approach to dealing with categorical variables is to simply remove them from the dataset. This approach will only work well if the columns do not contain useful information.

2) Label Encoding

Label encoding assigns each unique value to a different integer.

This approach assumes an ordering of the categories:

“Never” (0) < “Rarely” (1) < “Most days” (2) < “Every day” (3).

This assumption makes sense in this example, because there is an indisputable ranking to the categories. Not all categorical variables have a clear ordering in the values, but we refer to those that do as ordinal variables. For tree-based models (like decision trees and random forests), you can expect label encoding to work well with ordinal variables.
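As a small illustration of the ordering above, the breakfast answers can be encoded with an explicit mapping. This is only a sketch: the `responses` data and the `breakfast_order` dictionary are made up for this example and do not come from the tutorial.

```python
import pandas as pd

# Hypothetical survey responses (illustrative data, not from the tutorial)
responses = pd.Series(["Rarely", "Every day", "Never", "Most days", "Rarely"])

# Explicit mapping that preserves Never < Rarely < Most days < Every day
breakfast_order = {"Never": 0, "Rarely": 1, "Most days": 2, "Every day": 3}

# Replace each answer with its rank
encoded = responses.map(breakfast_order)
print(encoded.tolist())  # [1, 3, 0, 2, 1]
```

Writing the mapping by hand keeps the integers consistent with the real ranking of the answers.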

3) One-Hot Encoding

One-hot encoding creates new columns indicating the presence (or absence) of each possible value in the original data. To understand this, we’ll work through an example.

In the original dataset, “Color” is a categorical variable with three categories: “Red”, “Yellow”, and “Green”. The corresponding one-hot encoding contains one column for each possible value, and one row for each row in the original dataset. Wherever the original value was “Red”, we put a 1 in the “Red” column; if the original value was “Yellow”, we put a 1 in the “Yellow” column, and so on.

In other words, the values of the feature are split into a DataFrame made up of 0s and 1s.
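The “Color” example can be reproduced on a toy column. The sketch below uses `pandas.get_dummies` rather than the scikit-learn encoder used later in these notes, and the five sample rows are invented for illustration.

```python
import pandas as pd

# Toy "Color" column (illustrative rows, not from the tutorial dataset)
colors = pd.DataFrame({"Color": ["Red", "Red", "Yellow", "Green", "Yellow"]})

# One new 0/1 column per distinct color, one row per original row
one_hot = pd.get_dummies(colors["Color"], dtype=int)
print(one_hot)
```

Each row contains exactly one 1, in the column that matches its original color.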

In contrast to label encoding, one-hot encoding does not assume an ordering of the categories. Thus, you can expect this approach to work particularly well if there is no clear ordering in the categorical data (e.g., “Red” is neither more nor less than “Yellow”). We refer to categorical variables without an intrinsic ranking as nominal variables.

One-hot encoding generally does not perform well if the categorical variable takes on a large number of values (i.e., you generally won’t use it for variables taking more than 15 different values).

This is easy to understand: the more features the data has, the more cumbersome the computation becomes.

Example

As in the previous tutorial, we will work with the Melbourne Housing dataset.

We won’t focus on the data loading step. Instead, you can imagine you are at a point where you already have the training and validation data in X_train, X_valid, y_train, and y_valid.

X_train.head()

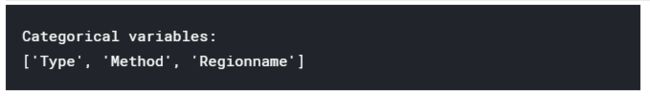

Next, we obtain a list of all of the categorical variables in the training data.

We do this by checking the data type (or dtype) of each column. The object dtype indicates a column has text (there are other things it could theoretically be, but that’s unimportant for our purposes). For this dataset, the columns with text indicate categorical variables.

# Get list of categorical variables

s = (X_train.dtypes == 'object')

object_cols = list(s[s].index)

print("Categorical variables:")

print(object_cols)

Define Function to Measure Quality of Each Approach

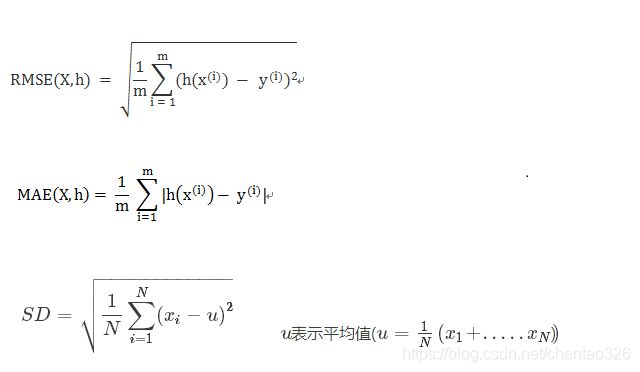

We define a function score_dataset() to compare the three different approaches to dealing with categorical variables. This function reports the mean absolute error (MAE) from a random forest model. In general, we want the MAE to be as low as possible!
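A minimal sketch of what such a function could look like is given here. The text above only says that it fits a random forest and returns the MAE, so the hyperparameters (`n_estimators=100`, `random_state=0`) are assumptions made for reproducibility.

```python
from sklearn.ensemble import RandomForestRegressor
from sklearn.metrics import mean_absolute_error


def score_dataset(X_train, X_valid, y_train, y_valid):
    """Fit a random forest on the training data and return the validation MAE."""
    # Assumed settings: 100 trees and a fixed seed so repeated runs agree
    model = RandomForestRegressor(n_estimators=100, random_state=0)
    model.fit(X_train, y_train)
    preds = model.predict(X_valid)
    return mean_absolute_error(y_valid, preds)
```

Because the model and seed are fixed, any difference in the returned score comes from how the features were prepared.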

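RMSE

Root Mean Square Error

The square root of the sum of squared deviations between the observed values and the true values, divided by the number of observations m.

It measures how far the observed values deviate from the true values.

MAE

Mean Absolute Error

The mean of the absolute errors.

It gives a more direct picture of the actual size of the prediction errors.

Standard Deviation

The arithmetic square root of the variance.

It measures how spread out a set of numbers is.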
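The three quantities can be computed by hand on a few numbers. The values in `y_true` and `y_pred` are invented for illustration, and the standard deviation here is the population version (dividing by the number of values).

```python
import math
import statistics

# Illustrative true values and predictions (not from the tutorial)
y_true = [3.0, 5.0, 2.0, 7.0]
y_pred = [2.0, 5.0, 4.0, 8.0]

# Per-sample errors: prediction minus truth
errors = [p - t for p, t in zip(y_pred, y_true)]

# MAE: mean of the absolute errors
mae = sum(abs(e) for e in errors) / len(errors)

# RMSE: square root of the mean of the squared errors
rmse = math.sqrt(sum(e * e for e in errors) / len(errors))

# Standard deviation: spread of the true values around their own mean
std = statistics.pstdev(y_true)

print(mae)   # 1.0
print(rmse)
print(std)
```

MAE and RMSE compare predictions with the truth, while the standard deviation only describes the spread of a single set of numbers.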

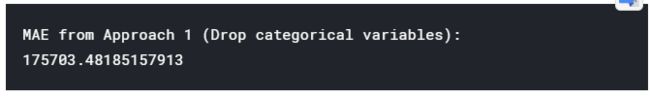

Score from Approach 1 (Drop Categorical Variables)

We drop the object columns with the select_dtypes() method.

drop_X_train = X_train.select_dtypes(exclude=['object'])

drop_X_valid = X_valid.select_dtypes(exclude=['object'])

print("MAE from Approach 1 (Drop categorical variables):")

print(score_dataset(drop_X_train, drop_X_valid, y_train, y_valid))

Score from Approach 2 (Label Encoding)

Scikit-learn has a LabelEncoder class that can be used to get label encodings. We loop over the categorical variables and apply the label encoder separately to each column.

from sklearn.preprocessing import LabelEncoder

# Make copy to avoid changing original data

label_X_train = X_train.copy()

label_X_valid = X_valid.copy()

# Apply label encoder to each column with categorical data

label_encoder = LabelEncoder()

for col in object_cols:

    label_X_train[col] = label_encoder.fit_transform(X_train[col])

    label_X_valid[col] = label_encoder.transform(X_valid[col])

print("MAE from Approach 2 (Label Encoding):")

print(score_dataset(label_X_train, label_X_valid, y_train, y_valid))

In the code cell above, for each column, we assign each unique value to a different integer without regard to any real ranking (LabelEncoder simply numbers the sorted unique values). This is a common approach that is simpler than providing custom labels; however, we can expect an additional boost in performance if we provide better-informed labels for all ordinal variables.
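To see which integers LabelEncoder actually assigns, here is a small sketch on the breakfast answers from earlier. The `answers` list is illustrative only.

```python
from sklearn.preprocessing import LabelEncoder

# The four breakfast answers, listed in their natural order
answers = ["Never", "Rarely", "Most days", "Every day"]

encoder = LabelEncoder()
codes = encoder.fit_transform(answers)

# classes_ holds the sorted unique values; each code is an index into it
print(list(encoder.classes_))  # ['Every day', 'Most days', 'Never', 'Rarely']
print(codes.tolist())          # [2, 3, 1, 0]
```

The assigned integers follow alphabetical order, so “Every day” gets 0 and “Rarely” gets 3. That does not match the real ranking, which is why custom labels can help for ordinal variables.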

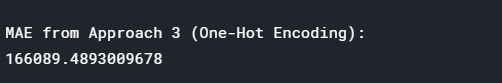

Score from Approach 3 (One-Hot Encoding)

We use the OneHotEncoder class from scikit-learn to get one-hot encodings. There are a number of parameters that can be used to customize its behavior.

We set handle_unknown='ignore' to avoid errors when the validation data contains classes that aren’t represented in the training data, and setting sparse=False ensures that the encoded columns are returned as a numpy array (instead of a sparse matrix). In scikit-learn 1.2 and later, the sparse parameter is named sparse_output.

To use the encoder, we supply only the categorical columns that we want to be one-hot encoded. For instance, to encode the training data, we supply X_train[object_cols]. (object_cols in the code cell below is a list of the column names with categorical data, and so X_train[object_cols] contains all of the categorical data in the training set.)

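The effect of handle_unknown='ignore' can be checked on a toy column. In this sketch the `train` and `valid` lists are invented, and the encoder keeps its default sparse output, converted with `.toarray()`, so the example does not depend on the name of the sparse parameter.

```python
from sklearn.preprocessing import OneHotEncoder

# Toy single-column data; "Blue" never appears in the training rows
train = [["Red"], ["Green"], ["Red"]]
valid = [["Green"], ["Blue"]]

encoder = OneHotEncoder(handle_unknown="ignore")
encoder.fit(train)

# Default output is a sparse matrix; convert it to a dense array for display
encoded_valid = encoder.transform(valid).toarray()

print(encoder.categories_[0].tolist())  # ['Green', 'Red']
print(encoded_valid.tolist())           # [[1.0, 0.0], [0.0, 0.0]]
```

The unseen value “Blue” becomes a row of zeros instead of raising an error.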
from sklearn.preprocessing import OneHotEncoder

# Apply one-hot encoder to each column with categorical data

# Note: in scikit-learn 1.2 and later, use sparse_output=False instead of sparse=False

OH_encoder = OneHotEncoder(handle_unknown='ignore', sparse=False)

OH_cols_train = pd.DataFrame(OH_encoder.fit_transform(X_train[object_cols]))

OH_cols_valid = pd.DataFrame(OH_encoder.transform(X_valid[object_cols]))

# One-hot encoding removed index; put it back

OH_cols_train.index = X_train.index

OH_cols_valid.index = X_valid.index

# Remove categorical columns (will replace with one-hot encoding)

num_X_train = X_train.drop(object_cols, axis=1)

num_X_valid = X_valid.drop(object_cols, axis=1)

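# Add one-hot encoded columns to numerical features

OH_X_train = pd.concat([num_X_train, OH_cols_train], axis=1)

OH_X_valid = pd.concat([num_X_valid, OH_cols_valid], axis=1)

# Newer scikit-learn versions require all column names to be strings

OH_X_train.columns = OH_X_train.columns.astype(str)

OH_X_valid.columns = OH_X_valid.columns.astype(str)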

print("MAE from Approach 3 (One-Hot Encoding):")

print(score_dataset(OH_X_train, OH_X_valid, y_train, y_valid))

Which approach is best?

In this case, dropping the categorical columns (Approach 1) performed worst, since it had the highest MAE score. As for the other two approaches, since the returned MAE scores are so close in value, there doesn’t appear to be any meaningful benefit to one over the other.

In general, one-hot encoding (Approach 3) performs best and dropping the categorical columns (Approach 1) performs worst, but this varies on a case-by-case basis.

Original site:

https://www.kaggle.com/alexisbcook/categorical-variables