ubuntu20.04+3090+tf1/tf2+pytorch+keras: complete installation walkthrough

This mainly follows this article, with changes for the problems I ran into myself.

py37 or py38

cuda11.1

tf-nightly-gpu==2.6.0.dev20210507

pytorch1.8

keras2.3

- Install gcc

sudo apt install build-essential

gcc -v  # check the gcc version

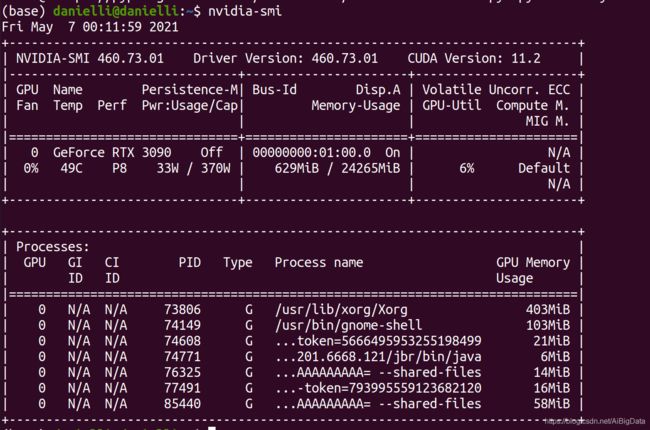

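- Download the matching GPU driver and CUDA from the official site (the installer below is for CUDA 11.1; CUDA is installed here only to supply a file that tf is missing):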

wget https://developer.download.nvidia.com/compute/cuda/11.1.0/local_installers/cuda_11.1.0_455.23.05_linux.run

sudo sh cuda_11.1.0_455.23.05_linux.run

- Install Anaconda and switch to a mirror

wget https://repo.anaconda.com/archive/Anaconda3-5.2.0-Linux-x86_64.sh

bash Anaconda3-5.2.0-Linux-x86_64.sh

Anaconda is now installed and ready to use.

vim ~/.bashrc

export PATH=/home/danielli/anaconda3/bin:$PATH  # add this line at the end of the file

source ~/.bashrc
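After reloading `~/.bashrc`, it is worth confirming that the Anaconda `bin` directory really comes first on `PATH`, otherwise the system `python` and `pip` may still be picked up. A minimal sketch of that check; `is_first_on_path` is a hypothetical helper name, and the sample `PATH` value is made up for illustration:

```python
import os


def is_first_on_path(directory: str, path_value: str) -> bool:
    """Return True if directory is the first entry of a PATH-style string."""
    entries = [entry for entry in path_value.split(os.pathsep) if entry]
    if not entries:
        return False
    return os.path.normpath(entries[0]) == os.path.normpath(directory)


conda_bin = "/home/danielli/anaconda3/bin"  # install path used in this post

# Stand-in PATH value, built the same way the export line builds it.
sample_path = os.pathsep.join([conda_bin, "/usr/local/bin", "/usr/bin"])
print(is_first_on_path(conda_bin, sample_path))

# The same check against the PATH of the current process.
print(is_first_on_path(conda_bin, os.environ.get("PATH", "")))
```

If the second check prints `False`, the export line was probably not picked up by the current shell yet.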

conda config --add channels https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main/

conda config --add channels https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/free/

conda config --add channels https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud/conda-forge

conda config --add channels https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud/msys2/

conda config --add channels https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud/pytorch/

conda config --set show_channel_urls yes

Then run vim ~/.condarc and delete the defaults entry.
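The manual edit of `~/.condarc` can also be scripted. A minimal sketch, assuming the file uses the plain list layout that `conda config --add channels` writes (one `- entry` per line); `drop_defaults` is a hypothetical helper, not part of conda, and the sample text is shortened to two channels:

```python
def drop_defaults(condarc_text: str) -> str:
    """Return condarc_text without any `- defaults` list entry."""
    kept = [
        line
        for line in condarc_text.splitlines()
        if line.strip() != "- defaults"
    ]
    return "\n".join(kept) + "\n"


# Sample input in the layout conda is assumed to write.
sample = (
    "channels:\n"
    "  - https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud/pytorch/\n"
    "  - https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main/\n"
    "  - defaults\n"
    "show_channel_urls: true\n"
)
cleaned = drop_defaults(sample)
print(cleaned)
```

To apply it to the real file, read `~/.condarc` as text, pass it through the function, and write the result back, keeping a backup copy first.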

- Create the virtual environments, usually py37 or py38 (everything below is done inside a virtual environment)

# The environment below is for TF1 + pytorch + keras

conda create -n py38 python==3.8

conda activate py38

# The environment below is for TF2 + pytorch + keras

conda create -n py38_2 python==3.8

conda activate py38_2

- Install pytorch

Download the CUDA 11.1 wheel from the official site:

wget https://download.pytorch.org/whl/cu111/torch-1.8.1%2Bcu111-cp38-cp38-linux_x86_64.whl

pip install torch-1.8.1+cu111-cp38-cp38-linux_x86_64.whl  # run this in the directory that contains the wheel
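The wheel filename itself says which CUDA build and which Python version it targets, and the `%2B` in the download URL is just a URL-encoded `+`. A minimal sketch that decodes the name and splits it into its fields; `parse_wheel_name` is a hypothetical helper that assumes a wheel name without the optional build tag (five dash-separated fields):

```python
import sys
from urllib.parse import unquote


def parse_wheel_name(filename: str) -> dict:
    """Split a wheel filename (without build tag) into its five fields."""
    stem = filename[: -len(".whl")] if filename.endswith(".whl") else filename
    name, version, python_tag, abi_tag, platform_tag = stem.split("-")
    return {
        "name": name,
        "version": version,
        "python_tag": python_tag,
        "abi_tag": abi_tag,
        "platform_tag": platform_tag,
    }


url_name = "torch-1.8.1%2Bcu111-cp38-cp38-linux_x86_64.whl"
local_name = unquote(url_name)  # "%2B" in the URL decodes to "+"
info = parse_wheel_name(local_name)

# Tag of the interpreter running this script, e.g. "cp38" for CPython 3.8.
current_tag = f"cp{sys.version_info.major}{sys.version_info.minor}"

print(local_name)
print(info)
print("matches this interpreter:", info["python_tag"] == current_tag)
```

Here `cu111` in the version marks the CUDA 11.1 build, and `cp38` means the wheel only installs into a Python 3.8 environment such as py38 or py38_2.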

- Install the latest tf2 (in the py38_2 virtual environment)

This one has to be installed again.

# No domestic (China) mirror is used here; installing through one kept failing

pip install tf-nightly-gpu

pip install tf-nightly

cp /usr/local/cuda-11.2/targets/x86_64-linux/lib/libcusolver.so.11.0.2.68 /home/danielli/anaconda3/envs/py38/lib


mv /home/danielli/anaconda3/envs/py38/lib/libcusolver.so.11.0.2.68 /home/danielli/anaconda3/envs/py38/lib/libcusolver.so.11.0
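The copy-and-rename step above can also be done in a single call from Python. A minimal sketch using only the standard library; `copy_lib_as` is a hypothetical helper, and the demonstration runs on throwaway stand-in files in a temporary directory rather than on the real CUDA paths:

```python
import shutil
import tempfile
from pathlib import Path


def copy_lib_as(src: Path, dest_dir: Path, new_name: str) -> Path:
    """Copy src into dest_dir under new_name and return the new path."""
    dest_dir.mkdir(parents=True, exist_ok=True)
    target = dest_dir / new_name
    shutil.copy2(src, target)  # copies file contents and metadata
    return target


# Demonstration with stand-in files; nothing outside the temp dir is touched.
with tempfile.TemporaryDirectory() as tmp:
    src = Path(tmp) / "cuda_lib" / "libcusolver.so.11.0.2.68"
    src.parent.mkdir()
    src.write_bytes(b"stand-in for the real shared library")

    copied = copy_lib_as(src, Path(tmp) / "env_lib", "libcusolver.so.11.0")
    copied_name = copied.name
    copied_ok = copied.read_bytes() == src.read_bytes()
    print(copied_name, copied_ok)
```

For the real install, the source and destination would be the paths from the cp and mv commands above.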

- Install tf1.15 (in the py38 virtual environment)

pip install nvidia-pyindex -i http://pypi.douban.com/simple --trusted-host pypi.douban.com

pip install nvidia-tensorflow -i http://pypi.douban.com/simple --trusted-host pypi.douban.com

- Install keras2.3

pip install keras==2.3 -i http://pypi.douban.com/simple --trusted-host pypi.douban.com

- Test

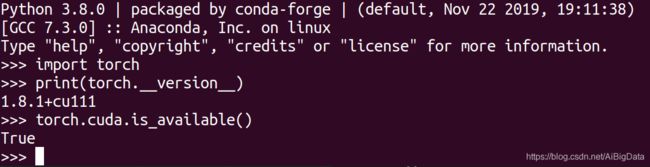

pytorch

import torch

print(torch.__version__)

torch.cuda.is_available()

tensorflow-2.6.0-dev20210507 or 1.15.5

import os

os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'  # hide INFO and WARNING logs; must be set before importing tensorflow

import tensorflow as tf

print('GPU', tf.test.is_gpu_available())

a = tf.constant(2.0)

b = tf.constant(4.0)

print(a + b)

keras (tensorflow1)

Check whether keras is using the GPU:

from keras import backend as K

K.tensorflow_backend._get_available_gpus()

keras (tensorflow2)

Check whether keras is using the GPU:

import tensorflow.python.keras.backend as K

K._get_available_gpus()
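Because the two environments hold different TensorFlow versions, it can help to first confirm which frameworks the active environment can import at all before running the GPU checks above. A minimal sketch using only the standard library; `importable` is a hypothetical helper, and it only looks the modules up without importing them:

```python
import importlib.util


def importable(module_names):
    """Map each top-level module name to True if it can be located."""
    return {
        name: importlib.util.find_spec(name) is not None
        for name in module_names
    }


status = importable(["torch", "tensorflow", "keras"])
for name, found in status.items():
    print(f"{name}: {'found' if found else 'missing'}")
```

A `missing` entry usually means the wrong environment is active or the corresponding pip step did not finish.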

Postscript: there is no need to set up cuda and cudnn separately; doing everything inside the virtual environment is enough.