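Flink + Iceberg: Environment Setup and Troubleshooting

Overview

As a rising star of real-time computing, Flink is being adopted by more and more companies. Its unified stream and batch processing removes the need to build separate real-time and offline platforms: a single Flink deployment covers both, which lowers cost. Combined with a data lake, Flink can serve both real-time and offline data warehouse needs: build one data lake, store diverse data in it, and support both offline and real-time queries. The main data lake options today are Hudi and Iceberg. Hudi is the relatively mature one, aimed mainly at incremental data processing; it is tightly coupled with Spark, and Flink with Hudi is not yet widely used. Iceberg is a newcomer that offers high-performance analytics and reliable data management, and it currently integrates comparatively well with Flink.

Environment setup

Environment: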

hadoop 2.7.7

hive 2.3.6

Flink 1.11.3

iceberg 0.11.1

jdk 1.8

macOS

Download the software

Hadoop: https://archive.apache.org/dist/hadoop/core/hadoop-2.7.7/

Hive: https://archive.apache.org/dist/hive/hive-2.3.6/

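Flink: https://mirrors.tuna.tsinghua.edu.cn/apache/flink/flink-1.13.0/flink-1.13.0-bin-scala_2.11.tgz (note: this link points to 1.13.0, while the rest of this guide uses 1.11.3; older releases are kept in the Apache archive at https://archive.apache.org/dist/flink/)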

Iceberg: https://repo.maven.apache.org/maven2/org/apache/iceberg/iceberg-flink-runtime/0.11.1/

Check the environment

Installation and configuration

Install the software

Extract the Hadoop archive:

tar -xvf hadoop-2.7.7.tar.gz -C /Users/xxx/work

Extract the Hive archive:

tar -xvf apache-hive-2.3.6-bin.tar.gz -C /Users/xxx/work/hadoop-2.7.7

Rename it:

cd /Users/xxx/work/hadoop-2.7.7/

mv apache-hive-2.3.6-bin hive

Extract the Flink archive:

tar -xvf flink-1.11.3-bin-scala_2.11.tgz -C /Users/xxx/work

Configure environment variables

Open the shell profile (on macOS):

cd ~

vim .bash_profile

Add the environment variables:

export HADOOP_HOME=/Users/xxx/work/hadoop-2.7.7

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

export HADOOP_HDFS_HOME=$HADOOP_HOME

export HIVE_HOME=/Users/xxx/work/hadoop-2.7.7/hive

export PATH=$PATH:$HADOOP_HOME/bin:$HIVE_HOME/bin:$HIVE_HOME/conf

Apply the changes with source:

source .bash_profile

Verify that the configuration works:

xxx@jiacunxu ~ % hadoop version

Hadoop 2.7.7

Subversion Unknown -r c1aad84bd27cd79c3d1a7dd58202a8c3ee1ed3ac

Compiled by stevel on 2018-07-18T22:47Z

Compiled with protoc 2.5.0

xxx@jiacunxu ~ % hive

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/Users/xxx/work/hadoop-2.7.7/hive/lib/log4j-slf4j-impl-2.6.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/Users/xxx/work/hadoop-2.7.7/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Logging initialized using configuration in file:/Users/xxx/work/hadoop-2.7.7/hive/conf/hive-log4j2.properties Async: true

Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

hive>

Output like the above means the hadoop and hive environment variables are configured correctly and have taken effect.
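If either command is not found, it can help to confirm what is actually on PATH before digging further. The following is a minimal sketch, assuming a POSIX shell; `check_cmds` is a hypothetical helper name, not something shipped with Hadoop or Hive:

```shell
#!/bin/sh
# Report whether each named command can be found on PATH.
# Returns 0 if all were found, 1 if at least one is missing.
check_cmds() {
    missing=0
    for cmd in "$@"; do
        if command -v "$cmd" >/dev/null 2>&1; then
            echo "found: $cmd"
        else
            echo "missing: $cmd"
            missing=1
        fi
    done
    return "$missing"
}

# Check the two commands this guide relies on.
check_cmds hadoop hive || echo "check HADOOP_HOME, HIVE_HOME and PATH in ~/.bash_profile"
```

A missing entry usually means the profile was not sourced in the current terminal or one of the export lines has a wrong path.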

Configure Hadoop

Go to the Hadoop configuration directory:

cd /Users/xxx/work/hadoop-2.7.7/etc/hadoop

Configure hadoop-env.sh by setting the following line:

export JAVA_HOME=/Library/Java/JavaVirtualMachines/jdk1.8.0_281.jdk/Contents/Home

Configure core-site.xml:

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost:9000</value>

</property>

</configuration>

Configure hdfs-site.xml:

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/Users/xxx/hadoop/hdfs/namenode</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/Users/xxx/hadoop/hdfs/datanode</value>

</property>

</configuration>

Format HDFS:

hdfs namenode -format

Start Hadoop:

cd /Users/xxx/work/hadoop-2.7.7/sbin

./start-all.sh

Check that it started correctly:

xxx@xxx sbin % jps

2210 NameNode

2294 DataNode

2599 NodeManager

2397 SecondaryNameNode

11085 Launcher

2510 ResourceManager

9774 RunJar

If NameNode and DataNode appear in the list, HDFS has started normally.
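The same check can be scripted. This is a rough sketch, assuming jps prints one "<pid> <name>" pair per line as in the output above; `has_daemons` is a hypothetical helper, and the sample text stands in for real jps output so the snippet runs anywhere:

```shell
#!/bin/sh
# Succeed only if every required daemon name appears in the given jps output.
has_daemons() {
    jps_output=$1
    shift
    for daemon in "$@"; do
        # Compare against the whole second field so that
        # "SecondaryNameNode" does not count as "NameNode".
        if ! printf '%s\n' "$jps_output" |
            awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
            echo "not running: $daemon"
            return 1
        fi
    done
    return 0
}

# Sample output for illustration; on a real machine pass "$(jps)" instead.
sample='2210 NameNode
2294 DataNode
2397 SecondaryNameNode'
has_daemons "$sample" NameNode DataNode && echo "HDFS daemons are up"
```

On a live system the call would be `has_daemons "$(jps)" NameNode DataNode`.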

Configure Hive

Create the HDFS directories:

hdfs dfs -mkdir -p /user/hive/warehouse

hdfs dfs -mkdir /tmp

hdfs dfs -chmod g+w /user/hive/warehouse

hdfs dfs -chmod g+w /tmp

Edit the Hive configuration

The Hive metastore uses embedded Derby.

Configure hive-site.xml:

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:derby:;databaseName=/Users/xxx/work/hadoop-2.7.7/hive/bin/metastore_db;create=true</value>

</property>

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/user/hive/warehouse</value>

<description>location of default database for the warehouse</description>

</property>

<property>

<name>hive.metastore.uris</name>

<value/>

<description>Thrift URI for the remote metastore. Used by metastore client to connect to remote metastore.</description>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>org.apache.derby.jdbc.EmbeddedDriver</value>

</property>

<property>

<name>javax.jdo.PersistenceManagerFactoryClass</name>

<value>org.datanucleus.api.jdo.JDOPersistenceManagerFactory</value>

</property>

<property>

<name>hive.metastore.schema.verification</name>

<value>false</value>

</property>

<property>

<name>datanucleus.schema.autoCreateAll</name>

<value>true</value>

</property>

</configuration>

Configure hive-env.sh:

HADOOP_HOME=/Users/xxx/work/hadoop-2.7.7

Create the Hive metastore schema:

schematool -initSchema -dbType derby --verbose

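If initialization fails, check the hive-schema-2.3.0.derby.sql file under hive/scripts/metastore/upgrade/derby. (With Hive 2.3.6 it succeeded for me on the first try; with 2.3.4 it failed.)

On success, a metastore_db directory is created under the bin directory. To rerun the command above, delete metastore_db manually first; otherwise it reports an error.

Start the Hive metastore service: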

hive --service metastore &

Check that it started:

xxx@xxx derby % lsof -i:9083

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

java 9774 xxx 580u IPv4 0xc88e6bed23c31537 0t0 TCP *:9083 (LISTEN)

Port 9083 is listening, so the metastore service started normally.
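Because the metastore is started in the background, the port may not be open the instant the command returns. A small polling helper can cover that gap. This is only a sketch: `wait_for` is a hypothetical name, and the lsof call in the comment is the same check as above (it exits non-zero while nothing is using the port):

```shell
#!/bin/sh
# Poll a command once per second until it succeeds or the attempts run out.
# Usage: wait_for <attempts> <command> [args...]
wait_for() {
    attempts=$1
    shift
    while [ "$attempts" -gt 0 ]; do
        if "$@" >/dev/null 2>&1; then
            return 0
        fi
        attempts=$((attempts - 1))
        sleep 1
    done
    return 1
}

# Example (commented out so the snippet runs without a metastore):
# wait_for 30 lsof -i:9083 && echo "metastore is up on port 9083"
```

If the wait times out, the metastore log is the next place to look.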

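Configure Flink

Put iceberg-flink-runtime-0.11.1.jar and flink-sql-connector-hive-2.3.6_2.11-1.11.0.jar into Flink's lib directory; they are needed to start the Flink SQL client and work with Iceberg. Flink with Iceberg also depends on a number of other jars, which must be placed in lib as well or the client will not start. I won't list them one by one; see the screenshot below: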

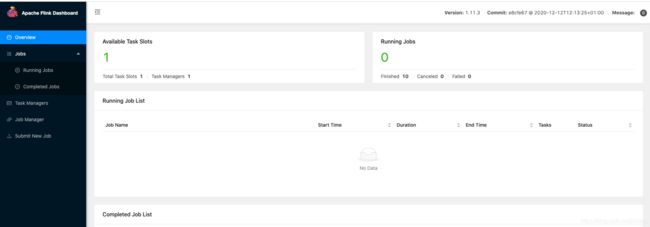

Start Flink (from the bin directory of the Flink installation):

./start-cluster.sh

Open http://localhost:8081/ in a browser; if the page loads, Flink started correctly.

Start the Flink SQL client

Go to the directory:

cd /Users/xxx/work/flink-1.11.3/bin

Run:

./sql-client.sh embedded \
-j /Users/xxx/work/flink-1.11.3/lib/iceberg-flink-runtime-0.11.1.jar \
-j /Users/xxx/work/flink-1.11.3/lib/flink-sql-connector-hive-2.3.6_2.11-1.11.0.jar \
shell

Create and use catalogs

Create hive_catalog by running the following command:

CREATE CATALOG hive_catalog WITH (

'type'='iceberg',

'catalog-type'='hive',

'uri'='thrift://localhost:9083',

'clients'='5',

'property-version'='1',

'warehouse'='hdfs://localhost:9000/user/hive/warehouse'

);

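A brief explanation of this command: uri is the address of the Hive metastore service we started, and warehouse points to the Hive storage path we created.

Run the command above in the Flink SQL client and you will find that it fails. Don't be discouraged, this is normal. The Iceberg website describes a similar problem, but the fix given there did not work for me. The cause is usually a version mismatch or a JDK mismatch. The error is as follows: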

Exception in thread "main" org.apache.flink.table.client.SqlClientException: Unexpected exception. This is a bug. Please consider filing an issue.

at org.apache.flink.table.client.SqlClient.main(SqlClient.java:213)

Caused by: java.lang.VerifyError: Stack map does not match the one at exception handler 70

Exception Details:

Location:

org/apache/iceberg/hive/HiveCatalog.loadNamespaceMetadata(Lorg/apache/iceberg/catalog/Namespace;)Ljava/util/Map; @70: astore_2

Reason:

Type 'org/apache/hadoop/hive/metastore/api/NoSuchObjectException' (current frame, stack[0]) is not assignable to 'org/apache/thrift/TException' (stack map, stack[0])

Current Frame:

bci: @27

flags: {

}

locals: {

'org/apache/iceberg/hive/HiveCatalog', 'org/apache/iceberg/catalog/Namespace' }

stack: {

'org/apache/hadoop/hive/metastore/api/NoSuchObjectException' }

Stackmap Frame:

bci: @70

flags: {

}

locals: {

'org/apache/iceberg/hive/HiveCatalog', 'org/apache/iceberg/catalog/Namespace' }

stack: {

'org/apache/thrift/TException' }

Bytecode:

0x0000000: 2a2b b700 c59a 0016 bb01 2c59 1301 2e04

0x0000010: bd01 3059 032b 53b7 0133 bf2a b400 3e2b

0x0000020: ba02 8e00 00b6 00e8 c002 904d 2a2c b702

0x0000030: 944e b201 2213 0296 2b2d b902 5d01 00b9

0x0000040: 012a 0400 2db0 4dbb 012c 592c 1301 2e04

0x0000050: bd01 3059 032b 53b7 0281 bf4d bb01 3559

0x0000060: bb01 3759 b701 3813 0283 b601 3e2b b601

0x0000070: 4113 0208 b601 3eb6 0144 2cb7 0147 bf4d

0x0000080: b800 46b6 014a bb01 3559 bb01 3759 b701

0x0000090: 3813 0285 b601 3e2b b601 4113 0208 b601

0x00000a0: 3eb6 0144 2cb7 0147 bf

Exception Handler Table:

bci [27, 69] => handler: 70

bci [27, 69] => handler: 70

bci [27, 69] => handler: 91

bci [27, 69] => handler: 127

Stackmap Table:

same_frame(@27)

same_locals_1_stack_item_frame(@70,Object[#191])

same_locals_1_stack_item_frame(@91,Object[#191])

same_locals_1_stack_item_frame(@127,Object[#193])

at org.apache.iceberg.flink.CatalogLoader$HiveCatalogLoader.loadCatalog(CatalogLoader.java:112)

at org.apache.iceberg.flink.FlinkCatalog.<init>(FlinkCatalog.java:111)

at org.apache.iceberg.flink.FlinkCatalogFactory.createCatalog(FlinkCatalogFactory.java:127)

at org.apache.iceberg.flink.FlinkCatalogFactory.createCatalog(FlinkCatalogFactory.java:117)

at org.apache.flink.table.api.internal.TableEnvironmentImpl.createCatalog(TableEnvironmentImpl.java:1087)

at org.apache.flink.table.api.internal.TableEnvironmentImpl.executeOperation(TableEnvironmentImpl.java:1021)

at org.apache.flink.table.api.internal.TableEnvironmentImpl.executeSql(TableEnvironmentImpl.java:691)

at org.apache.flink.table.client.gateway.local.LocalExecutor.lambda$executeSql$7(LocalExecutor.java:360)

at org.apache.flink.table.client.gateway.local.ExecutionContext.wrapClassLoader(ExecutionContext.java:255)

at org.apache.flink.table.client.gateway.local.LocalExecutor.executeSql(LocalExecutor.java:360)

at org.apache.flink.table.client.cli.CliClient.callDdl(CliClient.java:642)

at org.apache.flink.table.client.cli.CliClient.callDdl(CliClient.java:637)

at org.apache.flink.table.client.cli.CliClient.callCommand(CliClient.java:357)

at java.util.Optional.ifPresent(Optional.java:159)

at org.apache.flink.table.client.cli.CliClient.open(CliClient.java:212)

at org.apache.flink.table.client.SqlClient.openCli(SqlClient.java:142)

at org.apache.flink.table.client.SqlClient.start(SqlClient.java:114)

at org.apache.flink.table.client.SqlClient.main(SqlClient.java:201)

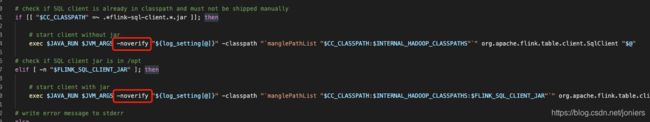

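The fix is as follows:

Go to Flink's bin directory, open sql-client.sh, and add -noverify at the point where the jar is launched, which skips bytecode verification (as shown in the screenshot):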
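The exact launch line in sql-client.sh differs between Flink versions, so the following is only a sketch of the idea. The `line` value is a simplified stand-in for the real `exec` line (an assumption, not copied from the script), and the sed substitution shows where `-noverify` ends up:

```shell
#!/bin/sh
# Simplified stand-in for the java launch line in sql-client.sh (assumption).
line='exec $JAVA_RUN $JVM_ARGS -classpath "$CP" org.apache.flink.table.client.SqlClient "$@"'

# Insert -noverify right after the JVM arguments.
# Single quotes keep the $ characters literal for both the shell and sed.
patched=$(printf '%s\n' "$line" | sed 's/\$JVM_ARGS/$JVM_ARGS -noverify/')
echo "$patched"
```

Note that -noverify turns off JVM bytecode verification for the client process, so it works around the version mismatch rather than resolving it.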

Then start the client again and run:

Flink SQL> CREATE CATALOG hive_catalog WITH (

> 'type'='iceberg',

> 'catalog-type'='hive',

> 'uri'='thrift://localhost:9083',

> 'clients'='5',

> 'property-version'='1',

> 'warehouse'='hdfs://localhost:9000/user/hive/warehouse'

> );

2021-05-11 10:43:42,344 INFO org.apache.hadoop.hive.conf.HiveConf [] - Found configuration file null

[INFO] Catalog has been created.

Flink SQL>

The catalog was created. Check it:

Flink SQL> show catalogs;

default_catalog

hive_catalog

Flink SQL>

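hive_catalog has been created.

To be continued

Closing remarks

That covers setting up a Flink + Iceberg environment and the problems along the way. I followed the official Iceberg documentation step by step and hit countless pitfalls, and I could not find matching solutions online. I will collect the pitfalls I ran into and publish them separately. Consider this a mild complaint about the official documentation.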