Hadoop Getting Started (9): Simple Sorting in MapReduce (Sorting Phone Traffic)

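Requirement:

Sum the upstream and downstream traffic of each record in the log data, and output the results sorted by total traffic in descending order.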

Data format: phone number, upstream traffic, downstream traffic (the data below is simulated):

```
13823434356 20 30
15844021203 30 40
18688788797 40 50
15844939284 50 60
17646566767 90 70
18688988989 10 20
11385768543 40 44
```

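Analysis:

Basic idea: define a custom bean that holds the traffic fields, and emit that bean as the map output key.

An MR job sorts data as part of its processing: before the key-value pairs emitted by map reach reduce, they are sorted, and the sort is based on the map output key.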

So, to get our own ordering, we can put the sort criterion into the key: make the key class implement the WritableComparable interface, and then override its compareTo method.
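One detail worth knowing about compareTo: besides ordering the keys, the reduce side (by default) uses the same comparison to decide which consecutive keys make up one reduce() call, so keys that compare as 0 arrive together. The plain-Java sketch below mimics that grouping for a contract-respecting `Long.compare` comparator. It has no Hadoop dependency; the class `GroupingSketch`, its `Rec` type and the phone labels `p1`..`p3` are hypothetical. It shows why, when equal totals compare as 0, a reducer must iterate over all values rather than read only the first one.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical sketch (no Hadoop): sorts map output by key, then cuts the sorted
// run into groups of equal keys, the way each group is passed to one reduce() call.
class GroupingSketch {

    static final class Rec {
        final String phone;
        final long total;

        Rec(String phone, long total) {
            this.phone = phone;
            this.total = total;
        }
    }

    // Descending by total; equal totals compare as 0, as the Comparable contract requires.
    static int compareKeys(Rec a, Rec b) {
        return Long.compare(b.total, a.total);
    }

    // Returns the phone numbers delivered to each simulated reduce() call, in order.
    static List<List<String>> reduceCalls(List<Rec> mapOutput) {
        List<Rec> sorted = new ArrayList<>(mapOutput);
        sorted.sort(GroupingSketch::compareKeys);
        List<List<String>> calls = new ArrayList<>();
        Rec prev = null;
        for (Rec r : sorted) {
            // A new call begins whenever the key differs from the previous key.
            if (prev == null || compareKeys(prev, r) != 0) {
                calls.add(new ArrayList<>());
            }
            calls.get(calls.size() - 1).add(r.phone);
            prev = r;
        }
        return calls;
    }

    public static void main(String[] args) {
        List<Rec> mapOutput = Arrays.asList(
                new Rec("p1", 90), new Rec("p2", 160), new Rec("p3", 90));
        // Prints [[p2], [p1, p3]]: p1 and p3 share one call, so a reducer
        // that reads only the first value would drop p3.
        System.out.println(reduceCalls(mapOutput));
    }
}
```

The compareTo in this article takes the other route: it never returns 0, so every record forms its own group, at the cost of bending the Comparable contract.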

Finally, in reduce, swap the key and value produced by the mapper, so that each output line starts with the phone number, followed by the traffic figures.
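The whole plan (map to a traffic key, sort by key, swap in reduce) can be tried without a cluster. The sketch below is a hypothetical plain-Java simulation: `java.util` sorting stands in for Hadoop's shuffle sort, and the names `FlowSortSimulation`, `Pair` and `run` are illustrative only.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical simulation (no Hadoop): List.sort stands in for the shuffle sort.
class FlowSortSimulation {

    // One map output pair: the traffic fields act as the key, the phone number as the value.
    static final class Pair {
        final String phone;
        final long up;
        final long down;
        final long sum;

        Pair(String phone, long up, long down) {
            this.phone = phone;
            this.up = up;
            this.down = down;
            this.sum = up + down;
        }
    }

    // Runs map -> sort by key -> reduce (swap) over raw input lines.
    static List<String> run(List<String> lines) {
        List<Pair> pairs = new ArrayList<>();
        for (String line : lines) {
            // "Map": parse phone, upstream, downstream (tabs or spaces)
            String[] f = line.trim().split("\\s+");
            pairs.add(new Pair(f[0], Long.parseLong(f[1]), Long.parseLong(f[2])));
        }
        // "Shuffle": sort by key, descending total traffic
        pairs.sort((a, b) -> Long.compare(b.sum, a.sum));
        List<String> out = new ArrayList<>();
        for (Pair p : pairs) {
            // "Reduce": swap so the phone number is written first
            out.add(p.phone + "\t" + p.up + "\t" + p.down + "\t" + p.sum);
        }
        return out;
    }

    public static void main(String[] args) {
        List<String> data = Arrays.asList(
                "13823434356 20 30", "15844021203 30 40", "18688788797 40 50",
                "15844939284 50 60", "17646566767 90 70", "18688988989 10 20",
                "11385768543 40 44");
        for (String line : run(data)) {
            System.out.println(line);
        }
    }
}
```

On the sample data this prints the phone numbers in the same order as the part-r-00000 result at the end of the article, starting with 17646566767 (160) and ending with 18688988989 (30).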

Implementation:

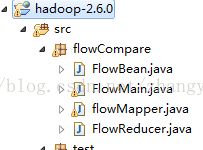

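1. Create the project in Eclipse and prepare the environment (details omitted).

2. Write the classes shown above (FlowBean, flowMapper, FlowReducer, FlowMain), and you're done.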

First, the custom bean, FlowBean:

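```java
package flowCompare;

import java.io.DataInput;
import java.io.DataOutput;
import java.io.IOException;

import org.apache.hadoop.io.WritableComparable;

public class FlowBean implements WritableComparable<FlowBean> {

    private long upflow;
    private long downflow;
    private long sumflow;

    // No-arg constructor: Hadoop instantiates keys by reflection before calling readFields
    public FlowBean() {}

    public FlowBean(long upflow, long downflow) {
        this.upflow = upflow;
        this.downflow = downflow;
        this.sumflow = upflow + downflow;
    }

    // Serialization: write the fields to the output stream
    @Override
    public void write(DataOutput out) throws IOException {
        out.writeLong(upflow);
        out.writeLong(downflow);
        out.writeLong(sumflow);
    }

    // Deserialization: read the fields back in the same order they were written
    @Override
    public void readFields(DataInput in) throws IOException {
        upflow = in.readLong();
        downflow = in.readLong();
        sumflow = in.readLong();
    }

    // Descending by total traffic. It never returns 0, so records with equal totals
    // are not grouped into one reduce() call; this deliberately bends the Comparable
    // contract (on a tie, a.compareTo(b) and b.compareTo(a) both return 1).
    @Override
    public int compareTo(FlowBean o) {
        return sumflow > o.getSumflow() ? -1 : 1;
    }

    public long getUpflow() { return upflow; }

    public long getDownflow() { return downflow; }

    public long getSumflow() { return sumflow; }

    // Setters omitted; this job does not need them.

    // Produces the "FlowBean [upflow=..., downflow=..., sumflow=...]" text in the output
    @Override
    public String toString() {
        return "FlowBean [upflow=" + upflow + ", downflow=" + downflow + ", sumflow=" + sumflow + "]";
    }
}
```

Next, write the mapper: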

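```java
package flowCompare;

import java.io.IOException;

import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

// Input: <byte offset, line>; output: <FlowBean, phone number>
public class flowMapper extends Mapper<LongWritable, Text, FlowBean, Text> {

    @Override
    protected void map(LongWritable key, Text value, Context context)
            throws IOException, InterruptedException {
        String line = value.toString();
        // Split on tabs or spaces, so either separator in the file works
        String[] fields = line.trim().split("\\s+");
        if (fields.length < 3) {
            return; // skip blank or incomplete lines
        }
        String teleNumber = fields[0];
        long upflowd = Long.parseLong(fields[1]);
        long downflowd = Long.parseLong(fields[2]);
        FlowBean flowBean = new FlowBean(upflowd, downflowd);
        // Emit the traffic as the key and the phone number as the value,
        // so the framework sorts records with FlowBean.compareTo
        context.write(flowBean, new Text(teleNumber));
    }
}
```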
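When the mapper writes a FlowBean, Hadoop serializes it with write(); on the reduce side it creates a new instance through the no-argument constructor and fills it with readFields(). The sketch below mirrors that round trip with plain `java.io` streams. `FlowRecord` is a hypothetical stand-in that copies FlowBean's write/readFields bodies without the Hadoop interface, so it runs without Hadoop on the classpath.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInput;
import java.io.DataInputStream;
import java.io.DataOutput;
import java.io.DataOutputStream;
import java.io.IOException;

// Hypothetical stand-in for FlowBean: same field layout, no Hadoop dependency.
class FlowRecord {
    long upflow;
    long downflow;
    long sumflow;

    FlowRecord() {}

    FlowRecord(long up, long down) {
        upflow = up;
        downflow = down;
        sumflow = up + down;
    }

    void write(DataOutput out) throws IOException {
        out.writeLong(upflow);
        out.writeLong(downflow);
        out.writeLong(sumflow);
    }

    // Must read the fields in exactly the order write() emitted them.
    void readFields(DataInput in) throws IOException {
        upflow = in.readLong();
        downflow = in.readLong();
        sumflow = in.readLong();
    }

    // Serializes a record to bytes and reads it back into a fresh instance.
    static FlowRecord roundTrip(FlowRecord r) throws IOException {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        DataOutputStream out = new DataOutputStream(bytes);
        r.write(out);
        out.flush();
        FlowRecord copy = new FlowRecord(); // empty instance, as the framework creates one
        copy.readFields(new DataInputStream(new ByteArrayInputStream(bytes.toByteArray())));
        return copy;
    }

    public static void main(String[] args) throws IOException {
        FlowRecord copy = roundTrip(new FlowRecord(90, 70));
        System.out.println(copy.upflow + " " + copy.downflow + " " + copy.sumflow); // 90 70 160
    }
}
```

Swapping two reads in readFields() would silently mix up the fields, which is why the order has to match write() exactly.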

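Then write the reducer:

```java
package flowCompare;

import java.io.IOException;

import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

// Input: <FlowBean, phone numbers>; output: <phone number, FlowBean> (key and value swapped)
public class FlowReducer extends Reducer<FlowBean, Text, Text, FlowBean> {

    @Override
    protected void reduce(FlowBean flowBean, Iterable<Text> teleNumbers, Context context)
            throws IOException, InterruptedException {
        // Write every phone number in the group, so no record is lost
        // even if several records ever share one key
        for (Text teleNum : teleNumbers) {
            context.write(teleNum, flowBean);
        }
    }
}
```

Finally, write the driver class that contains main: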

```java
package flowCompare;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class FlowMain {

    public static void main(String[] args) throws Exception {
        Configuration conf = new Configuration();
        Job job = Job.getInstance(conf);
        job.setJarByClass(FlowMain.class);

        // Tell the job which mapper and reducer to use
        job.setMapperClass(flowMapper.class);
        job.setReducerClass(FlowReducer.class);

        // Mapper output types
        job.setMapOutputKeyClass(FlowBean.class);
        job.setMapOutputValueClass(Text.class);

        // Reducer (final) output types
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(FlowBean.class);

        // Input and output paths, passed on the command line
        FileInputFormat.setInputPaths(job, new Path(args[0]));
        FileOutputFormat.setOutputPath(job, new Path(args[1]));

        // Submit the job, wait for it to finish, and report the result as the exit code
        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}
```

Note: the paths args[0] and args[1] are supplied by us on the command line when running the jar. The output directory (args[1]) must not exist yet; otherwise the job fails at submission.

Packaging:

Package the code into a jar; I named mine flow.jar. You can specify the main class when packaging (as I did), or specify it at run time.

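Testing:

1. Upload the data file to be sorted to HDFS. I put it in the root directory and named it teleflow.txt.

2. Start the Hadoop services, then run the following from the Hadoop installation directory:

```
[root@CentOS hadoop-2.6.0]# hadoop jar flow.jar /teleflow.txt /aa
```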

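Notes:

(1) /teleflow.txt is args[0], the input file; /aa is args[1], the directory where the results are written.

(2) If you did not specify the main class when packaging, add the fully qualified main class (package.ClassName) after flow.jar:

```
hadoop jar flow.jar flowCompare.FlowMain /teleflow.txt /aa
```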

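3. Check the results:

List the output directory /aa. It contains two files: _SUCCESS, a zero-length marker indicating that the job succeeded, and part-r-00000, which holds the actual data.

```
[root@CentOS hadoop-2.6.0]# hadoop dfs -ls /aa
-rw-r--r-- 3 root supergroup 0 2017-09-23 06:10 /aa/_SUCCESS
-rw-r--r-- 3 root supergroup 408 2017-09-23 06:10 /aa/part-r-00000
```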

Print part-r-00000 to see the result, sorted by total traffic in descending order. Everything ends up in one globally sorted file because the job runs with the default of a single reduce task.

```
[root@CentOS hadoop-2.6.0]# hadoop dfs -text /aa/part-r-00000
17646566767 FlowBean [upflow=90, downflow=70, sumflow=160]
15844939284 FlowBean [upflow=50, downflow=60, sumflow=110]
18688788797 FlowBean [upflow=40, downflow=50, sumflow=90]
11385768543 FlowBean [upflow=40, downflow=44, sumflow=84]
15844021203 FlowBean [upflow=30, downflow=40, sumflow=70]
13823434356 FlowBean [upflow=20, downflow=30, sumflow=50]
18688988989 FlowBean [upflow=10, downflow=20, sumflow=30]
```
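To check the ordering without eyeballing it, a small plain-Java sketch can pull the sumflow value out of each line of part-r-00000 (after copying it locally, for example with `hadoop fs -get /aa/part-r-00000 .`) and confirm the totals never increase. The class `OutputCheck` and its methods are hypothetical, and the regex assumes the `sumflow=` text produced by FlowBean's toString.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical checker for lines shaped like the part-r-00000 output above.
class OutputCheck {
    private static final Pattern SUM = Pattern.compile("sumflow=(\\d+)");

    // Extracts the sumflow value from each output line.
    static List<Long> sums(List<String> lines) {
        List<Long> result = new ArrayList<>();
        for (String line : lines) {
            Matcher m = SUM.matcher(line);
            if (!m.find()) {
                throw new IllegalArgumentException("no sumflow in: " + line);
            }
            result.add(Long.parseLong(m.group(1)));
        }
        return result;
    }

    // True if the totals never increase from one line to the next.
    static boolean isDescending(List<String> lines) {
        List<Long> s = sums(lines);
        for (int i = 1; i < s.size(); i++) {
            if (s.get(i) > s.get(i - 1)) {
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) throws IOException {
        // args[0]: path to a local copy of part-r-00000
        List<String> lines = Files.readAllLines(Paths.get(args[0]));
        System.out.println(isDescending(lines) ? "sorted descending" : "NOT sorted");
    }
}
```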