Quickly Implementing LeNet with the Paddle 2.0 High-Level API (MNIST Handwritten Digit Recognition)

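Contents

- Quickly Implementing LeNet with the Paddle 2.0 High-Level API (MNIST Handwritten Digit Recognition)

  - The universal DL formula

  - Data loading and preprocessing

  - Inspecting the data

  - Building the LeNet-5 convolutional neural network

  - Visualizing the network

  - Model configuration

  - Model evaluation

  - Model prediction

    - Batch prediction

    - Single-image prediction

  - Deployment

    - Saving the model

    - Continuing fine-tuning

    - Saving the inference model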


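"Deep Learning 7-Day Bootcamp: Quick Start Special"

Starting from zero, learn the high-level API of the PaddlePaddle (飞桨) deep learning framework. Over seven days the course covers the most popular models and applications in CV and NLP.

Course link

Link: https://aistudio.baidu.com/aistudio/course/introduce/6771

Goals

- Master the fundamentals of commonly used deep learning models

- Become proficient in one domestically developed open-source deep learning framework

- Be able to complete related deep learning tasks independently

- Use what you learn to add a touch of Lunar New Year spirit to AI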

The universal DL formula

import paddle

import numpy as np

import matplotlib.pyplot as plt

paddle.__version__

'2.0.0'

Data loading and preprocessing

import paddle.vision.transforms as T

# Data loading and preprocessing

# Normalize computes (x - 127.5) / 127.5, mapping pixel values from [0, 255] to [-1, 1]

transform = T.Normalize(mean=[127.5], std=[127.5])

# Training dataset

train_dataset = paddle.vision.datasets.MNIST(mode='train', transform=transform)

# Evaluation dataset

eval_dataset = paddle.vision.datasets.MNIST(mode='test', transform=transform)

print(f"Training samples: {len(train_dataset)}, evaluation samples: {len(eval_dataset)}")

Training samples: 60000, evaluation samples: 10000
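To make the effect of the normalization concrete, the sketch below reproduces only the per-pixel arithmetic, `(x - mean) / std`, in plain Python. The helper name `normalize` is hypothetical and is not part of Paddle; it simply shows that with `mean=127.5` and `std=127.5` the 8-bit range [0, 255] lands in [-1, 1].

```python
def normalize(pixel, mean=127.5, std=127.5):
    """Per-pixel arithmetic of a mean/std normalization: (pixel - mean) / std."""
    return (pixel - mean) / std


# Darkest, middle, and brightest 8-bit pixel values
low, middle, high = normalize(0), normalize(127.5), normalize(255)
print(low, middle, high)  # -1.0 0.0 1.0
```

Centering the inputs around zero like this is a common choice for networks with ReLU activations; if a [0, 1] range were wanted instead, `mean=0` and `std=255` would give it.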

Inspecting the data

%matplotlib inline

plt.figure()

plt.imshow(train_dataset[0][0].reshape([28, 28]), cmap=plt.cm.binary)

plt.show()

print("label:", train_dataset[0][1])

print("data shape:", train_dataset[0][0].shape)

label: [5]

data shape: (1, 28, 28)

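Building the LeNet-5 convolutional neural network

We use the LeNet-5 network structure.

The LeNet-5 model comes from the paper "LeCun Y, Bottou L, Bengio Y, et al. Gradient-based learning applied to document recognition[J]. Proceedings of the IEEE, 1998, 86(11): 2278-2324."

Paper: https://ieeexplore.ieee.org/document/726791

The implementation below adapts the original design to 28x28 MNIST inputs: C1 uses a 3x3 kernel with padding 1, the activations are ReLU, and the subsampling layers are max pooling.

[Figure: the layers used at each stage]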

# Build the network; the comment after each layer is its output shape (C x H x W)

net = paddle.nn.Sequential(

    ('C1', paddle.nn.Conv2D(in_channels=1, out_channels=6, kernel_size=3, padding=1, stride=1)),

    # 6x28x28

    ('ReLU1', paddle.nn.ReLU()),

    ('S2', paddle.nn.MaxPool2D(kernel_size=2, stride=2, ceil_mode=True)),

    # 6x14x14

    ('C3', paddle.nn.Conv2D(6, 16, kernel_size=5, stride=1, padding=0)),

    # 16x10x10

    ('ReLU2', paddle.nn.ReLU()),

    ('S4', paddle.nn.MaxPool2D(kernel_size=2, stride=2, ceil_mode=True)),

    # 16x5x5

    ('C5', paddle.nn.Conv2D(16, 120, kernel_size=5, stride=1, padding=0)),

    # 120x1x1

    ('ReLU3', paddle.nn.ReLU()),

    ('Flatten', paddle.nn.Flatten()),

    # 120

    ('F6', paddle.nn.Linear(120, 84)),

    # 84

    ('ReLU4', paddle.nn.ReLU()),

    ('OUTPUT', paddle.nn.Linear(84, 10))

)
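The shape comments in the network definition can be checked by hand with the standard output-size formulas for convolution and pooling. The following is a minimal sketch in plain Python; `conv_out` and `pool_out` are hypothetical helper names introduced for illustration, not Paddle functions.

```python
import math


def conv_out(size, kernel, stride=1, padding=0):
    """Spatial output size of a convolution: floor((size + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel, stride, ceil_mode=False):
    """Spatial output size of a pooling layer without padding."""
    span = (size - kernel) / stride
    return (math.ceil(span) if ceil_mode else math.floor(span)) + 1


sizes = []
s = conv_out(28, kernel=3, stride=1, padding=1)    # C1
sizes.append(s)
s = pool_out(s, kernel=2, stride=2, ceil_mode=True)  # S2
sizes.append(s)
s = conv_out(s, kernel=5)                          # C3
sizes.append(s)
s = pool_out(s, kernel=2, stride=2, ceil_mode=True)  # S4
sizes.append(s)
s = conv_out(s, kernel=5)                          # C5
sizes.append(s)

print(sizes)  # [28, 14, 10, 5, 1]
```

Because every pooled size here is even, `ceil_mode=True` makes no difference for this input; it would only matter for odd feature-map sizes.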

Visualizing the network

# Wrap the network in the high-level Model API

model = paddle.Model(net)

# Print a layer-by-layer summary

model.summary((8, 1, 28, 28)) # input shape: (N, C, H, W)

---------------------------------------------------------------------------

Layer (type)    Input Shape            Output Shape        Param #

===========================================================================

Conv2D-1        [[8, 1, 28, 28]]       [8, 6, 28, 28]      60

ReLU-1          [[8, 6, 28, 28]]       [8, 6, 28, 28]      0

MaxPool2D-1     [[8, 6, 28, 28]]       [8, 6, 14, 14]      0

Conv2D-2        [[8, 6, 14, 14]]       [8, 16, 10, 10]     2,416

ReLU-2          [[8, 16, 10, 10]]      [8, 16, 10, 10]     0

MaxPool2D-2     [[8, 16, 10, 10]]      [8, 16, 5, 5]       0

Conv2D-3        [[8, 16, 5, 5]]        [8, 120, 1, 1]      48,120

ReLU-3          [[8, 120, 1, 1]]       [8, 120, 1, 1]      0

Flatten-1       [[8, 120, 1, 1]]       [8, 120]            0

Linear-1        [[8, 120]]             [8, 84]             10,164

ReLU-4          [[8, 84]]              [8, 84]             0

Linear-2        [[8, 84]]              [8, 10]             850

===========================================================================

Total params: 61,610

Trainable params: 61,610

Non-trainable params: 0

---------------------------------------------------------------------------

Input size (MB): 0.02

Forward/backward pass size (MB): 0.90

Params size (MB): 0.24

Estimated Total Size (MB): 1.16

---------------------------------------------------------------------------

{'total_params': 61610, 'trainable_params': 61610}
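The parameter counts in the summary can be reproduced by hand: a convolution has `out_channels * in_channels * k * k` weights plus one bias per output channel, and a linear layer has `in * out` weights plus one bias per output. The sketch below uses hypothetical helper names and assumes 4-byte float32 parameters when estimating the size in MB.

```python
def conv2d_params(in_channels, out_channels, kernel):
    """Weights (out * in * k * k) plus one bias per output channel."""
    return out_channels * in_channels * kernel * kernel + out_channels


def linear_params(in_features, out_features):
    """Weights (in * out) plus one bias per output feature."""
    return in_features * out_features + out_features


counts = {
    "C1": conv2d_params(1, 6, 3),
    "C3": conv2d_params(6, 16, 5),
    "C5": conv2d_params(16, 120, 5),
    "F6": linear_params(120, 84),
    "OUTPUT": linear_params(84, 10),
}
total = sum(counts.values())
size_mb = total * 4 / 1024 / 1024  # assumes float32 (4 bytes per parameter)

print(counts)
print(total, round(size_mb, 2))  # 61610 0.24
```

The ReLU, pooling, and flatten layers contribute no parameters, which is why only five layers appear in the dictionary.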

Model configuration

- Optimizer: Adam

- Loss function: cross entropy

- Evaluation metric: Accuracy
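As background for the loss choice: the network ends in a plain `Linear` layer and outputs raw scores (logits), and the cross-entropy loss for one sample is the negative log of the softmax probability assigned to the true class. The sketch below computes that quantity in plain Python. The function name and the three-class logits are made up for illustration, and it is only intended to mirror what a softmax-plus-cross-entropy loss computes for a single sample, not Paddle's actual implementation.

```python
import math


def cross_entropy(logits, label):
    """Cross entropy of one sample: log(sum(exp(logits))) - logits[label]."""
    peak = max(logits)  # subtract the max for numerical stability
    log_sum_exp = peak + math.log(sum(math.exp(z - peak) for z in logits))
    return log_sum_exp - logits[label]


toy_logits = [2.0, 1.0, 0.1]  # hypothetical scores for a 3-class problem
loss_correct = cross_entropy(toy_logits, label=0)  # true class has the top score
loss_wrong = cross_entropy(toy_logits, label=2)    # true class has the lowest score

print(round(loss_correct, 4), round(loss_wrong, 4))
```

The loss is small when the true class already has the highest score and grows as the true class is ranked lower, which is the signal the optimizer minimizes.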

# Configure the optimizer, loss function, and evaluation metric

model.prepare(optimizer=paddle.optimizer.Adam(learning_rate=0.001, parameters=net.parameters()),

              loss=paddle.nn.CrossEntropyLoss(),

              metrics=paddle.metric.Accuracy())

# Run the full training loop, evaluating after each epoch

model.fit(train_data=train_dataset,

          eval_data=eval_dataset,

          batch_size=64,

          epochs=5,

          verbose=1,

          shuffle=True)

The loss value printed in the log is the current step, and the metric is the average value of previous step.

Epoch 1/5

D:\Anaconda3\envs\paddle2\lib\site-packages\paddle\fluid\layers\utils.py:77: DeprecationWarning: Using or importing the ABCs from 'collections' instead of from 'collections.abc' is deprecated since Python 3.3,and in 3.9 it will stop working

return (isinstance(seq, collections.Sequence) and

step 938/938 [==============================] - loss: 0.0460 - acc: 0.9391 - 14ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 157/157 [==============================] - loss: 0.0032 - acc: 0.9759 - 11ms/step

Eval samples: 10000

Epoch 2/5

step 938/938 [==============================] - loss: 0.0375 - acc: 0.9801 - 14ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 157/157 [==============================] - loss: 0.0014 - acc: 0.9863 - 8ms/step

Eval samples: 10000

Epoch 3/5

step 938/938 [==============================] - loss: 0.0199 - acc: 0.9850 - 13ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 157/157 [==============================] - loss: 0.0128 - acc: 0.9847 - 15ms/step

Eval samples: 10000

Epoch 4/5

step 938/938 [==============================] - loss: 0.0043 - acc: 0.9884 - 21ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 157/157 [==============================] - loss: 0.0019 - acc: 0.9836 - 8ms/step

Eval samples: 10000

Epoch 5/5

step 938/938 [==============================] - loss: 0.0069 - acc: 0.9914 - 14ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 157/157 [==============================] - loss: 2.2102e-04 - acc: 0.9884 - 14ms/step

Eval samples: 10000
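The step counts in the log follow from the dataset sizes and `batch_size=64`: the last, smaller batch is still processed, so the number of steps is the sample count divided by the batch size, rounded up. A minimal sketch of that arithmetic (the helper name is hypothetical, and it assumes incomplete final batches are kept, which matches the counts shown above):

```python
import math


def steps_per_epoch(num_samples, batch_size):
    """Number of batches when the final partial batch is kept."""
    return math.ceil(num_samples / batch_size)


train_steps = steps_per_epoch(60000, 64)
eval_steps = steps_per_epoch(10000, 64)
print(train_steps, eval_steps)  # 938 157
```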

Model evaluation

result = model.evaluate(eval_dataset, verbose=1)

print(result)

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 10000/10000 [==============================] - loss: 2.0623e-05 - acc: 0.9884 - 4ms/step

Eval samples: 10000

{'loss': [2.0622994e-05], 'acc': 0.9884}

Model prediction

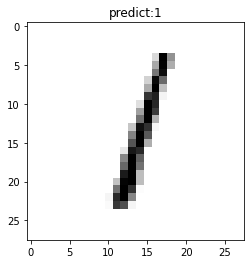

Batch prediction

Use the model.predict interface to run batch prediction over a large dataset.

result = model.predict(eval_dataset)

# Helper that plots an image with its predicted label as the title

def show_img(img, predict):

    plt.figure()

    plt.title('predict:{}'.format(predict))

    plt.imshow(img.reshape([28, 28]), cmap=plt.cm.binary)

    plt.show()

# Show a few sampled predictions

indexs = [2, 15, 38, 211]

for idx in indexs:

    show_img(eval_dataset[idx][0], np.argmax(result[0][idx]))

Predict begin...

step 10000/10000 [==============================] - 4ms/step

Predict samples: 10000

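Single-image prediction

Use model.predict_batch to predict a single image or a small number of images.

# Read a single image

img = eval_dataset[233][0]

result = model.predict_batch([img[np.newaxis, ...]]) # add a leading batch axis; without it the call raises an error

print(result)

show_img(img, np.argmax(result))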

[array([[-3.4706905, -6.674865 , -1.9018929, 3.8094432, -5.66697 ,

1.5752668, -6.6928353, -2.2028043, 9.449063 , 3.296681 ]],

dtype=float32)]
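The ten numbers printed above are raw logits, one per digit class, since the network has no softmax layer. To read them as probabilities, a softmax can be applied afterwards. The sketch below does this in plain Python on the values copied from the output above; the `softmax` helper is written here for illustration and is not a Paddle call.

```python
import math


def softmax(logits):
    """Convert raw scores to probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Logits copied from the predict_batch output above
logits = [-3.4706905, -6.674865, -1.9018929, 3.8094432, -5.66697,
          1.5752668, -6.6928353, -2.2028043, 9.449063, 3.296681]

probs = softmax(logits)
predicted = max(range(len(probs)), key=probs.__getitem__)  # same index as argmax of the logits

print(predicted, round(probs[predicted], 3))  # 8 0.994
```

Softmax preserves the ordering of the logits, so `np.argmax` on the raw output already gives the predicted digit; the softmax step only adds a confidence value.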

Deployment

Saving the model

# training=True saves the parameters and optimizer state so that training can be resumed

model.save('finetuning/mnist', training=True)

Continuing fine-tuning

from paddle.static import InputSpec

model_2 = paddle.Model(net, inputs=[InputSpec(shape=[-1, 1, 28, 28], dtype='float32', name='image')])

model_2.load('./finetuning/mnist')

# Configure the optimizer (with a smaller learning rate), loss function, and evaluation metric

model_2.prepare(optimizer=paddle.optimizer.Adam(learning_rate=0.0001, parameters=net.parameters()),

                loss=paddle.nn.CrossEntropyLoss(),

                metrics=paddle.metric.Accuracy())

# Run the full training loop for one more epoch

model_2.fit(train_data=train_dataset,

            eval_data=eval_dataset,

            batch_size=64,

            epochs=1,

            verbose=1)

The loss value printed in the log is the current step, and the metric is the average value of previous step.

Epoch 1/1

step 938/938 [==============================] - loss: 3.6613e-04 - acc: 0.9966 - 13ms/step

Eval begin...

The loss value printed in the log is the current batch, and the metric is the average value of previous step.

step 157/157 [==============================] - loss: 7.5415e-05 - acc: 0.9908 - 9ms/step

Eval samples: 10000

Saving the inference model

model_2.save('./infer/mnist', training=False)