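Because the camera screen needs to show some custom effects, I had to implement it myself. Below Android 5.0 this can be done with Camera + SurfaceView. This article only covers Android 5.0 and above, using Camera2 + SurfaceTexture + GLSurfaceView. Rendering the preview through a GLSurfaceView makes it possible to apply special effects to the live preview, such as black-and-white, skin whitening, or a film-negative look.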

Here is what the two effects look like:

[Animated GIF: demo of the first effect]

[Animated GIF: demo of the second effect]

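Below are the main Camera2 API calls. The part that needs care is the photo itself. If you return it through an Intent, don't put the Bitmap object in the Intent: Intent data is size-limited, and a full-size bitmap easily exceeds the limit and crashes the app. Passing a byte[] is subject to the same limit, and phones with very high-resolution cameras can still crash, so the code applies some simple compression (downsampling). The black-and-white photo effect uses a ColorMatrix; it only changes the captured photo. How to apply effects to the live preview is covered further down: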

```java
import android.Manifest;
import android.app.Activity;
import android.content.Context;
import android.content.pm.PackageManager;
import android.graphics.Bitmap;
import android.graphics.BitmapFactory;
import android.graphics.ImageFormat;
import android.graphics.Matrix;
import android.graphics.SurfaceTexture;
import android.hardware.camera2.CameraAccessException;
import android.hardware.camera2.CameraCaptureSession;
import android.hardware.camera2.CameraCharacteristics;
import android.hardware.camera2.CameraDevice;
import android.hardware.camera2.CameraManager;
import android.hardware.camera2.CaptureRequest;
import android.hardware.camera2.params.StreamConfigurationMap;
import android.media.Image;
import android.media.ImageReader;
import android.os.Handler;
import android.os.HandlerThread;
import android.os.Looper;
import android.support.annotation.NonNull;
import android.support.v4.app.ActivityCompat;
import android.util.Size;
import android.view.Surface;

import java.io.FileOutputStream;
import java.nio.ByteBuffer;
import java.util.Arrays;

/**
 * Wrapper around the Camera2 API calls.
 */
public class CameraV2 {

    private final Activity mActivity;
    private volatile CameraDevice mCameraDevice;
    private String mCameraId;
    private Size mPreviewSize;
    private HandlerThread mCameraThread;
    private Handler mCameraHandler;
    private volatile SurfaceTexture mSurfaceTexture;
    private CaptureRequest.Builder mCaptureRequestBuilder;
    private CaptureRequest mCaptureRequest;
    private volatile CameraCaptureSession mCameraCaptureSession;
    private ImageReader mImageReader;
    private volatile CaptureCallBack mCallBack;
    private boolean mPreviewStarted;
    private boolean mIsPortrait = true; // portrait by default

    public CameraV2(Activity activity) {
        mActivity = activity;
        startCameraThread();
    }

    /**
     * Initial setup, including the callback that receives the image data after a photo is taken.
     *
     * @param width    preview width
     * @param height   preview height
     * @param fileName file the JPEG is saved to, or null to skip saving
     */
    public void setupCamera(int width, int height, final String fileName) {
        CameraManager cameraManager = (CameraManager) mActivity.getSystemService(Context.CAMERA_SERVICE);
        try {
            if (cameraManager != null) {
                for (String id : cameraManager.getCameraIdList()) {
                    CameraCharacteristics characteristics = cameraManager.getCameraCharacteristics(id);
                    // Use the back camera: skip front-facing lenses
                    Integer facing = characteristics.get(CameraCharacteristics.LENS_FACING);
                    if (facing != null && facing == CameraCharacteristics.LENS_FACING_FRONT) {
                        continue;
                    }
                    StreamConfigurationMap map =
                            characteristics.get(CameraCharacteristics.SCALER_STREAM_CONFIGURATION_MAP);
                    if (map == null) {
                        continue;
                    }
                    mPreviewSize = getCloselyPreSize(width, height, map.getOutputSizes(SurfaceTexture.class));
                    mCameraId = id;
                    break; // the first back camera is enough
                }
            }
            if (mPreviewSize == null) {
                return; // no usable back camera
            }
            // ImageReader that receives the captured JPEG. It reuses the preview size so the photo
            // has the preview's aspect ratio (a JPEG size would normally come from
            // map.getOutputSizes(ImageFormat.JPEG)).
            mImageReader = ImageReader.newInstance(mPreviewSize.getWidth(), mPreviewSize.getHeight(),
                    ImageFormat.JPEG, 1);
            mImageReader.setOnImageAvailableListener(new ImageReader.OnImageAvailableListener() {
                // Called when the captured photo is available
                @Override
                public void onImageAvailable(ImageReader reader) {
                    Image image = reader.acquireNextImage();
                    if (image == null) {
                        return;
                    }
                    final byte[] bytes;
                    try {
                        // Copy the JPEG data out so the Image can go back to the reader right away
                        ByteBuffer buffer = image.getPlanes()[0].getBuffer();
                        bytes = new byte[buffer.remaining()];
                        buffer.get(bytes);
                    } finally {
                        image.close();
                    }

                    BitmapFactory.Options options = new BitmapFactory.Options();
                    // false = decode the pixels (true would only read the size into outWidth/outHeight)
                    options.inJustDecodeBounds = false;
                    // Downsample by 2 in each dimension to keep memory use down
                    options.inSampleSize = 2;
                    Bitmap bitmap = BitmapFactory.decodeByteArray(bytes, 0, bytes.length, options);
                    if (bitmap == null) {
                        return;
                    }
                    int width = options.outWidth;
                    Matrix matrix = new Matrix();
                    matrix.setRotate(90); // the photo comes out landscape; rotate it upright
                    // Crop values were tuned on a 1080-px-tall landscape image and scaled to the real
                    // height; they assume a roughly 16:9 capture and match PreviewBorderView's frame.
                    float rate = (float) bitmap.getHeight() / 1080;
                    float marginRight = 292 * rate;
                    float marginTop = 142 * rate;
                    float cropBitmapWidth = 1240 * rate;
                    float cropBitmapHeight = 796 * rate;
                    // createBitmap returns a new cropped + rotated bitmap; the decoded one is immutable
                    final Bitmap photo = Bitmap.createBitmap(bitmap,
                            (int) (width - marginRight - cropBitmapWidth),
                            (int) marginTop,
                            (int) cropBitmapWidth,
                            (int) cropBitmapHeight,
                            matrix, true);

                    // Optional black-and-white effect on the captured photo (needs imports for Canvas,
                    // Paint, ColorMatrix and ColorMatrixColorFilter); pass gray to the callback instead:
                    // Bitmap gray = Bitmap.createBitmap(photo.getWidth(), photo.getHeight(), Bitmap.Config.ARGB_8888);
                    // Canvas canvas = new Canvas(gray);
                    // Paint paint = new Paint();
                    // ColorMatrix colorMatrix = new ColorMatrix(new float[]{
                    //         0.213f, 0.715f, 0.072f, 0, 0,
                    //         0.213f, 0.715f, 0.072f, 0, 0,
                    //         0.213f, 0.715f, 0.072f, 0, 0,
                    //         0, 0, 0, 1, 0,
                    // });
                    // paint.setColorFilter(new ColorMatrixColorFilter(colorMatrix));
                    // canvas.drawBitmap(photo, 0, 0, paint);

                    // Hand the result to the UI on the main thread
                    new Handler(Looper.getMainLooper()).post(new Runnable() {
                        @Override
                        public void run() {
                            CaptureCallBack callBack = mCallBack;
                            if (callBack != null) {
                                callBack.photoData(photo);
                            }
                        }
                    });

                    // Write the original JPEG bytes to the requested file, if any
                    if (fileName != null) {
                        try (FileOutputStream output = new FileOutputStream(fileName)) {
                            output.write(bytes);
                        } catch (Exception e) {
                            e.printStackTrace();
                        }
                    }
                }
            }, mCameraHandler); // run on the camera thread so decoding and file I/O stay off the UI thread
        } catch (CameraAccessException e) {
            e.printStackTrace();
        }
    }

    /**
     * Picks the preview size whose aspect ratio is closest to the given width/height
     * (an exact size match is preferred if one exists).
     *
     * @param surfaceWidth  width to match
     * @param surfaceHeight height to match
     * @param preSizeList   supported preview sizes
     * @return the size whose aspect ratio is closest to the requested one
     */
    private Size getCloselyPreSize(int surfaceWidth, int surfaceHeight, Size[] preSizeList) {
        int reqTmpWidth;
        int reqTmpHeight;
        // In portrait, swap width and height so that width > height (camera sizes are landscape)
        if (mIsPortrait) {
            reqTmpWidth = surfaceHeight;
            reqTmpHeight = surfaceWidth;
        } else {
            reqTmpWidth = surfaceWidth;
            reqTmpHeight = surfaceHeight;
        }
        // First look for a preview size identical to the surface size
        for (Size size : preSizeList) {
            if (size.getWidth() == reqTmpWidth && size.getHeight() == reqTmpHeight) {
                return size;
            }
        }
        // Otherwise take the size with the closest aspect ratio
        float reqRatio = ((float) reqTmpWidth) / reqTmpHeight;
        float deltaRatioMin = Float.MAX_VALUE;
        Size retSize = null;
        for (Size size : preSizeList) {
            float curRatio = ((float) size.getWidth()) / size.getHeight();
            float deltaRatio = Math.abs(reqRatio - curRatio);
            if (deltaRatio < deltaRatioMin) {
                deltaRatioMin = deltaRatio;
                retSize = size;
            }
        }
        return retSize;
    }

    /**
     * Creates the background thread that camera callbacks run on.
     */
    private void startCameraThread() {
        mCameraThread = new HandlerThread("CameraThread");
        mCameraThread.start();
        mCameraHandler = new Handler(mCameraThread.getLooper());
    }

    public boolean openCamera() {
        CameraManager cameraManager = (CameraManager) mActivity.getSystemService(Context.CAMERA_SERVICE);
        // The CAMERA permission must already have been granted
        if (ActivityCompat.checkSelfPermission(mActivity, Manifest.permission.CAMERA)
                != PackageManager.PERMISSION_GRANTED) {
            return false;
        }
        if (cameraManager == null || mCameraId == null) {
            return false;
        }
        try {
            cameraManager.openCamera(mCameraId, mStateCallback, mCameraHandler);
        } catch (CameraAccessException e) {
            e.printStackTrace();
            return false;
        }
        return true;
    }

    private final CameraDevice.StateCallback mStateCallback = new CameraDevice.StateCallback() {
        @Override
        public void onOpened(@NonNull CameraDevice camera) {
            mCameraDevice = camera;
            // If the renderer has already provided its SurfaceTexture, the preview starts now
            startPreview();
        }

        @Override
        public void onDisconnected(@NonNull CameraDevice camera) {
            camera.close();
            mCameraDevice = null;
        }

        @Override
        public void onError(@NonNull CameraDevice camera, int error) {
            camera.close();
            mCameraDevice = null;
        }
    };

    /**
     * Sets the SurfaceTexture the preview is rendered into.
     */
    public void setPreviewTexture(SurfaceTexture surfaceTexture) {
        mSurfaceTexture = surfaceTexture;
    }

    /**
     * Starts the preview. It needs both an opened camera and the SurfaceTexture; it is called
     * from the renderer and from onOpened(), and whichever call comes second actually starts it.
     */
    public synchronized void startPreview() {
        if (mPreviewStarted || mCameraDevice == null || mSurfaceTexture == null || mImageReader == null) {
            return;
        }
        mPreviewStarted = true;
        mSurfaceTexture.setDefaultBufferSize(mPreviewSize.getWidth(), mPreviewSize.getHeight());
        Surface surface = new Surface(mSurfaceTexture);
        try {
            mCaptureRequestBuilder = mCameraDevice.createCaptureRequest(CameraDevice.TEMPLATE_PREVIEW);
            mCaptureRequestBuilder.addTarget(surface);
            // The session must know every output up front: the preview surface and the ImageReader
            mCameraDevice.createCaptureSession(Arrays.asList(surface, mImageReader.getSurface()),
                    new CameraCaptureSession.StateCallback() {
                        @Override
                        public void onConfigured(@NonNull CameraCaptureSession session) {
                            try {
                                mCaptureRequest = mCaptureRequestBuilder.build();
                                mCameraCaptureSession = session;
                                // Repeat the preview request continuously
                                mCameraCaptureSession.setRepeatingRequest(mCaptureRequest, null, mCameraHandler);
                            } catch (CameraAccessException e) {
                                e.printStackTrace();
                            }
                        }

                        @Override
                        public void onConfigureFailed(@NonNull CameraCaptureSession session) {
                            // ToastUtils.showLong("Configuration failed");
                        }
                    }, mCameraHandler);
        } catch (CameraAccessException e) {
            e.printStackTrace();
        }
    }

    public void capture(CaptureCallBack callBack) {
        mCallBack = callBack;
        if (mCameraDevice == null || mCameraCaptureSession == null) {
            return; // preview is not running yet
        }
        try {
            // Build a still-capture request whose only target is the ImageReader
            CaptureRequest.Builder captureBuilder =
                    mCameraDevice.createCaptureRequest(CameraDevice.TEMPLATE_STILL_CAPTURE);
            captureBuilder.addTarget(mImageReader.getSurface());
            // Photo orientation (portrait by default); set it here if needed:
            // captureBuilder.set(CaptureRequest.JPEG_ORIENTATION, ORIENTATIONS.get(ScreenUtils.getScreenRotation(ActivityUtils.getTopActivity())));

            // Stop the preview so the last frame stays frozen while the user reviews the photo;
            // restartPreview() resumes it
            mCameraCaptureSession.stopRepeating();
            // Because the request targets mImageReader, the JPEG arrives in onImageAvailable(),
            // which decodes it, saves it (if a file name was given) and calls mCallBack
            mCameraCaptureSession.capture(captureBuilder.build(), null, mCameraHandler);
        } catch (CameraAccessException e) {
            e.printStackTrace();
        }
    }

    /**
     * Restarts the preview.
     */
    public void restartPreview() {
        if (mCameraCaptureSession == null || mCaptureRequest == null) {
            return;
        }
        try {
            // Re-issue the repeating preview request built in startPreview()
            mCameraCaptureSession.setRepeatingRequest(mCaptureRequest, null, mCameraHandler);
        } catch (CameraAccessException e) {
            e.printStackTrace();
        }
    }

    public interface CaptureCallBack {
        void photoData(Bitmap bitmap);
    }

    public void closeCamera() {
        if (mCameraCaptureSession != null) {
            mCameraCaptureSession.close();
            mCameraCaptureSession = null;
        }
        if (mCameraDevice != null) {
            mCameraDevice.close();
            mCameraDevice = null;
        }
        if (mImageReader != null) {
            mImageReader.close();
            mImageReader = null;
        }
        // quitSafely() stops the HandlerThread's looper; interrupt() would not
        mCameraThread.quitSafely();
        mCameraThread = null;
        mCameraHandler = null;
    }
}
```
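The decode step above hard-codes `options.inSampleSize = 2`. The Android developer guide on loading large bitmaps describes a two-pass alternative: decode once with `inJustDecodeBounds = true` to read only `outWidth`/`outHeight`, compute the largest power-of-two sample size that still keeps the image at least as large as the target, then decode again with that value. The sketch below covers only the arithmetic step in plain Java; the class name `SampleSizeCalc` and the example sizes are illustrative assumptions, not part of the original code.

```java
final class SampleSizeCalc {
    private SampleSizeCalc() {}

    /**
     * Returns the largest power-of-two sample size that keeps both decoded
     * dimensions >= the requested ones. This is the value that would go into
     * BitmapFactory.Options.inSampleSize before the second (real) decode.
     */
    static int calculateInSampleSize(int rawWidth, int rawHeight, int reqWidth, int reqHeight) {
        int inSampleSize = 1;
        if (rawHeight > reqHeight || rawWidth > reqWidth) {
            final int halfHeight = rawHeight / 2;
            final int halfWidth = rawWidth / 2;
            // Keep doubling while one more halving would still stay at or above the target
            while ((halfHeight / inSampleSize) >= reqHeight
                    && (halfWidth / inSampleSize) >= reqWidth) {
                inSampleSize *= 2;
            }
        }
        return inSampleSize;
    }
}
```

For example, a 4032×3024 JPEG with a 1080×1080 target yields 2 (the same as the hard-coded value), while a 1920×1080 capture yields 1, i.e. no downsampling.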

The custom GLSurfaceView and its renderer:

```java
import android.content.Context;
import android.opengl.GLSurfaceView;
import android.util.AttributeSet;

/**
 * View that displays the camera preview through OpenGL ES.
 */
public class CameraV2GLSurfaceView extends GLSurfaceView {

    public CameraV2GLSurfaceView(Context context) {
        super(context);
    }

    public CameraV2GLSurfaceView(Context context, AttributeSet attrs) {
        super(context, attrs);
    }

    public void init(CameraV2 camera, boolean isPreviewStarted, Context context) {
        // OpenGL ES 2.0 is needed for the shaders
        setEGLContextClientVersion(2);
        CameraV2Renderer cameraV2Renderer = new CameraV2Renderer();
        cameraV2Renderer.init(this, camera, isPreviewStarted, context);
        setRenderer(cameraV2Renderer);
    }
}
```

```java
import android.content.Context;
import android.graphics.SurfaceTexture;
import android.opengl.GLES11Ext;
import android.opengl.GLSurfaceView;

import java.nio.FloatBuffer;

import javax.microedition.khronos.egl.EGLConfig;
import javax.microedition.khronos.opengles.GL10;

import static android.opengl.GLES20.GL_COLOR_BUFFER_BIT;
import static android.opengl.GLES20.GL_FLOAT;
import static android.opengl.GLES20.GL_TEXTURE0;
import static android.opengl.GLES20.GL_TRIANGLES;
import static android.opengl.GLES20.glActiveTexture;
import static android.opengl.GLES20.glBindTexture;
import static android.opengl.GLES20.glClear;
import static android.opengl.GLES20.glClearColor;
import static android.opengl.GLES20.glDrawArrays;
import static android.opengl.GLES20.glEnableVertexAttribArray;
import static android.opengl.GLES20.glGetAttribLocation;
import static android.opengl.GLES20.glGetUniformLocation;
import static android.opengl.GLES20.glUniform1i;
import static android.opengl.GLES20.glUniformMatrix4fv;
import static android.opengl.GLES20.glUseProgram;
import static android.opengl.GLES20.glVertexAttribPointer;
import static android.opengl.GLES20.glViewport;

/**
 * Renderer: draws the camera's OES texture through the filter shaders.
 */
public class CameraV2Renderer implements GLSurfaceView.Renderer {

    private Context mContext;
    private CameraV2GLSurfaceView mCameraV2GLSurfaceView;
    private CameraV2 mCamera;
    private boolean bIsPreviewStarted;
    private int mOESTextureId = -1;
    private SurfaceTexture mSurfaceTexture;
    private final float[] transformMatrix = new float[16];
    private FloatBuffer mDataBuffer;
    private int mShaderProgram = -1;

    public void init(CameraV2GLSurfaceView surfaceView, CameraV2 camera, boolean isPreviewStarted, Context context) {
        mContext = context;
        mCameraV2GLSurfaceView = surfaceView;
        mCamera = camera;
        bIsPreviewStarted = isPreviewStarted;
    }

    @Override
    public void onSurfaceCreated(GL10 gl, EGLConfig config) {
        // External (OES) texture that the camera frames are streamed into
        mOESTextureId = RawUtils.createOESTextureObject();
        FilterEngine filterEngine = new FilterEngine(mContext);
        mDataBuffer = filterEngine.getBuffer();
        mShaderProgram = filterEngine.getShaderProgram();
        // Drawing goes straight to the window's default framebuffer, so no FBO is created
    }

    @Override
    public void onSurfaceChanged(GL10 gl, int width, int height) {
        glViewport(0, 0, width, height);
    }

    @Override
    public void onDrawFrame(GL10 gl) {
        if (mSurfaceTexture != null) {
            // Latch the newest camera frame into the OES texture and read its texture transform
            mSurfaceTexture.updateTexImage();
            mSurfaceTexture.getTransformMatrix(transformMatrix);
        }
        if (!bIsPreviewStarted) {
            // First frame: create the SurfaceTexture and start the camera preview
            bIsPreviewStarted = initSurfaceTexture();
            return;
        }
        glClearColor(0.0f, 0.0f, 0.0f, 1.0f);
        glClear(GL_COLOR_BUFFER_BIT);
        glUseProgram(mShaderProgram);

        int aPositionLocation = glGetAttribLocation(mShaderProgram, FilterEngine.POSITION_ATTRIBUTE);
        int aTextureCoordLocation = glGetAttribLocation(mShaderProgram, FilterEngine.TEXTURE_COORD_ATTRIBUTE);
        int uTextureMatrixLocation = glGetUniformLocation(mShaderProgram, FilterEngine.TEXTURE_MATRIX_UNIFORM);
        int uTextureSamplerLocation = glGetUniformLocation(mShaderProgram, FilterEngine.TEXTURE_SAMPLER_UNIFORM);

        // Bind the OES texture to texture unit 0 (glActiveTexture takes a unit, not a target)
        // and point the sampler uniform at that unit
        glActiveTexture(GL_TEXTURE0);
        glBindTexture(GLES11Ext.GL_TEXTURE_EXTERNAL_OES, mOESTextureId);
        glUniform1i(uTextureSamplerLocation, 0);
        glUniformMatrix4fv(uTextureMatrixLocation, 1, false, transformMatrix, 0);

        if (mDataBuffer != null) {
            // Interleaved vertices: x, y, s, t -> stride of 4 floats = 16 bytes
            mDataBuffer.position(0);
            glEnableVertexAttribArray(aPositionLocation);
            glVertexAttribPointer(aPositionLocation, 2, GL_FLOAT, false, 16, mDataBuffer);
            mDataBuffer.position(2);
            glEnableVertexAttribArray(aTextureCoordLocation);
            glVertexAttribPointer(aTextureCoordLocation, 2, GL_FLOAT, false, 16, mDataBuffer);
        }
        // Two triangles (6 vertices) cover the whole viewport
        glDrawArrays(GL_TRIANGLES, 0, 6);
    }

    /**
     * Creates the SurfaceTexture and hands it to the camera.
     */
    private boolean initSurfaceTexture() {
        if (mCamera == null || mCameraV2GLSurfaceView == null) {
            return false;
        }
        // The camera writes frames into this SurfaceTexture, which feeds mOESTextureId
        mSurfaceTexture = new SurfaceTexture(mOESTextureId);
        mSurfaceTexture.setOnFrameAvailableListener(new SurfaceTexture.OnFrameAvailableListener() {
            @Override
            public void onFrameAvailable(SurfaceTexture surfaceTexture) {
                // Ask the GL thread to draw the new frame
                mCameraV2GLSurfaceView.requestRender();
            }
        });
        mCamera.setPreviewTexture(mSurfaceTexture);
        mCamera.startPreview();
        return true;
    }
}
```
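In `onDrawFrame`, `getTransformMatrix` fills `transformMatrix` in OpenGL's column-major order, `glUniformMatrix4fv(..., false, ...)` uploads it without transposing, and the vertex shader computes `uTextureMatrix * aTextureCoordinate`. Since the vertex data supplies only two components for `aTextureCoordinate`, GL fills in z = 0 and w = 1. The plain-Java sketch below reproduces that multiply on the CPU. The vertical-flip matrix is only an assumed example; the real matrix depends on the device and buffer and should always be read from `getTransformMatrix`. `TexTransform` is an illustrative name.

```java
final class TexTransform {
    private TexTransform() {}

    /**
     * result = m * v, with m stored column-major (m[col * 4 + row]),
     * the layout glUniformMatrix4fv expects when transpose == false.
     */
    static float[] multiply(float[] m, float[] v) {
        float[] out = new float[4];
        for (int row = 0; row < 4; row++) {
            float sum = 0f;
            for (int col = 0; col < 4; col++) {
                sum += m[col * 4 + row] * v[col];
            }
            out[row] = sum;
        }
        return out;
    }

    /** Assumed example matrix: t' = 1 - t, s unchanged. */
    static final float[] FLIP_V = {
            1f,  0f, 0f, 0f,   // column 0
            0f, -1f, 0f, 0f,   // column 1
            0f,  0f, 1f, 0f,   // column 2
            0f,  1f, 0f, 1f    // column 3 (translation)
    };
}
```

With this matrix, the coordinate (s, t) = (1, 0.25) comes out as (1, 0.75), i.e. the image is sampled upside down relative to the input coordinates.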

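```java
import android.content.Context;

import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.FloatBuffer;

import static android.opengl.GLES20.GL_COMPILE_STATUS;
import static android.opengl.GLES20.GL_FRAGMENT_SHADER;
import static android.opengl.GLES20.GL_LINK_STATUS;
import static android.opengl.GLES20.GL_VERTEX_SHADER;
import static android.opengl.GLES20.glAttachShader;
import static android.opengl.GLES20.glCompileShader;
import static android.opengl.GLES20.glCreateProgram;
import static android.opengl.GLES20.glCreateShader;
import static android.opengl.GLES20.glDeleteProgram;
import static android.opengl.GLES20.glDeleteShader;
import static android.opengl.GLES20.glGetError;
import static android.opengl.GLES20.glGetProgramInfoLog;
import static android.opengl.GLES20.glGetProgramiv;
import static android.opengl.GLES20.glGetShaderInfoLog;
import static android.opengl.GLES20.glGetShaderiv;
import static android.opengl.GLES20.glLinkProgram;
import static android.opengl.GLES20.glShaderSource;
import static android.opengl.GLES20.glUseProgram;

/**
 * Loads and links the preview shaders and holds the full-screen quad.
 */
public class FilterEngine {

    public static final String POSITION_ATTRIBUTE = "aPosition";
    public static final String TEXTURE_COORD_ATTRIBUTE = "aTextureCoordinate";
    public static final String TEXTURE_MATRIX_UNIFORM = "uTextureMatrix";
    public static final String TEXTURE_SAMPLER_UNIFORM = "uTextureSampler";

    // Full-screen quad as two triangles; each row is x, y (position) then s, t (texture coordinate)
    private static final float[] vertexData = {
             1f,  1f, 1f, 1f,
            -1f,  1f, 0f, 1f,
            -1f, -1f, 0f, 0f,
             1f,  1f, 1f, 1f,
            -1f, -1f, 0f, 0f,
             1f, -1f, 1f, 0f
    };

    private final FloatBuffer mBuffer;
    private final int mShaderProgram;

    public FilterEngine(Context context) {
        mBuffer = createBuffer(vertexData);
        // Shader sources live in res/raw (R is the app's generated resource class)
        int vertexShader = loadShader(GL_VERTEX_SHADER,
                RawUtils.readShaderFromResource(context, R.raw.base_vertex_shader));
        int fragmentShader = loadShader(GL_FRAGMENT_SHADER,
                RawUtils.readShaderFromResource(context, R.raw.base_fragment_shader));
        mShaderProgram = linkProgram(vertexShader, fragmentShader);
    }

    private FloatBuffer createBuffer(float[] data) {
        // GLES20.glVertexAttribPointer needs a direct buffer in native byte order
        FloatBuffer buffer = ByteBuffer.allocateDirect(data.length * 4)
                .order(ByteOrder.nativeOrder())
                .asFloatBuffer();
        buffer.put(data, 0, data.length).position(0);
        return buffer;
    }

    private int loadShader(int type, String shaderSource) {
        int shader = glCreateShader(type);
        if (shader == 0) {
            throw new RuntimeException("Create Shader Failed! " + glGetError());
        }
        glShaderSource(shader, shaderSource);
        glCompileShader(shader);
        // Fail loudly with the driver's log instead of silently rendering nothing
        int[] status = new int[1];
        glGetShaderiv(shader, GL_COMPILE_STATUS, status, 0);
        if (status[0] == 0) {
            String log = glGetShaderInfoLog(shader);
            glDeleteShader(shader);
            throw new RuntimeException("Compile Shader Failed! " + log);
        }
        return shader;
    }

    private int linkProgram(int verShader, int fragShader) {
        int program = glCreateProgram();
        if (program == 0) {
            throw new RuntimeException("Create Program Failed! " + glGetError());
        }
        glAttachShader(program, verShader);
        glAttachShader(program, fragShader);
        glLinkProgram(program);
        int[] status = new int[1];
        glGetProgramiv(program, GL_LINK_STATUS, status, 0);
        if (status[0] == 0) {
            String log = glGetProgramInfoLog(program);
            glDeleteProgram(program);
            throw new RuntimeException("Link Program Failed! " + log);
        }
        glUseProgram(program);
        return program;
    }

    public int getShaderProgram() {
        return mShaderProgram;
    }

    public FloatBuffer getBuffer() {
        return mBuffer;
    }
}
```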
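`vertexData` interleaves, for each of the six vertices, an (x, y) position followed by an (s, t) texture coordinate. That is why `onDrawFrame` passes a stride of 16 bytes (4 floats × 4 bytes) and moves the buffer position to 0 and then to 2 before the two `glVertexAttribPointer` calls. The plain-Java sketch below builds the same direct, native-order buffer and reads back each vertex's texture coordinate with that stride/offset arithmetic; `InterleavedLayout` and its methods are made-up names for illustration.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.FloatBuffer;

final class InterleavedLayout {
    static final int FLOAT_BYTES = 4;
    static final int COMPONENTS_PER_VERTEX = 4;                           // x, y, s, t
    static final int STRIDE_BYTES = COMPONENTS_PER_VERTEX * FLOAT_BYTES; // 16
    static final int TEXCOORD_OFFSET = 2;                                // in floats

    // Same data as FilterEngine.vertexData: two triangles covering the screen
    static final float[] VERTEX_DATA = {
             1f,  1f, 1f, 1f,
            -1f,  1f, 0f, 1f,
            -1f, -1f, 0f, 0f,
             1f,  1f, 1f, 1f,
            -1f, -1f, 0f, 0f,
             1f, -1f, 1f, 0f
    };

    static FloatBuffer createBuffer(float[] data) {
        FloatBuffer buffer = ByteBuffer.allocateDirect(data.length * FLOAT_BYTES)
                .order(ByteOrder.nativeOrder())
                .asFloatBuffer();
        buffer.put(data).position(0);
        return buffer;
    }

    /** Reads the (s, t) pair of one vertex, i.e. what GL fetches for aTextureCoordinate. */
    static float[] texCoordOf(FloatBuffer buffer, int vertexIndex) {
        int base = vertexIndex * COMPONENTS_PER_VERTEX + TEXCOORD_OFFSET;
        return new float[] {buffer.get(base), buffer.get(base + 1)};
    }

    static int vertexCount(FloatBuffer buffer) {
        return buffer.capacity() / COMPONENTS_PER_VERTEX;
    }
}
```

With the real data, vertex 1 (the top-left corner at x = -1, y = 1) reads back the texture coordinate (0, 1).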

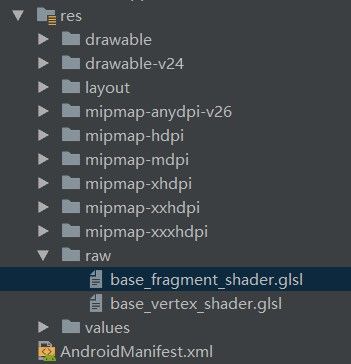

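Shader resource files such as base_fragment_shader need to be created in the location shown in the image above (the `res/raw` directory, since the code loads them through `R.raw.*`).

Their contents are as follows.

base_fragment_shader (this is where the special preview effect is applied):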

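```glsl
#extension GL_OES_EGL_image_external : require
precision mediump float;

uniform samplerExternalOES uTextureSampler;
varying vec2 vTextureCoord;

void main()
{
    vec4 vCameraColor = texture2D(uTextureSampler, vTextureCoord);
    // Black-and-white filter: weighted sum of R, G and B
    float fGrayColor = (0.3 * vCameraColor.r + 0.59 * vCameraColor.g + 0.11 * vCameraColor.b);
    // Normal preview: output the original color
    gl_FragColor = vec4(vCameraColor.r, vCameraColor.g, vCameraColor.b, 1.0);
    // For a black-and-white preview, use this line instead:
    // gl_FragColor = vec4(fGrayColor, fGrayColor, fGrayColor, 1.0);
}
```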

base_vertex_shader:

```glsl
attribute vec4 aPosition;
uniform mat4 uTextureMatrix;
attribute vec4 aTextureCoordinate;
varying vec2 vTextureCoord;

void main()
{
    vTextureCoord = (uTextureMatrix * aTextureCoordinate).xy;
    gl_Position = aPosition;
}
```
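The fragment shader runs once per pixel, with color channels as floats in the 0.0 to 1.0 range. To make that per-pixel math easy to check, here is a CPU-side Java sketch of the black-and-white weights used in base_fragment_shader (0.3, 0.59, 0.11), plus a film-negative effect (`1.0 - c` per channel) as an example of another filter that could be written the same way in the shader. `PixelFilters` is a hypothetical helper for illustration only; on the device this work belongs in the shader, not in Java.

```java
final class PixelFilters {
    private PixelFilters() {}

    /** Same weights as base_fragment_shader: 0.3 R + 0.59 G + 0.11 B. */
    static float gray(float r, float g, float b) {
        return 0.3f * r + 0.59f * g + 0.11f * b;
    }

    /** Black and white: write the same luminance into all three channels. */
    static float[] grayscale(float r, float g, float b) {
        float y = gray(r, g, b);
        return new float[] {y, y, y};
    }

    /** Film negative: invert each channel. */
    static float[] negative(float r, float g, float b) {
        return new float[] {1f - r, 1f - g, 1f - b};
    }
}
```

Because the three weights add up to 1, pure white (1, 1, 1) stays at (almost exactly) 1 after the grayscale step, while pure red becomes 0.3 in every channel.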

Utility class:

```java
import android.content.Context;
import android.opengl.GLES11Ext;
import android.opengl.GLES20;

import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.nio.charset.StandardCharsets;

public class RawUtils {

    /**
     * Creates the external (OES) texture that SurfaceTexture streams camera frames into.
     */
    public static int createOESTextureObject() {
        int[] tex = new int[1];
        GLES20.glGenTextures(1, tex, 0);
        GLES20.glBindTexture(GLES11Ext.GL_TEXTURE_EXTERNAL_OES, tex[0]);
        GLES20.glTexParameteri(GLES11Ext.GL_TEXTURE_EXTERNAL_OES,
                GLES20.GL_TEXTURE_MIN_FILTER, GLES20.GL_NEAREST);
        GLES20.glTexParameteri(GLES11Ext.GL_TEXTURE_EXTERNAL_OES,
                GLES20.GL_TEXTURE_MAG_FILTER, GLES20.GL_LINEAR);
        GLES20.glTexParameteri(GLES11Ext.GL_TEXTURE_EXTERNAL_OES,
                GLES20.GL_TEXTURE_WRAP_S, GLES20.GL_CLAMP_TO_EDGE);
        GLES20.glTexParameteri(GLES11Ext.GL_TEXTURE_EXTERNAL_OES,
                GLES20.GL_TEXTURE_WRAP_T, GLES20.GL_CLAMP_TO_EDGE);
        GLES20.glBindTexture(GLES11Ext.GL_TEXTURE_EXTERNAL_OES, 0);
        return tex[0];
    }

    /**
     * Reads a text resource from res/raw (e.g. a shader) into a String.
     */
    public static String readShaderFromResource(Context context, int resourceId) {
        StringBuilder builder = new StringBuilder();
        // try-with-resources closes the reader chain, including the underlying InputStream
        try (InputStream is = context.getResources().openRawResource(resourceId);
             BufferedReader br = new BufferedReader(new InputStreamReader(is, StandardCharsets.UTF_8))) {
            String line;
            while ((line = br.readLine()) != null) {
                builder.append(line).append('\n');
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
        return builder.toString();
    }
}
```

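A custom view is also needed to draw the frame in the middle of the screen. Its size and position are hard-coded here; adjust them to your needs: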

```java
import android.content.Context;
import android.graphics.Canvas;
import android.graphics.Color;
import android.graphics.Paint;
import android.graphics.PixelFormat;
import android.graphics.PorterDuff;
import android.graphics.PorterDuffXfermode;
import android.graphics.RectF;
import android.util.AttributeSet;
import android.view.SurfaceHolder;
import android.view.SurfaceView;

/**
 * Overlay on top of the camera preview: dims the screen and leaves a clear viewfinder frame.
 */
public class PreviewBorderView extends SurfaceView implements SurfaceHolder.Callback, Runnable {

    private int mScreenH;
    private int mScreenW;
    private Paint mPaint;
    private SurfaceHolder mHolder;
    private Thread mThread;

    public PreviewBorderView(Context context) {
        this(context, null);
    }

    public PreviewBorderView(Context context, AttributeSet attrs) {
        this(context, attrs, 0);
    }

    public PreviewBorderView(Context context, AttributeSet attrs, int defStyleAttr) {
        super(context, attrs, defStyleAttr);
        init();
    }

    /**
     * Initializes the drawing objects.
     */
    private void init() {
        mHolder = getHolder();
        mHolder.addCallback(this);
        // Transparent surface drawn above the preview
        mHolder.setFormat(PixelFormat.TRANSPARENT);
        setZOrderOnTop(true);
        mPaint = new Paint();
        mPaint.setAntiAlias(true);
        mPaint.setColor(Color.WHITE);
        mPaint.setStyle(Paint.Style.FILL_AND_STROKE);
        // CLEAR erases the pixels under the rectangle, leaving a see-through frame
        mPaint.setXfermode(new PorterDuffXfermode(PorterDuff.Mode.CLEAR));
        setKeepScreenOn(true);
    }

    /**
     * Draws the viewfinder frame.
     */
    private void draw() {
        Canvas canvas = null;
        try {
            canvas = mHolder.lockCanvas();
            if (canvas == null) {
                return;
            }
            // Semi-transparent black over the whole preview
            canvas.drawARGB(100, 0, 0, 0);
            // Layout designed for a 1080-px-wide screen and scaled to the real width
            float rate = (float) mScreenW / 1080;
            canvas.drawRect(new RectF(162 * rate,
                    mScreenH - 312 * rate - 1200 * rate,
                    mScreenW - 162 * rate,
                    mScreenH - 312 * rate), mPaint);
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            if (canvas != null) {
                mHolder.unlockCanvasAndPost(canvas);
            }
        }
    }

    @Override
    public void surfaceCreated(SurfaceHolder holder) {
        // Read the size, then draw on a worker thread
        mScreenW = getWidth();
        mScreenH = getHeight();
        mThread = new Thread(this);
        mThread.start();
    }

    @Override
    public void surfaceChanged(SurfaceHolder holder, int format, int width, int height) {
    }

    @Override
    public void surfaceDestroyed(SurfaceHolder holder) {
        // Stop the drawing thread
        if (mThread != null) {
            mThread.interrupt();
            mThread = null;
        }
    }

    @Override
    public void run() {
        // Draw once on the worker thread
        draw();
    }
}
```
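The hole that `draw()` clears is laid out against a 1080-px-wide reference design and scaled by `rate = mScreenW / 1080`: 162 px side margins, a 1200 px tall frame, and a 312 px gap above the bottom edge. The sketch below isolates that arithmetic in plain Java, returning `{left, top, right, bottom}` in the same order as `RectF`, so the numbers can be checked for a given screen. `FrameMath` is an illustrative name, not part of the original code.

```java
final class FrameMath {
    private FrameMath() {}

    static final float DESIGN_WIDTH = 1080f;
    static final float SIDE_MARGIN = 162f;
    static final float FRAME_HEIGHT = 1200f;
    static final float BOTTOM_GAP = 312f;

    /** Returns {left, top, right, bottom} in screen pixels, as passed to RectF in draw(). */
    static float[] frameRect(int screenW, int screenH) {
        float rate = screenW / DESIGN_WIDTH;
        float left = SIDE_MARGIN * rate;
        float right = screenW - SIDE_MARGIN * rate;
        float bottom = screenH - BOTTOM_GAP * rate;
        float top = bottom - FRAME_HEIGHT * rate;
        return new float[] {left, top, right, bottom};
    }
}
```

On a 1080×2160 view this gives the rectangle (162, 648, 918, 1848): a 756 × 1200 portrait frame. On a 720×1280 view everything scales by 2/3, giving roughly (108, 272, 612, 1072).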

The camera screen:

```java
import android.app.Activity;
import android.content.Context;
import android.content.Intent;
import android.graphics.Bitmap;
import android.os.Bundle;
import android.view.View;
import android.view.Window;
import android.view.WindowManager;
import android.widget.ImageView;
import android.widget.RelativeLayout;
import android.widget.TextView;

import java.io.ByteArrayOutputStream;

import butterknife.BindView;
import butterknife.ButterKnife;

public class CameraActivity extends Activity implements View.OnClickListener {

    @BindView(R.id.camera_take_photo_text_view)
    ImageView tradingCameraTakePhotoTextView;
    @BindView(R.id.camera_retry_text_view)
    TextView tradingCameraRetryTextView;
    @BindView(R.id.camera_sure_text_view)
    ImageView tradingCameraSureTextView;
    @BindView(R.id.camera_bottom_relative_layout)
    RelativeLayout tradingCameraBottomRelativeLayout;
    @BindView(R.id.camera_middle_image_view)
    ImageView tradingCameraMiddleImageView;
    @BindView(R.id.camera_hint_text_view)
    TextView tradingCameraHintTextView;
    @BindView(R.id.camera_close_image_view)
    ImageView tradingCameraCloseImageView;
    @BindView(R.id.trading_record_surface_view)
    CameraV2GLSurfaceView tradingRecordSurfaceView;
    @BindView(R.id.camera_bg_view)
    View tradingCameraBgView;

    private CameraV2 mCamera;
    private Bitmap mPhotoBitmap;
    private String mPath;

    public static Intent newIntent(Context context, String path) {
        Intent intent = new Intent(context, CameraActivity.class);
        intent.putExtra("path", path);
        return intent;
    }

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        // Full screen without a title bar; must be set before setContentView()
        requestWindowFeature(Window.FEATURE_NO_TITLE);
        getWindow().setFlags(WindowManager.LayoutParams.FLAG_FULLSCREEN,
                WindowManager.LayoutParams.FLAG_FULLSCREEN);
        hideBottomUIMenu();
        super.onCreate(savedInstanceState);
        mPath = getIntent().getStringExtra("path");
        setContentView(R.layout.activity_camera);
        ButterKnife.bind(this);
        initView();
    }

    private void initView() {
        tradingCameraTakePhotoTextView.setOnClickListener(this);
        tradingCameraRetryTextView.setOnClickListener(this);
        tradingCameraSureTextView.setOnClickListener(this);
        tradingCameraCloseImageView.setOnClickListener(this);
        mCamera = new CameraV2(this);
        // ScreenUtils is a screen-size helper from a utility library (not shown here)
        mCamera.setupCamera(ScreenUtils.getScreenWidth(), ScreenUtils.getScreenHeight(), mPath);
        // Returns false if the CAMERA permission has not been granted; request it before opening this screen
        if (!mCamera.openCamera()) {
            return;
        }
        tradingRecordSurfaceView.init(mCamera, false, this);
    }

    @Override
    public void onClick(View v) {
        switch (v.getId()) {
            case R.id.camera_close_image_view:
                finish();
                break;
            case R.id.camera_take_photo_text_view:
                mCamera.capture(new CameraV2.CaptureCallBack() {
                    @Override
                    public void photoData(Bitmap bitmap) {
                        // Show the photo over the frozen preview and switch to retry/confirm buttons
                        mPhotoBitmap = bitmap;
                        tradingCameraMiddleImageView.setImageBitmap(bitmap);
                        tradingCameraBgView.setVisibility(View.VISIBLE);
                        tradingCameraRetryTextView.setVisibility(View.VISIBLE);
                        tradingCameraSureTextView.setVisibility(View.VISIBLE);
                        tradingCameraTakePhotoTextView.setVisibility(View.GONE);
                    }
                });
                break;
            case R.id.camera_retry_text_view:
                tradingCameraMiddleImageView.setImageBitmap(null);
                tradingCameraRetryTextView.setVisibility(View.GONE);
                tradingCameraSureTextView.setVisibility(View.GONE);
                tradingCameraTakePhotoTextView.setVisibility(View.VISIBLE);
                tradingCameraBgView.setVisibility(View.GONE);
                mCamera.restartPreview();
                break;
            case R.id.camera_sure_text_view: {
                // Don't return the Bitmap itself: it is too large for the Intent and the screen hangs.
                // Return the JPEG-compressed bytes instead.
                if (mPhotoBitmap == null) {
                    break;
                }
                Intent intent = new Intent();
                ByteArrayOutputStream baos = new ByteArrayOutputStream();
                mPhotoBitmap.compress(Bitmap.CompressFormat.JPEG, 100, baos);
                intent.putExtra("photo", baos.toByteArray());
                setResult(RESULT_OK, intent);
                finish();
                break;
            }
        }
    }

    @Override
    protected void onDestroy() {
        if (mCamera != null) {
            mCamera.closeCamera();
        }
        super.onDestroy();
    }

    /**
     * Hides the navigation bar (virtual keys) and goes full screen.
     */
    protected void hideBottomUIMenu() {
        // For newer API versions (the immersive flags need API 19+)
        View decorView = getWindow().getDecorView();
        int uiOptions = View.SYSTEM_UI_FLAG_HIDE_NAVIGATION
                | View.SYSTEM_UI_FLAG_IMMERSIVE_STICKY
                | View.SYSTEM_UI_FLAG_FULLSCREEN;
        decorView.setSystemUiVisibility(uiOptions);
    }
}
```
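Even as JPEG bytes, the result Intent still goes through Binder, whose transaction buffer is limited (currently 1 MB, shared by all in-flight transactions of the process), so a large photo can still fail with `TransactionTooLargeException`. A common workaround is to write the bytes to a file and put only its path into the Intent. The sketch below shows the idea with `java.nio.file`; in the activity the directory would typically be `getCacheDir()`. The helper names (`PhotoHandoff`, `save`, `load`) are hypothetical, and note that `java.nio.file` is only available on Android from API 26 (on older versions the same idea works with `FileOutputStream`/`FileInputStream`).

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

final class PhotoHandoff {
    private PhotoHandoff() {}

    /** Sender side: writes the JPEG bytes to a new file in dir and returns its absolute path. */
    static String save(Path dir, byte[] jpegBytes) throws IOException {
        Path file = Files.createTempFile(dir, "photo_", ".jpg");
        Files.write(file, jpegBytes);
        return file.toAbsolutePath().toString();
    }

    /** Receiver side: reads the bytes back from the path taken out of the result Intent. */
    static byte[] load(String path) throws IOException {
        return Files.readAllBytes(Paths.get(path));
    }
}
```

Only a short string crosses Binder this way, so the photo size no longer matters; the receiver is then responsible for deleting the file when it is done.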

Finally, the screen that launches the camera (its layout isn't shown; it's just a single ImageView):

```java
import android.content.Intent;
import android.graphics.Bitmap;
import android.graphics.BitmapFactory;
import android.os.Bundle;
import android.support.v7.app.AppCompatActivity;
import android.view.View;
import android.widget.ImageView;

import butterknife.BindView;
import butterknife.ButterKnife;

public class MainActivity extends AppCompatActivity {

    private static final int REQUEST_TAKE_PHOTO = 1;

    @BindView(R.id.image_view)
    ImageView imageView;

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.activity_main);
        ButterKnife.bind(this);
        imageView.setOnClickListener(new View.OnClickListener() {
            @Override
            public void onClick(View v) {
                // null path: the photo is only returned, not saved to a file
                Intent intent = CameraActivity.newIntent(MainActivity.this, null);
                startActivityForResult(intent, REQUEST_TAKE_PHOTO);
            }
        });
    }

    @Override
    protected void onActivityResult(int requestCode, int resultCode, Intent data) {
        super.onActivityResult(requestCode, resultCode, data);
        if (requestCode == REQUEST_TAKE_PHOTO && resultCode == RESULT_OK && data != null) {
            byte[] photo = data.getByteArrayExtra("photo");
            if (photo != null) {
                Bitmap bitmap = BitmapFactory.decodeByteArray(photo, 0, photo.length);
                imageView.setImageBitmap(bitmap);
            }
        }
    }
}
```

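Note:

For black and white, both the ColorMatrix and the GL shader write the same weighted sum of R, G and B into all three color channels, so the three RGB rows must be identical. Keeping each row's weights summing to 1 makes white stay white and preserves overall brightness. A row sum of 1 on its own is not enough: the identity matrix also has rows that sum to 1 and leaves the colors unchanged. Beyond that, the matrix can be changed freely to produce other effects.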
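`android.graphics.ColorMatrix` is a 4×5 matrix applied to every pixel: each output channel is a·R + b·G + c·B + d·A + e, with the channels and the fifth (offset) column on a 0 to 255 scale, and the result clamped to 0 to 255. The plain-Java sketch below applies such a matrix to one RGBA pixel so the rule above can be checked. The grayscale matrix (the one from `CameraV2`) maps pure red to (54, 54, 54) and keeps white at 255; the identity matrix, whose rows also sum to 1, leaves red unchanged; and the negative matrix is an illustrative example of the film-negative effect mentioned at the start. `ColorMatrixMath` is a hypothetical helper, not an Android class.

```java
final class ColorMatrixMath {
    private ColorMatrixMath() {}

    /** Grayscale matrix used in CameraV2: identical RGB rows, each summing to 1. */
    static final float[] GRAY = {
            0.213f, 0.715f, 0.072f, 0, 0,
            0.213f, 0.715f, 0.072f, 0, 0,
            0.213f, 0.715f, 0.072f, 0, 0,
            0,      0,      0,      1, 0
    };

    /** Negative: R' = 255 - R (same for G and B); alpha unchanged. */
    static final float[] NEGATIVE = {
            -1,  0,  0, 0, 255,
             0, -1,  0, 0, 255,
             0,  0, -1, 0, 255,
             0,  0,  0, 1, 0
    };

    /** Applies a row-major 4x5 matrix to {r, g, b, a} (0..255), rounding and clamping the result. */
    static int[] apply(float[] m, int[] rgba) {
        int[] out = new int[4];
        for (int row = 0; row < 4; row++) {
            int base = row * 5;
            float v = m[base] * rgba[0] + m[base + 1] * rgba[1]
                    + m[base + 2] * rgba[2] + m[base + 3] * rgba[3] + m[base + 4];
            out[row] = Math.max(0, Math.min(255, Math.round(v)));
        }
        return out;
    }
}
```

On the device the same matrix is simply wrapped in a `ColorMatrixColorFilter` and set on a `Paint`, as in the commented-out code in `CameraV2`.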