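(Overview) A complete TensorFlow code framework for data input, training, and prediction with SE-ResNet (SENet, ResNet, ResNeXt, VGG16), reaching 90% accuracy on CIFAR-10

// The code in the earlier version of this post had problems, so I am reposting it.

This post is a cleanup: I want to give a complete code implementation of ResNet.

It does not explain the ideas behind ResNet or DenseNet in detail; it only provides a rough implementation, the reasoning behind how the code is written, and a few small experiments of my own.

ResNeXt and SENet are covered as well. SE-ResNeXt was the winning model of the last ImageNet competition (ILSVRC 2017).

That said, my implementation may be poor and the hyperparameters are not carefully tuned, so the accuracy gains are not very pronounced.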

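First, data processing. I have implemented several pipelines myself:

A multithreaded data loading and processing pipeline (tf.data)

An older multithreaded loading and processing pipeline based on queues

The plain single-threaded reader (used the same way as the built-in MNIST reader)

Here I use the multithreaded tf.data pipeline.

The original CIFAR-10 dataset is distributed as Python-pickled batches.

For the image files, see my other post on extracting the CIFAR-10 images.

The main reason for extracting them is to build TFRecord files, which makes data loading very fast (it is TensorFlow's own format, after all, so it is well optimized).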

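I have already trained VGG and AlexNet before, so I am not rerunning them here; with moving-average smoothing their accuracy was already above 85%.

My own AlexNet and VGG16

(Both embed SENet and drop the fully connected layers. You could even drop pooling as well and replace it with convolutions of stride 2x2, which makes the network look like a fully convolutional network, FCN.)

I replace the fully connected layers with global average pooling plus 1x1 convolutions, and also add BN, SENet, and other structures that appeared later.

Accuracy reaches above 85%.

Deeper networks (VGG19) are hard to get to converge without BN; when I ran one on a Kaggle task, accuracy was still at 50% after 10 epochs.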
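To make the effect of replacing fully connected layers with global average pooling plus 1x1 convolutions more concrete, here is a minimal sketch that compares the weight counts of the two kinds of classifier head. The 4x4x512 feature map and the hidden width of 512 are illustrative assumptions, not values measured from the networks in this post.

```python
def dense_head_params(height, width, channels, hidden, classes):
    """Flatten -> FC(hidden) -> FC(classes), counting weights and biases."""
    flat = height * width * channels
    return (flat * hidden + hidden) + (hidden * classes + classes)


def gap_conv_head_params(channels, hidden, classes):
    """Global average pooling -> 1x1 conv(hidden) -> 1x1 conv(classes).

    After pooling the map is 1x1xC, so each 1x1 convolution has the same
    weight shape as a dense layer applied to a C-dimensional vector.
    """
    return (channels * hidden + hidden) + (hidden * classes + classes)


dense = dense_head_params(4, 4, 512, 512, 10)
gap = gap_conv_head_params(512, 512, 10)
print("flatten + FC head :", dense)
print("GAP + 1x1 conv head:", gap)
```

Under these assumptions the pooled head no longer depends on the spatial size of the feature map, which is also why the same head works for different input resolutions.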

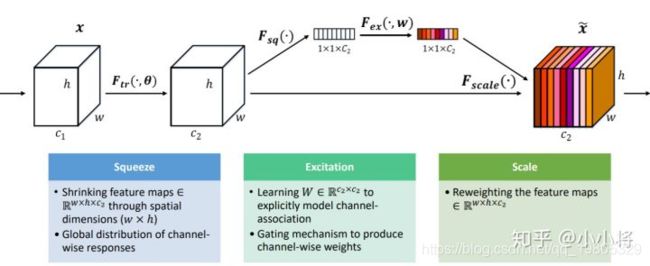

Click here to learn about SENet

Click here to learn about ResNeXt

SENet feels like a kind of attention mechanism.
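The attention-like behaviour can be seen in a small, framework-free sketch of a squeeze-and-excitation gate: pool each channel to one number, pass the result through a small bottleneck, and use the sigmoid output to rescale the channels. The function name, the nested-list layout, and the toy weights are assumptions made for illustration; biases and batch normalization are left out.

```python
import math


def squeeze_excite(feature_map, w1, w2):
    """Apply an SE-style channel gate to an H x W x C nested list.

    w1 has shape [C][C_reduced] and w2 has shape [C_reduced][C].
    """
    height, width = len(feature_map), len(feature_map[0])
    channels = len(feature_map[0][0])
    # Squeeze: global average pooling gives one number per channel.
    squeeze = [
        sum(feature_map[i][j][c] for i in range(height) for j in range(width))
        / (height * width)
        for c in range(channels)
    ]
    # Excitation, step 1: bottleneck projection followed by ReLU.
    hidden = [
        max(0.0, sum(squeeze[c] * w1[c][r] for c in range(channels)))
        for r in range(len(w1[0]))
    ]
    # Excitation, step 2: expand back to C channels and squash with a sigmoid.
    gate = [
        1.0 / (1.0 + math.exp(-sum(hidden[r] * w2[r][c] for r in range(len(hidden)))))
        for c in range(channels)
    ]
    # Scale: every spatial position of channel c is multiplied by gate[c].
    scaled = [
        [[feature_map[i][j][c] * gate[c] for c in range(channels)] for j in range(width)]
        for i in range(height)
    ]
    return gate, scaled


fmap = [[[1.0, 2.0], [3.0, 4.0]],
        [[5.0, 6.0], [7.0, 8.0]]]  # 2 x 2 spatial positions, 2 channels
w1 = [[0.5], [0.25]]               # 2 channels -> 1 hidden unit
w2 = [[0.0, 1.0]]                  # 1 hidden unit -> 2 channels
gate, scaled = squeeze_excite(fmap, w1, w2)
print("per-channel gate:", gate)
```

The gate is one weight per channel shared by all spatial positions, so the block decides which channels to emphasize rather than which pixels.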

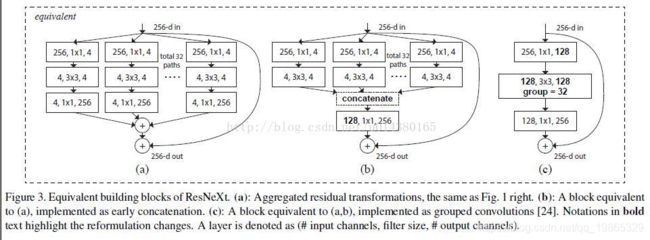

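ResNet and ResNeXt do not differ much: the convolution is simply split into several paths, as in Inception.

The difference is that every path has the same structure.

There are three equivalent implementations; I use structure (c) here.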
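Assuming structure (c) refers to the grouped-convolution form, where the input channels are split into equal groups and each group gets its own smaller convolution, the saving in weights is easy to work out. The sketch below is plain arithmetic with a hypothetical helper name; the 128-channel layer and cardinality 8 are example values.

```python
def conv_params(in_channels, out_channels, kernel=3, groups=1):
    """Weight count of a k x k convolution split into `groups` parallel paths."""
    assert in_channels % groups == 0 and out_channels % groups == 0
    per_path = kernel * kernel * (in_channels // groups) * (out_channels // groups)
    return per_path * groups


plain = conv_params(128, 128)              # ordinary 3x3 convolution
grouped = conv_params(128, 128, groups=8)  # same layer with cardinality 8
print(plain, grouped, plain // grouped)
```

With the channel counts held fixed, splitting into g paths divides the weight count of that layer by g, which is what lets ResNeXt trade the saving for wider layers.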

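The learning rate uses a warmup strategy, so it can be set fairly high (with ResNet50 I could set it to 0.036 and training still mostly worked, although there was some chance of it collapsing midway).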

Some of the network's hyperparameters:

Optimizer: MomentumOptimizer(SGDM)

conv_initializer:xavier (the default kernel_initializer of tf.layers.conv2d; in my tests it worked better than a truncated normal distribution with stddev=0.01)

momentum:0.9

warmup_step:batch_num*10

learning_rate_base:0.012

learning_rate_decay:0.96

learning_rate_step:batch_num

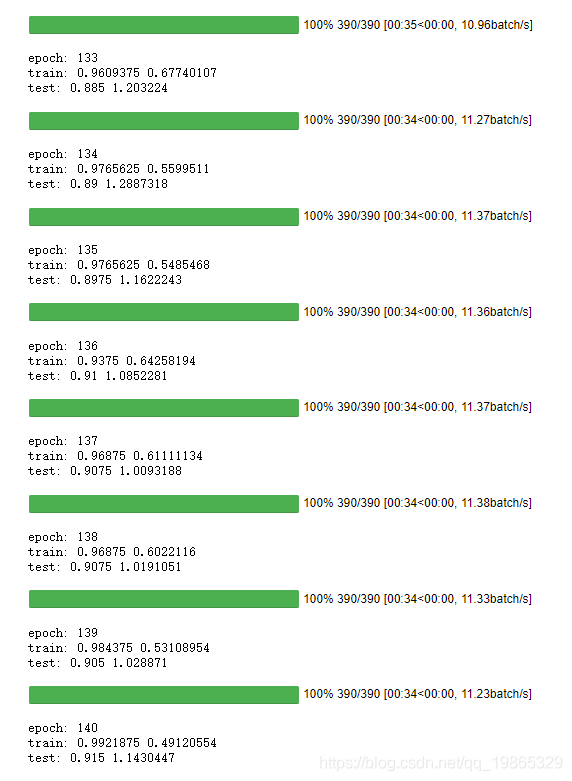

train_epochs:140

batch_size:128

batch_num:50000/batch_size

SE_rate:32

cardinality:8

regularizer_rate:0.008

drop_rate:0.3

exponential_moving_average_decay:0.999
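As a rough illustration of how the warmup and decay settings in the list above combine, here is a pure-Python sketch of the schedule: a linear ramp from 0 to learning_rate_base over warmup_step steps, followed by exponential decay once per learning_rate_step. The function name is hypothetical, and batch_num is rounded down to an integer, which is an assumption.

```python
def warmup_exponential_lr(step, base=0.012, warmup_step=3900,
                          decay_step=390, decay_rate=0.96):
    """Linear warmup to `base`, then continuous exponential decay."""
    if step <= warmup_step:
        return base * step / warmup_step
    return base * decay_rate ** ((step - warmup_step) / decay_step)


batch_num = 50000 // 128  # 390 steps per epoch with batch_size=128
for epoch in (0, 5, 10, 11, 50, 140):
    step = epoch * batch_num
    print("epoch %3d  lr %.6f" % (epoch, warmup_exponential_lr(step)))
```

The rate peaks at the end of epoch 10 and then shrinks by 4% per epoch, so the large values are only in effect for a short window after warmup.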

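Although CIFAR-10 images are only 32x32, they are resized to 64x64 or 128x128 before being fed into the network (the difference in test accuracy is within 1% and can be ignored).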
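One way to see why the images are enlarged: the ResNet definition later in this post applies one stride-2 convolution and five stride-2 max-pooling layers, all with SAME padding, so the side length is halved (rounding up) six times. The sketch below only does that arithmetic; the helper name is hypothetical.

```python
import math


def spatial_sizes(input_size, num_stride2_layers=6):
    """Side length after each stride-2 layer with SAME padding: ceil(n / 2)."""
    sizes = [input_size]
    for _ in range(num_stride2_layers):
        sizes.append(math.ceil(sizes[-1] / 2))
    return sizes


for size in (32, 64, 128):
    print(size, spatial_sizes(size))
```

At the native 32x32 resolution the feature map is already 1x1 before the last pooling layer, whereas 64x64 and 128x128 inputs keep some spatial extent through most of the network.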

Full code:

ResNet50/ResNeXt/SE-AlexNet

Data processing:

import tensorflow as tf

import numpy as np

import matplotlib.pyplot as plt

from time import time

from PIL import Image

from sklearn.utils import shuffle

from tqdm import tqdm_notebook as tqdm

import os

data_path="C:\\Users\\Shijunfeng\\tensorflow_gpu\\dataseats\\cifar10\\Image"

mode_path="C:\\Users\\Shijunfeng\\tensorflow_gpu\\dataseats\\cifar10\\Image\\TFMode"

tf.reset_default_graph()

def imshows(classes,images,labels,index,amount,predictions=None):
    #classes: array of class names
    #images: array of images
    #labels: array of one-hot labels
    #index, amount: show `amount` images starting at position `index`
    #predictions: predicted class scores (optional)
    fig=plt.gcf()
    fig.set_size_inches(10,20)#adjust the size as needed
    for i in range(amount):
        title="lab:"+classes[np.argmax(labels[index+i])]
        if predictions is not None:
            title=title+" prd:"+classes[np.argmax(predictions[index+i])]
        ax=plt.subplot(5,6,i+1)#grid of 5 rows by 6 columns
        ax.set_title(title)
        ax.imshow(images[index+i])
    plt.show()

class DatasetReader(object):
    def __init__(self,data_path,image_size=None):
        self.data_path=data_path
        #[height,width,channels]; copied so that the caller's list is not modified
        self.img_size=list(image_size)+[3]
        self.train_path=os.path.join(data_path,"train")
        self.test_path=os.path.join(data_path,"test")
        self.TF_path=os.path.join(data_path,"TFRecordData")
        self.tf_train_path=os.path.join(self.TF_path,"train")
        self.tf_test_path=os.path.join(self.TF_path,"test")
        self.classes=os.listdir(self.train_path)
        self.__Makedirs()
        self.train_batch_initializer=None
        self.test_batch_initializer=None
        self.__CreateTFRecord(self.train_path,self.tf_train_path)
        self.__CreateTFRecord(self.test_path,self.tf_test_path)

    def __CreateTFRecord(self,read_path,save_path):
        path=os.path.join(save_path,"data.TFRecord")
        if os.path.exists(path):
            print("find file "+path)
            return
        else:
            print("cannot find file %s,ready to recreate"%path)
        writer=tf.python_io.TFRecordWriter(path=path)
        image_path=[]
        image_label=[]
        #images are stored 1.3x larger than the network input so that a random crop can be taken later
        image_size=[int(self.img_size[0]*1.3),int(self.img_size[1]*1.3)]
        for label,class_name in enumerate(self.classes):
            class_path=os.path.join(read_path,class_name)
            for image_name in os.listdir(class_path):
                image_path.append(os.path.join(class_path,image_name))
                image_label.append(label)
        for i in range(5):image_path,image_label=shuffle(image_path,image_label)
        for i in tqdm(range(len(image_path)),desc="TFRecord"):
            image,label=Image.open(image_path[i]).resize(image_size,Image.ANTIALIAS),image_label[i]
            image=image.convert("RGB")
            image=image.tobytes()
            example=tf.train.Example(features=tf.train.Features(feature={
                "label":tf.train.Feature(int64_list=tf.train.Int64List(value=[label])),
                "image":tf.train.Feature(bytes_list=tf.train.BytesList(value=[image]))
            }))
            writer.write(example.SerializeToString())
        writer.close()

    def __Makedirs(self):
        if not os.path.exists(self.TF_path):
            os.makedirs(self.TF_path)
        if not os.path.exists(self.tf_train_path):
            os.makedirs(self.tf_train_path)
        if not os.path.exists(self.tf_test_path):
            os.makedirs(self.tf_test_path)

    def __parsed(self,tensor):
        raw_image_size=[int(self.img_size[0]*1.3),int(self.img_size[1]*1.3),3]
        feature=tf.parse_single_example(tensor,features={
            "image":tf.FixedLenFeature([],tf.string),
            "label":tf.FixedLenFeature([],tf.int64)
        })
        image=tf.decode_raw(feature["image"],tf.uint8)
        image=tf.reshape(image,raw_image_size)
        image=tf.random_crop(image,self.img_size)
        image=tf.image.per_image_standardization(image)
        label=tf.cast(feature["label"],tf.int32)
        label=tf.one_hot(label,len(self.classes))
        return image,label

    def __parsed_distorted(self,tensor):
        raw_image_size=[int(self.img_size[0]*1.3),int(self.img_size[1]*1.3),3]
        feature=tf.parse_single_example(tensor,features={
            "image":tf.FixedLenFeature([],tf.string),
            "label":tf.FixedLenFeature([],tf.int64)
        })
        image=tf.decode_raw(feature["image"],tf.uint8)
        image=tf.reshape(image,raw_image_size)
        image=tf.random_crop(image,self.img_size)
        image=tf.image.random_flip_left_right(image)
        image=tf.image.random_flip_up_down(image)
        image=tf.image.random_brightness(image,max_delta=0.4)
        image=tf.image.random_hue(image,max_delta=0.4)
        image=tf.image.random_contrast(image,lower=0.7,upper=1.4)
        image=tf.image.random_saturation(image,lower=0.7,upper=1.4)
        image=tf.image.per_image_standardization(image)
        label=tf.cast(feature["label"],tf.int32)
        label=tf.one_hot(label,len(self.classes))
        return image,label

    def __GetBatchIterator(self,path,parsed,batch_size):
        filename=[os.path.join(path,name)for name in os.listdir(path)]
        dataset=tf.data.TFRecordDataset(filename)
        dataset=dataset.prefetch(tf.contrib.data.AUTOTUNE)
        dataset=dataset.apply(tf.data.experimental.shuffle_and_repeat(buffer_size=10000,count=None,seed=99997))
        dataset=dataset.apply(tf.data.experimental.map_and_batch(parsed,batch_size))
        dataset=dataset.apply(tf.data.experimental.prefetch_to_device("/gpu:0"))
        iterator=dataset.make_initializable_iterator()
        return iterator.initializer,iterator.get_next()

    def __detail(self,path):
        Max=-1e9
        Min=1e9
        print("dataset:",path)#called for both the train and the test directory
        path=[os.path.join(path,name)for name in self.classes]
        for i in range(len(self.classes)):
            num=len(os.listdir(path[i]))
            print("%-12s:%3d"%(self.classes[i],num))
            Max=max(Max,num)
            Min=min(Min,num)
        print("max:%d min:%d"%(Max,Min))
    def detail(self):
        print("class num:",len(self.classes))
        self.__detail(self.train_path)
        self.__detail(self.test_path)

    def global_variables_initializer(self):
        initializer=[]
        initializer.append(self.train_batch_initializer)
        initializer.append(self.test_batch_initializer)
        initializer.append(tf.global_variables_initializer())
        return initializer
    def test_batch(self,batch_size):
        self.test_batch_initializer,batch=self.__GetBatchIterator(self.tf_test_path,self.__parsed,batch_size)
        return batch
    def train_batch(self,batch_size):
        self.train_batch_initializer,batch=self.__GetBatchIterator(self.tf_train_path,self.__parsed_distorted,batch_size)
        return batch
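Both parsing functions finish with tf.image.per_image_standardization. As a rough illustration of what that step computes, here is a pure-Python sketch on a flat list of pixel values: subtract the image mean and divide by the standard deviation, with the deviation floored at 1/sqrt(N) so that a uniform image does not divide by zero. The helper below is a stand-in written for this explanation, not the TensorFlow implementation.

```python
import math


def per_image_standardization(pixels):
    """Scale a flat list of pixel values to zero mean and unit variance."""
    n = len(pixels)
    mean = sum(pixels) / n
    variance = sum((p - mean) ** 2 for p in pixels) / n
    # Floor the standard deviation so that constant images stay finite.
    adjusted_std = max(math.sqrt(variance), 1.0 / math.sqrt(n))
    return [(p - mean) / adjusted_std for p in pixels]


print(per_image_standardization([0, 0, 255, 255]))
print(per_image_standardization([7, 7, 7, 7]))
```

Because the statistics are computed per image, the brightness and contrast distortions applied during training shift the input less than they would on raw pixel values.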

Network definition:

#tf.reset_default_graph()
def warmup_exponential_decay(warmup_step,rate_base,global_step,decay_step,decay_rate,staircase=False):
    #linear warmup from 0 to rate_base over warmup_step steps, then exponential decay
    linear_increase=rate_base*tf.cast(global_step/warmup_step,tf.float32)
    exponential_decay=tf.train.exponential_decay(rate_base,global_step-warmup_step,decay_step,decay_rate,staircase)
    return tf.cond(global_step<=warmup_step,lambda:linear_increase,lambda:exponential_decay)

class ConvNet(object):
    def __init__(self,training=False,regularizer_rate=0.0,drop_rate=0.0,SE_rate=32,cardinality=8,average_class=None):
        self.training=training
        self.SE_rate=SE_rate
        self.cardinality=cardinality
        self.drop_rate=drop_rate
        if regularizer_rate!=0.0:self.regularizer=tf.contrib.layers.l2_regularizer(regularizer_rate)
        else:self.regularizer=None
        if average_class is not None:self.ema=average_class.average
        else:self.ema=None

    def Xavier(self):
        #the initializer has to be returned, otherwise get_variable receives None
        return tf.contrib.layers.xavier_initializer_conv2d()
    def BatchNorm(self,x,name="BatchNorm"):
        return tf.layers.batch_normalization(x,training=self.training,reuse=tf.AUTO_REUSE)
    def Dropout(self,x):
        return tf.layers.dropout(x,rate=self.drop_rate,training=self.training)

    def Conv2d(self,x,filters,ksize,strides=[1,1],padding="SAME",activation=tf.nn.relu,use_bn=True,dilation=[1,1],name="conv"):
        #Conv BN Relu
        with tf.variable_scope(name,reuse=tf.AUTO_REUSE):
            input_channel=x.shape.as_list()[-1]
            kernel=tf.get_variable("kernel",ksize+[input_channel,filters],initializer=self.Xavier())
            bias=tf.get_variable("bias",[filters],initializer=tf.constant_initializer(0.0))
            if self.ema is not None and self.training==False: #use the moving averages at inference time
                kernel,bias=self.ema(kernel),self.ema(bias)
            conv=tf.nn.conv2d(x,kernel,strides=[1]+strides+[1],padding=padding,dilations=[1]+dilation+[1],name=name)
            bias_add=tf.nn.bias_add(conv,bias)
            if use_bn:bias_add=self.BatchNorm(bias_add) #BN
            if activation is not None:bias_add=activation(bias_add) #Relu
            if self.regularizer is not None:tf.add_to_collection("losses",self.regularizer(kernel))
            return bias_add

    def CardConv2d(self,x,filters,ksize,strides=[1,1],padding="SAME",activation=tf.nn.relu,use_bn=True,dilation=[1,1],name="cardconv"):
        '''Multi-path (grouped) convolution layer of ResNeXt'''
        with tf.variable_scope(name,reuse=tf.AUTO_REUSE):
            split=tf.split(x,num_or_size_splits=self.cardinality,axis=-1)
            filters=int(filters/self.cardinality)
            for i in range(len(split)):
                #each path convolves its own slice of the input and gets its own variable scope,
                #so the paths do not share weights
                split[i]=self.Conv2d(split[i],filters,ksize,strides,padding,activation,use_bn,dilation,name="path_%d"%i)
            merge=tf.concat(split,axis=-1)
            return merge

    def MaxPool(self,x,ksize,strides=[1,1],padding="SAME",name="max_pool"):
        return tf.nn.max_pool(x,ksize=[1]+ksize+[1],strides=[1]+strides+[1],padding=padding,name=name)
    def GlobalAvgPool(self,x,name="GAP"):
        ksize=[1]+x.shape.as_list()[1:-1]+[1]
        return tf.nn.avg_pool(x,ksize=ksize,strides=[1,1,1,1],padding="VALID",name=name)
    def FC_conv(self,x,dim,activation=tf.nn.relu,use_bn=True,name="conv_1x1"):
        '''1x1 convolution used in place of a fully connected layer'''
        return self.Conv2d(x,dim,[1,1],activation=activation,use_bn=use_bn,name=name)

    def SENet(self,x,name="SENet"):
        with tf.variable_scope(name,reuse=tf.AUTO_REUSE):
            channel=x.shape.as_list()[-1]
            squeeze=self.GlobalAvgPool(x,name="squeeze")
            #the excitation branch works on the pooled 1x1xC descriptor, not on the full feature map
            excitation=self.FC_conv(squeeze,int(channel/self.SE_rate),name="excitation_1")
            excitation=self.FC_conv(excitation,channel,activation=tf.nn.sigmoid,name="excitation_2")
            return excitation*x

    def Residual(self,x,channels,name="Residual"):
        #reuse must be passed by keyword; the second positional argument of tf.variable_scope is default_name
        with tf.variable_scope(name,reuse=tf.AUTO_REUSE):
            res=self.Conv2d(x,channels[0],[1,1],name="conv_1")
            res=self.Conv2d(res,channels[1],[3,3],name="conv_2")
            res=self.Conv2d(res,channels[2],[1,1],activation=None,use_bn=True,name="conv_3")
            res=self.SENet(res)
            if x.shape.as_list()[-1]!=channels[2]:
                x=self.Conv2d(x,channels[2],[1,1],activation=None,use_bn=False,name="conv_linear")
            return tf.nn.relu(res+x)

    def ResidualX(self,x,channels,name="Residual"):
        #reuse must be passed by keyword; the second positional argument of tf.variable_scope is default_name
        with tf.variable_scope(name,reuse=tf.AUTO_REUSE):
            res=self.Conv2d(x,channels[0],[1,1],name="conv_1")
            res=self.CardConv2d(res,channels[1],[3,3],name="conv_2")
            res=self.Conv2d(res,channels[2],[1,1],activation=None,use_bn=True,name="conv_3")
            res=self.SENet(res)
            if x.shape.as_list()[-1]!=channels[2]:
                x=self.Conv2d(x,channels[2],[1,1],activation=None,use_bn=False,name="conv_linear")
            return tf.nn.relu(res+x)

    def ResNet(self,x):
        x=self.Conv2d(x,64,[7,7],[2,2],name="conv1")
        x=self.MaxPool(x,[3,3],[2,2],name="pool1")
        x=self.Residual(x,[64,64,128],name="Residual1_1")
        x=self.Residual(x,[64,64,128],name="Residual1_2")
        x=self.Residual(x,[64,64,128],name="Residual1_3")
        x=self.MaxPool(x,[3,3],[2,2],name="pool2")
        x=self.Residual(x,[128,128,256],name="Residual2_1")
        x=self.Residual(x,[128,128,256],name="Residual2_2")
        x=self.Residual(x,[128,128,256],name="Residual2_3")
        x=self.Residual(x,[128,128,256],name="Residual2_4")
        x=self.MaxPool(x,[3,3],[2,2],name="pool2")
        x=self.Residual(x,[256,256,512],name="Residual3_1")
        x=self.Residual(x,[256,256,512],name="Residual3_2")
        x=self.Residual(x,[256,256,512],name="Residual3_3")
        x=self.Residual(x,[256,256,512],name="Residual3_4")
        x=self.Residual(x,[256,256,512],name="Residual3_5")
        x=self.Residual(x,[256,256,512],name="Residual3_6")
        x=self.MaxPool(x,[3,3],[2,2],name="pool3")
        x=self.Residual(x,[512,512,1024],name="Residual4_1")
        x=self.Residual(x,[512,512,1024],name="Residual4_2")
        x=self.Residual(x,[512,512,1024],name="Residual4_3")
        x=self.Residual(x,[512,512,1024],name="Residual4_4")
        x=self.MaxPool(x,[3,3],[2,2],name="pool3")
        x=self.GlobalAvgPool(x,name="GAP")
        x=self.Dropout(x)
        x=self.FC_conv(x,512,name="FC1")
        x=self.Dropout(x)
        x=self.FC_conv(x,10,activation=None,use_bn=False,name="FC2")
        return tf.reshape(x,[-1,10])

    def ResNeXt(self,x):
        x=self.Conv2d(x,64,[7,7],[2,2],name="conv1")
        x=self.MaxPool(x,[3,3],[2,2],name="pool1")
        x=self.ResidualX(x,[64,64,128],name="Residual1_1")
        x=self.ResidualX(x,[64,64,128],name="Residual1_2")
        x=self.ResidualX(x,[64,64,128],name="Residual1_3")
        x=self.MaxPool(x,[3,3],[2,2],name="pool2")
        x=self.ResidualX(x,[128,128,256],name="Residual2_1")
        x=self.ResidualX(x,[128,128,256],name="Residual2_2")
        x=self.ResidualX(x,[128,128,256],name="Residual2_3")
        x=self.ResidualX(x,[128,128,256],name="Residual2_4")
        x=self.MaxPool(x,[3,3],[2,2],name="pool2")
        x=self.ResidualX(x,[256,256,512],name="Residual3_1")
        x=self.ResidualX(x,[256,256,512],name="Residual3_2")
        x=self.ResidualX(x,[256,256,512],name="Residual3_3")
        x=self.ResidualX(x,[256,256,512],name="Residual3_4")
        x=self.ResidualX(x,[256,256,512],name="Residual3_5")
        x=self.ResidualX(x,[256,256,512],name="Residual3_6")
        x=self.MaxPool(x,[3,3],[2,2],name="pool3")
        x=self.ResidualX(x,[512,512,1024],name="Residual4_1")
        x=self.ResidualX(x,[512,512,1024],name="Residual4_2")
        x=self.ResidualX(x,[512,512,1024],name="Residual4_3")
        x=self.ResidualX(x,[512,512,1024],name="Residual4_4")
        x=self.MaxPool(x,[3,3],[2,2],name="pool3")
        x=self.GlobalAvgPool(x,name="GAP")
        x=self.Dropout(x)
        x=self.FC_conv(x,512,name="FC1")
        x=self.Dropout(x)
        x=self.FC_conv(x,10,activation=None,use_bn=False,name="FC2")
        return tf.reshape(x,[-1,10])

    def VGG19(self,x):
        #uses the MaxPool, GlobalAvgPool and use_bn names defined in this class
        x=self.Conv2d(x,64,[3,3],[1,1],name="conv1_1")
        x=self.Conv2d(x,64,[3,3],[1,1],name="conv1_2")
        x=self.MaxPool(x,[3,3],[2,2],name="pool1")
        x=self.SENet(x,name="SE_1")
        x=self.Conv2d(x,128,[3,3],[1,1],name="conv2_1")
        x=self.Conv2d(x,128,[3,3],[1,1],name="conv2_2")
        x=self.MaxPool(x,[3,3],[2,2],name="pool2")
        x=self.SENet(x,name="SE_2")
        x=self.Conv2d(x,256,[3,3],[1,1],name="conv3_1")
        x=self.Conv2d(x,256,[3,3],[1,1],name="conv3_2")
        x=self.Conv2d(x,256,[3,3],[1,1],name="conv3_3")
        x=self.Conv2d(x,256,[3,3],[1,1],name="conv3_4")
        x=self.MaxPool(x,[3,3],[2,2],name="pool3")
        x=self.SENet(x,name="SE_3")
        x=self.Conv2d(x,256,[3,3],[1,1],name="conv4_1")
        x=self.Conv2d(x,256,[3,3],[1,1],name="conv4_2")
        x=self.Conv2d(x,256,[3,3],[1,1],name="conv4_3")
        x=self.Conv2d(x,256,[3,3],[1,1],name="conv4_4")
        x=self.MaxPool(x,[3,3],[2,2],name="pool4")
        x=self.SENet(x,name="SE_4")
        x=self.Conv2d(x,512,[3,3],[1,1],name="conv5_1")
        x=self.Conv2d(x,512,[3,3],[1,1],name="conv5_2")
        x=self.Conv2d(x,512,[3,3],[1,1],name="conv5_3")
        x=self.Conv2d(x,512,[3,3],[1,1],name="conv5_4")
        x=self.MaxPool(x,[3,3],[2,2],name="pool5")
        x=self.GlobalAvgPool(x,name="GAP")
        x=self.FC_conv(x,128,name="FC_1")
        x=self.Dropout(x)
        x=self.FC_conv(x,10,activation=None,use_bn=False,name="FC_2")
        return tf.reshape(x,[-1,10])

    def AlexNet(self,x):
        #tf.nn.conv2d and tf.nn.max_pool expect the padding string in upper case
        x=self.Conv2d(x,96,[11,11],[4,4],padding="VALID",name="conv_1")
        x=self.MaxPool(x,[3,3],[2,2],padding="VALID",name="pool_1")
        x=self.SENet(x,name="SE_1")
        x=self.Conv2d(x,256,[5,5],[2,2],name="conv_2")
        x=self.MaxPool(x,[3,3],[2,2],name="pool_2")
        x=self.SENet(x,name="SE_2")
        x=self.Conv2d(x,512,[3,3],[1,1],name="conv_3")
        x=self.SENet(x,name="SE_3")
        x=self.Conv2d(x,384,[3,3],[1,1],name="conv_4")
        x=self.SENet(x,name="SE_4")
        x=self.Conv2d(x,256,[3,3],[1,1],name="conv_5")
        x=self.MaxPool(x,[3,3],[2,2],name="pool_3")
        x=self.SENet(x,name="SE_5")
        x=self.GlobalAvgPool(x,name="GAP")
        x=self.SENet(x,name="SE_6")
        x=self.FC_conv(x,512,name="FC_1")
        x=self.Dropout(x)
        x=self.FC_conv(x,512,name="FC_2")
        x=self.Dropout(x)
        x=self.FC_conv(x,10,activation=None,use_bn=False,name="FC_3")
        return tf.reshape(x,[-1,10])

x=tf.placeholder(tf.float32,[None,128,128,3])

y=tf.placeholder(tf.float32,[None,10])

is_training=tf.placeholder(tf.bool)

global_step=tf.Variable(0,trainable=False)

train_epochs=160

batch_size=64

batch_num=int(50000/batch_size)

SE_rate=32

cardinality=8

regularizer_rate=0.01

drop_rate=0.5

#data input
data=DatasetReader(data_path,[128,128])
train_batch=data.train_batch(batch_size=batch_size)
test_batch=data.test_batch(batch_size=256)
#learning rate
warmup_step=batch_num*3
learning_rate_base=0.003
learning_rate_decay=0.97
learning_rate_step=batch_num
#call the schedule under the name it is defined with above
learning_rate=warmup_exponential_decay(warmup_step,learning_rate_base,global_step,learning_rate_step,learning_rate_decay)

#forward pass
forward=ConvNet(training=is_training,regularizer_rate=regularizer_rate,drop_rate=drop_rate,SE_rate=SE_rate,cardinality=cardinality).ResNeXt(x)

#moving average & prediction
ema_decay=0.999
ema=tf.train.ExponentialMovingAverage(ema_decay,global_step)
ema_op=ema.apply(tf.trainable_variables())
#pass the EMA object so that the inference network reads the averaged kernels and biases
ema_forward=ConvNet(SE_rate=SE_rate,cardinality=cardinality,average_class=ema).ResNeXt(x)
prediction=tf.nn.softmax(ema_forward)
correct_pred=tf.equal(tf.argmax(prediction,1),tf.argmax(y,1))
accuracy=tf.reduce_mean(tf.cast(correct_pred,tf.float32))
#optimizer
momentum=0.9
cross_entropy=tf.losses.softmax_cross_entropy(onehot_labels=y,logits=forward,label_smoothing=0.1)
l2_regularizer_loss=tf.add_n(tf.get_collection("losses"))#L2 regularization loss
loss_function=cross_entropy+l2_regularizer_loss
update_ops=tf.get_collection(tf.GraphKeys.UPDATE_OPS)
with tf.control_dependencies(update_ops):
    train_step=tf.train.MomentumOptimizer(learning_rate,momentum).minimize(loss_function,global_step=global_step)
#ema_op was never executed before; group it with the training step so the averages are updated every step
optimizer=tf.group(train_step,ema_op)

#directory where the model is saved
if not os.path.exists(mode_path):
    os.makedirs(mode_path)
saver=tf.train.Saver(tf.global_variables())
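The loss above passes label_smoothing=0.1 to tf.losses.softmax_cross_entropy. As a minimal sketch of what that changes, the snippet below builds the smoothed targets by hand (each one-hot entry y becomes y*(1-eps)+eps/K for K classes) and evaluates the cross entropy in pure Python. The function name and the example logits are assumptions for illustration only.

```python
import math


def smoothed_cross_entropy(logits, onehot, label_smoothing=0.1):
    """Softmax cross entropy against label-smoothed targets."""
    num_classes = len(onehot)
    # Smoothed target: y * (1 - eps) + eps / K
    targets = [y * (1.0 - label_smoothing) + label_smoothing / num_classes
               for y in onehot]
    # Numerically stable log-softmax.
    peak = max(logits)
    log_norm = peak + math.log(sum(math.exp(z - peak) for z in logits))
    log_probs = [z - log_norm for z in logits]
    return targets, -sum(t * lp for t, lp in zip(targets, log_probs))


onehot = [0.0] * 10
onehot[3] = 1.0
targets, loss = smoothed_cross_entropy([0.0] * 10, onehot)
print("true-class target:", targets[3], "other classes:", targets[0])
print("loss for uniform logits:", loss)
```

With ten classes the true class is trained toward 0.91 instead of 1.0, which discourages the very confident logits that tend to accompany overfitting.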

Training:

gpu_options = tf.GPUOptions(allow_growth=True)
loss_list,acc_list=[5.0],[0.0]
step,decay,acc,loss=0,0,0,0
maxacc,minloss=0.5,1e5
with tf.Session(config=tf.ConfigProto(gpu_options=gpu_options)) as sess:
    print("train start")
    sess.run(data.global_variables_initializer())
    for epoch in range(train_epochs):
        bar=tqdm(range(batch_num),unit=" step",desc="epoch %d:"%(epoch+1))
        bar.set_postfix({"acc:":acc,"loss:":loss})
        for batch in bar:
            images,labels=sess.run(train_batch)
            sess.run(optimizer,feed_dict={x:images,y:labels,is_training:True})
            step+=1
            if step%50==0:
                #evaluate on one test batch and keep a smoothed history of accuracy and loss
                decay=min(0.9,(step/50+1)/(step/50+10))
                images,labels=sess.run(test_batch)
                acc,loss=sess.run([accuracy,loss_function],feed_dict={x:images,y:labels,is_training:False})
                acc_list.append(decay*acc_list[-1]+(1-decay)*acc)
                loss_list.append(decay*loss_list[-1]+(1-decay)*loss)
                bar.set_postfix({"acc:":acc,"loss:":loss})
        #assumption: the save call is missing from the listing; save whenever the smoothed accuracy improves
        #("model.ckpt" is a placeholder file name)
        if acc_list[-1]>maxacc:
            maxacc=acc_list[-1]
            saver.save(sess,os.path.join(mode_path,"model.ckpt"))
            print("update model with acc:%.3f%%"%(acc_list[-1]*100))
        #pred=sess.run(prediction,feed_dict={x:images,is_training:False})
        #print(pred)
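The accuracy and loss histories in the loop above are smoothed with a decay that ramps up as (k+1)/(k+10), where k counts evaluations, and is capped at 0.9. This is the same kind of ramp that tf.train.ExponentialMovingAverage applies when it is given a step counter. A small stand-alone sketch, with a hypothetical function name and made-up accuracy values:

```python
def smooth_series(values, max_decay=0.9, initial=0.0):
    """Running average whose decay ramps up as (k + 1) / (k + 10)."""
    smoothed = [initial]
    for k, value in enumerate(values, start=1):
        decay = min(max_decay, (k + 1) / (k + 10))
        smoothed.append(decay * smoothed[-1] + (1 - decay) * value)
    return smoothed


raw_acc = [0.30, 0.45, 0.50, 0.62, 0.58, 0.70]  # illustrative values only
print([round(v, 4) for v in smooth_series(raw_acc)])
```

The small early decay lets the curve leave its arbitrary starting value quickly, while the cap of 0.9 keeps later points from being dominated by a single noisy test batch.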

Visualizing the loss and accuracy curves:

plt.plot(acc_list)

plt.legend(["ResNet"],loc="lower right")

plt.show()

plt.plot(loss_list)

plt.legend(["ResNet"],loc="upper right")

np.save("ResNet_acc.npy",acc_list)

np.save("ResNet_loss.npy",loss_list)

Using the model for prediction:

#raw_images and std_images both come from local image files: std_images is fed to the network, raw_images keeps the original pictures

image_path="C:\\Users\\Shijunfeng\\tensorflow_gpu\\dataseats\\kaggle\\Img"

image_list=[os.path.join(image_path,name)for name in os.listdir(image_path)]

def imshows(classes,images,labels,index,amount,predictions=None):#show images with their labels
    fig=plt.gcf()
    fig.set_size_inches(10,50)#adjust the size as needed
    for i in range(amount):
        title="lab:"+classes[np.argmax(labels[index+i])]
        if predictions is not None:
            title=title+" prd:"+classes[np.argmax(predictions[index+i])]
        ax=plt.subplot(15,2,i+1)#grid of 15 rows by 2 columns
        ax.set_title(title)
        ax.set_xticks([])
        ax.set_yticks([])
        ax.imshow(images[index+i])
    plt.show()

def decode(image):
    #tf.image functions need an array, so convert the PIL image to a uint8 array of shape [H,W,3]
    image=np.array(Image.open(image).convert("RGB"))
    image=tf.image.resize_images(image,[128,128],method=2)
    image=tf.image.per_image_standardization(image)
    return image
raw_images=[Image.open(image)for image in image_list]
std_images=[decode(image)for image in image_list]

gpu_options = tf.GPUOptions(allow_growth=True)
with tf.Session(config=tf.ConfigProto(gpu_options=gpu_options)) as sess: #load the model; the network definition above is needed here as well
    sess.run(data.global_variables_initializer())
    ckp_state=tf.train.get_checkpoint_state(mode_path)
    if ckp_state and ckp_state.model_checkpoint_path:
        saver.restore(sess,ckp_state.model_checkpoint_path)
    images=sess.run(std_images)
    labels=sess.run(prediction,feed_dict={x:images,is_training:False})
    imshows(data.classes,raw_images,labels,0,10) #show the first 10 original images with their predicted labels

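Accuracy on the training set gets close to 100% (the model overfits later on, and so far I have not solved the overfitting problem).

On the test set it reaches above 90% accuracy.

Total training time was about 81 minutes.

ResNet101

Just keep stacking residual blocks.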

    def ResNet(self,x):
        x=self.Conv2d(x,64,[7,7],[2,2],name="conv1")
        x=self.MaxPool(x,[3,3],[2,2],name="pool1")
        x=self.Residual(x,[64,64,256],name="Residual1_1")
        x=self.Residual(x,[64,64,256],name="Residual1_2")
        x=self.Residual(x,[64,64,256],name="Residual1_3")
        x=self.MaxPool(x,[3,3],[2,2],name="pool2")
        x=self.Residual(x,[128,128,512],name="Residual2_1")
        x=self.Residual(x,[128,128,512],name="Residual2_2")
        x=self.Residual(x,[128,128,512],name="Residual2_3")
        x=self.Residual(x,[128,128,512],name="Residual2_4")
        x=self.MaxPool(x,[3,3],[2,2],name="pool3")
        x=self.Residual(x,[256,256,1024],name="Residual3_1")
        x=self.Residual(x,[256,256,1024],name="Residual3_2")
        x=self.Residual(x,[256,256,1024],name="Residual3_3")
        x=self.Residual(x,[256,256,1024],name="Residual3_4")
        x=self.Residual(x,[256,256,1024],name="Residual3_5")
        x=self.Residual(x,[256,256,1024],name="Residual3_6")
        x=self.Residual(x,[256,256,1024],name="Residual3_7")
        x=self.Residual(x,[256,256,1024],name="Residual3_8")
        x=self.Residual(x,[256,256,1024],name="Residual3_9")
        x=self.Residual(x,[256,256,1024],name="Residual3_10")
        x=self.Residual(x,[256,256,1024],name="Residual3_11")
        x=self.Residual(x,[256,256,1024],name="Residual3_12")
        x=self.Residual(x,[256,256,1024],name="Residual3_13")
        x=self.Residual(x,[256,256,1024],name="Residual3_14")
        x=self.Residual(x,[256,256,1024],name="Residual3_15")
        x=self.Residual(x,[256,256,1024],name="Residual3_16")
        x=self.Residual(x,[256,256,1024],name="Residual3_17")
        x=self.Residual(x,[256,256,1024],name="Residual3_18")
        x=self.Residual(x,[256,256,1024],name="Residual3_19")
        x=self.Residual(x,[256,256,1024],name="Residual3_20")
        x=self.Residual(x,[256,256,1024],name="Residual3_21")
        x=self.Residual(x,[256,256,1024],name="Residual3_22")
        x=self.Residual(x,[256,256,1024],name="Residual3_23")
        x=self.MaxPool(x,[3,3],[2,2],name="pool4")
        x=self.Residual(x,[512,512,2048],name="Residual4_1")
        x=self.Residual(x,[512,512,2048],name="Residual4_2")
        x=self.Residual(x,[512,512,2048],name="Residual4_3")
        x=self.Residual(x,[512,512,2048],name="Residual4_4")
        x=self.MaxPool(x,[3,3],[2,2],name="pool5")
        x=self.GlobalAvgPool(x,name="GAP")
        x=self.Dropout(x)
        x=self.FC_conv(x,784,name="FC1")
        x=self.Dropout(x)
        x=self.FC_conv(x,10,activation=None,use_bn=False,name="output")
        return tf.reshape(x,[-1,10])

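As you can see, the network's test accuracy stays stably above 90%.

Averaging over the last 20 epochs gives an accuracy of 90.945% (about 91%).

The highest accuracy was 94% (batch_size=256).

SE-ResNet101

Simply append the SENet substructure after the residual block.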

    def Residual(self,x,channels,name="Residual"):
        #reuse must be passed by keyword; the second positional argument of tf.variable_scope is default_name
        with tf.variable_scope(name,reuse=tf.AUTO_REUSE):
            res=self.Conv2d(x,channels[0],[1,1],name="conv_1")
            res=self.Conv2d(res,channels[1],[3,3],name="conv_2")
            res=self.Conv2d(res,channels[2],[1,1],activation=None,use_bn=True,name="conv_3")
            res=self.SENet(res)#SE gate applied to the residual branch before the addition
            if x.shape.as_list()[-1]!=channels[2]:
                x=self.Conv2d(x,channels[2],[1,1],activation=None,use_bn=False,name="conv_linear")
            return tf.nn.relu(res+x)

Results are not available yet; to be updated.