[AI] Machine Learning in Plain Language (Part 3): Logistic Regression

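Overview

The previous article covered linear regression, whose model is $y = w^T x + b$. There, the model's prediction approximates the true label $y$ directly. Could we instead make the prediction approximate some function derived from the true label, for example its logarithm? This question leads to logistic regression.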

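Transformation function

We need a monotonic, differentiable function that links the true label $y$ of a classification task to the prediction of the linear regression model; in other words, a transformation function that maps the linear model's output to the actual predicted value. Consider binary classification, where the output label is $y \in \{0, 1\}$ while the linear model produces a real-valued prediction $z = w^T x + b$. We need to convert this real value into a 0/1 value, and the ideal function for that is the unit-step function.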

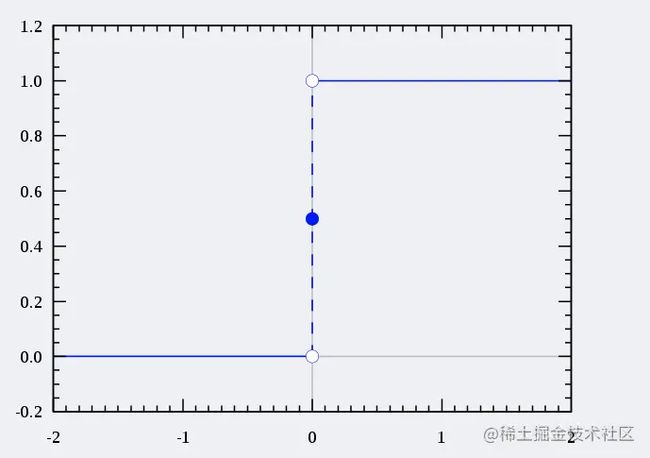

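Unit-step function

With the unit-step function, a prediction greater than zero is classified as a positive example, a prediction less than zero as a negative example, and a prediction of exactly zero can be classified either way, as shown in the figure below.

\begin{equation} y = \begin{cases} 0 & \text{if } z < 0 \\ 0.5 & \text{if } z = 0 \\ 1 & \text{if } z > 0 \end{cases} \end{equation}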

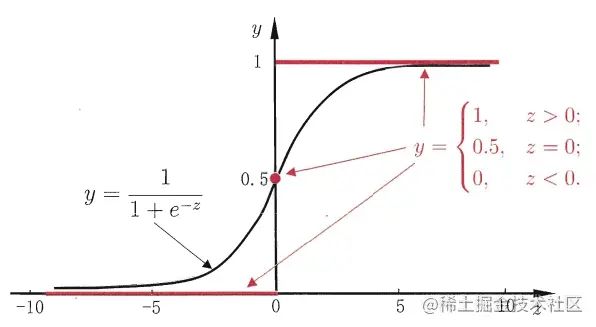

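Sigmoid function

As the figure shows, the unit-step function is discontinuous and therefore not differentiable, which makes it unsuitable for the computations that follow. We use the sigmoid function instead:

$$y = \dfrac{1}{1 + e^{-z}}$$

It maps $z$ to a value $y$ between 0 and 1 that is close to 0 or 1 for most inputs, and its output changes steeply near $z = 0$. Our model now becomes

$$y = \dfrac{1}{1 + e^{-(w^T x + b)}}$$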
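To make the contrast concrete, here is a minimal Python sketch that evaluates both functions at a few points. The helper names `unit_step` and `sigmoid` and the chosen values of `z` are illustrative assumptions, not part of the original article; the sigmoid is written in two branches only to avoid overflow for large negative `z`.

```python
import math


def unit_step(z):
    """Unit-step function: 0 for z < 0, 0.5 at z = 0, 1 for z > 0."""
    if z < 0:
        return 0.0
    if z > 0:
        return 1.0
    return 0.5


def sigmoid(z):
    """Logistic sigmoid 1 / (1 + e^{-z}), in an overflow-safe form."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)  # z < 0, so this cannot overflow
    return ez / (1.0 + ez)


# Illustrative inputs (assumed values, chosen around z = 0)
for z in (-5.0, -1.0, 0.0, 1.0, 5.0):
    print(f"z={z:+.1f}  step={unit_step(z):.1f}  sigmoid={sigmoid(z):.4f}")
```

Both functions agree at $z = 0$ and at large $|z|$, but the sigmoid passes smoothly through the values in between, so it has a derivative everywhere.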

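Odds and log-odds

Odds: if $y$ is taken as the probability that $x$ is a positive example and $1 - y$ as the probability that it is a negative example, the ratio $\dfrac{y}{1 - y}$ is called the odds; it reflects the relative likelihood that $x$ is a positive example. Solving the sigmoid function for $z$ gives

$$\ln\dfrac{y}{1 - y} = w^T x + b$$

$\ln\dfrac{y}{1 - y}$ is called the log-odds (logit).

**This shows that $y = \dfrac{1}{1 + e^{-(w^T x + b)}}$ uses the prediction of a linear model to approximate the log-odds of the true label, which is why the corresponding model is called "log-odds regression" (logistic regression).**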
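The relation between the sigmoid and the log-odds can be checked numerically. In the sketch below, the weights `w`, bias `b`, and sample `x` are made-up values used only for illustration; the point is that taking the log-odds of the sigmoid output recovers the linear score.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def log_odds(y):
    """ln(y / (1 - y)), defined for 0 < y < 1."""
    return math.log(y / (1.0 - y))


# Hypothetical parameters and sample (assumptions for illustration only)
w = [0.8, -0.5]
b = 0.3
x = [2.0, 1.0]

z = sum(wi * xi for wi, xi in zip(w, x)) + b  # linear score w^T x + b
y = sigmoid(z)                                # probability of the positive class
odds = y / (1.0 - y)

print(f"linear score z = {z:.6f}")
print(f"probability  y = {y:.6f}")
print(f"odds y/(1-y)   = {odds:.6f}")
print(f"log-odds       = {log_odds(y):.6f}")
```

The last printed value matches the linear score up to floating-point rounding, which is exactly the statement $\ln\dfrac{y}{1 - y} = w^T x + b$.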

Next we introduce the loss function and how to optimize it.

Loss function

Because $\ln\dfrac{y}{1 - y} = w^T x + b$, we have

$$p(y=1|x) = \dfrac{e^{w^T x + b}}{1 + e^{w^T x + b}}$$

$$p(y=0|x) = \dfrac{1}{1 + e^{w^T x + b}}$$

We estimate the parameters by maximum likelihood. Because this is a binary classification problem, each label follows a 0-1 (Bernoulli) distribution. Let $f(x) = p(y=1|x) = \dfrac{e^{w^T x + b}}{1 + e^{w^T x + b}}$, so that $p(y=0|x) = \dfrac{1}{1 + e^{w^T x + b}} = 1 - f(x)$. The likelihood function is then

$$L(w) = \prod_{i=1}^{n} [f(x_i)]^{y_i} [1 - f(x_i)]^{1 - y_i}$$

The log-likelihood function is:

$$
\begin{aligned}
l(w) = \ln L(w) &= \sum_{i=1}^{n} \left[ y_i \ln f(x_i) + (1 - y_i) \ln(1 - f(x_i)) \right] \\
&= \sum_{i=1}^{n} \left[ y_i \ln\dfrac{f(x_i)}{1 - f(x_i)} + \ln(1 - f(x_i)) \right] \\
&= \sum_{i=1}^{n} \left[ y_i\, w^T x_i - \ln(1 + e^{w^T x_i}) \right]
\end{aligned}
$$

In the last line the bias $b$ is absorbed into $w$ by appending a constant 1 to every $x_i$, so $w^T x_i$ stands for the full linear score.
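As a quick sanity check on the algebra, the sketch below evaluates the first and last forms of the log-likelihood on a tiny dataset and compares them. The data, weights, and function names are hypothetical; each sample carries a leading constant 1.0 so that the bias is part of `w`.

```python
import math


def dot(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))


def log_likelihood_prob_form(w, X, y):
    """sum_i [ y_i ln f(x_i) + (1 - y_i) ln(1 - f(x_i)) ]"""
    total = 0.0
    for xi, yi in zip(X, y):
        f = 1.0 / (1.0 + math.exp(-dot(w, xi)))
        total += yi * math.log(f) + (1 - yi) * math.log(1.0 - f)
    return total


def log_likelihood_linear_form(w, X, y):
    """sum_i [ y_i w.x_i - ln(1 + e^{w.x_i}) ]"""
    total = 0.0
    for xi, yi in zip(X, y):
        z = dot(w, xi)
        total += yi * z - math.log1p(math.exp(z))
    return total


# Hypothetical toy data: first column is the constant 1.0 (bias term)
X = [[1.0, 0.5, 2.0], [1.0, -1.2, 0.3], [1.0, 2.0, -0.7], [1.0, 0.1, 1.5]]
y = [1, 0, 1, 0]
w = [0.2, -0.4, 0.6]

ll_prob = log_likelihood_prob_form(w, X, y)
ll_lin = log_likelihood_linear_form(w, X, y)
print(ll_prob, ll_lin)
```

The two numbers agree up to rounding. The linear form is the one that is convenient to differentiate, since it no longer contains $f(x_i)$ explicitly.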

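We want the maximum of this function; adding a minus sign turns the problem into a minimization. Either gradient descent or Newton's method, both introduced in earlier articles, can solve it, so the details are not repeated here. Note that gradient descent on $-l(w)$ is the same procedure as gradient ascent on $l(w)$.

Hands-on code

Here we solve the problem with gradient ascent. First, let's define and analyze the problem: what information do we need?

- Variables that must be specified
  - Samples: features and class labels (positive and negative examples)
  - Initial values of the regression coefficients
  - The step size
  - The loss function (already determined above)
  - The gradient of the loss function
  - The update at each iteration, determined by the gradient and the step size
  - The stopping condition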

- Input data (fixing the variables above)
  - Sample information: features and class labels (taken from Machine Learning in Action; listed below).
  - All regression coefficients are initialized to 1.0.
  - For simplicity, the step size is fixed at alpha = 0.001.
  - Objective function: the log-likelihood derived above (its negative is the loss), with the bias $b$ absorbed into $w$:

    $$l(w) = \ln L(w) = \sum_{i=1}^{n} \left[ y_i\, w^T x_i - \ln(1 + e^{w^T x_i}) \right]$$

  - Gradient of the objective:

    $$\frac{\partial\, l(w)}{\partial w} = \sum_{i=1}^{n} \left[ y_i x_i - \dfrac{e^{w^T x_i}}{1 + e^{w^T x_i}} x_i \right] = \sum_{i=1}^{n} x_i \left( y_i - \dfrac{e^{w^T x_i}}{1 + e^{w^T x_i}} \right)$$

    $$\frac{\partial\, l(w)}{\partial w} = \sum_{i=1}^{n} x_i \left( y_i - \dfrac{1}{1 + e^{-w^T x_i}} \right)$$

  - Stopping condition: maxCycles = 500, i.e. 500 iterations.

- Code

```python
import numpy as np


def loadDataSet():
    """Read testSet.txt: each row is x1, x2, label, separated by whitespace."""
    dataMat = []
    labelMat = []
    with open('testSet.txt') as fr:
        for line in fr:
            lineArr = line.strip().split()
            if not lineArr:  # skip blank lines
                continue
            # Prepend a constant 1.0 so the bias b is absorbed into the weights
            dataMat.append([1.0, float(lineArr[0]), float(lineArr[1])])
            labelMat.append(int(lineArr[2]))
    return dataMat, labelMat


def sigmoid(inX):
    return 1.0 / (1 + np.exp(-inX))


def gradAscent(dataMatIn, classLabels):
    dataMatrix = np.asmatrix(dataMatIn)                # m x n NumPy matrix
    labelMat = np.asmatrix(classLabels).transpose()    # m x 1 column vector
    m, n = np.shape(dataMatrix)
    alpha = 0.001                                      # step size
    maxCycles = 500                                    # number of iterations
    weights = np.ones((n, 1))
    for k in range(maxCycles):
        h = sigmoid(dataMatrix * weights)              # matrix product, m x 1
        error = labelMat - h                           # y_i - sigmoid(w^T x_i)
        # Gradient ascent step: w <- w + alpha * X^T (y - h)
        weights = weights + alpha * dataMatrix.transpose() * error
    return weights
```
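The update `dataMatrix.transpose() * error` in `gradAscent` is the gradient formula $\sum_i x_i (y_i - \text{sigmoid}(w^T x_i))$ written in matrix form. One way to gain confidence in that formula is to compare it with a finite-difference approximation of the log-likelihood. The sketch below does this with the standard library only; the toy data, the weights, and all function names are assumptions made for illustration.

```python
import math


def dot(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def log_likelihood(w, X, y):
    """l(w) = sum_i [ y_i w.x_i - ln(1 + e^{w.x_i}) ]"""
    return sum(yi * dot(w, xi) - math.log1p(math.exp(dot(w, xi)))
               for xi, yi in zip(X, y))


def analytic_gradient(w, X, y):
    """sum_i x_i * (y_i - sigmoid(w.x_i))"""
    grad = [0.0] * len(w)
    for xi, yi in zip(X, y):
        err = yi - sigmoid(dot(w, xi))
        for j, xij in enumerate(xi):
            grad[j] += xij * err
    return grad


def numerical_gradient(w, X, y, eps=1e-6):
    """Central finite differences of l(w), one coordinate at a time."""
    grad = []
    for j in range(len(w)):
        w_plus, w_minus = list(w), list(w)
        w_plus[j] += eps
        w_minus[j] -= eps
        diff = log_likelihood(w_plus, X, y) - log_likelihood(w_minus, X, y)
        grad.append(diff / (2 * eps))
    return grad


# Hypothetical toy data: first column is the constant 1.0 (bias term)
X = [[1.0, 0.5, 2.0], [1.0, -1.2, 0.3], [1.0, 2.0, -0.7], [1.0, 0.1, 1.5]]
y = [1, 0, 1, 0]
w = [0.2, -0.4, 0.6]

g_analytic = analytic_gradient(w, X, y)
g_numeric = numerical_gradient(w, X, y)
max_diff = max(abs(a - n) for a, n in zip(g_analytic, g_numeric))
print(g_analytic)
print(g_numeric)
print(max_diff)
```

If the derivation is right, the two gradients differ only by a tiny numerical error.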

- Sample data (testSet.txt; each row is two feature values followed by the class label)

-0.017612 14.053064 0

-1.395634 4.662541 1

-0.752157 6.538620 0

-1.322371 7.152853 0

0.423363 11.054677 0

0.406704 7.067335 1

0.667394 12.741452 0

-2.460150 6.866805 1

0.569411 9.548755 0

-0.026632 10.427743 0

0.850433 6.920334 1

1.347183 13.175500 0

1.176813 3.167020 1

-1.781871 9.097953 0

-0.566606 5.749003 1

0.931635 1.589505 1

-0.024205 6.151823 1

-0.036453 2.690988 1

-0.196949 0.444165 1

1.014459 5.754399 1

1.985298 3.230619 1

-1.693453 -0.557540 1

-0.576525 11.778922 0

-0.346811 -1.678730 1

-2.124484 2.672471 1

1.217916 9.597015 0

-0.733928 9.098687 0

-3.642001 -1.618087 1

0.315985 3.523953 1

1.416614 9.619232 0

-0.386323 3.989286 1

0.556921 8.294984 1

1.224863 11.587360 0

-1.347803 -2.406051 1

1.196604 4.951851 1

0.275221 9.543647 0

0.470575 9.332488 0

-1.889567 9.542662 0

-1.527893 12.150579 0

-1.185247 11.309318 0

-0.445678 3.297303 1

1.042222 6.105155 1

-0.618787 10.320986 0

1.152083 0.548467 1

0.828534 2.676045 1

-1.237728 10.549033 0

-0.683565 -2.166125 1

0.229456 5.921938 1

-0.959885 11.555336 0

0.492911 10.993324 0

0.184992 8.721488 0

-0.355715 10.325976 0

-0.397822 8.058397 0

0.824839 13.730343 0

1.507278 5.027866 1

0.099671 6.835839 1

-0.344008 10.717485 0

1.785928 7.718645 1

-0.918801 11.560217 0

-0.364009 4.747300 1

-0.841722 4.119083 1

0.490426 1.960539 1

-0.007194 9.075792 0

0.356107 12.447863 0

0.342578 12.281162 0

-0.810823 -1.466018 1

2.530777 6.476801 1

1.296683 11.607559 0

0.475487 12.040035 0

-0.783277 11.009725 0

0.074798 11.023650 0

-1.337472 0.468339 1

-0.102781 13.763651 0

-0.147324 2.874846 1

0.518389 9.887035 0

1.015399 7.571882 0

-1.658086 -0.027255 1

1.319944 2.171228 1

2.056216 5.019981 1

-0.851633 4.375691 1

-1.510047 6.061992 0

-1.076637 -3.181888 1

1.821096 10.283990 0

3.010150 8.401766 1

-1.099458 1.688274 1

-0.834872 -1.733869 1

-0.846637 3.849075 1

1.400102 12.628781 0

1.752842 5.468166 1

0.078557 0.059736 1

0.089392 -0.715300 1

1.825662 12.693808 0

0.197445 9.744638 0

0.126117 0.922311 1

-0.679797 1.220530 1

0.677983 2.556666 1

0.761349 10.693862 0

-2.168791 0.143632 1

1.388610 9.341997 0

0.317029 14.739025 0

- Results

```python
>>> import logRegres
>>> param_mat, label_mat = logRegres.loadDataSet()
>>> logRegres.gradAscent(param_mat, label_mat)
matrix([[ 4.12414349],
        [ 0.48007329],
        [-0.6168482 ]])
```
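The three numbers returned are the bias and the two feature weights. A minimal sketch of how they might be used to classify a point follows; the 0.5 threshold and the helper names `predict_proba`, `classify`, and `boundary_x2` are assumptions for illustration, while the weights are copied from the output above and the two test points are rows of the sample data.

```python
import math

# Weights copied from the gradAscent output: [w0 (bias), w1, w2]
weights = [4.12414349, 0.48007329, -0.6168482]


def predict_proba(x1, x2, w=weights):
    """Estimated probability that (x1, x2) is a positive example."""
    z = w[0] + w[1] * x1 + w[2] * x2
    return 1.0 / (1.0 + math.exp(-z))


def classify(x1, x2, w=weights):
    """Assumed decision rule: predict 1 when the probability exceeds 0.5."""
    return 1 if predict_proba(x1, x2, w) > 0.5 else 0


def boundary_x2(x1, w=weights):
    """x2 on the decision boundary, where w0 + w1*x1 + w2*x2 = 0."""
    return -(w[0] + w[1] * x1) / w[2]


# Two rows taken from the sample data
print(classify(-0.017612, 14.053064))  # this row is labelled 0 in the data
print(classify(-1.395634, 4.662541))   # this row is labelled 1 in the data
print(boundary_x2(0.0))                # boundary height at x1 = 0
```

Points on the line given by `boundary_x2` have a predicted probability of exactly 0.5; points on one side of it are classified as positive and points on the other side as negative.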

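Each iteration here uses all of the samples, which is computationally expensive. The procedure can be changed so that each update uses a single randomly selected sample (stochastic gradient ascent).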
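A minimal sketch of that variant is shown below, written with the standard library only. It is not the author's implementation: the function name `stoc_grad_ascent`, the step size 0.01, the number of passes, and the fixed random seed are all assumptions, and the data are just the first ten rows of the sample data with a constant 1.0 prepended.

```python
import math
import random


def dot(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))


def sigmoid(z):
    """Overflow-safe logistic sigmoid."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def log_likelihood(w, X, y):
    """sum_i [ y_i z_i - ln(1 + e^{z_i}) ], with a stable ln(1 + e^z)."""
    total = 0.0
    for xi, yi in zip(X, y):
        z = dot(w, xi)
        total += yi * z - (max(z, 0.0) + math.log1p(math.exp(-abs(z))))
    return total


def stoc_grad_ascent(X, y, alpha=0.01, num_epochs=200, seed=0):
    """Update the weights from one randomly ordered sample at a time."""
    rng = random.Random(seed)            # fixed seed for repeatable runs
    w = [1.0] * len(X[0])                # same initialization as gradAscent
    indices = list(range(len(X)))
    for _ in range(num_epochs):
        rng.shuffle(indices)             # visit samples in random order
        for i in indices:
            err = y[i] - sigmoid(dot(w, X[i]))
            w = [wj + alpha * err * xij for wj, xij in zip(w, X[i])]
    return w


# First ten rows of the sample data, with the constant 1.0 prepended
X = [
    [1.0, -0.017612, 14.053064], [1.0, -1.395634, 4.662541],
    [1.0, -0.752157, 6.538620], [1.0, -1.322371, 7.152853],
    [1.0, 0.423363, 11.054677], [1.0, 0.406704, 7.067335],
    [1.0, 0.667394, 12.741452], [1.0, -2.460150, 6.866805],
    [1.0, 0.569411, 9.548755], [1.0, -0.026632, 10.427743],
]
y = [0, 1, 0, 0, 0, 1, 0, 1, 0, 0]

w_init = [1.0, 1.0, 1.0]
w_sgd = stoc_grad_ascent(X, y)
print("initial log-likelihood:", log_likelihood(w_init, X, y))
print("trained log-likelihood:", log_likelihood(w_sgd, X, y))
print("weights:", w_sgd)
```

Each update touches one sample instead of the whole matrix, so a single step is much cheaper; the trade-off is that the weights fluctuate around the optimum rather than settling smoothly, which is why the step size and number of passes usually need tuning.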

References

- Machine Learning in Action (机器学习实战)
