BERT Concepts + Fine-Tuning BERT on Your Own Dataset with the transformers Library

I. A Brief Introduction to Word Embedding

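In NLP tasks we need to encode text into a form that a computer can process. When encoding, we want the similarity between words to be preserved. Word embedding maps each word into a low-dimensional dense space in which semantically similar words have vectors that lie close together.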
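
To make "close together" concrete, here is a minimal sketch that compares vectors with cosine similarity. The three-dimensional vectors are hand-picked for illustration and do not come from any trained model.

```python
import math

# Hypothetical 3-dimensional embeddings, chosen by hand for illustration only.
embeddings = {
    "king":  [0.90, 0.80, 0.10],
    "queen": [0.85, 0.75, 0.20],
    "apple": [0.10, 0.20, 0.90],
}


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors; 1.0 means same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


sim_related = cosine_similarity(embeddings["king"], embeddings["queen"])
sim_unrelated = cosine_similarity(embeddings["king"], embeddings["apple"])
print(f"king vs queen: {sim_related:.3f}")
print(f"king vs apple: {sim_unrelated:.3f}")
```

With these made-up vectors the related pair scores much higher than the unrelated pair, which is the property a trained embedding is expected to show.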

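Drawbacks of word2vec:

1. Once training finishes, the vector for each word is fixed.

2. A word is therefore treated as meaning the same thing in every context. (Even skip-gram only learns a blend of the meanings seen across many contexts.)

BERT is designed to address both drawbacks.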
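
The fixed-vector limitation can be sketched with a plain lookup table. The table and its values are hypothetical; the point is only that the lookup never sees the neighbouring words.

```python
# Hypothetical static embedding table in the word2vec style; values are made up.
static_table = {
    "bank": [0.2, 0.7],
    "river": [0.9, 0.1],
    "money": [0.1, 0.9],
}


def embed_static(sentence):
    """Look up each word on its own; the surrounding context is never consulted."""
    return [static_table[word] for word in sentence]


vec_in_river_context = embed_static(["river", "bank"])[1]
vec_in_money_context = embed_static(["money", "bank"])[1]
print(vec_in_river_context == vec_in_money_context)
```

A contextual encoder such as BERT computes each token's output from the whole input sequence, so the two occurrences of "bank" can end up with different vectors.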

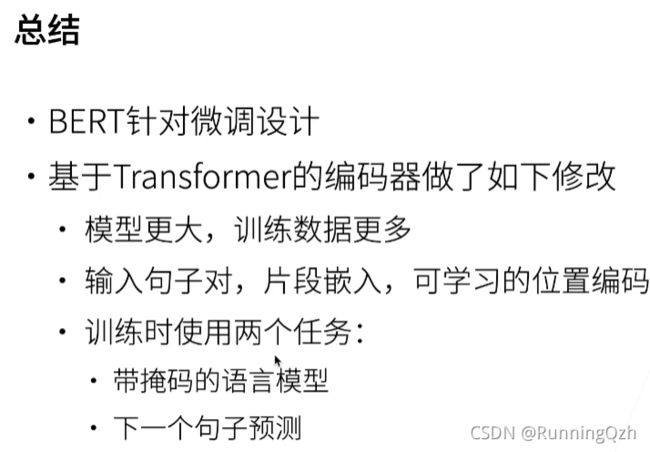

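II. BERT Concepts

Put plainly, BERT is the encoder part of the transformer. Pre-training needs no manual labels: a raw text corpus is enough.

In essence, BERT can be viewed as a new word2vec. For an existing task, treat BERT's output the way you would treat word2vec vectors and build your own model on top of it.

BERT architecture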

- Encoder-only transformer

- Two versions:

  - base: blocks = 12, hidden size = 768, heads = 12;

  - large: blocks = 24, hidden size = 1024, heads = 16;
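
As a rough sanity check on the two configurations, the sketch below estimates their parameter counts. The vocabulary size (30522), maximum position count (512), the 4x feed-forward width, and the pooler layer are assumptions taken from the commonly used `bert-base-uncased` setup, not from the text above.

```python
def bert_param_count(num_layers, hidden, vocab_size=30522, max_positions=512,
                     num_segments=2, ffn_mult=4):
    """Rough parameter count for an encoder-only BERT with a pooler layer."""
    layer_norm = 2 * hidden  # scale and bias
    embeddings = (vocab_size + max_positions + num_segments) * hidden + layer_norm
    attention = 4 * (hidden * hidden + hidden)  # Q, K, V and output projections
    ffn_hidden = ffn_mult * hidden
    ffn = (hidden * ffn_hidden + ffn_hidden) + (ffn_hidden * hidden + hidden)
    per_layer = attention + layer_norm + ffn + layer_norm
    pooler = hidden * hidden + hidden
    return embeddings + num_layers * per_layer + pooler


configs = {
    "base": {"num_layers": 12, "hidden": 768, "heads": 12},
    "large": {"num_layers": 24, "hidden": 1024, "heads": 16},
}
for name, cfg in configs.items():
    total = bert_param_count(cfg["num_layers"], cfg["hidden"])
    head_dim = cfg["hidden"] // cfg["heads"]
    print(f"{name}: about {total / 1e6:.0f}M parameters, {head_dim} dims per head")
```

Under these assumptions the totals should land close to the commonly quoted figures of about 110M (base) and 340M (large), and both configurations use 64 dimensions per attention head.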

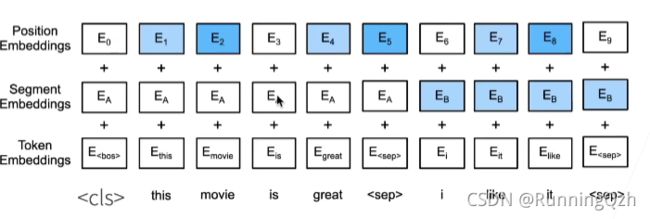

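Changes to the input

- Each sample is a sentence pair

- An extra segment embedding is added

- The position encoding is learnable

The cls token marks the start of the input, and a sep token marks the end of each of the two sentences.

The segment embedding index is 0 for the first sentence and 1 for the second.

The position embedding is learned during training.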

III. BERT Code

    def get_tokens_and_segments(tokens_a, tokens_b=None):
        """Get the tokens of the input sequence and their segment indices."""
        tokens = ['<cls>'] + tokens_a + ['<sep>']
        # 0 and 1 mark segments A and B, respectively
        segments = [0] * (len(tokens_a) + 2)
        if tokens_b is not None:
            tokens += tokens_b + ['<sep>']
            segments += [1] * (len(tokens_b) + 1)
        return tokens, segments

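The function takes the tokens of two sentences and builds the input in the form cls + tokens_a + sep + tokens_b + sep. When building the segment indices, the first segment gets +2 because cls and sep are added by hand, and the second gets +1 because only one sep is added.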
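
A short usage sketch of this function follows. The function is restated so the snippet runs on its own, and the example sentences are made up.

```python
def get_tokens_and_segments(tokens_a, tokens_b=None):
    """Get the tokens of the input sequence and their segment indices."""
    tokens = ['<cls>'] + tokens_a + ['<sep>']
    segments = [0] * (len(tokens_a) + 2)
    if tokens_b is not None:
        tokens += tokens_b + ['<sep>']
        segments += [1] * (len(tokens_b) + 1)
    return tokens, segments


# Sentence pair: segment 0 covers <cls>, sentence A and its <sep>.
tokens, segments = get_tokens_and_segments(
    ["this", "movie", "is", "great"], ["i", "like", "it"])
for token, segment in zip(tokens, segments):
    print(f"{token:>7} -> segment {segment}")

# Single sentence: every position belongs to segment 0.
single_tokens, single_segments = get_tokens_and_segments(["hello", "world"])
print(single_tokens, single_segments)
```

The two lists always have the same length, which is what allows the token and segment embeddings to be added position by position.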

    import torch
    from torch import nn
    from d2l import torch as d2l  # assumes a d2l release that provides EncoderBlock with this signature


    class BERTEncoder(nn.Module):
        """BERT encoder."""
        def __init__(self, vocab_size, num_hiddens, norm_shape, ffn_num_input,
                     ffn_num_hiddens, num_heads, num_layers, dropout,
                     max_len=1000, key_size=768, query_size=768, value_size=768,
                     **kwargs):
            super(BERTEncoder, self).__init__(**kwargs)
            self.token_embedding = nn.Embedding(vocab_size, num_hiddens)
            self.segment_embedding = nn.Embedding(2, num_hiddens)
            self.blks = nn.Sequential()
            for i in range(num_layers):
                self.blks.add_module(f"{i}", d2l.EncoderBlock(
                    key_size, query_size, value_size, num_hiddens, norm_shape,
                    ffn_num_input, ffn_num_hiddens, num_heads, dropout, True))
            # In BERT the position embedding is learnable, so we create a
            # position embedding parameter that is long enough
            self.pos_embedding = nn.Parameter(torch.randn(1, max_len,
                                                          num_hiddens))

        def forward(self, tokens, segments, valid_lens):
            # In the code below, the shape of `X` stays unchanged:
            # (batch size, max sequence length, `num_hiddens`)
            X = self.token_embedding(tokens) + self.segment_embedding(segments)
            # Slice the parameter itself (not `.data`) so gradients can reach it
            X = X + self.pos_embedding[:, :X.shape[1], :]
            for blk in self.blks:
                X = blk(X, valid_lens)
            return X
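
One detail worth checking in the forward pass is how the position embedding is sliced: reading it through `.data` bypasses autograd, whereas slicing the parameter directly lets gradients reach it. The following is a minimal sketch with made-up sizes, independent of the class above.

```python
import torch
from torch import nn

max_len, num_hiddens = 8, 4
pos_embedding = nn.Parameter(torch.randn(1, max_len, num_hiddens))
X = torch.zeros(2, 5, num_hiddens)  # (batch size, sequence length, num_hiddens)

# Variant 1: `.data` returns a tensor that is cut off from autograd.
detached = X + pos_embedding.data[:, :X.shape[1], :]
print(detached.requires_grad)

# Variant 2: slicing the parameter itself keeps it in the computation graph.
attached = X + pos_embedding[:, :X.shape[1], :]
attached.sum().backward()
# Positions 0..4 were used by both batch rows; positions 5..7 were never used.
print(pos_embedding.grad[0, :, 0])
```

Only the second variant produces a gradient for the position embedding, so only that variant lets the optimizer actually learn it.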

IV. Using transformers

    import os
    os.environ['CUDA_VISIBLE_DEVICES'] = '1'  # expose only GPU 1; set before importing torch

    import torch
    from torch.optim import AdamW  # replaces the deprecated transformers.AdamW
    from transformers import AutoTokenizer, AutoModelForSequenceClassification

    # Load the tokenizer and the model from a local checkpoint directory
    checkpoint = "./model/bert/bert-base-uncased"
    tokenizer = AutoTokenizer.from_pretrained(checkpoint, use_fast=True)
    model = AutoModelForSequenceClassification.from_pretrained(checkpoint)

    sequences = [
        "I've been waiting for a HuggingFace course my whole life.",
        "This course is amazing!",
    ]
    batch = tokenizer(sequences, padding=True, truncation=True, return_tensors="pt")
    batch["labels"] = torch.tensor([1, 1])

    optimizer = AdamW(model.parameters())

    # A single forward pass returns both the logits and the loss
    outputs = model(**batch)
    print(outputs.logits)
    loss = outputs.loss
    print(loss.item())

    loss.backward()
    optimizer.step()

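`from_pretrained` loads the tokenizer and the model. The tokenizer splits text into tokens, adds the cls and sep tokens, converts tokens to ids, and applies padding and truncation. It returns a dictionary:

- input_ids: the ids of the sentence after the cls and sep tokens are added; 101 stands for [CLS] and 102 for [SEP]

- token_type_ids: characteristic of BERT; indicates which sentence of the BERT input a token belongs to. 0 is the first sentence and 1 is the second (because BERT can predict whether two sentences are consecutive)

- attention_mask: sets the attention range; 1 marks tokens of the original sentence and 0 marks padding.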
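
The layout of that dictionary can be sketched without loading a model. The helper below is a hypothetical pure-Python imitation, not the library's implementation: it starts from token ids that are already converted (the ids 7 to 13 are placeholders, not real vocabulary lookups) and assumes pad id 0, which is what `bert-base-uncased` uses.

```python
CLS_ID, SEP_ID, PAD_ID = 101, 102, 0  # ids used by bert-base-uncased


def build_batch(id_pairs):
    """Imitate the tokenizer's output dict for already-converted token ids.

    `id_pairs` is a list of (ids_a, ids_b) tuples; ids_b may be None.
    """
    rows = []
    for ids_a, ids_b in id_pairs:
        input_ids = [CLS_ID] + ids_a + [SEP_ID]
        token_type_ids = [0] * len(input_ids)
        if ids_b is not None:
            input_ids += ids_b + [SEP_ID]
            token_type_ids += [1] * (len(ids_b) + 1)
        rows.append((input_ids, token_type_ids))

    # Pad every row to the length of the longest row in the batch.
    max_len = max(len(input_ids) for input_ids, _ in rows)
    batch = {"input_ids": [], "token_type_ids": [], "attention_mask": []}
    for input_ids, token_type_ids in rows:
        pad = max_len - len(input_ids)
        batch["input_ids"].append(input_ids + [PAD_ID] * pad)
        batch["token_type_ids"].append(token_type_ids + [0] * pad)
        batch["attention_mask"].append([1] * len(input_ids) + [0] * pad)
    return batch


batch = build_batch([([7, 8, 9], [10, 11]), ([12, 13], None)])
for key, value in batch.items():
    print(key, value)
```

The first row is a sentence pair, so its token_type_ids switch from 0 to 1 after the first [SEP]; the second row is shorter, so its attention_mask ends in zeros over the padded positions.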

A small text-classification task (fine-tuning BERT on your own dataset)

    import os
    os.environ["CUDA_VISIBLE_DEVICES"] = "1"  # expose only GPU 1; set before importing torch

    import random
    import warnings
    warnings.filterwarnings('ignore')

    import numpy as np
    import pandas as pd
    import torch
    import transformers as tfs
    from sklearn.metrics import f1_score, accuracy_score
    from torch.optim import AdamW  # replaces the deprecated transformers.AdamW
    from torch.utils.data import TensorDataset, DataLoader, random_split
    from transformers import BertTokenizer
    from transformers import BertForSequenceClassification
    from transformers import get_linear_schedule_with_warmup


    def flat_accuracy(preds, labels):
        """Compute accuracy from raw logits and integer labels."""
        pred_flat = np.argmax(preds, axis=1).flatten()
        labels_flat = labels.flatten()
        return accuracy_score(labels_flat, pred_flat)
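
To show what `flat_accuracy` computes, here is a small sketch with hand-made logits. It uses a NumPy mean in place of `accuracy_score`, which gives the same number for plain label arrays; the name `flat_accuracy_np` is hypothetical.

```python
import numpy as np


def flat_accuracy_np(preds, labels):
    """Fraction of rows whose highest logit matches the label."""
    pred_flat = np.argmax(preds, axis=1).flatten()
    labels_flat = labels.flatten()
    return float((pred_flat == labels_flat).mean())


# Hand-made logits for four examples and two classes.
logits = np.array([[2.0, -1.0], [0.1, 0.3], [-0.5, 1.5], [1.2, 0.4]])
labels = np.array([0, 1, 1, 1])
accuracy = flat_accuracy_np(logits, labels)
print(accuracy)
```

The predicted classes are 0, 1, 1, 0, so three of the four examples match their labels.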

    seed = 42
    random.seed(seed)
    np.random.seed(seed)
    torch.manual_seed(seed)
    torch.cuda.manual_seed_all(seed)
    torch.backends.cudnn.deterministic = True

    device = torch.device('cuda')

    train_df = pd.read_csv('./train.tsv', delimiter='\t', header=None)
    train_set = train_df[:3000]

    model_class, tokenizer_class, pretrained_weights = (tfs.BertModel, tfs.BertTokenizer, '../model/bert/bert-base-uncased',)
    tokenizer = tokenizer_class.from_pretrained(pretrained_weights, do_lower_case=True)

    # Separate the texts from the labels
    sequence = list(train_set.iloc[0:, 0])
    labels = list(train_set.iloc[0:, 1])

    def encode_fn(text_list):
        all_input_ids = []
        for text in text_list:
            input_ids = tokenizer.encode(
                text,
                add_special_tokens=True,  # add the special tokens, i.e. CLS and SEP
                max_length=160,           # maximum text length
                padding='max_length',     # pad to max_length (replaces the deprecated pad_to_max_length=True)
                truncation=True,          # cut longer texts so every row has exactly 160 ids
                return_tensors='pt'       # return PyTorch tensors
            )
            all_input_ids.append(input_ids)
        all_input_ids = torch.cat(all_input_ids, dim=0)
        return all_input_ids

    all_input_ids = encode_fn(sequence)
    labels = torch.tensor(labels)
    # print(all_input_ids.shape)  # torch.Size([3000, 160])
    # print(labels.shape)         # torch.Size([3000])
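
Concatenating the encoded rows into one tensor only works if every row has the same length, which is why both padding and truncation matter here. A rough pure-Python sketch of the idea follows; `to_fixed_length` is a hypothetical helper, not the tokenizer's implementation, and the content ids are placeholders.

```python
CLS_ID, SEP_ID, PAD_ID = 101, 102, 0  # ids used by bert-base-uncased


def to_fixed_length(content_ids, max_length):
    """Add special tokens, then truncate or pad to exactly `max_length` ids."""
    content_ids = content_ids[:max_length - 2]  # leave room for CLS and SEP
    ids = [CLS_ID] + content_ids + [SEP_ID]
    return ids + [PAD_ID] * (max_length - len(ids))


short_row = to_fixed_length([7, 8], max_length=6)
long_row = to_fixed_length(list(range(1000, 1010)), max_length=6)
print(short_row)
print(long_row)
```

The short input is padded with zeros and the long input is cut, so both rows end up with six ids and could be stacked into a single tensor.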

    epochs = 4
    batch_size = 32

    # Split data into train and validation
    dataset = TensorDataset(all_input_ids, labels)
    train_size = int(0.90 * len(dataset))
    val_size = len(dataset) - train_size
    train_dataset, val_dataset = random_split(dataset, [train_size, val_size])

    # Create train and validation dataloaders
    train_dataloader = DataLoader(train_dataset, batch_size=batch_size, shuffle=True)
    val_dataloader = DataLoader(val_dataset, batch_size=batch_size, shuffle=False)
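
For the numbers used here (3000 rows, a 90/10 split, batch size 32, 4 epochs), the sizes work out as in the sketch below. It assumes the DataLoader keeps the final partial batch, which is its default behaviour.

```python
import math

num_examples, batch_size, epochs = 3000, 32, 4

train_size = int(0.90 * num_examples)
val_size = num_examples - train_size

# With the last partial batch kept, the loader length is a ceiling division.
train_batches = math.ceil(train_size / batch_size)
val_batches = math.ceil(val_size / batch_size)
last_val_batch = val_size - (val_batches - 1) * batch_size
total_steps = train_batches * epochs

print(train_size, val_size)
print(train_batches, val_batches, last_val_batch)
print(total_steps)
```

Because the final validation batch is smaller than the others, averaging per-batch accuracies gives its examples slightly more weight than a single accuracy over all validation rows would.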

    # Load the pretrained BERT model with a 2-class classification head
    model = BertForSequenceClassification.from_pretrained(pretrained_weights, num_labels=2,
                                                          output_attentions=False, output_hidden_states=False)
    model.to(device)

    # Create the optimizer and the learning rate schedule
    optimizer = AdamW(model.parameters(), lr=2e-5)
    total_steps = len(train_dataloader) * epochs
    scheduler = get_linear_schedule_with_warmup(optimizer, num_warmup_steps=0, num_training_steps=total_steps)
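
The shape of a linear schedule with warmup can be sketched in plain Python. This is an illustration of the rule (ramp up linearly during warmup, then decay linearly to zero), written as a hypothetical helper rather than the library's code; the value of 340 total steps assumes 85 batches per epoch over 4 epochs.

```python
def linear_schedule_lr(step, base_lr, num_warmup_steps, num_training_steps):
    """Learning rate at `step` for linear warmup followed by linear decay."""
    if step < num_warmup_steps:
        factor = step / max(1, num_warmup_steps)
    else:
        factor = max(0.0, (num_training_steps - step)
                     / max(1, num_training_steps - num_warmup_steps))
    return base_lr * factor


total_steps = 340  # assumed: 85 batches per epoch x 4 epochs
for step in (0, 85, 170, 255, 340):
    lr = linear_schedule_lr(step, 2e-5, 0, total_steps)
    print(f"step {step:3d}: lr = {lr:.2e}")
```

With zero warmup steps the rate starts at the full 2e-5, reaches half of that midway through training, and hits zero at the final step.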

    # batch[0] holds the input ids, batch[1] holds the labels
    for epoch in range(epochs):
        model.train()
        total_loss, total_val_loss = 0, 0
        total_eval_accuracy = 0
        for step, batch in enumerate(train_dataloader):
            sequences = batch[0].to(device)
            labels = batch[1].to(device)
            model.zero_grad()
            # The pad id is 0, so `> 0` marks the real tokens
            output = model(sequences, token_type_ids=None,
                           attention_mask=(batch[0] > 0).to(device), labels=labels)
            loss = output.loss
            logits = output.logits
            # print(loss.item())  # a Python number
            # print(logits)       # a tensor
            total_loss += loss.item()
            loss.backward()
            torch.nn.utils.clip_grad_norm_(model.parameters(), 1.0)
            optimizer.step()
            scheduler.step()

        model.eval()
        for i, batch in enumerate(val_dataloader):
            with torch.no_grad():
                output = model(batch[0].to(device), token_type_ids=None,
                               attention_mask=(batch[0] > 0).to(device), labels=batch[1].to(device))
                loss = output.loss
                logits = output.logits
                total_val_loss += loss.item()
                logits = logits.detach().cpu().numpy()
                label_ids = batch[1].to('cpu').numpy()
                total_eval_accuracy += flat_accuracy(logits, label_ids)

        avg_train_loss = total_loss / len(train_dataloader)
        avg_val_loss = total_val_loss / len(val_dataloader)
        avg_val_accuracy = total_eval_accuracy / len(val_dataloader)

        print(f'Train loss : {avg_train_loss}')
        print(f'Validation loss: {avg_val_loss}')
        print(f'Accuracy: {avg_val_accuracy:.2f}')
        print('\n')
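
The training loop calls `torch.nn.utils.clip_grad_norm_` with a maximum norm of 1.0 before each optimizer step. The idea behind clipping by global norm can be sketched in plain Python; the helper name and the gradient values are made up for illustration.

```python
import math


def clip_by_global_norm(grads, max_norm, eps=1e-6):
    """Rescale all gradients together so their combined L2 norm is at most `max_norm`."""
    total_norm = math.sqrt(sum(g * g for vec in grads for g in vec))
    scale = min(1.0, max_norm / (total_norm + eps))
    return [[g * scale for g in vec] for vec in grads], total_norm


# Hypothetical gradients of two parameters; their combined norm is 5.
grads = [[3.0, 0.0], [0.0, 4.0]]
clipped, norm_before = clip_by_global_norm(grads, max_norm=1.0)
norm_after = math.sqrt(sum(g * g for vec in clipped for g in vec))
print(norm_before, norm_after)

# Gradients that are already small enough pass through unchanged.
small, _ = clip_by_global_norm([[0.3, 0.4]], max_norm=1.0)
print(small)
```

Every gradient is scaled by the same factor, so the update direction is preserved and only its length is limited.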