[Detailed Code Comments] Implementing the Random Forest Algorithm Based on a CNN (Convolutional Neural Network)

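Introduction to the random forest algorithm:

Random forest is a highly flexible machine learning algorithm.

Under the hood, it is a classifier that trains multiple trees on the samples and combines their predictions. It is widely used across many areas of machine learning.

One example is building predictive models for disease monitoring and patient susceptibility. The author has personally applied the RF algorithm to the detection and analysis of cancerous cells.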

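How the random forest algorithm works:

At its core, a random forest is an algorithm that uses ensemble learning to combine many decision trees.

So let us first take a brief look at ensemble learning.

Ensemble learning builds multiple learners and combines them to complete a learning task; it is also known as a multi-classifier system.

Ensemble methods fall into two families: sequential ensemble methods and parallel ensemble methods. Random forest is a typical representative of the latter.

The advantage of a parallel ensemble is that it exploits the independence between the base learners: averaging (or voting over) their results cancels out much of their individual error, which can markedly lower the overall error rate.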
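To make the benefit of averaging concrete, here is a minimal sketch that computes the accuracy of a majority vote over n base classifiers. It rests on an idealized assumption that is not from the article: every classifier is correct with the same probability p, and their errors are fully independent. The function name `majority_vote_accuracy` is made up for this illustration.

```python
from math import comb


def majority_vote_accuracy(n, p):
    """Probability that a strict majority of n independent voters is correct.

    Assumes (hypothetically) that each voter is right with probability p,
    independently of the others, and that n is odd so there are no ties.
    """
    need = n // 2 + 1  # smallest number of correct votes that forms a majority
    return sum(comb(n, k) * p**k * (1 - p)**(n - k) for k in range(need, n + 1))


if __name__ == '__main__':
    # Accuracy of the vote as the ensemble grows, with p fixed at 0.6
    for n in (1, 5, 21):
        print(n, round(majority_vote_accuracy(n, 0.6), 4))
```

With p = 0.6, a single classifier is right 60% of the time, while a vote over 5 such classifiers is right about 68.3% of the time. Real trees trained on overlapping data are correlated, so the gain in practice is smaller than this idealized figure suggests.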


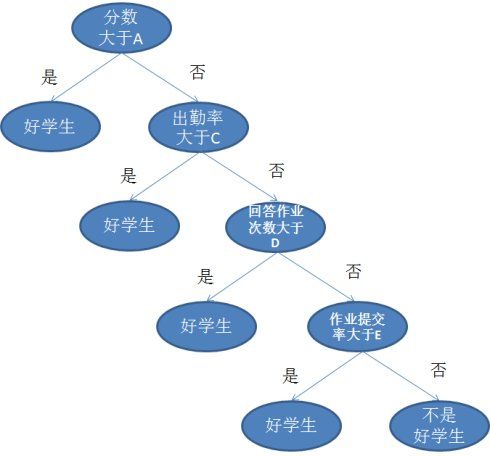

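Next, a brief look at how decision trees work.

A decision tree is a method for partitioning a dataset.

The data is repeatedly split into subsets with similar values until a complete tree is built. Each non-leaf node of the tree is a test on its corresponding feature attribute.

Each test produces several branches, and each branch holds the subset of the data whose value for that attribute falls within one range of the test.

Each leaf node of the tree holds the output result.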
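As an illustration of the structure just described, the sketch below hand-builds a tiny tree and walks a sample down it. The nested-dictionary layout (`index`, `value`, `left`, `right`) mirrors the one used in the implementation further down; the toy features (height in cm, weight in kg), the thresholds, the class labels, and the function name `classify` are invented for this example.

```python
# A hand-built tree: non-leaf nodes are dicts holding a feature test,
# leaves are plain class labels. All numbers here are made up.
toy_tree = {
    'index': 0, 'value': 170.0,      # test: is feature 0 (height) < 170?
    'left': 'small',                 # leaf reached when the test is true
    'right': {
        'index': 1, 'value': 80.0,   # test: is feature 1 (weight) < 80?
        'left': 'medium',
        'right': 'large',
    },
}


def classify(node, row):
    """Follow the tests from the root until a leaf (a non-dict) is reached."""
    while isinstance(node, dict):
        branch = 'left' if row[node['index']] < node['value'] else 'right'
        node = node[branch]
    return node


if __name__ == '__main__':
    print(classify(toy_tree, [165.0, 90.0]))  # fails no test at the root: left leaf
    print(classify(toy_tree, [180.0, 75.0]))  # goes right, then left
    print(classify(toy_tree, [180.0, 85.0]))  # goes right, then right
```

Each sample follows exactly one path from the root to a leaf, so the cost of a prediction is bounded by the depth of the tree.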

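Putting the discussion of ensemble learning and decision trees together, we can conclude that a random forest is a machine learning algorithm built on the idea of ensemble learning from a large number of decision trees that are largely uncorrelated with one another. Its accuracy is typically higher than that of a single decision tree.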

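Steps for building a random forest:

1. Let N denote the number of training cases (samples) and M the number of features.

2. Choose the number of features m used to decide the split at each node of a decision tree; m should be much smaller than M.

3. Draw N samples with replacement from the N training cases to form a training set (i.e. bootstrap sampling), and use the cases that were not drawn (the out-of-bag cases) to make predictions and estimate the error.

4. At each node, randomly select m features; the decision at that node is based only on these features. Compute the best split over these m features. Each tree is grown fully without pruning (pruning is something that might otherwise be applied after building a normal tree classifier).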
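Step 3 can be sketched in a few lines. The example below draws a bootstrap sample of row indices and collects the rows that were never drawn (the out-of-bag rows). The function name `bootstrap_indices`, the sample size of 1000, and the fixed seed are choices made for this illustration only.

```python
import random


def bootstrap_indices(n, rng):
    """Draw n row indices with replacement; return them with the out-of-bag indices."""
    in_bag = [rng.randrange(n) for _ in range(n)]
    out_of_bag = sorted(set(range(n)) - set(in_bag))
    return in_bag, out_of_bag


if __name__ == '__main__':
    rng = random.Random(0)  # fixed seed only so that reruns are repeatable
    in_bag, out_of_bag = bootstrap_indices(1000, rng)
    print('rows drawn:', len(in_bag))
    print('distinct rows in bag:', len(set(in_bag)))
    print('out-of-bag rows:', len(out_of_bag))
```

For large N, each row is missed by a given bootstrap sample with probability (1 - 1/N)^N, which approaches 1/e, so roughly 37% of the rows end up out of bag and are available for estimating that tree's error.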

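Advantages of random forests:

- They can capture interactions between different features.

- Because the trees are built independently of one another, training parallelizes well and is relatively fast.

- They are not prone to overfitting.

Disadvantages of random forests:

- Random forests have been shown to overfit on some classification or regression problems with noisy data.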

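Python implementation

'''
How it works:
A random forest is a classifier made up of many decision trees; its output class is the mode of the classes output by the individual trees.
'''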

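from csv import reader  # needed by load_csv below
from random import seed
from random import randint
import numpy as np

# Split the dataset on feature `index`: rows with a value below `value` go left, the rest go right
def data_split(index, value, dataset):
    left, right = list(), list()
    for row in dataset:
        if row[index] < value:
            left.append(row)
        else:
            right.append(row)
    return left, right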

# Compute the Gini index of a split, weighting each group by its share of the rows
def calc_gini(groups, class_values):
    gini = 0.0
    total_size = 0
    for group in groups:
        total_size += len(group)
    for group in groups:
        size = len(group)
        if size == 0:
            continue
        for class_value in class_values:
            proportion = [row[-1] for row in group].count(class_value) / float(size)
            gini += (size / float(total_size)) * (proportion * (1.0 - proportion))
    return gini

# Find the best split point
def get_split(dataset, n_features):
    class_values = list(set(row[-1] for row in dataset))
    b_index, b_value, b_score, b_groups = 999, 999, 999, None
    features = list()
    # Pick n_features distinct feature indices at random (the last column is the
    # label, so it is excluded). n_features is set by the caller; in this script
    # it is feature_ratio times the number of features.
    while len(features) < n_features:
        index = randint(0, len(dataset[0]) - 2)
        if index not in features:
            features.append(index)
    # Try every value of every chosen feature as a threshold; keep the lowest Gini
    for index in features:
        for row in dataset:
            groups = data_split(index, row[index], dataset)
            gini = calc_gini(groups, class_values)
            if gini < b_score:
                b_index, b_value, b_score, b_groups = index, row[index], gini, groups
    # Each node is represented as a dictionary
    return {'index': b_index, 'value': b_value, 'groups': b_groups}

# Majority vote: return the most common class label in the group
def to_terminal(group):
    outcomes = [row[-1] for row in group]
    return max(set(outcomes), key=outcomes.count)

# Recursively grow the branches below a node
def split(node, max_depth, min_size, n_features, depth):
    left, right = node['groups']
    del (node['groups'])
    # One side is empty, so there is no real split: both children become the same leaf
    if not left or not right:
        node['left'] = node['right'] = to_terminal(left + right)
        return
    # Maximum depth reached: turn both children into leaves
    if depth >= max_depth:
        node['left'], node['right'] = to_terminal(left), to_terminal(right)
        return
    # Left child: make it a leaf if it is small enough, otherwise keep splitting
    if len(left) <= min_size:
        node['left'] = to_terminal(left)
    else:
        node['left'] = get_split(left, n_features)
        split(node['left'], max_depth, min_size, n_features, depth + 1)
    # Right child: same rule
    if len(right) <= min_size:
        node['right'] = to_terminal(right)
    else:
        node['right'] = get_split(right, n_features)
        split(node['right'], max_depth, min_size, n_features, depth + 1)

# Build one tree
def build_one_tree(train, max_depth, min_size, n_features):
    root = get_split(train, n_features)
    split(root, max_depth, min_size, n_features, 1)
    return root

# Predict with a single tree
def predict(node, row):
    if row[node['index']] < node['value']:
        if isinstance(node['left'], dict):
            return predict(node['left'], row)
        else:
            return node['left']
    else:
        if isinstance(node['right'], dict):
            return predict(node['right'], row)
        else:
            return node['right']

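# Random forest class
class randomForest:
    def __init__(self, trees_num, max_depth, leaf_min_size, sample_ratio, feature_ratio):
        self.trees_num = trees_num  # number of trees in the forest
        self.max_depth = max_depth  # maximum tree depth
        self.leaf_min_size = leaf_min_size  # a branch with this many samples or fewer is not split further
        self.samples_split_ratio = sample_ratio  # fraction of rows drawn for each tree's subset (row sampling)
        self.feature_ratio = feature_ratio  # fraction of features considered per split (column sampling)
        self.trees = list()  # the forest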

    # Sample with replacement to create a data subset
    def sample_split(self, dataset):
        sample = list()
        n_sample = round(len(dataset) * self.samples_split_ratio)
        while len(sample) < n_sample:
            # randint is inclusive at both ends, so the upper bound is len(dataset) - 1
            index = randint(0, len(dataset) - 1)
            sample.append(dataset[index])
        return sample

    # Build the random forest
    def build_randomforest(self, train):
        max_depth = self.max_depth
        min_size = self.leaf_min_size
        n_trees = self.trees_num
        # Column sampling: choose m of the M features (m much smaller than M), but at least 1
        n_features = max(1, int(self.feature_ratio * (len(train[0]) - 1)))
        for i in range(n_trees):
            sample = self.sample_split(train)
            tree = build_one_tree(sample, max_depth, min_size, n_features)
            self.trees.append(tree)
        return self.trees

    # Majority vote over the predictions of all trees in the forest
    def bagging_predict(self, onetestdata):
        predictions = [predict(tree, onetestdata) for tree in self.trees]
        return max(set(predictions), key=predictions.count)

    # Compute the accuracy of the trained forest, in percent
    def accuracy_metric(self, testdata):
        correct = 0
        for i in range(len(testdata)):
            predicted = self.bagging_predict(testdata[i])
            if testdata[i][-1] == predicted:
                correct += 1
        return correct / float(len(testdata)) * 100.0

# Data loading: read a CSV file into a list of rows, skipping empty rows
def load_csv(filename):
    dataset = list()
    with open(filename, 'r') as file:
        csv_reader = reader(file)
        for row in csv_reader:
            if not row:
                continue
            dataset.append(row)
    return dataset

# Split the data into training and test sets; by default 20% is held out as test data
def split_train_test(dataset, ratio=0.2):
    num = len(dataset)
    train_num = int((1 - ratio) * num)
    dataset_copy = list(dataset)
    traindata = list()
    while len(traindata) < train_num:
        # Move a randomly chosen row from the copy into the training set
        index = randint(0, len(dataset_copy) - 1)
        traindata.append(dataset_copy.pop(index))
    testdata = dataset_copy
    return traindata, testdata

# Test
if __name__ == '__main__':
    train_feat = np.load("train_feat.npy")
    train_label = np.load("train_label.npy")
    test_feat = np.load("test_feat.npy")
    test_label = np.load("test_label.npy")
    # Turn the labels into a column so they can be appended as the last column
    train_label = np.expand_dims(train_label, 1)
    test_label = np.expand_dims(test_label, 1)
    traindata = np.concatenate((train_feat, train_label), axis=1)
    # The test set must be built from the test features and labels, not the training ones
    testdata = np.concatenate((test_feat, test_label), axis=1)
    # The trees should not be too deep, otherwise they overfit easily
    max_depth = 20
    min_size = 1
    sample_ratio = 1
    trees_num = 20
    feature_ratio = 0.3
    RF = randomForest(trees_num, max_depth, min_size, sample_ratio, feature_ratio)
    RF.build_randomforest(traindata)
    # Evaluate on every test row
    acc = RF.accuracy_metric(testdata)
    print('Model accuracy:', acc, '%')
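As a sanity check on the Gini computation that `calc_gini` performs, the sketch below scores two hand-made splits of four toy rows. The helper `gini_of_split` is a compact restatement written for this example, not the function from the listing, and the rows and labels are invented.

```python
def gini_of_split(groups, class_values):
    """Size-weighted Gini index of a split; each row's last element is its label."""
    total = sum(len(g) for g in groups)
    score = 0.0
    for g in groups:
        if not g:
            continue  # an empty group contributes nothing
        labels = [row[-1] for row in g]
        impurity = sum(
            (labels.count(c) / len(g)) * (1.0 - labels.count(c) / len(g))
            for c in class_values
        )
        score += impurity * len(g) / total
    return score


if __name__ == '__main__':
    # Each row is [feature, label]
    pure = ([[1.0, 0], [2.0, 0]], [[8.0, 1], [9.0, 1]])
    mixed = ([[1.0, 0], [8.0, 1]], [[2.0, 0], [9.0, 1]])
    print(gini_of_split(pure, [0, 1]))   # each side holds one class only
    print(gini_of_split(mixed, [0, 1]))  # each side is a 50/50 mix
```

A split that separates the two classes perfectly scores 0.0 and a 50/50 mix on both sides scores 0.5, which is why `get_split` keeps the candidate with the lowest value.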