A Simple Hands-On POS Tagging Example

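Contents

- POS Tagging in Practice
  - Explanation
  - Code Implementation
    - Reading the POS-tagged dataset
    - Building the three arrays described above
    - Running POS tagging
    - Inspecting the tags on the path and the dp values at the mis-tagged word
  - Learned from NLPCamp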

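POS Tagging in Practice

Explanation

Given a piece of text, we want to know the part of speech of every word in it. The setup is as follows:

    # Z is the tag sequence {z1, z2, ..., zn}; S is the sentence, i.e. its word sequence {w1, w2, ..., wn}
    # Each word in S may have several possible tags, so the number of combined sequences Z is far larger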

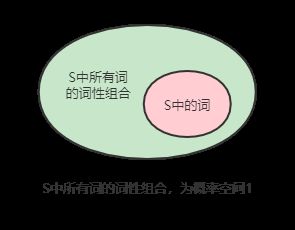

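A particular sentence S is a long, specific event, so its probability is tiny and it rarely recurs in a corpus, whereas tag patterns Z recur often. P(Z|S) therefore cannot be estimated directly by counting, and we rewrite it with Bayes' rule.

What we want is this: among all tag combinations for the words of S, pick the one with the highest probability (the tagging that best fits the grammar).

    P(Z|S) = P(S|Z) * P(Z) / P(S)
    # S is given and Z is the variable, so the constant denominator P(S) can be dropped
    P(Z|S) ∝ P(S|Z) * P(Z)

This is exactly a noisy channel model.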
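To see why dropping P(S) is harmless, here is a minimal sketch with invented numbers. It assumes, purely for illustration, that a fixed sentence has only three candidate tag sequences named Z1, Z2 and Z3; none of these values come from the corpus.

```python
# Hypothetical likelihoods and priors for one fixed sentence S.
likelihood = {"Z1": 0.02, "Z2": 0.05, "Z3": 0.01}  # P(S|Z)
prior = {"Z1": 0.5, "Z2": 0.1, "Z3": 0.4}          # P(Z)

# Numerator of Bayes' rule for every candidate.
joint = {z: likelihood[z] * prior[z] for z in prior}

# P(S) is the same number for every candidate Z.
evidence = sum(joint.values())
posterior = {z: joint[z] / evidence for z in joint}

best_by_joint = max(joint, key=joint.get)
best_by_posterior = max(posterior, key=posterior.get)
print(best_by_joint, best_by_posterior)
```

Dividing every score by the same positive constant rescales them but cannot change which one is largest, so both selections agree.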

Z is the sequence of tags for the words in S, and S is the list of those words, so the objective can be expanded as:

    argmax_Z P(Z|S) = argmax_Z P(z1,z2,...,zn|w1,w2,...,wn)
    # Translation Model * Language Model
    = argmax_Z P(w1,w2,...,wn|z1,z2,...,zn) * P(z1,z2,...,zn)
    # independence assumption
    = argmax_Z P(w1|z1)*P(w2|z2)*...*P(wn|zn) * P(z1,z2,...,zn)
    # bigram model over tags
    = argmax_Z P(w1|z1)*P(w2|z2)*...*P(wn|zn) * P(z1)*P(z2|z1)*...*P(zn|zn-1)
    = argmax_Z ∏(1<=i<=n)P(wi|zi) * P(z1) * ∏(2<=i<=n)P(zi|zi-1)

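- Independence assumption: given its own tag zi, each word wi is independent of every other word and tag, so wi depends only on zi. The tags themselves are not treated as independent: under the bigram model each zi depends on the previous tag zi-1.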
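The factorized score can be evaluated directly for a tiny model. The sketch below uses a made-up two-tag, two-word model (every probability is an assumption chosen for illustration) and enumerates all tag sequences by brute force, which is only feasible because the example is so small.

```python
import itertools

# Hypothetical toy model; none of these numbers come from the corpus.
tags = ["n", "v"]
init = {"n": 0.6, "v": 0.4}                                    # P(z1)
trans = {("n", "n"): 0.3, ("n", "v"): 0.7,
         ("v", "n"): 0.8, ("v", "v"): 0.2}                     # P(zi | zi-1)
emit = {("n", "fish"): 0.8, ("n", "swim"): 0.2,
        ("v", "fish"): 0.3, ("v", "swim"): 0.7}                # P(wi | zi)

def sequence_score(words, seq):
    """Product of emissions, the initial tag probability, and bigram transitions."""
    score = init[seq[0]]
    for i, (w, z) in enumerate(zip(words, seq)):
        score *= emit[(z, w)]
        if i > 0:
            score *= trans[(seq[i - 1], z)]
    return score

sentence = ["fish", "swim"]
candidates = list(itertools.product(tags, repeat=len(sentence)))
best = max(candidates, key=lambda seq: sequence_score(sentence, seq))
print(best, sequence_score(sentence, best))
```

With m tags and n words there are m**n candidates, which is why exhaustive enumeration does not scale to real sentences.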

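Take the logarithm so that the product of many small probabilities does not underflow:

$$\arg\max_{Z} \log P(Z \mid S) = \arg\max_{Z} \left[ \sum_{i=1}^{n} \log P(w_i \mid z_i) + \log P(z_1) + \sum_{i=2}^{n} \log P(z_i \mid z_{i-1}) \right]$$

- Here Z = {z1, z2, ..., zn} ranges over every combination of tags for the words of S = {w1, w2, ..., wn}.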
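A quick numerical check shows the problem the logarithm solves. The probability value 0.01 and the count of 2000 factors are arbitrary choices for illustration; `math.prod` requires Python 3.8 or later.

```python
import math

# 2000 factors of 0.01: the true product is 1e-4000, far below the smallest double.
probs = [0.01] * 2000

product = math.prod(probs)                 # underflows to exactly 0.0
log_sum = sum(math.log(p) for p in probs)  # stays a finite, ordinary float

print(product, log_sum)
```

Once a product has collapsed to 0.0, every candidate sequence looks equally bad; the sum of logs keeps them comparable.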

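Another idea is to compute argmax P(zi|wi) for each word separately, then multiply the per-word results to score the tagging of the whole sentence.

    P(zi|wi) ∝ P(wi|zi) * P(zi)
    score(Z) = P(w1|z1) * P(w2|z2) * ... * P(wn|zn) * P(z1) * ... * P(zn)

This approach is not really correct, because it ignores context. As the expression shows, its second factor is just a unigram model over tags.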
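The weakness of the per-word approach shows up in a small sketch. The function name `tag_independently` and all probabilities are hypothetical; the point is only that a word receives the same tag no matter what precedes it.

```python
def tag_independently(words, emit, tag_prior):
    """Pick argmax over z of P(w|z) * P(z) for each word on its own."""
    result = []
    for w in words:
        # A missing (tag, word) pair is treated as probability 0.
        scores = {z: emit.get((z, w), 0.0) * p for z, p in tag_prior.items()}
        result.append(max(scores, key=scores.get))
    return result

# Hypothetical unigram tag prior and emission probabilities.
tag_prior = {"det": 0.25, "prt": 0.25, "n": 0.3, "v": 0.2}
emit = {("det", "a"): 0.3, ("prt", "to"): 0.4,
        ("n", "book"): 0.02, ("v", "book"): 0.01}

after_article = tag_independently(["a", "book"], emit, tag_prior)
after_particle = tag_independently(["to", "book"], emit, tag_prior)
print(after_article, after_particle)
```

Here "book" is tagged n in both sentences, even though a verb reading is plausible after "to". A bigram transition term is what lets the preceding tag influence the choice.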

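The three terms of the log objective correspond to three parameter arrays:

- A
  - log P(wi|zi)
  - size n * m, where n is the vocabulary size and m is the size of the tag set (the code below stores it as m rows by n columns, indexed A[tag][word])
- B
  - log P(z1)
  - size m
- C
  - log P(zi|zi-1)
  - size m * m

All three are built by scanning the corpus. Every entry of the three arrays is a model parameter.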
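As a rough sketch of what scanning the corpus means, the following counts the three kinds of events on a two-sentence corpus invented for illustration. The variable names are hypothetical, and the counts are left unnormalized.

```python
import numpy as np

# Hypothetical tagged corpus: each sentence is a list of (word, tag) pairs.
corpus = [
    [("fish", "n"), ("swim", "v")],
    [("fish", "v"), ("fish", "n")],
]

vocab = sorted({w for sent in corpus for w, _ in sent})
tagset = sorted({t for sent in corpus for _, t in sent})
word_id = {w: i for i, w in enumerate(vocab)}
tag_id = {t: i for i, t in enumerate(tagset)}

emit_counts = np.zeros((len(tagset), len(vocab)))     # rows: tags, columns: words
init_counts = np.zeros(len(tagset))                   # tag of the first word
trans_counts = np.zeros((len(tagset), len(tagset)))   # rows: previous tag

for sent in corpus:
    prev = None
    for word, tag in sent:
        emit_counts[tag_id[tag], word_id[word]] += 1
        if prev is None:
            init_counts[tag_id[tag]] += 1
        else:
            trans_counts[tag_id[prev], tag_id[tag]] += 1
        prev = tag

print(emit_counts, init_counts, trans_counts, sep="\n")
```

Dividing each row by its total (with smoothing) and taking logs would turn these counts into the A, B and C arrays.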

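Z is one combination of tags over all the words in the sentence, and the number of such combinations grows exponentially with sentence length. Dynamic programming (the idea behind the Viterbi algorithm) finds the best combination without enumerating them all.

We work through the usual five dynamic-programming questions. Below, a, b and c denote the log arrays A, B and C, m is the number of tags, and wi is the id of the i-th word.

- What do the indices of the dp array mean?
  - dp[i][j]: the best log-probability of any tag sequence for the words from the first up to word i, given that word i takes tag j.
- What is the recurrence?
  - dp[i][j] = max over z in range(0, m) of (dp[i-1][z] + c[z][j]) + a[j][wi]. The initial-tag term b is used only for the first word.
- How is it initialized?
  - The first row belongs to the first word, so it is filled in directly: dp[0][j] = a[j][w0] + b[j]
- What is the traversal order?
  - Rows from top to bottom (words from first to last); within a row, tags from left to right.
- When does it stop?
  - When the last row of dp has been filled. The best final tag is the argmax of that row, and the rest of the path is recovered by following back-pointers.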
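The five steps can be sketched as a small NumPy function. This is an illustrative implementation of the standard recurrence dp[i][j] = max over z of (dp[i-1][z] + c[z][j]) + a[j][wi], not the code used later in the post; the two-tag model and the function names `viterbi` and `brute_force` are assumptions made for the example.

```python
import itertools
import numpy as np

def viterbi(obs, log_a, log_b, log_c):
    """obs: word ids. log_a[j, w] = log P(w|j), log_b[j] = log P(z1=j),
    log_c[z, j] = log P(j|z). Returns (best tag ids, best log-probability)."""
    n, m = len(obs), len(log_b)
    dp = np.full((n, m), -np.inf)
    back = np.zeros((n, m), dtype=int)
    dp[0] = log_b + log_a[:, obs[0]]
    for i in range(1, n):
        scores = dp[i - 1][:, None] + log_c      # scores[z, j]
        back[i] = scores.argmax(axis=0)          # best previous tag for each j
        dp[i] = scores.max(axis=0) + log_a[:, obs[i]]
    path = [int(dp[-1].argmax())]
    for i in range(n - 1, 0, -1):                # follow back-pointers
        path.append(int(back[i][path[-1]]))
    return path[::-1], float(dp[-1].max())

def brute_force(obs, log_a, log_b, log_c):
    """Score every tag sequence; only usable on tiny examples."""
    def score(seq):
        s = log_b[seq[0]] + log_a[seq[0], obs[0]]
        for i in range(1, len(obs)):
            s += log_c[seq[i - 1], seq[i]] + log_a[seq[i], obs[i]]
        return s
    best = max(itertools.product(range(len(log_b)), repeat=len(obs)), key=score)
    return list(best), float(score(best))

# Hypothetical model: tags 0 and 1, words 0 and 1.
log_a = np.log(np.array([[0.8, 0.2], [0.3, 0.7]]))
log_b = np.log(np.array([0.6, 0.4]))
log_c = np.log(np.array([[0.3, 0.7], [0.8, 0.2]]))
obs = [0, 1, 0]

best_path, best_score = viterbi(obs, log_a, log_b, log_c)
print(best_path, best_score)
```

The function does O(n * m * m) work, while the brute-force check scores m**n sequences; the two agree on this example, which is a useful sanity test when changing the recurrence.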

Code Implementation

We use the People's Daily (January 1998) POS-tagged corpus.

    import math

    import numpy as np

Reading the POS-tagged dataset

Read the POS-tagged dataset, extract every word together with its tag, and map words and tags to integer ids.

    words = []
    tags = []
    is_head = []

    with open('sources/TagData/PeopleDaily199801.txt', 'r', encoding='utf8') as f:
        for line in f:
            combine_word = ''      # collects the pieces of a bracketed compound
            combine_flag = False   # True while inside [ ... ]
            sentence_start = True  # the next emitted word is the first of its line
            for i, token in enumerate(line.split()):
                if i == 0:
                    # skip the newspaper date id at the start of the line
                    continue
                if '[' in token:
                    combine_word = token.split('[')[1].split('/')[0]
                    combine_flag = True
                    continue
                if ']' in token:
                    # closing piece: the tag after ']' labels the whole compound
                    word = combine_word + token.split(']')[0].split('/')[0]
                    tag = token.split(']')[1]
                    combine_flag = False
                elif combine_flag:
                    combine_word += token.split('/')[0]
                    continue
                else:
                    word, tag = token.split('/')[0], token.split('/')[1]
                words.append(word)
                tags.append(tag)
                # one flag per emitted word keeps is_head aligned with words and tags
                is_head.append(1 if sentence_start else 0)
                sentence_start = False

    ids_to_word = list(set(words))
    ids_to_tag = list(set(tags))

    words_len = len(ids_to_word)
    tags_len = len(ids_to_tag)

    word_to_ids = dict((w, i) for i, w in enumerate(ids_to_word))
    tag_to_ids = dict((t, i) for i, t in enumerate(ids_to_tag))
    word_to_tag = dict((w, t) for w, t in zip(words, tags))
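The bracket handling is easier to check on single lines. The helper below is a sketch, with `parse_line` a hypothetical name; the first sample line is invented to exercise the `[...]tag` compound format, and the second is the sentence used later in this post.

```python
def parse_line(line):
    """Return (word, tag, is_head) triples for one corpus line."""
    result = []
    pieces = []            # parts of a bracketed compound collected so far
    in_compound = False
    for token in line.split()[1:]:       # drop the leading date id
        if "[" in token:
            pieces = [token.split("[")[1].split("/")[0]]
            in_compound = True
            continue
        if "]" in token:
            inner, tag = token.split("]")    # e.g. "电台/n]nt" -> "电台/n", "nt"
            pieces.append(inner.split("/")[0])
            word = "".join(pieces)
            in_compound = False
        elif in_compound:
            pieces.append(token.split("/")[0])
            continue
        else:
            word, tag = token.split("/")[:2]
        # The first word emitted for a line is its head.
        result.append((word, tag, 1 if not result else 0))
    return result

# Invented line in the corpus format, with a bracketed compound.
compound_line = "19980101-01-001-001/m [中央/n 人民/n 广播/vn 电台/n]nt 播出/v 节目/n"
# Line quoted in this post.
plain_line = "19980103-02-013-001/m 云南/ns 全面/ad 完成/v 党报/n 党刊/n 发行/vn 任务/n"

print(parse_line(compound_line))
print(parse_line(plain_line))
```

The inner tags of a compound are discarded and the whole bracket becomes one word carrying the outer tag, which matches how the reading loop treats it.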

Building the three arrays described above

    A = np.zeros((tags_len, words_len))  # emission counts, A[tag][word]
    B = np.zeros(tags_len)               # counts of sentence-initial tags
    C = np.zeros((tags_len, tags_len))   # transition counts, C[previous tag][tag]

    pre_tag = None
    for word, tag, head in zip(words, tags, is_head):
        # emissions are counted for every word, including the first of a sentence
        A[tag_to_ids[tag]][word_to_ids[word]] += 1
        if head:
            B[tag_to_ids[tag]] += 1
        else:
            C[tag_to_ids[pre_tag]][tag_to_ids[tag]] += 1
        pre_tag = tag

    # add-one smoothing and normalization: every row of A and C, and B as a whole, sums to 1
    A = (A + 1) / (A.sum(axis=1, keepdims=True) + A.shape[1])
    B = (B + 1) / (B.sum() + B.shape[0])
    C = (C + 1) / (C.sum(axis=1, keepdims=True) + C.shape[1])

The final three statements normalize the counts into probabilities with add-one (Laplace) smoothing, so that no entry is zero and the logarithm is always defined.
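The smoothing step can be checked in isolation. Below is a minimal sketch of add-one smoothing as a reusable function; the name `laplace_normalize` and the sample counts are made up for illustration.

```python
import numpy as np

def laplace_normalize(counts):
    """Add 1 to every count, then normalize along the last axis."""
    counts = np.asarray(counts, dtype=float)
    totals = counts.sum(axis=-1, keepdims=True)
    return (counts + 1) / (totals + counts.shape[-1])

# Hypothetical count matrix: the second row was never observed.
smoothed = laplace_normalize([[3, 0, 1], [0, 0, 0]])
print(smoothed)
print(smoothed.sum(axis=1))
```

An unseen row becomes a uniform distribution, and every entry stays strictly positive, so `math.log` never receives 0.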

Running POS tagging

Gold tags: 19980103-02-013-001/m 云南/ns 全面/ad 完成/v 党报/n 党刊/n 发行/vn 任务/n

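    content = "云南 全面 完成 党报 党刊 发行 任务".split()
    n = len(content)

    # Costs are negative log-probabilities, so the best path minimizes the total.
    # dp[i][j]: lowest cost of any tag sequence for words 0..i that ends in tag j
    dp = [[0.0 for j in range(tags_len)] for i in range(n)]
    # back[i][j]: tag of word i-1 on that lowest-cost sequence
    back = [[0 for j in range(tags_len)] for i in range(n)]

    for tag in range(tags_len):
        dp[0][tag] = -math.log(A[tag][word_to_ids[content[0]]]) - math.log(B[tag])

    for i in range(1, n):
        w = word_to_ids[content[i]]
        for j in range(tags_len):
            # choose the previous tag separately for every current tag j
            best_z = min(range(tags_len), key=lambda z: dp[i-1][z] - math.log(C[z][j]))
            back[i][j] = best_z
            dp[i][j] = dp[i-1][best_z] - math.log(C[best_z][j]) - math.log(A[j][w])

    # pick the cheapest final tag, then follow the back-pointers
    path = [0 for i in range(n)]
    path[-1] = min(range(tags_len), key=lambda z: dp[-1][z])
    for i in range(n - 1, 0, -1):
        path[i-1] = back[i][path[i]]

    print(path)

Output recorded in the original post (tag ids come from a set, so they differ between runs):

    [6, 16, 7, 30, 30, 7, 30]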

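Inspecting the tags on the path and the dp values at the mis-tagged word

    for i in path:
        print(ids_to_tag[i], end=' ')
    print()

    # dp[-2] is the row for 发行, the second-to-last word
    print(sorted(enumerate(dp[-2]), key=lambda item: item[1]))

Output recorded in the original post:

    ns ad v n n v n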

[(7, 62.52403804697192), (18, 63.54269168062274), (31, 66.31538434533402), (12, 66.84356020041032), (30, 66.98141761571875), (29, 67.15506401995253), (42, 67.2579442909328), (41, 67.32280128709618), (21, 67.76834136366989), (35, 67.77954985376014), (22, 68.1156403237701), (13, 68.16223680506411), (4, 68.89857138751013), (16, 68.90836896977878), (15, 69.2393456247602), (25, 69.27639029481048), (3, 69.32057529175151), (5, 69.333718912759), (24, 69.38224259821669), (17, 69.5266120576496), (0, 69.69754138240673), (38, 69.96903098819377), (6, 69.97206357901055), (19, 70.0542828964721), (9, 70.06770949242326), (2, 70.20966944201218), (26, 70.30691706175277), (10, 70.40364083385651), (43, 70.97207322317294), (40, 71.36526036755518), (8, 72.02383165009198), (28, 72.2356456654909), (39, 72.31657495384712), (32, 72.34134999730088), (23, 72.62285124657133), (20, 73.24008077749231), (14, 74.84844776211168), (34, 74.84877086114435), (1, 75.25396389279734), (33, 75.2545589639259), (11, 75.94695799781421), (27, 75.94695799781421), (36, 75.94695799781421), (37, 75.94706005077978)]

Now look at the tags and their ids:

    print(tag_to_ids)

{'j': 0, 'Rg': 1, 'y': 2, 'i': 3, 'r': 4, 'l': 5, 'ns': 6, 'v': 7, 'q': 8, 's': 9, 'Vg': 10, 'Yg': 11, 'u': 12, 'm': 13, 'Bg': 14, 't': 15, 'ad': 16, 'Ng': 17, 'vn': 18, 'nt': 19, 'o': 20, 'p': 21, 'a': 22, 'Tg': 23, 'b': 24, 'k': 25, 'z': 26, 'na': 27, 'Dg': 28, 'd': 29, 'n': 30, 'w': 31, 'Ag': 32, 'h': 33, 'e': 34, 'nr': 35, 'vvn': 36, 'Mg': 37, 'an': 38, 'nx': 39, 'nz': 40, 'c': 41, 'f': 42, 'vd': 43}

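The mis-tagged word is 发行, which was labeled v (verb, id 7) instead of vn (verbal noun, id 18). Let us look at the relevant matrix entries in detail:

    v, vn, noun = tag_to_ids['v'], tag_to_ids['vn'], tag_to_ids['n']
    print("P(发行 | v):", A[v][word_to_ids['发行']])
    print("P(发行 | vn):", A[vn][word_to_ids['发行']])
    print("P(v | previous tag n):", C[noun][v])
    print("P(vn | previous tag n):", C[noun][vn])

Output recorded in the original post:

    P(发行 | v): 0.0003537863463443465
    P(发行 | vn): 0.00035066275260241855
    P(v | previous tag n): 0.1478553505803919
    P(vn | previous tag n): 0.05386328343799666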

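As shown, 发行 is almost equally likely to be emitted by v and by vn. Across the corpus, however, v follows n far more often than vn does (about 0.148 versus 0.054). The transition matrix C therefore tips the decision, and 发行 is tagged v.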
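The size of each effect can be made concrete with the four probabilities printed above. The sketch below only does arithmetic on those values; it looks at the local emission and n-to-tag transition terms for 发行 and ignores the transition into the following word.

```python
import math

# Values copied from the printed output above.
emit_v, emit_vn = 0.0003537863463443465, 0.00035066275260241855
trans_n_v, trans_n_vn = 0.1478553505803919, 0.05386328343799666

emission_ratio = emit_v / emit_vn          # how much the emission favors v
transition_ratio = trans_n_v / trans_n_vn  # how much the transition favors v

# Negative log costs of the two local terms, as used in the dp table.
cost_v = -math.log(emit_v) - math.log(trans_n_v)
cost_vn = -math.log(emit_vn) - math.log(trans_n_vn)
gap = cost_vn - cost_v

print(emission_ratio, transition_ratio, gap)
```

The emission term favors v by under 1 percent, while the transition term favors it by a factor of roughly 2.7. The resulting cost gap of about 1.02 is the same as the difference between the dp values of ids 18 and 7 in the sorted list (63.54 versus 62.52).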