搭建一个Quantize Aware Trained深度学习模型

什么是量化

量化是将深度学习模型参数(weights, activations和bias)从较高浮点精度转换到较少位表示的过程。

为什么需要量化

可以将模型大小压缩4倍,如果TF核心模型大小为40M,则可以减小到10M。模型尺寸的减小使模型更加轻巧,从而减小了计算量,所需的内存更小,从而减小了延迟。

量化后模型:

- 占用更小的空间

- 在带宽较低的网络上有更快的下载时间

- 占用较少的内存,使模型拥有更快的推断速度,降低功耗

- 对精度有一定的影响

量化有哪些类型

目前有两种类型的量化应用于深度学习模型:

- Quantize Aware Training: QAT应用于预训练模型,从而产生量化模型。QAT可以确保训练和推理的时候,前向传播的能够有匹配的精度。你可以为整个模型或者部分模型生成量化感知。

- Post Training Quantization: 使用TF lite格式将量化应用于已经训练的TF模型。你可以使用训练后动态范围量化,float16量化以及全整数量化。在训练后量化中,权重在训练后被量化,并且激活在推理时被动态的量化。

如何构建量化感知模型

简而言之,我们将按照以下步骤构建量化感知训练模型:

- 创建一个深度学习模型

- 训练模型

- 保存深度学习模型

- 为预训练模型创建一个量化感知模型

- 对量化感知模型进行量化

- 将量化模型转换成TFlite

- 使用TFlite进行推理

数据集来自 Intel Image Classification,数据集中有6类:建筑物,森林,冰川,山脉,海洋和街道。

1. 搭建一个深度学习模型

import tensorflow.keras as keras

from tensorflow.keras.preprocessing.image import ImageDataGenerator

from tensorflow.keras import optimizers

# setting the train, test and val directories

train_dir = r'.\archive\seg_train\seg_train'

test_dir = r'.\archive\seg_pred'

val_dir = r'.\archive\seg_test\seg_test'

# setting basic parameters to the model

IMG_WIDTH = 100

IMG_HEIGHT = 100

IMG_DIM = (IMG_HEIGHT, IMG_WIDTH)

batch_size = 16

epochs = 25

# creating Image Data generator

image_gen_train = ImageDataGenerator(rescale=1. / 255,

zoom_range=0.3,

rotation_range=25,

shear_range=0.1,

featurewise_std_normalization=False)

# Creating train data generator

train_data_gen = image_gen_train.flow_from_directory(batch_size=batch_size,

directory=train_dir,

shuffle=True,

target_size=IMG_DIM,

class_mode='sparse')

# Creating validation data generator

image_gen_val = ImageDataGenerator(rescale=1. / 255)

val_data_gen = image_gen_val.flow_from_directory(batch_size=batch_size,

directory=val_dir,

target_size=IMG_DIM,

class_mode='sparse')

model = keras.Sequential([

keras.layers.InputLayer(input_shape=(IMG_HEIGHT, IMG_WIDTH, 3)),

keras.layers.Reshape(target_shape=(IMG_HEIGHT, IMG_WIDTH, 3)),

keras.layers.Conv2D(filters=32, kernel_size=(3, 3), activation='relu'),

keras.layers.MaxPooling2D(pool_size=(2, 2)),

keras.layers.Dropout(0.3),

keras.layers.Conv2D(filters=64, kernel_size=(3, 3), activation='relu'),

keras.layers.MaxPooling2D(pool_size=(2, 2)),

keras.layers.Dropout(0.3),

keras.layers.Conv2D(filters=128, kernel_size=(3, 3), activation='relu'),

keras.layers.MaxPooling2D(pool_size=(2, 2)),

keras.layers.Flatten(),

keras.layers.Dense(256, activation='relu'),

keras.layers.Dropout(0.4),

keras.layers.Dense(512, activation='relu'),

keras.layers.Dense(6, activation='softmax')

])

2. 训练模型

创建一个模型后,我们可以编译并训练它100个epochs:

optimizer = optimizers.Adam(lr=0.0005)

model.compile(optimizer=optimizer,

loss='sparse_categorical_crossentropy',

metrics=['accuracy'])

# Training the model

history = model.fit_generator(

train_data_gen,

steps_per_epoch=len(train_data_gen) / batch_size,

epochs=100,

validation_data=val_data_gen,

validation_steps=len(val_data_gen) / batch_size

)

3. 保存训练模型

将训练的模型保存成H5文件。

model.save('./model/Intel_base_model.h5')

4. 为预训练模型创建一个量化感知模型

为了创建一个QAT模型,确保你已经安装了tensorflow-model-optimization库,安装方法如下:

pip install -q tensorflow-model-optimization

使用下面的代码,你会获得一个8bit量化模型:

import tensorflow_model_optimization as tfmot

quantize_model = tfmot.quantization.keras.quantize_model

# q_aware stands for for quantization aware.

q_aware_model = quantize_model(model)

optimizer = optimizers.Adam(lr=0.0001)

# `quantize_model` requires a recompile.

q_aware_model.compile(optimizer=optimizer, loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),metrics=['accuracy'])

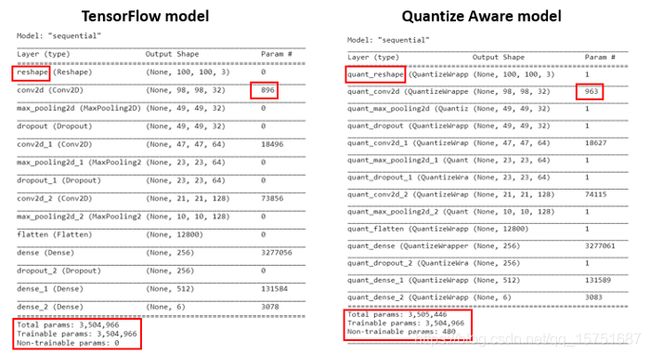

q_aware_model.summary()

模型到此还没有被量化,下面对比了TF模型和QAT模型的差异:

5. 对QAT模型量化

history = q_aware_model.fit_generator(

train_data_gen,

steps_per_epoch=len(train_data_gen)/batch_size,

epochs=100,

validation_data=val_data_gen,

validation_steps=len(val_data_gen)/batch_size

)

对比TF模型和QAT模型的准确率:

_, model_accuracy = model.evaluate_generator(val_data_gen)

print('TF Model accuracy:', model_accuracy)

_, qat_model_accuracy = q_aware_model.evaluate_generator(val_data_gen)

print('Quant Model accuracy:', qat_model_accuracy)

TF Model accuracy: 0.7926667

Quant Model accuracy: 0.694

精度还是掉了不少,可能参数还要调整。

保存量化模型:

q_aware_model.save('Intel_quantize_aware_model.h5')

6. 将QAT模型转换成Tflite

这一步会创建一个量化模型,并保存:

converter = tf.lite.TFLiteConverter.from_keras_model(q_aware_model)

converter.optimizations = [tf.lite.Optimize.DEFAULT]

quantized_tflite_model = converter.convert()

with open('./model/Intel_QAT.tflite', 'wb') as f:

f.write(quantized_tflite_model)

7. 使用TFLite模型推理

class_names = list(train_data_gen.class_indices.keys())

#Create the interpreter for the TfLite model

interpreter = tf.lite.Interpreter(model_content=quantized_tflite_model)

interpreter.allocate_tensors()

#Create input and output tensors from the interpreter

input_index = interpreter.get_input_details()[0]["index"]

output_index = interpreter.get_output_details()[0]["index"]

# Create the image data for prediction

dataset_list = tf.data.Dataset.list_files(test_dir + '\\*')

for i in range(10):

image = next(iter(dataset_list))

image = tf.io.read_file(image)

image = tf.io.decode_jpeg(image, channels=3)

image = tf.image.resize(image, (100,100))

image = tf.cast(image / 255., tf.float32)

image = tf.expand_dims(image, 0)

#Set the tensor for image into input index

interpreter.set_tensor(input_index, image)

# Run inference.

interpreter.invoke()

# find the prediction with highest probability.

output = interpreter.tensor(output_index)

pred = np.argmax(output()[0])

print(class_names[pred])

总结

- 深度学习模型的量化有助于减小模型的大小和延迟,从而帮助模型部署在边缘设备。

- QAT可以作用于全部模型或者模型的部分层上

- 训练后量化应用于全部的训练模型上

本文使用数据集链接:Baidu网盘

提取码:71jo

本文使用代码链接:github

原文链接:Building a Quantize Aware Trained Deep Learning Model