XGBoost Ensemble Algorithm

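After finishing neural networks my head felt like mush, and most of the material went over my head. I rested for three or four days, and now I have to keep studying. Sigh, I'm just too slow!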

Introduction to XGBoost

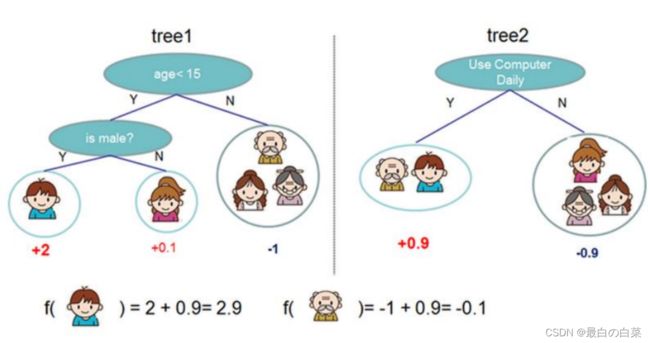

The XGBoost algorithm is built on decision trees.

It can be used for both classification and regression tasks.

The prediction of a linear model is a linear combination of the sample's features and the weights:

$$\hat{y}_{i}=\sum_{j} w_{j} x_{i j}$$

Objective (loss) function, here the squared error:

$$l\left(y_{i}, \hat{y}_{i}\right)=\left(y_{i}-\hat{y}_{i}\right)^{2}$$
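As a small illustration of the two formulas, the sketch below computes a linear prediction and its squared error for one sample. The weights, feature values, and function names are made up for the example and do not come from the dataset used later.

```python
def predict_linear(weights, features):
    """Return y_hat = sum_j w_j * x_j for a single sample."""
    return sum(w * x for w, x in zip(weights, features))


def squared_loss(y_true, y_pred):
    """Return l(y, y_hat) = (y - y_hat)^2."""
    return (y_true - y_pred) ** 2


# Hypothetical weights and one hypothetical sample with three features.
weights = [0.5, -1.0, 2.0]
sample = [2.0, 1.0, 0.5]

y_hat = predict_linear(weights, sample)  # 0.5*2.0 - 1.0*1.0 + 2.0*0.5
loss = squared_loss(3.0, y_hat)          # assumed true label y = 3.0

print(y_hat, loss)
```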

How do we find the optimal function? We look for the $F$ that minimizes the expected loss:

$$F^{*}(\vec{x})=\arg \min_{F} E_{(x, y)}[L(y, F(\vec{x}))]$$

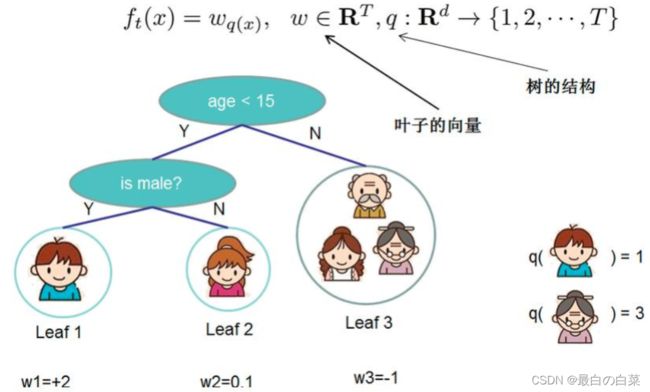

Representation of the ensemble: the prediction is the sum of the outputs of $K$ functions, each taken from the function space $\mathcal{F}$:

$$\hat{y}_{i}=\sum_{k=1}^{K} f_{k}\left(x_{i}\right), \quad f_{k} \in \mathcal{F}$$
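The additive form can be sketched in a few lines. Here each $f_k$ is a one-split tree (a "stump"); the split features, thresholds, and leaf values are invented purely for illustration and are not learned from any data.

```python
def stump(feature_index, threshold, left_value, right_value):
    """Build a one-split tree f(x) that returns a leaf value."""
    def f(x):
        return left_value if x[feature_index] < threshold else right_value
    return f


def predict_ensemble(trees, x):
    """Return y_hat = sum_k f_k(x)."""
    return sum(f(x) for f in trees)


# Two hypothetical trees, K = 2.
trees = [
    stump(0, 15.0, 2.0, -1.0),  # splits on feature 0
    stump(1, 0.5, 0.5, -0.5),   # splits on feature 1
]

x = [10.0, 1.0]
y_hat = predict_ensemble(trees, x)  # first tree gives 2.0, second gives -0.5
print(y_hat)
```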

The complexity of a tree is penalized by:

$$\Omega\left(f_{t}\right)=\gamma T+\frac{1}{2} \lambda \sum_{j=1}^{T} w_{j}^{2}$$

The first term limits the number of leaf nodes $T$; the second is a regularization penalty on the leaf weights $w_j$.

For example, for a tree with three leaves whose squared weights are 4, 0.01, and 1:

$$\Omega=3 \gamma+\frac{1}{2} \lambda(4+0.01+1)$$
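The worked example can be checked numerically. The sketch below assumes leaf weights of 2, 0.1, and -1 (one choice consistent with the squares 4, 0.01, and 1) and picks $\gamma = 1$, $\lambda = 1$ only for illustration.

```python
def tree_complexity(leaf_weights, gamma, lam):
    """Return Omega = gamma * T + 0.5 * lambda * sum_j w_j^2."""
    num_leaves = len(leaf_weights)
    l2_penalty = sum(w * w for w in leaf_weights)
    return gamma * num_leaves + 0.5 * lam * l2_penalty


# Assumed leaf weights; their squares are 4, 0.01 and 1.
leaf_weights = [2.0, 0.1, -1.0]

omega = tree_complexity(leaf_weights, gamma=1.0, lam=1.0)
print(round(omega, 3))  # 3 * 1 + 0.5 * 1 * 5.01
```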

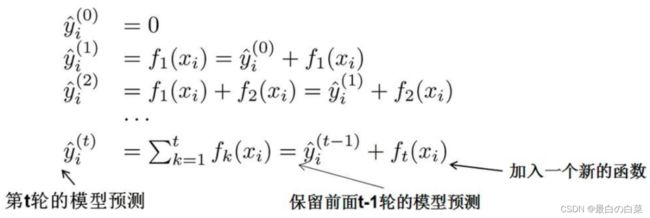

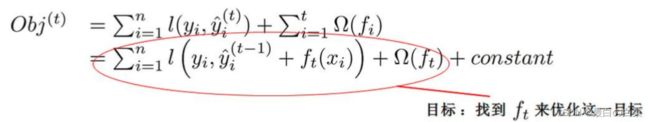

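One question remains: how do we choose which $f$ to add in each round? The answer is straightforward: pick the $f$ that reduces our objective function the most.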

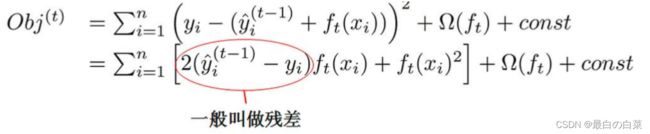

The objective at round $t$:

$$Obj^{(t)}=\sum_{i=1}^{n} l\left(y_{i}, \hat{y}_{i}^{(t-1)}+f_{t}\left(x_{i}\right)\right)+\Omega\left(f_{t}\right)+\text{constant}$$

We use a Taylor expansion to approximate the original objective.

Taylor expansion: $f(x+\Delta x) \simeq f(x)+f^{\prime}(x) \Delta x+\frac{1}{2} f^{\prime \prime}(x) \Delta x^{2}$

Define: $g_{i}=\partial_{\hat{y}^{(t-1)}} l\left(y_{i}, \hat{y}^{(t-1)}\right), \quad h_{i}=\partial_{\hat{y}^{(t-1)}}^{2} l\left(y_{i}, \hat{y}^{(t-1)}\right)$

$$Obj^{(t)} \simeq \sum_{i=1}^{n}\left[l\left(y_{i}, \hat{y}_{i}^{(t-1)}\right)+g_{i} f_{t}\left(x_{i}\right)+\frac{1}{2} h_{i} f_{t}^{2}\left(x_{i}\right)\right]+\Omega\left(f_{t}\right)+\text{constant}$$

Dropping the terms that do not depend on $f_t$ leaves:

$$\sum_{i=1}^{n}\left[g_{i} f_{t}\left(x_{i}\right)+\frac{1}{2} h_{i} f_{t}^{2}\left(x_{i}\right)\right]+\Omega\left(f_{t}\right)$$

where $g_i$ and $h_i$ are the first and second derivatives of the loss with respect to the previous round's prediction:

$$g_{i}=\partial_{\hat{y}^{(t-1)}} l\left(y_{i}, \hat{y}^{(t-1)}\right), \quad h_{i}=\partial_{\hat{y}^{(t-1)}}^{2} l\left(y_{i}, \hat{y}^{(t-1)}\right)$$
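To make $g_i$ and $h_i$ concrete, here is a sketch for the squared error $l = (y - \hat{y})^2$ used earlier, where $g = 2(\hat{y} - y)$ and $h = 2$. Because this loss is quadratic, the second-order expansion reproduces it exactly, which the code checks on made-up numbers. The function names are my own.

```python
def squared_loss(y, y_hat):
    return (y - y_hat) ** 2


def grad(y, y_hat):
    """First derivative of (y - y_hat)^2 with respect to y_hat."""
    return 2.0 * (y_hat - y)


def hess(y, y_hat):
    """Second derivative of (y - y_hat)^2 with respect to y_hat."""
    return 2.0


def taylor_approx(y, y_hat_prev, f_value):
    """l(y, y_hat_prev) + g * f + 0.5 * h * f^2."""
    g = grad(y, y_hat_prev)
    h = hess(y, y_hat_prev)
    return squared_loss(y, y_hat_prev) + g * f_value + 0.5 * h * f_value ** 2


# Hypothetical label, previous prediction, and new tree output.
y, y_hat_prev, f_value = 3.0, 1.0, 0.5

exact = squared_loss(y, y_hat_prev + f_value)
approx = taylor_approx(y, y_hat_prev, f_value)
print(exact, approx)
```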

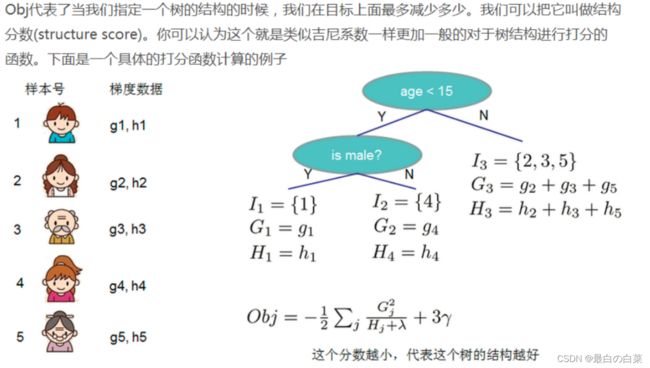

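The sum over samples ($i = 1, \dots, n$) is regrouped into a sum over leaf nodes ($j = 1, \dots, T$), where $I_j$ is the set of samples that fall into leaf $j$ and $w_{q(x_i)}$ is the weight of the leaf that $x_i$ is assigned to: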

$$
\begin{aligned}
Obj^{(t)} & \simeq \sum_{i=1}^{n}\left[g_{i} f_{t}\left(x_{i}\right)+\frac{1}{2} h_{i} f_{t}^{2}\left(x_{i}\right)\right]+\Omega\left(f_{t}\right) \\
&=\sum_{i=1}^{n}\left[g_{i} w_{q\left(x_{i}\right)}+\frac{1}{2} h_{i} w_{q\left(x_{i}\right)}^{2}\right]+\gamma T+\lambda \frac{1}{2} \sum_{j=1}^{T} w_{j}^{2} \\
&=\sum_{j=1}^{T}\left[\left(\sum_{i \in I_{j}} g_{i}\right) w_{j}+\frac{1}{2}\left(\sum_{i \in I_{j}} h_{i}+\lambda\right) w_{j}^{2}\right]+\gamma T
\end{aligned}
$$

Define the per-leaf sums:

$$G_{j}=\sum_{i \in I_{j}} g_{i}, \quad H_{j}=\sum_{i \in I_{j}} h_{i}$$

$$
\begin{aligned}
Obj^{(t)} &=\sum_{j=1}^{T}\left[\left(\sum_{i \in I_{j}} g_{i}\right) w_{j}+\frac{1}{2}\left(\sum_{i \in I_{j}} h_{i}+\lambda\right) w_{j}^{2}\right]+\gamma T \\
&=\sum_{j=1}^{T}\left[G_{j} w_{j}+\frac{1}{2}\left(H_{j}+\lambda\right) w_{j}^{2}\right]+\gamma T
\end{aligned}
$$

To find the minimum, set the derivative with respect to each $w_j$ to zero:

$$\frac{\partial Obj^{(t)}}{\partial w_{j}}=G_{j}+\left(H_{j}+\lambda\right) w_{j}=0$$

$$w_{j}=-\frac{G_{j}}{H_{j}+\lambda}$$

Substituting this back into the objective gives:

$$Obj=-\frac{1}{2} \sum_{j=1}^{T} \frac{G_{j}^{2}}{H_{j}+\lambda}+\gamma T$$
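The closed-form result can be tried on a toy case. The sketch below assumes the squared error loss (so $g_i = 2(\hat{y}_i - y_i)$, $h_i = 2$), four made-up samples, a fixed assignment of samples to two leaves, and arbitrary values $\lambda = 1$, $\gamma = 0.5$. It only evaluates the formulas; it does not search for the tree structure.

```python
def leaf_sums(grads, hessians, leaf_of_sample, num_leaves):
    """Accumulate G_j and H_j over the samples assigned to each leaf."""
    G = [0.0] * num_leaves
    H = [0.0] * num_leaves
    for g, h, j in zip(grads, hessians, leaf_of_sample):
        G[j] += g
        H[j] += h
    return G, H


def optimal_weights(G, H, lam):
    """w_j = -G_j / (H_j + lambda)."""
    return [-g / (h + lam) for g, h in zip(G, H)]


def objective_value(G, H, lam, gamma):
    """Obj = -0.5 * sum_j G_j^2 / (H_j + lambda) + gamma * T."""
    return -0.5 * sum(g * g / (h + lam) for g, h in zip(G, H)) + gamma * len(G)


# Hypothetical labels; previous predictions are all zero.
y_true = [1.0, 3.0, 2.0, 6.0]
y_prev = [0.0, 0.0, 0.0, 0.0]
grads = [2.0 * (p - t) for t, p in zip(y_true, y_prev)]
hessians = [2.0] * len(y_true)
leaf_of_sample = [0, 0, 1, 1]  # assumed tree structure q(x_i)

G, H = leaf_sums(grads, hessians, leaf_of_sample, num_leaves=2)
weights = optimal_weights(G, H, lam=1.0)
obj = objective_value(G, H, lam=1.0, gamma=0.5)
print(G, H, weights, obj)
```

With $\lambda = 0$ each weight would equal the mean residual of its leaf; the $\lambda$ in the denominator shrinks the weights toward zero.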

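Installing XGBoost

Here is a very simple, practical installation procedure that worked for me.

Go to: https://www.lfd.uci.edu/~gohlke/pythonlibs/#xgboost

I chose xgboost-1.5.1-cp37-cp37m-win_amd64.whl (cp37 means my Python version is 3.7; pick the file that matches your version). Then open CMD, change to the directory C:\Users\LH\Anaconda3\myLib (the folder the file was downloaded to; adjust this to your own location), and run pip install xgboost-1.5.1-cp37-cp37m-win_amd64.whl.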
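A quick way to confirm the install worked is to ask Python whether the package can be imported. This is a minimal sketch using only the standard library; the helper name `installed_version` is my own.

```python
import importlib
import importlib.util


def installed_version(package_name):
    """Return the package's __version__, or None if it cannot be imported."""
    if importlib.util.find_spec(package_name) is None:
        return None
    try:
        module = importlib.import_module(package_name)
    except Exception:
        # A broken install can fail with errors other than ImportError.
        return None
    return getattr(module, "__version__", "unknown")


version = installed_version("xgboost")
if version is None:
    print("xgboost is not importable in this environment")
else:
    print("xgboost version:", version)
```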

Hands-on Demo

    import xgboost

    # First XGBoost model for the Pima Indians diabetes dataset
    from numpy import loadtxt
    from xgboost import XGBClassifier
    from sklearn.model_selection import train_test_split
    from sklearn.metrics import accuracy_score

    # load data
    dataset = loadtxt('pima-indians-diabetes.csv', delimiter=",")

    # split data into X (first 8 columns) and y (label column)
    X = dataset[:,0:8]
    Y = dataset[:,8]

    # split data into train and test sets
    seed = 7
    test_size = 0.33
    X_train, X_test, y_train, y_test = train_test_split(X, Y, test_size=test_size, random_state=seed)

    # fit model on training data
    model = XGBClassifier()
    model.fit(X_train, y_train)

    # make predictions for test data
    y_pred = model.predict(X_test)
    # predict() already returns class labels, so rounding leaves them unchanged
    predictions = [round(value) for value in y_pred]

    # evaluate predictions
    accuracy = accuracy_score(y_test, predictions)
    print("Accuracy: %.2f%%" % (accuracy * 100.0))

Output:

    Accuracy: 74.02%

Next, monitor the log loss on an evaluation set during training and stop early once it has not improved for 10 rounds:

    from numpy import loadtxt
    from xgboost import XGBClassifier
    from sklearn.model_selection import train_test_split
    from sklearn.metrics import accuracy_score

    # load data
    dataset = loadtxt('pima-indians-diabetes.csv', delimiter=",")

    # split data into X and y
    X = dataset[:,0:8]
    Y = dataset[:,8]

    # split data into train and test sets
    seed = 7
    test_size = 0.33
    X_train, X_test, y_train, y_test = train_test_split(X, Y, test_size=test_size, random_state=seed)

    # fit model on training data, evaluating log loss on the test set after every round
    model = XGBClassifier()
    eval_set = [(X_test, y_test)]
    # Passing early_stopping_rounds and eval_metric to fit() matches the xgboost 1.5.1
    # install above; newer releases expect them in the XGBClassifier constructor instead.
    model.fit(X_train, y_train, early_stopping_rounds=10, eval_metric="logloss", eval_set=eval_set, verbose=True)

    # make predictions for test data
    y_pred = model.predict(X_test)
    predictions = [round(value) for value in y_pred]

    # evaluate predictions
    accuracy = accuracy_score(y_test, predictions)
    print("Accuracy: %.2f%%" % (accuracy * 100.0))

Output:

    [0] validation_0-logloss:0.60491
    [1] validation_0-logloss:0.55934
    [2] validation_0-logloss:0.53068
    [3] validation_0-logloss:0.51795
    [4] validation_0-logloss:0.51153
    [5] validation_0-logloss:0.50935
    [6] validation_0-logloss:0.50818
    [7] validation_0-logloss:0.51097
    [8] validation_0-logloss:0.51760
    [9] validation_0-logloss:0.51912
    [10] validation_0-logloss:0.52503
    [11] validation_0-logloss:0.52697
    [12] validation_0-logloss:0.53335
    [13] validation_0-logloss:0.53905
    [14] validation_0-logloss:0.54546
    [15] validation_0-logloss:0.54613
    [16] validation_0-logloss:0.54982
    Accuracy: 74.41%
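The log ends at round 16 because the best log loss (0.50818 at round 6) was not beaten in the following 10 rounds. The sketch below replays that rule on the logged values. It illustrates the stopping idea only and is not XGBoost's internal implementation; the function name is my own.

```python
def early_stopping(losses, patience):
    """Return (best_round, stop_round) for a sequence of validation losses.

    Training stops at the first round that is `patience` rounds past the
    best round without any improvement.
    """
    best_round, best_loss = 0, float("inf")
    for round_index, loss in enumerate(losses):
        if loss < best_loss:
            best_round, best_loss = round_index, loss
        elif round_index - best_round >= patience:
            return best_round, round_index
    return best_round, len(losses) - 1


# Validation log loss per round, copied from the training log.
logged_losses = [
    0.60491, 0.55934, 0.53068, 0.51795, 0.51153, 0.50935, 0.50818,
    0.51097, 0.51760, 0.51912, 0.52503, 0.52697, 0.53335, 0.53905,
    0.54546, 0.54613, 0.54982,
]

best_round, stop_round = early_stopping(logged_losses, patience=10)
print(best_round, stop_round)
```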

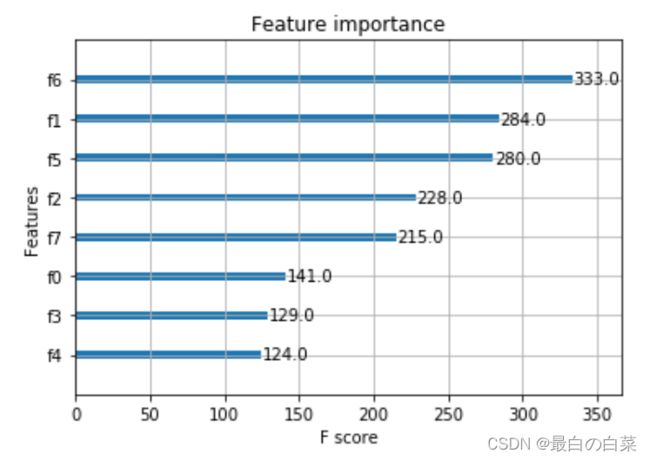

Plotting feature importance:

    from numpy import loadtxt
    from xgboost import XGBClassifier
    from xgboost import plot_importance
    from matplotlib import pyplot

    # load data
    dataset = loadtxt('pima-indians-diabetes.csv', delimiter=",")

    # split data into X and y
    X = dataset[:,0:8]
    y = dataset[:,8]

    # fit model on all of the data
    model = XGBClassifier()
    model.fit(X, y)

    # plot feature importance
    plot_importance(model)
    pyplot.show()

    # Tune learning_rate
    from numpy import loadtxt
    from xgboost import XGBClassifier
    from sklearn.model_selection import GridSearchCV
    from sklearn.model_selection import StratifiedKFold

    # load data
    dataset = loadtxt('pima-indians-diabetes.csv', delimiter=",")

    # split data into X and y
    X = dataset[:,0:8]
    Y = dataset[:,8]

    # grid search over learning_rate with 10-fold stratified cross-validation
    model = XGBClassifier()
    learning_rate = [0.0001, 0.001, 0.01, 0.1, 0.2, 0.3]
    param_grid = dict(learning_rate=learning_rate)
    kfold = StratifiedKFold(n_splits=10, shuffle=True, random_state=7)
    grid_search = GridSearchCV(model, param_grid, scoring="neg_log_loss", n_jobs=-1, cv=kfold)
    grid_result = grid_search.fit(X, Y)

    # summarize results
    print("Best: %f using %s" % (grid_result.best_score_, grid_result.best_params_))
    means = grid_result.cv_results_['mean_test_score']
    params = grid_result.cv_results_['params']
    for mean, param in zip(means, params):
        print("%f with: %r" % (mean, param))

Output:

    Best: -0.530152 using {'learning_rate': 0.01}
    -0.689563 with: {'learning_rate': 0.0001}
    -0.660868 with: {'learning_rate': 0.001}
    -0.530152 with: {'learning_rate': 0.01}
    -0.552723 with: {'learning_rate': 0.1}
    -0.653341 with: {'learning_rate': 0.2}
    -0.718789 with: {'learning_rate': 0.3}
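The scores are negative because scikit-learn's `neg_log_loss` scorer reports the negated log loss, so that a larger score is always better. The sketch below computes the binary log loss by hand on made-up labels and probabilities, to show what the magnitudes mean. It is an illustration, not scikit-learn's implementation.

```python
import math


def log_loss(y_true, probabilities, eps=1e-15):
    """Mean binary log loss; probabilities are P(y = 1), clipped away from 0 and 1."""
    total = 0.0
    for y, p in zip(y_true, probabilities):
        p = min(max(p, eps), 1.0 - eps)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(y_true)


# Hypothetical labels and two hypothetical sets of predicted probabilities.
y_true = [1, 0, 1, 0]
confident = [0.9, 0.1, 0.8, 0.2]
uninformed = [0.5, 0.5, 0.5, 0.5]

print(-log_loss(y_true, confident))   # closer to 0, i.e. better
print(-log_loss(y_true, uninformed))  # equals -ln(2)
```

A model that always predicts 0.5 scores -ln 2, about -0.693. The -0.689563 obtained with learning_rate 0.0001 is close to that value, which suggests the model barely moved away from its starting prediction at that learning rate.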

Parameter Tuning

1. learning rate
2. Tree parameters
   - max_depth
   - min_child_weight
   - subsample, colsample_bytree
   - gamma
3. Regularization parameters
   - lambda
   - alpha

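    from xgboost import XGBClassifier

    xgb1 = XGBClassifier(
        learning_rate=0.1,
        n_estimators=1000,
        max_depth=5,
        min_child_weight=1,
        gamma=0,
        subsample=0.8,
        colsample_bytree=0.8,
        objective='binary:logistic',
        n_jobs=4,          # replaces the older nthread argument
        scale_pos_weight=1,
        random_state=27)   # replaces the older seed argument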
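From a starting point like this, the tree parameters can be tuned with the same grid-search approach used for the learning rate. As a sketch, the code below only enumerates the combinations that such a grid would try; the candidate values for max_depth and min_child_weight are hypothetical and chosen for illustration.

```python
from itertools import product

# Hypothetical candidate values for two of the tree parameters.
tree_param_grid = {
    "max_depth": [3, 5, 7],
    "min_child_weight": [1, 3, 5],
}


def expand_grid(grid):
    """Return every combination of the grid as a list of parameter dicts."""
    names = list(grid)
    value_lists = [grid[name] for name in names]
    return [dict(zip(names, values)) for values in product(*value_lists)]


candidates = expand_grid(tree_param_grid)
print(len(candidates))
print(candidates[0])
```

A dictionary of this shape is what the `param_grid` argument of `GridSearchCV` receives in the learning-rate example. The number of models trained is the number of combinations times the number of cross-validation folds, so it helps to tune a few parameters at a time.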