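A web application I deployed recently left a large number of connections in the TIME_WAIT state on the server. These connections tie up TCP ports, and it took me several days to track down the cause.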

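Earlier I had reached this conclusion: HTTP keep-alive reuses a TCP connection at the application layer on a sliding-renewal basis. As long as the client and server keep renewing it, it becomes a long-lived connection in every sense.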

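Mainstream HTTP libraries today, client and server alike, enable HTTP Keep-Alive by default (persistent connections are the default in HTTP/1.1) and negotiate connection reuse through the Connection header of the request and response.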

Short-lived connections caused by an unconventional setup

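In one of my projects, for historical reasons, the client disables Keep-Alive while the server leaves it enabled by default. The negotiation to reuse the connection therefore fails, and the client opens a new TCP connection for every request. In other words, it falls back to short-lived connections.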

The client forcibly disables Keep-Alive

```go
package main

import (
	"fmt"
	"io"
	"log"
	"net/http"
	"time"
)

func main() {
	// DisableKeepAlives makes the transport send "Connection: close"
	// and use a fresh TCP connection for every request.
	tr := http.Transport{
		DisableKeepAlives: true,
	}
	client := &http.Client{
		Timeout:   10 * time.Second,
		Transport: &tr,
	}
	// Send one request per second, forever.
	for {
		requestWithClose(client)
		time.Sleep(time.Second * 1)
	}
}

func requestWithClose(client *http.Client) {
	resp, err := client.Get("http://10.100.219.9:8081")
	if err != nil {
		fmt.Printf("error occurred while fetching page, error: %s\n", err.Error())
		return
	}
	defer resp.Body.Close()

	// io.ReadAll replaces the deprecated ioutil.ReadAll (Go 1.16+).
	c, err := io.ReadAll(resp.Body)
	if err != nil {
		log.Fatalf("Couldn't parse response body. %+v", err)
	}
	fmt.Println(string(c))
}
```

The web server leaves Keep-Alive enabled by default

```go
package main

import (
	"fmt"
	"log"
	"net/http"
)

// RemoteAddr shows whether the client is using a persistent connection:
// the source port stays the same across requests when the connection is reused.
func IndexHandler(w http.ResponseWriter, r *http.Request) {
	fmt.Println("receive a request from:", r.RemoteAddr, r.Header)
	w.Write([]byte("ok"))
}

func main() {
	fmt.Printf("Starting server at port 8081\n")
	// net/http enables persistent connections by default.
	if err := http.ListenAndServe(":8081", http.HandlerFunc(IndexHandler)); err != nil {
		log.Fatal(err)
	}
}
```

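The server log confirms that these are short-lived connections: every request arrives from a different source port and carries Connection: close.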

```
receive a request from: 10.22.38.48:54722 map[Accept-Encoding:[gzip] Connection:[close] User-Agent:[Go-http-client/1.1]]
receive a request from: 10.22.38.48:54724 map[Accept-Encoding:[gzip] Connection:[close] User-Agent:[Go-http-client/1.1]]
receive a request from: 10.22.38.48:54726 map[Accept-Encoding:[gzip] Connection:[close] User-Agent:[Go-http-client/1.1]]
receive a request from: 10.22.38.48:54728 map[Accept-Encoding:[gzip] Connection:[close] User-Agent:[Go-http-client/1.1]]
receive a request from: 10.22.38.48:54731 map[Accept-Encoding:[gzip] Connection:[close] User-Agent:[Go-http-client/1.1]]
receive a request from: 10.22.38.48:54733 map[Accept-Encoding:[gzip] Connection:[close] User-Agent:[Go-http-client/1.1]]
receive a request from: 10.22.38.48:54734 map[Accept-Encoding:[gzip] Connection:[close] User-Agent:[Go-http-client/1.1]]
receive a request from: 10.22.38.48:54738 map[Accept-Encoding:[gzip] Connection:[close] User-Agent:[Go-http-client/1.1]]
receive a request from: 10.22.38.48:54740 map[Accept-Encoding:[gzip] Connection:[close] User-Agent:[Go-http-client/1.1]]
receive a request from: 10.22.38.48:54741 map[Accept-Encoding:[gzip] Connection:[close] User-Agent:[Go-http-client/1.1]]
receive a request from: 10.22.38.48:54743 map[Accept-Encoding:[gzip] Connection:[close] User-Agent:[Go-http-client/1.1]]
receive a request from: 10.22.38.48:54744 map[Accept-Encoding:[gzip] Connection:[close] User-Agent:[Go-http-client/1.1]]
receive a request from: 10.22.38.48:54746 map[Accept-Encoding:[gzip] Connection:[close] User-Agent:[Go-http-client/1.1]]
```

Who closes the connection first?

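I took it for granted that the client was the side actively closing the connection, and reality proved me wrong.

One day an alert for more than 300 TIME_WAIT connections on the server told me that it was, annoyingly, the server that was actively closing them.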

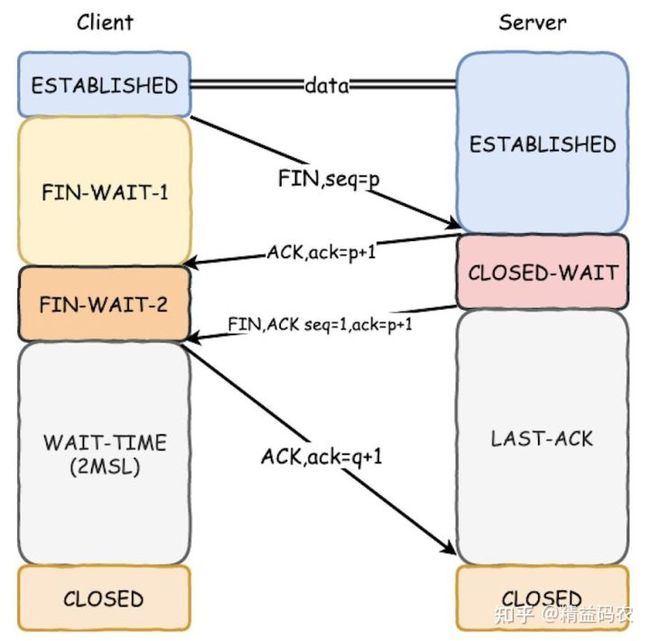

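In the usual TCP four-way close, the side that closes first enters the TIME_WAIT state and waits 2MSL before releasing the socket it occupies.

Here is what tcpdump captured for these TCP connections on the server.

Red boxes 2 and 3 clearly show the server sending the TCP FIN first, and the client replying with an ACK to acknowledge the server's close notification. The client then sends its own FIN to signal that the connection can now be closed, and the server sends the final ACK and enters TIME_WAIT, waiting 2MSL before closing the socket.

Note that red box 1 shows both ends closing at the same time, which leaves a TIME_WAIT entry on both the client and the server. This happens only rarely.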

No source code, no proof

In this case the server is the one closing first, so let's go back through the source code of Go's HTTP server.

- http.ListenAndServe(":8081")

- server.ListenAndServe()

- srv.Serve(ln)

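- go c.serve(connCtx): each accepted connection is handled in its own goroutine, which serves the requests arriving on that connection one after another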

A simplified version of the server's per-connection request handling looks like this:

```go
func (c *conn) serve(ctx context.Context) {
	c.remoteAddr = c.rwc.RemoteAddr().String()
	ctx = context.WithValue(ctx, LocalAddrContextKey, c.rwc.LocalAddr())
	// Runs when serve returns: unless the connection was hijacked, close it.
	defer func() {
		if !c.hijacked() {
			c.close()
			c.setState(c.rwc, StateClosed, runHooks)
		}
	}()

	......

	// HTTP/1.x from here on.
	ctx, cancelCtx := context.WithCancel(ctx)
	c.cancelCtx = cancelCtx
	defer cancelCtx()

	c.r = &connReader{conn: c}
	c.bufr = newBufioReader(c.r)
	c.bufw = newBufioWriterSize(checkConnErrorWriter{c}, 4<<10)

	// One iteration per request on this connection.
	for {
		w, err := c.readRequest(ctx)
		if err != nil {
			switch {
			case err == errTooLarge:
				const publicErr = "431 Request Header Fields Too Large"
				fmt.Fprintf(c.rwc, "HTTP/1.1 "+publicErr+errorHeaders+publicErr)
				c.closeWriteAndWait()
				return
			case isUnsupportedTEError(err):
				code := StatusNotImplemented
				fmt.Fprintf(c.rwc, "HTTP/1.1 %d %s%sUnsupported transfer encoding", code, StatusText(code), errorHeaders)
				return
			case isCommonNetReadError(err):
				return // don't reply
			default:
				if v, ok := err.(statusError); ok {
					fmt.Fprintf(c.rwc, "HTTP/1.1 %d %s: %s%s%d %s: %s", v.code, StatusText(v.code), v.text, errorHeaders, v.code, StatusText(v.code), v.text)
					return
				}
				publicErr := "400 Bad Request"
				fmt.Fprintf(c.rwc, "HTTP/1.1 "+publicErr+errorHeaders+publicErr)
				return
			}
		}

		serverHandler{c.server}.ServeHTTP(w, w.req)
		w.cancelCtx()
		if c.hijacked() {
			return
		}
		w.finishRequest()
		// The client asked for "Connection: close" (or reuse is otherwise
		// impossible): return, which triggers the deferred c.close().
		if !w.shouldReuseConnection() {
			if w.requestBodyLimitHit || w.closedRequestBodyEarly() {
				c.closeWriteAndWait()
			}
			return
		}
		c.setState(c.rwc, StateIdle, runHooks)
		c.curReq.Store((*response)(nil))

		if !w.conn.server.doKeepAlives() {
			// We're in shutdown mode. We might've replied
			// to the user without "Connection: close" and
			// they might think they can send another
			// request, but such is life with HTTP/1.1.
			return
		}

		if d := c.server.idleTimeout(); d != 0 {
			c.rwc.SetReadDeadline(time.Now().Add(d))
			if _, err := c.bufr.Peek(4); err != nil {
				return
			}
		}
		c.rwc.SetReadDeadline(time.Time{})
	}
}
```

What to pay attention to:

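- The for loop: the server tries to reuse this conn to handle the requests that keep arriving on it.

- When w.shouldReuseConnection() returns false, the server has read the client's Connection: close header and set closeAfterReply=true. serve returns out of the for loop, the goroutine is about to end, and before it does the deferred function runs and closes the connection:

```go
c.close()

......

// Close the connection.
func (c *conn) close() {
	c.finalFlush()
	c.rwc.Close()
}
```

- When w.shouldReuseConnection() returns true, the connection is put into the idle state and the for loop continues, ready to handle subsequent requests.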

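What I learned

- The textbook TCP four-way close

- The effect of short-lived connections on the server: sockets left in TIME_WAIT that occupy available sockets. Decide whether to switch to long-lived connections based on the actual workload

- A source-level look at how Go's HTTP keep-alive reuses TCP connections

- How to capture packets with tcpdump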