Overview

PXC (Percona XtraDB Cluster) is a clustering solution for MySQL. Here we combine the Percona operator with Kubernetes to deploy and scale PXC.

Storage uses local volumes. For how to manage local volumes, see my other article:

https://www.jianshu.com/p/bfa204cef8c0

Full English documentation: https://percona.github.io/percona-xtradb-cluster-operator/configure/backups

Clone the repository

git clone -b release-0.3.0 https://github.com/percona/percona-xtradb-cluster-operator

cd percona-xtradb-cluster-operator

Install the custom resource definitions (CRDs)

kubectl apply -f deploy/crd.yaml

Create the pxc namespace and make it the default namespace of the current context

kubectl create namespace pxc

kubectl config set-context $(kubectl config current-context) --namespace=pxc

Create the service account and RBAC permissions for the operator

kubectl apply -f deploy/rbac.yaml

Deploy the operator

kubectl apply -f deploy/operator.yaml

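Configure the initial passwords

Base64-encode each password (base64 is only an encoding, not encryption, so treat the result as a secret):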

echo -n 'plain-text-password' | base64

vi deploy/secrets.yaml

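apiVersion: v1
kind: Secret
metadata:
  name: my-cluster-secrets
type: Opaque
data:
  root: <base64-encoded password>
  xtrabackup: <base64-encoded password>
  monitor: <base64-encoded password>
  clustercheck: <base64-encoded password>
  proxyuser: <base64-encoded password>
  proxyadmin: <base64-encoded password>
  pmmserver: <base64-encoded password>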
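Encoding seven values by hand is easy to get wrong. Below is a minimal POSIX sh sketch that renders the same Secret from plain-text passwords. The function name render_pxc_secret and its fixed argument order are my own assumptions, not part of the operator. It strips newlines from the base64 output because some base64 implementations wrap long output, which would break the YAML.

```shell
#!/bin/sh
# Sketch (hypothetical helper): render deploy/secrets.yaml from plain-text passwords.

# base64-encode a value with no trailing newline and no line wrapping
b64() {
  printf '%s' "$1" | base64 | tr -d '\n'
}

# Arguments, in this fixed order (an assumption of this sketch):
#   root xtrabackup monitor clustercheck proxyuser proxyadmin pmmserver
render_pxc_secret() {
  if [ "$#" -ne 7 ]; then
    echo "usage: render_pxc_secret ROOT XTRABACKUP MONITOR CLUSTERCHECK PROXYUSER PROXYADMIN PMMSERVER" >&2
    return 1
  fi
  printf 'apiVersion: v1\nkind: Secret\nmetadata:\n  name: my-cluster-secrets\ntype: Opaque\ndata:\n'
  # walk the key names in step with the positional arguments
  for key in root xtrabackup monitor clustercheck proxyuser proxyadmin pmmserver; do
    printf '  %s: %s\n' "$key" "$(b64 "$1")"
    shift
  done
}
```

For example, render_pxc_secret "$ROOT_PW" "$XB_PW" "$MON_PW" "$CC_PW" "$PU_PW" "$PA_PW" "$PMM_PW" > deploy/secrets.yaml, then apply it with kubectl apply -f deploy/secrets.yaml. The variable names in that call are placeholders.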

Apply it:

kubectl apply -f deploy/secrets.yaml

Deploy the cluster

Customize the PXC parameters

vi deploy/cr.yaml

apiVersion: "pxc.percona.com/v1alpha1"

kind: "PerconaXtraDBCluster"

metadata:

#集群名称

name: "cluster1"

finalizers:

- delete-pxc-pods-in-order

# - delete-proxysql-pvc

# - delete-pxc-pvc

spec:

secretsName: my-cluster-secrets

pxc:

#集群节点数量

size: 3

image: perconalab/pxc-openshift:0.2.0

#资源信息

resources:

requests:

memory: 1G

cpu: 600m

limits:

memory: 1G

cpu: "1"

volumeSpec:

#存储卷信息

storageClass: local-storage

accessModes: [ "ReadWriteOnce" ]

#大小

size: 6Gi

affinity:

topologyKey: "kubernetes.io/hostname"

# advanced:

# nodeSelector:

# disktype: ssd

# tolerations:

# - key: "node.alpha.kubernetes.io/unreachable"

# operator: "Exists"

# effect: "NoExecute"

# tolerationSeconds: 6000

# priorityClassName: high-priority

# annotations:

# iam.amazonaws.com/role: role-arn

# imagePullSecrets:

# - name: private-registry-credentials

# labels:

# rack: rack-22

proxysql:

#是否部署proxy来完成读写分离

enabled: true

#proxysql几点数量

size: 1

image: perconalab/proxysql-openshift:0.2.0

resources:

requests:

memory: 1G

cpu: 600m

# limits:

# memory: 1G

# cpu: 700m

volumeSpec:

storageClass: ssd-local-storage

accessModes: [ "ReadWriteOnce" ]

size: 2Gi

# affinity:

# topologyKey: "failure-domain.beta.kubernetes.io/zone"

# # advanced:

# nodeSelector:

# disktype: ssd

# tolerations:

# - key: "node.alpha.kubernetes.io/unreachable"

# operator: "Exists"

# effect: "NoExecute"

# tolerationSeconds: 6000

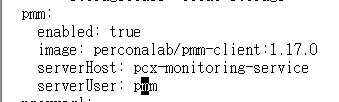

pmm:

#是否开启pmm

enabled: false

image: perconalab/pmm-client:1.17.0

serverHost: monitoring-service

serverUser: pmm

backup:

image: perconalab/backupjob-openshift:0.2.0

# imagePullSecrets:

# - name: private-registry-credentials

schedule:

- name: "sat-night-backup"

#crontab计划

schedule: "0 0 * * 6"

#保存几份备份

keep: 3

volume:

storageClass: local-storage

size: 6Gi

kubectl apply -f deploy/cr.yaml

Check the PXC cluster pods

kubectl -n pxc get po --show-labels

Check the services

kubectl -n pxc get svc

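Change the ProxySQL service to type NodePort so that it can be reached from outside the cluster. Edit the service and set spec.type to NodePort; Kubernetes then allocates a nodePort for each port, as in the saved object below: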

kubectl -n pxc edit svc cluster1-pxc-proxysql

# Please edit the object below. Lines beginning with a '#' will be ignored,
# and an empty file will abort the edit. If an error occurs while saving this file will be
# reopened with the relevant failures.
#
apiVersion: v1
kind: Service
metadata:
  creationTimestamp: "2019-03-25T10:58:37Z"
  labels:
    app: pxc
    cluster: cluster1
  name: cluster1-pxc-proxysql
  namespace: pxc
  ownerReferences:
  - apiVersion: pxc.percona.com/v1alpha1
    controller: true
    kind: PerconaXtraDBCluster
    name: cluster1
    uid: f0a9a0ba-4eec-11e9-ba71-005056ac2dbb
  resourceVersion: "2771712"
  selfLink: /api/v1/namespaces/pxc/services/cluster1-pxc-proxysql
  uid: f0c3c844-4eec-11e9-9e18-005056ac20e9
spec:
  clusterIP: 10.99.239.180
  externalTrafficPolicy: Cluster
  ports:
  - name: mysql
    nodePort: 31239
    port: 3306
    protocol: TCP
    targetPort: 3306
  - name: proxyadm
    nodePort: 31531
    port: 6032
    protocol: TCP
    targetPort: 6032
  selector:
    component: cluster1-pxc-proxysql
  sessionAffinity: None
  type: NodePort
status:
  loadBalancer: {}

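Check the services again (kubectl -n pxc get svc); the ProxySQL service now lists its NodePorts.

Connect to MySQL through ProxySQL, using a Kubernetes node IP (10.16.16.119 here) and the nodePort mapped to 3306:

mysql -h 10.16.16.119 -P 31239 -u root -p

Connect to the ProxySQL admin interface (the nodePort mapped to 6032):

mysql -h 10.16.16.119 -P 31531 -u proxyadmin -p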

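Scaling out

Scale from 3 nodes to 5.

Keep the node count odd. Galera needs a majority of nodes to keep serving, so an even count tolerates no more failures than the odd count just below it, and an even split (for example 2+2) leaves neither side with a majority.

The pods are also spread across different Kubernetes nodes (topologyKey kubernetes.io/hostname in cr.yaml), so the cluster needs at least as many schedulable Kubernetes nodes as PXC pods; otherwise the extra pods stay in Pending.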

kubectl -n pxc get pxc/cluster1 -o yaml | sed -e 's/size: 3/size: 5/' | kubectl -n pxc apply -f -
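The two rules above can be checked before resizing. Below is a minimal POSIX sh sketch with hypothetical helper names. galera_tolerated_failures shows the quorum arithmetic: a majority of n nodes is floor(n/2)+1, so n nodes survive n - floor(n/2) - 1 failures, which is 1 for both 3 and 4 nodes and 2 for 5. check_pxc_size rejects even sizes and sizes larger than the node count. pxc_size_patch emits a JSON merge patch that touches only spec.pxc.size, so nothing else in the object can be matched by accident the way a sed substitution on the whole YAML could.

```shell
#!/bin/sh
# Sketch (hypothetical helpers): pre-flight checks for resizing the PXC cluster.

# Galera keeps serving only with a strict majority: floor(n/2) + 1 members.
# Print how many member failures an n-node cluster can survive.
galera_tolerated_failures() {
  echo $(( $1 - ($1 / 2 + 1) ))
}

# check_pxc_size SIZE NODES: SIZE must be a positive odd integer and must not
# exceed NODES, because each PXC pod needs its own Kubernetes node.
check_pxc_size() {
  size="$1"; nodes="$2"
  case "$size" in
    ''|0*|*[!0-9]*) echo "error: size must be a positive integer, got '$size'" >&2; return 1 ;;
  esac
  case "$nodes" in
    ''|*[!0-9]*) echo "error: node count must be an integer, got '$nodes'" >&2; return 1 ;;
  esac
  if [ $(( size % 2 )) -eq 0 ]; then
    echo "error: size $size is even; pick an odd size" >&2
    return 1
  fi
  if [ "$size" -gt "$nodes" ]; then
    echo "error: size $size exceeds $nodes nodes; extra pods would stay Pending" >&2
    return 1
  fi
  return 0
}

# Print a JSON merge patch that sets only spec.pxc.size.
pxc_size_patch() {
  printf '{"spec":{"pxc":{"size":%d}}}\n' "$1"
}

# usage (the node count is rough: it also counts tainted master nodes):
#   nodes=$(kubectl get nodes --no-headers | wc -l | tr -d ' ')
#   check_pxc_size 5 "$nodes" &&
#     kubectl -n pxc patch pxc cluster1 --type=merge -p "$(pxc_size_patch 5)"
```

A merge patch (--type=merge) is used because custom resources do not support strategic merge patches.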

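Check the read/write split: the new nodes have joined (for example, query runtime_mysql_servers in the ProxySQL admin interface).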

Scaling in (from 5 nodes back to 3)

kubectl -n pxc get pxc/cluster1 -o yaml | sed -e 's/size: 5/size: 3/' | kubectl -n pxc apply -f -

Create a user and sync it to ProxySQL

mysql -u root -p -P 31239 -h node1

create database database1;

GRANT ALL PRIVILEGES ON database1.* TO 'user1'@'%' IDENTIFIED BY 'password1';

Sync the users to ProxySQL:

kubectl -n pxc exec -it cluster1-pxc-proxysql-0 -- proxysql-admin --config-file=/etc/proxysql-admin.cnf --syncusers

Test

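Backup and restore

The scheduled (cron) backup was already created together with the cluster, from the backup.schedule section of cr.yaml.

Manual backup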

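cat <<EOF | kubectl apply -f -
apiVersion: "pxc.percona.com/v1alpha1"
kind: "PerconaXtraDBBackup"
metadata:
  name: "backup20190326"
spec:
  pxcCluster: "cluster1"
  volume:
    storageClass: local-storage
    size: 6G
EOF

List the backups

kubectl get pxc-backup

List the PXC clusters

kubectl get pxc

Restore data from a backup

Look up the backup name and the cluster name with the two commands above, then restore the chosen backup into the chosen cluster:

./deploy/backup/restore-backup.sh <backup-name> <cluster-name>

Copy a backup to the local machine

./deploy/backup/copy-backup.sh <backup-name> path/to/dir

Install PMM monitoring

helm repo add percona https://percona-charts.storage.googleapis.com

helm repo update

View the pmm-server chart parameters

helm inspect percona/pmm-server

Install

helm install percona/pmm-server --name pcx-monitoring --namespace=pxc --set platform=kubernetes --set persistence.storageClass=local-storage --set credentials.username=pmm

If the chart download fails, fetch https://percona-charts.storage.googleapis.com/pmm-server-1.17.1.tgz through a proxy, copy it to the local machine and unpack it:

tar -xvf ~/pmm-server-1.17.1.tgz

Then edit the StatefulSet template:

vi ./pmm-server/templates/statefulset.yaml

At line 115, add the following so that the volume claim uses the configured storage class (similar to the figure below):

storageClassName: {{ .Values.persistence.storageClass }}

Install from the local chart:

helm install ./pmm-server --name pcx-monitoring --namespace=pxc --set platform=kubernetes --set persistence.storageClass=local-storage --set credentials.username=pmm --set "credentials.password=<password>"

The password here must match the pmmserver value in my-cluster-secrets. If you have forgotten it, look it up with kubectl -n pxc get secret (the stored values are base64-encoded) and change it with kubectl -n pxc edit secret my-cluster-secrets.

Check the result.

Enable the PMM client

kubectl -n pxc get pxc

Get the pmm-server service name:

kubectl -n pxc get svc

kubectl -n pxc edit pxc cluster1

In spec.pmm, set enabled to true, set serverHost to the pmm-server service name returned by kubectl -n pxc get svc, and set serverUser to the PMM account name.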
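Instead of editing the cluster object interactively, the PMM client settings can be applied with a merge patch. Below is a minimal POSIX sh sketch; pmm_patch is a hypothetical helper, and the service name you pass must be the one kubectl -n pxc get svc reports for pmm-server (monitoring-service in the check below is only the default from cr.yaml). To avoid JSON escaping, the sketch accepts only DNS-style host names and simple account names.

```shell
#!/bin/sh
# Sketch (hypothetical helper): print a JSON merge patch that enables the PMM
# client in the PerconaXtraDBCluster spec.

pmm_patch() {
  host="$1"; user="$2"
  # allow only characters that need no JSON escaping
  case "$host" in
    ''|*[!a-z0-9.-]*) echo "error: invalid serverHost '$host'" >&2; return 1 ;;
  esac
  case "$user" in
    ''|*[!A-Za-z0-9_.-]*) echo "error: invalid serverUser '$user'" >&2; return 1 ;;
  esac
  printf '{"spec":{"pmm":{"enabled":true,"serverHost":"%s","serverUser":"%s"}}}\n' "$host" "$user"
}

# usage (replace <pmm-server-service> with the name from kubectl -n pxc get svc):
#   kubectl -n pxc patch pxc cluster1 --type=merge -p "$(pmm_patch <pmm-server-service> pmm)"
```

serverUser should be the same account passed to helm as credentials.username (pmm in the commands above).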