A First Look at Scrapy Downloader Middleware

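I have just started learning about downloader middleware, and it is fairly complex.

Most of the complexity comes from how requests and responses can be changed along the way. If no middleware intercepts anything, the flow is much easier to follow.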

Enable the middleware in settings.py:

```python
# settings.py
DOWNLOADER_MIDDLEWARES = {
    'test_middle_demo.middlewares.TestMiddleDemoDownloaderMiddleware': 543,
}
```
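Scrapy merges the `DOWNLOADER_MIDDLEWARES` setting with its built-in `DOWNLOADER_MIDDLEWARES_BASE`. Entries set to `None` are disabled, and the remaining entries are sorted by their number. The sketch below models that idea in plain Python. It is not Scrapy's own code. The `build_middleware_order` helper is hypothetical, and `BASE` is only a two-entry excerpt of the real defaults.

```python
def build_middleware_order(base, custom):
    """Merge base and custom middleware dicts and return the paths in call order.

    Simplified model: custom entries override base entries, entries whose
    value is None are disabled, and the rest are sorted by their number
    (lowest number = closest to the engine).
    """
    merged = dict(base)
    merged.update(custom)
    enabled = [(path, order) for path, order in merged.items() if order is not None]
    return [path for path, order in sorted(enabled, key=lambda item: item[1])]


# Excerpt of the built-in defaults (real Scrapy has many more entries)
BASE = {
    'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware': 500,
    'scrapy.downloadermiddlewares.retry.RetryMiddleware': 550,
}

CUSTOM = {
    'test_middle_demo.middlewares.TestMiddleDemoDownloaderMiddleware': 543,
    # Setting a value to None disables that middleware
    'scrapy.downloadermiddlewares.retry.RetryMiddleware': None,
}

if __name__ == '__main__':
    for path in build_middleware_order(BASE, CUSTOM):
        print(path)
```

With the value 543, the custom middleware lands between the user-agent middleware (500) and where the retry middleware (550) would have been.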

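```python
# middlewares.py
from scrapy import signals


class TestMiddleDemoDownloaderMiddleware:
    @classmethod
    def from_crawler(cls, crawler):
        # Scrapy calls this method to create the middleware instance.
        s = cls()
        # Call s.spider_opened whenever the spider_opened signal is sent
        crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
        return s
```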

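The `spider_opened` passed to `crawler.signals.connect()` is the method below. When the spider opens, Scrapy sends the `spider_opened` signal and calls that method.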

```python
    def spider_opened(self, spider):
        spider.logger.info('Spider opened: %s' % spider.name)
        print('1. The spider has started')
```
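To see what `crawler.signals.connect()` does in isolation, here is a toy stand-in. `ToySignals`, `ToyCrawler`, `DemoMiddleware` and `SPIDER_OPENED` are hypothetical and are not Scrapy's signal manager. They only model "register a callback now, call it when the signal is sent".

```python
class ToySignals:
    """Toy stand-in for crawler.signals: maps each signal to its callbacks."""

    def __init__(self):
        self._receivers = {}

    def connect(self, receiver, signal):
        # Remember the callback under this signal
        self._receivers.setdefault(signal, []).append(receiver)

    def send(self, signal, **kwargs):
        # Call every callback registered for this signal and collect the results
        return [receiver(**kwargs) for receiver in self._receivers.get(signal, [])]


class ToyCrawler:
    def __init__(self):
        self.signals = ToySignals()


SPIDER_OPENED = object()  # hypothetical signal object


class DemoMiddleware:
    @classmethod
    def from_crawler(cls, crawler):
        s = cls()
        crawler.signals.connect(s.spider_opened, signal=SPIDER_OPENED)
        return s

    def spider_opened(self, spider):
        return 'Spider opened: %s' % spider


crawler = ToyCrawler()
middleware = DemoMiddleware.from_crawler(crawler)

if __name__ == '__main__':
    # The "engine" opens the spider, which fires the signal
    print(crawler.signals.send(SPIDER_OPENED, spider='test_m'))  # → ['Spider opened: test_m']
```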

def process_request(self, request, spider):

# Called for each request that goes through the downloader

# middleware.

# Must either:

# - return None: continue processing this request

# - or return a Response object

# - or return a Request object

# - or raise IgnoreRequest: process_exception() methods of

# installed downloader middleware will be called

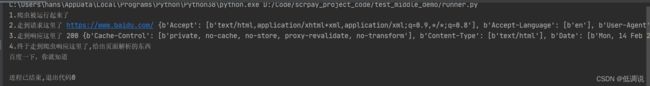

print('2.走到请求这里了', request.url, request.headers)

return None

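What each outcome of `process_request()` means:

Return `None`: nothing is intercepted. The request continues to the next middleware and finally to the downloader.

Return a `Response`: the remaining `process_request()` methods and the downloader are skipped. The response is passed back through the middlewares' `process_response()` methods toward the engine.

Return a `Request`: the chain stops. The new request goes back to the engine, and the engine hands it to the scheduler to go through the whole flow again.

Raise `IgnoreRequest`: the `process_exception()` methods of the installed downloader middlewares are called.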
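The return options above can be modelled in plain Python. This is a simplified simulation, not Scrapy's middleware manager. `Request`, `Response`, `run_process_request`, the three sample middlewares and `fake_download` are all hypothetical stand-ins, and `IgnoreRequest` is not modelled.

```python
from dataclasses import dataclass


@dataclass
class Request:
    url: str


@dataclass
class Response:
    url: str
    status: int = 200


def run_process_request(middlewares, request, download):
    """Walk the process_request chain; return ('response', r) or ('reschedule', r)."""
    for process_request in middlewares:  # ascending order: engine side first
        result = process_request(request)
        if result is None:
            continue  # no interception: try the next middleware
        if isinstance(result, Response):
            return 'response', result  # skip the rest of the chain and the downloader
        if isinstance(result, Request):
            return 'reschedule', result  # back to the engine, then the scheduler
        raise TypeError('process_request must return None, a Response or a Request')
    return 'response', download(request)  # every middleware returned None


downloaded = []  # URLs the fake downloader actually fetched


def fake_download(request):
    downloaded.append(request.url)
    return Response(request.url)


def pass_through(request):
    return None


def cache_hit(request):
    # Pretend URLs ending in /cached are already cached locally
    if request.url.endswith('/cached'):
        return Response(request.url)
    return None


def force_https(request):
    # Replace an http:// request with a new https:// one
    if request.url.startswith('http://'):
        return Request('https://' + request.url[len('http://'):])
    return None


chain = [pass_through, cache_hit, force_https]

if __name__ == '__main__':
    for url in ['https://example.com/', 'https://example.com/cached', 'http://example.com/']:
        print(url, run_process_request(chain, Request(url), fake_download))
    print('downloaded:', downloaded)
```

In real Scrapy, a `Response` returned from `process_request()` still travels back through the `process_response()` methods. This sketch stops at the point where the downloader would have been skipped.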

```python
    def process_response(self, request, response, spider):
        # Called with the response returned from the downloader.

        # Must either:
        # - return a Response object  # passed up toward the engine
        # - return a Request object   # handed to the engine, then the scheduler
        # - or raise IgnoreRequest
        print('3. Reached process_response', response.status, response.headers)
        return response
```
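`process_response()` runs in the opposite direction. Below is a minimal sketch, again built on hypothetical stand-ins rather than Scrapy classes. The chain is walked from the highest number down to the lowest. A middleware that returns a `Request` stops the chain so the request can be rescheduled. Here that middleware is a naive retry on any 5xx status. Scrapy's built-in `RetryMiddleware` does this properly and adds retry limits.

```python
from dataclasses import dataclass


@dataclass
class Request:
    url: str


@dataclass
class Response:
    url: str
    status: int


def run_process_response(middlewares, request, response):
    """middlewares: list of (order, func) pairs, func(request, response)."""
    # Descending order: the middleware closest to the downloader runs first
    for order, process_response in sorted(middlewares, key=lambda pair: pair[0], reverse=True):
        result = process_response(request, response)
        if isinstance(result, Request):
            return 'reschedule', result  # stop here; engine -> scheduler
        response = result  # hand the (possibly modified) response to the next one
    return 'response', response  # reaches the engine, then the spider


calls = []  # records which middleware saw which status, in call order


def log_status(request, response):
    calls.append(('log_status', response.status))
    return response


def naive_retry(request, response):
    calls.append(('naive_retry', response.status))
    if response.status >= 500:
        return Request(request.url)  # ask for the same page again
    return response


chain = [(100, log_status), (550, naive_retry)]

if __name__ == '__main__':
    req = Request('https://example.com/')
    print(run_process_response(chain, req, Response(req.url, 200)))
    print(run_process_response(chain, req, Response(req.url, 503)))
    print(calls)
```

In real Scrapy, re-requesting a URL that was already seen also needs `dont_filter=True` on the new `Request`. Without it, the scheduler's duplicate filter would drop the request.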

```python
import scrapy
from bs4 import BeautifulSoup  # the 'lxml' parser below also needs lxml installed


class TestMSpider(scrapy.Spider):
    name = 'test_m'
    allowed_domains = ['baidu.com']
    start_urls = ['https://www.baidu.com/']

    def parse(self, response, **kwargs):
        print('4. Finally reached the spider callback; parse the page')
        soup = BeautifulSoup(response.text, 'lxml')
        title = soup.find('title').text
        print(title)
```
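The spider pulls in `bs4` and `lxml` just to read one `<title>` tag. Scrapy's own selectors can do the same with `response.css('title::text').get()`. The sketch below shows a dependency-free version of the extraction using the standard library's `html.parser`. `TitleParser` and `extract_title` are hypothetical helpers, and the sample HTML is made up.

```python
from html.parser import HTMLParser


class TitleParser(HTMLParser):
    """Collect the text inside the first <title> element."""

    def __init__(self):
        super().__init__()
        self._in_title = False
        self.title = None

    def handle_starttag(self, tag, attrs):
        if tag == 'title' and self.title is None:
            self._in_title = True
            self.title = ''

    def handle_endtag(self, tag):
        if tag == 'title':
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def extract_title(html):
    parser = TitleParser()
    parser.feed(html)
    parser.close()
    return parser.title


sample = '<html><head><title>Example page</title></head><body></body></html>'

if __name__ == '__main__':
    print(extract_title(sample))  # → Example page
```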

For example, what happens when there are several downloader middlewares, as in the code below?

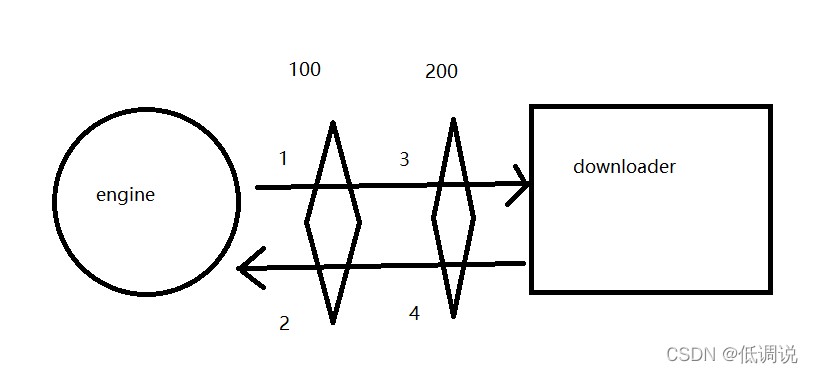

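Key point:

The numbers 100 and 200 set each middleware's position between the engine and the downloader. The smaller the number, the closer the middleware is to the engine.

The calls run in a straight line. Requests pass through `process_request()` in ascending order (100, then 200). Responses come back through `process_response()` in descending order (200, then 100). So for each request, the code below prints 1, 3, then 4, 2.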

```python
DOWNLOADER_MIDDLEWARES = {
    'test_middle_demo.middlewares.TestMiddleDemoDownloaderMiddleware_01': 100,
    'test_middle_demo.middlewares.TestMiddleDemoDownloaderMiddleware_02': 200,
}
```

```python
class TestMiddleDemoDownloaderMiddleware_01:
    def process_request(self, request, spider):
        print(1)
        return None

    def process_response(self, request, response, spider):
        print(2)
        return response


class TestMiddleDemoDownloaderMiddleware_02:
    def process_request(self, request, spider):
        print(3)
        return None

    def process_response(self, request, response, spider):
        print(4)
        return response
```
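The call order can be checked without running a crawl by driving the two classes by hand. This is a hand-written simulation of the calling order, not Scrapy's code. The `simulate` helper and the plain `object()` stand-ins for the request and spider are hypothetical. The settings dict here maps classes rather than dotted paths. The `print` calls are replaced by appends to `log` so the result can be inspected.

```python
log = []  # records the numbers the original classes would print


class TestMiddleDemoDownloaderMiddleware_01:
    def process_request(self, request, spider):
        log.append(1)
        return None

    def process_response(self, request, response, spider):
        log.append(2)
        return response


class TestMiddleDemoDownloaderMiddleware_02:
    def process_request(self, request, spider):
        log.append(3)
        return None

    def process_response(self, request, response, spider):
        log.append(4)
        return response


# Hypothetical: classes used as keys instead of dotted import paths
DOWNLOADER_MIDDLEWARES = {
    TestMiddleDemoDownloaderMiddleware_01: 100,
    TestMiddleDemoDownloaderMiddleware_02: 200,
}


def simulate(middleware_orders, request, spider, download):
    ordered = [cls() for cls, _ in sorted(middleware_orders.items(), key=lambda item: item[1])]
    for mw in ordered:  # ascending: engine -> downloader
        if mw.process_request(request, spider) is not None:
            raise NotImplementedError('this sketch only models the None path')
    response = download(request)
    for mw in reversed(ordered):  # descending: downloader -> engine
        response = mw.process_response(request, response, spider)
    return response


if __name__ == '__main__':
    simulate(DOWNLOADER_MIDDLEWARES, object(), object(), lambda request: 'fake response')
    print(log)  # → [1, 3, 4, 2]
```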