机器学习基石笔记(四):学习的可行性

文章目录

- Lecture 4: Feasibility of Learning

-

- Learning is Impossible?

-

- No Free Lunch

- Fun Time

- Probability to the Rescue

-

- Hoeffding’s Inequality

- Fun Time

- Connection to Learning

-

- Fun Time

- Connection to Real Learning

-

- Multiple h

- BAD Sample and BAD Data

- The ‘Statistical’ Learning Flow

- Fun Time

- Summary

-

- 讲义总结

- 参考文献

Lecture 4: Feasibility of Learning

Learning is Impossible?

absolutely no free lunch outside D D D

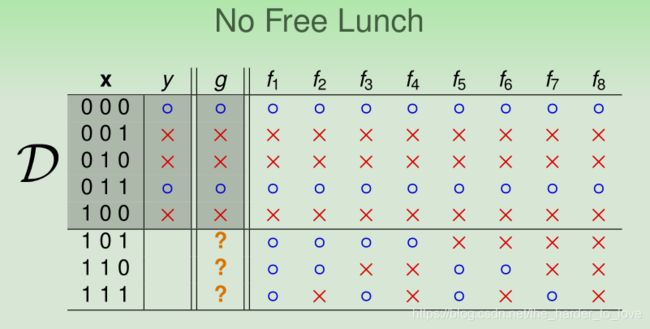

No Free Lunch

在已知的样本( D x D_x Dx)中, f 1 ∼ f 8 f_1 \sim f_8 f1∼f8都符合( y x = g x y_x=g_x yx=gx),但不同的f所预测的y完全不同。

你不能说这八个模型中谁比谁好,也就是说这八个模型预测都是对的,

Fun Time

This is a popular ‘brain-storming’ problem, with a claim that 2% of the world’s cleverest population can crack its ‘hidden pattern’.

( 5 , 3 , 2 ) → 151022 , ( 7 , 2 , 5 ) → ? (5,3,2) → 151022, (7,2,5) → ? (5,3,2)→151022,(7,2,5)→?

It is like a ‘learning problem’ with N = 1 , x 1 = ( 5 , 3 , 2 ) , y 1 = 151022 N = 1, x_1 = (5,3,2), y_1 = 151022 N=1,x1=(5,3,2),y1=151022.

Learn a hypothesis from the one example to predict on x = (7,2,5).

What is your answer?

1 151026

2 143547

3 I need more examples to get the correct answer

4 there is no ‘correct’ answer ✓ \checkmark ✓

Explanation

根据“没有免费的午餐”,我们根据有限的输入,理论上在输入外可以得到一定数量的等价模型。

所以输入越多,归纳得到的规律 f f f就越靠近 g g g。

但是实际上我们永远得不到g,除非你拥有所有的样本(但如果你拥有所有的样本,直接对比查询即可,用不着用模型模拟了)或者加上其他的条件/倾向。

? 所以说数列要写通项公式才规范,小时候写的数学规律题目其实都不严谨,因为我发现一道填空题可能有多个答案,而标准答案往往只给一个。

Probability to the Rescue

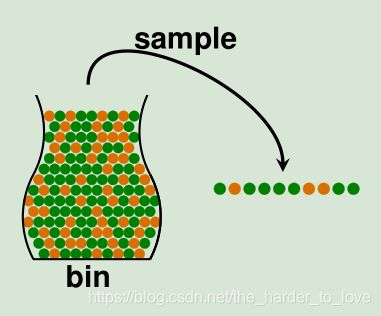

我们使用统计学与概率论工具,用已知样本的分布预估未知样本的分布。简单来说类似于抽样检测(实际上我们所做的相反:用样本估计整体),如下图所示。

Hoeffding’s Inequality

In big sample ( N N N large), ν ν ν is probably close to µ µ µ (within ϵ \epsilon ϵ)

P [ ∣ ν − μ ∣ > ϵ ] ≤ 2 exp ( − 2 ϵ 2 N ) \mathbb{P}[|\nu-\mu|>\epsilon] \leq 2 \exp \left(-2 \epsilon^{2} N\right) P[∣ν−μ∣>ϵ]≤2exp(−2ϵ2N)

for marbles, coin, polling, …

概率上界的大小取决于宽松间隙( ϵ \epsilon ϵ)和样本数量( N N N),两者越大,概率上界就越小。

ν ≈ μ \nu \approx \mu ν≈μ,概率最大,接近1.

N N N越大,越有可能用 ν \nu ν推测 μ \mu μ

Fun Time

Let μ \mu μ = 0.4. Use Hoeffding’s Inequality

P [ ∣ ν − μ ∣ > ϵ ] ≤ 2 exp ( − 2 ϵ 2 N ) \mathbb{P}[|\nu-\mu|>\epsilon] \leq 2 \exp \left(-2 \epsilon^{2} N\right) P[∣ν−μ∣>ϵ]≤2exp(−2ϵ2N)

to bound the probability that a sample of 10 marbles will have ν ≤ 0.1 \nu ≤ 0.1 ν≤0.1. What bound do you get?

1 0.67

2 0.40

3 0.33 ✓ \checkmark ✓

4 0.05

Explanation

∣ ν − μ ∣ ≥ 0.3 ∴ ϵ = 0.3 又 N = 10 b o u n d = 0.3305977764431732 |\nu-\mu| \ge 0.3 \\ \therefore \epsilon=0.3 \\ 又 \, N=10 \\ bound = 0.3305977764431732 ∣ν−μ∣≥0.3∴ϵ=0.3又N=10bound=0.3305977764431732

? 霍夫丁不等式告诉了数据之间差距的概率上界,若知道数据的概率分布(概率密度函数|分布列),可积分直接求出概率。

霍夫丁不等式是统计学和概率论中最重要的几个定理之一。

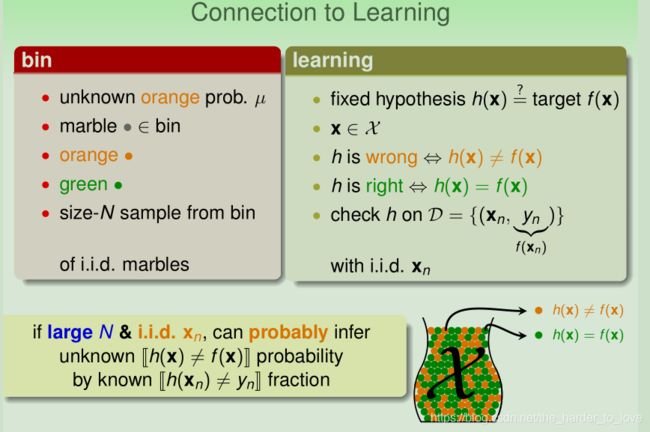

Connection to Learning

E in ( h ) = 1 N ∑ n = 1 N [ h ( x n ) ≠ y n ] ⇒ E out ( h ) = E x ∼ P [ h ( x ) ≠ f ( x ) ] {E_{\text { in }}(\mathrm{h})=\frac{1}{N} \sum_{n=1}^{N}\left[h\left(\mathbf{x}_{n}\right) \neq y_{n}\right]} \Rightarrow {E_{\text { out }}(\mathrm{h})=\underset{\mathbf{x} \sim P}{\mathcal{E}}[h(\mathbf{x}) \neq f(\mathbf{x})]} E in (h)=N1n=1∑N[h(xn)̸=yn]⇒E out (h)=x∼PE[h(x)̸=f(x)]

如果 N N N足够大,我们可以用 E i n ( h ) E_{in}(h) Ein(h)(in-sample error)来估计 E o u t ( h ) E_{out}(h) Eout(h)(out-sample error)。

P [ ∣ E in ( h ) − E out ( h ) ∣ > ϵ ] ≤ 2 exp ( − 2 ϵ 2 N ) \mathbb{P}\left[ | E_{\text { in }}(h)-E_{\text { out }}(h) |>\epsilon\right] \leq 2 \exp \left(-2 \epsilon^{2} N\right) P[∣E in (h)−E out (h)∣>ϵ]≤2exp(−2ϵ2N)

∀ b i g N , s m a l l E E i n ( h ) ≈ E o u t ( h ) E i n ( h ) s m a l l ⇒ g ≈ f E i n ( h ) n o t s m a l l ⇒ g ≠ f \forall \ big \ N, \ small \ \mathcal{E} \\ E_{\mathrm{in}}(h) \approx E_{\mathrm{out}}(h) \\ E_{\mathrm{in}}(h) \ small \Rightarrow g \approx f \\ E_{\mathrm{in}}(h) \ not \ small \Rightarrow g \neq f ∀ big N, small EEin(h)≈Eout(h)Ein(h) small⇒g≈fEin(h) not small⇒g̸=f

Fun Time

Your friend tells you her secret rule in investing in a particular stock: ‘Whenever the stock goes down in the morning, it will go up in the afternoon;vice versa.’

To verify the rule, you chose 100 days uniformly at random from the past 10 years of stock data, and found that 80 of them satisfy the rule.

What is the best guarantee that you can get from the verification?

1 You’ll definitely be rich by exploiting the rule in the next 100 days.

2 You’ll likely be rich by exploiting the rule in the next 100 days, if the market behaves similarly to the last 10 years. ✓ \checkmark ✓

3 You’ll likely be rich by exploiting the ‘best rule’ from 20 more friends in the next 100 days.

4 You’d definitely have been rich if you had exploited the rule in the past 10 years.

Explanation

股票市场风云变幻,你用历史数据搭建了一个优秀的模型当且仅当未来的趋势跟历史发展一致时,你才能利用这个模型获益。

从霍夫丁不等式的角度来看:历史数据搭建了一个优秀的模型( E i n ( h ) s m a l l E_{\mathrm{in}}(h) \ small Ein(h) small), 且未来的趋势跟历史发展一致时 E i n ( h ) ≈ E o u t ( h ) E_{\mathrm{in}}(h) \approx E_{\mathrm{out}}(h) Ein(h)≈Eout(h),那么模型对于未来趋势预测的效果优秀( E o u t ( h ) s m a l l E_{\mathrm{out}}(h) \ small Eout(h) small),你最终就能获益。

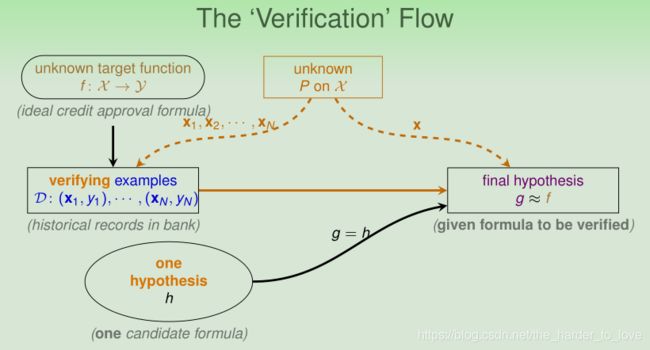

Connection to Real Learning

之前是验证1个假设,别忘了,我们的假设集有多个假设,来看看真实的学习环境下,霍夫丁不等式应用的改变。

Multiple h

假设现在有150名同学,每人抛五次硬币,问出现连续朝上五枚硬币的概率是多少?

1 − ( 31 32 ) 150 = 99.145 % 1-\left(\frac{31}{32}\right)^{150} = 99.145 \% 1−(3231)150=99.145%

由于同学数量高达150名,出现连续朝上五枚硬币的概率达到 99 99% 99,倘若我们用硬币全部朝上的数据而选择一直猜硬币朝上, E i n = 0 E_in = 0 Ein=0 and E o u t = 1 2 E_out = \frac{1}{2} Eout=21

BAD Sample and BAD Data

由于假设集增多,极端数据出现的可能性增大,由于我们的选择,导致 E i n E_{in} Ein and E o u t E_{out} Eout差别过大,我们将这样的选择称为 B a d S a m p l e Bad \ Sample Bad Sample.

E o u t E_{out} Eout big (far from f f f), but E i n E_{in} Ein small (correct on most examples) called BAD Data.

P D [ B A D D ] = ∑ all possible D P ( D ) ⋅ [ B A D D ] \mathbb{P}_{\mathcal{D}}[\mathrm{BAD} \ \mathcal{D}]=\sum_{\text { all possible } \mathcal{D}} \mathbb{P}(\mathcal{D}) \cdot[\mathrm{BAD} \ \mathcal{D}] PD[BAD D]= all possible D∑P(D)⋅[BAD D]

$$ \begin{aligned} \ \mathbb{P}_{\mathcal{D}}[\mathrm{BAD} \ \mathcal{D}] & = \mathbb{P}_{\mathcal{D}}[\mathrm{BAD} \ \mathcal{D} \ for \ h_1 \ or \ \mathrm{BAD} \ \mathcal{D} \ for \ h_2 \ or \ ... \ or \ \mathrm{BAD} \ \mathcal{D} \ for \ h_M] \\ \ & \le \mathbb{P}_{\mathcal{D}}[\mathrm{BAD} \ \mathcal{D} \ for \ h_1]+\mathbb{P}_{\mathcal{D}}[\mathrm{BAD} \ \mathcal{D} \ for \ h_2]+...+\mathbb{P}_{\mathcal{D}}[\mathrm{BAD} \ \mathcal{D} \ for \ h_M](union \ bound) \\ \ & \leq 2 \exp \left(-2 \epsilon^{2} N\right)+2 \exp \left(-2 \epsilon^{2} N\right)+\ldots+2 \exp \left(-2 \epsilon^{2} N\right) \\ \ & {=2 M \exp \left(-2 \epsilon^{2} N\right)} \end{aligned} $$

The ‘Statistical’ Learning Flow

若假设集( ∣ H ∣ |H| ∣H∣)大小为有限的 M M M, N N N足够大,通过机器学习演算法( A A A)学习到的对于任意一个 g g g,都有 E i n ( g ) ≈ E o u t ( g ) E_{\mathrm{in}}(g) \approx E_{\mathrm{out}}(g) Ein(g)≈Eout(g).

若此时 A A A学到一个 E i n ( g ) ≈ 0 E_{\mathrm{in}}(g) \approx 0 Ein(g)≈0,就能保证 E o u t ( g ) ≈ 0 E_{\mathrm{out}}(g) \approx 0 Eout(g)≈0 => learning impossible 真正学到了知识:-).

Fun Time

Consider 4 hypotheses.

h 1 ( x ) = sign ( x 1 ) , h 2 ( x ) = sign ( x 2 ) h 3 ( x ) = sign ( − x 1 ) , h 4 ( x ) = sign ( − x 2 ) \begin {array}{c}{h_{1}(\mathbf{x})=\operatorname{sign}\left(x_{1}\right), \quad h_{2}(\mathbf{x})=\operatorname{sign}\left(x_{2}\right)} \\ {h_{3}(\mathbf{x})=\operatorname{sign}\left(-x_{1}\right), \quad h_{4}(\mathbf{x})=\operatorname{sign}\left(-x_{2}\right)}\end{array} h1(x)=sign(x1),h2(x)=sign(x2)h3(x)=sign(−x1),h4(x)=sign(−x2)

For any N N N and ϵ \epsilon ϵ, which of the following statement is not true?

1 the BAD data of h 1 h_1 h1 and the BAD data of h 2 h_2 h2 are exactly the same. ✓ \checkmark ✓

2 the BAD data of h 1 h_1 h1 and the BAD data of h 3 h_3 h3 are exactly the same.

3 P D [ B A D f o r s o m e h k ] ≤ 8 exp ( − 2 ϵ 2 N ) \mathbb{P_D}[\mathrm{BAD} \ for \ some \ h_k] \leq 8 \exp \left(-2 \epsilon^{2} N\right) PD[BAD for some hk]≤8exp(−2ϵ2N)

4 P D [ B A D f o r s o m e h k ] ≤ 4 exp ( − 2 ϵ 2 N ) \mathbb{P_D}[\mathrm{BAD} \ for \ some \ h_k] \leq 4 \exp \left(-2 \epsilon^{2} N\right) PD[BAD for some hk]≤4exp(−2ϵ2N)

Explanation

参考多个 g g g中,霍夫丁不等式的上界推导公式。

对于BAD data x x x, E i n E_{in} Ein和 E o u t E_{out} Eout相差大,那么假设h对于 − x -x −x,所得到 − E i n -E_{in} −Ein和 − E o u t -E_{out} −Eout相差也会大,所以 x x x和 − x -x −x都是BAD data.

所以 sign ( x ) \operatorname{sign}\left(x_{}\right) sign(x)和 sign ( − x ) \operatorname{sign}\left(-x_{}\right) sign(−x)有相同的BAD data.

Summary

讲义总结

假设集( ∣ H ∣ |H| ∣H∣)大小为有限的 M M M, N N N足够大,通过机器学习演算法( A A A)学习到的对于任意一个 g g g,都有 E i n ( g ) ≈ E o u t ( g ) E_{\mathrm{in}}(g) \approx E_{\mathrm{out}}(g) Ein(g)≈Eout(g).

若此时 A A A学到一个 E i n ( g ) ≈ 0 E_{\mathrm{in}}(g) \approx 0 Ein(g)≈0,就能保证 E o u t ( g ) ≈ 0 E_{\mathrm{out}}(g) \approx 0 Eout(g)≈0 => learning impossible 真正学到了知识:-).

学习是可行的。

Learning is Impossible?

absolutely no free lunch outside D D D

Probability to the Rescue

probably approximately correct outside D D D

Connection to Learning

verification possible if E i n ( h ) E_{in}(h) Ein(h) small for fixed h h h

Connection to Real Learning

learning possible if ∣ H ∣ |H| ∣H∣ finite and E i n ( g ) E_{in}(g) Ein(g) small

参考文献

《Machine Learning Foundations》(机器学习基石)—— Hsuan-Tien Lin (林轩田)