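Stereo Vision on the Jetson Nano B01

[Work in progress]

0. CSI-Camera

The Jetson Nano B01 has two CSI camera connectors. Plugging in two IMX219 modules with a 120° field of view gives you a stereo camera.

Camera code for this setup is already available on GitHub (the CSI-Camera repository), covering single-camera capture, dual-camera capture, and face detection. You can try it out yourself.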

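1. Capture & Save

Starting from that code, add an OpenCV step that saves images. The files are named left1.bmp, left2.bmp, left3.bmp, ... and right1.bmp, right2.bmp, right3.bmp, ... so that each left view is paired with the right view of the same index.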
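The naming scheme can be sketched as a small helper. This is only an illustration: `pair_paths` and its `out_dir` and `ext` parameters are hypothetical names, not part of the original script, which builds the same strings inline.

```python
import os


def pair_paths(index, out_dir="pic", ext=".bmp"):
    """Return (left_path, right_path) for the index-th saved stereo pair."""
    left = os.path.join(out_dir, "left%d%s" % (index, ext))
    right = os.path.join(out_dir, "right%d%s" % (index, ext))
    return left, right


# Show the file names of the first three pairs
for i in range(1, 4):
    print(pair_paths(i))
```

Sharing one index between the two names is what lets the calibration toolbox later match each left image to its right counterpart.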

cams_save.py

# MIT License
# Copyright (c) 2019,2020 JetsonHacks
# See license
# A very simple code snippet
# Using two CSI cameras (such as the Raspberry Pi Version 2) connected to a
# NVIDIA Jetson Nano Developer Kit (Rev B01) using OpenCV
# Drivers for the camera and OpenCV are included in the base image in JetPack 4.3+

# This script opens a window and shows the stream from each camera side by side.
# The camera streams are each read in their own thread, as when done sequentially there
# is a noticeable lag
# For better performance, the next step would be to experiment with having the window display
# in a separate thread

import os
import threading

import cv2
import numpy as np


class CSI_Camera:

    def __init__(self):
        # OpenCV video capture element
        self.video_capture = None
        # The last captured image from the camera
        self.frame = None
        self.grabbed = False
        # The thread where the video capture runs
        self.read_thread = None
        self.read_lock = threading.Lock()
        self.running = False

    def open(self, gstreamer_pipeline_string):
        try:
            self.video_capture = cv2.VideoCapture(
                gstreamer_pipeline_string, cv2.CAP_GSTREAMER
            )
        except RuntimeError:
            self.video_capture = None
            print("Unable to open camera")
            print("Pipeline: " + gstreamer_pipeline_string)
            return
        # Grab the first frame to start the video capturing
        self.grabbed, self.frame = self.video_capture.read()

    def start(self):
        if self.running:
            print("Video capturing is already running")
            return None
        # Create a thread to read the camera image
        if self.video_capture is not None:
            self.running = True
            self.read_thread = threading.Thread(target=self.updateCamera)
            self.read_thread.start()
        return self

    def stop(self):
        self.running = False
        if self.read_thread is not None:
            self.read_thread.join()

    def updateCamera(self):
        # This is the thread to read images from the camera
        while self.running:
            try:
                grabbed, frame = self.video_capture.read()
                with self.read_lock:
                    self.grabbed = grabbed
                    self.frame = frame
            except RuntimeError:
                print("Could not read image from camera")
                # FIX ME - stop and cleanup thread
                # Something bad happened

    def read(self):
        with self.read_lock:
            frame = self.frame.copy()
            grabbed = self.grabbed
        return grabbed, frame

    def release(self):
        if self.video_capture is not None:
            self.video_capture.release()
            self.video_capture = None
        # Now kill the thread
        if self.read_thread is not None:
            self.read_thread.join()


# gstreamer_pipeline returns a GStreamer pipeline for capturing from the CSI camera
# Flip the image by setting the flip_method (most common values: 0 and 2)
# display_width and display_height determine the size of each camera pane in the window on the screen
# The frame rate of the CSI camera on the Nano is set through GStreamer
# Here we directly select sensor_mode 3 (1280x720, 59.9999 fps)
def gstreamer_pipeline(
    sensor_id=0,
    sensor_mode=3,
    capture_width=1280,
    capture_height=720,
    display_width=1280,
    display_height=720,
    framerate=30,
    flip_method=0,
):
    return (
        "nvarguscamerasrc sensor-id=%d sensor-mode=%d ! "
        "video/x-raw(memory:NVMM), "
        "width=(int)%d, height=(int)%d, "
        "format=(string)NV12, framerate=(fraction)%d/1 ! "
        "nvvidconv flip-method=%d ! "
        "video/x-raw, width=(int)%d, height=(int)%d, format=(string)BGRx ! "
        "videoconvert ! "
        "video/x-raw, format=(string)BGR ! appsink"
        % (
            sensor_id,
            sensor_mode,
            capture_width,
            capture_height,
            framerate,
            flip_method,
            display_width,
            display_height,
        )
    )


def start_cameras():
    left_camera = CSI_Camera()
    left_camera.open(gstreamer_pipeline(sensor_id=0, sensor_mode=3, flip_method=0,
                                        display_height=360, display_width=640))
    left_camera.start()

    right_camera = CSI_Camera()
    right_camera.open(gstreamer_pipeline(sensor_id=1, sensor_mode=3, flip_method=0,
                                         display_height=360, display_width=640))
    right_camera.start()

    cv2.namedWindow("CSI Cameras", cv2.WINDOW_AUTOSIZE)

    if (not left_camera.video_capture.isOpened()
            or not right_camera.video_capture.isOpened()):
        # Cameras did not open, or no camera attached
        print("Unable to open any cameras")
        left_camera.stop()
        left_camera.release()
        right_camera.stop()
        right_camera.release()
        cv2.destroyAllWindows()
        return

    # cv2.imwrite fails silently if the target folder is missing
    os.makedirs("pic", exist_ok=True)
    count = 0

    while cv2.getWindowProperty("CSI Cameras", 0) >= 0:
        _, left_image = left_camera.read()
        _, right_image = right_camera.read()
        camera_images = np.hstack((left_image, right_image))
        cv2.imshow("CSI Cameras", camera_images)

        # waitKey also pumps the GUI event loop, so the window keeps refreshing
        keyCode = cv2.waitKey(30) & 0xFF
        if keyCode == ord("s"):  # press s to save the current pair
            count += 1
            cv2.imwrite("./pic/left" + str(count) + ".bmp", left_image)
            cv2.imwrite("./pic/right" + str(count) + ".bmp", right_image)
        if keyCode == 27:  # press Esc to quit
            break

    left_camera.stop()
    left_camera.release()
    right_camera.stop()
    right_camera.release()
    cv2.destroyAllWindows()


if __name__ == "__main__":
    start_cameras()

Once the script is running, press s to save the currently displayed left and right views into the pic folder, and press Esc to quit.

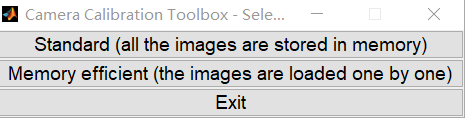
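Before calibrating, it can help to confirm that every left image has a matching right image. The sketch below is an optional check and not part of the original workflow; `unpaired_indices` is a hypothetical helper that only inspects file names following the leftN.bmp / rightN.bmp scheme.

```python
import re


def unpaired_indices(filenames, ext="bmp"):
    """Return the sorted indices that appear for only one of the two views."""
    pattern = re.compile(r"^(left|right)(\d+)\." + re.escape(ext) + r"$")
    seen = {"left": set(), "right": set()}
    for name in filenames:
        match = pattern.match(name)
        if match:
            seen[match.group(1)].add(int(match.group(2)))
    # Symmetric difference: indices present on one side only
    return sorted(seen["left"] ^ seen["right"])


# Example with a made-up listing in which right2.bmp is missing
print(unpaired_indices(["left1.bmp", "right1.bmp", "left2.bmp"]))
```

On the Nano, the listing would typically come from `os.listdir("pic")`; an empty result means every saved pair is complete.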

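2. Stereo calibration

2.1 Calibrating the intrinsic and extrinsic parameters

This step uses the Camera Calibration Toolbox for MATLAB.

2.1.1 Extract the toolbox into a TOOLBOX_calib folder and copy all images from the pic folder into it.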

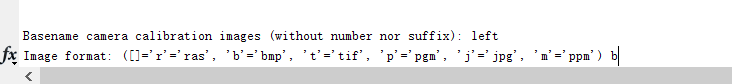

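2.1.2 In MATLAB, change the current folder to this path and enter the following at the command line:

calib_gui

The following window pops up: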

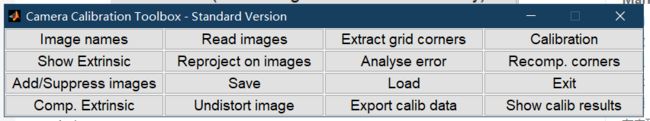

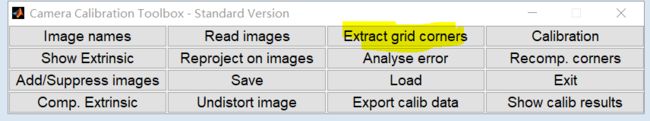

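2.1.3 Select Standard. The main calibration panel appears.

2.1.4 Calibrate with the images from the left camera first

Click Image names, then switch to the command window.

The command window prompts for the image base name: type left and press Enter.

Then enter the letter for your image format. For .bmp files, type b.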

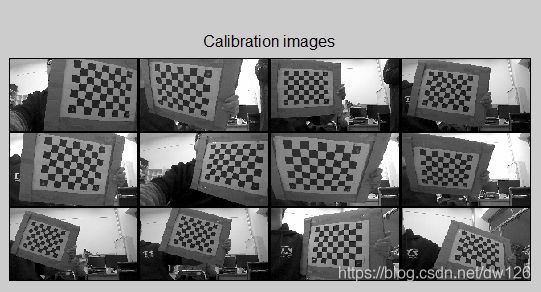

MATLAB automatically loads every image whose name starts with left. As shown, I have 12 images here.

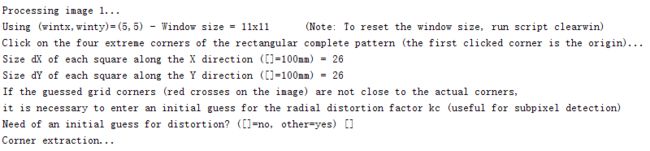

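2.1.5 Extract the grid corners

Select all images and set the corner-finder window size and related options.

In the figure window that pops up, click the outer corners of the board. The toolbox then marks out the grid region automatically.

Now watch the command window! Enter the square size of your own checkerboard when prompted. Mine is 26 mm.

Repeat this for every left image to finish.

2.1.6 Return to the GUI and click Calibration

You can also visualize the extrinsic parameters with Show Extrinsic.

Important! Click Save to write the results to a .mat file.

2.1.7 Rename the result file and repeat for the right camera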
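The square size is what gives the calibration its metric scale: the toolbox places the board corners on a regular grid with that spacing, so the translation it later reports is in the same unit (millimetres here). A minimal sketch of that grid, assuming a hypothetical `board_points` helper and a made-up 3 x 2 corner layout:

```python
def board_points(cols, rows, square_mm=26.0):
    """World coordinates (X, Y, Z) in mm of the grid corners, row by row.

    The board is planar, so Z is 0 for every corner.
    """
    return [(c * square_mm, r * square_mm, 0.0)
            for r in range(rows)
            for c in range(cols)]


# A tiny 3 x 2 grid with 26 mm squares
for point in board_points(cols=3, rows=2):
    print(point)
```

Entering the wrong square size does not change the intrinsics, but it scales every distance measured later by the same factor.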

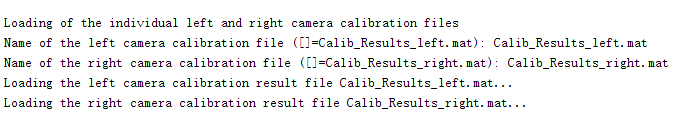

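To make the stereo calibration easier later, rename the saved file to Calib_Results_left.mat.

Repeat steps 2.1.4 to 2.1.6 with right in place of left, and rename the parameter file saved this time to Calib_Results_right.mat.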

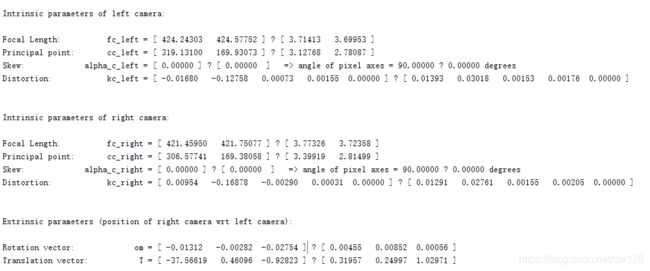

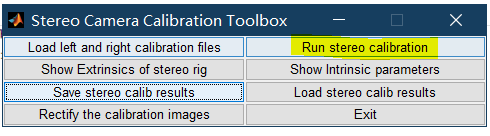

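2.2 Run the stereo calibration

Close calib_gui and enter stereo_gui at the command line. When prompted, enter the calibration files for the left and right views (Calib_Results_left.mat and Calib_Results_right.mat).

Click Run stereo calibration.

This yields the intrinsic parameters of both cameras together with the rotation vector om and the translation vector T between them.

3. Tune the parameters and display the depth map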

camera_configs.py

import cv2
import numpy as np

left_camera_matrix = np.array([[424.24303, 0., 319.13100],
                               [0., 424.57752, 169.93073],
                               [0., 0., 1.]])
left_distortion = np.array([[-0.01680, -0.12758, 0.00073, 0.00155, 0.00000]])

right_camera_matrix = np.array([[421.45950, 0., 306.57741],
                                [0., 421.75077, 169.38058],
                                [0., 0., 1.]])
right_distortion = np.array([[0.00954, -0.16878, -0.00290, 0.00031, 0.00000]])

om = np.array([-0.01312, -0.00282, -0.02754])  # rotation vector between the two cameras
R = cv2.Rodrigues(om)[0]  # convert om to a rotation matrix with the Rodrigues transform
T = np.array([-37.56619, 0.46096, -0.92823])  # translation vector, in mm like the checkerboard squares

# Image size as (width, height). It must match the 640x360 frames that were
# saved for calibration and that are remapped at run time.
size = (640, 360)

# Stereo rectification
R1, R2, P1, P2, Q, validPixROI1, validPixROI2 = cv2.stereoRectify(
    left_camera_matrix, left_distortion,
    right_camera_matrix, right_distortion,
    size, R, T)

# Compute the rectification maps
left_map1, left_map2 = cv2.initUndistortRectifyMap(
    left_camera_matrix, left_distortion, R1, P1, size, cv2.CV_16SC2)
right_map1, right_map2 = cv2.initUndistortRectifyMap(
    right_camera_matrix, right_distortion, R2, P2, size, cv2.CV_16SC2)
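The call `cv2.Rodrigues(om)` turns the rotation vector reported by the toolbox into a 3x3 rotation matrix. As a rough illustration of what that conversion does, here is a pure-Python sketch of the Rodrigues formula. The function name `rodrigues` is hypothetical, and this is not OpenCV's implementation (which also returns a Jacobian).

```python
import math


def rodrigues(om):
    """Convert a rotation vector (unit axis times angle in radians) to a 3x3 matrix."""
    theta = math.sqrt(sum(v * v for v in om))
    if theta < 1e-12:
        # No rotation: return the identity matrix
        return [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    kx, ky, kz = (v / theta for v in om)  # unit rotation axis
    c, s = math.cos(theta), math.sin(theta)
    t = 1.0 - c
    return [
        [c + kx * kx * t, kx * ky * t - kz * s, kx * kz * t + ky * s],
        [ky * kx * t + kz * s, c + ky * ky * t, ky * kz * t - kx * s],
        [kz * kx * t - ky * s, kz * ky * t + kx * s, c + kz * kz * t],
    ]


# Rotation vector from the stereo calibration above
for row in rodrigues([-0.01312, -0.00282, -0.02754]):
    print(row)
```

Because the two cameras are mounted almost parallel, the components of om are small and the resulting matrix stays close to the identity.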

Generating the depth map in real time with the BM (block matching) algorithm

import cv2

import time

import numpy as np

import camera_configs

# CSI_Camera and gstreamer_pipeline are identical to the definitions in
# cams_save.py above, so import them instead of repeating them here.
from cams_save import CSI_Camera, gstreamer_pipeline

def start_cameras():
    # Window with two trackbars for tuning the StereoBM parameters
    cv2.namedWindow("bar")
    cv2.createTrackbar("num", "bar", 0, 10, lambda x: None)
    cv2.createTrackbar("blockSize", "bar", 5, 255, lambda x: None)

    # The display window must exist before the mouse callback is attached
    # and before getWindowProperty is polled in the loop below
    cv2.namedWindow("CSI Cameras", cv2.WINDOW_AUTOSIZE)
    threeD = None  # per-pixel 3D coordinates of the latest frame

    def callbackFunc(e, x, y, f, p):
        # A left click prints the 3D coordinates of the clicked pixel
        if e == cv2.EVENT_LBUTTONDOWN and threeD is not None:
            print(threeD[y][x])

    cv2.setMouseCallback("CSI Cameras", callbackFunc, None)

    left_camera = CSI_Camera()
    left_camera.open(gstreamer_pipeline(sensor_id=0, sensor_mode=3, flip_method=0,
                                        display_height=360, display_width=640))
    left_camera.start()

    right_camera = CSI_Camera()
    right_camera.open(gstreamer_pipeline(sensor_id=1, sensor_mode=3, flip_method=0,
                                         display_height=360, display_width=640))
    right_camera.start()

    if (not left_camera.video_capture.isOpened()
            or not right_camera.video_capture.isOpened()):
        # Cameras did not open, or no camera attached
        print("Unable to open any cameras")
        left_camera.stop()
        left_camera.release()
        right_camera.stop()
        right_camera.release()
        cv2.destroyAllWindows()
        return

    while cv2.getWindowProperty("CSI Cameras", 0) >= 0:
        _, left_image = left_camera.read()
        _, right_image = right_camera.read()

        # Rectify both images with the precomputed maps
        img1_rectified = cv2.remap(left_image, camera_configs.left_map1,
                                   camera_configs.left_map2, cv2.INTER_LINEAR)
        img2_rectified = cv2.remap(right_image, camera_configs.right_map1,
                                   camera_configs.right_map2, cv2.INTER_LINEAR)

        # StereoBM works on grayscale images
        imgL = cv2.cvtColor(img1_rectified, cv2.COLOR_BGR2GRAY)
        imgR = cv2.cvtColor(img2_rectified, cv2.COLOR_BGR2GRAY)

        # Read the two trackbars from the window they were created in
        num = cv2.getTrackbarPos("num", "bar")
        blockSize = cv2.getTrackbarPos("blockSize", "bar")
        # blockSize must be odd and at least 5
        if blockSize % 2 == 0:
            blockSize += 1
        if blockSize < 5:
            blockSize = 5

        # Compute the disparity map with block matching
        stereo = cv2.StereoBM_create(numDisparities=16 * num, blockSize=blockSize)
        disparity = stereo.compute(imgL, imgR)
        disp = cv2.normalize(disparity, None, alpha=0, beta=255,
                             norm_type=cv2.NORM_MINMAX, dtype=cv2.CV_8U)

        # StereoBM returns fixed-point disparities scaled by 16, so convert
        # to pixels before reprojecting to 3D for the mouse callback
        threeD = cv2.reprojectImageTo3D(disparity.astype(np.float32) / 16.,
                                        camera_configs.Q)

        cv2.imshow("CSI Cameras", disp)

        # waitKey also pumps the GUI event loop, so the window keeps refreshing
        keyCode = cv2.waitKey(10) & 0xFF
        if keyCode == 27:  # press Esc to quit
            break

    left_camera.stop()
    left_camera.release()
    right_camera.stop()
    right_camera.release()
    cv2.destroyAllWindows()


if __name__ == "__main__":
    start_cameras()
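The 3D point printed by the mouse callback ultimately rests on the relation between disparity and depth. For a rectified pair this is approximately Z = f * B / d, with focal length f in pixels, baseline B, and disparity d in pixels. The sketch below is a simplified illustration: `depth_mm` is a hypothetical helper, and plugging in the left camera's focal length and |T[0]| from camera_configs.py is an assumption, since the rectified projection matrices may use slightly different values.

```python
def depth_mm(raw_disparity, focal_px, baseline_mm):
    """Approximate depth in mm for one pixel of a rectified stereo pair.

    raw_disparity is the int16 value from StereoBM.compute, which stores
    the disparity in pixels multiplied by 16.
    """
    disparity_px = raw_disparity / 16.0
    if disparity_px <= 0:
        # Zero or negative values mark pixels with no valid match
        return None
    return focal_px * baseline_mm / disparity_px


# Focal length and baseline taken from the calibration results above
print(depth_mm(16 * 32, focal_px=424.24303, baseline_mm=37.56619))
```

Since depth is inversely proportional to disparity, distant objects with small disparities are resolved much more coarsely than nearby ones.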

To be continued…

References

https://blog.csdn.net/sunanger_wang/article/details/7744025