人脸微笑识别和口罩识别模型训练和测试(卷积神经网络CNN)及实时的微笑和口罩识别

目录

- 一、人脸微笑识别

-

- 1.准备工作

- 2.genki4k笑脸数据集准备

- 导入需要的包

- 划分数据集

- 3.网络模型

- 4.数据预处理

- 5.开始训练

- 6.数据增强

- 7.创建新的网络

- 8.对单张图片的笑脸测试

- 二、口罩识别

-

- 1.数据准备

- 2.网络模型

- 3.数据预处理

- 4.开始训练

- 5.使用数据增强

- 6.对单张人物测试是否戴了口罩

- 三、摄像头实时采集人脸、并对表情(笑脸/非笑脸)、戴口罩和没戴口罩的实时分类判读(输出分类文字)

-

- 1.笑脸实时检测识别

- 2.是否戴口罩的实时检测识别

一、人脸微笑识别

1.准备工作

需要安装tensorflow、keras以及dlib。

2.genki4k笑脸数据集准备

下载图像数据集genki4k.tar,把它解压到相应的目录(我的目录是在jupyter工作目录下的GENKI4K-datasets目录下)

可以看到里面有些许不合格的数据,我们需要将其剔除。

导入需要的包

import keras #导入人工神经网络库

import matplotlib.pyplot as plt # matplotlib进行画图

import matplotlib.image as mpimg # # mpimg 用于读取图片

import numpy as np # numpy操作数组

from IPython.display import Image #显示图像

import os #os:操作系统接口

划分数据集

(在GENKI4K-dataset同级目录下会产生一个GENKI4K-data的文件夹,包括 train :训练集;validation:验证集;test:测试集。)

# 原目录的路径

original_dataset_dir = 'GENKI4K-datasets'

# 结果路径,存储较小的数据集

base_dir = 'GENKI4K-data'

os.mkdir(base_dir)

# 训练目录,验证和测试拆分

train_dir = os.path.join(base_dir, 'train')

os.mkdir(train_dir)

validation_dir = os.path.join(base_dir, 'validation')

os.mkdir(validation_dir)

test_dir = os.path.join(base_dir, 'test')

os.mkdir(test_dir)

# 训练微笑图片目录

train_smile_dir = os.path.join(train_dir, 'smile')

os.mkdir(train_smile_dir)

# 训练没微笑图片目录

train_unsmile_dir = os.path.join(train_dir, 'unsmile')

#s.mkdir(train_dogs_dir)

# 验证的微笑图片目录

validation_smile_dir = os.path.join(validation_dir, 'smile')

os.mkdir(validation_smile_dir)

# 验证的没微笑图片目录

validation_unsmile_dir = os.path.join(validation_dir, 'unsmile')

os.mkdir(validation_unsmile_dir)

# 测试的微笑图片目录

test_smile_dir = os.path.join(test_dir, 'smile')

os.mkdir(test_smile_dir)

# 测试的没微笑图片目录

test_unsmile_dir = os.path.join(test_dir, 'unsmile')

os.mkdir(test_unsmile_dir)

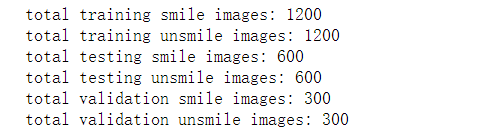

分配数据集,可以使用人为划分和代码划分

进行一次检查,计算每个分组中有多少张照片(训练/验证/测试)

print('total training smile images:', len(os.listdir(train_smile_dir)))

print('total training unsmile images:', len(os.listdir(train_umsmile_dir)))

print('total testing smile images:', len(os.listdir(test_smile_dir)))

print('total testing unsmile images:', len(os.listdir(test_umsmile_dir)))

print('total validation smile images:', len(os.listdir(validation_smile_dir)))

print('total validation unsmile images:', len(os.listdir(validation_unsmile_dir)))

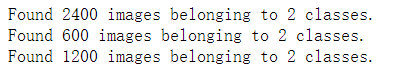

有2400个训练图像,然后是600个验证图像,1200个测试图像,其中每个分类都有相同数量的样本,是一个平衡的二元分类问题,意味着分类准确度将是合适的度量标准。

3.网络模型

from keras import layers

from keras import models

model = models.Sequential()

model.add(layers.Conv2D(32, (3, 3), activation='relu',input_shape=(150, 150, 3)))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Conv2D(64, (3, 3), activation='relu'))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Conv2D(128, (3, 3), activation='relu'))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Conv2D(128, (3, 3), activation='relu'))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Flatten())

model.add(layers.Dense(512, activation='relu'))

model.add(layers.Dense(1, activation='sigmoid'))

model.summary()

在编译步骤里,使用RMSprop优化器。由于用一个单一的神经元(Sigmoid的激活函数)结束了网络,将使用二进制交叉熵(binary crossentropy)作为损失函数

from keras import optimizers

model.compile(loss='binary_crossentropy',

optimizer=optimizers.RMSprop(lr=1e-4),

metrics=['acc'])

4.数据预处理

from keras.preprocessing.image import ImageDataGenerator

# 所有的图像将重新进行归一化处理 Rescaled by 1./255

train_datagen = ImageDataGenerator(rescale=1./255)

validation_datagen=ImageDataGenerator(rescale=1./255)

test_datagen = ImageDataGenerator(rescale=1./255)

# 直接从目录读取图像数据

train_generator = train_datagen.flow_from_directory(

# 训练图像的目录

train_dir,

# 所有图像大小会被转换成150x150

target_size=(150, 150),

# 每次产生20个图像的批次

batch_size=20,

# 由于这是一个二元分类问题,y的label值也会被转换成二元的标签

class_mode='binary')

# 直接从目录读取图像数据

validation_generator = test_datagen.flow_from_directory(

validation_dir,

target_size=(150, 150),

batch_size=20,

class_mode='binary')

test_generator = test_datagen.flow_from_directory(test_dir,

target_size=(150, 150),

batch_size=20,

class_mode='binary')

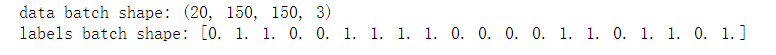

for data_batch, labels_batch in train_generator:

print('data batch shape:', data_batch.shape)

print('labels batch shape:', labels_batch)

break

train_generator.class_indices

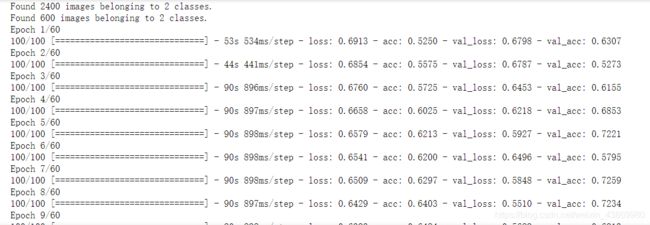

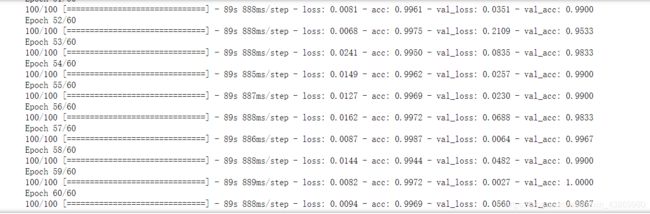

5.开始训练

epochs值为训练轮数

history = model.fit_generator(

train_generator,

steps_per_epoch=120,

epochs=10,

validation_data=validation_generator,

validation_steps=50)

训练集中共有1200张,选择10轮训练,每轮120张

训练完后将模型保存下来

model.save('GENKI4K-data/smileAndUnsmile_1.h5')

使用图表来绘制在训练过程中模型对训练和验证数据的损失(loss)和准确性(accuracy)数据

6.数据增强

datagen = ImageDataGenerator(

rotation_range=40,

width_shift_range=0.2,

height_shift_range=0.2,

shear_range=0.2,

zoom_range=0.2,

horizontal_flip=True,

fill_mode='nearest')

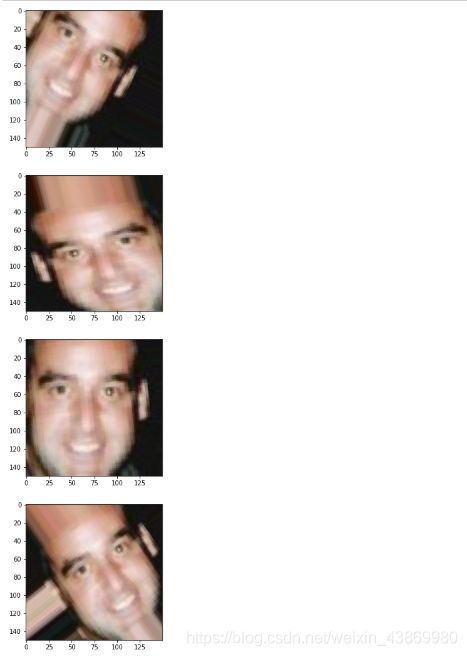

查看增强后的图片例子

import matplotlib.pyplot as plt

# 图像预处理功能模块

from keras.preprocessing import image

# 取得训练数据集中微笑的图片列表

fnames = [os.path.join(train_smile_dir, fname) for fname in os.listdir(train_smile_dir)]

img_path = fnames[3] # 取一个图像

img = image.load_img(img_path, target_size=(150, 150)) # 读入图像并进行大小处理

x = image.img_to_array(img) # 转换成Numpy array 并且shape(150,150,3)

x = x.reshape((1,) + x.shape) # 重新Reshape成(1,150,150,3)以便输入到模型中

# 通过flow()方法将会随机产生新的图像,它会无线循环,所以我们需要在某个时候“断开”循环

i = 0

for batch in datagen.flow(x, batch_size=1):

plt.figure(i)

imgplot = plt.imshow(image.array_to_img(batch[0]))

i += 1

if i % 4 == 0:

break

plt.show()

7.创建新的网络

model = models.Sequential()

model.add(layers.Conv2D(32, (3, 3), activation='relu',

input_shape=(150, 150, 3)))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Conv2D(64, (3, 3), activation='relu'))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Conv2D(128, (3, 3), activation='relu'))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Conv2D(128, (3, 3), activation='relu'))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Flatten())

model.add(layers.Dropout(0.5))

model.add(layers.Dense(512, activation='relu'))

model.add(layers.Dense(1, activation='sigmoid'))

model.compile(loss='binary_crossentropy',

optimizer=optimizers.RMSprop(lr=1e-4),

metrics=['acc'])

开始训练模型

#归一化处理

train_datagen = ImageDataGenerator(

rescale=1./255,

rotation_range=40,

width_shift_range=0.2,

height_shift_range=0.2,

shear_range=0.2,

zoom_range=0.2,

horizontal_flip=True,)

# 注意,验证数据不应该扩充

test_datagen = ImageDataGenerator(rescale=1./255)

train_generator = train_datagen.flow_from_directory(

# 这是图像资料的目录

train_dir,

# 所有的图像大小会被转换成150x150

target_size=(150, 150),

batch_size=32,

# 由于这是一个二元分类问题,y的label值也会被转换成二元的标签

class_mode='binary')

validation_generator = test_datagen.flow_from_directory(

validation_dir,

target_size=(150, 150),

batch_size=32,

class_mode='binary')

history = model.fit_generator(

train_generator,

steps_per_epoch=100,

epochs=60,

validation_data=validation_generator,

validation_steps=50)

train_generator.class_indices

![]()

依旧是:0为笑脸 1为非笑脸

保存模型

model.save('GENKI4K-data/smileAndUnsmile_2.h5')

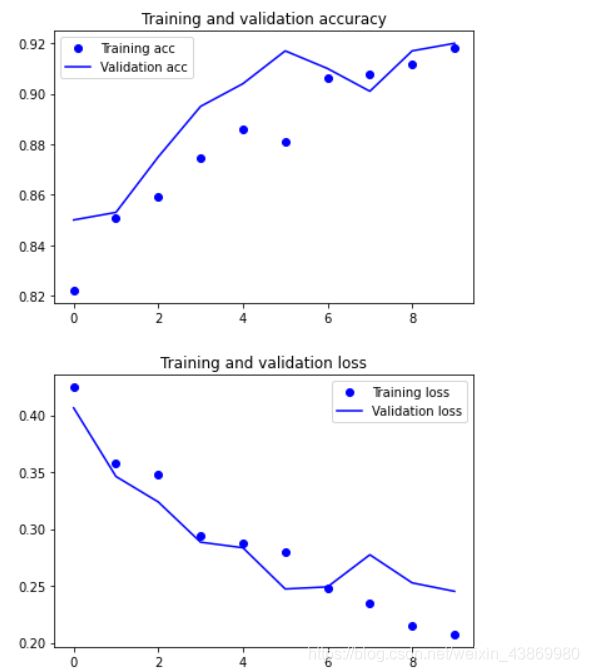

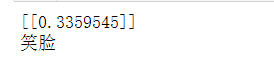

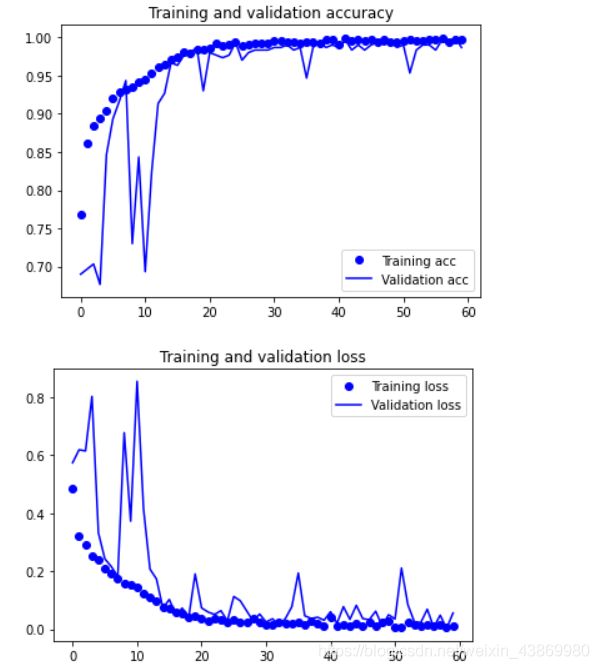

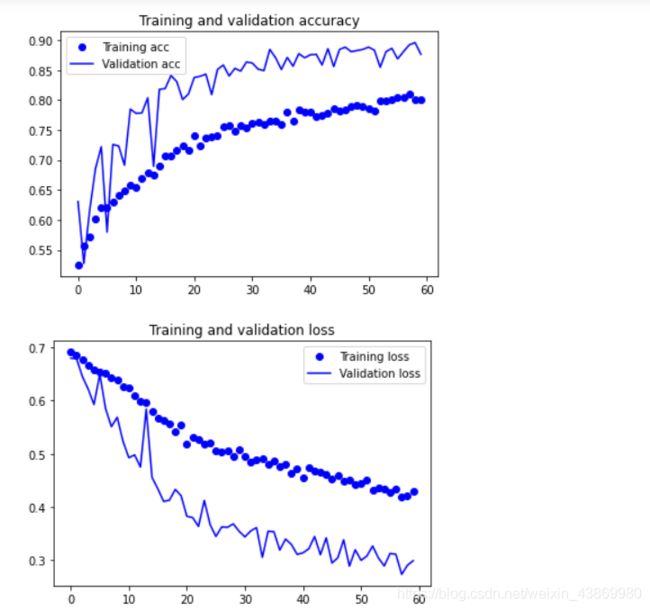

数据增强后的训练集与验证集的精确度与损失度

acc = history.history['acc']

val_acc = history.history['val_acc']

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs = range(len(acc))

plt.plot(epochs, acc, 'bo', label='Training acc')

plt.plot(epochs, val_acc, 'b', label='Validation acc')

plt.title('Training and validation accuracy')

plt.legend()

plt.figure()

plt.plot(epochs, loss, 'bo', label='Training loss')

plt.plot(epochs, val_loss, 'b', label='Validation loss')

plt.title('Training and validation loss')

plt.legend()

plt.show()

我们发现验证的loss值有所下降,并且和训练集的loss值(较高)差距较大,同时验证集的精确度也在增加,所以数据增强后还是有效果的。

8.对单张图片的笑脸测试

# 单张图片进行判断 是笑脸还是非笑脸

from keras.preprocessing import image

from keras.models import load_model

import numpy as np

#加载模型

model = load_model('GENKI4K-data/smileAndUnsmile_2.h5')

#本地图片路径

img_path='GENKI4K-data/stest.jpg'

img = image.load_img(img_path, target_size=(150, 150))

img_tensor = image.img_to_array(img)/255.0

img_tensor = np.expand_dims(img_tensor, axis=0)

prediction =model.predict(img_tensor)

print(prediction)

if prediction[0][0]>0.5:

result='非笑脸'

else:

result='笑脸'

print(result)

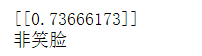

# 单张图片进行判断 是笑脸还是非笑脸

from keras.preprocessing import image

from keras.models import load_model

import numpy as np

#加载模型

model = load_model('GENKI4K-data/smileAndUnsmile_2.h5')

#本地图片路径

img_path='GENKI4K-data/ustest.jpg'

img = image.load_img(img_path, target_size=(150, 150))

img_tensor = image.img_to_array(img)/255.0

img_tensor = np.expand_dims(img_tensor, axis=0)

prediction =model.predict(img_tensor)

print(prediction)

if prediction[0][0]>0.5:

result='非笑脸'

else:

result='笑脸'

print(result)

ustest.jpg为下图

结果:

看来训练的模型还是比较准确的,但是我也拿了一样笑脸特征不是很明显的测试了一下,显示为笑脸,所以还是存在偏差的。

二、口罩识别

1.数据准备

下载好口罩数据集后我放到了jupyter工作目录下新建的maskdata里

简单看一下mask和nomask

——将正样本(有口罩)数据集重命名为连续序列,以便后面调整

#coding:utf-8

import os

path = "maskdata/mask" # 人脸口罩数据集正样本的路径

filelist = os.listdir(path)

count=1000 #开始文件名1000.jpg

for file in filelist:

Olddir=os.path.join(path,file)

if os.path.isdir(Olddir):

continue

filename=os.path.splitext(file)[0]

filetype=os.path.splitext(file)[1]

Newdir=os.path.join(path,str(count)+filetype)

os.rename(Olddir,Newdir)

count+=1

#coding:utf-8

import os

path = "maskdata/nomask" # 人脸口罩数据集负样本的路径

filelist = os.listdir(path)

count=10000 #开始文件名10000.jpg

for file in filelist:

Olddir=os.path.join(path,file)

if os.path.isdir(Olddir):

continue

filename=os.path.splitext(file)[0]

filetype=os.path.splitext(file)[1]

Newdir=os.path.join(path,str(count)+filetype)

os.rename(Olddir,Newdir)

count+=1

import pandas as pd

import cv2

for n in range(1000,1606):#代表正数据集中开始和结束照片的数字

path='maskdata//mask//'+str(n)+'.jpg'

# 读取图片

img = cv2.imread(path)

img=cv2.resize(img,(20,20)) #修改样本像素为20x20

cv2.imwrite('maskdata//mask//' + str(n) + '.jpg', img)

n += 1

#修改负样本像素

import pandas as pd

import cv2

for n in range(10000,11790):#代表负样本数据集中开始和结束照片的数字

path='maskdata//nomask//'+str(n)+'.jpg'

# 读取图片

img = cv2.imread(path)

img=cv2.resize(img,(80,80)) #修改样本像素为80x80

cv2.imwrite('maskdata//nomask//' + str(n) + '.jpg', img)

n += 1

处理后的

——划分数据集(在同级目录下会产生一个mask的文件夹,包括 train :训练集;validation:验证集;test:测试集。)

original_dataset_dir = 'maskdata' # 原始数据集的路径

base_dir = 'maskout' # 存放图像数据集的目录

os.mkdir(base_dir)

train_dir = os.path.join(base_dir, 'train') # 训练图像的目录

os.mkdir(train_dir)

validation_dir = os.path.join(base_dir, 'validation') # 验证图像的目录

os.mkdir(validation_dir)

test_dir = os.path.join(base_dir, 'test') # 测试图像的目录

os.mkdir(test_dir)

train_havemask_dir = os.path.join(train_dir, 'mask') # 有口罩的图片的训练资料的目录

os.mkdir(train_havemask_dir)

train_nomask_dir = os.path.join(train_dir, 'nomask') # 没有口罩的图片的训练资料的目录

os.mkdir(train_nomask_dir)

validation_havemask_dir = os.path.join(validation_dir, 'mask') # 有口罩的图片的验证集目录

os.mkdir(validation_havemask_dir)

validation_nomask_dir = os.path.join(validation_dir, 'nomask')# 没有口罩的图片的验证集目录

os.mkdir(validation_nomask_dir)

test_havemask_dir = os.path.join(test_dir, 'mask') # 有口罩的图片的测试数据集目录

os.mkdir(test_havemask_dir)

test_nomask_dir = os.path.join(test_dir, 'nomask') # 没有口罩的图片的测试数据集目录

os.mkdir(test_nomask_dir)

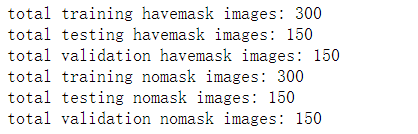

print('total training havemask images:', len(os.listdir(train_havemask_dir)))

print('total testing havemask images:', len(os.listdir(test_havemask_dir)))

print('total validation havemask images:', len(os.listdir(validation_havemask_dir)))

print('total training nomask images:', len(os.listdir(train_nomask_dir)))

print('total testing nomask images:', len(os.listdir(test_nomask_dir)))

print('total validation nomask images:', len(os.listdir(validation_nomask_dir)))

一共有600个训练图像,然后是300个验证图像,300个测试图像,其中每个分类都有相同数量的样本,是一个平衡的二元分类问题,意味着分类准确度将是合适的度量标准。

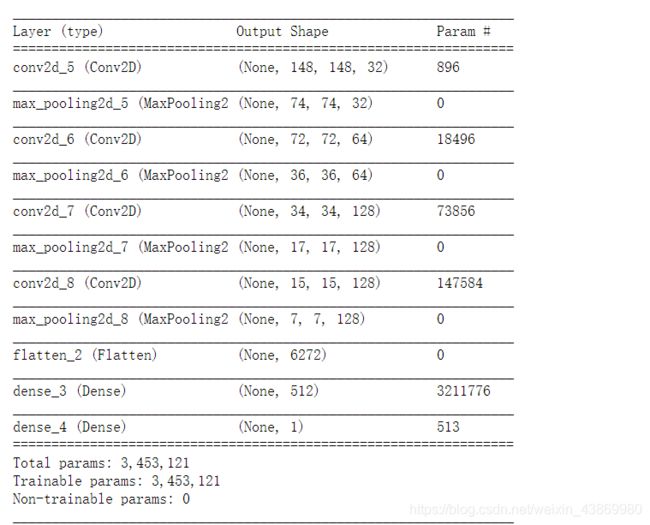

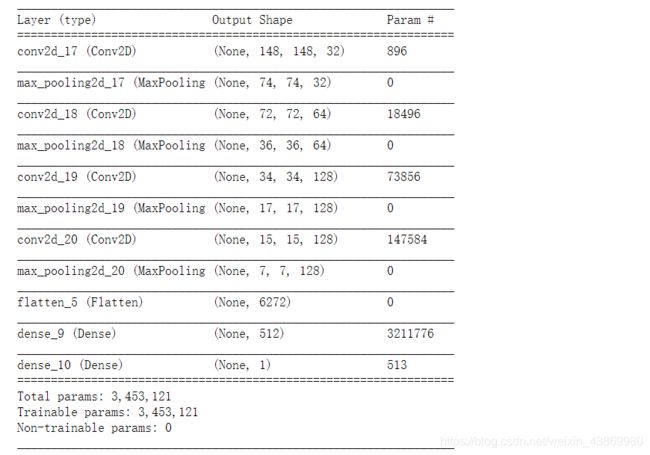

2.网络模型

#创建模型

from keras import layers

from keras import models

model = models.Sequential()

model.add(layers.Conv2D(32, (3, 3), activation='relu',

input_shape=(150, 150, 3)))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Conv2D(64, (3, 3), activation='relu'))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Conv2D(128, (3, 3), activation='relu'))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Conv2D(128, (3, 3), activation='relu'))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Flatten())

model.add(layers.Dense(512, activation='relu'))

model.add(layers.Dense(1, activation='sigmoid'))

——看特征图的尺寸如何随着每个连续的图层而改变,打印网络结构

在编译步骤里,使用RMSprop优化器。由于用一个单一的神经元(Sigmoid的激活函数)结束了网络,将使用二进制交叉熵(binary crossentropy)作为损失函数

from keras import optimizers

model.compile(loss='binary_crossentropy',

optimizer=optimizers.RMSprop(lr=1e-4),

metrics=['acc'])

3.数据预处理

from keras.preprocessing.image import ImageDataGenerator

# 所有的图像将重新进行归一化处理 Rescaled by 1./255

train_datagen = ImageDataGenerator(rescale=1./255)

validation_datagen=ImageDataGenerator(rescale=1./255)

test_datagen = ImageDataGenerator(rescale=1./255)

# 直接从目录读取图像数据

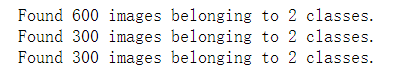

train_generator = train_datagen.flow_from_directory(

# 训练图像的目录

train_dir,

# 所有图像大小会被转换成150x150

target_size=(150, 150),

# 每次产生20个图像的批次

batch_size=20,

# 由于这是一个二元分类问题,y的label值也会被转换成二元的标签

class_mode='binary')

# 直接从目录读取图像数据

validation_generator = test_datagen.flow_from_directory(

validation_dir,

target_size=(150, 150),

batch_size=20,

class_mode='binary')

test_generator = test_datagen.flow_from_directory(test_dir,

target_size=(150, 150),

batch_size=20,

class_mode='binary')

——图像张量生成器(generator)的输出,它产生150x150 RGB图像(形状"(20,150,150,3)")和二进制标签(形状"(20,)")的批次张量。20是每个批次中的样品数(批次大小)

for data_batch, labels_batch in train_generator:

print('data batch shape:', data_batch.shape)

print('labels batch shape:', labels_batch)

break

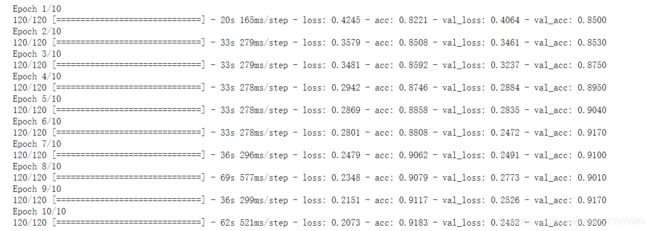

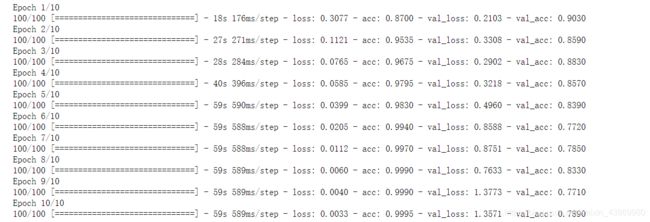

4.开始训练

history = model.fit_generator(

train_generator,

steps_per_epoch=100,

epochs=10,

validation_data=validation_generator,

validation_steps=50)

可以看出随着训练轮数的增加,训练集的loss值下降,acc精确度上升,但验证集就完全相反,这也是非常符合实际的。

——保存模型

model.save('maskout/maskAndNomask_1.h5')

——使用图表来绘制在训练过程中模型对训练和验证数据的损失(loss)和准确性(accuracy)数据

import matplotlib.pyplot as plt

# import h5py

acc = history.history['acc']

val_acc = history.history['val_acc']

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs = range(len(acc))

# print('acc:',acc)

# print('val_acc:',val_acc)

# print('loss:',loss)

# print('val_loss:',val_loss)

plt.plot(epochs, acc, 'bo', label='Training acc')

plt.plot(epochs, val_acc, 'b', label='Validation acc')

plt.title('Training and validation accuracy')

plt.legend()

plt.figure()

plt.plot(epochs, loss, 'bo', label='Training loss')

plt.plot(epochs, val_loss, 'b', label='Validation loss')

plt.title('Training and validation loss')

plt.legend()

plt.show()

5.使用数据增强

datagen = ImageDataGenerator(

rotation_range=40,

width_shift_range=0.2,

height_shift_range=0.2,

shear_range=0.2,

zoom_range=0.2,

horizontal_flip=True,

fill_mode='nearest')

查看数据增强后的图片

import matplotlib.pyplot as plt

from keras.preprocessing import image

fnames = [os.path.join(train_havemask_dir, fname) for fname in os.listdir(train_havemask_dir)]

img_path = fnames[3]

img = image.load_img(img_path, target_size=(150, 150))

x = image.img_to_array(img)

x = x.reshape((1,) + x.shape)

i = 0

for batch in datagen.flow(x, batch_size=1):

plt.figure(i)

imgplot = plt.imshow(image.array_to_img(batch[0]))

i += 1

if i % 4 == 0:

break

plt.show()

model = models.Sequential()

model.add(layers.Conv2D(32, (3, 3), activation='relu',

input_shape=(150, 150, 3)))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Conv2D(64, (3, 3), activation='relu'))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Conv2D(128, (3, 3), activation='relu'))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Conv2D(128, (3, 3), activation='relu'))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Flatten())

model.add(layers.Dropout(0.5))

model.add(layers.Dense(512, activation='relu'))

model.add(layers.Dense(1, activation='sigmoid'))

model.compile(loss='binary_crossentropy',

optimizer=optimizers.RMSprop(lr=1e-4),

metrics=['acc'])

#归一化处理

train_datagen = ImageDataGenerator(

rescale=1./255,

rotation_range=40,

width_shift_range=0.2,

height_shift_range=0.2,

shear_range=0.2,

zoom_range=0.2,

horizontal_flip=True,)

# Note that the validation data should not be augmented!

test_datagen = ImageDataGenerator(rescale=1./255)

train_generator = train_datagen.flow_from_directory(

# This is the target directory

train_dir,

# All images will be resized to 150x150

target_size=(150, 150),

batch_size=32,

# Since we use binary_crossentropy loss, we need binary labels

class_mode='binary')

validation_generator = test_datagen.flow_from_directory(

validation_dir,

target_size=(150, 150),

batch_size=32,

class_mode='binary')

history = model.fit_generator(

train_generator,

steps_per_epoch=100,

epochs=60,

validation_data=validation_generator,

validation_steps=50)

train_generator.class_indices

#保存模型

model.save('maskout/maskAndNomask_2.h5')

绘制数据增强后的训练集与验证集的精确度与损失度的图形,看一遍结果

acc = history.history['acc']

val_acc = history.history['val_acc']

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs = range(len(acc))

plt.plot(epochs, acc, 'bo', label='Training acc')

plt.plot(epochs, val_acc, 'b', label='Validation acc')

plt.title('Training and validation accuracy')

plt.legend()

plt.figure()

plt.plot(epochs, loss, 'bo', label='Training loss')

plt.plot(epochs, val_loss, 'b', label='Validation loss')

plt.title('Training and validation loss')

plt.legend()

plt.show()

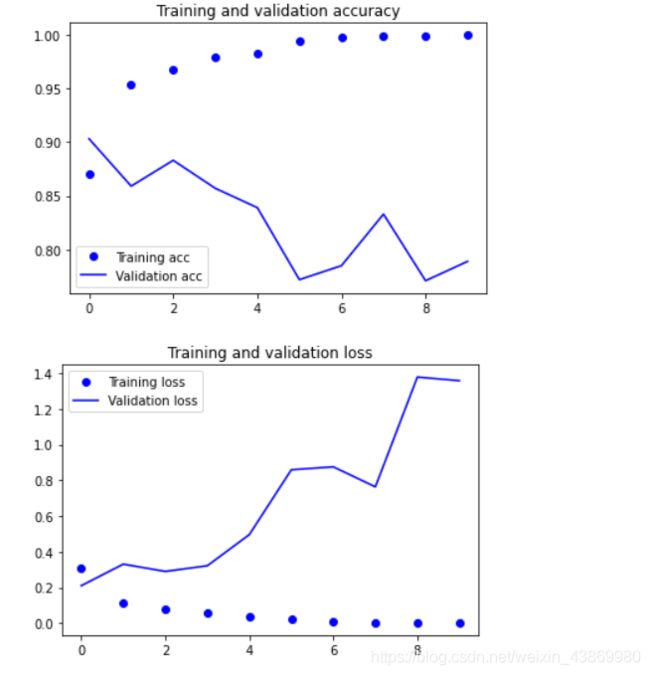

6.对单张人物测试是否戴了口罩

from keras.preprocessing import image

from keras.models import load_model

import numpy as np

model = load_model('maskout//maskAndNomask_2.h5')

img_path='maskout//masktest.jpg'

img = image.load_img(img_path, target_size=(150, 150))

#print(img.size)

img_tensor = image.img_to_array(img)/255.0

img_tensor = np.expand_dims(img_tensor, axis=0)

prediction =model.predict(img_tensor)

print(prediction)

if prediction[0][0]>0.5:

result='未戴口罩'

else:

result='戴口罩'

print(result)

masktest.jpg如下:

再来测试一下不戴口罩的 识别

from keras.preprocessing import image

from keras.models import load_model

import numpy as np

model = load_model('maskout//maskAndNomask_2.h5')

img_path='maskout//nomasktest.jpg'

img = image.load_img(img_path, target_size=(150, 150))

#print(img.size)

img_tensor = image.img_to_array(img)/255.0

img_tensor = np.expand_dims(img_tensor, axis=0)

prediction =model.predict(img_tensor)

print(prediction)

if prediction[0][0]>0.5:

result='未戴口罩'

else:

result='戴口罩'

print(result)

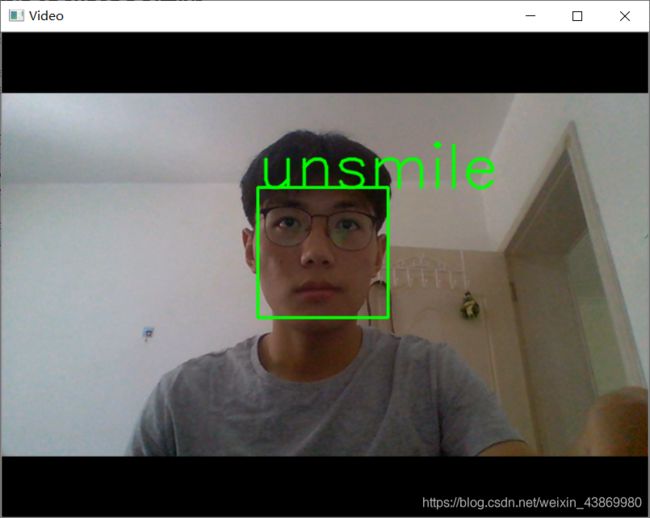

三、摄像头实时采集人脸、并对表情(笑脸/非笑脸)、戴口罩和没戴口罩的实时分类判读(输出分类文字)

1.笑脸实时检测识别

#检测视频或者摄像头中的人脸

import cv2

from keras.preprocessing import image

from keras.models import load_model

import numpy as np

import dlib

from PIL import Image

model = load_model('GENKI4K-data/smileAndUnsmile_2.h5')

detector = dlib.get_frontal_face_detector()

video=cv2.VideoCapture(0)

font = cv2.FONT_HERSHEY_SIMPLEX

def rec(img):

gray=cv2.cvtColor(img,cv2.COLOR_BGR2GRAY)

dets=detector(gray,1)

if dets is not None:

for face in dets:

left=face.left()

top=face.top()

right=face.right()

bottom=face.bottom()

cv2.rectangle(img,(left,top),(right,bottom),(0,255,0),2)

img1=cv2.resize(img[top:bottom,left:right],dsize=(150,150))

img1=cv2.cvtColor(img1,cv2.COLOR_BGR2RGB)

img1 = np.array(img1)/255.

img_tensor = img1.reshape(-1,150,150,3)

prediction =model.predict(img_tensor)

if prediction[0][0]>0.5:

result='unsmile'

else:

result='smile'

cv2.putText(img, result, (left,top), font, 2, (0, 255, 0), 2, cv2.LINE_AA)

cv2.imshow('Video', img)

while video.isOpened():

res, img_rd = video.read()

if not res:

break

rec(img_rd)

if cv2.waitKey(1) & 0xFF == ord('q'):

break

video.release()

cv2.destroyAllWindows()

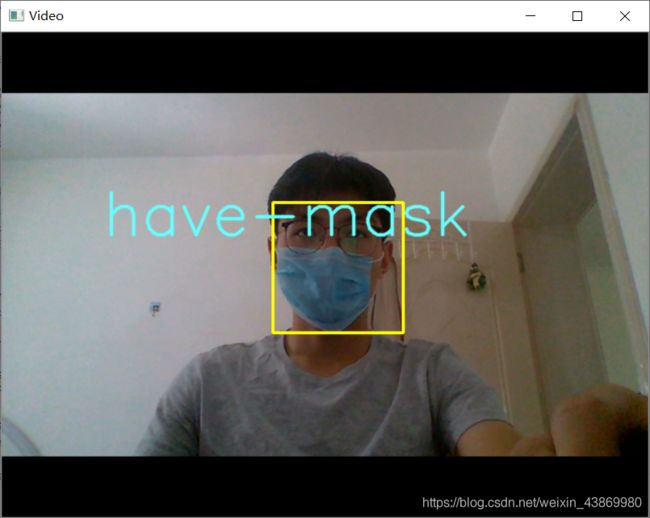

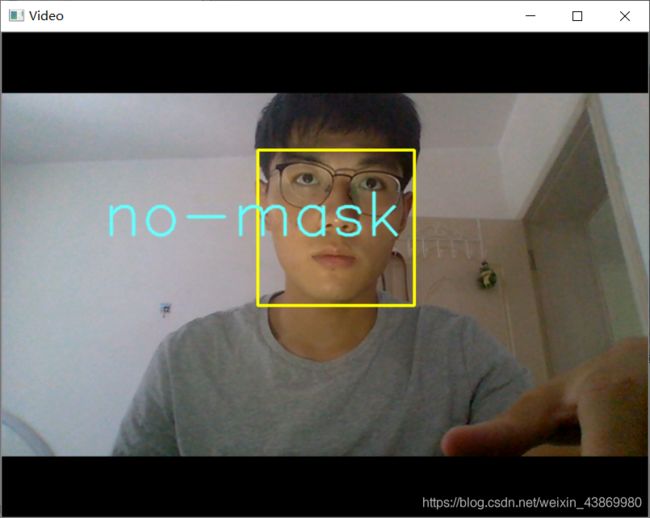

2.是否戴口罩的实时检测识别

import cv2

from keras.preprocessing import image

from keras.models import load_model

import numpy as np

import dlib

from PIL import Image

model = load_model('maskout/maskAndNomask_2.h5')

detector = dlib.get_frontal_face_detector()

# video=cv2.VideoCapture('media/video.mp4')

# video=cv2.VideoCapture('data/face_recognition.mp4')

video=cv2.VideoCapture(0)

font = cv2.FONT_HERSHEY_SIMPLEX

def rec(img):

gray=cv2.cvtColor(img,cv2.COLOR_BGR2GRAY)

dets=detector(gray,1)

if dets is not None:

for face in dets:

left=face.left()

top=face.top()

right=face.right()

bottom=face.bottom()

cv2.rectangle(img,(left,top),(right,bottom),(0,255,255),2)

def mask(img):

img1=cv2.resize(img,dsize=(150,150))

img1=cv2.cvtColor(img1,cv2.COLOR_BGR2RGB)

img1 = np.array(img1)/255.

img_tensor = img1.reshape(-1,150,150,3)

prediction =model.predict(img_tensor)

if prediction[0][0]>0.5:

result='no-mask'

else:

result='have-mask'

cv2.putText(img, result, (100,200), font, 2, (255, 255, 100), 2, cv2.LINE_AA)

cv2.imshow('Video', img)

while video.isOpened():

res, img_rd = video.read()

if not res:

break

#将视频每一帧传入两个函数,分别用于圈出人脸与判断是否带口罩

rec(img_rd)

mask(img_rd)

#q关闭窗口

if cv2.waitKey(1) & 0xFF == ord('q'):

break

video.release()

cv2.destroyAllWindows()

本次人脸表情识别和口罩识别利用卷积神经网路(CNN),博主本人也当了此次实验的实验对象,感觉能将自己和实验融合起来还挺有意思的。作为一个greenbird,欢迎大家来访和交流。