Kubernetes CKA Certified Administrator Notes: Kubernetes Security


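- 1. Kubernetes Security Framework

- 2. Authentication, Authorization, and Admission Control

- 2.1 Authentication

- 2.2 Authorization

- 2.3 Admission Control

- 3. Role-Based Access Control: RBAC

- 4. Case Study: Granting a User Access to Specific Namespaces

- 5. Network Policy Overview

- 6. Case Study: Controlling Inbound and Outbound Traffic for Project Pods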

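1. Kubernetes Security Framework

- Kubernetes controls access to the API Server in three stages. Each stage is pluggable, and plugins are enabled through the API Server configuration.

- 1. Authentication

- 2. Authorization

- 3. Admission Control

- To reach the API Server, a client generally needs a certificate, a token, or a username and password; a Pod that calls the API uses a ServiceAccount.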

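2. Authentication, Authorization, and Admission Control

2.1 Authentication

Three ways to authenticate a client:

- HTTPS certificate authentication: a client certificate signed by the cluster CA

- HTTP token authentication: a token identifies the user

- HTTP Basic authentication: username plus password (static password file support was removed from the API Server in Kubernetes 1.19)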

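2.2 Authorization

RBAC (Role-Based Access Control) handles authorization.

RBAC allows or denies a request based on the attributes of the API request.

Common authorization dimensions:

- user: user name

- group: user group

- Resource, for example pod or deployment

- Verb on the resource: get, list, create, update, patch, watch, delete

- Namespace

- API group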

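2.3 Admission Control

Admission Control is a list of admission controller plugins. Every request sent to the API Server passes through each plugin in the list; if any check fails, the request is rejected.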
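On a kubeadm cluster such as the one used in these notes, the plugins chosen for each stage are usually visible as flags in the API Server static Pod manifest. A minimal sketch, assuming the manifest sits at the kubeadm default path /etc/kubernetes/manifests/kube-apiserver.yaml; the function name show_apiserver_security_flags is made up for illustration:

```shell
# Print the API Server flags that select plugins for each security stage.
# The default path is the kubeadm convention and is an assumption here;
# pass another manifest path as the first argument if yours differs.
show_apiserver_security_flags() {
  manifest="${1:-/etc/kubernetes/manifests/kube-apiserver.yaml}"
  # client-ca-file           -> authentication
  # authorization-mode       -> authorization
  # enable-admission-plugins -> admission control
  grep -E -- '--(client-ca-file|authorization-mode|enable-admission-plugins)=' "$manifest"
}
```

Calling show_apiserver_security_flags on a control-plane node typically prints a line such as --authorization-mode=Node,RBAC, though the exact values depend on how the cluster was set up.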

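3. Role-Based Access Control: RBAC

RBAC (Role-Based Access Control) lets you configure policy dynamically through the Kubernetes API.

Roles

- Role: grants access within a specific namespace

- ClusterRole: grants access cluster-wide, across all namespaces

Role bindings

- RoleBinding: binds a role to a subject

- ClusterRoleBinding: binds a cluster role to a subject

Subjects

- User: a user

- Group: a user group

- ServiceAccount: a service account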
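A ClusterRole can also be referenced from a RoleBinding, in which case its rules apply only inside the RoleBinding's namespace. That lets one set of rules be reused across namespaces. A minimal sketch that writes such a manifest; the names pod-viewer and view-pods, the dev namespace, and the file name are hypothetical, and adu is the user from the case study in section 4:

```shell
# Write a manifest that grants a cluster-wide rule set in one namespace only.
# All object names and the "dev" namespace are illustrative assumptions.
cat > clusterrole-reuse.yaml <<'EOF'
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: pod-viewer
rules:
- apiGroups: [""]
  resources: ["pods"]
  verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: view-pods
  namespace: dev        # the grant is limited to this namespace
subjects:
- kind: User
  name: adu
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole     # cluster-scoped rules, namespace-scoped grant
  name: pod-viewer
  apiGroup: rbac.authorization.k8s.io
EOF
# On a cluster where the dev namespace exists:
#   kubectl apply -f clusterrole-reuse.yaml
```

Adding a second RoleBinding with a different namespace value would extend the same read access to that namespace without duplicating the rules.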

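4. Case Study: Granting a User Access to Specific Namespaces

Example: grant the user aliang read access to Pods in the default namespace. (The terminal session further down runs the same steps for a user named adu.)

- Issue a client certificate signed by the Kubernetes CA

- Generate a kubeconfig file

- Create the RBAC policy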

# Generate the kubeconfig file:
kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/pki/ca.crt \
  --embed-certs=true \
  --server=https://192.168.31.61:6443 \
  --kubeconfig=aliang.kubeconfig

# Set the client credentials
kubectl config set-credentials aliang \
  --client-key=aliang-key.pem \
  --client-certificate=aliang.pem \
  --embed-certs=true \
  --kubeconfig=aliang.kubeconfig

# Set the default context
kubectl config set-context kubernetes \
  --cluster=kubernetes \
  --user=aliang \
  --kubeconfig=aliang.kubeconfig

# Switch to that context
kubectl config use-context kubernetes --kubeconfig=aliang.kubeconfig

[root@k8s-master ~]# ls /etc/kubernetes/pki/

apiserver.crt apiserver-kubelet-client.key front-proxy-ca.key

apiserver-etcd-client.crt ca.crt front-proxy-client.crt

apiserver-etcd-client.key ca.key front-proxy-client.key

apiserver.key etcd sa.key

apiserver-kubelet-client.crt front-proxy-ca.crt sa.pub

[root@k8s-master ~]# rz -E

rz waiting to receive.

[root@k8s-master ~]# unzip rbac.zip

Archive: rbac.zip

creating: rbac/

inflating: rbac/cert.sh

inflating: rbac/kubeconfig.sh

inflating: rbac/rbac.yaml

[root@k8s-master ~]# cd

[root@k8s-master ~]# cd rbac/

[root@k8s-master rbac]# ls

cert.sh kubeconfig.sh rbac.yaml

[root@k8s-master rbac]# vi cert.sh

[root@k8s-master rbac]# rz -E

rz waiting to receive.

[root@k8s-master rbac]# tar -zvxf cfssl.tar.gz

cfssl

cfssl-certinfo

cfssljson

[root@k8s-master rbac]# ls

cert.sh cfssl cfssl-certinfo cfssljson cfssl.tar.gz kubeconfig.sh rbac.yaml

[root@k8s-master rbac]# ll

total 24536

-rw-r--r-- 1 root root 741 Dec 22 15:16 cert.sh

-rwxr-xr-x 1 root root 10376657 Nov 25 2019 cfssl

-rwxr-xr-x 1 root root 6595195 Nov 25 2019 cfssl-certinfo

-rwxr-xr-x 1 root root 2277873 Nov 25 2019 cfssljson

-rw-r--r-- 1 root root 5850685 Nov 16 2020 cfssl.tar.gz

-rw-r--r-- 1 root root 622 Sep 1 2019 kubeconfig.sh

-rw-r--r-- 1 root root 477 Aug 25 2019 rbac.yaml

[root@k8s-master rbac]# mv cfssl* /usr/bin/cfssl

mv: target ‘/usr/bin/cfssl’ is not a directory

[root@k8s-master rbac]# mv cfssl* /usr/bin/

[root@k8s-master rbac]# ls

cert.sh kubeconfig.sh rbac.yaml

[root@k8s-master rbac]# cd /usr/bin/

[root@k8s-master bin]# rm -rf cfssl.tar.gz

[root@k8s-master bin]# cd -

/root/rbac

[root@k8s-master rbac]# cfssl

No command is given.

Usage:

Available commands:

ocspserve

selfsign

scan

print-defaults

certinfo

sign

gencrl

revoke

bundle

serve

version

ocspdump

ocspsign

info

genkey

gencert

ocsprefresh

Top-level flags:

-allow_verification_with_non_compliant_keys

Allow a SignatureVerifier to use keys which are technically non-compliant with RFC6962.

-loglevel int

Log level (0 = DEBUG, 5 = FATAL) (default 1)

[root@k8s-master rbac]# vi cert.sh

[root@k8s-master rbac]# bash cert.sh

2021/12/22 15:23:24 [INFO] generate received request

2021/12/22 15:23:24 [INFO] received CSR

2021/12/22 15:23:24 [INFO] generating key: rsa-2048

2021/12/22 15:23:24 [INFO] encoded CSR

2021/12/22 15:23:24 [INFO] signed certificate with serial number 153136750969096983457453824455230094856825212109

2021/12/22 15:23:24 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

[root@k8s-master rbac]# ls

adu.csr adu-key.pem ca-config.json kubeconfig.sh

adu-csr.json adu.pem cert.sh rbac.yaml

[root@k8s-master rbac]# cat /root/.kube/config

apiVersion: v1

clusters:

- cluster:

certificate-authority-data:

...

[root@k8s-master rbac]# vi kubeconfig.sh

[root@k8s-master rbac]# bash kubeconfig.sh

Cluster "kubernetes" set.

User "adu" set.

Context "kubernetes" created.

Switched to context "kubernetes".

[root@k8s-master rbac]# cat adu.kubeconfig

apiVersion: v1

clusters:

- cluster:

...

Create the RBAC policy:

Test by pointing kubectl at the new kubeconfig file:

kubectl get pods --kubeconfig=./adu.kubeconfig

[root@k8s-master rbac]# vi rbac.yaml

[root@k8s-master rbac]# cat rbac.yaml

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  namespace: default
  name: pod-reader
rules:
- apiGroups: [""] # core API group
  resources: ["pods","deployments"] # resources
  verbs: ["get", "watch", "list"] # operations allowed on the resources
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: read-pods
  namespace: default
subjects:
- kind: User
  name: adu
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: pod-reader
  apiGroup: rbac.authorization.k8s.io

[root@k8s-master rbac]# kubectl apply -f rbac.yaml

role.rbac.authorization.k8s.io/pod-reader created

rolebinding.rbac.authorization.k8s.io/read-pods created

[root@k8s-master ~]# kubectl --kubeconfig=/root/rbac/adu.kubeconfig get pods

NAME READY STATUS RESTARTS AGE

configmap-demo-pod 1/1 Running 1 28h

my-pod2 1/1 Running 5 35h

nfs-client-provisioner-58d675cd5-dx7n4 1/1 Running 1 30h

pod-taint 1/1 Running 6 7d10h

secret-demo-pod 1/1 Running 1 27h

sh 1/1 Running 2 29h

test-76846b5956-gftn9 1/1 Running 1 29h

test-76846b5956-r7s9k 1/1 Running 1 29h

test-76846b5956-trpbn 1/1 Running 1 29h

test2-78c4694588-87b9r 1/1 Running 1 30h

web-0 1/1 Running 1 29h

web-1 1/1 Running 1 29h

web-2 1/1 Running 1 29h

[root@k8s-master ~]# kubectl --kubeconfig=/root/rbac/adu.kubeconfig get deployment

Error from server (Forbidden): deployments.apps is forbidden: User "adu" cannot list resource "deployments" in API group "apps" in the namespace "default"

[root@k8s-master ~]# kubectl --kubeconfig=/root/rbac/adu.kubeconfig get svc

Error from server (Forbidden): services is forbidden: User "adu" cannot list resource "services" in API group "" in the namespace "default"
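Both Forbidden errors follow from how the Role is written: deployments live in the apps API group rather than the core group, and services are not listed under resources at all. Instead of switching kubeconfig files, an administrator can ask the API Server the same question through impersonation with kubectl auth can-i. A sketch; the helper name can_adu and the KUBECTL override variable are hypothetical conveniences:

```shell
# Ask whether user adu may perform an action in the default namespace.
# KUBECTL can be overridden (for example with "echo") to preview the command.
can_adu() {
  "${KUBECTL:-kubectl}" auth can-i "$@" --as adu --namespace default
}

# Example calls, to be run with an admin kubeconfig:
#   can_adu list pods
#   can_adu list deployments.apps
#   can_adu list services
```

Each call prints yes or no, which makes it easy to recheck permissions after every change to rbac.yaml.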

[root@k8s-master rbac]# vi rbac.yaml

[root@k8s-master rbac]# cat rbac.yaml

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  namespace: default
  name: pod-reader
rules:
- apiGroups: ["","apps"] # core group and apps group
  resources: ["pods","deployments"] # resources
  verbs: ["get", "watch", "list"] # operations allowed on the resources
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: read-pods
  namespace: default
subjects:
- kind: User
  name: adu
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: pod-reader
  apiGroup: rbac.authorization.k8s.io

[root@k8s-master rbac]# kubectl apply -f rbac.yaml

role.rbac.authorization.k8s.io/pod-reader configured

rolebinding.rbac.authorization.k8s.io/read-pods unchanged

[root@k8s-master ~]# kubectl --kubeconfig=/root/rbac/adu.kubeconfig get deployment

NAME READY UP-TO-DATE AVAILABLE AGE

nfs-client-provisioner 1/1 1 1 35h

test 3/3 3 3 36h

test2 1/1 1 1 35h

web 3/3 3 3 30d

[root@k8s-master rbac]# vi rbac.yaml

[root@k8s-master rbac]# cat rbac.yaml

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  namespace: default
  name: pod-reader
rules:
- apiGroups: ["","apps"] # core group and apps group
  resources: ["pods","deployments","services"] # resources
  verbs: ["get", "watch", "list"] # operations allowed on the resources
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: read-pods
  namespace: default
subjects:
- kind: User
  name: adu
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: pod-reader
  apiGroup: rbac.authorization.k8s.io

[root@k8s-master rbac]# kubectl apply -f rbac.yaml

role.rbac.authorization.k8s.io/pod-reader configured

rolebinding.rbac.authorization.k8s.io/read-pods unchanged

[root@k8s-master ~]# kubectl --kubeconfig=/root/rbac/adu.kubeconfig get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 30d

my-dep NodePort 10.111.199.51 <none> 80:31734/TCP 27d

my-service NodePort 10.100.228.0 <none> 80:32433/TCP 21d

nginx ClusterIP None <none> 80/TCP 34h

web NodePort 10.96.132.243 <none> 80:31340/TCP 30d

[root@k8s-master rbac]# vi rbac.yaml

[root@k8s-master rbac]# cat rbac.yaml

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  namespace: default
  name: pod-reader
rules:
- apiGroups: ["","apps"] # core group and apps group
  resources: ["pods","deployments","services"] # resources
  verbs: ["get", "watch", "list","delete"] # operations allowed on the resources
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: read-pods
  namespace: default
subjects:
- kind: User
  name: adu
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: pod-reader
  apiGroup: rbac.authorization.k8s.io

[root@k8s-master rbac]# kubectl apply -f rbac.yaml

role.rbac.authorization.k8s.io/pod-reader configured

rolebinding.rbac.authorization.k8s.io/read-pods unchanged

[root@k8s-master ~]# kubectl --kubeconfig=/root/rbac/adu.kubeconfig delete svc web

service "web" deleted
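Listing several API groups and resources in one rule grants every combination of them, and adding delete to that rule lets adu delete Pods, Deployments, and Services alike, even though the Role is still called pod-reader. A narrower alternative is one rule per API group, each with only the verbs it needs. A sketch under those assumptions; the file name and the Role name app-reader are hypothetical, and this is not the manifest used in the session above:

```shell
# Write a read-only Role with one rule per API group.
cat > rbac-split-rules.yaml <<'EOF'
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  namespace: default
  name: app-reader
rules:
- apiGroups: [""]              # core group: pods and services
  resources: ["pods", "services"]
  verbs: ["get", "watch", "list"]
- apiGroups: ["apps"]          # apps group: deployments
  resources: ["deployments"]
  verbs: ["get", "watch", "list"]
EOF
# Review, then apply with: kubectl apply -f rbac-split-rules.yaml
```

A RoleBinding that names app-reader in roleRef is still required. Because roleRef cannot be changed on an existing binding, that means creating a new binding rather than editing read-pods.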

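Authentication flow

The client is kubectl together with its kubeconfig file

The certificate request is defined in cert.sh; the API Server takes the user name from the certificate's CN field and the group from its O field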

[root@k8s-master rbac]# cat cert.sh

cat > ca-config.json <<EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "87600h"

}

}

}

}

EOF

cat > adu-csr.json <<EOF

{

"CN": "adu",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

cfssl gencert -ca=/etc/kubernetes/pki/ca.crt -ca-key=/etc/kubernetes/pki/ca.key -config=ca-config.json -profile=kubernetes adu-csr.json | cfssljson -bare adu

[root@k8s-master rbac]# kubectl get role

NAME CREATED AT

leader-locking-nfs-client-provisioner 2021-12-21T02:23:25Z

pod-reader 2021-12-22T08:54:09Z

[root@k8s-master rbac]# kubectl get rolebinding

NAME ROLE AGE

leader-locking-nfs-client-provisioner Role/leader-locking-nfs-client-provisioner 35h

read-pods Role/pod-reader 5h9m

[root@k8s-master rbac]# kubectl get clusterrole

NAME CREATED AT

admin 2021-11-21T15:18:40Z

calico-kube-controllers 2021-11-21T15:37:14Z

calico-node 2021-11-21T15:37:14Z

cluster-admin 2021-11-21T15:18:40Z

edit 2021-11-21T15:18:40Z

kubeadm:get-nodes 2021-11-21T15:18:41Z

kubernetes-dashboard 2021-11-22T07:43:15Z

nfs-client-provisioner-runner 2021-12-21T02:23:25Z

nginx-ingress-clusterrole 2021-12-16T14:37:27Z

system:aggregate-to-admin 2021-11-21T15:18:40Z

system:aggregate-to-edit 2021-11-21T15:18:40Z

system:aggregate-to-view 2021-11-21T15:18:40Z

system:aggregated-metrics-reader 2021-11-28T21:40:06Z

system:auth-delegator 2021-11-21T15:18:40Z

system:basic-user 2021-11-21T15:18:40Z

system:certificates.k8s.io:certificatesigningrequests:nodeclient 2021-11-21T15:18:40Z

system:certificates.k8s.io:certificatesigningrequests:selfnodeclient 2021-11-21T15:18:40Z

system:certificates.k8s.io:kube-apiserver-client-approver 2021-11-21T15:18:40Z

system:certificates.k8s.io:kube-apiserver-client-kubelet-approver 2021-11-21T15:18:40Z

system:certificates.k8s.io:kubelet-serving-approver 2021-11-21T15:18:40Z

system:certificates.k8s.io:legacy-unknown-approver 2021-11-21T15:18:40Z

system:controller:attachdetach-controller 2021-11-21T15:18:40Z

system:controller:certificate-controller 2021-11-21T15:18:40Z

system:controller:clusterrole-aggregation-controller 2021-11-21T15:18:40Z

system:controller:cronjob-controller 2021-11-21T15:18:40Z

system:controller:daemon-set-controller 2021-11-21T15:18:40Z

system:controller:deployment-controller 2021-11-21T15:18:40Z

system:controller:disruption-controller 2021-11-21T15:18:40Z

system:controller:endpoint-controller 2021-11-21T15:18:40Z

system:controller:endpointslice-controller 2021-11-21T15:18:40Z

system:controller:endpointslicemirroring-controller 2021-11-21T15:18:40Z

system:controller:expand-controller 2021-11-21T15:18:40Z

system:controller:generic-garbage-collector 2021-11-21T15:18:40Z

system:controller:horizontal-pod-autoscaler 2021-11-21T15:18:40Z

system:controller:job-controller 2021-11-21T15:18:40Z

system:controller:namespace-controller 2021-11-21T15:18:40Z

system:controller:node-controller 2021-11-21T15:18:40Z

system:controller:persistent-volume-binder 2021-11-21T15:18:40Z

system:controller:pod-garbage-collector 2021-11-21T15:18:40Z

system:controller:pv-protection-controller 2021-11-21T15:18:40Z

system:controller:pvc-protection-controller 2021-11-21T15:18:40Z

system:controller:replicaset-controller 2021-11-21T15:18:40Z

system:controller:replication-controller 2021-11-21T15:18:40Z

system:controller:resourcequota-controller 2021-11-21T15:18:40Z

system:controller:route-controller 2021-11-21T15:18:40Z

system:controller:service-account-controller 2021-11-21T15:18:40Z

system:controller:service-controller 2021-11-21T15:18:40Z

system:controller:statefulset-controller 2021-11-21T15:18:40Z

system:controller:ttl-controller 2021-11-21T15:18:40Z

system:coredns 2021-11-21T15:18:42Z

system:discovery 2021-11-21T15:18:40Z

system:heapster 2021-11-21T15:18:40Z

system:kube-aggregator 2021-11-21T15:18:40Z

system:kube-controller-manager 2021-11-21T15:18:40Z

system:kube-dns 2021-11-21T15:18:40Z

system:kube-scheduler 2021-11-21T15:18:40Z

system:kubelet-api-admin 2021-11-21T15:18:40Z

system:metrics-server 2021-11-28T21:40:20Z

system:node 2021-11-21T15:18:40Z

system:node-bootstrapper 2021-11-21T15:18:40Z

system:node-problem-detector 2021-11-21T15:18:40Z

system:node-proxier 2021-11-21T15:18:40Z

system:persistent-volume-provisioner 2021-11-21T15:18:40Z

system:public-info-viewer 2021-11-21T15:18:40Z

system:volume-scheduler 2021-11-21T15:18:40Z

view 2021-11-21T15:18:40Z

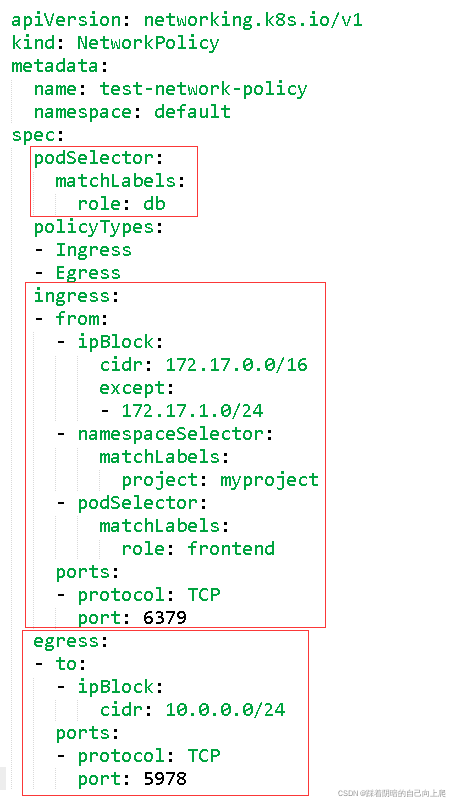

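5. Network Policy Overview

A Network Policy restricts traffic into and out of Pods, providing network access control at the Pod level and the Namespace level. Policies are enforced by the network plugin, so the cluster needs a CNI that supports them (this cluster runs Calico).

Some use cases:

- Access control between applications. For example, microservice A may access microservice B, but microservice C may not access microservice A

- Pods in the development namespace must not reach Pods in the test namespace

- An allowlist is needed when a Pod is exposed externally

- Network isolation between tenants

Ingress isolation for Pods:

- Pod-level isolation: only specific peers (selected by label) may access the Pod, or only allowlisted IP addresses or CIDR ranges may access it

- Namespace-level isolation: with multiple namespaces, Pods in namespace A and namespace B are fully isolated from each other.

Egress isolation for Pods:

- Deny all Pods in a namespace access to the outside

- Isolation by destination IP: Pods may reach only allowlisted IP addresses or CIDR ranges

- Isolation by destination port: Pods may reach only allowlisted ports

podSelector: the target Pods, selected by label

policyTypes: the policy types, which state whether the policy applies to ingress traffic, egress traffic, or both.

Ingress: from is the allowlist of sources, which can be IP ranges, namespaces, or Pod labels; ports lists the ports that may be accessed.

Egress: the IP ranges and ports that the selected Pods may access.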
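The fields above combine in the same way for egress. A sketch of a policy that lets the selected Pods reach only one CIDR range on one port; the policy name, the app=web selector, the 10.0.0.0/24 range, and port 3306 are placeholders chosen for illustration:

```shell
# Write an egress allowlist policy for Pods labeled app=web.
cat > egress-allowlist.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: egress-allowlist
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: web
  policyTypes:
  - Egress
  egress:
  - to:
    - ipBlock:
        cidr: 10.0.0.0/24
    ports:
    - protocol: TCP
      port: 3306
EOF
# Review, then apply with: kubectl apply -f egress-allowlist.yaml
```

Because all other egress from these Pods is dropped, DNS lookups would also fail unless a further rule allows traffic to the cluster DNS service.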

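6. Case Study: Controlling Inbound and Outbound Traffic for Project Pods

Requirement 1: isolate the Pods labeled app=web in the default namespace so that only Pods labeled run=client1 in the default namespace can reach them on port 80.

Prepare the test environment:

kubectl create deployment web --image=nginx

kubectl run client1 --image=busybox -- sleep 36000

kubectl run client2 --image=busybox -- sleep 36000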

[root@k8s-master rbac]# kubectl delete deployment web

deployment.apps "web" deleted

[root@k8s-master rbac]# kubectl get deployment

NAME READY UP-TO-DATE AVAILABLE AGE

nfs-client-provisioner 1/1 1 1 35h

test 3/3 3 3 36h

test2 1/1 1 1 36h

[root@k8s-master rbac]# cd

[root@k8s-master ~]# kubectl create deployment web --image=nginx

deployment.apps/web created

[root@k8s-master ~]# kubectl run client1 --image=busybox -- sleep 36000

pod/client1 created

[root@k8s-master ~]# kubectl run client2 --image=busybox -- sleep 36000

pod/client2 created

[root@k8s-master ~]# kubectl get pods --show-label

Error: unknown flag: --show-label

See 'kubectl get --help' for usage.

[root@k8s-master ~]# kubectl get pods --show-labels

NAME READY STATUS RESTARTS AGE LABELS

client1 1/1 Running 0 37s run=client1

client2 1/1 Running 0 30s run=client2

configmap-demo-pod 1/1 Running 1 34h <none>

my-pod2 1/1 Running 5 41h <none>

nfs-client-provisioner-58d675cd5-dx7n4 1/1 Running 1 35h app=nfs-client-provisioner,pod-template-hash=58d675cd5

pod-taint 1/1 Running 6 7d16h run=nginx

secret-demo-pod 1/1 Running 1 33h <none>

sh 1/1 Running 2 35h run=sh

test-76846b5956-gftn9 1/1 Running 1 34h app=nginx2,pod-template-hash=76846b5956

test-76846b5956-r7s9k 1/1 Running 1 34h app=nginx2,pod-template-hash=76846b5956

test-76846b5956-trpbn 1/1 Running 1 34h app=nginx2,pod-template-hash=76846b5956

test2-78c4694588-87b9r 1/1 Running 1 36h app=nginx2,pod-template-hash=78c4694588

web-0 1/1 Running 1 35h app=nginx,controller-revision-hash=web-67bb74dc,statefulset.kubernetes.io/pod-name=web-0

web-1 1/1 Running 1 35h app=nginx,controller-revision-hash=web-67bb74dc,statefulset.kubernetes.io/pod-name=web-1

web-2 1/1 Running 1 35h app=nginx,controller-revision-hash=web-67bb74dc,statefulset.kubernetes.io/pod-name=web-2

web-96d5df5c8-vc9kf 1/1 Running 0 2m49s app=web,pod-template-hash=96d5df5c8

[root@k8s-master ~]# kubectl get pods --show-labels

NAME READY STATUS RESTARTS AGE LABELS

client1 1/1 Running 0 54s run=client1

client2 1/1 Running 0 47s run=client2

configmap-demo-pod 1/1 Running 1 34h <none>

my-pod2 1/1 Running 5 41h <none>

nfs-client-provisioner-58d675cd5-dx7n4 1/1 Running 1 35h app=nfs-client-provisioner,pod-templat

pod-taint 1/1 Running 6 7d16h run=nginx

secret-demo-pod 1/1 Running 1 33h <none>

sh 1/1 Running 2 35h run=sh

test-76846b5956-gftn9 1/1 Running 1 34h app=nginx2,pod-template-hash=76846b595

test-76846b5956-r7s9k 1/1 Running 1 34h app=nginx2,pod-template-hash=76846b595

test-76846b5956-trpbn 1/1 Running 1 34h app=nginx2,pod-template-hash=76846b595

test2-78c4694588-87b9r 1/1 Running 1 36h app=nginx2,pod-template-hash=78c469458

web-0 1/1 Running 1 35h app=nginx,controller-revision-hash=web

web-1 1/1 Running 1 35h app=nginx,controller-revision-hash=web

web-2 1/1 Running 1 35h app=nginx,controller-revision-hash=web

web-96d5df5c8-vc9kf 1/1 Running 0 3m6s app=web,pod-template-hash=96d5df5c8

[root@k8s-master ~]# vi network.yaml

[root@k8s-master ~]# cat network.yaml

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: test-network-policy
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: web
  policyTypes:
  - Ingress
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          project: default
    - podSelector:
        matchLabels:
          run: client1
    ports:
    - protocol: TCP
      port: 80

[root@k8s-master ~]# kubectl get pod web-96d5df5c8-vc9kf

NAME READY STATUS RESTARTS AGE

web-96d5df5c8-vc9kf 1/1 Running 0 7m41s

[root@k8s-master ~]# kubectl get pod web-96d5df5c8-vc9kf -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

web-96d5df5c8-vc9kf 1/1 Running 0 7m48s 10.244.169.186 k8s-node2 <none> <none>

[root@k8s-master ~]# kubectl exec -it client1 -- sh

/ # ping 10.244.169.186

PING 10.244.169.186 (10.244.169.186): 56 data bytes

64 bytes from 10.244.169.186: seq=0 ttl=62 time=4.648 ms

64 bytes from 10.244.169.186: seq=1 ttl=62 time=0.953 ms

64 bytes from 10.244.169.186: seq=2 ttl=62 time=3.352 ms

^C

--- 10.244.169.186 ping statistics ---

3 packets transmitted, 3 packets received, 0% packet loss

round-trip min/avg/max = 0.953/2.984/4.648 ms

/ # wget 10.244.169.186

Connecting to 10.244.169.186 (10.244.169.186:80)

saving to 'index.html'

index.html 100% |*******************************************************************| 615 0:00:00 ETA

'index.html' saved

/ # cat index.html

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

/ # ^C

/ #

command terminated with exit code 130

[root@k8s-master ~]# kubectl exec -it client2 -- sh

/ # ping 10.244.169.186

PING 10.244.169.186 (10.244.169.186): 56 data bytes

64 bytes from 10.244.169.186: seq=0 ttl=62 time=0.490 ms

64 bytes from 10.244.169.186: seq=1 ttl=62 time=0.390 ms

64 bytes from 10.244.169.186: seq=2 ttl=62 time=0.499 ms

^C

--- 10.244.169.186 ping statistics ---

3 packets transmitted, 3 packets received, 0% packet loss

round-trip min/avg/max = 0.390/0.459/0.499 ms

/ # wget 10.244.169.186

Connecting to 10.244.169.186 (10.244.169.186:80)

saving to 'index.html'

index.html 100% |*******************************************************************| 615 0:00:00 ETA

'index.html' saved

/ # exit

[root@k8s-master ~]# kubectl apply -f network.yaml

networkpolicy.networking.k8s.io/test-network-policy created

[root@k8s-master ~]# kubectl get networkpolicy

NAME POD-SELECTOR AGE

test-network-policy app=web 22s

[root@k8s-master ~]# kubectl get pods -l app=web

NAME READY STATUS RESTARTS AGE

web-96d5df5c8-vc9kf 1/1 Running 0 11m

[root@k8s-master ~]# kubectl exec -it client1 -- sh

/ # ping 10.244.169.186

PING 10.244.169.186 (10.244.169.186): 56 data bytes

^C

--- 10.244.169.186 ping statistics ---

14 packets transmitted, 0 packets received, 100% packet loss

/ # wget 10.244.169.186

Connecting to 10.244.169.186 (10.244.169.186:80)

wget: can't open 'index.html': File exists

/ # rm index.html

/ # wget 10.244.169.186

Connecting to 10.244.169.186 (10.244.169.186:80)

saving to 'index.html'

index.html 100% |*******************************************************************| 615 0:00:00 ETA

'index.html' saved

/ # exit

[root@k8s-master ~]# kubectl exec -it client2 -- sh

/ # ping 10.244.169.186

PING 10.244.169.186 (10.244.169.186): 56 data bytes

^C

--- 10.244.169.186 ping statistics ---

10 packets transmitted, 0 packets received, 100% packet loss

/ # rm index.html

/ # wget 10.244.169.186

Connecting to 10.244.169.186 (10.244.169.186:80)

^C

/ #
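The results match the policy: client1 can still fetch the page, client2 cannot, and ping fails for both because only TCP port 80 is allowed. The two entries under from are separate list items, so they are ORed: traffic is admitted from any Pod in a namespace labeled project=default, or from Pods labeled run=client1 in the policy's own namespace. To require both conditions at once, the selectors go into a single list item. A sketch of that variant; the policy name and file name are hypothetical, and it assumes the default namespace has been given the label project=default, which Kubernetes does not add by itself:

```shell
# Write a policy whose single "from" item combines both selectors (AND).
cat > network-and.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: web-from-client1-only
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: web
  policyTypes:
  - Ingress
  ingress:
  - from:
    - namespaceSelector:      # one list item, two selectors: both must match
        matchLabels:
          project: default
      podSelector:
        matchLabels:
          run: client1
    ports:
    - protocol: TCP
      port: 80
EOF
# Label the namespace first, then apply:
#   kubectl label namespace default project=default
#   kubectl apply -f network-and.yaml
```

The only textual difference from the ORed form is the missing dash in front of podSelector, so indentation deserves a careful review before applying.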

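Requirement 2: all Pods in the default namespace can reach each other and can reach Pods in other namespaces, but Pods in other namespaces cannot reach Pods in the default namespace.

- podSelector: {}: an empty selector applies the policy to every Pod in the namespace

- from.podSelector: {}: an empty selector admits every Pod in the policy's own namespace; sources in other namespaces match no rule and are denied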

[root@k8s-master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

client1 1/1 Running 0 16m

client2 1/1 Running 0 16m

configmap-demo-pod 1/1 Running 1 34h

my-pod2 1/1 Running 6 41h

nfs-client-provisioner-58d675cd5-dx7n4 1/1 Running 1 36h

pod-taint 1/1 Running 6 7d16h

secret-demo-pod 1/1 Running 1 33h

sh 1/1 Running 2 35h

test-76846b5956-gftn9 1/1 Running 1 35h

test-76846b5956-r7s9k 1/1 Running 1 35h

test-76846b5956-trpbn 1/1 Running 1 35h

test2-78c4694588-87b9r 1/1 Running 1 36h

web-0 1/1 Running 1 35h

web-1 1/1 Running 1 35h

web-2 1/1 Running 1 35h

web-96d5df5c8-vc9kf 1/1 Running 0 19m

[root@k8s-master ~]# kubectl run client1 --image=busybox -n kube-system -- sleep 36000

pod/client1 created

[root@k8s-master ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-97769f7c7-z6npb 1/1 Running 12 30d

calico-node-4pwdc 1/1 Running 12 30d

calico-node-9r6zd 1/1 Running 12 30d

calico-node-vqzdj 1/1 Running 12 30d

client1 1/1 Running 0 30s

coredns-6d56c8448f-9xlmw 1/1 Running 5 40h

coredns-6d56c8448f-gcgrh 1/1 Running 12 30d

etcd-k8s-master 1/1 Running 13 30d

filebeat-5pwh7 1/1 Running 7 7d16h

filebeat-pt848 1/1 Running 7 7d16h

kube-apiserver-k8s-master 1/1 Running 21 30d

kube-controller-manager-k8s-master 1/1 Running 21 30d

kube-proxy-q2xfq 1/1 Running 12 30d

kube-proxy-tvzpd 1/1 Running 12 30d

kube-proxy-vtb7r 1/1 Running 6 6d

kube-scheduler-k8s-master 1/1 Running 23 30d

metrics-server-84f9866fdf-rz676 1/1 Running 9 40h

[root@k8s-master ~]# kubectl exec -it client1 -n kube-system

error: you must specify at least one command for the container

[root@k8s-master ~]# kubectl exec -it client1 -n kube-system -- sh

/ # ping 10.244.169.186

PING 10.244.169.186 (10.244.169.186): 56 data bytes

64 bytes from 10.244.169.186: seq=0 ttl=63 time=0.133 ms

64 bytes from 10.244.169.186: seq=1 ttl=63 time=0.096 ms

64 bytes from 10.244.169.186: seq=2 ttl=63 time=0.123 ms

^C

--- 10.244.169.186 ping statistics ---

3 packets transmitted, 3 packets received, 0% packet loss

round-trip min/avg/max = 0.096/0.117/0.133 ms

[root@k8s-master ~]# vi network2.yaml

[root@k8s-master ~]# cat network2.yaml

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: deny-from-other-namespaces
  namespace: default
spec:
  podSelector: {}
  policyTypes:
  - Ingress
  ingress:
  - from:
    - podSelector: {}

[root@k8s-master ~]# kubectl apply -f network2.yaml

networkpolicy.networking.k8s.io/deny-from-other-namespaces created

[root@k8s-master ~]# kubectl get networkpolicy

NAME POD-SELECTOR AGE

deny-from-other-namespaces <none> 18s

/ # ping 10.244.169.186

PING 10.244.169.186 (10.244.169.186): 56 data bytes

^C

--- 10.244.169.186 ping statistics ---

10 packets transmitted, 0 packets received, 100% packet loss

/ # ll

sh: ll: not found

/ # exit

command terminated with exit code 127

[root@k8s-master ~]# kubectl exec -it client1 -- sh

/ # ping 10.244.169.186

PING 10.244.169.186 (10.244.169.186): 56 data bytes

64 bytes from 10.244.169.186: seq=0 ttl=62 time=0.622 ms

64 bytes from 10.244.169.186: seq=1 ttl=62 time=5.711 ms

64 bytes from 10.244.169.186: seq=2 ttl=62 time=1.773 ms

^C

--- 10.244.169.186 ping statistics ---

3 packets transmitted, 3 packets received, 0% packet loss

round-trip min/avg/max = 0.622/2.702/5.711 ms

/ # rm index.html

/ # wget 10.244.169.186

Connecting to 10.244.169.186 (10.244.169.186:80)

saving to 'index.html'

index.html 100% |*******************************************************************| 615 0:00:00 ETA

'index.html' saved

/ #
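The manual checks above rely on Ctrl-C to abandon a blocked connection. A small helper with a timeout makes the same test repeatable. A sketch, assuming the busybox image's wget accepts -T as a timeout in seconds; the function name probe and the KUBECTL override variable are hypothetical:

```shell
# probe NAMESPACE POD TARGET_IP
# Try an HTTP GET from inside a Pod and report whether it succeeded.
# The request is meant to give up after about 3 seconds.
probe() {
  if "${KUBECTL:-kubectl}" exec -n "$1" "$2" -- wget -q -T 3 -O /dev/null "http://$3"; then
    echo "$1/$2 -> $3: allowed"
  else
    echo "$1/$2 -> $3: blocked"
  fi
}

# Example calls against the Pods from this section:
#   probe default client1 10.244.169.186
#   probe kube-system client1 10.244.169.186
```

Any non-zero exit is reported as blocked, including a missing Pod or an unreachable API Server, so an unexpected result is worth confirming by hand.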

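Homework:

1. Complete case study 1: granting a user access to specific namespaces

See requirement 1 above

2. Complete case study 2: controlling inbound and outbound traffic for project Pods

See requirement 2 above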