Deploying YOLOv5 with TensorRT on Windows 10

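References:

1. Win10: YOLOv5 in Practice + TensorRT Deployment + VS2019 Build (a beginner-friendly, easy-to-follow tutorial), very detailed

2. YOLOv5 (PyTorch) Object Detection in Practice: TensorRT-Accelerated Deployment (video)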

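Contents

- Hardware and software configuration

- I. TensorRT

- II. Test steps

- 1. Confirm the CUDA version

- 2. Confirm the cuDNN version

- 3. Install TensorRT

- 4. Test the TensorRT sample code

- 5. TensorRT acceleration for YOLOv5

- Summary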

Hardware and software configuration

GPU: NVIDIA GeForce GTX 1650 SUPER

CPU: i7-7920HQ @ 3.10 GHz

RAM: 16 GB

System: x64

—————————————————————

Visual Studio 2019

OpenCV 4.5

CMake 3.18.2

CUDA 10.2, cuDNN 7.6.5

Python 3.8

Current setup: YOLOv5 5.0, torch 1.7.0, torchvision 0.8.0

Tested with: tensorrt-yolov5 (GitHub)

I. TensorRT

Official installation guide: https://docs.nvidia.com/deeplearning/tensorrt/install-guide/index.html

Download page: https://developer.nvidia.com/tensorrt

Version I used: TensorRT-7.2.3.4

TensorRT 8.2 GA for Windows 10 and CUDA 10.2 ZIP package

II. Test steps

1. Confirm the CUDA version

(yolo_V5) C:\Users\Administrator>nvcc -V
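The version check above can also be scripted. The following is a minimal sketch, assuming `nvcc` is on PATH and that its output contains a `release X.Y` fragment; the function names are hypothetical and only for illustration.

```python
import re
import subprocess


def parse_nvcc_release(output: str):
    """Return (major, minor) from `nvcc -V` text, or None if no release tag is found."""
    match = re.search(r"release\s+(\d+)\.(\d+)", output)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def installed_cuda_release():
    """Run `nvcc -V` and parse its output; returns None if nvcc cannot be run."""
    try:
        result = subprocess.run(
            ["nvcc", "-V"], capture_output=True, text=True, check=True
        )
    except (OSError, subprocess.CalledProcessError):
        return None
    return parse_nvcc_release(result.stdout)


if __name__ == "__main__":
    # Prints a (major, minor) tuple, or None when nvcc is unavailable.
    print(installed_cuda_release())
```

For the setup described in this post, the parsed release would be expected to match CUDA 10.2.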

2. Confirm the cuDNN version

Find the cudnn.h file in the include folder and read the version from it; here it is 7.6.5.
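Reading the version from cudnn.h by hand can be replaced with a small script. This is a sketch that assumes the header defines the `CUDNN_MAJOR`, `CUDNN_MINOR` and `CUDNN_PATCHLEVEL` macros, as the cuDNN 7.x header does; the function name is hypothetical.

```python
import re


def parse_cudnn_version(header_text: str):
    """Return the cuDNN version as 'major.minor.patch', or None if a macro is missing."""
    parts = []
    for macro in ("CUDNN_MAJOR", "CUDNN_MINOR", "CUDNN_PATCHLEVEL"):
        # Match lines of the form: #define CUDNN_MAJOR 7
        pattern = rf"^\s*#\s*define\s+{macro}\s+(\d+)"
        match = re.search(pattern, header_text, re.MULTILINE)
        if match is None:
            return None
        parts.append(match.group(1))
    return ".".join(parts)
```

To use it, read the header with `open(path).read()` and pass the text in. Note that cuDNN 8 and later keep these macros in cudnn_version.h rather than cudnn.h.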

3. Install TensorRT

Click Download Now.

Configure the environment variables

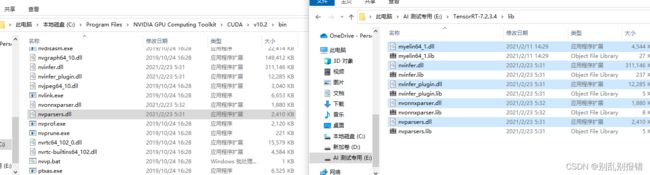

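1. Extract the archive to get the TensorRT-7.2.3.4 folder, for example E:\TensorRT-7.2.3.4.

Open TensorRT-7.2.3.4\lib and add its absolute path to the PATH environment variable.

2. Copy the DLL files from the lib folder of the extracted TensorRT directory into C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v10.2\bin.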
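The DLL copy in step 2 can be sketched in Python as follows. The helper name `copy_dlls` is hypothetical, and the paths in the usage note are the ones from this post; writing into Program Files normally requires an elevated (administrator) prompt.

```python
import shutil
from pathlib import Path


def copy_dlls(src_dir, dst_dir):
    """Copy every *.dll file from src_dir into dst_dir and return the copied names."""
    src, dst = Path(src_dir), Path(dst_dir)
    copied = []
    for dll in sorted(src.glob("*.dll")):
        shutil.copy2(dll, dst / dll.name)  # copy2 also preserves file timestamps
        copied.append(dll.name)
    return copied
```

A call such as `copy_dlls(r"E:\TensorRT-7.2.3.4\lib", r"C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v10.2\bin")` would mirror the manual step.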

Install pycuda

To use the TensorRT Python interface, you also need to install pycuda:

pip install pycuda

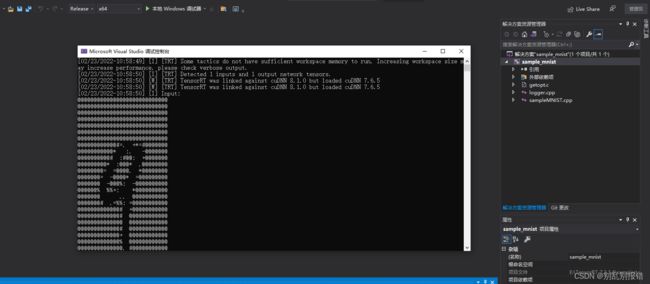
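After the install, a quick way to confirm that the packages are visible to the active Python environment is to look them up without importing them. This is a minimal sketch; `module_status` is a hypothetical helper, and whether the `tensorrt` module is present depends on how TensorRT was installed.

```python
import importlib.util


def module_status(names):
    """Map each top-level module name to True if Python can locate it (no import is done)."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    for name, found in module_status(["pycuda", "tensorrt"]).items():
        print(f"{name}: {'found' if found else 'missing'}")
```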

4.测试tensorrt示例代码

配置VS2019

用VS2019打开sampleMNIST示例(E:\tensorrt_tar\TensorRT-7.0.0.11\samples\sampleMNIST) a.

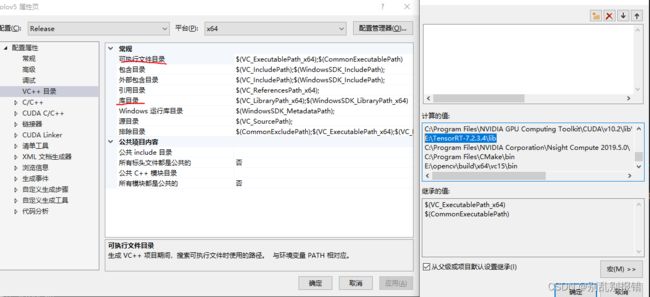

将E:\tensorrt_tar\TensorRT-7.2.3.4\lib加入 项目->属性->VC++目录–>可执行文件目录 和 库目录

b. 将E:\tensorrt_tar\TensorRT-7.2.3.4\lib\include加入C/C++ --> 常规 --> 附加包含目录

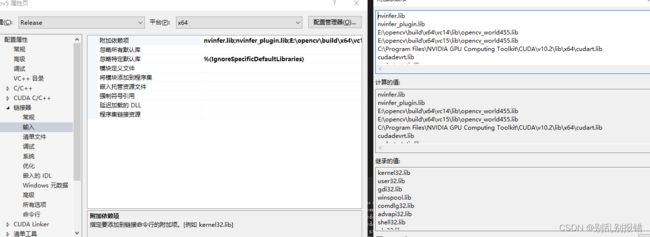

c.将nvinfer.lib、nvinfer_plugin.lib、nvonnxparser.lib和nvparsers.lib加入链接器–>输入–>附加

依赖项

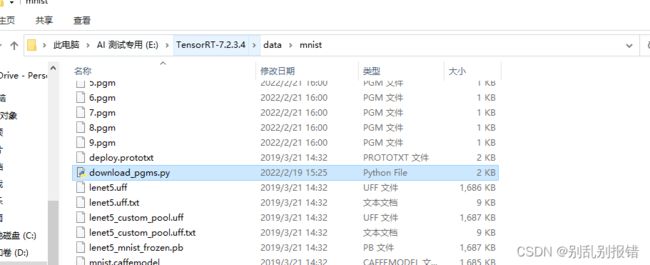

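Downloading the x.pgm files

Note: PGM is the portable graymap file format, which is commonly used in black-and-white ultrasound imaging systems.

How to run: in the data folder under the TensorRT directory, find the download_pgms.py script for the dataset and run it. The script prints nothing while it runs; after a short wait, the x.pgm files appear in the folder, which means the download has finished. The command is: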

python download_pgms.py

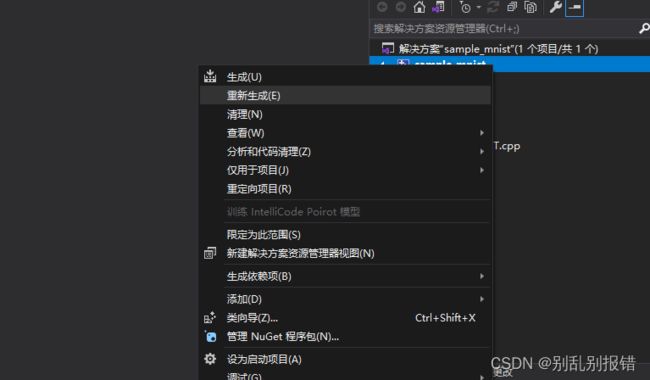
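To check that a downloaded file really is a valid image, the PGM header can be parsed directly. The sketch below assumes the files are binary PGM (magic number `P5`) with 8-bit pixels; `parse_pgm` is a hypothetical helper, not part of the TensorRT samples.

```python
def parse_pgm(data: bytes):
    """Parse a binary (P5) PGM with maxval < 256; returns (width, height, maxval, pixels)."""
    tokens = []
    pos = 0
    while len(tokens) < 4:
        # Skip whitespace between header tokens.
        while pos < len(data) and data[pos:pos + 1].isspace():
            pos += 1
        if pos >= len(data):
            raise ValueError("truncated PGM header")
        # A '#' starts a comment that runs to the end of the line.
        if data[pos:pos + 1] == b"#":
            while pos < len(data) and data[pos:pos + 1] != b"\n":
                pos += 1
            continue
        start = pos
        while pos < len(data) and not data[pos:pos + 1].isspace():
            pos += 1
        tokens.append(data[start:pos])
    if tokens[0] != b"P5":
        raise ValueError("not a binary PGM (expected magic P5)")
    width, height, maxval = (int(t) for t in tokens[1:])
    if maxval > 255:
        raise ValueError("16-bit PGM is not handled in this sketch")
    pos += 1  # exactly one whitespace byte separates the header from the pixels
    pixels = data[pos:pos + width * height]
    if len(pixels) != width * height:
        raise ValueError("pixel data shorter than width * height")
    return width, height, maxval, pixels
```

Reading a file with `open(path, "rb").read()` and passing the bytes in gives the image size and the raw grayscale pixels.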

Open E:\TensorRT-7.2.3.4\samples\sampleMNIST\sample_mnist.sln

1. Right-click the project and choose Build.

2. Debug -> Start Without Debugging.

5. TensorRT acceleration for YOLOv5

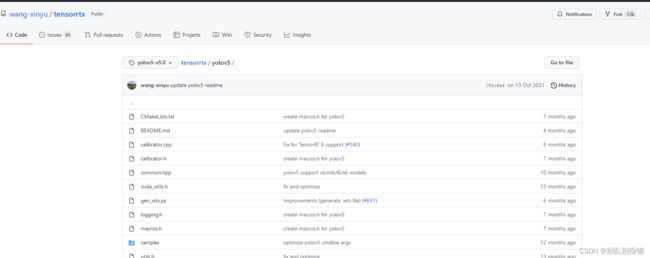

Clone tensorrtx

git clone https://github.com/wang-xinyu/tensorrtx.git

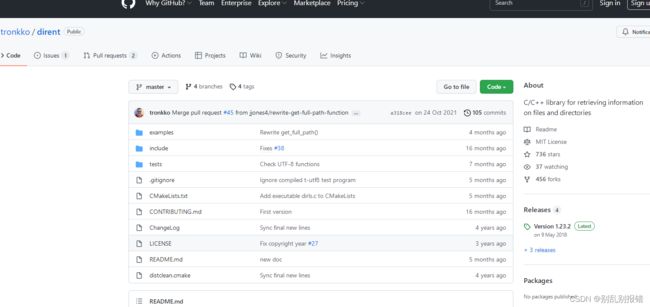

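Download dirent.h

Dirent is a C/C++ programming interface that lets programs retrieve information about files and directories on Linux/UNIX. The project linked here provides a Linux-compatible version of that interface for Windows.

Download dirent.h from https://github.com/tronkko/dirent

Place it in the tensorrtx/include folder; the folder has to be created first.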

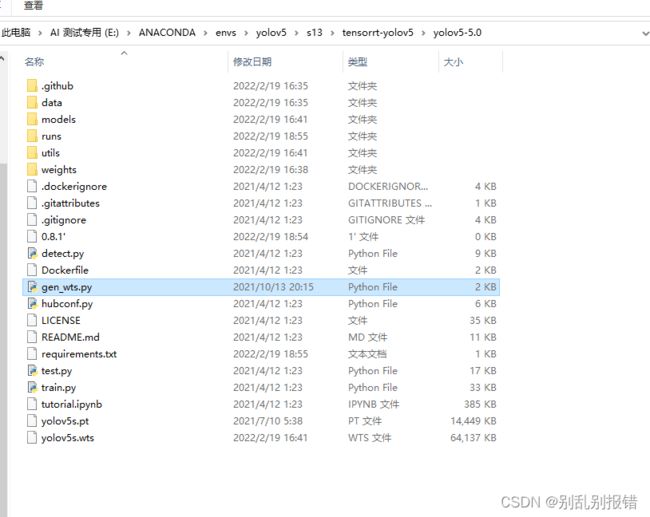

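Generate the yolov5s.wts file

Download the weight file yolov5s.pt, copy tensorrtx/yolov5/gen_wts.py into the ultralytics/yolov5 directory, and run:

python gen_wts.py -w yolov5s.pt -o yolov5s.wts

On success, this produces the .wts file for the corresponding model.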

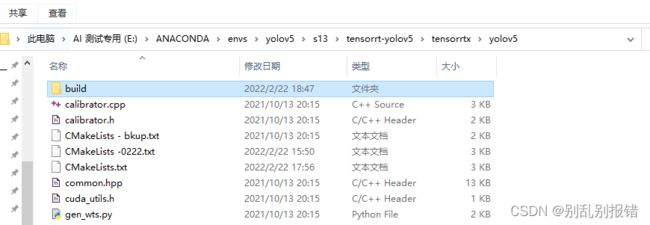
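The generated .wts file is plain text, so it can be sanity-checked before building the engine. The sketch below assumes the layout that tensorrtx's gen_wts.py appears to write: a first line with the number of tensors, then one line per tensor with its name, its element count, and each value as a big-endian float32 in hexadecimal. Treat that layout as an assumption and verify it against your copy of gen_wts.py; `read_wts` is a hypothetical helper.

```python
import struct


def read_wts(text: str):
    """Parse .wts text into {tensor_name: [float, ...]} under the assumed layout."""
    lines = text.strip().splitlines()
    count = int(lines[0])  # first line: number of tensors
    weights = {}
    for line in lines[1:count + 1]:
        fields = line.split()
        name, size = fields[0], int(fields[1])
        # Each remaining field is 8 hex digits encoding one big-endian float32.
        values = [struct.unpack(">f", bytes.fromhex(h))[0] for h in fields[2:]]
        if len(values) != size:
            raise ValueError(f"{name}: expected {size} values, got {len(values)}")
        weights[name] = values
    if len(weights) != count:
        raise ValueError(f"expected {count} tensors, got {len(weights)}")
    return weights
```

For a real model the file is large, so in practice it may be enough to check the tensor count and a few names rather than load every value.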

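Create a build folder under tensorrtx to hold the build output.

Modify CMakeLists.txt

The default CMakeLists.txt targets Linux, so it has to be modified before the project can be built on Windows. The full modified content is shown below.

After copying it, update the paths marked ##1 to ##5 to match your machine.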

cmake_minimum_required(VERSION 2.6)

project(yolov5)

set(OpenCV_DIR "E:/opencv/build") ##1

set(OpenCV_INCLUDE_DIRS "E:/opencv/build/include") ##2

set(OpenCV_LIBS "E:\\opencv\\build\\x64\\vc14\\lib\\opencv_world455.lib") ##3

set(TRT_DIR "E:/TensorRT-7.2.3.4") ##4

add_definitions(-DAPI_EXPORTS)

add_definitions(-std=c++11)

option(CUDA_USE_STATIC_CUDA_RUNTIME OFF)

set(CMAKE_CXX_STANDARD 11)

set(CMAKE_BUILD_TYPE Debug)

set(THREADS_PREFER_PTHREAD_FLAG ON)

find_package(Threads)

# setup CUDA

find_package(CUDA REQUIRED)

message(STATUS " libraries: ${CUDA_LIBRARIES}")

message(STATUS " include path: ${CUDA_INCLUDE_DIRS}")

include_directories(${CUDA_INCLUDE_DIRS})

####

enable_language(CUDA) # add this line, then no need to setup cuda path in vs

####

include_directories(${PROJECT_SOURCE_DIR}/include)

include_directories(${TRT_DIR}\\include)

include_directories(E:\\ANACONDA\\envs\\yolov5\\s13\\tensorrt-yolov5\\tensorrtx\\include) ##5

#find_package(OpenCV)

include_directories(${OpenCV_INCLUDE_DIRS})

include_directories(${OpenCV_INCLUDE_DIRS}\\opencv2) #6

# -D_MWAITXINTRIN_H_INCLUDED for solving error: identifier "__builtin_ia32_mwaitx" is undefined

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=c++11 -Wall -Ofast -D_MWAITXINTRIN_H_INCLUDED")

# setup opencv

find_package(OpenCV QUIET

NO_MODULE

NO_DEFAULT_PATH

NO_CMAKE_PATH

NO_CMAKE_ENVIRONMENT_PATH

NO_SYSTEM_ENVIRONMENT_PATH

NO_CMAKE_PACKAGE_REGISTRY

NO_CMAKE_BUILDS_PATH

NO_CMAKE_SYSTEM_PATH

NO_CMAKE_SYSTEM_PACKAGE_REGISTRY

)

message(STATUS "OpenCV library status:")

message(STATUS " version: ${OpenCV_VERSION}")

message(STATUS " libraries: ${OpenCV_LIBS}")

message(STATUS " include path: ${OpenCV_INCLUDE_DIRS}")

include_directories(${OpenCV_INCLUDE_DIRS})

link_directories(${TRT_DIR}\\lib)

link_directories(${OpenCV_DIR}\\x64\\vc14\\lib) #8

add_executable(yolov5 ${PROJECT_SOURCE_DIR}/calibrator.cpp ${PROJECT_SOURCE_DIR}/yolov5.cpp ${PROJECT_SOURCE_DIR}/yololayer.cu ${PROJECT_SOURCE_DIR}/yololayer.h)

target_link_libraries(yolov5 "nvinfer" "nvinfer_plugin") #9

target_link_libraries(yolov5 ${OpenCV_LIBS}) #10

target_link_libraries(yolov5 ${CUDA_LIBRARIES}) #11

target_link_libraries(yolov5 Threads::Threads) #12
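Since the machine-specific paths in this file are the most common source of configure errors, it can help to check them before running CMake. The following is a minimal sketch; `missing_paths` is a hypothetical helper, and the paths in the example are the ones used in this post.

```python
from pathlib import Path


def missing_paths(paths):
    """Return the subset of paths that do not exist on this machine."""
    return [str(p) for p in paths if not Path(p).exists()]


if __name__ == "__main__":
    # Paths copied from the CMakeLists.txt above; adjust them to your setup.
    candidates = [
        "E:/opencv/build",
        "E:/opencv/build/include",
        "E:/opencv/build/x64/vc14/lib/opencv_world455.lib",
        "E:/TensorRT-7.2.3.4",
    ]
    for path in missing_paths(candidates):
        print("missing:", path)
```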

————————————————————————————————————————————————————————————————————————————————

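Build tensorrtx/yolov5

CMake download: https://cmake.org/

1. Open the cmake-gui application.

2. Select the package to build.

3. Click Configure and set up the environment.

4. Click Finish and wait for "Configure done".

5. Click Generate and wait for "Generate done".

6. Click Open Project.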

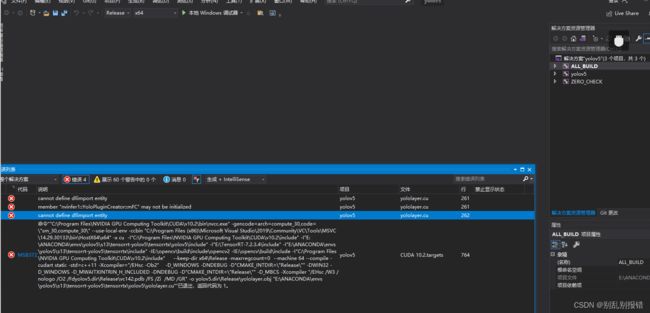

Build errors and the fixes that worked for me:

Error 1.

Fix: add add_definitions(-DAPI_EXPORTS) above add_definitions(-std=c++11).

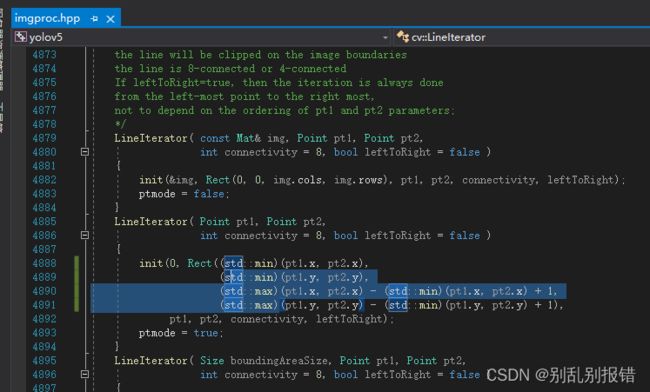

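Error 2. error C2589: "(": illegal token on right side of "::"; error C2059: syntax error: "::"

Fix: wrap std::min in parentheses, i.e. write (std::min)(a, b). The Windows headers define min and max as macros, and the extra parentheses stop the macro from being expanded.

After a successful build, yolov5.exe is produced under build > Release.

Run the following in the yolov5 folder:

yolov5.exe -s yolov5s.wts yolov5s.engine s

This generates the yolov5s.engine file.

yolov5.exe -d yolov5s.engine /samples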
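The two commands above can be wrapped in a short script so that engine generation and detection run in sequence. This is a sketch only: the helper names are hypothetical, and the argument order simply mirrors the `-s` and `-d` invocations shown in this post.

```python
import subprocess


def serialize_cmd(exe, wts, engine, model="s"):
    """Build the argument list for: yolov5.exe -s <wts> <engine> <model>."""
    return [exe, "-s", wts, engine, model]


def detect_cmd(exe, engine, image_dir):
    """Build the argument list for: yolov5.exe -d <engine> <image_dir>."""
    return [exe, "-d", engine, image_dir]


def run(cmd, cwd=None):
    """Run a command and raise CalledProcessError if it exits with a non-zero code."""
    return subprocess.run(cmd, cwd=cwd, check=True)
```

For example, `run(serialize_cmd("yolov5.exe", "yolov5s.wts", "yolov5s.engine"))` followed by `run(detect_cmd("yolov5.exe", "yolov5s.engine", "samples"))`, executed from the folder that contains yolov5.exe.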

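Summary

It is 2022 already. I originally planned to set up yolov5_trt.py on Win10 to run detection on RTSP surveillance streams. There is too little material online for the Python route, so it looks like C++ is the only practical way to run it. I did not want to waste more time, so I decided to reinstall the system.

For anyone learning this setup, I recommend running it directly on Linux.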