Fixing the accuracy loss in deepstream-yolov5-6.0

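Problem: the engine produced by the project's own "create engine" path loses accuracy.

1. Run the same .pt file through both https://github.com/shouxieai and Python, to compare results on identical weights.

2. Follow the open-source approach discussed on GitHub: "how to convert the outputs of the yolov5.onnx to boxes, labels and scores" (Issue #708, ultralytics/yolov5).

The open-source project on GitHub is the giant, and I am standing on its shoulders.

That project changes the layer structure through the contents of its layer files, so the engine has to be created through its own procedure. However, the functions changed in yolov5-6.0, and the project was not updated to match.

So began my own painful journey.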

Step 1: Analysis

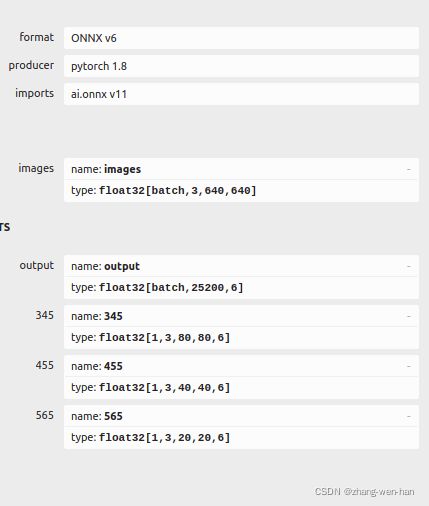

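As the figure above shows:

In the giant's project, the data from layers 345, 455, and 565 are computed separately and then merged. The engine built through its wts + config route outputs 18*80*80, 18*40*40, and 18*20*20.

You can print layer.inferDims.d[0] to verify this. The masks.size above is the number of compute nodes, so the loop here runs 3 times.

Inside it is the decodeYoloTensor parsing function:

The subsequent addBBoxProposal -> convertBBox calls mainly compute the box coordinates.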
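To make the coordinate step concrete, here is a minimal sketch of the centre-to-corner conversion that convertBBox performs. The `Box` struct and the `clampf` and `centerToCorner` names are hypothetical stand-ins for illustration; the real code fills an NvDsInferParseObjectInfo.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical plain struct standing in for NvDsInferParseObjectInfo.
struct Box {
    float left, top, width, height;
};

// Clamp v into [lo, hi].
static float clampf(float v, float lo, float hi) {
    return std::max(lo, std::min(v, hi));
}

// Convert a centre-format box (cx, cy, w, h) into left/top/width/height,
// clamping both corners to the network input size.
Box centerToCorner(float cx, float cy, float w, float h, float netW, float netH) {
    assert(netW > 0.0f && netH > 0.0f);
    const float x1 = clampf(cx - w / 2.0f, 0.0f, netW);
    const float y1 = clampf(cy - h / 2.0f, 0.0f, netH);
    const float x2 = clampf(cx + w / 2.0f, 0.0f, netW);
    const float y2 = clampf(cy + h / 2.0f, 0.0f, netH);
    return Box{x1, y1, x2 - x1, y2 - y1};
}
```

For a 640x640 network, a box centred at (320, 320) with size 100x50 becomes left 270, top 295, width 100, height 50. A box that sticks out past the border is cut back to the part inside the image.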

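After these steps the boxes and related data are ready. They are placed into a std::vector<NvDsInferParseObjectInfo> and returned to deepstream for rendering.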

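Step 2: Approach

1. Generate the ONNX file with the official code, convert it to an engine with trtexec, and use that engine directly.

Problem: the output node dimensions differ. The giant's project has three dimensions, mine has four, and matching up the corresponding anchors is also a problem.

2. Use the 1x25200x85 output node and process its data directly.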
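The figure 25200 follows from the three grid sizes mentioned earlier (80, 40, 20). The sketch below reproduces the arithmetic under stated assumptions: a square 640x640 input, strides of 8, 16, and 32, and 3 anchors per grid cell. The function name `yoloRowCount` is hypothetical.

```cpp
#include <cassert>

// Number of prediction rows for a square input, assuming three detection
// heads with strides 8, 16 and 32 and a fixed number of anchors per cell.
int yoloRowCount(int inputSize, int anchorsPerCell = 3) {
    assert(inputSize > 0 && anchorsPerCell > 0);
    const int strides[] = {8, 16, 32};
    int rows = 0;
    for (int stride : strides) {
        const int grid = inputSize / stride;  // 80, 40, 20 for a 640 input
        rows += anchorsPerCell * grid * grid;
    }
    return rows;
}
```

With a 640 input this gives 3 * (80*80 + 40*40 + 20*20) = 25200, which is the middle dimension of the 1x25200x85 output.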

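Step 3: The code

dimensions = 5 + number of classes. With 80 classes this is 85, which is the 85 in 1x25200x85.

confidenceIndex = 4 is the position of the confidence. Positions 0, 1, 2, 3 hold x, y, w, h; position 4 holds the probability that the box contains an object.

Loop 25200 times: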

int index = i * dimensions;

if(output[index+confidenceIndex] <= 0.4f) continue;

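On each iteration, index = iteration count * dimensions, and the confidence sits at index + confidenceIndex. Boxes whose objectness is not above 0.4 are discarded.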

output[index+j] = output[index+j] * output[index+confidenceIndex];

For boxes that pass the 0.4 filter, multiply each class probability by the objectness.

for (int k = labelStartIndex; k < dimensions; ++k) {

if(output[index+k] <= 0.5f) continue;

const float bx = output[index];

const float by = output[index+1];

const float bw = output[index+2];

const float bh = output[index+3];

const float maxProb = output[index+k];

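const int maxIndex = k - 5; // k starts at offset 5 + 0, so the class index is k - 5

addBBoxProposal(bx, by, bw, bh, networkInfo.width, networkInfo.height, maxIndex, maxProb, binfo);

output[index+4] is not needed here any more: it has already been used for filtering, and it has already been multiplied into the class scores, overwriting them.

k starts at labelStartIndex, which is 5, so the class index is k-5.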
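The steps walked through above can be put together as a small standalone function. This is only a sketch under assumptions: the `Candidate` struct and the `decodeRows` name are hypothetical, the row count and thresholds are passed in instead of being hard-coded as 25200, 0.4, and 0.5, and the buffer is read without being overwritten.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical result type; the real parser fills NvDsInferParseObjectInfo.
struct Candidate {
    float cx, cy, w, h;  // centre-format box in network input pixels
    int classId;
    float score;         // objectness * class probability
};

// Decode a flat [numRows x (5 + numClasses)] buffer into candidates.
std::vector<Candidate> decodeRows(const float* output, std::size_t numRows,
                                  int numClasses, float objThresh,
                                  float scoreThresh) {
    assert(output != nullptr || numRows == 0);
    const int dimensions = 5 + numClasses;
    std::vector<Candidate> out;
    for (std::size_t i = 0; i < numRows; ++i) {
        const float* row = output + i * dimensions;
        const float objectness = row[4];
        if (objectness <= objThresh) continue;      // drop weak boxes early
        for (int c = 0; c < numClasses; ++c) {
            const float score = row[5 + c] * objectness;
            if (score <= scoreThresh) continue;     // drop weak classes
            out.push_back({row[0], row[1], row[2], row[3], c, score});
        }
    }
    return out;
}
```

As in the original loop, one row can yield several candidates if more than one class clears the score threshold. Coordinate conversion and NMS still have to run on the result.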

After that, the boxes are handed back to the giant's code for coordinate conversion and NMS (addBBoxProposal).
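NMS decides which boxes to suppress by comparing their intersection over union (IoU) against a threshold, which is 0.45 in the full listing. The sketch below shows that one measurement in isolation. The `Rect` struct and the `iou` name are hypothetical, and the formula is written with min/max rather than the listing's overlap1D helper.

```cpp
#include <algorithm>

// Hypothetical box type in left/top/width/height form.
struct Rect {
    float left, top, width, height;
};

// Intersection over union of two axis-aligned boxes; 0 when they are disjoint.
float iou(const Rect& a, const Rect& b) {
    const float ix = std::max(0.0f,
        std::min(a.left + a.width, b.left + b.width) - std::max(a.left, b.left));
    const float iy = std::max(0.0f,
        std::min(a.top + a.height, b.top + b.height) - std::max(a.top, b.top));
    const float inter = ix * iy;
    const float uni = a.width * a.height + b.width * b.height - inter;
    return uni > 0.0f ? inter / uni : 0.0f;
}
```

Two 10x10 boxes offset by 5 pixels horizontally overlap in a 5x10 strip, so their IoU is 50 / 150, about 0.33, and both survive a 0.45 threshold. Offset by only 1 pixel, the IoU is 90 / 110, about 0.82, and the lower-scoring box is suppressed.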

That's it.

The full code is attached below:

/*

* Copyright (c) 2019, NVIDIA CORPORATION. All rights reserved.

*

* Permission is hereby granted, free of charge, to any person obtaining a

* copy of this software and associated documentation files (the "Software"),

* to deal in the Software without restriction, including without limitation

* the rights to use, copy, modify, merge, publish, distribute, sublicense,

* and/or sell copies of the Software, and to permit persons to whom the

* Software is furnished to do so, subject to the following conditions:

*

* The above copyright notice and this permission notice shall be included in

* all copies or substantial portions of the Software.

*

* THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

* IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

* FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL

* THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

* LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING

* FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER

* DEALINGS IN THE SOFTWARE.

*

* Edited by Marcos Luciano

* https://www.github.com/marcoslucianops

*/

#include <algorithm>

#include <cstdio>

#include <vector>

#include "nvdsinfer_custom_impl.h"

#include "utils.h"

#include "yoloPlugins.h"

extern "C" bool NvDsInferParseYolo(

std::vector<NvDsInferLayerInfo> const& outputLayersInfo,

NvDsInferNetworkInfo const& networkInfo,

NvDsInferParseDetectionParams const& detectionParams,

std::vector<NvDsInferParseObjectInfo>& objectList);

static std::vector<NvDsInferParseObjectInfo>

nonMaximumSuppression(const float nmsThresh, std::vector<NvDsInferParseObjectInfo> binfo)

{

auto overlap1D = [](float x1min, float x1max, float x2min, float x2max) -> float {

if (x1min > x2min)

{

std::swap(x1min, x2min);

std::swap(x1max, x2max);

}

return x1max < x2min ? 0 : std::min(x1max, x2max) - x2min;

};

auto computeIoU

= [&overlap1D](NvDsInferParseObjectInfo& bbox1, NvDsInferParseObjectInfo& bbox2) -> float {

float overlapX

= overlap1D(bbox1.left, bbox1.left + bbox1.width, bbox2.left, bbox2.left + bbox2.width);

float overlapY

= overlap1D(bbox1.top, bbox1.top + bbox1.height, bbox2.top, bbox2.top + bbox2.height);

float area1 = (bbox1.width) * (bbox1.height);

float area2 = (bbox2.width) * (bbox2.height);

float overlap2D = overlapX * overlapY;

float u = area1 + area2 - overlap2D;

return u == 0 ? 0 : overlap2D / u;

};

std::stable_sort(binfo.begin(), binfo.end(),

[](const NvDsInferParseObjectInfo& b1, const NvDsInferParseObjectInfo& b2) {

return b1.detectionConfidence > b2.detectionConfidence;

});

std::vector<NvDsInferParseObjectInfo> out;

for (auto i : binfo)

{

bool keep = true;

for (auto j : out)

{

if (keep)

{

float overlap = computeIoU(i, j);

keep = overlap <= nmsThresh;

}

else

break;

}

if (keep) out.push_back(i);

}

return out;

}

static NvDsInferParseObjectInfo convertBBox(

const float& bx, const float& by, const float& bw,

const float& bh, const uint& netW, const uint& netH)

{

NvDsInferParseObjectInfo b;

float x1 = bx - bw / 2;

float y1 = by - bh / 2;

float x2 = x1 + bw;

float y2 = y1 + bh;

x1 = clamp(x1, 0, netW);

y1 = clamp(y1, 0, netH);

x2 = clamp(x2, 0, netW);

y2 = clamp(y2, 0, netH);

b.left = x1;

b.width = clamp(x2 - x1, 0, netW);

b.top = y1;

b.height = clamp(y2 - y1, 0, netH);

return b;

}

static void addBBoxProposal(

const float bx, const float by, const float bw, const float bh,

const uint& netW, const uint& netH, const int maxIndex,

const float maxProb, std::vector<NvDsInferParseObjectInfo>& binfo)

{

NvDsInferParseObjectInfo bbi = convertBBox(bx, by, bw, bh, netW, netH);

if (bbi.width < 1 || bbi.height < 1) return;

bbi.detectionConfidence = maxProb;

bbi.classId = maxIndex;

binfo.push_back(bbi);

}

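static std::vector<NvDsInferParseObjectInfo>

nmsAllClasses(const float nmsThresh,

std::vector<NvDsInferParseObjectInfo>& binfo,

const uint numClasses)

{

std::vector<NvDsInferParseObjectInfo> result;

std::vector<std::vector<NvDsInferParseObjectInfo>> splitBoxes(numClasses);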

for (auto& box : binfo)

{

splitBoxes.at(box.classId).push_back(box);

}

for (auto& boxes : splitBoxes)

{

boxes = nonMaximumSuppression(nmsThresh, boxes);

result.insert(result.end(), boxes.begin(), boxes.end());

}

return result;

}

extern "C" bool NvDsInferParseYolo(

std::vector<NvDsInferLayerInfo> const& outputLayersInfo,

NvDsInferNetworkInfo const& networkInfo,

NvDsInferParseDetectionParams const& detectionParams,

std::vector<NvDsInferParseObjectInfo>& objectList)

{

uint numBBoxes = kNUM_BBOXES;

uint numClasses = kNUM_CLASSES;

int dimensions = 5 + detectionParams.numClassesConfigured;

int confidenceIndex = 4;

int labelStartIndex = 5;

const float beta_nms = 0.45;

std::vector<NvDsInferParseObjectInfo> binfo;

for (unsigned int l = 0; l < 1; l++)

{

float* output = (float *)outputLayersInfo[l].buffer;

const NvDsInferLayerInfo &layer = outputLayersInfo[0];

//printf("zwh layer.inferDims.d[0] %d, layer.inferDims.d[1] %d \n", layer.inferDims.d[0],layer.inferDims.d[1]);

for (int i = 0; i < 25200; ++i) {

int index = i * dimensions; // index is a multiple of 5 + classnum and points at offset 0 of row i

if(output[index+confidenceIndex] <= 0.4f) continue;

for (int j = labelStartIndex; j < dimensions; ++j) {

output[index+j] = output[index+j] * output[index+confidenceIndex];

}

for (int k = labelStartIndex; k < dimensions; ++k) {

if(output[index+k] <= 0.5f) continue;

const float bx = output[index];

const float by = output[index+1];

const float bw = output[index+2];

const float bh = output[index+3];

const float maxProb = output[index+k];

const int maxIndex = k - 5; // k starts at offset 5 + 0, so the class index is k - 5

printf("output[index+4]:%f, output[index+5]:%f \n", output[index+4], output[index+5]); // both values are floats, so both use %f

printf("zwh bx:%f by:%f bw:%f bh:%f maxProb:%f \n", bx, by, bw, bh, maxProb);

addBBoxProposal(bx, by, bw, bh, networkInfo.width, networkInfo.height, maxIndex, maxProb, binfo);

}

}

}

objectList.clear();

objectList = nmsAllClasses(beta_nms, binfo, detectionParams.numClassesConfigured);

return true;

}

CHECK_CUSTOM_PARSE_FUNC_PROTOTYPE(NvDsInferParseYolo);