Kubernetes----Introduction and Installation----Cluster Environment Setup

I. Installation Overview

1.1 Cluster types

1.2 Installation methods

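- minikube: a tool for quickly setting up a single-node Kubernetes cluster

- kubeadm: a tool for quickly setting up a Kubernetes cluster

- Binary packages: download each component's binary package from the official site and install them one by one. This is more work, but it is the most effective way to understand the Kubernetes components.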

II. Installing a one-master, two-worker Kubernetes cluster with kubeadm

2.1 Host planning

| Node | IP address | Operating system | Configuration |
|---|---|---|---|
| Master | 192.168.2.150 | CentOS 7.9 | 2 CPUs, 2 GB RAM, 40 GB disk |
| Node1 | 192.168.2.151 | CentOS 7.9 | 2 CPUs, 2 GB RAM, 40 GB disk |
| Node2 | 192.168.2.152 | CentOS 7.9 | 2 CPUs, 2 GB RAM, 40 GB disk |

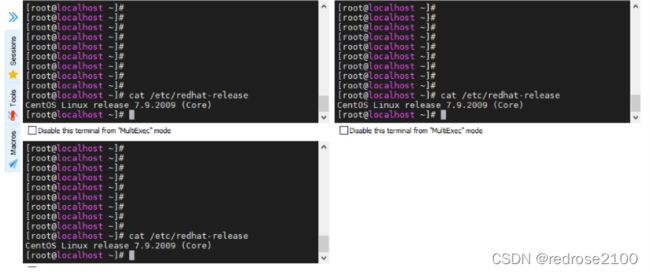

2.2 Server initialization

- (1) Set the host names

hostnamectl set-hostname master # run on 192.168.2.150

hostnamectl set-hostname node1 # run on 192.168.2.151

hostnamectl set-hostname node2 # run on 192.168.2.152

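Then add the following entries to /etc/hosts on all three servers so that the host names resolve:

192.168.2.150 master

192.168.2.151 node1

192.168.2.152 node2

Once this is done, run ping master, ping node1, and ping node2 on every node. If all three succeed, the configuration is correct.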
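
Before pinging, it can help to confirm that each node's hosts file really contains all three names. The sketch below is only an illustration: the function name missing_hosts is hypothetical, and it assumes the usual hosts format of an address followed by one or more names.

```shell
# Print every expected host name that has no entry in the given hosts file.
# Usage: missing_hosts FILE NAME...
missing_hosts() (
  file=$1
  shift
  for name in "$@"; do
    # Look for the name as a whole field on a non-comment line.
    if ! awk -v n="$name" '
        /^[[:space:]]*#/ { next }
        {
          for (i = 2; i <= NF; i++) {
            if ($i ~ /^#/) break        # ignore trailing comments
            if ($i == n) found = 1
          }
        }
        END { exit (found ? 0 : 1) }' "$file"; then
      echo "$name"
    fi
  done
)

# Example (run on each node); no output means all names are present:
#   missing_hosts /etc/hosts master node1 node2
```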

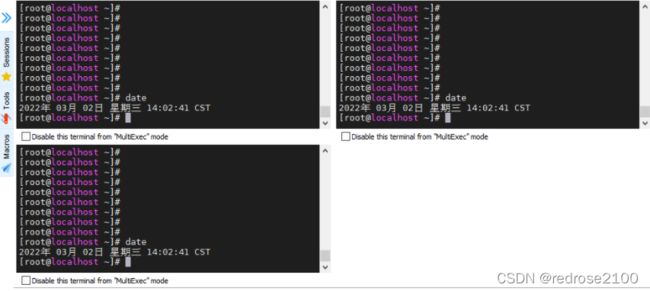

- (4) Time synchronization

Run the following commands on all three servers:

systemctl start chronyd

systemctl enable chronyd

If chrony is not installed, install it first with:

yum install -y chrony

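- (5) Disable iptables and firewalld (fine to turn off outright in a test environment; think carefully before doing so in production)

Run the following commands on all three servers: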

systemctl stop firewalld

systemctl disable firewalld

systemctl stop iptables

systemctl disable iptables

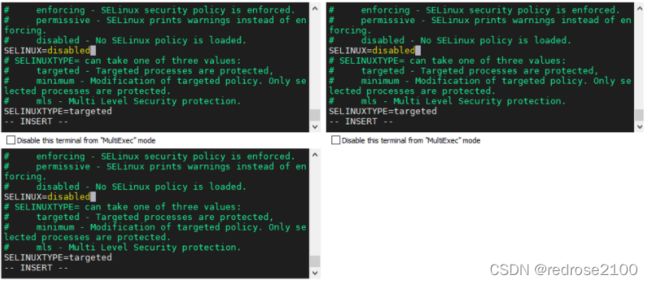

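- (6) Disable SELinux

The getenforce command shows the current state; SELinux is enabled by default.

To disable SELinux, edit its configuration file with vi /etc/selinux/config and change SELINUX=enforcing to SELINUX=disabled.

The change takes effect only after a reboot. Do not reboot yet; reboot once after all the remaining steps are configured.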
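
With three servers to edit, the same change can be scripted instead of made by hand in vi. This is a minimal sketch; the function name disable_selinux_in_config is hypothetical, and it assumes the file contains a line beginning with SELINUX=.

```shell
# Set SELINUX=disabled in an SELinux config file (default: /etc/selinux/config).
disable_selinux_in_config() (
  file=${1:-/etc/selinux/config}
  tmp=$(mktemp) || exit 1
  # Only the SELINUX= line is rewritten; SELINUXTYPE= and comments are kept.
  sed 's/^SELINUX=.*/SELINUX=disabled/' "$file" > "$tmp" && cat "$tmp" > "$file"
  status=$?
  rm -f "$tmp"
  exit "$status"
)

# Example (as root, on each server):
#   disable_selinux_in_config
```

If enforcement needs to stop before the reboot, setenforce 0 switches the running system to permissive mode until then.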

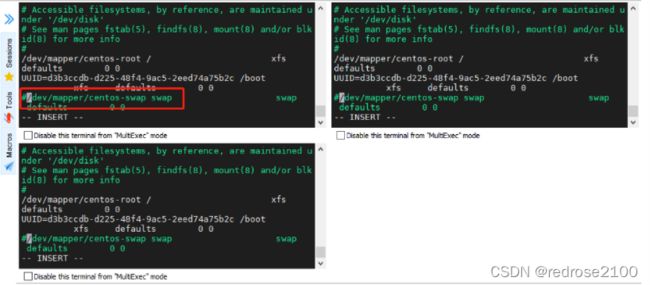
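
Step (10) below checks that swap is off, and kubelet by default refuses to start while swap is enabled. A common way to turn swap off permanently, given here as an assumption rather than as the author's exact procedure, is to comment out the swap entry in /etc/fstab. The function name comment_out_swap is hypothetical.

```shell
# Comment out every active swap entry in an fstab-style file (default: /etc/fstab).
comment_out_swap() (
  file=${1:-/etc/fstab}
  tmp=$(mktemp) || exit 1
  # A swap entry has "swap" as its third field (the filesystem type).
  awk '!/^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' \
    "$file" > "$tmp" && cat "$tmp" > "$file"
  status=$?
  rm -f "$tmp"
  exit "$status"
)

# Example (as root, on each server):
#   comment_out_swap
```

To turn swap off immediately without waiting for the reboot, swapoff -a can be run as well.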

This change also takes effect only after a reboot. As before, hold off and reboot once at the end.

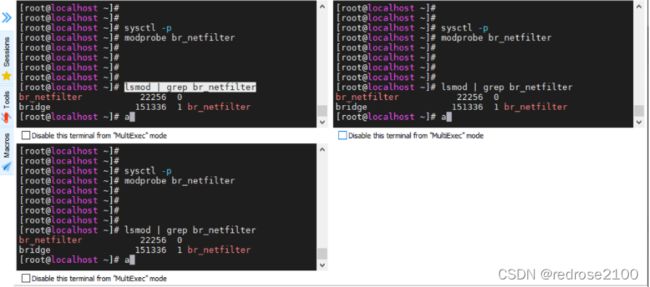

- (8) Adjust the Linux kernel parameters

On all three servers, create the file /etc/sysctl.d/kubernetes.conf (vi /etc/sysctl.d/kubernetes.conf) with the following content:

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

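Load the br_netfilter module first, because the net.bridge.* keys exist only while it is loaded:

modprobe br_netfilter

Then apply the settings. A plain sysctl -p reads only /etc/sysctl.conf, so pass the new file explicitly:

sysctl -p /etc/sysctl.d/kubernetes.conf

Check that the module is loaded:

lsmod | grep br_netfilter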
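
A misspelled key in the file is easy to miss, so a quick check of the file itself can save debugging later. The sketch below is illustrative only; the function name check_k8s_sysctl_file is hypothetical.

```shell
# Print each required key that is not set to 1 in the given sysctl file.
check_k8s_sysctl_file() (
  file=$1
  for key in net.bridge.bridge-nf-call-ip6tables \
             net.bridge.bridge-nf-call-iptables \
             net.ipv4.ip_forward; do
    # Strip whitespace around "key = value" and keep the last value seen.
    value=$(awk -F= -v k="$key" '
      { gsub(/[[:space:]]/, "", $1); gsub(/[[:space:]]/, "", $2) }
      $1 == k { v = $2 }
      END { print v }' "$file")
    [ "$value" = "1" ] || echo "$key"
  done
)

# Example; no output means all three keys are present and set to 1:
#   check_k8s_sysctl_file /etc/sysctl.d/kubernetes.conf
```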

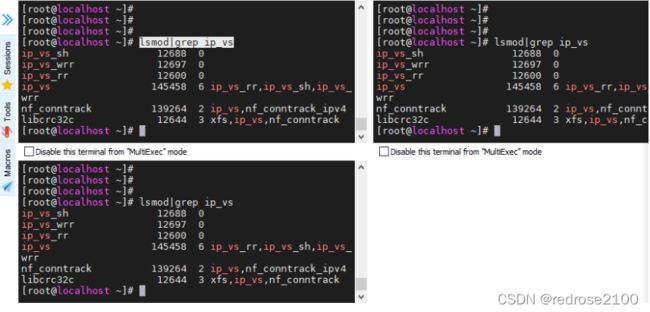

- (9) Configure IPVS

Run the following command on all three servers:

yum install -y ipset ipvsadm

Create the file /etc/sysconfig/modules/ipvs.modules (vi /etc/sysconfig/modules/ipvs.modules) with the following content:

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

Make the new file executable:

chmod +x /etc/sysconfig/modules/ipvs.modules

Then run the script:

bash /etc/sysconfig/modules/ipvs.modules

Check that the modules are loaded:

lsmod | grep -e ip_vs -e nf_conntrack_ipv4
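
Reading the lsmod listing by eye works, but a small filter can report exactly which of the five modules from the script are still missing. This is a sketch under the assumption that the module list matches the ipvs.modules script above; the function name missing_ipvs_modules is hypothetical.

```shell
# Read `lsmod` output on stdin and print the required modules that are absent.
missing_ipvs_modules() {
  awk -v want="ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4" '
    { loaded[$1] = 1 }                  # first column is the module name
    END {
      n = split(want, mods, " ")
      for (i = 1; i <= n; i++)
        if (!(mods[i] in loaded)) print mods[i]
    }'
}

# Example; no output means every module is loaded:
#   lsmod | missing_ipvs_modules
```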

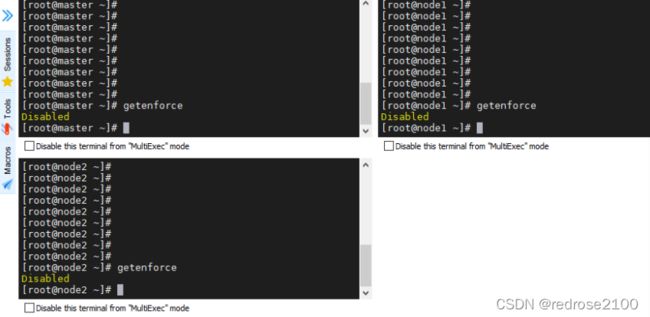

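- (10) The base configuration of all three servers is now complete, so reboot them.

After the reboot, run getenforce to confirm the SELinux change; the output Disabled means it has taken effect.

Then run free -m. If every value in the Swap row is 0, the swap partition has been turned off.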

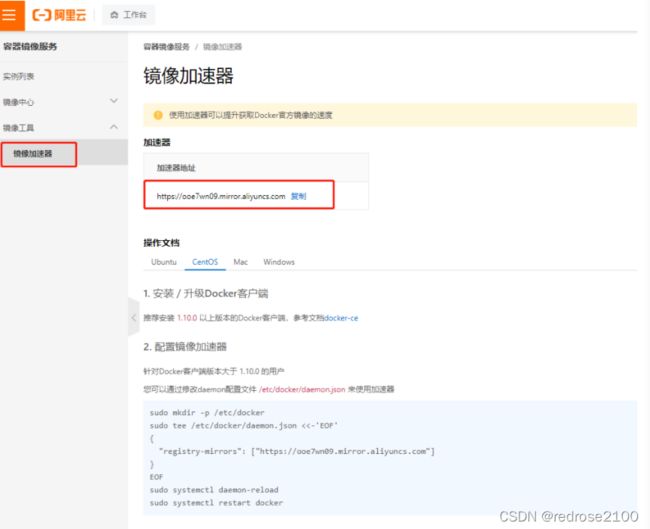

2.3 Install Docker

- (1) Configure the Aliyun Docker repository

wget http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

yum clean all

yum makecache

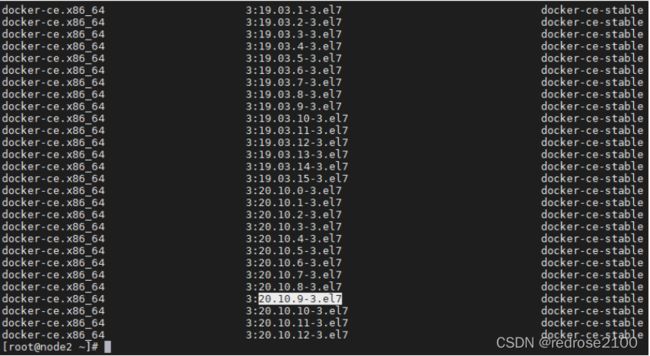

- (2) List the available Docker versions

yum list docker-ce --showduplicates

- (3) Install Docker

yum install -y --setopt=obsoletes=0 docker-ce-20.10.9-3.el7

Create the Docker configuration directory:

mkdir -p /etc/docker

Create the file /etc/docker/daemon.json (vi /etc/docker/daemon.json) with the following content:

{

"exec-opts":["native.cgroupdriver=systemd"],

"registry-mirrors":["https://ooe7wn09.mirror.aliyuncs.com"]

}

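To find your own Aliyun registry mirror address, log in to the Aliyun console and go to [Container Service] - [Container Registry] - [Image Tools] - [Image Accelerator].

Start Docker with systemctl start docker, then check its state with systemctl status docker.

docker version shows the installed Docker version.

Docker is now installed. Set it to start at boot: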

systemctl enable docker

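2.4 Install the Kubernetes components

- (1) Configure the Aliyun Kubernetes repository

Create the repository file with vi /etc/yum.repos.d/kubernetes.repo and add the following content: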

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

Then run the following commands:

yum clean all

yum makecache

- (2) Install kubeadm, kubelet, and kubectl

yum install -y --setopt=obsoletes=0 kubeadm-1.21.10-0 kubelet-1.21.10-0 kubectl-1.21.10-0

- (3) Configure kubelet

Edit the file with vi /etc/sysconfig/kubelet and set its content to:

KUBELET_CGROUP_ARGS="--cgroup-driver=systemd"

KUBE_PROXY_MODE="ipvs"

- (4) Enable kubelet at boot

systemctl enable kubelet
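
Docker (in daemon.json) and kubelet (in /etc/sysconfig/kubelet) are both set to the systemd cgroup driver here, and the two need to agree. The sketch below checks both files for that setting; the function name cgroup_drivers_match is hypothetical, and the default paths are the ones used in this guide.

```shell
# Succeed only if both Docker and kubelet are configured for the systemd cgroup driver.
cgroup_drivers_match() (
  docker_cfg=${1:-/etc/docker/daemon.json}
  kubelet_cfg=${2:-/etc/sysconfig/kubelet}
  grep -q 'native\.cgroupdriver=systemd' "$docker_cfg" &&
    grep -q -- '--cgroup-driver=systemd' "$kubelet_cfg"
)

# Example:
#   cgroup_drivers_match && echo "cgroup drivers match" || echo "cgroup driver mismatch"
```

On a running host, docker info --format '{{.CgroupDriver}}' reports the driver Docker is actually using.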

2.5 Install the cluster

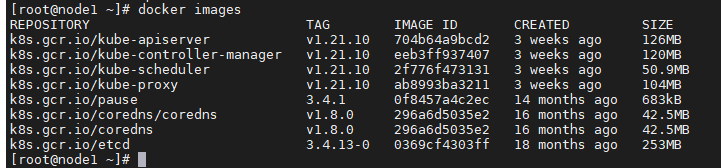

- (1) Prepare the cluster images

First run kubeadm config images list to see which images are required. In this setup they are:

k8s.gcr.io/kube-apiserver:v1.21.10

k8s.gcr.io/kube-controller-manager:v1.21.10

k8s.gcr.io/kube-scheduler:v1.21.10

k8s.gcr.io/kube-proxy:v1.21.10

k8s.gcr.io/pause:3.4.1

k8s.gcr.io/etcd:3.4.13-0

k8s.gcr.io/coredns/coredns:v1.8.0

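Because the official Kubernetes registry is hosted overseas, pull the images from Aliyun first, retag them with the names the official registry uses, and only then run the installation.

Run the following commands on all three servers: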

images=(kube-apiserver:v1.21.10 kube-controller-manager:v1.21.10 kube-scheduler:v1.21.10 kube-proxy:v1.21.10 pause:3.4.1 etcd:3.4.13-0 coredns:v1.8.0)

for imageName in "${images[@]}" ; do

  docker pull "registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName"

  docker tag "registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName" "k8s.gcr.io/$imageName"

  docker rmi "registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName"

done

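When the loop finishes, docker images lists the downloaded images.

One more retag is needed, because kubeadm expects the CoreDNS image under the coredns/ path: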

docker tag k8s.gcr.io/coredns:v1.8.0 k8s.gcr.io/coredns/coredns:v1.8.0
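
The extra CoreDNS retag can be folded into the naming logic so the loop produces the right tag in one pass. The helper below is a sketch, not part of the original procedure; the function name target_tag is hypothetical, and the mapping is taken from the image list shown earlier.

```shell
# Map an image name from the Aliyun mirror to the name kubeadm expects.
target_tag() {
  case $1 in
    # CoreDNS lives one level deeper: k8s.gcr.io/coredns/coredns:<tag>
    coredns:*) echo "k8s.gcr.io/coredns/$1" ;;
    *)         echo "k8s.gcr.io/$1" ;;
  esac
}

# Example, inside the pull loop:
#   docker tag "registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName" \
#              "$(target_tag "$imageName")"
```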

- (2) Initialize the cluster

Every command up to this point is run on all three servers. The cluster initialization command below is run on the master node only:

kubeadm init --kubernetes-version=v1.21.10 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.96.0.0/12 --apiserver-advertise-address=192.168.2.150

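When kubeadm prints its success message, the cluster has been initialized.

Following the instructions in that output, run these three commands (copy them from the output itself):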

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

As the output also notes, if you are the root user, run the following command as well (again, copy it from the output):

export KUBECONFIG=/etc/kubernetes/admin.conf

You can now list the cluster's nodes from the master node. At this point only the master node is present:

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady control-plane,master 3m13s v1.21.6

[root@master ~]#

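Next, still following the kubeadm init output, run the join command below on each of the two worker nodes (this command is also copied from the output, so the token and hash will differ in your cluster):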

kubeadm join 192.168.2.150:6443 --token la4re6.kxloa60jkydvl8nv \

--discovery-token-ca-cert-hash sha256:e0bfa2e1f9adf734d415567d95f97db1ec97883ffd8402712b2960cd1f30d0fc

Back on the master node, list the nodes again. Both worker nodes have now joined, giving three nodes in total:

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady control-plane,master 7m7s v1.21.6

node1 NotReady <none> 16s v1.21.6

node2 NotReady <none> 7s v1.21.6

[root@master ~]#

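Running kubectl on a worker node

To run kubectl on a worker node, copy the $HOME/.kube directory from the master node into the home directory on that node.

Copy the configuration with the following commands:

# copy to node1

scp -r ~/.kube node1:~/

# copy to node2

scp -r ~/.kube node2:~/

kubectl then works on the worker nodes.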

2.6 Install the network plugin

These steps are run on the master node only.

Download the YAML manifest and apply it:

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

kubectl apply -f kube-flannel.yml

Wait a moment, then list the nodes on the master again. A STATUS of Ready on every node means the network is working:

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready control-plane,master 21m v1.21.6

node1 Ready <none> 14m v1.21.6

node2 Ready <none> 14m v1.21.6

[root@master ~]#
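
Since the nodes take a little while to become Ready, a small filter over the kubectl get nodes output makes it easy to see which ones are still pending. This is only a sketch; the function name not_ready_nodes is hypothetical, and it treats any STATUS other than exactly Ready (including Ready,SchedulingDisabled) as not ready.

```shell
# Read `kubectl get nodes` output on stdin and print nodes whose STATUS is not Ready.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'   # NR > 1 skips the header row
}

# Example; no output means every node is Ready:
#   kubectl get nodes | not_ready_nodes
```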

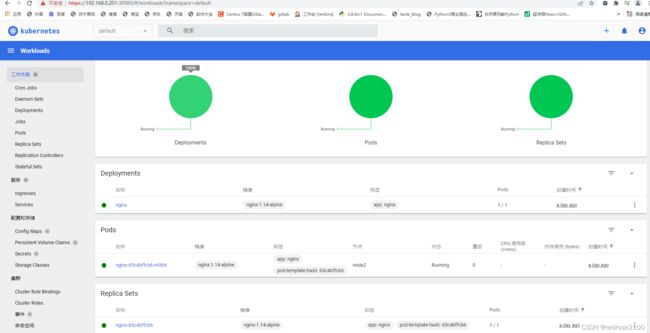

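2.7 Deploy Nginx to verify the cluster

- (1) Deploy Nginx

Run the following command on the master node to deploy Nginx:

kubectl create deployment nginx --image=nginx:1.14-alpine

- (2) Expose the port

Run the following command on the master node to expose port 80:

kubectl expose deployment nginx --port=80 --type=NodePort

- (3) Check the service status

Run the following commands on the master node: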

kubectl get pods

kubectl get service

The output looks like this:

[root@master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-65c4bffcb6-jdd64 1/1 Running 0 2m41s

[root@master ~]# kubectl get service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 34m

nginx NodePort 10.97.150.219 <none> 80:30483/TCP 2m6s

[root@master ~]#

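The service can now be reached at the master's IP address plus the node port shown above, 30483. If the Nginx welcome page loads, the cluster is deployed and ready to use.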
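
The node port is assigned at random, so it will differ from run to run. The sketch below pulls it out of the kubectl get service table; the function name node_port_of is hypothetical, and it assumes the PORT(S) column has the port:nodePort/protocol form shown above.

```shell
# Read `kubectl get service` output on stdin and print the node port of the named service.
node_port_of() {
  awk -v svc="$1" '$1 == svc {
    split($5, p, "[:/]")    # "80:30483/TCP" -> p[1]=80, p[2]=30483, p[3]=TCP
    print p[2]
  }'
}

# Example:
#   port=$(kubectl get service | node_port_of nginx)
#   curl "http://192.168.2.150:$port"
```

kubectl can also print the value directly with kubectl get service nginx -o jsonpath='{.spec.ports[0].nodePort}'.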

III. Installing the dashboard

3.1 Download the YAML file and install

Download the YAML file from the kubernetes/dashboard GitHub repository:

https://github.com/kubernetes/dashboard/blob/master/aio/deploy/recommended.yaml

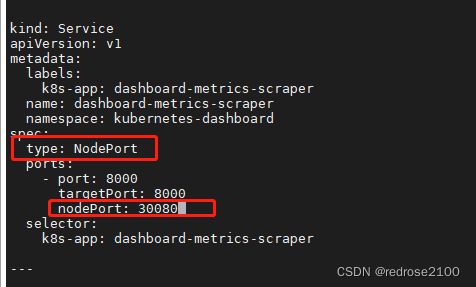

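Then edit the YAML file and add two lines to the kubernetes-dashboard Service: type: NodePort under spec, and nodePort: 30080 under its port entry.

Create the resources with the following command: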

[root@master ~]# kubectl apply -f recommended.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

[root@master ~]#

The following command confirms that the pods and services are up:

[root@master ~]# kubectl get pod,svc -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

pod/dashboard-metrics-scraper-c45b7869d-n67mp 1/1 Running 0 95s

pod/kubernetes-dashboard-79b5779bf4-6srmq 1/1 Running 0 95s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/dashboard-metrics-scraper ClusterIP 10.96.75.37 <none> 8000/TCP 95s

service/kubernetes-dashboard NodePort 10.109.128.90 <none> 443:30080/TCP 95s

[root@master ~]#

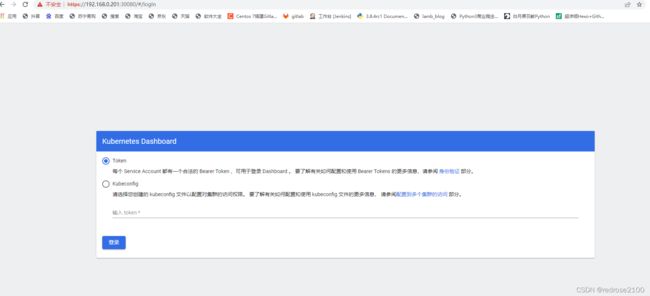

Then open https://192.168.2.150:30080/ in a browser, where the IP address is that of the master node.

3.2 Access the dashboard in a browser

Create an access account and obtain its token as follows:

# create the account

[root@master ~]# kubectl create serviceaccount dashboard-admin -n kubernetes-dashboard

serviceaccount/dashboard-admin created

[root@master ~]#

# grant permissions

[root@master ~]# kubectl create clusterrolebinding dashboard-admin-rb --clusterrole=cluster-admin --serviceaccount=kubernetes-dashboard:dashboard-admin

clusterrolebinding.rbac.authorization.k8s.io/dashboard-admin-rb created

[root@master ~]#

# get the token

[root@master ~]# kubectl get secrets -n kubernetes-dashboard | grep dashboard-admin

dashboard-admin-token-748s2 kubernetes.io/service-account-token 3 3m16s

[root@master ~]#

[root@master ~]# kubectl describe secrets dashboard-admin-token-748s2 -n kubernetes-dashboard

Name: dashboard-admin-token-748s2

Namespace: kubernetes-dashboard

Labels: <none>

Annotations: kubernetes.io/service-account.name: dashboard-admin

kubernetes.io/service-account.uid: 865e01fd-0ef5-4f42-9dad-f49116ecce0a

Type: kubernetes.io/service-account-token

Data

====

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IlMzcHVkSWhFclhxc0Eydzh0SXc4Q0ltQnVGc0xaZElsR0gtNzBUalBKSmsifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tNzQ4czIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiODY1ZTAxZmQtMGVmNS00ZjQyLTlkYWQtZjQ5MTE2ZWNjZTBhIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmVybmV0ZXMtZGFzaGJvYXJkOmRhc2hib2FyZC1hZG1pbiJ9.tTuwAikwMmUr9IL781PPTp9qx6jz7OXox8H3XvtYqtKBX7JZAM0lfkoMmtt8QPGvEgGhMO0Ze25uNjiRG5BxwZ5f7mzIWgpvxe5IIxrVYNl-xNu4Iml3cSzsHKrwXHpdhIn_lqEkqA0_060t4IRW1MiTKqnN_53q8UPu_YXaby4JchhHA-pmRh2qYdY7pVcNsDFc1W0Eo6UNG9Jw3Y3NNdTJAT-w5dzTFfLhV5TpG6STBihA8Aq9oLgx_88DHeiHA4xrGtWkCG8Bwx60bpVIwOOVMwg9iyW89ES8Zl5CcQvBOWNhnfIAq6d2OPNInSoHV_Xv1OSsV-Sex0EImKFssw

ca.crt: 1066 bytes

namespace: 20 bytes

[root@master ~]#
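
Copying the long token out of the describe output by hand is error-prone. The sketch below extracts just the token value; the function name extract_token is hypothetical, and it assumes the token: line format shown above.

```shell
# Read `kubectl describe secrets ...` output on stdin and print only the token value.
extract_token() {
  awk '$1 == "token:" { print $2 }'
}

# Example (the secret name suffix is random, so look it up first):
#   secret=$(kubectl get secrets -n kubernetes-dashboard | awk '/^dashboard-admin-token/ { print $1 }')
#   kubectl describe secrets "$secret" -n kubernetes-dashboard | extract_token
```

Paste the printed value into the Token field on the dashboard login page.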