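Deep Learning: Flower Recognition with a Convolutional Neural Network

Following the same approach as the Cats vs. Dogs task, this post recognizes flowers with a CNN I built myself and with the pre-trained VGG16.

1. Importing libraries

Note: some of the imported libraries may never be used. It is a habit left over from my ACM contest days: import first, and worry about whether it is needed later.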

import tensorflow as tf

from tensorflow import keras

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense,Conv2D,Flatten,Dropout,MaxPooling2D

from tensorflow.keras.preprocessing.image import ImageDataGenerator

import os,PIL

import numpy as np

import matplotlib.pyplot as plt

import pathlib

from PIL import ImageFile

ImageFile.LOAD_TRUNCATED_IMAGES = True

2. Downloading the data

# Download the dataset

dataset_url = "https://storage.googleapis.com/download.tensorflow.org/example_images/flower_photos.tgz"

dataset_dir = tf.keras.utils.get_file(fname = 'flower_photos',origin=dataset_url,untar=True,cache_dir= 'E:/Deep-Learning/flowers')

dataset_dir = pathlib.Path(dataset_dir)

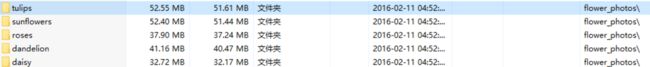

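The image above shows what the files look like after downloading.

For convenience, I split the dataset by hand into a training set and a test set at a ratio of 8:2 (I did not know how to do it in code).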

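After the split, the dataset folder contains a train and a test directory, each with one subfolder per class: daisy, dandelion, roses, sunflowers, and tulips.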
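The manual 8:2 split could also be done in code. Below is a minimal sketch, not what I actually ran: the function name `split_dataset`, the destination folder, and the fixed seed are all my own assumptions. It copies files rather than moving them, and it expects a destination that is separate from the source folder.

```python
import random
import shutil
from pathlib import Path


def split_dataset(src_dir, dst_dir, train_ratio=0.8, seed=0):
    """Copy every class folder in src_dir into dst_dir/train and dst_dir/test.

    Hypothetical helper: returns {class_name: (n_train, n_test)}.
    """
    src_dir, dst_dir = Path(src_dir), Path(dst_dir)
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    counts = {}
    # Only subfolders are treated as classes; loose files (e.g. a license) are skipped.
    for class_dir in sorted(p for p in src_dir.iterdir() if p.is_dir()):
        files = sorted(f for f in class_dir.iterdir() if f.is_file())
        rng.shuffle(files)
        n_train = int(len(files) * train_ratio)
        for subset, subset_files in (("train", files[:n_train]),
                                     ("test", files[n_train:])):
            target = dst_dir / subset / class_dir.name
            target.mkdir(parents=True, exist_ok=True)
            for f in subset_files:
                shutil.copy2(f, target / f.name)
        counts[class_dir.name] = (n_train, len(files) - n_train)
    return counts
```

Calling `split_dataset(source_folder, output_folder)` on the downloaded folder would produce the same train/test layout that the rest of this post assumes; both folder names here are placeholders.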

3. Counting the data

# Store the path of each class folder under train and test in a variable

train_daisy = os.path.join(dataset_dir,"train","daisy")

train_dandelion = os.path.join(dataset_dir,"train","dandelion")

train_roses = os.path.join(dataset_dir,"train","roses")

train_sunflowers = os.path.join(dataset_dir,"train","sunflowers")

train_tulips = os.path.join(dataset_dir,"train","tulips")

test_daisy = os.path.join(dataset_dir,"test","daisy")

test_dandelion = os.path.join(dataset_dir,"test","dandelion")

test_roses = os.path.join(dataset_dir,"test","roses")

test_sunflowers = os.path.join(dataset_dir,"test","sunflowers")

test_tulips = os.path.join(dataset_dir,"test","tulips")

# Store the training set and test set directories in variables

train_dir = os.path.join(dataset_dir,"train")

test_dir = os.path.join(dataset_dir,"test")

# Count the images in the training set and the test set

train_daisy_num = len(os.listdir(train_daisy))

train_dandelion_num = len(os.listdir(train_dandelion))

train_roses_num = len(os.listdir(train_roses))

train_sunflowers_num = len(os.listdir(train_sunflowers))

train_tulips_num = len(os.listdir(train_tulips))

train_all = train_tulips_num+train_daisy_num+train_dandelion_num+train_roses_num+train_sunflowers_num

test_daisy_num = len(os.listdir(test_daisy))

test_dandelion_num = len(os.listdir(test_dandelion))

test_roses_num = len(os.listdir(test_roses))

test_sunflowers_num = len(os.listdir(test_sunflowers))

test_tulips_num = len(os.listdir(test_tulips))

test_all = test_tulips_num+test_daisy_num+test_dandelion_num+test_roses_num+test_sunflowers_num
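The per-class variables above get repetitive. As an alternative, here is a small sketch that counts files with a loop; `count_images` is a hypothetical helper name, and it assumes every subfolder of the given directory is one class containing only image files.

```python
import os


def count_images(root_dir):
    """Return {class_name: number of files} for each subfolder of root_dir."""
    counts = {}
    for name in sorted(os.listdir(root_dir)):
        class_dir = os.path.join(root_dir, name)
        if os.path.isdir(class_dir):  # skip loose files next to the class folders
            counts[name] = len(os.listdir(class_dir))
    return counts


# Possible usage with the directories defined above:
# train_counts = count_images(train_dir)
# train_all = sum(train_counts.values())
```

The totals computed this way should match `train_all` and `test_all` as long as the folders contain nothing but images.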

4. Setting the hyperparameters

batch_size = 32

epochs = 10

height = 180

width = 180

5. Data preprocessing

# Normalization: scale pixel values from [0, 255] to [0, 1]

train_generator = tf.keras.preprocessing.image.ImageDataGenerator(rescale=1.0/255)

test_generator = tf.keras.preprocessing.image.ImageDataGenerator(rescale=1.0/255)

# Set the batch size, the directory, shuffling, and the target image size

train_data_gen = train_generator.flow_from_directory(
    batch_size=batch_size,
    directory=train_dir,
    shuffle=True,
    target_size=(height,width),
    class_mode="categorical")

test_data_gen = test_generator.flow_from_directory(
    batch_size=batch_size,
    directory=test_dir,
    shuffle=True,
    target_size=(height,width),
    class_mode="categorical")
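With `class_mode="categorical"`, the generator returns one-hot labels, and `flow_from_directory` maps the subfolder names to class indices in alphanumeric order. The following is only a plain-Python sketch of that mapping to show what the labels look like; the two helper names are hypothetical and this is not the Keras implementation.

```python
def class_indices_from_names(class_names):
    """Map folder names to integer indices in sorted (alphanumeric) order."""
    return {name: i for i, name in enumerate(sorted(class_names))}


def one_hot(index, num_classes):
    """Return a one-hot list with a 1.0 at the given index."""
    vec = [0.0] * num_classes
    vec[index] = 1.0
    return vec


classes = ["tulips", "daisy", "sunflowers", "roses", "dandelion"]
indices = class_indices_from_names(classes)
label = one_hot(indices["roses"], len(classes))
print(indices["roses"], label)  # 2 [0.0, 0.0, 1.0, 0.0, 0.0]
```

The real mapping can be read from `train_data_gen.class_indices` after the generator is created.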

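6. Building and training the model

The model consists of three convolution + pooling blocks, followed by Dropout, Flatten, and two fully connected layers.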

model = tf.keras.Sequential([
    tf.keras.layers.Conv2D(16,3,padding="same",activation="relu",input_shape=(height,width,3)),
    tf.keras.layers.MaxPooling2D(),
    tf.keras.layers.Conv2D(32,3,padding="same",activation="relu"),
    tf.keras.layers.MaxPooling2D(),
    tf.keras.layers.Conv2D(64,3,padding="same",activation="relu"),
    tf.keras.layers.MaxPooling2D(),
    tf.keras.layers.Dropout(0.5),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(128,activation="relu"),
    tf.keras.layers.Dense(5,activation='softmax')
])
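To get a feel for the size of this network, the feature-map sizes and parameter counts can be worked out by hand. The sketch below does the arithmetic in plain Python, assuming the default 2x2 max pooling with stride 2 (which floors odd sizes) and 3x3 kernels with "same" padding; the helper names are my own.

```python
def conv_params(kernel, in_channels, out_channels):
    """Weights plus biases of a Conv2D layer with a square kernel."""
    return kernel * kernel * in_channels * out_channels + out_channels


def dense_params(in_units, out_units):
    """Weights plus biases of a Dense layer."""
    return in_units * out_units + out_units


size, channels = 180, 3  # input is height x width x 3
total = 0
for filters in (16, 32, 64):
    total += conv_params(3, channels, filters)  # "same" padding keeps the size
    channels = filters
    size //= 2  # 2x2 max pooling halves the size: 180 -> 90 -> 45 -> 22

flat = size * size * channels  # units after Flatten
total += dense_params(flat, 128) + dense_params(128, 5)
print(size, flat, total)  # 22 30976 3989285
```

Almost all of the roughly 4 million parameters sit in the first Dense layer, which is one reason a model like this can overfit a small dataset. `model.summary()` should report the same numbers.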

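# The generators yield one-hot labels (class_mode="categorical") and the last layer
# already applies softmax, so use categorical cross-entropy on probabilities.
model.compile(optimizer="adam",
              loss=tf.keras.losses.CategoricalCrossentropy(),
              metrics=["acc"])

# model.fit accepts generators directly; fit_generator has been deprecated since TensorFlow 2.1.
history = model.fit(train_data_gen,
                    steps_per_epoch=train_all//batch_size,
                    epochs=epochs,
                    validation_data=test_data_gen,
                    validation_steps=test_all//batch_size)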
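Because the generators use `class_mode="categorical"`, the labels are one-hot vectors, and the last layer outputs softmax probabilities. The plain-Python sketch below shows categorical cross-entropy on such outputs, and what would happen if those probabilities were treated as logits and passed through softmax a second time. Whether a given TensorFlow version actually does that with `from_logits=True` is an assumption on my part; behaviour may differ between releases.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of numbers."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    s = sum(exps)
    return [e / s for e in exps]


def categorical_crossentropy(one_hot_label, probs, eps=1e-7):
    """Cross-entropy between a one-hot label and predicted probabilities."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(one_hot_label, probs))


label = [1, 0, 0, 0, 0]
probs = softmax([2.0, 0.5, 0.1, 0.1, 0.1])  # made-up model output

loss_on_probs = categorical_crossentropy(label, probs)
# Treating probabilities as logits applies softmax a second time and flattens them.
loss_double_softmax = categorical_crossentropy(label, softmax(probs))

# Even a perfect prediction cannot go below log(1 + 4/e) after a second softmax.
floor = categorical_crossentropy(label, softmax([1.0, 0.0, 0.0, 0.0, 0.0]))
print(loss_on_probs < loss_double_softmax, round(floor, 4))  # True 0.9048
```

If the loss stays stuck around 1 while accuracy keeps improving, a mismatch like this is one possible cause worth checking.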

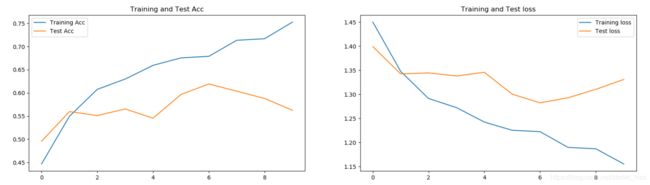

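The results are shown below:

The model overfits. With epochs set to 50, the results are as follows:

Test accuracy is about 10% higher than with 10 epochs, but it is still low, overfitting remains severe, and the run takes a very long time.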
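One simple way to put a number on overfitting is to compare training and validation accuracy epoch by epoch. The sketch below assumes a dictionary shaped like `history.history` with the keys `acc` and `val_acc`; the helper names are hypothetical, and the values are made up purely for illustration, not taken from my runs.

```python
def generalization_gap(history):
    """Per-epoch difference between training and validation accuracy."""
    return [round(tr - va, 4) for tr, va in zip(history["acc"], history["val_acc"])]


def best_epoch(history):
    """1-based epoch with the highest validation accuracy."""
    val = history["val_acc"]
    return max(range(len(val)), key=val.__getitem__) + 1


# Made-up numbers for illustration only
example_history = {"acc": [0.5, 0.7, 0.9], "val_acc": [0.5, 0.6, 0.58]}
print(generalization_gap(example_history))  # [0.0, 0.1, 0.32]
print(best_epoch(example_history))          # 2
```

A gap that keeps growing while validation accuracy stalls suggests that more epochs will not help and that regularization or more data is needed.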

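7. Data augmentation

train_generator = tf.keras.preprocessing.image.ImageDataGenerator(
    rescale=1.0/255,
    rotation_range=45,        # random rotation of up to 45 degrees
    width_shift_range=.15,    # random horizontal shift of up to 15% of the width
    height_shift_range=.15,   # random vertical shift of up to 15% of the height
    horizontal_flip=True,     # random horizontal flip
    zoom_range=0.5)           # random zoom, factor drawn from [0.5, 1.5]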
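To make it clearer what the augmentation does to a single image, here is a simplified NumPy sketch of two of these transforms: a random horizontal flip and a random shift. It is not the Keras implementation. The function name is hypothetical, and the shift wraps pixels around the edge with `np.roll`, whereas `ImageDataGenerator` fills the gap using its `fill_mode` (nearest by default).

```python
import numpy as np


def random_flip_and_shift(image, max_shift_frac=0.15, rng=None):
    """Randomly flip an (H, W, C) image left-right and shift it by up to max_shift_frac."""
    if rng is None:
        rng = np.random.default_rng()
    if rng.random() < 0.5:
        image = image[:, ::-1, :]  # reverse the width axis
    h, w, _ = image.shape
    max_dy, max_dx = int(h * max_shift_frac), int(w * max_shift_frac)
    dy = int(rng.integers(-max_dy, max_dy + 1))
    dx = int(rng.integers(-max_dx, max_dx + 1))
    # Wrap-around shift: a simplification compared with Keras' fill modes
    return np.roll(image, (dy, dx), axis=(0, 1))


image = np.arange(180 * 180 * 3, dtype=np.float32).reshape(180, 180, 3) / 255.0
augmented = random_flip_and_shift(image, rng=np.random.default_rng(0))
print(augmented.shape)  # (180, 180, 3)
```

Each epoch then sees a slightly different version of every training image, which is why augmentation tends to reduce overfitting.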

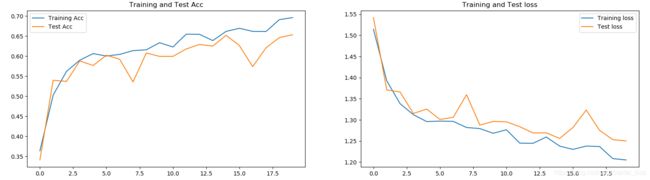

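The results are shown below:

With epochs set to 20, overfitting is reduced.

loss: 1.2052 - acc: 0.6962 - val_loss: 1.2502 - val_acc: 0.6534

# Validation accuracy is about 65% at 20 epochs and about 66% at 50 epochs, so the extra epochs bring little improvement.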

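8. Transfer learning

Train on the same data using VGG16, a network that someone else has already trained (here with ImageNet weights).

# Load the VGG16 model without its classification head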

conv_base = tf.keras.applications.VGG16(weights='imagenet',include_top = False)

# Freeze the convolutional base so its weights are not updated during training

conv_base.trainable = False

# Build the model

model = tf.keras.Sequential()

model.add(conv_base)

model.add(tf.keras.layers.GlobalAveragePooling2D())

model.add(tf.keras.layers.Dense(128,activation='relu'))

# Each image belongs to exactly one of five classes, so use softmax rather than sigmoid
model.add(tf.keras.layers.Dense(5,activation='softmax'))
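Two pieces of this head are worth a closer look: global average pooling, and the choice of output activation. The NumPy sketch below assumes 180x180 inputs, for which the VGG16 base would produce a 5x5x512 feature map (five 2x2 poolings: 180, 90, 45, 22, 11, 5). The feature values and logits are random or made up, and the helper names are my own.

```python
import numpy as np


def global_average_pool(features):
    """Average over the spatial axes: (batch, H, W, C) -> (batch, C)."""
    return features.mean(axis=(1, 2))


def softmax(z):
    """Row-wise softmax; every row sums to 1."""
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def sigmoid(z):
    """Element-wise sigmoid; rows do not need to sum to 1."""
    return 1.0 / (1.0 + np.exp(-z))


# Stand-in for the conv_base output on a batch of two images (assumed shape)
features = np.random.default_rng(0).normal(size=(2, 5, 5, 512))
pooled = global_average_pool(features)
print(pooled.shape)  # (2, 512)

logits = np.array([[2.0, 1.0, 0.1, -1.0, 0.5]])  # made-up scores for five classes
softmax_total = float(softmax(logits).sum())
sigmoid_total = float(sigmoid(logits).sum())
```

Softmax yields one probability distribution over the five classes, so its outputs sum to 1. Sigmoid scores each class independently, so the outputs can sum to well over 1, which fits multi-label problems better than this single-label one.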