Deep learning project: facial expression recognition in Python with PyTorch

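Contents

- Preface
- I. What is deep learning?
- II. Data preprocessing
  - 1. Data classification
  - 2. Code
- III. Building and training the model
  - 1. Model and code
  - 2. Usage
- IV. Real-time recognition
- Summary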

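Preface

This project began as one component of an earlier course project. I drew on other people's blog posts and have organized the material here.

Required libraries: opencv, pandas, pytorch, numpy, dlib (show_log.py also uses matplotlib).

The project is based on deep learning.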

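I. What is deep learning?

Deep learning (DL) is a newer research direction within machine learning (ML). It was introduced to bring machine learning closer to its original goal, artificial intelligence (AI).

Intuitively, deep learning builds an artificial neural network loosely modeled on the human brain. The network learns from existing data by repeatedly adjusting the parameters of its neurons until its outputs fit that data well, which lets it solve abstract real-world problems.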

二、数据的预处理

1.数据分类

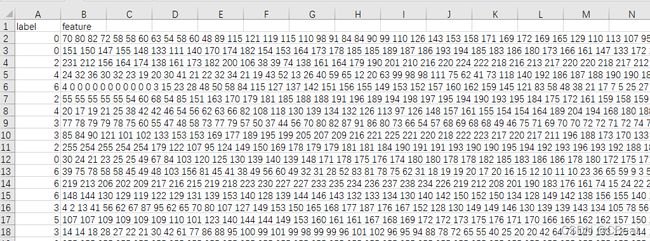

我们所用到的数据都存放在train.csv文件里面,文件的结构如下:

其中,lable是单张图片的标签,不同的值对应不同的表情:

| value | emotion |
|---|---|
| 0 | angry |
| 1 | disgust |
| 2 | fear |
| 3 | happy |
| 4 | sad |
| 5 | surprise |
| 6 | neutral |

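feature is the string encoding of a single image. Each string consists of 48 * 48 = 2304 space-separated values (the code reshapes them to 48 x 48), and each value is the grayscale intensity of one pixel.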
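To make the encoding concrete, here is a minimal sketch of decoding one feature string into a square grayscale image. The helper name decode_pixels and the toy 2 x 2 string are assumptions for illustration and are not part of the project; the side length is inferred from the number of values rather than hard-coded.

```python
import math

import numpy as np


def decode_pixels(feature: str) -> np.ndarray:
    """Decode a space-separated pixel string into a square uint8 image.

    Hypothetical helper for illustration; the project does this inline.
    """
    values = np.array([int(v) for v in feature.split()], dtype=np.uint8)
    side = math.isqrt(values.size)
    if side * side != values.size:
        raise ValueError('pixel count is not a perfect square')
    return values.reshape((side, side))


# Toy 2 x 2 example; a real row in train.csv holds many more values
img = decode_pixels('0 64 128 255')
print(img.shape)
```

The same call on a real row would give an array that can be passed to cv.imwrite directly, since it is already 8-bit.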

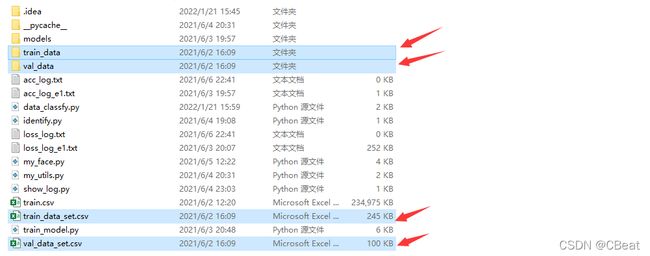

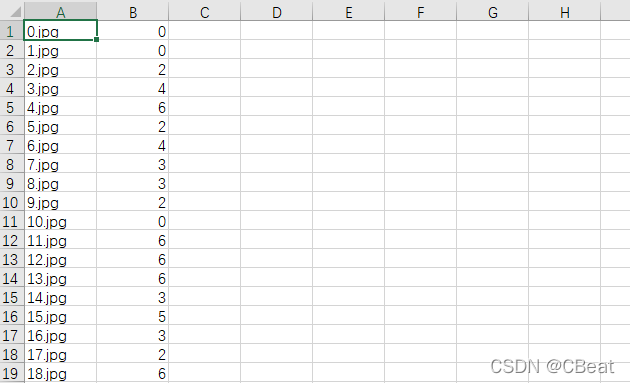

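In the preprocessing stage, we extract every image from train.csv and split the images into train_data and val_data, which serve as the training set and the validation set. Each set is saved to its own folder.

We also create train_data_set.csv and val_data_set.csv, which map each file name to that file's label.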
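The split is a positional slice of the rows. The sketch below uses a plain list in place of the DataFrame (the split_rows name and the toy data are assumptions for illustration) and shows that a half-open slice gives two sets that do not share a row.

```python
def split_rows(rows, train_ratio=0.7):
    """Split rows by position into (train, val) without overlap."""
    train_num = int(len(rows) * train_ratio)
    # The first slice excludes index train_num, the second starts at it
    return rows[:train_num], rows[train_num:]


rows = list(range(10))  # stand-in for 10 rows of train.csv
train, val = split_rows(rows)
print(len(train), len(val))
```

With pandas, the equivalent positional slice is iloc; loc slices by label and includes the end label, so the boundary row would appear in both sets.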

2. Code

"""
Split the raw data in 'train.csv'.
Images are written to the train_data and val_data folders, and
train_data_set.csv and val_data_set.csv are created to label them.
"""
import os

import cv2 as cv
import numpy as np
import pandas as pd


def classify():
    """Split the data into a training set and a validation set and save both."""
    # Load the raw data: one row per image, with a label and a pixel string
    path = './train.csv'
    all_data = pd.read_csv(path)
    # Use the first 70% of the rows as the training set
    train_num = int(all_data.shape[0] * 0.7)
    # iloc slices by position and excludes the end, so the sets do not overlap
    # (loc[0:train_num] includes row train_num, which would land in both sets)
    train_data = all_data.iloc[:train_num]
    val_data = all_data.iloc[train_num:]
    print(train_data)
    save_img_and_label(train_data, './train_data', './train_data_set.csv')
    save_img_and_label(val_data, './val_data', './val_data_set.csv')


def save_img_and_label(data: pd.DataFrame, img_save_path, csv_save_path):
    """Save the images in data and record the label of each file."""
    os.makedirs(img_save_path, exist_ok=True)
    img_name_list = []
    img_label_list = []
    for i in range(len(data)):
        # Build the file name
        img_name = '{}.jpg'.format(i)
        # Read the image (stored as a string) and its label
        img_str = data['feature'].iloc[i]
        img_label = str(data['label'].iloc[i])
        # Split the string to get the grayscale value of each pixel
        img_list = img_str.split(' ')
        # Convert to a 48 x 48 ndarray, cast to 8-bit for cv.imwrite, and save
        img = np.array(img_list, dtype=np.int32).astype(np.uint8).reshape((48, 48))
        cv.imwrite(img_save_path + '/' + img_name, img)
        img_name_list.append(img_name)
        img_label_list.append(img_label)
    # Save the file name -> label mapping
    save_data = pd.DataFrame(img_label_list, index=img_name_list)
    save_data.to_csv(csv_save_path, header=False)


# Run the split
if __name__ == '__main__':
    classify()
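The label files written by to_csv(..., header=False) contain one "file name,label" pair per line. As a rough sketch of that layout, the snippet below parses such content with the standard csv module; the sample rows and the load_label_map name are made up for illustration.

```python
import csv
import io


def load_label_map(csv_file):
    """Read 'file name,label' rows (no header) into a dict."""
    return {name: int(label) for name, label in csv.reader(csv_file)}


# Made-up sample in the same layout as train_data_set.csv
sample = io.StringIO('0.jpg,3\n1.jpg,0\n2.jpg,6\n')
label_map = load_label_map(sample)
print(label_map['0.jpg'])
```

For a real file, pass an open file object instead of the StringIO, for example open('train_data_set.csv', newline='').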

III. Building and training the model

1. Model and code

The code is as follows.

File name: train_model.py

"""
Train the model.
"""
import cv2 as cv
import numpy as np
import pandas as pd
import torch
import torch.nn as nn
import torch.utils.data as data
from torch import optim


class FaceDateset(data.Dataset):
    """Dataset that loads the face images and their labels."""

    def __init__(self, img_dir, data_set_path):
        self.img_dir = img_dir
        self.label_path = data_set_path
        # Read the csv: column 0 holds file names, column 1 holds labels
        img_names = pd.read_csv(data_set_path, header=None, usecols=[0])
        img_labels = pd.read_csv(data_set_path, header=None, usecols=[1])
        # Convert to 1-D numpy arrays
        self.img_names = np.array(img_names)[:, 0]
        self.img_labels = np.array(img_labels)[:, 0]

    # The T_co return annotation is dropped: it is a private typing helper
    # of torch.utils.data and is not needed here
    def __getitem__(self, index):
        # Build the image path
        img_path = self.img_dir + '/' + self.img_names[index]
        # Read the image
        img = cv.imread(img_path)
        # Convert to grayscale
        img = cv.cvtColor(img, cv.COLOR_BGR2GRAY)
        # Histogram equalization
        img = cv.equalizeHist(img)
        # Reshape to (channels, height, width) and scale the pixels to [0, 1]
        img = img.reshape((1, 48, 48)) / 255.0
        # Convert to a float tensor
        img_torch = torch.from_numpy(img).type('torch.FloatTensor')
        label = self.img_labels[index]
        return img_torch, label

    def __len__(self):
        return self.img_names.shape[0]


def gaussian_weights_init(m):
    """Initialize convolution weights from a Gaussian distribution."""
    classname = m.__class__.__name__
    if classname.find('Conv') != -1:
        m.weight.data.normal_(0.0, 0.04)


def validate(model, dataset, batch_size):
    """Compute the model's accuracy on the validation set."""
    val_loader = data.DataLoader(dataset, batch_size)
    result, num = 0.0, 0
    # Evaluation mode disables dropout and uses the running batch-norm statistics
    model.eval()
    with torch.no_grad():
        for images, labels in val_loader:
            pred = model(images)
            pred = np.argmax(pred.numpy(), axis=1)
            labels = labels.numpy()
            result += np.sum(pred == labels)
            num += len(images)
    acc = result / num
    return acc

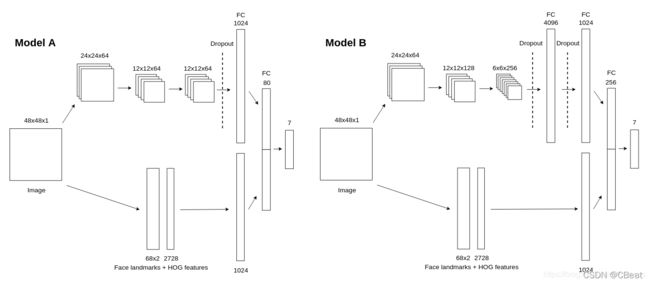

class FaceCNN(nn.Module):
    """CNN for expression classification."""

    def __init__(self):
        super().__init__()
        # First convolution + pooling block
        self.conv1 = nn.Sequential(
            nn.Conv2d(in_channels=1, out_channels=64, kernel_size=3, stride=1, padding=1),  # convolution
            nn.BatchNorm2d(num_features=64),  # batch normalization
            nn.RReLU(inplace=True),  # activation
            # output: (batch_size, 64, 24, 24)
            nn.MaxPool2d(kernel_size=2, stride=2),  # max pooling
        )
        # Second convolution + pooling block
        self.conv2 = nn.Sequential(
            nn.Conv2d(in_channels=64, out_channels=128, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(num_features=128),
            nn.RReLU(inplace=True),
            # output: (batch_size, 128, 12, 12)
            nn.MaxPool2d(kernel_size=2, stride=2),
        )
        # Third convolution + pooling block
        self.conv3 = nn.Sequential(
            nn.Conv2d(in_channels=128, out_channels=256, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(num_features=256),
            nn.RReLU(inplace=True),
            # output: (batch_size, 256, 6, 6)
            nn.MaxPool2d(kernel_size=2, stride=2),
        )
        # Parameter initialization
        self.conv1.apply(gaussian_weights_init)
        self.conv2.apply(gaussian_weights_init)
        self.conv3.apply(gaussian_weights_init)
        # Fully connected layers; the last one outputs one score per expression
        self.fc = nn.Sequential(
            nn.Dropout(p=0.2),
            nn.Linear(in_features=256 * 6 * 6, out_features=4096),
            nn.RReLU(inplace=True),
            nn.Dropout(p=0.5),
            nn.Linear(in_features=4096, out_features=1024),
            nn.RReLU(inplace=True),
            nn.Linear(in_features=1024, out_features=256),
            nn.RReLU(inplace=True),
            nn.Linear(in_features=256, out_features=7),
        )

    def forward(self, x):
        """Forward pass."""
        # print(x.shape)
        x = self.conv1(x)
        x = self.conv2(x)
        x = self.conv3(x)
        # Flatten to (batch_size, 256 * 6 * 6)
        x = x.view(x.shape[0], -1)
        y = self.fc(x)
        return y


def train(lr=0.01, weight_decay=0, batch_size=128, epochs=100):
    """Train the model."""
    # Load the data
    train_data_set = FaceDateset('train_data', 'train_data_set.csv')
    val_data_set = FaceDateset('val_data', 'val_data_set.csv')
    train_loader = data.DataLoader(train_data_set, batch_size)
    # Build the network
    model = FaceCNN()
    # Loss function
    loss_fun = nn.CrossEntropyLoss()
    # Optimizer
    optimizer = optim.Adam(model.parameters(), lr=lr, weight_decay=weight_decay)
    # Log the loss values and the accuracy
    with open('loss_log.txt', 'w') as loss_log, open('acc_log.txt', 'w') as acc_log:
        for epoch in range(epochs):
            print('Epoch {} started'.format(epoch))
            loss_rate = 0
            # Training mode (validate() switches the model to evaluation mode)
            model.train()
            for images, labels in train_loader:
                # Reset the gradients
                optimizer.zero_grad()
                # Forward pass
                out_put = model(images)
                # Compute the loss
                loss_rate = loss_fun(out_put, labels)
                # Backward pass
                loss_rate.backward()
                optimizer.step()
                # Log the loss of each batch (the 'iteration' axis in show_log.py)
                loss_log.write('{}\n'.format(loss_rate.item()))
                loss_log.flush()
            print('Loss after epoch {}: {}'.format(epoch, loss_rate.item()))
            # Every 5 epochs: compute and log the accuracy, then save the model
            if epoch % 5 == 0:
                acc = validate(model, val_data_set, batch_size)
                print('Accuracy after epoch {}: {}'.format(epoch, acc))
                acc_log.write('{}, {}\n'.format(epoch, acc))
                acc_log.flush()
                # The ./models folder must already exist
                torch.save(model, './models/train_{}.pkl'.format(epoch))


if __name__ == '__main__':
    train()
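The value in_features=256 * 6 * 6 in the first fully connected layer follows from the layer settings: a 3 x 3 convolution with stride 1 and padding 1 keeps the spatial size, and each 2 x 2 max pool with stride 2 halves it. The sketch below checks that arithmetic with the usual output-size formula, floor((n + 2p - k) / s) + 1, starting from the 48 x 48 input used in the code. The helper name out_size is an assumption for illustration.

```python
def out_size(n, kernel, stride, padding=0):
    """Spatial output size of a convolution or pooling layer."""
    return (n + 2 * padding - kernel) // stride + 1


size = 48  # input images are 48 x 48
for channels in (64, 128, 256):
    size = out_size(size, kernel=3, stride=1, padding=1)  # convolution
    size = out_size(size, kernel=2, stride=2)             # max pooling
    print(channels, size)

# Number of values fed to the first nn.Linear layer
flat_features = 256 * size * size
print(flat_features)
```

If the input size or the number of pooling layers changes, in_features has to be recomputed the same way.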

File name: show_log.py

"""
Visualize the results.
"""
import matplotlib.pyplot as plt
import numpy as np


def cvt_file2np(log_file_path):
    """Read a log file and convert it to numpy arrays [x, y]."""
    log = np.genfromtxt(log_file_path, delimiter=',')
    if log.ndim != 1:
        # Two columns (epoch, accuracy)
        x = np.array(log[:, 0])
        y = np.array(log[:, 1])
    else:
        # One column (loss): use the line index as x
        length = log.shape[0]
        x = np.arange(0, length)
        y = log
    return [x, y]


acc_data = cvt_file2np('acc_log.txt')
loss_data = cvt_file2np('loss_log.txt')
# Add a small offset so that log10 is defined even if a loss value is 0
loss_data[1] = loss_data[1] + 0.0000001
loss_data[1] = np.log10(loss_data[1])

# Left panel: validation accuracy per epoch
plt.subplot(121)
plt.plot(acc_data[0], acc_data[1])
plt.title('acc')
plt.xlabel('epoch')
plt.ylabel('acc')

# Right panel: log10 of the training loss
plt.subplot(122)
plt.plot(loss_data[0], loss_data[1])
plt.title('log10(loss)')
plt.xlabel('iteration')
plt.ylabel('log10(loss)')
plt.show()

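2. Usage

First run train_model.py to train the model. Training runs on the CPU here, so it takes a long time.

The model is saved to the models folder in the project root once every 5 epochs. Create this folder before training, because torch.save does not create missing directories.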

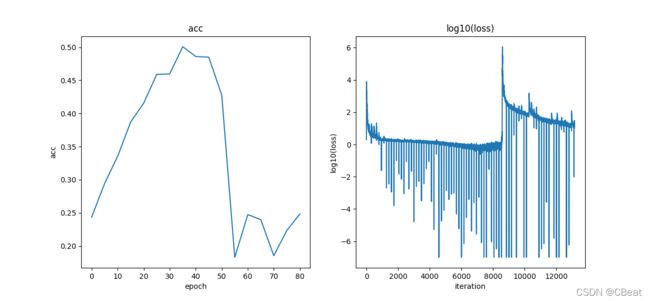

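After training finishes, run show_log.py to visualize the training results.

In this run, accuracy on the validation set was highest at epoch 35. After that the model began to overfit and the accuracy started to fall.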
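Picking the checkpoint can also be done programmatically. This is a minimal sketch, assuming the "epoch, acc" line format that train_model.py writes to acc_log.txt. The sample values are invented for illustration and are not results from the project, and the best_epoch name is hypothetical.

```python
def best_epoch(lines):
    """Return (epoch, acc) for the line with the highest accuracy."""
    records = []
    for line in lines:
        line = line.strip()
        if not line:
            continue
        epoch_str, acc_str = line.split(',')
        records.append((int(epoch_str), float(acc_str)))
    return max(records, key=lambda record: record[1])


# Invented sample in the same layout as acc_log.txt
sample = ['0, 0.25', '5, 0.48', '10, 0.55', '15, 0.53']
epoch, acc = best_epoch(sample)
print('models/train_{}.pkl'.format(epoch))
```

For a real log, pass the open file object, since iterating over it yields the lines.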

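IV. Real-time recognition

We use opencv to open the camera and recognize the expression of the face in each frame in real time.

(The course project did not need a GUI to display the image, so the gui_open variable controls whether the GUI is shown.)

The code is as follows: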

File name: my_face.py

"""
Expression recognition.
"""
import warnings

import cv2 as cv
import dlib
import numpy as np
import torch

from my_utils import get_face_target, labels, soft_max, cvt_R2N
from train_model import FaceCNN

warnings.filterwarnings("ignore")


class EmotionIdentify:
    """Class for expression recognition."""

    def __init__(self, gui_open=False):
        # Face detector from dlib
        self.detector = dlib.get_frontal_face_detector()
        # Load the CNN model. train_model.py pickles the whole model, so we
        # take its state_dict. Note: on PyTorch 2.6 and later, torch.load
        # needs weights_only=False to read such a file.
        self.model = FaceCNN()
        self.model.load_state_dict(torch.load('./models/train_35.pkl').state_dict())
        # Inference mode: disable dropout, use the running batch-norm statistics
        self.model.eval()
        # Create the cv2 capture object. Index 0 is the system's default
        # camera, which may be an external camera if one is connected.
        self.cap = cv.VideoCapture(0)
        # Set a capture property: propId 3 is the frame width, 480 the value
        self.cap.set(cv.CAP_PROP_FRAME_WIDTH, 480)
        # Most recent frame read from the camera
        self.img = None
        self.gui_open = gui_open

    def get_img_and_predict(self):
        """
        Recognize the expression in the current frame.
        :return: (img, emotion)
        """
        gray_img, left_top, right_bottom, result = None, None, None, None
        if self.cap.isOpened():
            # Read a frame from the camera
            flag, img = self.cap.read()
            if not flag:
                raise Exception('Failed to read a frame')
            self.img = img
            # OpenCV frames are BGR, so convert with COLOR_BGR2GRAY
            img_gray = cv.cvtColor(img, cv.COLOR_BGR2GRAY)
            # Detect the faces in the frame; returns a list of rectangles
            faces = self.detector(img_gray, 0)
            if len(faces) > 0:
                # Use only the first face
                face = faces[0]
                left_top = (face.left(), face.top())
                right_bottom = (face.right(), face.bottom())
                # Crop the face from the grayscale frame
                gray_img = get_face_target(img_gray, face)
                # Resize the crop to the network's input size
                gray_img = cv.resize(gray_img, (48, 48))
                # Equalize the histogram, as FaceDateset does for training images
                gray_img = cv.equalizeHist(gray_img)
                target_img = gray_img.reshape((1, 1, 48, 48)) / 255.0
                img_torch = torch.from_numpy(target_img).type('torch.FloatTensor')
                with torch.no_grad():
                    result = self.model(img_torch).numpy()
                result = soft_max(result)
            if self.gui_open:
                self.show(gray_img, left_top, right_bottom, result)
            return self.img, result
        raise Exception('No camera')

    def show(self, gray_img, left_top, right_bottom, result):
        """Display the frame."""
        show_img = self.img
        if gray_img is not None:
            cv.imshow('gray_img', gray_img)
            color = (255, 34, 78)
            # Draw the face box and the predicted expression
            cv.rectangle(show_img, left_top, right_bottom, color, 2)
            emotion = labels[np.argmax(result)]
            cv.putText(show_img, emotion, left_top, 0, 2, color, 2)
        cv.imshow('cv', show_img)
        cv.waitKey(20)


def my_test():
    ed = EmotionIdentify(gui_open=True)
    while True:
        _, result = ed.get_img_and_predict()
        print(cvt_R2N(result))
        # Press q in an OpenCV window to quit
        if cv.waitKey(10) & 0xFF == ord('q'):
            break
    ed.cap.release()
    cv.destroyAllWindows()


if __name__ == '__main__':
    my_test()
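The network expects input of shape (batch, channels, height, width) with values in [0, 1]. Below is a small numpy-only sketch of that last preprocessing step, using a synthetic 48 x 48 image in place of a real face crop. The to_network_input name is an assumption for illustration.

```python
import numpy as np


def to_network_input(gray_face):
    """Turn a 48 x 48 uint8 grayscale image into a (1, 1, 48, 48) float32 batch."""
    if gray_face.shape != (48, 48):
        raise ValueError('expected a 48 x 48 grayscale image')
    # Add batch and channel axes, then scale the pixels to [0, 1]
    return (gray_face.reshape((1, 1, 48, 48)) / 255.0).astype(np.float32)


# Synthetic all-white image standing in for a resized face crop
face = np.full((48, 48), 255, dtype=np.uint8)
batch = to_network_input(face)
print(batch.shape, batch.dtype)
```

torch.from_numpy(batch) would then give a float tensor that can be passed to the model without a further type conversion.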

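Helper module: my_utils.py

"""
Utility functions.
"""
import numpy as np

labels = {0: 'angry', 1: 'disgust', 2: 'fear', 3: 'happy',
          4: 'sad', 5: 'surprise', 6: 'neutral'}


def get_face_target(gray_img: np.ndarray, face):
    """
    Crop the face region out of gray_img.
    :param gray_img: grayscale frame of shape (height, width)
    :param face: dlib rectangle of the detected face
    :return: the cropped face image
    """
    # ndarray.shape is (rows, cols), that is (height, width)
    height, width = gray_img.shape[:2]
    x1, y1, x2, y2 = face.left(), face.top(), face.right(), face.bottom()

    def reset_point(point, max_size):
        """
        Clamp a coordinate so that it stays inside the image.
        :param point: the coordinate
        :param max_size: the image size along that axis
        :return: the coordinate clamped to [0, max_size]
        """
        return min(max(point, 0), max_size)

    # x is bounded by the width and y by the height
    x1 = reset_point(x1, width)
    x2 = reset_point(x2, width)
    y1 = reset_point(y1, height)
    y2 = reset_point(y2, height)
    return gray_img[y1:y2, x1:x2]


def soft_max(x):
    """Softmax: turn the network outputs into probabilities that sum to 1."""
    # Subtracting the maximum leaves the result unchanged and avoids overflow in exp
    exp_x = np.exp(x - np.max(x))
    sum_x = np.sum(exp_x)
    y = exp_x / sum_x
    return y


def cvt_R2N(result):
    """Convert the network's prediction into a score from 0 to 100."""
    if result is None:
        # No face detected: return the same score as 'neutral'
        return 80
    # Score assigned to each expression, indexed by label
    cvt_M = [70, 20, 60, 100, 10, 90, 80]
    cvt_M = np.array(cvt_M)
    # Probability-weighted sum of the scores
    level = np.sum(cvt_M * result)
    return level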
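To illustrate how soft_max and cvt_R2N combine, here is a self-contained sketch with made-up network outputs. It uses the max-subtraction form of softmax, which gives the same probabilities while avoiding overflow in np.exp. The names stable_softmax and expression_score are local to this example.

```python
import numpy as np

# Per-expression scores, copied from cvt_M in my_utils.py
SCORES = np.array([70, 20, 60, 100, 10, 90, 80])


def stable_softmax(x):
    """Softmax computed on x - max(x) so that exp cannot overflow."""
    shifted = x - np.max(x)
    exp_x = np.exp(shifted)
    return exp_x / np.sum(exp_x)


def expression_score(logits):
    """Probability-weighted sum of the per-expression scores."""
    probs = stable_softmax(logits)
    return float(np.sum(SCORES * probs))


# Made-up outputs: a very large value for label 3 ('happy');
# a naive np.exp(1000.0) would overflow to inf
logits = np.array([[0.0, 0.0, 0.0, 1000.0, 0.0, 0.0, 0.0]])
probs = stable_softmax(logits)
print(probs.sum())
print(expression_score(logits))
```

When all outputs are equal, the score reduces to the plain average of the seven entries, which is a quick sanity check for the weighting.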

Summary

The project tree is as follows:

- data_classfy.py
- my_face.py
- my_utils.py
- show_log.py
- train.csv
- train_model.py