TensorFlow Study Notes: Deploying the Inception ResNet V2 Face Recognition Model to a Server with TF_Serving

Before deploying, download the pre-trained Inception ResNet V2 model from here.

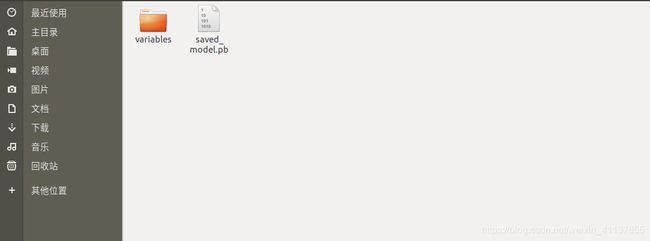

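After downloading, extract the archive to get the files shown in the figure.

Use the saved_model module to export the pb file shown in the figure as a model file with a signature: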

    import numpy as np
    import tensorflow as tf  # TensorFlow 1.x API
    from tensorflow.python.saved_model import tag_constants

    model_path = "/home/boss/Study/face_recognition_flask/20180402-114759/model.pb"

    # Random array of shape [1, 160, 160, 3] standing in for one image, used for testing
    image_test = np.random.randint(0, 10, (1, 160, 160, 3))

    # Load the frozen graph from the pb file into the default graph
    with tf.gfile.GFile(model_path, 'rb') as f:
        graph_def = tf.GraphDef()
        graph_def.ParseFromString(f.read())
    tf.import_graph_def(graph_def, name='')
    my_graph = tf.get_default_graph()

    # Look up the tensors in the loaded graph that will be stored in the saved_model signature
    images_placeholder = my_graph.get_tensor_by_name('input:0')  # input placeholder of Inception ResNet V2
    embeddings = my_graph.get_tensor_by_name('embeddings:0')  # output: the face feature array, shape [1, 512]
    phase_train_placeholder = my_graph.get_tensor_by_name('phase_train:0')  # tells the network whether it is training (True or False)

    with tf.Session() as sess:
        # The export directory must not exist yet
        builder = tf.saved_model.builder.SavedModelBuilder('tfservingmodel')
        inputs = {
            'input_1': tf.saved_model.utils.build_tensor_info(images_placeholder),
            'input_2': tf.saved_model.utils.build_tensor_info(phase_train_placeholder),
        }
        outputs = {'output': tf.saved_model.utils.build_tensor_info(embeddings)}
        # Build the signature object
        signature = tf.saved_model.signature_def_utils.build_signature_def(inputs, outputs, 'signame')
        builder.add_meta_graph_and_variables(sess, [tag_constants.SERVING], {'my_signature': signature})
        builder.save()

    tf.reset_default_graph()  # clear the default graph stack and reset the global default graph

    # Reload the exported model and run it once to check the export
    with tf.Session() as sess:
        meta_graph_def = tf.saved_model.loader.load(sess, [tag_constants.SERVING], 'tfservingmodel')
        signature = meta_graph_def.signature_def  # get the SignatureDef map from meta_graph_def
        # Find the concrete input and output tensor names in the SignatureDef
        images_placeholder = signature['my_signature'].inputs['input_1'].name
        phase_train_placeholder = signature['my_signature'].inputs['input_2'].name
        embeddings = signature['my_signature'].outputs['output'].name
        feed_dict = {images_placeholder: image_test, phase_train_placeholder: False}
        # Test with the data built at the start; the model turns a face into a [1, 512] feature array
        emb = sess.run(embeddings, feed_dict=feed_dict)
        print(emb)

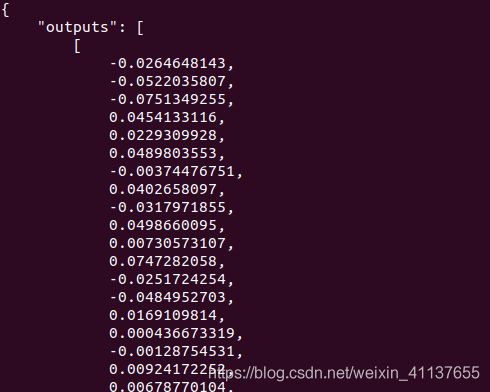

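Screenshot of the output (partial)

Next, generate a model that supports remote calls. The code is almost the same as above; the only difference is that the signature object is built with the method name for the prediction task (PREDICT_METHOD_NAME).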

    import tensorflow as tf  # TensorFlow 1.x API
    from tensorflow.python.saved_model import tag_constants

    model_path = "/home/boss/Study/face_recognition_flask/20180402-114759/model.pb"

    # Load the frozen graph from the pb file into the default graph
    with tf.gfile.GFile(model_path, 'rb') as f:
        graph_def = tf.GraphDef()
        graph_def.ParseFromString(f.read())
    tf.import_graph_def(graph_def, name='')
    my_graph = tf.get_default_graph()

    images_placeholder = my_graph.get_tensor_by_name('input:0')
    embeddings = my_graph.get_tensor_by_name('embeddings:0')
    phase_train_placeholder = my_graph.get_tensor_by_name('phase_train:0')

    with tf.Session() as sess:
        # The export directory must not exist yet
        builder = tf.saved_model.builder.SavedModelBuilder('tfservingmodelv1')
        inputs = {
            'input_1': tf.saved_model.utils.build_tensor_info(images_placeholder),
            'input_2': tf.saved_model.utils.build_tensor_info(phase_train_placeholder),
        }
        outputs = {'output': tf.saved_model.utils.build_tensor_info(embeddings)}
        # Mark the signature as a prediction signature so TF Serving can serve it
        signature = tf.saved_model.signature_def_utils.build_signature_def(
            inputs=inputs,
            outputs=outputs,
            method_name=tf.saved_model.signature_constants.PREDICT_METHOD_NAME)
        builder.add_meta_graph_and_variables(sess, [tag_constants.SERVING], {'my_signature': signature})
        builder.save()

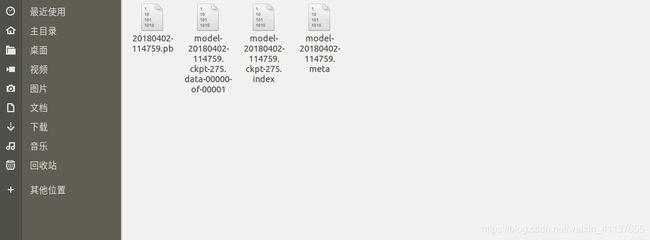

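Before starting the TF_Serving service on Linux, create a model version directory. TF Serving expects the SavedModel files to sit in a numeric version subdirectory under the model base path.

    cd tfservingmodelv1/
    mkdir 123456
    mv saved_model.pb 123456/
    mv variables 123456/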
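
The same directory layout can also be produced from Python, which is convenient when the export script and the versioning step run together. The following is only a minimal sketch using the standard library; the helper name `move_into_version_dir` is hypothetical and not part of TensorFlow or TF Serving.

```python
import shutil
from pathlib import Path


def move_into_version_dir(export_dir, version):
    """Move saved_model.pb and variables/ into export_dir/<version>/.

    Hypothetical helper that mirrors the mkdir/mv commands above.
    """
    export_dir = Path(export_dir)
    model_file = export_dir / "saved_model.pb"
    if not model_file.is_file():
        raise FileNotFoundError("no saved_model.pb in {}".format(export_dir))

    version_dir = export_dir / str(version)
    version_dir.mkdir()  # raises FileExistsError if the version already exists

    shutil.move(str(model_file), str(version_dir / "saved_model.pb"))
    variables_dir = export_dir / "variables"
    if variables_dir.is_dir():
        shutil.move(str(variables_dir), str(version_dir / "variables"))
    return version_dir
```

For the model exported above, this would be called as `move_into_version_dir("tfservingmodelv1", 123456)`.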

Start the service:

    tensorflow_model_server --port=9000 --model_base_path=/home/boss/Study/face_recognition_flask/20180402-114759/tfservingmodelv1/ --model_name=md --rest_api_port=8500

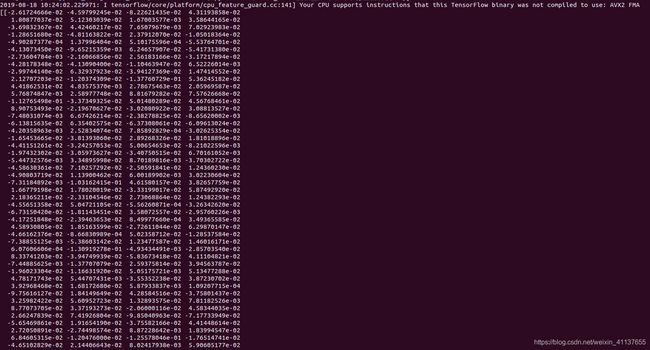

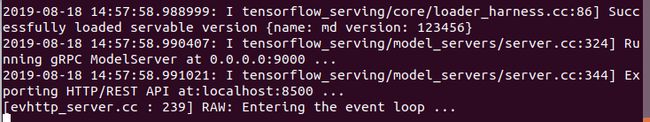

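If the output looks similar to the screenshots, the service has started successfully.

Write a script that sends an HTTP POST request to test it: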
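
Besides reading the console output, the REST endpoint can be queried for the model status. The sketch below assumes the server was started with the flags above (model name `md`, REST port 8500) and that the status reply has the `model_version_status` list described in the TF Serving REST API documentation; the function names are hypothetical.

```python
import json
from urllib.request import urlopen


def model_status_url(host, rest_port, model_name):
    """Build the model status URL of the TF Serving REST API."""
    return "http://{}:{}/v1/models/{}".format(host, rest_port, model_name)


def available_versions(status):
    """Return the version strings whose state is AVAILABLE.

    `status` is the decoded JSON reply; its assumed shape is
    {"model_version_status": [{"version": "...", "state": "...", ...}]}.
    """
    return [
        entry["version"]
        for entry in status.get("model_version_status", [])
        if entry.get("state") == "AVAILABLE"
    ]


def fetch_status(url, timeout=5):
    """GET the status URL and decode the JSON reply (needs a running server)."""
    with urlopen(url, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


# Usage against a running server:
# status = fetch_status(model_status_url("localhost", 8500, "md"))
# print(available_versions(status))
```

If version `123456` appears in the returned list, the model has been loaded and can accept predict requests.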

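    import json

    import numpy as np
    import requests

    url = "http://localhost:8500/v1/models/md/versions/123456:predict"
    image_test = np.random.randint(0, 10, (1, 160, 160, 3))

    def default(obj):
        # NumPy arrays cannot be serialized to JSON directly, so convert them to nested lists first
        if isinstance(obj, np.ndarray):
            return obj.tolist()
        raise TypeError("cannot convert {} to JSON".format(type(obj).__name__))

    # The keys under "inputs" and the signature name match the ones used when exporting the model
    s = json.dumps({
        "inputs": {'input_1': default(image_test), 'input_2': False},
        "signature_name": "my_signature",
    })
    r = requests.post(url, data=s)
    print(r.text)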
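
The script above only prints the raw reply text. To use the embedding in later steps, the reply has to be decoded. The sketch below assumes the reply format of the TF Serving REST predict API for this kind of request: an `outputs` key on success (the model has a single named output) and an `error` key on failure. The function name `parse_predict_response` is hypothetical.

```python
import json


def parse_predict_response(text):
    """Decode a predict reply and return the value under "outputs".

    Assumes a success reply looks like {"outputs": [[...]]} and a failure
    reply looks like {"error": "..."}.
    """
    reply = json.loads(text)
    if "error" in reply:
        raise RuntimeError("TF Serving returned an error: {}".format(reply["error"]))
    return reply["outputs"]


# Usage with the request script above:
# emb = parse_predict_response(r.text)
# print(len(emb), len(emb[0]))
```

For the model in this post, the returned list should hold one feature vector of length 512 per input image.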

Reference book: 《深度学习之TensorFlow工程化项目实战》, by 李金洪 (Li Jinhong)