Machine Learning in Action (Chapter 3: Decision Trees, ID3 Algorithm, Full Code with Detailed Annotations, Python 3.7)

While working through the code I consulted articles by several experts. Many thanks to:

{

https://blog.csdn.net/gaoyueace/article/details/78742579

https://www.cnblogs.com/fantasy01/p/4595902.html

https://blog.csdn.net/gdkyxy2013/article/details/80495353

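CNKI paper: 决策树算法在隐形眼镜配型中的应用研究 (Research on the Application of Decision Tree Algorithms in Contact Lens Fitting), by 佘朝兵 (She Chaobing)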

}

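So, the main text starts here! (All of my code runs as-is, provided the environment is set up correctly.)

Cramming many features into a single file makes the material harder to learn from and harder to refer back to later, so I have split the code into 7 files, each implementing one stage of functionality.

I am still a beginner myself, so if you spot any mistakes, please point them out. Thanks!

All resources for this chapter are available here: https://download.csdn.net/download/m0_37738114/13609892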

1. tree01_base.py (decision tree fundamentals: preparation stage)

'''
Classifying marine animals
Available data: can survive without surfacing, has flippers, is a fish
'''

from math import log

# Compute the Shannon entropy; the higher the entropy, the more mixed the class labels
def calcShannonEnt(dataSet):
    numEntries = len(dataSet)
    labelCounts = {}
    # Count how many times each class label occurs
    for featVec in dataSet:
        # The last column of each row is the class label
        currentLabel = featVec[-1]
        if currentLabel not in labelCounts:
            labelCounts[currentLabel] = 0
        labelCounts[currentLabel] += 1
    shannonEnt = 0.0
    # Accumulate the Shannon entropy
    for key in labelCounts:
        # Probability of this class
        prob = float(labelCounts[key]) / numEntries
        # Logarithm to base 2
        shannonEnt -= prob * log(prob, 2)
    return shannonEnt

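# With the entropy available, split the dataset in the way that yields the largest information gain

# Split the dataset on a given feature (axis) and feature value
def splitDataSet(dataSet, axis, value):
    # Build a new list so the original dataset is left untouched
    retDataSet = []
    for featVec in dataSet:
        if featVec[axis] == value:
            # Keep the row, but drop the column that was used for the split
            reduceFeatVec = featVec[:axis]
            reduceFeatVec.extend(featVec[axis+1:])
            retDataSet.append(reduceFeatVec)
    return retDataSet

def createDataSet():
    # Available data: can survive without surfacing, has flippers, is a fish
    dataSet = [[1, 1, 'yes'],
               [1, 1, 'yes'],
               [1, 0, 'no'],
               [0, 1, 'no'],
               [0, 1, 'no']]
    # Feature names for the first two columns
    labels = ['no surfacing', 'flippers']
    return dataSet, labels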

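# Choose the best feature to split the dataset on
# Assumes every row has the same length and the last column is the class label
def chooseBestFeatureToSplit(dataSet):
    # Compute the base entropy first, then find the feature whose split gives the largest
    # information gain, i.e. base entropy minus the weighted entropy after the split
    dataNum = float(len(dataSet))
    baseEntropy = calcShannonEnt(dataSet)
    # Stays -1 if no feature yields a positive information gain
    baseFeature = -1
    # Best information gain found so far
    bestInfoGain = 0.0
    # Loop over every feature, split the dataset on it and compute the weighted entropy
    # of the resulting subsets; the lowest weighted entropy means the largest gain
    featureNum = len(dataSet[0]) - 1
    for i in range(featureNum):
        # Collect all values that feature i takes
        featureList = [example[i] for example in dataSet]
        featureSet = set(featureList)
        subEntropy = 0
        for value in featureSet:
            subDataSet = splitDataSet(dataSet, i, value)
            prob = len(subDataSet) / dataNum
            subEntropy = subEntropy + prob * calcShannonEnt(subDataSet)
        # Information gain
        infoGain = baseEntropy - subEntropy
        # Keep the feature with the largest information gain
        if infoGain > bestInfoGain:
            bestInfoGain = infoGain
            baseFeature = i
    return baseFeature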

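if __name__ == "__main__":
    myData, labels = createDataSet()
    print("Shannon entropy:", calcShannonEnt(myData)) # Shannon entropy: 0.9709505944546686
    # The higher the entropy, the more mixed the data; add one more class and watch the entropy change
    myData[0][-1] = 'maybe'
    print("Shannon entropy:", calcShannonEnt(myData)) # Shannon entropy: 1.3709505944546687
    # Split the dataset on a given feature
    myData, labels = createDataSet()
    # Rows for animals that can survive without surfacing (feature 0 == 1)
    print(splitDataSet(myData, 0, 1)) # [[1, 'yes'], [1, 'yes'], [0, 'no']]
    # Rows for animals that cannot survive without surfacing (feature 0 == 0)
    print(splitDataSet(myData, 0, 0)) # [[1, 'no'], [1, 'no']]
    myData, labels = createDataSet()
    print(chooseBestFeatureToSplit(myData)) # 0, meaning feature 0 is the best feature to split the dataset on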
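To make the printed numbers concrete, here is a worked calculation for the five-row dataset returned by createDataSet(). The notation is my own and does not appear in the code: D is the dataset, p_k is the proportion of class k, and D_v is the subset of rows where the chosen feature takes the value v. The figures follow directly from the data.

```latex
H(D) = -\sum_{k} p_k \log_2 p_k
     = -\left(\tfrac{2}{5}\log_2\tfrac{2}{5} + \tfrac{3}{5}\log_2\tfrac{3}{5}\right) \approx 0.971

\mathrm{Gain}(D, a) = H(D) - \sum_{v} \frac{|D_v|}{|D|}\, H(D_v)

\mathrm{Gain}(D, \text{no surfacing}) \approx 0.971 - \left(\tfrac{3}{5}\cdot 0.918 + \tfrac{2}{5}\cdot 0\right) \approx 0.420

\mathrm{Gain}(D, \text{flippers}) \approx 0.971 - \left(\tfrac{4}{5}\cdot 1 + \tfrac{1}{5}\cdot 0\right) \approx 0.171
```

Since 0.420 > 0.171, feature 0 ('no surfacing') wins, which matches the 0 printed by chooseBestFeatureToSplit.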

2. tree02_recursion_train.py (building the decision tree)

'''
Building the decision tree recursively (training)
'''

from tree01_base import chooseBestFeatureToSplit
from tree01_base import splitDataSet
from tree01_base import createDataSet

# Return the most common class label in a group of samples
def majorityCnt(classList):
    classCount = {}
    for vote in classList:
        if vote not in classCount:
            classCount[vote] = 0
        classCount[vote] = classCount[vote] + 1
    # Sort the (label, count) pairs by count, largest first
    sortedClassCount = sorted(classCount.items(), key=lambda item: item[1], reverse=True)
    return sortedClassCount[0][0]

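# Process the dataset recursively
# Note: this function deletes entries from labels, so pass in a copy if you still need the original list
def createTree(dataSet, labels):
    # Collect the class labels
    classList = [example[-1] for example in dataSet]
    # All samples share the same class, so no further split is needed
    if classList.count(classList[0]) == len(classList):
        return classList[0]
    # No features left to split on, so use the most common class in this group
    if len(dataSet[0]) == 1:
        return majorityCnt(classList)
    # Choose the best feature to split on
    bestFeature = chooseBestFeatureToSplit(dataSet)
    # No feature gives a positive information gain, so fall back to the most common class
    if bestFeature == -1:
        return majorityCnt(classList)
    # labels looks like: labels = ['no surfacing', 'flippers']
    bestFeatureLabel = labels[bestFeature]
    myTree = {bestFeatureLabel:{}}
    # A feature that has been used for a split cannot be used again, so delete it
    del(labels[bestFeature])
    featureValues = [example[bestFeature] for example in dataSet]
    uniqueVals = set(featureValues)
    # Split on the best feature; this may produce more than two branches
    # Call the function recursively on the subset of data in each branch
    for value in uniqueVals:
        subLabels = labels[:]
        # splitDataSet: build a new dataset for the given feature and feature value
        myTree[bestFeatureLabel][value] = createTree(splitDataSet(dataSet, bestFeature, value), subLabels)
    return myTree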

if __name__ == "__main__":
    myData, initLabels = createDataSet()
    labels = initLabels[:]
    myTree = createTree(myData, labels)
    print(myTree) # {'no surfacing': {0: 'no', 1: {'flippers': {0: 'no', 1: 'yes'}}}}
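createTree deletes entries from the labels list it receives, which is why the caller passes a copy (labels = initLabels[:]). For comparison, here is a minimal sketch of the same ID3 recursion that never modifies its arguments. The names entropy, best_axis and build_tree are hypothetical helpers written for this illustration and are not part of the seven files. Falling back to a majority vote when no split has a positive gain is my own assumption.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy (base 2) of a list of class labels."""
    total = len(labels)
    return -sum(c / total * log2(c / total) for c in Counter(labels).values())


def best_axis(rows):
    """Index of the feature with the largest information gain, or -1 if none is positive."""
    base = entropy([r[-1] for r in rows])
    best, best_gain = -1, 0.0
    for axis in range(len(rows[0]) - 1):
        cond = 0.0
        for value in set(r[axis] for r in rows):
            subset = [r[-1] for r in rows if r[axis] == value]
            cond += len(subset) / len(rows) * entropy(subset)
        if base - cond > best_gain:
            best, best_gain = axis, base - cond
    return best


def build_tree(rows, names):
    """Build a nested-dict tree without mutating rows or names."""
    labels = [r[-1] for r in rows]
    if len(set(labels)) == 1:
        return labels[0]
    axis = best_axis(rows) if len(rows[0]) > 1 else -1
    if axis == -1:
        # Assumption: majority vote when there is nothing useful left to split on
        return Counter(labels).most_common(1)[0][0]
    rest = names[:axis] + names[axis + 1:]  # new list, names itself is untouched
    branches = {}
    for value in set(r[axis] for r in rows):
        subset = [r[:axis] + r[axis + 1:] for r in rows if r[axis] == value]
        branches[value] = build_tree(subset, rest)
    return {names[axis]: branches}


data = [[1, 1, 'yes'], [1, 1, 'yes'], [1, 0, 'no'], [0, 1, 'no'], [0, 1, 'no']]
names = ['no surfacing', 'flippers']
tree = build_tree(data, names)
print(tree)
print(names)  # still contains both feature names
```

On the fish data this gives the same nested dict as createTree, and names can be handed straight to a classifier afterwards without making a copy first.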

3. tree03_arrow_node_plotter.py (drawing arrows and nodes: practice stage)

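'''
Drawing a tree diagram
'''

import matplotlib.pyplot as plt

# Define the text-box and arrow styles
'''
boxstyle: shape of the text box; 'sawtooth' gives a jagged, zigzag border
fc: face color of the box; the string '0.8' is a grayscale level (light gray)
'''
# decisionNode = dict(boxstyle='sawtooth', fc='0.8')
decisionNode = {'boxstyle':'sawtooth','fc':'0.8'}
leafNode = dict(boxstyle="round4", fc="0.8")
arrow_args = dict(arrowstyle="<-")

'''
Draw a node together with the arrow that connects it to its parent
nodeTxt: text to display, centerPt: center of the text box, parentPt: point on the parent where the arrow starts
xy: the annotated point (here parentPt), xytext: center position of the displayed text
xycoords and textcoords specify the coordinate systems of xy and xytext (here, fractions of the axes)
If textcoords=None it defaults to the same value as xycoords; if neither is set, the default is 'data'
va/ha set the alignment of the text within the node box: va is vertical, one of ('top', 'bottom', 'center', 'baseline'),
ha is horizontal, one of ('center', 'right', 'left')
'''
def plotNode(nodeTxt, centerPt, parentPt, nodeType):
    createPlot.ax1.annotate(nodeTxt, xy=parentPt, xycoords='axes fraction',
                            xytext=centerPt, textcoords='axes fraction',
                            va="center", ha="center", bbox=nodeType,
                            arrowprops=arrow_args)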

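'''
cla()   # Clear axis: clears the currently active axes in the current figure; other axes are unaffected
clf()   # Clear figure: clears the whole figure and all of its axes, but leaves the window open so it can be reused
close() # Close a figure window
'''
def createPlot():
    # Create a figure with a white background (facecolor is the figure's background color)
    fig = plt.figure(1, facecolor='white')
    # Clear the figure so that it can be reused
    fig.clf()
    # frameon: whether to draw the frame around the axes
    # Store the axes as an attribute of createPlot so that it behaves like a global variable
    createPlot.ax1 = plt.subplot(111, frameon=True)
    plotNode('decision node', (0.5,0.1), (0.1,0.5), decisionNode)
    plotNode('leaf node', (0.8, 0.1), (0.3, 0.8), leafNode)
    plt.show()

if __name__ == "__main__":
    # Drawing example
    createPlot()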

4. tree04_tree_plotter.py (drawing the decision tree)

'''
Building an annotated tree plot
'''

import matplotlib.pyplot as plt

decisionNode = {'boxstyle':'sawtooth','fc':'0.8'}
leafNode = dict(boxstyle="round4", fc="0.8")
arrow_args = dict(arrowstyle="<-")

def plotNode(nodeTxt, centerPt, parentPt, nodeType):
    createPlot.ax1.annotate(nodeTxt, xy=parentPt, xycoords='axes fraction',
                            xytext=centerPt, textcoords='axes fraction',
                            va="center", ha="center", bbox=nodeType,
                            arrowprops=arrow_args)

# Count the leaf nodes of the decision tree
def getNumLeaves(myTree):
    numleaves = 0
    firstStr = list(myTree.keys())[0]
    secondDict = myTree[firstStr]
    for key in secondDict.keys():
        # A dict value is a subtree; anything else is a leaf
        if isinstance(secondDict[key], dict):
            numleaves = numleaves + getNumLeaves(secondDict[key])
        else:
            numleaves = numleaves + 1
    return numleaves

# Compute the depth of the decision tree (the root node itself is not counted)
def getTreeDepth(myTree):
    maxDepth = 0
    firstStr = list(myTree.keys())[0]
    secondDict = myTree[firstStr]
    for key in secondDict.keys():
        if isinstance(secondDict[key], dict):
            thisDepth = 1 + getTreeDepth(secondDict[key])
        else:
            thisDepth = 1
        # Keep the depth of the deepest branch
        if thisDepth > maxDepth:
            maxDepth = thisDepth
    return maxDepth

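'''
Add the text next to an arrow
Fills in the text between a parent and a child node, such as the labels 0, 1, 2 beside the arrows
parentPt: position of the parent node, cntrPt: position of the child node, txtString: text to show on the arrow
'''
def plotMidText(cntrPt, parentPt, txtString):
    # Midpoint of the line between the child and the parent
    xMid = (parentPt[0] - cntrPt[0])/2.0 + cntrPt[0]
    yMid = (parentPt[1] - cntrPt[1])/2.0 + cntrPt[1]
    createPlot.ax1.text(xMid, yMid, txtString)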

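def plotTree(myTree, parentPt, nodeTxt):
    # Compute the width (number of leaves) and height (depth) of the current subtree
    numLeaves = getNumLeaves(myTree)
    depth = getTreeDepth(myTree)
    firstStr = list(myTree.keys())[0]
    # Center the current node horizontally over all of its leaves
    cntrPt = (plotTree.xOff + (1.0 + float(numLeaves))/2.0/plotTree.totalW, plotTree.yOff)
    # Label the edge coming from the parent (for the root, nodeTxt is '' so nothing is shown)
    plotMidText(cntrPt, parentPt, nodeTxt)
    # Draw the node and the arrow from its parent (for the root, cntrPt == parentPt, so only the node appears, with no arrow)
    plotNode(firstStr, cntrPt, parentPt, decisionNode)
    # Get the subtrees
    secondDict = myTree[firstStr]
    # Going into the subtrees adds one level of depth, so move down by one unit
    plotTree.yOff = plotTree.yOff - 1.0/plotTree.totalD
    for key in secondDict.keys():
        if isinstance(secondDict[key], dict):
            # There is a subtree here, so keep drawing recursively
            plotTree(secondDict[key], cntrPt, str(key))
        else:
            # No subtree here, so draw a leaf node one slot further to the right
            plotTree.xOff = plotTree.xOff + 1.0/plotTree.totalW
            # Draw the arrow and the node it points to: plotNode(nodeTxt, centerPt, parentPt, nodeType)
            # The text of the leaf node is secondDict[key]
            plotNode(secondDict[key], (plotTree.xOff, plotTree.yOff), cntrPt, leafNode)
            # With the current node as parent and the leaf as child, add the text beside the arrow
            plotMidText((plotTree.xOff, plotTree.yOff), cntrPt, str(key))
    # This subtree is finished, so move back up one level
    plotTree.yOff = plotTree.yOff + 1.0/plotTree.totalD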

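'''
The global variable plotTree.totalW stores the width of the tree and plotTree.totalD stores its depth
plotTree.xOff and plotTree.yOff track the position of the most recently drawn node, and therefore where the next node should go
'''
def createPlot(inTree):
    fig = plt.figure(1, facecolor='white')
    fig.clf()
    # Hide the tick marks on both axes
    axprops = dict(xticks=[], yticks=[])
    createPlot.ax1 = plt.subplot(111, frameon=False, **axprops)
    plotTree.totalW = float(getNumLeaves(inTree))
    plotTree.totalD = float(getTreeDepth(inTree))
    # Start half a slot to the left of the axes so the first leaf lands at 0.5/totalW
    plotTree.xOff = -0.5/plotTree.totalW
    plotTree.yOff = 1.0
    plotTree(inTree, (0.5, 1.0), '')
    plt.show()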

def retrieveTree(i):
    listOfTrees = [{'no surfacing': {0: 'no', 1: {'flippers': {0: 'no', 1: 'yes'}}}},
                   {'no surfacing': {0: 'no', 1: {'flippers': {0: {'head': {0: 'no', 1: 'yes'}}, 1: 'no'}}}},
                   {'no surfacing': {0: {'flippers': {0: 'no', 1: 'yes'}}, 1: {'flippers': {0: 'no', 1: 'yes'}},
                                     2: {'flippers': {0: 'no', 1: 'yes'}}}},
                   {'no surfacing': {0: 'no', 1: {'flippers': {0: 'no', 1: 'yes'}}, 3: 'maybe'}}
                   ]
    return listOfTrees[i]

if __name__ == "__main__":
    # Measure one of the predefined tree structures
    myTree = retrieveTree(0)
    numLeaves = getNumLeaves(myTree)
    treeDepth = getTreeDepth(myTree)
    print("The tree has", numLeaves, "leaf nodes and a depth of", treeDepth)
    # Draw the decision tree
    myTree = retrieveTree(0)
    createPlot(myTree)
    myTree['no surfacing'][3] = 'maybe'
    print(myTree) # {'no surfacing': {0: 'no', 1: {'flippers': {0: 'no', 1: 'yes'}}, 3: 'maybe'}}
    createPlot(myTree)
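The xOff/yOff bookkeeping in plotTree is easier to follow with the drawing stripped away. The sketch below replays the same arithmetic and only records where each node would be placed, in axes-fraction coordinates. count_leaves, depth_of and layout are hypothetical names for this illustration, and matplotlib is not needed.

```python
def count_leaves(tree):
    """Number of leaves in a tree shaped like {feature: {value: subtree_or_label}}."""
    branches = next(iter(tree.values()))
    return sum(count_leaves(c) if isinstance(c, dict) else 1 for c in branches.values())


def depth_of(tree):
    """Depth of the tree, not counting the root node itself."""
    branches = next(iter(tree.values()))
    return max(1 + depth_of(c) if isinstance(c, dict) else 1 for c in branches.values())


def layout(tree):
    """Return (text, x, y) for every node, in the order plotTree would draw them."""
    total_w, total_d = count_leaves(tree), depth_of(tree)
    state = {"x": -0.5 / total_w}  # plays the role of plotTree.xOff
    placed = []

    def walk(node, y):
        name = next(iter(node))
        # A decision node sits centered above all of its leaves
        center_x = state["x"] + (1.0 + count_leaves(node)) / 2.0 / total_w
        placed.append((name, center_x, y))
        child_y = y - 1.0 / total_d
        for child in node[name].values():
            if isinstance(child, dict):
                walk(child, child_y)
            else:
                # Each leaf takes the next slot of width 1/total_w
                state["x"] += 1.0 / total_w
                placed.append((child, state["x"], child_y))

    walk(tree, 1.0)
    return placed


tree = {'no surfacing': {0: 'no', 1: {'flippers': {0: 'no', 1: 'yes'}}}}
for text, x, y in layout(tree):
    print(f"{text:>12}  x={x:.3f}  y={y:.3f}")
```

With 3 leaves, the leaves end up at x = 1/6, 1/2 and 5/6, and the root lands at x = 0.5, which is why createPlot can pass (0.5, 1.0) as the root's parent point and get a node with no visible arrow.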

5. tree05_tree_test.py (testing the decision tree)

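'''
Testing the decision tree classifier
'''

from tree02_recursion_train import createDataSet, createTree

'''
inputTree: the trained decision tree to test, featureLabels: list of feature names, testVec: a single test sample
Takes a test sample and returns its predicted class label
'''
def classify(inputTree, featureLabels, testVec):
    # Take the first splitting feature (the root node)
    firstStr = list(inputTree.keys())[0]
    # Take the branches under the root node
    secondDict = inputTree[firstStr]
    # Index of that feature within the sample
    featureIndex = featureLabels.index(firstStr)
    # Stays None if the sample's feature value matches no branch of the tree
    classLabel = None
    for key in secondDict.keys():
        # Find the branch that matches the sample's feature value; if it is a leaf, return its label directly
        # If it is a subtree, keep searching recursively until a leaf is reached
        if testVec[featureIndex] == key:
            if isinstance(secondDict[key], dict):
                classLabel = classify(secondDict[key], featureLabels, testVec)
            else:
                classLabel = secondDict[key]
    return classLabel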

if __name__ == "__main__":
    myData, initLabels = createDataSet()
    print(myData) # [[1, 1, 'yes'], [1, 1, 'yes'], [1, 0, 'no'], [0, 1, 'no'], [0, 1, 'no']]
    labels = initLabels[:]
    myTree = createTree(myData, labels)
    print(myTree) # {'no surfacing': {0: 'no', 1: {'flippers': {0: 'no', 1: 'yes'}}}}
    classLabel = classify(myTree, initLabels, [1, 0])
    print("[1, 0]", classLabel) # [1, 0] no
    classLabel = classify(myTree, initLabels, [1, 1])
    print("[1, 1]", classLabel) # [1, 1] yes

6. tree06_tree_store.py (storing the decision tree)

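'''
Building a decision tree can be time-consuming, so here is a way to store a tree that has already been trained
'''

import pickle

from tree02_recursion_train import createDataSet, createTree
from tree04_tree_plotter import createPlot

'''
Storing the decision tree with the pickle module
pickle.dump(obj, file, protocol=None):
Serializes the object obj and writes it to file.
protocol selects the serialization format. Protocol 0 is the original human-readable ASCII format;
protocols 1 and above are binary (1 is the old binary format, 2 is a newer one). In Python 3 the default is a binary protocol (protocol 3 in Python 3.7).
file is the file-like object to write to. It must have a write() method that accepts bytes, so it can be a file opened in binary mode ('wb'), an io.BytesIO object, or any other object that implements such a write() method.
pickle.load(file):
Deserializes the data in file back into a Python object. file must provide read() and readline() methods that return bytes.
'''
def storeTree(inputTree, filename):
    # Open in binary mode; the with block closes the file even if dump() fails
    with open(filename, 'wb') as fw:
        pickle.dump(inputTree, fw) # Serialize the object

def grabTree(filename):
    # Read-only binary mode is enough for loading
    with open(filename, 'rb') as fr:
        return pickle.load(fr) # Deserialize the object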

if __name__ == "__main__":
    myData, initLabels = createDataSet()
    print(myData) # [[1, 1, 'yes'], [1, 1, 'yes'], [1, 0, 'no'], [0, 1, 'no'], [0, 1, 'no']]
    labels = initLabels[:]
    myTree = createTree(myData, labels)
    print(myTree) # {'no surfacing': {0: 'no', 1: {'flippers': {0: 'no', 1: 'yes'}}}}
    # Store
    storeTree(myTree, 'classifierStorage.txt')
    # Load
    print(grabTree('classifierStorage.txt'))
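A quick way to convince yourself that storing and loading loses nothing is a round trip through a temporary file. This is a minimal sketch using only the standard library. save_tree and load_tree are illustrative stand-ins for storeTree and grabTree, and the file name tree.pkl is arbitrary.

```python
import os
import pickle
import tempfile


def save_tree(tree, path):
    """Write the tree to path in binary pickle format."""
    with open(path, 'wb') as fw:
        pickle.dump(tree, fw)


def load_tree(path):
    """Read a pickled tree back from path."""
    with open(path, 'rb') as fr:
        return pickle.load(fr)


tree = {'no surfacing': {0: 'no', 1: {'flippers': {0: 'no', 1: 'yes'}}}}

# The temporary directory and everything in it are removed when the block exits
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, 'tree.pkl')
    save_tree(tree, path)
    restored = load_tree(path)

print(restored == tree)  # True
```

The integer branch keys 0 and 1 survive the round trip unchanged. That matters for classify, which compares feature values against the keys with ==, and it is one reason pickle is convenient here: JSON, for example, would turn the keys into strings.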

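7. tree07_contactLens.py (using a decision tree to predict the type of contact lenses a patient needs)

To save you a download, here is the contact lens dataset, lenses.txt. In the actual file the five fields on each line are separated by tab characters, which is what the code below expects: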

young myope no reduced no lenses

young myope no normal soft

young myope yes reduced no lenses

young myope yes normal hard

young hyper no reduced no lenses

young hyper no normal soft

young hyper yes reduced no lenses

young hyper yes normal hard

pre myope no reduced no lenses

pre myope no normal soft

pre myope yes reduced no lenses

pre myope yes normal hard

pre hyper no reduced no lenses

pre hyper no normal soft

pre hyper yes reduced no lenses

pre hyper yes normal no lenses

presbyopic myope no reduced no lenses

presbyopic myope no normal no lenses

presbyopic myope yes reduced no lenses

presbyopic myope yes normal hard

presbyopic hyper no reduced no lenses

presbyopic hyper no normal soft

presbyopic hyper yes reduced no lenses

presbyopic hyper yes normal no lenses'''

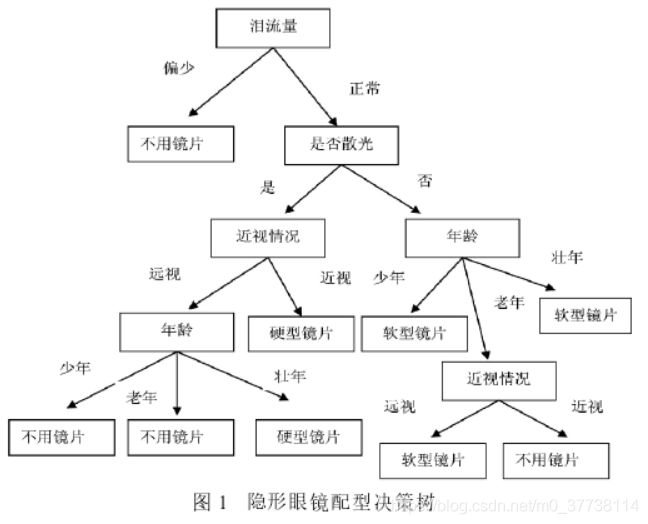

使用决策树来预测患者需要佩戴的隐形眼镜类型

由于决策树只能处理离散值,所以对于连续型的数据集需要进行离散化

隐形眼镜数据集属性信息:

患者的年龄(age):pre(少年0-14)、young(壮年15-64)、presbyopic(老年65~)

视力诊断结果(prescript):myope(近视),hyper(远视)

是否散光(astigmatic):yes,no (轻度认为是没有,中度和重度认为是有)

泪流量:reduced(偏少),normal(正常)(平均 1ul/min 为正常,低于该值为偏少)

眼镜类型:soft(软型镜片),hard(硬型镜片),no lenses(不需要镜片)

'''

from tree02_recursion_train import createTree
from tree04_tree_plotter import createPlot

if __name__ == "__main__":
    with open('lenses.txt') as fr:
        # strip() removes the given characters from both ends of a string (whitespace, including newlines, by default)
        # strip().split('\t') then splits each line into its fields at the tab characters
        # Blank lines are skipped so that they do not turn into empty rows
        lenses = [inst.strip().split('\t') for inst in fr.readlines() if inst.strip()]
    lensesLabels = ['age', 'prescript', 'astigmatic', 'tearRate']
    lensesTree = createTree(lenses, lensesLabels)
    print(lensesTree)
    createPlot(lensesTree)
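Because the class label 'no lenses' itself contains a space, the fields have to be split on tabs rather than on arbitrary whitespace. The sketch below shows the difference on two rows of the dataset, read from an in-memory string instead of lenses.txt. parse_rows is a hypothetical helper for this illustration.

```python
import io


def parse_rows(handle):
    """Split every non-blank line of a tab-separated file into its fields."""
    return [line.strip().split('\t') for line in handle if line.strip()]


# Two rows from the dataset, with explicit tab separators and a stray blank line
sample = "young\tmyope\tno\treduced\tno lenses\n\nyoung\tmyope\tno\tnormal\tsoft\n"

rows = parse_rows(io.StringIO(sample))
print(rows[0])  # ['young', 'myope', 'no', 'reduced', 'no lenses']
print(len(rows))  # 2, the blank line is skipped

# Splitting on any whitespace breaks the label into two fields
print(sample.splitlines()[0].split())  # ['young', 'myope', 'no', 'reduced', 'no', 'lenses']
```

If you copy the dataset from a web page, check that the separators are still tabs, otherwise every row arrives as a single field and createTree treats the whole line as the class label.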

The figure I generated myself is a bit ugly, so here is one borrowed from another author's paper instead: (决策树算法在隐形眼镜配型中的应用研究, by 佘朝兵)