[A Very Simple Tutorial] How to Extract Features from Each Layer of a Model [Using ResNet as an Example]

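Since nobody on CSDN explains in much detail how to extract features, I ran into a lot of pitfalls. This tutorial retraces that journey. A working feature-extraction example is at the very end, but I recommend reading from the beginning.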

Method 1

Taking a 10-class classification problem as an example, this is the approach I first used to extract features:

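    import torch
    import torch.nn as nn
    import torchvision

    class model(nn.Module):
        def __init__(self):
            super(model, self).__init__()
            self.model1 = torchvision.models.resnet18(pretrained=None)  # default fc: 512 -> 1000
            self.model1.fc = nn.Linear(512, 64)  # replace fc: 512 -> 64
            # The original listing uses self.linear1 without defining it. 64 -> 64 is
            # assumed here because it is the only size consistent with the layers around it.
            self.linear1 = nn.Linear(64, 64)
            self.linear2 = nn.Linear(64, 10)
            self.ReLU = nn.ReLU()

        def forward(self, x):
            x1 = self.model1(x)
            x2 = self.ReLU(x1)
            x3 = self.linear1(x2)
            x4 = self.ReLU(x3)
            x5 = self.linear2(x4)  # class scores; return x5 for ordinary classification
            # Return x1, x2, x3 or x4 depending on which feature you want to extract.
            return x1

    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
    model = model()  # instantiate (this rebinds the name `model` to the instance)
    model = model.to(device)
    # x is an input batch (its preprocessing is omitted here). With forward() defined
    # as above, the output is the feature of x.
    feature = model(x)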

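This approach did the job, but it has a limitation: it only exposes tensors that appear in my own forward(). If I want the output of a layer inside resnet18 itself, it does not work.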

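Trial and error:

I found a method online: use torch.nn.Sequential(*list(cnn.children())[:-1]) to drop ResNet's last layer and take the features that feed into it.

So, with resnet18 as the example, I tried torch.nn.Sequential(*list(cnn.children())[:-1]) to extract features: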

    from torchsummary import summary  # assumed source of summary(); the original does not say

    class model(nn.Module):
        def __init__(self):
            super(model, self).__init__()
            self.model = torchvision.models.resnet18(pretrained=None)  # default fc: 512 -> 1000
            self.model.fc = nn.Linear(512, 10)

        def forward(self, x):
            x1 = self.model(x)
            return x1

    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
    model = model()
    model = model.to(device)

    # Inspect the model structure

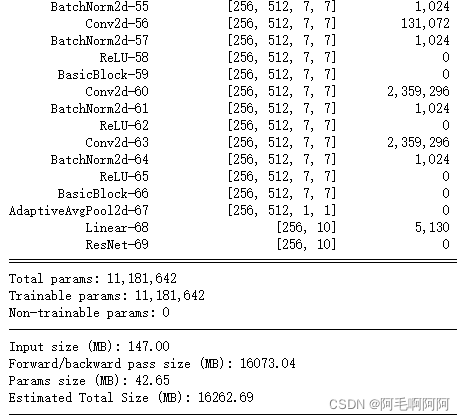

    summary(model, input_size=[(3, 224, 224)], batch_size=256, device="cuda")  # use device="cpu" on CPU

To extract the 512-dimensional feature just before the fc layer, I added the following code:

    model_f = torch.nn.Sequential(*list(model.children())[:-1])
    model_f = model_f.to(device)
    summary(model_f, input_size=[(3, 224, 224)], batch_size=256, device="cuda")  # use device="cpu" on CPU

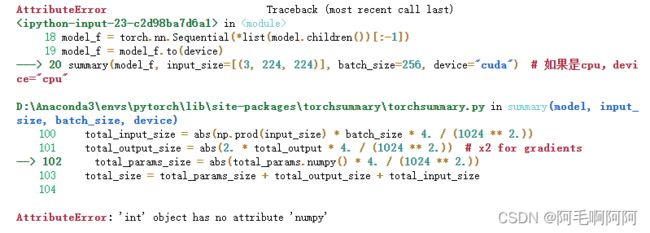

This raised an error:

To check whether torch.nn.Sequential(*list(model.children())[:-1]) itself was at fault, I ran the following code:

    for child in model_f.children():
        print(child)
        print('#######################')

The output was empty! Nothing was printed at all.

So I checked the original model instead:

    for child in model.children():
        print(child)
        print('#######################')

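The output was:

That is how I found out that children() of my model returns a single child: one big block named ResNet. Slicing with [:-1] removes that only child, which is why model_f was empty. With the model defined this way, there is no way to get at the features of each layer.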

Finding the problem

After some more experimenting I found the root cause: wrapping the network in my own model class.

The fix is as follows:

    from torchvision.models import resnet18

    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
    model = resnet18(pretrained=None)
    model.fc = nn.Linear(512, 10)
    model = model.to(device)
    # children() now yields the individual ResNet layers, so [:-1] drops only fc
    model_f = torch.nn.Sequential(*list(model.children())[:-1])
    model_f = model_f.to(device)

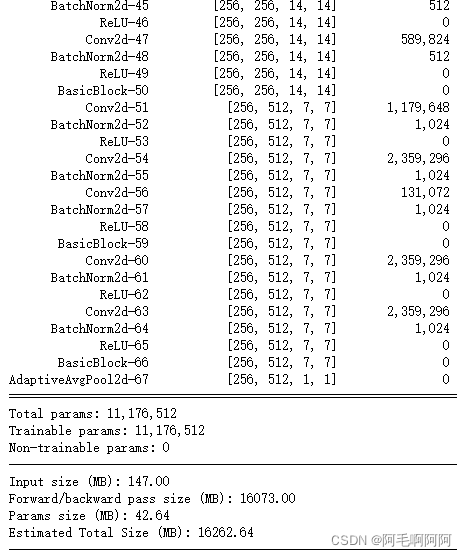

    summary(model_f, input_size=[(3, 224, 224)], batch_size=256, device="cuda")  # use device="cpu" on CPU

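The output was:

You can see that the fc layer is gone! The output is now the 512-dimensional feature. So the problem really was the way the model was defined!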


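Finally, here is a function that can be used to extract features at several different depths. The idea is the same as before, so I will not walk through the code in detail: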

    def build_resnet(feature_type):
        cnn = torchvision.models.resnet152(pretrained=True)
        if feature_type == 'pool5':
            # drop fc: output of the global average pool
            model = torch.nn.Sequential(*list(cnn.children())[:-1])
        elif feature_type == 'res5c':
            # drop avgpool and fc: output of the last residual stage
            model = torch.nn.Sequential(*list(cnn.children())[:-2])
        elif feature_type == 'res4c':
            # drop the last stage, avgpool and fc, then downsample by 2
            layers = list(cnn.children())[:-3]
            layers.append(nn.AvgPool2d(kernel_size=3, stride=2, padding=1))
            model = torch.nn.Sequential(*layers)
        elif feature_type == 'pool4':
            # same cut as res4c, but pooled globally to 1x1
            layers = list(cnn.children())[:-3]
            layers.append(nn.AdaptiveAvgPool2d((1, 1)))
            model = torch.nn.Sequential(*layers)
        else:
            raise ValueError('unknown feature_type: ' + str(feature_type))
        model = model.cuda()
        model.eval()
        return model


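In practice, it is hard to load trained parameters directly into the feature-extraction model, because the parameter names no longer match. My solution is to load the parameters into the normal, untrimmed model first, and only then build the feature-extraction network from it: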

    from collections import OrderedDict

    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
    model = resnet18(pretrained=None)
    model.fc = nn.Sequential(nn.Linear(512, 256), nn.ReLU())
    model.fc.add_module('linear-71', nn.Sequential(nn.Linear(256, 128), nn.ReLU()))
    model.fc.add_module('linear-72', nn.Sequential(nn.Linear(128, 10)))

    # Load the trained weights into the full model first
    state_dict = torch.load('/root/Desktop/cifar-10/checkpoint/best_89.65_100.0.pth', map_location='cuda')  # use map_location='cpu' on CPU
    new2_state_dict = OrderedDict()
    for k, v in state_dict.items():
        namekey = k[7:]  # strip the leading 'module.' (7 characters) added by nn.DataParallel
        new2_state_dict[namekey] = v
    model.load_state_dict(new2_state_dict)

    # model_list = list(model.children())[:-1] + list(model.fc.children())[:-1]
    # model_list = list(model.children())[:-1]
    model_f_front = torch.nn.Sequential(*list(model.children())[:-1])      # everything before fc
    model_f_behind = torch.nn.Sequential(*list(model.fc.children())[:-2])  # first Linear + ReLU of fc

    model = nn.DataParallel(model, device_ids=[0, 1])
    model_f_front = nn.DataParallel(model_f_front, device_ids=[0, 1])
    model_f_behind = nn.DataParallel(model_f_behind, device_ids=[0, 1])

    model = model.to(device)
    model_f_front = model_f_front.to(device)
    model_f_behind = model_f_behind.to(device)