Huawei Cloud Native: An In-Depth Look at Using KubeEdge and Developing Applications with Kubeflow

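I. Introduction to KubeEdge

① What is KubeEdge?

- KubeEdge is an open source system that extends native containerized application orchestration and management to devices at the edge. It is built on Kubernetes and provides core infrastructure support for networking and applications, deploying applications in the cloud and at the edge and synchronizing metadata between them. KubeEdge also supports the MQTT protocol, which lets developers write custom logic and enables communication with resource-constrained devices at the edge.

- KubeEdge consists of a cloud part and an edge part. With KubeEdge, existing complex machine learning, image recognition, event processing, and other high-level applications can easily be deployed to the edge. Because business logic runs at the edge, large volumes of data can be secured and processed locally. Processing data at the edge significantly improves response times and better protects data privacy.

- KubeEdge is an incubation-level project hosted by the Cloud Native Computing Foundation (CNCF); CNCF has published an incubation announcement for KubeEdge.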

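② Advantages of KubeEdge

- Kubernetes-native support: with KubeEdge, users can orchestrate applications, manage devices, and monitor application and device status on edge nodes, just as they would with a Kubernetes cluster in the cloud.

- Reliable cloud-edge collaboration: messages are delivered reliably, without loss, even over an unstable cloud-edge network.

- Edge autonomy: when the cloud-edge network is unstable, or the edge goes offline or restarts, edge nodes keep operating autonomously and the applications on them keep running normally.

- Edge device management: edge devices are managed through Kubernetes-native APIs, implemented as CRDs.

- Extremely lightweight edge agent: EdgeCore is a very lightweight agent that runs on resource-constrained edge nodes.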

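③ How KubeEdge works

- KubeEdge consists of a cloud part and an edge part:

- Cloud part:

  - CloudHub is a WebSocket server that watches for changes on the cloud side, caches messages, and sends them to EdgeHub;

  - EdgeController is an extended Kubernetes controller that manages edge node and Pod metadata so that data can be delivered to a specific edge node;

  - DeviceController is an extended Kubernetes controller that manages edge devices and keeps device metadata and device status synchronized between cloud and edge.

- Edge part:

  - EdgeHub is a WebSocket client that interacts with the cloud service for edge computing (for example, the Edge Controller in the KubeEdge architecture diagram). It syncs cloud-side resource updates to the edge and reports edge host and device status changes to the cloud;

  - Edged is an agent running on edge nodes that manages containerized applications;

  - EventBus is an MQTT client that interacts with an MQTT server (mosquitto) and offers publish and subscribe capabilities to other components;

  - ServiceBus is an HTTP client running at the edge. It accepts requests from cloud services and interacts with HTTP servers running at the edge, which lets cloud services reach edge HTTP servers over HTTP;

  - DeviceTwin stores device status and syncs it to the cloud; it also provides query interfaces for applications;

  - MetaManager is the message processor between Edged and EdgeHub. It also stores metadata in, and retrieves it from, a lightweight database (SQLite).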

④ Upgrading KubeEdge

- Back up the edgecore database on every edge node:

$ mkdir -p /tmp/kubeedge_backup

$ cp /var/lib/kubeedge/edgecore.db /tmp/kubeedge_backup/

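- You can keep the old configuration files to preserve custom changes as needed. After the upgrade some options may have been removed and others added, so do not use the old configuration files directly.

- If you upgrade from 1.3 to 1.4, note that the device API moves from v1alpha1 to v1alpha2. You need to install the Device v1alpha2 and DeviceModel v1alpha2 CRDs and manually convert the existing custom resources from v1alpha1 to v1alpha2. In case a rollback is needed, it is recommended to keep the v1alpha1 CRDs and custom resources in the cluster or export them somewhere.

- Stop the edgecore processes one by one. Once all edgecore processes are confirmed stopped, stop cloudcore. How to stop them depends on the deployment method:

  - Binary or "keadm" deployment: use kill;

  - "systemd" deployment: use systemctl.

- Clean up: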

$ rm -rf /var/lib/kubeedge /etc/kubeedge

- Restore the database on every edge node:

$ mkdir -p /var/lib/kubeedge

$ mv /tmp/kubeedge_backup/edgecore.db /var/lib/kubeedge/
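The backup and restore steps above can be wrapped in two small functions so that a missing database or backup is caught before anything is deleted. This is only a sketch: the function names and the two environment variables are hypothetical, and the defaults are simply the paths used in the steps above.

```shell
# Hypothetical helpers; the defaults are the paths used in the steps above.
KUBEEDGE_DATA_DIR="${KUBEEDGE_DATA_DIR:-/var/lib/kubeedge}"
KUBEEDGE_BACKUP_DIR="${KUBEEDGE_BACKUP_DIR:-/tmp/kubeedge_backup}"

# Copy edgecore.db into the backup directory; fail if the database is missing.
backup_edgecore_db() {
    [ -f "$KUBEEDGE_DATA_DIR/edgecore.db" ] || {
        echo "no edgecore.db in $KUBEEDGE_DATA_DIR" >&2
        return 1
    }
    mkdir -p "$KUBEEDGE_BACKUP_DIR" &&
        cp "$KUBEEDGE_DATA_DIR/edgecore.db" "$KUBEEDGE_BACKUP_DIR/"
}

# Move the backed-up database back; refuse to run when no backup exists.
restore_edgecore_db() {
    [ -f "$KUBEEDGE_BACKUP_DIR/edgecore.db" ] || {
        echo "no backup in $KUBEEDGE_BACKUP_DIR" >&2
        return 1
    }
    mkdir -p "$KUBEEDGE_DATA_DIR" &&
        mv "$KUBEEDGE_BACKUP_DIR/edgecore.db" "$KUBEEDGE_DATA_DIR/"
}
```

Run `backup_edgecore_db` before stopping edgecore and `restore_edgecore_db` after the cleanup step; checking the return code of the first call before running `rm -rf` guards against deleting the only copy of the database.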

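II. Deploying KubeEdge

① Deploying with Keadm

- Keadm installs the cloud and edge components of KubeEdge; it is not responsible for installing or running K8s. See Kubernetes-compatibility to check compatibility and decide which Kubernetes version to install.

- Keadm currently supports Ubuntu and CentOS; RaspberryPi support is in progress. Running it requires superuser (root) privileges.

(A) Set up the cloud side (KubeEdge master node)

- keadm init:

  - keadm init installs cloudcore, generates the certificates, and installs the CRDs (by default, edge nodes need access to ports 10000 and 10002 on cloudcore). It also provides a command-line flag for choosing a specific version.

  - At least one of kubeconfig or master must be configured correctly so that it can be used to verify the version and other information of the k8s cluster.

  - Make sure edge nodes can reach the cloud node through its local IP; otherwise, specify the cloud node's public IP with the --advertise-address flag.

  - --advertise-address (available only since 1.3) is the address exposed by the cloud side (it is added to the SANs of the CloudCore certificate); the default value is the local IP.

  - keadm init deploys cloudcore as a system service from binaries; for a containerized deployment, see keadm beta init.

  - Run keadm init as follows (the --advertise-address flag only works since the 1.3 release):

# keadm init --advertise-address="THE-EXPOSED-IP"

  - Output: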

Kubernetes version verification passed, KubeEdge installation will start...

...

KubeEdge cloudcore is running, For logs visit: /var/log/kubeedge/cloudcore.log

- keadm beta init:

  - The cloud components can now also be installed with keadm beta init:

# keadm beta init --advertise-address="THE-EXPOSED-IP" --set cloudcore-tag=v1.9.0 --kube-config=/root/.kube/config

  - --external-helm-root can additionally install external helm chart components such as edgemesh:

# keadm beta init --set server.advertiseAddress="THE-EXPOSED-IP" --set server.nodeName=allinone --kube-config=/root/.kube/config --force --external-helm-root=/root/go/src/github.com/edgemesh/build/helm --profile=edgemesh

- keadm beta manifest generate quickly renders the desired manifests and prints them to the terminal (the example redirects them to a file):

# keadm beta manifest generate --advertise-address="THE-EXPOSED-IP" --kube-config=/root/.kube/config > kubeedge-cloudcore.yaml

(B) Set up the edge side (KubeEdge worker node)

- Get a token from the cloud side: running keadm gettoken on the cloud side returns the token that is used when joining edge nodes:

# keadm gettoken

27a37ef16159f7d3be8fae95d588b79b3adaaf92727b72659eb89758c66ffda2.eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJleHAiOjE1OTAyMTYwNzd9.JBj8LLYWXwbbvHKffJBpPd5CyxqapRQYDIXtFZErgYE

- keadm join installs edgecore and mqtt. It also provides a command-line flag for choosing a specific version:

# keadm join --cloudcore-ipport=192.168.20.50:10000 --token=27a37ef16159f7d3be8fae95d588b79b3adaaf92727b72659eb89758c66ffda2.eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJleHAiOjE1OTAyMTYwNzd9.JBj8LLYWXwbbvHKffJBpPd5CyxqapRQYDIXtFZErgYE

- Alternatively, keadm beta join downloads the required resources from an image and joins the node:

# keadm beta join --cloudcore-ipport=192.168.20.50:10000 --token=27a37ef16159f7d3be8fae95d588b79b3adaaf92727b72659eb89758c66ffda2.eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJleHAiOjE1OTAyMTYwNzd9.JBj8LLYWXwbbvHKffJBpPd5CyxqapRQYDIXtFZErgYE

# keadm beta join --cloudcore-ipport=192.168.20.50:10000 --runtimetype remote --remote-runtime-endpoint unix:///run/containerd/containerd.sock --token=27a37ef16159f7d3be8fae95d588b79b3adaaf92727b72659eb89758c66ffda2.eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJleHAiOjE1OTAyMTYwNzd9.JBj8LLYWXwbbvHKffJBpPd5CyxqapRQYDIXtFZErgYE

- Output:

Host has mosquit+ already installed and running. Hence skipping the installation steps !!!

...

KubeEdge edgecore is running, For logs visit: /var/log/kubeedge/edgecore.log

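- Notes:

  - --cloudcore-ipport is mandatory;

  - --token is required if you want certificates to be requested for the edge node automatically;

  - The KubeEdge version must be the same on the cloud side and the edge side;

  - Adding --with-mqtt automatically deploys the mosquitto service on the edge node as a container.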

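(C) Enable the kubectl logs feature

- kubectl logs must be enabled before metrics-server is used. Activate it as follows:

- Make sure you can locate the Kubernetes ca.crt and ca.key files. If the cluster was installed with kubeadm, they are in the /etc/kubernetes/pki/ directory: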

ls /etc/kubernetes/pki/

- Set the CLOUDCOREIPS environment variable to the IP address of cloudcore, or to a VIP (an elastic or virtual IP) if you have a highly available cluster:

export CLOUDCOREIPS="192.168.0.139"

- Check the environment variable with:

echo $CLOUDCOREIPS

- Generate the certificates for CloudStream on the cloud node. The generation script is not in /etc/kubeedge/, so copy it from the repository cloned from GitHub.

- Switch to the root user:

sudo su

- Copy the certificate generation script from the originally cloned repository:

cp $GOPATH/src/github.com/kubeedge/kubeedge/build/tools/certgen.sh /etc/kubeedge/

- Change to the kubeedge directory:

cd /etc/kubeedge/

- Generate the certificates with certgen.sh:

bash /etc/kubeedge/certgen.sh stream

- iptables must be set up on the host. Run the following command as root on every node where an apiserver is deployed (in this case, the master node):

iptables -t nat -A OUTPUT -p tcp --dport 10350 -j DNAT --to $CLOUDCOREIPS:10003
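Running the command above twice appends the same rule twice. A minimal sketch of an idempotent variant follows; it relies on `iptables -C`, which checks whether a rule already exists. The function names are hypothetical, and the ports are the ones from the command above.

```shell
# Print the arguments of the DNAT rule from the step above for one cloudcore IP.
stream_dnat_rule() {
    echo "OUTPUT -p tcp --dport 10350 -j DNAT --to $1:10003"
}

# Append the rule only when `iptables -C` reports that it is not present yet.
ensure_stream_dnat() {
    rule=$(stream_dnat_rule "$1")
    # $rule is intentionally unquoted so that it splits into arguments.
    iptables -t nat -C $rule 2>/dev/null || iptables -t nat -A $rule
}
```

As root, `ensure_stream_dnat "$CLOUDCOREIPS"` then has the same effect as the command above on the first run and does nothing on later runs.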

- If you are not sure whether iptables has already been set up and want to clear all of the tables (a wrongly configured iptables blocks the kubectl logs feature), clean up the iptables rules with:

iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X

- Modify both /etc/kubeedge/config/cloudcore.yaml (on cloudcore) and /etc/kubeedge/config/edgecore.yaml (on edgecore): set cloudStream and edgeStream to enable: true, and change the server IP to the cloudcore IP (the same as $CLOUDCOREIPS). Open the YAML file on cloudcore:

sudo nano /etc/kubeedge/config/cloudcore.yaml

- Set enable: true in the following section:

cloudStream:
  enable: true
  streamPort: 10003
  tlsStreamCAFile: /etc/kubeedge/ca/streamCA.crt
  tlsStreamCertFile: /etc/kubeedge/certs/stream.crt
  tlsStreamPrivateKeyFile: /etc/kubeedge/certs/stream.key
  tlsTunnelCAFile: /etc/kubeedge/ca/rootCA.crt
  tlsTunnelCertFile: /etc/kubeedge/certs/server.crt
  tlsTunnelPrivateKeyFile: /etc/kubeedge/certs/server.key
  tunnelPort: 10004

- Open the YAML file on edgecore:

sudo nano /etc/kubeedge/config/edgecore.yaml

- Modify the following section (enable: true, server: 192.168.0.139:10004):

edgeStream:
  enable: true
  handshakeTimeout: 30
  readDeadline: 15
  server: 192.168.0.139:10004
  tlsTunnelCAFile: /etc/kubeedge/ca/rootCA.crt
  tlsTunnelCertFile: /etc/kubeedge/certs/server.crt
  tlsTunnelPrivateKeyFile: /etc/kubeedge/certs/server.key
  writeDeadline: 15

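- Restart all cloudcore and edgecore instances:

sudo su

# cloudcore running as a process:

pkill cloudcore

nohup cloudcore > cloudcore.log 2>&1 &

# or, cloudcore running as a Kubernetes deployment:

kubectl -n kubeedge rollout restart deployment cloudcore

# edgecore, on each edge node:

systemctl restart edgecore.service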

- To keep kube-proxy from being deployed on edge nodes, there are two options:

// 1. Run `kubectl edit daemonsets.apps -n kube-system kube-proxy` and add the following settings:

affinity:
  nodeAffinity:
    requiredDuringSchedulingIgnoredDuringExecution:
      nodeSelectorTerms:
        - matchExpressions:
            - key: node-role.kubernetes.io/edge
              operator: DoesNotExist

// 2. If you still want to run `kube-proxy`, tell **edgecore** to skip the environment check by adding an env variable to `edgecore.service`:

sudo vi /etc/kubeedge/edgecore.service

// Add the following line to the **edgecore.service** file:

Environment="CHECK_EDGECORE_ENVIRONMENT=false"

// The final file should look like this:

[Unit]

Description=edgecore.service

[Service]

Type=simple

ExecStart=/root/cmd/ke/edgecore --logtostderr=false --log-file=/root/cmd/ke/edgecore.log

Environment="CHECK_EDGECORE_ENVIRONMENT=false"

[Install]

WantedBy=multi-user.target

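(D) Support Metrics-server in the cloud

- This feature reuses the cloudstream and edgestream modules, so all the steps for enabling kubectl logs must be performed as well.

- The kubelet ports on edge nodes and cloud nodes differ, and the current metrics-server release (0.3.x) does not support automatic port identification (a 0.4.0 feature), so for now the image has to be built manually from the master branch.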

- Git clone the latest metrics server repository:

git clone https://github.com/kubernetes-sigs/metrics-server.git

- Go to the metrics server directory:

cd metrics-server

- Build the docker image:

make container

- Check that you have the docker image:

docker images

- Retag the image by its image ID so that it matches the image name in the yaml file:

docker tag a24f71249d69 metrics-server-kubeedge:latest

- Make the master node schedulable with:

kubectl taint nodes --all node-role.kubernetes.io/master-

- Add these settings to the deployment yaml (metrics-server-deployment.yaml), as follows:

volumes:
# mount in tmp so we can safely use from-scratch images and/or read-only containers
- name: tmp-dir
  emptyDir: {}
hostNetwork: true #Add this line to enable hostnetwork mode
containers:
- name: metrics-server
  image: metrics-server-kubeedge:latest #Make sure that the REPOSITORY and TAG are correct
  # Modified args to include --kubelet-insecure-tls for Docker Desktop (don't use this flag with a real k8s cluster!!)
  imagePullPolicy: Never #Make sure that the deployment uses the image you built up
  args:
    - --cert-dir=/tmp
    - --secure-port=4443
    - --v=2
    - --kubelet-insecure-tls
    - --kubelet-preferred-address-types=InternalDNS,InternalIP,ExternalIP,Hostname
    - --kubelet-use-node-status-port #Enable the feature of --kubelet-use-node-status-port for Metrics-server
  ports:
  - name: main-port
    containerPort: 4443
    protocol: TCP

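(E) Reset the KubeEdge master and worker nodes

- keadm reset stops cloudcore and deletes KubeEdge-related resources, such as the kubeedge namespace, from the Kubernetes master. It does not uninstall or remove any of the prerequisites.

- It provides a flag for specifying the kubeconfig path; the default path is /root/.kube/config: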

# keadm reset --kube-config=$HOME/.kube/config

② Deploying from binaries

(A) Set up the cloud side (KubeEdge master node)

- Create the CRDs:

kubectl apply -f https://raw.githubusercontent.com/kubeedge/kubeedge/master/build/crds/devices/devices_v1alpha2_device.yaml

kubectl apply -f https://raw.githubusercontent.com/kubeedge/kubeedge/master/build/crds/devices/devices_v1alpha2_devicemodel.yaml

kubectl apply -f https://raw.githubusercontent.com/kubeedge/kubeedge/master/build/crds/reliablesyncs/cluster_objectsync_v1alpha1.yaml

kubectl apply -f https://raw.githubusercontent.com/kubeedge/kubeedge/master/build/crds/reliablesyncs/objectsync_v1alpha1.yaml

kubectl apply -f https://raw.githubusercontent.com/kubeedge/kubeedge/master/build/crds/router/router_v1_ruleEndpoint.yaml

kubectl apply -f https://raw.githubusercontent.com/kubeedge/kubeedge/master/build/crds/router/router_v1_rule.yaml

- Prepare the configuration file:

# cloudcore --minconfig > cloudcore.yaml

- Run:

# cloudcore --config cloudcore.yaml

(B) Set up the edge side (KubeEdge worker node)

- Generate the configuration file:

# edgecore --minconfig > edgecore.yaml

- Get the token value on the cloud side:

# kubectl get secret -nkubeedge tokensecret -o=jsonpath='{.data.tokendata}' | base64 -d

- Update the token value in the edgecore configuration file, with ${token} set to the value obtained in the previous step:

# sed -i -e "s|token: .*|token: ${token}|g" edgecore.yaml
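The two steps above can be combined so that the token never has to be copied by hand. A minimal sketch, in which `set_edge_token` is a hypothetical helper around the same sed expression and GNU sed's `-i` option is assumed:

```shell
# Replace the "token:" value in an edgecore config file.
# $1: path to edgecore.yaml, $2: token string
set_edge_token() {
    # '|' is the sed delimiter, as in the command above; this assumes the
    # token itself contains neither '|' nor '&'.
    sed -i -e "s|token: .*|token: $2|g" "$1"
}
```

On a machine that can reach both the cluster and the configuration file, usage could look like `token=$(kubectl get secret -nkubeedge tokensecret -o=jsonpath='{.data.tokendata}' | base64 -d)` followed by `set_edge_token edgecore.yaml "$token"`.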

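- If you want to run cloudcore and edgecore on the same host, first run:

# export CHECK_EDGECORE_ENVIRONMENT="false"

- Run edgecore:

# edgecore --config edgecore.yaml

- Run edgecore -h for help and add options as needed.

(C) Limitations

- Superuser (root) privileges are required to run.

- Use the binary deployment of KubeEdge for testing only; never use it in a production environment.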

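III. Configuring CloudCore and EdgeCore

① Configure the cloud side (KubeEdge master node)

(A) Modify the configuration file

- Cloudcore requires changes to the cloudcore.yaml configuration file. To create and set up that file, first create the /etc/kubeedge/config folder: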

# the default configuration file path is '/etc/kubeedge/config/cloudcore.yaml'

# also you can specify it anywhere with '--config'

mkdir -p /etc/kubeedge/config/

- Get a minimal configuration template with ~/kubeedge/cloudcore --minconfig:

~/kubeedge/cloudcore --minconfig > /etc/kubeedge/config/cloudcore.yaml

- Or get a full configuration template with ~/kubeedge/cloudcore --defaultconfig:

~/kubeedge/cloudcore --defaultconfig > /etc/kubeedge/config/cloudcore.yaml

- Edit the configuration file:

vim /etc/kubeedge/config/cloudcore.yaml

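(B) Modify cloudcore.yaml

- Set either kubeAPIConfig.kubeConfig or kubeAPIConfig.master. kubeConfig is the path to your kubeconfig file, which may be:

/root/.kube/config

// or

/home//.kube/config

- Which of the two applies depends on where Kubernetes was set up with the following steps:

To start using your cluster, you need to run the following as a regular user: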

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

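- By default, cloudcore connects to the Kubernetes apiserver over https. If both master and kubeConfig are configured, master overrides the corresponding value in the kubeconfig.

- In advertiseAddress, configure every CloudCore IP address that is exposed to edge nodes (a floating IP, for example); these addresses are added to the SANs of the CloudCore certificate.

modules:
  cloudHub:
    advertiseAddress:
    - 10.1.11.85

(C) Add edge nodes (KubeEdge worker nodes) on the cloud side (KubeEdge master node)

- If modules.edged.registerNode in the edgecore configuration is set to true, the edge node registers automatically:

modules:
  edged:
    registerNode: true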

- For manual registration, copy $GOPATH/src/github.com/kubeedge/kubeedge/build/node.json to your working directory and change metadata.name to the name of the edge node:

mkdir -p ~/kubeedge/yaml

cp $GOPATH/src/github.com/kubeedge/kubeedge/build/node.json ~/kubeedge/yaml

② Configure the edge side (KubeEdge worker node)

(A) Create and set up the Edgecore configuration file

- Create the /etc/kubeedge/config folder:

# the default configuration file path is '/etc/kubeedge/config/edgecore.yaml'

# also you can specify it anywhere with '--config'

mkdir -p /etc/kubeedge/config/

- Create a minimal configuration with ~/kubeedge/edgecore --minconfig:

~/kubeedge/edgecore --minconfig > /etc/kubeedge/config/edgecore.yaml

- Or create a full configuration with ~/kubeedge/edgecore --defaultconfig:

~/kubeedge/edgecore --defaultconfig > /etc/kubeedge/config/edgecore.yaml

- Edit the configuration file:

vim /etc/kubeedge/config/edgecore.yaml

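(B) Modify edgecore.yaml

- Check modules.edged.podSandboxImage, which must match the architecture of the machine. To inspect your machine, run the following command and then pick the matching image: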

getconf LONG_BIT

+ `kubeedge/pause-arm:3.1` for arm arch

+ `kubeedge/pause-arm64:3.1` for arm64 arch

+ `kubeedge/pause:3.1` for x86 arch
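Picking the image can also be scripted. The sketch below maps the machine name printed by `uname -m` to the three images listed above. Both the function name and the mapping of `uname -m` values to "arm", "arm64", and "x86" are assumptions made for illustration.

```shell
# Map a machine name as printed by `uname -m` to one of the pause images above.
pause_image_for() {
    case "$1" in
        x86_64|amd64)  echo "kubeedge/pause:3.1" ;;
        aarch64|arm64) echo "kubeedge/pause-arm64:3.1" ;;
        arm*)          echo "kubeedge/pause-arm:3.1" ;;
        *)             echo "unsupported architecture: $1" >&2; return 1 ;;
    esac
}
```

`pause_image_for "$(uname -m)"` prints the value to place in modules.edged.podSandboxImage. The 64-bit ARM patterns come before `arm*` on purpose, so that `arm64` is not matched as 32-bit ARM.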

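- KubeEdge versions before v1.3: check that the certificate files referenced by modules.edgehub.tlsCaFile, modules.edgehub.tlsCertFile, and modules.edgehub.tlsPrivateKeyFile exist. If they do not, copy them from the cloud side.

- KubeEdge v1.3 and later: simply skip the certificate file checks above. However, if you configure the Edgecore certificates manually, you must check that the certificate paths are correct.

- Update the IP address and port of KubeEdge CloudCore in the modules.edgehub.websocket.server and modules.edgehub.quic.server fields; they must point to the cloudcore IP address.

- Set the desired container runtime to either docker or remote (remote covers all CRI-based runtimes, including containerd). If this parameter is not specified, docker is used by default: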

runtimeType: docker

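// or

runtimeType: remote

- If the runtime type is remote, follow the KubeEdge CRI configuration guide to set up KubeEdge with a remote/CRI-based runtime.

- Configure the IP address and port of KubeEdge cloudcore in modules.edgehub.httpServer, which is used to apply for certificates, for example:

modules:
  edgeHub:
    httpServer: https://10.1.11.85:10002

- Configure the token:

kubectl get secret tokensecret -n kubeedge -oyaml

- The output looks like this: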

apiVersion: v1
data:
  tokendata: ODEzNTZjY2MwODIzMmIxMTU0Y2ExYmI5MmRlZjY4YWQwMGQ3ZDcwOTIzYmU3YjcyZWZmOTVlMTdiZTk5MzdkNS5leUpoYkdjaU9pSklVekkxTmlJc0luUjVjQ0k2SWtwWFZDSjkuZXlKbGVIQWlPakUxT0RreE5qRTVPRGw5LmpxNENXNk1WNHlUVkpVOWdBUzFqNkRCdE5qeVhQT3gxOHF5RnFfOWQ4WFkK
kind: Secret
metadata:
  creationTimestamp: "2020-05-10T01:53:10Z"
  name: tokensecret
  namespace: kubeedge
  resourceVersion: "19124039"
  selfLink: /api/v1/namespaces/kubeedge/secrets/tokensecret
  uid: 48429ce1-2d5a-4f0e-9ff1-f0f1455a12b4
type: Opaque

- Decode the tokendata field with base64:

echo ODEzNTZjY2MwODIzMmIxMTU0Y2ExYmI5MmRlZjY4YWQwMGQ3ZDcwOTIzYmU3YjcyZWZmOTVlMTdiZTk5MzdkNS5leUpoYkdjaU9pSklVekkxTmlJc0luUjVjQ0k2SWtwWFZDSjkuZXlKbGVIQWlPakUxT0RreE5qRTVPRGw5LmpxNENXNk1WNHlUVkpVOWdBUzFqNkRCdE5qeVhQT3gxOHF5RnFfOWQ4WFkK |base64 -d

# then we get:

81356ccc08232b1154ca1bb92def68ad00d7d70923be7b72eff95e17be9937d5.eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJleHAiOjE1ODkxNjE5ODl9.jq4CW6MV4yTVJU9gAS1j6DBtNjyXPOx18qyFq_9d8XY

- Copy the decoded string into edgecore.yaml, as follows:

modules:
  edgeHub:
    token: 81356ccc08232b1154ca1bb92def68ad00d7d70923be7b72eff95e17be9937d5.eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJleHAiOjE1ODkxNjE5ODl9.jq4CW6MV4yTVJU9gAS1j6DBtNjyXPOx18qyFq_9d8XY
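Judging from the sample token above, the part after the first dot looks like a JWT whose payload carries an `exp` claim, so an expired token can be spotted before it is pasted into edgecore.yaml. The sketch below rests on that observation only, not on a documented token format. `token_expiry` is a hypothetical helper, and GNU `base64 -d` is assumed.

```shell
# Print the "exp" claim (Unix seconds) embedded in a join token.
token_expiry() {
    # Field 3 of the dot-separated token is the base64url-encoded JWT payload.
    payload=$(printf '%s' "$1" | cut -d. -f3 | tr '_-' '/+')
    # Restore the '=' padding that base64url encoding omits.
    case $(( ${#payload} % 4 )) in
        2) payload="$payload==" ;;
        3) payload="$payload=" ;;
    esac
    printf '%s' "$payload" | base64 -d | sed -n 's/.*"exp":\([0-9]*\).*/\1/p'
}
```

For the token shown above, `token_expiry` prints 1589161989; comparing that value with `date +%s` tells whether the token has expired.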

IV. Setting Up Different Container Runtimes with CRI

① containerd

- Docker 18.09 and later ship with containerd, so there is no need to install it manually. If containerd is not present, install it with:

# Install containerd

apt-get update && apt-get install -y containerd.io

# Configure containerd

mkdir -p /etc/containerd

containerd config default > /etc/containerd/config.toml

# Restart containerd

systemctl restart containerd

- With the containerd shipped by Docker, the cri plugin is disabled by default. Update the containerd configuration so that KubeEdge can use containerd as its runtime:

# Configure containerd

mkdir -p /etc/containerd

containerd config default > /etc/containerd/config.toml

- Update the edgecore configuration file edgecore.yaml, specifying the following parameters for containerd as the runtime:

remoteRuntimeEndpoint: unix:///var/run/containerd/containerd.sock

remoteImageEndpoint: unix:///var/run/containerd/containerd.sock

runtimeRequestTimeout: 2

podSandboxImage: k8s.gcr.io/pause:3.2

runtimeType: remote

- By default, the cgroup driver of cri is configured as cgroupfs. If that is not the case, switch to systemd manually in edgecore.yaml:

modules:
  edged:
    cgroupDriver: systemd

- Set systemd_cgroup to true in the containerd configuration file (/etc/containerd/config.toml), then restart containerd:

# /etc/containerd/config.toml

systemd_cgroup = true

# Restart containerd

systemctl restart containerd
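The two settings above have to agree, and a mismatch is easy to overlook. The following sketch compares them with plain text matching. It is a heuristic rather than a YAML or TOML parser, `cgroup_drivers_match` is a hypothetical name, and it only distinguishes systemd from everything else.

```shell
# Succeed when edgecore.yaml and containerd's config.toml agree on systemd.
# $1: path to edgecore.yaml, $2: path to containerd's config.toml
cgroup_drivers_match() {
    edge_systemd=false
    ctr_systemd=false
    grep -Eq '^[[:space:]]*cgroupDriver:[[:space:]]*systemd' "$1" && edge_systemd=true
    grep -Eq '^[[:space:]]*systemd_cgroup[[:space:]]*=[[:space:]]*true' "$2" && ctr_systemd=true
    [ "$edge_systemd" = "$ctr_systemd" ]
}
```

A call such as `cgroup_drivers_match /etc/kubeedge/config/edgecore.yaml /etc/containerd/config.toml || echo "cgroup driver mismatch"` can be run before restarting the services.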

- Create the nginx application and check that its container is created with containerd on the edge side:

kubectl apply -f $GOPATH/src/github.com/kubeedge/kubeedge/build/deployment.yaml

deployment.apps/nginx-deployment created

ctr --namespace=k8s.io container ls

CONTAINER IMAGE RUNTIME

41c1a07fe7bf7425094a9b3be285c312127961c158f30fc308fd6a3b7376eab2 docker.io/library/nginx:1.15.12 io.containerd.runtime.v1.linux

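- cri does not support multi-tenancy while containerd does, so by default the container namespace has to be set to "k8s.io".

② CRI-O

- Follow the CRI-O installation guide to set up CRI-O. If the edge node runs on the ARM platform and the distribution is ubuntu18.04, you may need to build the binaries from source and install them, because the Kubic repository has no CRI-O package for this combination: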

git clone https://github.com/cri-o/cri-o

cd cri-o

make

sudo make install

# generate and install configuration files

sudo make install.config

- Set up CNI networking by following the CNI setup guide, then update the edgecore configuration file, specifying the following parameters for the CRI-O-based runtime:

remoteRuntimeEndpoint: unix:///var/run/crio/crio.sock

remoteImageEndpoint: unix:///var/run/crio/crio.sock

runtimeRequestTimeout: 2

podSandboxImage: k8s.gcr.io/pause:3.2

runtimeType: remote

- By default, CRI-O uses cgroupfs as its cgroup manager. To switch to systemd, update the CRI-O configuration file (/etc/crio/crio.conf.d/00-default.conf):

# Cgroup management implementation used for the runtime.

cgroup_manager = "systemd"

- If you use the pause image on the ARM platform and it is not a multi-arch image, update the pause image. To configure it, update the CRI-O configuration file:

pause_image = "k8s.gcr.io/pause-arm64:3.1"

- Also update the cgroup driver in edgecore.yaml:

modules:
  edged:
    cgroupDriver: systemd

- Start the CRI-O and edgecore services (assuming both are managed by systemd):

sudo systemctl daemon-reload

sudo systemctl enable crio

sudo systemctl start crio

sudo systemctl start edgecore

- Create the application and check that the container is created with CRI-O on the edge side:

kubectl apply -f $GOPATH/src/github.com/kubeedge/kubeedge/build/deployment.yaml

deployment.apps/nginx-deployment created

# crictl ps

CONTAINER ID IMAGE CREATED STATE NAME ATTEMPT POD ID

41c1a07fe7bf7 f6d22dec9931b 2 days ago Running nginx 0 51f727498b06f

③ Kata Containers

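- Kata Containers is a container runtime designed to address the security challenges of multi-tenant, untrusted cloud environments. Multi-tenancy support is still on KubeEdge's backlog, however. If your downstream customized KubeEdge already supports multi-tenancy, Kata Containers is a good choice for a lightweight and secure container runtime.

- Follow the installation guide to install and configure containerd and Kata Containers. If "kata-runtime" is installed, run the following command to check whether the host system can run and create a Kata container:

kata-runtime kata-check

- RuntimeClass is a feature for selecting the container runtime configuration used to run a Pod's containers; containerd has supported it since v1.2.0. If your containerd version is later than v1.2.0, there are two ways to configure containerd to use Kata Containers:

  - Kata Containers as a RuntimeClass;

  - Kata Containers as the runtime for untrusted workloads.

- Assuming you have configured Kata Containers as the runtime for untrusted workloads, you can verify that it works on the edge node by running: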

cat nginx-untrusted.yaml

apiVersion: v1
kind: Pod
metadata:
  name: nginx-untrusted
  annotations:
    io.kubernetes.cri.untrusted-workload: "true"
spec:
  containers:
  - name: nginx
    image: nginx

kubectl create -f nginx-untrusted.yaml

# verify the container is running with qemu hypervisor on edge side,

ps aux | grep qemu

root 3941 3.0 1.0 2971576 174648 ? Sl 17:38 0:02 /usr/bin/qemu-system-aarch64

crictl pods

POD ID CREATED STATE NAME NAMESPACE ATTEMPT

b1c0911644cb9 About a minute ago Ready nginx-untrusted default 0

④ Virtlet

- Download the CNI plugin release and extract it:

$ wget https://github.com/containernetworking/plugins/releases/download/v0.8.2/cni-plugins-linux-amd64-v0.8.2.tgz

# Extract the tarball

$ mkdir cni

$ tar -zxvf cni-plugins-linux-amd64-v0.8.2.tgz -C cni

$ mkdir -p /opt/cni/bin

$ cp ./cni/* /opt/cni/bin/

- Configure the CNI plugin:

$ mkdir -p /etc/cni/net.d/

$ cat >/etc/cni/net.d/bridge.conf <

- Set up the VM runtime: use the hack/setup-vmruntime.sh script, which uses the Arktos Runtime release package to start three containers:

vmruntime_vms

vmruntime_libvirt

vmruntime_virtlet

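V. Running a Typical AI Training Job with Kubeflow and Volcano

① The origins of Kubeflow and the problems of running AI computing jobs

- Kubernetes has become the de facto standard for orchestrating and managing cloud native applications, and more and more applications are migrating to it. Artificial intelligence and machine learning inherently involve a large number of compute-intensive tasks, so developers are eager to build AI platforms on Kubernetes and take full advantage of its resource management, application orchestration, and operations monitoring capabilities.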

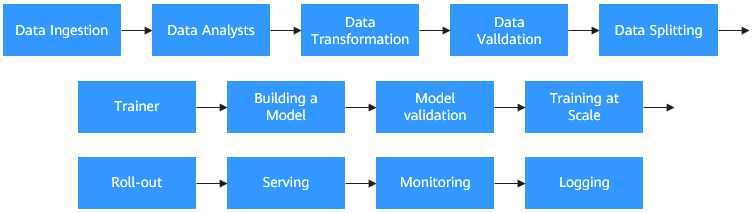

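- However, building an end-to-end AI computing platform on Kubernetes is a complex and tedious process with many stages to handle. Besides the familiar model training stage, it includes data collection, preprocessing, resource management, feature extraction, data verification, model management, model release, and monitoring. An AI algorithm engineer who only wants to train a model has to build an entire AI computing platform first, which is time-consuming, laborious, and demands a great deal of accumulated knowledge.

- Kubeflow was born in 2017. Built on containers and Kubernetes, the Kubeflow project aims to give data scientists, machine learning engineers, and system operators a platform for agile deployment, development, training, release, and management of machine learning workloads. It leverages cloud native technology to let users deploy, use, and manage today's most popular machine learning software more quickly and conveniently.

- Kubeflow 1.0 has been released. It covers four stages (development, building, training, and deployment) and supports the complete machine learning and deep learning workflow of enterprise users.

- With Kubeflow 1.0, users can develop models in Jupyter, then use tools such as fairing (an SDK) to build containers and create Kubernetes resources to train their models. Once training is complete, users can use KFServing to create and deploy a server for inference. Combined with pipelines, this enables automated, agile construction of end-to-end machine learning systems, that is, DevOps for AI.

② Problems with Kubernetes

- With Kubeflow in place, is machine learning and deep learning on Kubernetes all plain sailing? The answer is no. Kubeflow relies on the default Kubernetes scheduler, which was originally designed mainly for long-running services and still falls short in batch and elastic scheduling for AI, big data, and similar workloads.

- The main problems are: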

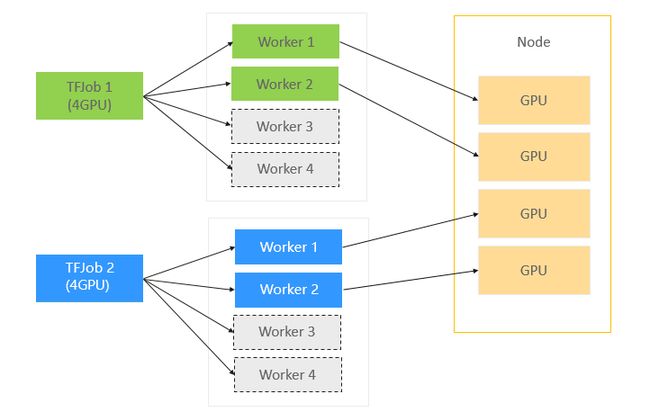

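- Resource contention: a TensorFlow job contains two different roles, Ps and Worker, and the Pods of both roles must work together to complete the job; if only one role's Pods are running, the job cannot execute properly. The default scheduler schedules Pods one by one and is unaware of the Ps and Worker roles of a Kubeflow TFJob. When the cluster is heavily loaded (short on resources), several jobs may each be allocated part of the resources and run only some of their Pods, yet none of them can run to completion, which wastes resources. For example, suppose the cluster has 4 GPUs, and TFJob1 and TFJob2 each have 4 Workers. TFJob1 and TFJob2 are each allocated 2 GPUs, but each needs 4 GPUs to run, so the two jobs wait for each other to release resources. This deadlock wastes GPU resources: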

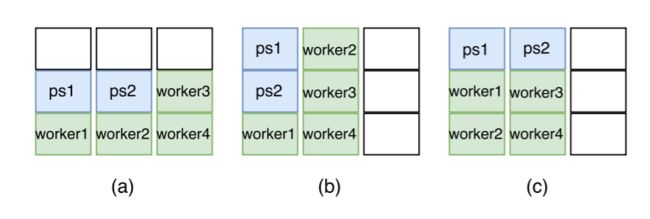

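- Affinity scheduling: in distributed training, Ps and Worker exchange data very frequently, so the bandwidth between them directly affects training efficiency. The default Kubernetes scheduler does not take this logical relationship into account; Ps and Worker are scheduled randomly. With 2 TFJobs (1 Ps + 2 Workers each), the default scheduler may produce any of three placements, (a), (b), or (c), of which (c) is the one we want most: in (c), Ps and Worker can use the local network to improve transfer efficiency and shorten training time.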
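The GPU deadlock from the resource contention example can be reproduced with a toy model. The two functions below are illustrative only and implement neither the default Kubernetes scheduler nor Volcano. They contrast handing out GPUs one Pod at a time with an all-or-nothing (gang) allocation, for two jobs that need the same number of GPUs.

```shell
# Toy model only: two jobs that each need $2 GPUs compete for $1 GPUs.

# Pod-by-pod placement: GPUs are handed out one at a time, alternating
# between the jobs, until none are left. Prints "<job1 GPUs> <job2 GPUs>".
allocate_pod_by_pod() {
    free=$1 need=$2 job1=0 job2=0
    while [ "$free" -gt 0 ] && { [ "$job1" -lt "$need" ] || [ "$job2" -lt "$need" ]; }; do
        if [ "$job1" -lt "$need" ]; then
            job1=$((job1 + 1)); free=$((free - 1))
        fi
        if [ "$free" -gt 0 ] && [ "$job2" -lt "$need" ]; then
            job2=$((job2 + 1)); free=$((free - 1))
        fi
    done
    echo "$job1 $job2"
}

# Gang placement: a job receives GPUs only if all of its Workers fit at once.
allocate_gang() {
    free=$1 need=$2 job1=0 job2=0
    if [ "$free" -ge "$need" ]; then job1=$need; free=$((free - need)); fi
    if [ "$free" -ge "$need" ]; then job2=$need; free=$((free - need)); fi
    echo "$job1 $job2"
}
```

With 4 GPUs and 4 Workers per job, `allocate_pod_by_pod 4 4` prints `2 2` (neither job can start), while `allocate_gang 4 4` prints `4 0` (the first job runs to completion and the second waits without holding any GPU).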

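③ The Volcano batch scheduling system: a powerful tool for accelerating AI computing

- Volcano is an enhanced batch processing system for high-performance computing workloads, built on Kubernetes. As a platform for high-performance computing scenarios, it fills in basic capabilities that Kubernetes lacks for machine learning, deep learning, HPC, and big data computing, including gang scheduling, job queue management, task-topology, and GPU affinity scheduling. Volcano also enhances native Kubernetes capabilities in areas such as batch creation and lifecycle management of computing jobs, fair-share, and binpack scheduling. In this way Volcano addresses the problems of Kubeflow distributed training described above.

④ Volcano on Huawei Cloud: running a typical distributed AI training job

- Together, the open source projects Kubeflow and Volcano greatly simplify and accelerate AI computing on Kubernetes. The combination has become the choice of a growing number of users and is used in production. Volcano is already used in Huawei Cloud's CCE and CCI products and in its container batch computing solution. Volcano will continue to evolve: optimizing its algorithms, strengthening its scheduling capabilities (with intelligent scheduling, for example), and adding features such as GPU Share for inference scenarios to further improve the efficiency of kubeflow batch training and inference.

- Log in to the CCE console and create a CCE cluster. For details, see Buying a CCE Cluster.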

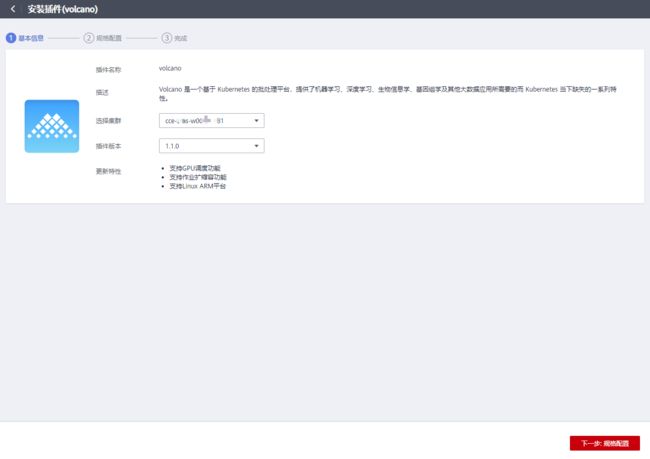

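- Deploy the Volcano environment on the CCE cluster: click "Add-on Management" in the navigation tree on the left, then click "Install Add-on" under the Volcano add-on on the "Add-on Marketplace" tab. In the "Basic Information" step of the installation page, select the target cluster and the Volcano add-on version, and click "Next: Specification Configuration".

- The Volcano add-on has no configurable parameters; click "Install" and wait for the installation to complete.

- Install the kfctl tool and set the environment variables as follows: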

export KF_NAME=

export BASE_DIR=

export KF_DIR=${BASE_DIR}/${KF_NAME}

export CONFIG_URI="https://raw.githubusercontent.com/kubeflow/manifests/v1.0-branch/kfdef/kfctl_k8s_istio.v1.0.2.yaml"

- Install kfctl: download kfctl, then unpack and install it:

tar -xvf kfctl_v1.0.2_.tar.gz

chmod +x kfctl

mv kfctl /usr/local/bin/

- Deploy kubeflow:

mkdir -p ${KF_DIR}

cd ${KF_DIR}

kfctl apply -V -f ${CONFIG_URI}

- Download kubeflow/examples locally and choose the guide that matches your environment:

yum install git

git clone https://github.com/kubeflow/examples.git

- Install python3:

wget https://www.python.org/ftp/python/3.6.8/Python-3.6.8.tgz

tar -zxvf Python-3.6.8.tgz

cd Python-3.6.8

./configure

make

make install

- After installation, run the following commands to check that it succeeded:

python3 -V

pip3 -V

- Install jupyter notebook and start it:

pip3 install jupyter notebook

jupyter notebook --allow-root

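- Set up a tunnel in Putty to connect to the notebook remotely. Once connected, enter localhost:8000 in the browser and log in to the notebook.

- Following the jupyter guide, create a distributed training job. Simply setting the schedulerName field to "volcano" enables the Volcano scheduler: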

kind: TFJob
metadata:
  name: {train_name}
spec:
  schedulerName: volcano
  tfReplicaSpecs:
    Ps:
      replicas: {num_ps}
      template:
        metadata:
          annotations:
            sidecar.istio.io/inject: "false"
        spec:
          serviceAccount: default-editor
          containers:
          - name: tensorflow
            command:
            ...
            env:
            ...
            image: {image}
            workingDir: /opt
          restartPolicy: OnFailure
    Worker:
      replicas: 1
      template:
        metadata:
          annotations:
            sidecar.istio.io/inject: "false"
        spec:
          serviceAccount: default-editor
          containers:
          - name: tensorflow
            command:
            ...
            env:
            ...
            image: {image}
            workingDir: /opt
          restartPolicy: OnFailure

- Submit the job to start training:

kubectl apply -f mnist.yaml
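Since the whole setup hinges on one field, it can be worth checking the manifest before submitting it. The sketch below is a plain text match rather than a YAML parser, and `uses_volcano` is a hypothetical helper name.

```shell
# Succeed when the manifest in $1 sets schedulerName to volcano.
uses_volcano() {
    grep -Eq '^[[:space:]]*schedulerName:[[:space:]]*"?volcano"?[[:space:]]*$' "$1"
}
```

For example, `uses_volcano mnist.yaml && kubectl apply -f mnist.yaml` submits the job only when the Volcano scheduler is selected.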

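- Wait for the training job to finish; the training results can be viewed in the Kubeflow UI. This completes a simple distributed training job. Kubeflow simplifies job configuration through TFJob.

- With a single added line of configuration, Volcano lets users enable features such as gang scheduling and task-topology to resolve problems such as deadlock and affinity, which can effectively shorten overall training time in large-scale distributed training.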