【项目一、xxx病虫害检测项目】1、SSD原理和源码分析

目录

- 前言

- 一、SSD backbone

-

- 1.1、总体结构

- 1.2、修改vgg

- 1.3、额外添加层

- 1.4、需要注意的点

- 二、SSD head

-

- 2.1、检测头predictor

- 2.2、生成default box

- 2.3、计算分类回归损失

-

- 2.3.1、正负样本匹配:MaxIOUAssign

- 2.3.2、平衡正负样本:在线困难样本挖掘

- 2.5、后处理

-

- 2.5.1、回归参数编解码:

- 2.5.2、NMS

- 三、数据增强

- Reference

前言

马上要找工作了,想总结下自己做过的几个小项目。

先总结下实验室之前的一个病虫害检测相关的项目。选用的baseline是SSD,代码是在这个仓库的基础上改的 lufficc/SSD.这个仓库写的ssd还是很牛的,github有1.3k个star。

选择这个版本的代码,主要有两个原因:

- 它的backbone代码是支持直接加载pytorch官方预训练权重的,所以很方便我做实验

- 代码高度模块化,类似mmdetection和Detectron2,写的很高级,不过对初学者不是很友好,但是很能提高工程代码能力。

原仓库主要实现了SSD-VGG16、SSD-Mobilenet-V2、SSD-Mobilenet-V3、SSD-EfficientNet等网络,在我数据集上几个改进版本都还不如SSD-VGG16效果好,所以我在原仓库的基础上进行了自己的实验,加了一些也不算很高级的trick吧,主要是在我的数据集上确实好使,疯狂调参,哈哈哈。

后续代码也会上传到github上的。

同系列讲解:

【项目一、xxx病虫害检测项目】2、网络结构尝试改进:Resnet50、SE、CBAM、Feature Fusion.

【项目一、xxx病虫害检测项目】3、损失函数尝试:Focal loss.

第一篇,先介绍下SSD的原理和源码吧。

代码已全部上传GitHub: HuKai-cv/FFSSD-ResNet..

一、SSD backbone

1.1、总体结构

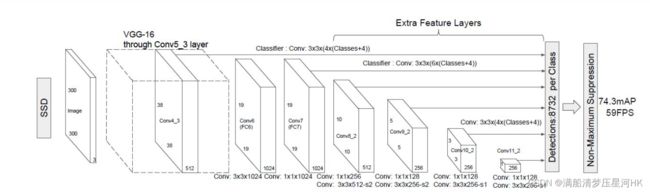

总体结构:

backbone主要由两个部分构成:第一个部分是原先的vgg结构(conv1_1->conv_53)和替换vgg的部分(pool5->conv7);第二个部分是一些额外的添加层结构:conv8_1->conv11_2。总共有6个预测特征层,分别是Conv4_3、Conv7、Conv8_2、Conv9_2、Conv10_2、Conv11_2,前面两个是backbone生成的,后面4个是额外添加层生成的。

对应代码在ssd/modeling/backbone/vgg.py中:

@registry.BACKBONES.register('vgg')

def vgg(cfg, pretrained=True):

"""搭建vgg模型+加载预训练权重

Args:

cfg: vgg配置文件

pretrained: 是否加载预训练模型

Returns:

返回加载完预训练权重的vgg模型

"""

model = VGG(cfg) # 搭建vgg模型

if pretrained: # 加载预训练权重

# 这里默认会从amazonaws平台下载vgg模型预训练权重文件

model.init_from_pretrain(load_state_dict_from_url(model_urls['vgg']))

return model

vgg_base = {

'300': [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 'C', 512, 512, 512, 'M',

512, 512, 512],

'512': [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 'C', 512, 512, 512, 'M',

512, 512, 512],

}

extras_base = {

'300': [256, 'S', 512, 128, 'S', 256, 128, 256, 128, 256],

'512': [256, 'S', 512, 128, 'S', 256, 128, 'S', 256, 128, 'S', 256],

}

class VGG(nn.Module):

def __init__(self, cfg):

super().__init__()

size = cfg.INPUT.IMAGE_SIZE # 输入图片大小

# ssd-vgg16 backbone配置信息 [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 'C', 512, 512, 512, 'M', 512, 512, 512]

vgg_config = vgg_base[str(size)]

# vgg之后额外的一些特征提取层配置信息 [256, 'S', 512, 128, 'S', 256, 128, 'S', 256, 128, 'S', 256]

extras_config = extras_base[str(size)]

# 初始化backbone

self.vgg = nn.ModuleList(add_vgg(vgg_config))

# 初始化额外特征提取层

self.extras = nn.ModuleList(add_extras(extras_config, i=1024, size=size))

self.l2_norm = L2Norm(512, scale=20)

self.reset_parameters() # 参数初始化

def reset_parameters(self): # 参数初始化

for m in self.extras.modules():

if isinstance(m, nn.Conv2d):

nn.init.xavier_uniform_(m.weight)

nn.init.zeros_(m.bias)

def init_from_pretrain(self, state_dict): # 载入backbone预训练权重

self.vgg.load_state_dict(state_dict)

def forward(self, x):

features = [] # 存放6个预测特征层

# 前23层conv1_1->conv4_3(包括relu层)前序传播

for i in range(23):

x = self.vgg[i](x)

s = self.l2_norm(x) # Conv4_3 L2 normalization 论文里这样说的 不知道为什么

features.append(s) # append conv4_3

# apply vgg up to fc7

for i in range(23, len(self.vgg)):

x = self.vgg[i](x)

features.append(x) # append conv7

for k, v in enumerate(self.extras):

x = F.relu(v(x), inplace=True)

if k % 2 == 1:

features.append(x) # append conv8_2 conv9_2 conv10_2 conv11_2

# ssd300返回六个预测特征层 ssd512返回7个预测特征层

return tuple(features)

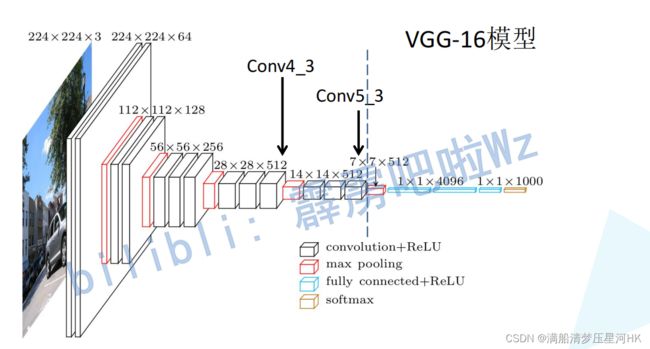

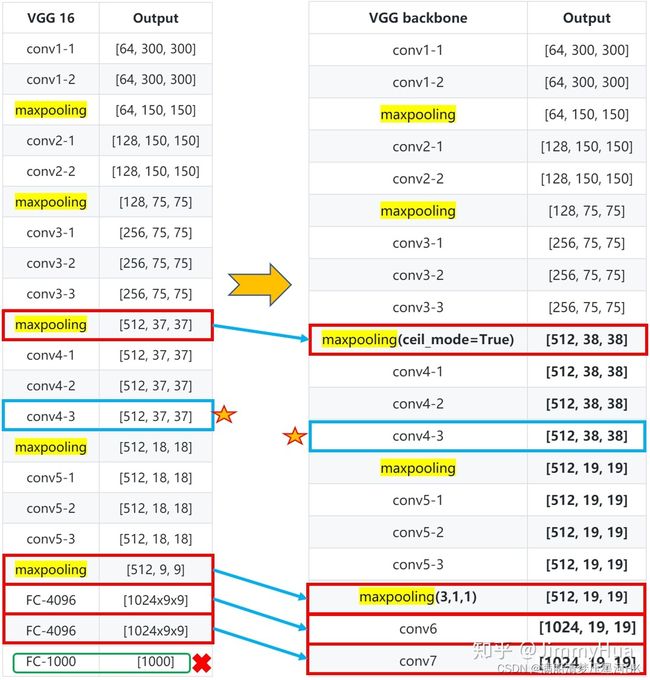

1.2、修改vgg

模型结构的部分对应的是vgg中的Conv1_1->Conv5_3,如下图所示(图片来自b站霹雳吧啦Wz:2.1SSD算法理论.):

而Conv4_3得到的特征图就是我们的第一个预测特征图,Conv5_3之后接一个pool5,和原论文不同的是,这里将pool5从原来的2x2/2变为3x3/1,这样经过pool5之后特征层尺寸不变。再之和再接一个3x3的卷积Conv6和1x1卷积Conv7代替vgg16中的两个全连接层fc6、fc7,到此backbone-vgg部分就全部构建完成了。而且Conv7的输出特征层就算第二个预测特征图。

对比下(图片来自just_sort: 目标检测算法之SSD代码解析(万字长文超详细).):

对应代码在ssd/modeling/backbone/vgg.py中:

# borrowed from https://github.com/amdegroot/ssd.pytorch/blob/master/ssd.py

def add_vgg(cfg, batch_norm=False):

"""根据配置文件搭建SSD中的backbone(Conv1_1->Conv7)模型结构

pool3x3/1替换vgg中的pool2x2/2 conv6(空洞卷积 r=6)、conv7替换fc6、fc7 其中conv4_3、conv7都得到预测特征图

Args:

cfg: Conv1_1->Conv7配置文件 [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 'C', 512, 512, 512, 'M', 512, 512, 512]

数字表示当前卷积层的输出channel 'M'表示maxpooling采用向下取整的形式如9x9->4x4 'C'相反表示向上取整如9x9->5x5

batch_norm: True conv+bn+relu

False conv relu 原论文是没有bn层的

Returns: backbone层结构 卷积层+maxpool共17层

"""

layers = []

in_channels = 3

for v in cfg:

if v == 'M':

layers += [nn.MaxPool2d(kernel_size=2, stride=2)]

elif v == 'C':

# ceil mode feature map size是奇数时 最大池化下采样向上取整 如 9x9->5x5

layers += [nn.MaxPool2d(kernel_size=2, stride=2, ceil_mode=True)]

else:

conv2d = nn.Conv2d(in_channels, v, kernel_size=3, padding=1)

if batch_norm:

layers += [conv2d, nn.BatchNorm2d(v), nn.ReLU(inplace=True)]

else:

layers += [conv2d, nn.ReLU(inplace=True)]

in_channels = v # 下一层的输入=这一层的输出

# 论文里有说将vgg中的pool5的2x2/2变为3x3/1 这样这层的池化后特征图尺寸不变

pool5 = nn.MaxPool2d(kernel_size=3, stride=1, padding=1)

# conv6使用了空洞卷积 原论文也是这样说的

conv6 = nn.Conv2d(512, 1024, kernel_size=3, padding=6, dilation=6)

conv7 = nn.Conv2d(1024, 1024, kernel_size=1)

layers += [pool5, conv6,

nn.ReLU(inplace=True), conv7, nn.ReLU(inplace=True)]

return layers

1.3、额外添加层

额外添加层主要由4个部分构成,Conv8:conv1x1x256 + conv3x3x512/2;Conv9:conv1x1x128 + conv3x3x256/2;Conv10:conv1x1x128 + conv3x3x256/1;Conv11:conv1x1x128 + conv3x3x256/1

对应代码在ssd/modeling/backbone/vgg.py中:

def add_extras(cfg, i, size=300):

"""

backbone之后的4个额外添加层 生成4个预测特征图

Extra layers added to VGG for feature scaling

Args:

cfg: 4个额外添加层的配置文件 [256, 'S', 512, 128, 'S', 256, 128, 'S', 256, 128, 'S', 256]

数字代表当前卷积层输出channel当前卷积层s=1 'S'代表当前卷积层s=2

i: 1024 第一个额外特征层 也就是conv8_1的输入channel

size: 输入图片大小

Returns: 4个额外添加层结构

"""

layers = []

in_channels = i

flag = False

for k, v in enumerate(cfg):

if in_channels != 'S':

if v == 'S':

layers += [nn.Conv2d(in_channels, cfg[k + 1], kernel_size=(1, 3)[flag], stride=2, padding=1)]

else:

layers += [nn.Conv2d(in_channels, v, kernel_size=(1, 3)[flag])]

flag = not flag

in_channels = v

if size == 512:

layers.append(nn.Conv2d(in_channels, 128, kernel_size=1, stride=1))

layers.append(nn.Conv2d(128, 256, kernel_size=4, stride=1, padding=1))

return layers

好了整个网络结构介绍完了。

1.4、需要注意的点

- ssd整个网络没有bn

- ssd的conv4_3输出的特征图进行了L2 norm操作,因为网络层靠前,方差比较大,需要加一个L2标准化,以保证和后面的检测层差异不是很大。

- 3、conv6 用了3x3空洞卷积 p=6 r=6

二、SSD head

SSD head分为三部分,第一部分将6/7个预测特征层输入检测头得到最终的预测回归参数和预测分类结果;第二部分训练时根据最终的预测回归参数和预测分类结果和gt计算损失;第三部分测试时对预测分类结果进行softmax处理,对预测回归参数利用default box进行解码,nms等处理。

对应代码在ssd/modeling/box_head/box_head.py中:

@registry.BOX_HEADS.register('SSDBoxHead')

class SSDBoxHead(nn.Module):

def __init__(self, cfg):

super().__init__()

self.cfg = cfg

self.predictor = make_box_predictor(cfg) # 预测器 类型SSDBoxPredictor

self.loss_evaluator = MultiBoxLoss(neg_pos_ratio=cfg.MODEL.NEG_POS_RATIO) # 损失函数 类型MutiBoxLoss

# self.loss_evaluator = Focal_loss(neg_pos_ratio=cfg.MODEL.NEG_POS_RATIO)

self.post_processor = PostProcessor(cfg) # 后处理

self.priors = None # default box

def forward(self, features, targets=None):

"""

Args:

features: tuple7 [bs,512,64,64] [bs,1024,32,32] [bs,512,16,16] [bs,256,8,8] [bs,256,4,4] [bs,256,2,2] [bs,256,1,1]

targets: 'boxes': [bs, 24564, 4] 'labels': [bs, 24564]

Returns:

"""

# cls_logits: [bs,all_anchors,num_classes]=[bs, 24564, 6]

# bbox_pred: [bs,all_anchors,xywh]=[bs, 24564, 4]

cls_logits, bbox_pred = self.predictor(features) # 预测器前线传播

if self.training: # 训练返回预测结果和loss

return self._forward_train(cls_logits, bbox_pred, targets)

else: # 测试和验证返回预测结果和{}

return self._forward_test(cls_logits, bbox_pred)

def _forward_train(self, cls_logits, bbox_pred, targets):

"""

Args:

cls_logits: 分类预测结果 [bs,all_anchors,num_classes]=[bs, 24564, 6] 0代表背景类 真实类别=num_classes-1

bbox_pred: 回归预测结果 [bs,all_anchors,xywh]=[bs, 24564, 4]

targets: gt 'boxes': [bs, 24564, 4] 'labels': [bs, 24564]

Returns: 预测结果+loss

"""

# gt_boxes: [bs, 24564, 4] gt_labels: [bs, 24564]

gt_boxes, gt_labels = targets['boxes'], targets['labels']

# 计算损失

reg_loss, cls_loss = self.loss_evaluator(cls_logits, bbox_pred, gt_labels, gt_boxes) # 回归损失 + 分类损失

loss_dict = dict(

reg_loss=reg_loss,

cls_loss=cls_loss,

)

detections = (cls_logits, bbox_pred)

return detections, loss_dict # 返回预测结果和loss

def _forward_test(self, cls_logits, bbox_pred):

"""

Args:

cls_logits: 分类预测结果 [bs,all_anchors,num_classes]=[bs, 24564, 6]

bbox_pred: 回归预测结果 [bs,all_anchors,xywh]=[bs, 24564, 4]

Returns:

"""

if self.priors is None:

self.priors = PriorBox(self.cfg)().to(bbox_pred.device)

scores = F.softmax(cls_logits, dim=2) # 分类结果在classes_num维度进行softmax [bs,24564,num_classes]

# 利用default box将预测回归参数进行解码 [bs, num_anchors, xywh(归一化后的真实的预测坐标)]

boxes = box_utils.convert_locations_to_boxes(

bbox_pred, self.priors, self.cfg.MODEL.CENTER_VARIANCE, self.cfg.MODEL.SIZE_VARIANCE

)

# xywh->xyxy 将归一化后的预测坐标由xywh->xyxy

boxes = box_utils.center_form_to_corner_form(boxes)

detections = (scores, boxes)

detections = self.post_processor(detections) # 后处理

return detections, {}

2.1、检测头predictor

第一部分网络结构总共得到6个特征图,分别是Conv4_3,Conv7、Conv8_2、Conv9_2、Conv10_2、Conv11_2的输出特征图。接下来就是就这些预测特征层送入检测头中了,其实就是一个3x3个Conv直接对default box进行分类预测和回归预测。

base检测头对应代码在ssd/modeling/box_head/box_predictor.py中:

class BoxPredictor(nn.Module): # base box predictor

def __init__(self, cfg):

super().__init__()

self.cfg = cfg # predictor配置文件

self.cls_headers = nn.ModuleList() # 7个预测特征层对应的7个分类头 全是3x3conv s=1 p=1

self.reg_headers = nn.ModuleList() # 7个预测特征层对应的7个回归头 全是3x3cong s=1 p=1

# 每层default box个数 [4, 6, 6, 6, 6, 4, 4]

# 7个预测特征层输出channel数=7个分类头/回归头的输入channel (512, 1024, 512, 256, 256, 256, 256)

for level, (boxes_per_location, out_channels) in enumerate(zip(cfg.MODEL.PRIORS.BOXES_PER_LOCATION, cfg.MODEL.BACKBONE.OUT_CHANNELS)):

# conv3x3 s=1 p=1 in_channel=(512, 1024, 512, 256, 256, 256, 256) out_channel=[4, 6, 6, 6, 6, 4, 4]*num_classes

self.cls_headers.append(self.cls_block(level, out_channels, boxes_per_location))

# conv3x3 s=1 p=1 in_channel=(512, 1024, 512, 256, 256, 256, 256) out_channel=[4, 6, 6, 6, 6, 4, 4]*4

self.reg_headers.append(self.reg_block(level, out_channels, boxes_per_location))

self.reset_parameters()

def cls_block(self, level, out_channels, boxes_per_location):

raise NotImplementedError

def reg_block(self, level, out_channels, boxes_per_location):

raise NotImplementedError

def reset_parameters(self): # 初始化权重

for m in self.modules():

if isinstance(m, nn.Conv2d):

nn.init.xavier_uniform_(m.weight)

nn.init.zeros_(m.bias)

def forward(self, features):

cls_logits = [] # 存放所有head分类预测结果

bbox_pred = [] # 存放所有head回归预测结果

for feature, cls_header, reg_header in zip(features, self.cls_headers, self.reg_headers):

# [bs,h,w,num_classes*box_nums] 其中num_classes=6 box_nums=[4, 6, 6, 6, 6, 4, 4]

# [bs,64,64,24] [bs,32,32,32] [bs,16,16,32] [bs,64,64,32] [bs,64,64,32] [bs,64,64,24] [bs,64,64,24]

cls_logits.append(cls_header(feature).permute(0, 2, 3, 1).contiguous())

# [bs,h,w,num_classes*4] 其中num_classes=6 4=xywh

# [bs,64,64,16] [bs,32,32,24] [bs,16,16,24] [bs,64,64,24] [bs,64,64,24] [bs,64,64,16] [bs,64,64,16]

bbox_pred.append(reg_header(feature).permute(0, 2, 3, 1).contiguous())

batch_size = features[0].shape[0]

# [bs,all_anchors,num_classes]=[2,24564,6]

cls_logits = torch.cat([c.view(c.shape[0], -1) for c in cls_logits], dim=1).view(batch_size, -1, self.cfg.MODEL.NUM_CLASSES)

# [bs,all_anchors,xywh]=[bs,24564,4]

bbox_pred = torch.cat([l.view(l.shape[0], -1) for l in bbox_pred], dim=1).view(batch_size, -1, 4)

return cls_logits, bbox_pred

可以看到默认是没有检测头的,但是SSD继承base head的同时重写了检测头对应代码在ssd/modeling/box_head/box_predictor.py中::

@registry.BOX_PREDICTORS.register('SSDBoxPredictor')

class SSDBoxPredictor(BoxPredictor):

# 继承自base box predict BoxPredictor

# 重写分类预测头

def cls_block(self, level, out_channels, boxes_per_location):

return nn.Conv2d(out_channels, boxes_per_location * self.cfg.MODEL.NUM_CLASSES, kernel_size=3, stride=1, padding=1)

# 重写回归预测头

def reg_block(self, level, out_channels, boxes_per_location):

return nn.Conv2d(out_channels, boxes_per_location * 4, kernel_size=3, stride=1, padding=1)

2.2、生成default box

论文生成过程:

Default box的设置包含尺度min_size、max_size和长宽比两个方面。

尺度公式如下:

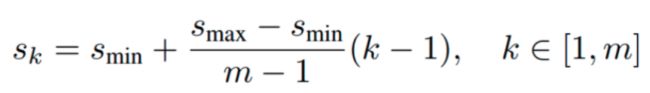

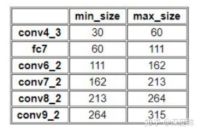

m代表特征层层数,这里是5,因为第一层单独设置。s_k表示anchor尺寸相对于原图的比例,s_min和s_max表示比例的最小值和最大值,论文中分别为0.2和0.9。第一层单独设置比例为s_min/2=0.1,所以它的尺度为300x0.1=30,后面的特征图按照线性公式计算,且下一层的min_size=这一层的max_size。最后可以得到每个特征图的min_size和max_size。

再为每一层人为设置了长宽比;

根据每层的min_size和max_size和长宽比生成各层的default box(1个min_size的1:1;1个min_size*max_size的1:1;2n)。

但是实际代码中default box的尺度和长宽比都是人为设置的。

对应代码在ssd/modeling/anchors/prior_box.py中:

class PriorBox:

def __init__(self, cfg):

# 这里的min_size和max_size是直接给出的 论文是公式求出来的

self.image_size = cfg.INPUT.IMAGE_SIZE # 512 图片大小

prior_config = cfg.MODEL.PRIORS

self.feature_maps = prior_config.FEATURE_MAPS # 所有层的featue map size [64, 32, 16, 8, 4, 2, 1]

self.min_sizes = prior_config.MIN_SIZES # 所有层的min_size[35.84, 76.8, 153.6, 230.4, 307.2, 384.0, 460.8]

self.max_sizes = prior_config.MAX_SIZES # 所有层的max_size[76.8, 153.6, 230.4, 307.2, 384.0, 460.8, 537.65]

self.strides = prior_config.STRIDES # 所有层的stride[8, 16, 32, 64, 128, 256, 512]

self.aspect_ratios = prior_config.ASPECT_RATIOS # 所有层的aspect ratio[[2], [2, 3], [2, 3], [2, 3], [2, 3], [2], [2]]

self.clip = prior_config.CLIP # True

def __call__(self):

"""Generate SSD Prior Boxes.

It returns the center, height and width of the priors. The values are relative to the image size

Returns:

priors (num_priors, 4): The prior boxes represented as [[center_x, center_y, w, h]]. All the values

are relative to the image size.

"""

priors = []

for k, f in enumerate(self.feature_maps):

scale = self.image_size / self.strides[k]

for i, j in product(range(f), repeat=2):

# unit center x,y default box中心点坐标(相对特征图)

cx = (j + 0.5) / scale

cy = (i + 0.5) / scale

# 生成default box

# small sized square box w/h=1:1

size = self.min_sizes[k]

h = w = size / self.image_size

priors.append([cx, cy, w, h])

# big sized square box w/h=1:1

size = sqrt(self.min_sizes[k] * self.max_sizes[k])

h = w = size / self.image_size

priors.append([cx, cy, w, h])

# change h/w ratio of the small sized box w/h=ratio and 1/ratio

size = self.min_sizes[k]

h = w = size / self.image_size

for ratio in self.aspect_ratios[k]:

ratio = sqrt(ratio)

priors.append([cx, cy, w * ratio, h / ratio])

priors.append([cx, cy, w / ratio, h * ratio])

priors = torch.tensor(priors)

if self.clip:

priors.clamp_(max=1, min=0)

return priors

2.3、计算分类回归损失

损失分为两个部分分类损失+回归损失,分类损失针对正样本+负样本(困难样本挖掘负样本:正样本=3:1)用的是softmax+cross entropy。在ssd和faster rcnn中分类损失其实也包含了置信度(预测框包含物体的概率)损失,因为ssd分类通常会将背景也当作一个类别,最后输出接softmax得到20个类别分数和1个置信度分数。;回归损失针对所有正样本使用smooth l1损失函数。

对应代码在ssd/modeling/box_head/loss.py中:

class MultiBoxLoss(nn.Module):

def __init__(self, neg_pos_ratio):

"""Implement SSD MultiBox Loss.

Basically, MultiBox loss combines classification loss

and Smooth L1 regression loss.

"""

super(MultiBoxLoss, self).__init__()

self.neg_pos_ratio = neg_pos_ratio # 负样本/正样本=3:1

def forward(self, confidence, predicted_locations, labels, gt_locations):

"""计算分类损失和回归损失

Args:

confidence: 分类预测结果 [bs,all_anchors,num_classes]=[bs, 24564, 6] 0代表背景类 真实类别=num_classes-1

predicted_locations: 回归预测结果 [bs,all_anchors,xywh]=[bs, 24564, 4]

labels: gt_labels 所有anchor的真实框类别标签 0表示背景类 [bs, 24564]

gt_locations: 所有anchor的真实框回归标签 [bs, 24564, 4]

Returns:

"""

num_classes = confidence.size(2) # 6

with torch.no_grad(): # 困难样本挖掘

# derived from cross_entropy=sum(log(p))

# log_softmax(confidence, dim=2): [bs,24564,6] 对confidence的num_classes维度进行softmax出来再log

# -F.log_softmax(confidence, dim=2)[:, :, 0]: [bs,24564] 所有anchor属于背景的损失

loss = -F.log_softmax(confidence, dim=2)[:, :, 0] # [bs,anchor_nums]

mask = box_utils.hard_negative_mining(loss, labels, self.neg_pos_ratio)

confidence = confidence[mask, :] # [104,6] 正样本[1,25] 负样本[3,75] 正样本+负样本=1+25 + 3+75 = 104

# 分类损失 sum 正样本:负样本=3:1 预测输入: [pos+neg, num_classes] gt输入: [pos+neg]

# F.cross_entropy: 1、对预测输入进行softmax+log运算 -> [pos+neg, num_classes]

# 2、对gt输入进行one-hot编码 [pos+neg] -> [pos+neg, num_classes]

# 3、再对预测和gt进行交叉熵损失计算

classification_loss = F.cross_entropy(confidence.view(-1, num_classes), labels[mask], reduction='sum')

pos_mask = labels > 0

predicted_locations = predicted_locations[pos_mask, :].view(-1, 4) # 正样本预测回归参数 [26,4]

gt_locations = gt_locations[pos_mask, :].view(-1, 4) # 正样本gt label [26,4]

# 回归loss smooth l1 sum(只计算正样本)

smooth_l1_loss = F.smooth_l1_loss(predicted_locations, gt_locations, reduction='sum')

num_pos = gt_locations.size(0) # 正样本个数 26

# 回归总损失(正样本)/正样本个数 分类总损失(正样本+负样本)/正样本个数

return smooth_l1_loss / num_pos, classification_loss / num_pos

2.3.1、正负样本匹配:MaxIOUAssign

正负样本匹配规则:

- 正样本:对每个gt找到和它iou最大的default box,作为这个gt的正样本,这样可以保证每个gt至少有一个正样本;一个default box和所有gt的最大IOU大于0.5时,这个default box为正样本;(1和2同时起作用,可能某个gt会匹配到多个正样本,但是一个default box只能是一个gt的正样本。)

- 负样本:一个default box和所有gt的最大IOU小于0.5时,该default box为负样本;

- 没有忽略样本

对应代码在ssd/utils/box_utils.py中:

def assign_priors(gt_boxes, gt_labels, corner_form_priors, iou_threshold):

"""把每个prior box进行正负样本匹配

Assign ground truth boxes and targets to priors.

Args:

gt_boxes (num_targets, 4): ground truth boxes. 图片的所有类别

gt_labels (num_targets): labels of targets. gt图片的所有类别

priors (num_priors, 4): corner form priors 先验框 default box xyxy

iou_threshold: 0.5 IOU阈值,小于阈值设为背景

Returns:

boxes (num_priors, 4): real values for priors.

labels (num_priros): labels for priors.

"""

# size: num_priors x num_targets gt和default box的iou

ious = iou_of(gt_boxes.unsqueeze(0), corner_form_priors.unsqueeze(1))

# size: num_priors 和每个ground truth box 交集最大的 prior box

best_target_per_prior, best_target_per_prior_index = ious.max(1)

# size: num_targets 和每个prior box 交集最大的 ground truth box

best_prior_per_target, best_prior_per_target_index = ious.max(0)

# 保证每一个ground truth 匹配它的都是具有最大IOU的prior

# 根据 best_prior_dix 锁定 best_truth_idx里面的最大IOU prior

for target_index, prior_index in enumerate(best_prior_per_target_index):

best_target_per_prior_index[prior_index] = target_index

# 2.0 is used to make sure every target has a prior assigned

# 保证每个ground truth box 与某一个prior box 匹配,固定值为 2 > threshold

best_target_per_prior.index_fill_(0, best_prior_per_target_index, 2)

# size: num_priors 提取出所有匹配的ground truth box

labels = gt_labels[best_target_per_prior_index]

# 把 iou < threshold 的框类别设置为 bg,即为0

labels[best_target_per_prior < iou_threshold] = 0 # the backgournd id

# 匹配好boxes

boxes = gt_boxes[best_target_per_prior_index]

return boxes, labels

2.3.2、平衡正负样本:在线困难样本挖掘

困难样本挖掘主要是为了针对负样本太多的情况,如果全部负样本都参与计算损失,那么一定会造成正负样本的比例失衡,所以这里主要计算一些较为困难的负样本的损失,即loss较大的负样本。

具体做法:将所有负样本的分类损失按降序排序,取前正样本3个负样本作为最终的分类负样本,参与分类损失计算。

正样本个数=所有正样本个数 负样本个数=正样本个数3

对应代码在ssd/utils/box_utils.py中:

def hard_negative_mining(loss, labels, neg_pos_ratio):

"""

It used to suppress the presence of a large number of negative prediction.

It works on image level not batch level.

For any example/image, it keeps all the positive predictions and

cut the number of negative predictions to make sure the ratio

between the negative examples and positive examples is no more

the given ratio for an image.

Args:

loss (N, num_priors): the loss for each example. [bs,24564] 所有anchor属于背景的损失

labels (N, num_priors): the labels. 所有anchor的真实框类别标签 0表示背景类 [bs, 24564]

neg_pos_ratio: the ratio between the negative examples and positive examples. 负样本:正样本=3:1

"""

pos_mask = labels > 0 # 正类True 负类背景类False

num_pos = pos_mask.long().sum(dim=1, keepdim=True) # [1个,25个] 第一张图1个正样本 第二张图25个正样本

num_neg = num_pos * neg_pos_ratio # [3, 75] 第一张图3个负样本 第二张图75个负样本

loss[pos_mask] = -math.inf

_, indexes = loss.sort(dim=1, descending=True) # 对负样本损失进行排序

_, orders = indexes.sort(dim=1)

neg_mask = orders < num_neg # 选出损失最高的前num_neg个负样本

return pos_mask | neg_mask

2.5、后处理

后处理主要是针对预测得到的回归参数,利用上一步生成的default box进行解码,得到所有anchor的预测结果,再对这些预测结果进行nms等处理,得到最终的预测结果。

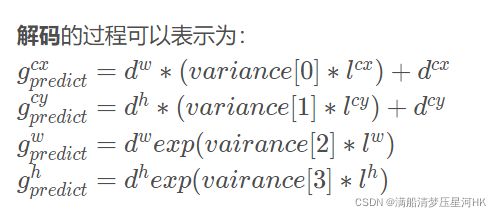

2.5.1、回归参数编解码:

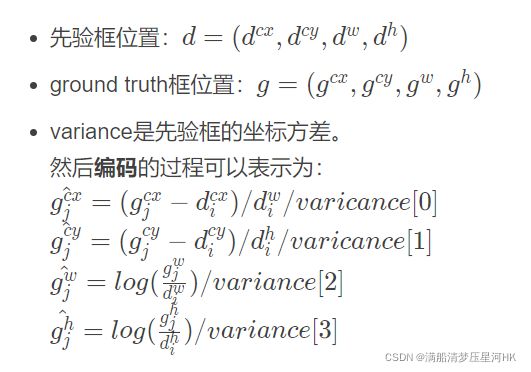

解码:利用default box,将边界框回归参数解码为真实坐标。一般用在验证测试时,将预测框解码为真实坐标,再进行nms,显示在原图上。公式:

对应代码在ssd/utils/box_utils.py中:

def convert_locations_to_boxes(locations, priors, center_variance,

size_variance):

"""Convert regressional location results of SSD into boxes in the form of (center_x, center_y, h, w).

The conversion:

$$predicted\_center * center_variance = \frac {real\_center - prior\_center} {prior\_hw}$$

$$exp(predicted\_hw * size_variance) = \frac {real\_hw} {prior\_hw}$$

We do it in the inverse direction here.

Args:

locations (batch_size, num_priors, 4): the regression output of SSD. It will contain the outputs as well.

回归预测结果 [bs,all_anchors,xywh]=[bs, 24564, 4]

priors (num_priors, 4) or (batch_size/1, num_priors, 4): prior boxes.

[all_anchor, 4]

center_variance: a float used to change the scale of center. 0.1

size_variance: a float used to change of scale of size. 0.2

Returns:

boxes: priors: [[center_x, center_y, w, h]]. All the values

are relative to the image size.

"""

# priors can have one dimension less.

if priors.dim() + 1 == locations.dim():

priors = priors.unsqueeze(0) # [num_anchors, 4] -> [1, num_anchors, 4]

return torch.cat([

# xy 利用default box将xy回归参数进行解码

# 解码后xy坐标=预测的xy参数*default box的wh坐标*default box的xy坐标方差+default box的xy坐标

locations[..., :2] * center_variance * priors[..., 2:] + priors[..., :2],

# wh 利用default box将wh回归参数进行解码

# 解码后的wh坐标=e^(预测的wh参数*default box的wh坐标方差) * default box的wh坐标

torch.exp(locations[..., 2:] * size_variance) * priors[..., 2:]

], dim=locations.dim() - 1)

编码:利用default box,将真实坐标编码为边界框参数,一般用在训练时,对gt label编码,将gt的坐标编码为回归参数,再与预测得到的预测框回归参数一起计算损失。公式(和上面解码过程刚好相反):

对应代码在ssd/utils/box_utils.py中:

def convert_boxes_to_locations(center_form_boxes, center_form_priors, center_variance, size_variance):

# priors can have one dimension less

if center_form_priors.dim() + 1 == center_form_boxes.dim():

center_form_priors = center_form_priors.unsqueeze(0)

return torch.cat([

(center_form_boxes[..., :2] - center_form_priors[..., :2]) / center_form_priors[..., 2:] / center_variance,

torch.log(center_form_boxes[..., 2:] / center_form_priors[..., 2:]) / size_variance

], dim=center_form_boxes.dim() - 1)

2.5.2、NMS

对应代码在ssd/modeling/box_head/inference.py中:

class PostProcessor:

def __init__(self, cfg):

super().__init__()

self.cfg = cfg

self.width = cfg.INPUT.IMAGE_SIZE

self.height = cfg.INPUT.IMAGE_SIZE

def __call__(self, detections):

batches_scores, batches_boxes = detections

device = batches_scores.device

batch_size = batches_scores.size(0)

results = []

for batch_id in range(batch_size):

scores, boxes = batches_scores[batch_id], batches_boxes[batch_id] # (N, #CLS) (N, 4)

num_boxes = scores.shape[0]

num_classes = scores.shape[1]

boxes = boxes.view(num_boxes, 1, 4).expand(num_boxes, num_classes, 4)

labels = torch.arange(num_classes, device=device)

labels = labels.view(1, num_classes).expand_as(scores)

# remove predictions with the background label

boxes = boxes[:, 1:]

scores = scores[:, 1:]

labels = labels[:, 1:]

# batch everything, by making every class prediction be a separate instance

boxes = boxes.reshape(-1, 4)

scores = scores.reshape(-1)

labels = labels.reshape(-1)

# remove low scoring boxes

indices = torch.nonzero(scores > self.cfg.TEST.CONFIDENCE_THRESHOLD).squeeze(1)

boxes, scores, labels = boxes[indices], scores[indices], labels[indices]

boxes[:, 0::2] *= self.width

boxes[:, 1::2] *= self.height

# nms

keep = batched_nms(boxes, scores, labels, self.cfg.TEST.NMS_THRESHOLD)

# keep only topk scoring predictions

keep = keep[:self.cfg.TEST.MAX_PER_IMAGE]

boxes, scores, labels = boxes[keep], scores[keep], labels[keep]

container = Container(boxes=boxes, labels=labels, scores=scores)

container.img_width = self.width

container.img_height = self.height

results.append(container)

return results

三、数据增强

SSD的效果好,还有一个很重要的原因:SSD做了丰富的数据增强策略,这部分为模型mAP带来了8.8%的提升,尤其是小物体和遮挡问题等难点问题,强大的数据增强很重要。

这部分代码在ssd/data/transforms/transformers.py中,自己看下就ok了

Reference

b站霹雳吧啦Wz:2.1SSD算法理论.

CSDN just_sort:目标检测算法之SSD代码解析(万字长文超详细).