Real-Time Storage, Computation, and Display of Sensor Monitoring Data (RabbitMQ-Flink-InfluxDB)

Background

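[2021-09-01] For updated content, see the following two articles:

- Data simulator: Sensor data simulation software [StreamFaker] [with GUI] [sends to RabbitMQ] [implemented in python3 + pyqt5]

- Source code: How to process and store data collected by sensors [flink] [influxdb]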

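Goal: I have been working on sensor monitoring projects for some time, covering earthquakes, bridges, high-rise buildings, stadiums, high-speed railway stations, climbing formwork, wind turbine towers, and more. Sensor types include GPS, strain, displacement, temperature, vibration, cable force, hydrostatic level, inclinometer, and weather station. Sampling rates range from once per minute down to the millisecond level. The requirements are broadly similar across projects: store the raw monitoring data, compute on it in real time, and raise threshold alarms.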

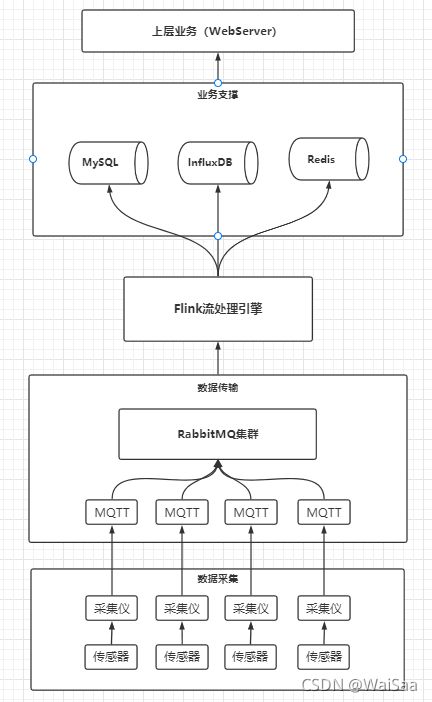

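Design: Time-series data of this kind is stored in InfluxDB, real-time computation runs on Flink, and all sensor data is ingested through RabbitMQ (I would have preferred Kafka, but the existing architecture was hard to change at that point).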

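Tool: Here is a data simulator to share (sensor data simulation software (To RabbitMQ)). Extraction code: nxlu

[Notice: this software was developed in-house by our company. No organization or individual may use it for commercial purposes without permission and authorization.]

This is only a brief record for my own future reference. If you spot errors or omissions, corrections are welcome.

First, a simple architecture diagram: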

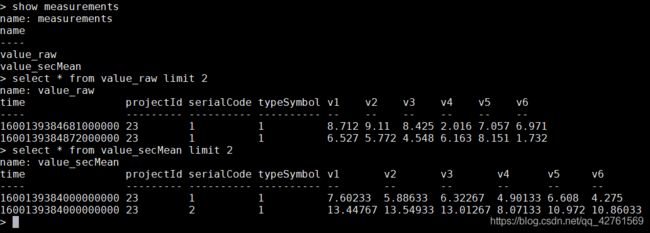

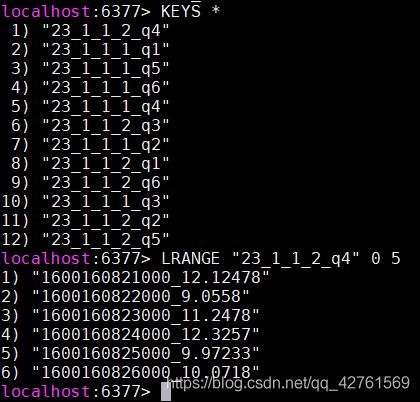

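Structure and format of the data stored in InfluxDB and Redis

The v1, v2, ... fields in InfluxDB and the q1, q2, ... suffixes in Redis both stand for a sensor's monitored metrics (quotas).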
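To make the naming concrete, here is a minimal sketch of how a field name and a Redis key/value pair could be assembled. The key layout `projectId_partId_typeSymbol_serialCode_quota` and the value layout `timestamp_value` come from the Redis sink listed later in this post. The underscore separator, the class `QuotaNamingSketch`, and the sample ids (`P01`, `GPS`, `S001`) are assumptions for illustration only.

```java
/** Sketch of the naming scheme: v1, v2, ... in InfluxDB and q1, q2, ... in Redis. */
final class QuotaNamingSketch {

    // Assumption: key parts are joined with an underscore.
    static final String SEPARATOR = "_";

    /** InfluxDB field name for the quota at zero-based index i: v1, v2, ... */
    static String influxFieldName(int i) {
        return "v" + (i + 1);
    }

    /** Redis list key for one quota of one sensor. */
    static String redisKey(String projectId, String partId, String typeSymbol,
                           String serialCode, int i) {
        return String.join(SEPARATOR, projectId, partId, typeSymbol, serialCode, "q" + (i + 1));
    }

    /** Redis list entry: epoch-millisecond timestamp and value. */
    static String redisValue(long timestampMillis, double value) {
        return timestampMillis + SEPARATOR + value;
    }

    public static void main(String[] args) {
        System.out.println(influxFieldName(0));                      // v1
        System.out.println(redisKey("P01", "1", "GPS", "S001", 2));  // P01_1_GPS_S001_q3
        System.out.println(redisValue(1630497600000L, 3.5));         // 1630497600000_3.5
    }
}
```

So the third quota of a sensor ends up as field `v3` in InfluxDB and as its own Redis list whose key ends in `q3`.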

Now the code.

package com.cloudansys.api.rabbitmqsource;

import com.cloudansys.config.DefaultConfig;

import com.cloudansys.config.FlinkConfig;

import com.cloudansys.core.constant.Const;

import com.cloudansys.core.entity.MultiDataEntity;

import com.cloudansys.core.flink.function.CustomFilterFunc;

import com.cloudansys.core.flink.function.rabbitmq.*;

import com.cloudansys.core.flink.sink.RawValueInfluxSink;

import com.cloudansys.core.flink.sink.RedisBatchSink;

import com.cloudansys.core.flink.sink.SecMeanValueInfluxSink;

import lombok.extern.slf4j.Slf4j;

import org.apache.flink.streaming.api.TimeCharacteristic;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.windowing.assigners.TumblingEventTimeWindows;

import org.apache.flink.streaming.api.windowing.time.Time;

import org.apache.flink.util.OutputTag;

import java.util.List;

@Slf4j

public class Application {

public Application() {

}

public static void main(String[] args) throws Exception {

// Configure the Hadoop home directory on Windows

if (System.getProperty("os.name").startsWith("Win")) {

log.info("------ 【windows env】 ------");

System.setProperty(Const.HADOOP_HOME_DIR, FlinkConfig.getHadoopHomeDir());

}

log.info("");

log.info("****************** Get the Flink execution environment");

log.info("");

// Get the Flink execution environment

StreamExecutionEnvironment env = FlinkUtil.getFlinkEnv();

env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime);

log.info("");

log.info("****************** Configure the RabbitMQ source and parse the data");

log.info("");

// Parse the raw data and wrap each stream element in a List<MultiDataEntity>

// ...

RabbitMQ data source

/**

* RabbitMQ data source

*/

public static RMQSource<String> getRMQSource() {

return new RMQSource<String>(

DefaultConfig.getRabbitMQConfig(),

props.getProperty(Const.RABBITMQ_QUEUE_NAME),

new SimpleStringSchema()) {

/**

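* If the queue in RabbitMQ was declared with a TTL,

* set the second argument of queueDeclare (durable) to true and configure x-message-ttl here, so the declaration matches the existing queue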

*/

@Override

protected void setupQueue() throws IOException {

if (queueName != null) {

Map<String, Object> arguments = new HashMap<>();

arguments.put("x-message-ttl", 259200000);

channel.queueDeclare(queueName, true, false, false, arguments);

}

}

};

}
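The TTL literal `259200000` above is in milliseconds, which works out to three days. The sketch below derives the same argument map from a `Duration` instead of a bare number. The class `QueueTtlSketch` and the method `ttlArguments` are hypothetical names, not part of the project.

```java
import java.time.Duration;
import java.util.HashMap;
import java.util.Map;

final class QueueTtlSketch {

    /** Builds queue arguments carrying x-message-ttl, which RabbitMQ expects in milliseconds. */
    static Map<String, Object> ttlArguments(Duration retention) {
        Map<String, Object> arguments = new HashMap<>();
        // toIntExact throws ArithmeticException if the value does not fit in an int
        arguments.put("x-message-ttl", Math.toIntExact(retention.toMillis()));
        return arguments;
    }

    public static void main(String[] args) {
        System.out.println(ttlArguments(Duration.ofDays(3))); // {x-message-ttl=259200000}
    }
}
```

Whatever value is used, it has to agree with the TTL the queue was originally created with, because RabbitMQ rejects a re-declaration whose arguments differ from those of the existing queue.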

Data parsing

package com.cloudansys.core.flink.function.rabbitmq;

import com.cloudansys.core.entity.MultiDataEntity;

import com.cloudansys.core.util.SensorPayloadParseUtil;

import org.apache.flink.api.common.functions.MapFunction;

import java.util.List;

public class RabbitMQMapFunc implements MapFunction<String, List<MultiDataEntity>> {

@Override

public List<MultiDataEntity> map(String element) throws Exception {

return SensorPayloadParseUtil.parse(element);

}

}

package com.cloudansys.core.util;

import com.cloudansys.core.constant.Const;

import com.cloudansys.core.entity.MultiDataEntity;

import lombok.extern.slf4j.Slf4j;

import java.text.ParseException;

import java.util.ArrayList;

import java.util.Arrays;

import java.util.List;

@Slf4j

public class SensorPayloadParseUtil {

/**

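* Element in the raw stream: {project id, time (17-character string), function code, sensor type, reserved byte, number of sensors, number of quotas, sensor id, value}

* Without the function code and reserved byte: {project id, time (17-character string), sensor type, number of sensors, number of quotas, id, value}

* Output: projectId, timestamp, typeSymbol, sensorNum, quotaNum, sensorId1, value1, sensorId2, value2, ...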

*/

public static List<MultiDataEntity> parse(String element) throws ParseException {

List<MultiDataEntity> multiDataEntities = new ArrayList<>();

int sensorNum, quotaNum;

String projectId, typeSymbol, pickTime;

String[] items = element.split(Const.COMMA);

int index = 0;

projectId = items[index++];

pickTime = items[index++];

index++; // function code (skipped)

typeSymbol = items[index++];

index++; // reserved byte (skipped)

sensorNum = Integer.parseInt(items[index++]);

quotaNum = Integer.parseInt(items[index]);

// Copy the readings into a new array: a1,aq1,aq2,aq3,b1,bq1,bq2,bq3,c1,cq1,cq2,cq3,...

String[] keyValues = Arrays.copyOfRange(items, 7, items.length);

for (int i = 0; i < sensorNum; i++) {

int indexOfSerialCode = i * (quotaNum + 1);

MultiDataEntity multiDataEntity = new MultiDataEntity();

multiDataEntity.setProjectId(projectId);

multiDataEntity.setTypeSymbol(typeSymbol);

multiDataEntity.setPickTime(pickTime);

multiDataEntity.setSerialCode(keyValues[indexOfSerialCode]);

Double[] values = new Double[quotaNum];

int indexOfFirstQuota = indexOfSerialCode + 1;

int indexOfLastQuota = indexOfSerialCode + quotaNum;

for (int j = indexOfFirstQuota; j <= indexOfLastQuota; j++) {

values[j - indexOfFirstQuota] = Double.parseDouble(keyValues[j]);

}

multiDataEntity.setValues(values);

multiDataEntities.add(multiDataEntity);

}

return multiDataEntities;

}

}
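The index arithmetic in `parse` is easier to follow with a concrete message. The sketch below walks the same layout (seven header fields, then `quotaNum + 1` fields per sensor) over a made-up payload and adds a length check that the original does not have. The sample payload, the class `PayloadLayoutSketch`, and the comma separator (standing in for `Const.COMMA`) are assumptions.

```java
import java.util.LinkedHashMap;
import java.util.Map;

final class PayloadLayoutSketch {

    // projectId, pickTime, function code, typeSymbol, reserved byte, sensorNum, quotaNum
    static final int HEADER_FIELDS = 7;

    /** Returns sensor id -> quota values, in the order the sensors appear in the payload. */
    static Map<String, double[]> readings(String payload) {
        String[] items = payload.split(",");
        if (items.length < HEADER_FIELDS) {
            throw new IllegalArgumentException("header incomplete: " + items.length + " fields");
        }
        int sensorNum = Integer.parseInt(items[5]);
        int quotaNum = Integer.parseInt(items[6]);
        int expected = HEADER_FIELDS + sensorNum * (quotaNum + 1);
        if (items.length != expected) {
            throw new IllegalArgumentException("expected " + expected + " fields, got " + items.length);
        }
        Map<String, double[]> result = new LinkedHashMap<>();
        for (int s = 0; s < sensorNum; s++) {
            // Each sensor block is: id, q1, q2, ..., qN
            int idIndex = HEADER_FIELDS + s * (quotaNum + 1);
            double[] values = new double[quotaNum];
            for (int q = 0; q < quotaNum; q++) {
                values[q] = Double.parseDouble(items[idIndex + 1 + q]);
            }
            result.put(items[idIndex], values);
        }
        return result;
    }

    public static void main(String[] args) {
        // Hypothetical message: 2 sensors, 3 quotas each
        String payload = "P01,20210901120000123,03,GPS,0,2,3,S001,1.0,2.0,3.0,S002,4.0,5.0,6.0";
        System.out.println(readings(payload).keySet()); // [S001, S002]
    }
}
```

Checking the field count up front turns a truncated message into a clear error instead of an `ArrayIndexOutOfBoundsException` somewhere inside the loop.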

Writing to InfluxDB

package com.cloudansys.core.flink.sink;

import com.cloudansys.config.DefaultConfig;

import com.cloudansys.core.constant.Const;

import com.cloudansys.core.entity.MultiDataEntity;

import lombok.extern.slf4j.Slf4j;

import org.apache.flink.configuration.Configuration;

import org.apache.flink.streaming.api.functions.sink.RichSinkFunction;

import org.influxdb.InfluxDB;

import org.influxdb.dto.BatchPoints;

import org.influxdb.dto.Point;

import java.text.ParseException;

import java.text.SimpleDateFormat;

import java.util.List;

import java.util.concurrent.TimeUnit;

@Slf4j

public class RawValueInfluxSink extends RichSinkFunction<List<MultiDataEntity>> {

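// Per-instance (not static) so parallel sink subtasks in one JVM do not share a client or batch

private transient InfluxDB influxDBClient;

private transient BatchPoints batchPoints;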

private static final String measurement = "value_raw";

private static final String tagName_1 = "projectId";

private static final String tagName_2 = "typeSymbol";

private static final String tagName_3 = "serialCode";

private static final String fieldName_base = "v";

private int count = 0;

private long lastTime = 0L;

@Override

public void open(Configuration parameters) throws Exception {

super.open(parameters);

influxDBClient = DefaultConfig.getInfluxDB();

batchPoints = DefaultConfig.getBatchPoints();

}

@Override

public void invoke(List<MultiDataEntity> element, Context context) throws Exception {

log.info("##### write in influx 【{}】#####", measurement);

for (MultiDataEntity multiDataEntity : element) {

String projectId = multiDataEntity.getProjectId();

String typeSymbol = multiDataEntity.getTypeSymbol();

String serialCode = multiDataEntity.getSerialCode();

String pickTime = multiDataEntity.getPickTime();

Point.Builder pointBuilder = getPointBuilder(projectId, typeSymbol, serialCode, pickTime);

if (pointBuilder != null) {

Double[] values = multiDataEntity.getValues();

for (int j = 0; j < values.length; j++) {

String fieldName = fieldName_base + (j + 1);

// All values are stored as double

double fieldValue = values[j];

pointBuilder.addField(fieldName, fieldValue);

}

// Add the point to the batch

batchPoints.point(pointBuilder.build());

count++;

// Flush to InfluxDB once 100,000 points have accumulated or more than 500 ms have passed since the last flush

long thisTime = System.nanoTime();

long diffTime = (thisTime - lastTime) / 1000000;

if ((count >= 100000 || diffTime > 500) && !batchPoints.getPoints().isEmpty()) {

influxDBClient.write(batchPoints);

batchPoints = DefaultConfig.getBatchPoints();

count = 0;

lastTime = System.nanoTime();

}

}

}

}

@Override

public void close() throws Exception {

super.close();

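if (influxDBClient != null) {

// Flush any points still buffered so they are not lost on shutdown

if (batchPoints != null && !batchPoints.getPoints().isEmpty()) {

influxDBClient.write(batchPoints);

}

influxDBClient.close();

}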

}

private Point.Builder getPointBuilder(String projectId, String typeSymbol, String serialCode, String pickTime) throws ParseException {

// Millisecond-resolution data

if (pickTime.length() == 17) {

SimpleDateFormat format = new SimpleDateFormat(Const.FORMAT_MILLI_TRIM);

long timestamp = format.parse(pickTime).getTime();

return Point.measurement(measurement)

.time(timestamp, TimeUnit.MILLISECONDS)

.tag(tagName_1, projectId)

.tag(tagName_2, typeSymbol)

.tag(tagName_3, serialCode);

}

// Second-resolution data

if (pickTime.length() == 14) {

SimpleDateFormat format = new SimpleDateFormat(Const.FORMAT_SEC_TRIM);

long timestamp = format.parse(pickTime).getTime();

return Point.measurement(measurement)

.time(timestamp, TimeUnit.MILLISECONDS)

.tag(tagName_1, projectId)

.tag(tagName_2, typeSymbol)

.tag(tagName_3, serialCode);

}

// Minute-resolution data

if (pickTime.length() == 12) {

SimpleDateFormat format = new SimpleDateFormat(Const.FORMAT_MINUTE_TRIM);

long timestamp = format.parse(pickTime).getTime();

return Point.measurement(measurement)

.time(timestamp, TimeUnit.MILLISECONDS)

.tag(tagName_1, projectId)

.tag(tagName_2, typeSymbol)

.tag(tagName_3, serialCode);

}

return null;

}

}
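The three branches of `getPointBuilder` differ only in the date pattern chosen from the length of `pickTime`. A compact sketch of that selection follows. The constants `Const.FORMAT_MILLI_TRIM`, `Const.FORMAT_SEC_TRIM` and `Const.FORMAT_MINUTE_TRIM` are not shown in this post, so the patterns below are my assumption of what they contain, and the explicit time zone parameter is an addition (the sink relies on the JVM default).

```java
import java.text.ParseException;
import java.text.SimpleDateFormat;
import java.util.TimeZone;

final class PickTimeSketch {

    /** Picks a date pattern from the length of the timestamp string, or null if unsupported. */
    static String patternFor(String pickTime) {
        switch (pickTime.length()) {
            case 17: return "yyyyMMddHHmmssSSS"; // millisecond resolution (assumed pattern)
            case 14: return "yyyyMMddHHmmss";    // second resolution (assumed pattern)
            case 12: return "yyyyMMddHHmm";      // minute resolution (assumed pattern)
            default: return null;
        }
    }

    /** Converts a trimmed timestamp string to epoch milliseconds in the given zone. */
    static long toEpochMillis(String pickTime, TimeZone zone) {
        String pattern = patternFor(pickTime);
        if (pattern == null) {
            throw new IllegalArgumentException("unsupported pickTime length: " + pickTime.length());
        }
        // SimpleDateFormat is not thread-safe, so a fresh instance is created per call
        SimpleDateFormat format = new SimpleDateFormat(pattern);
        format.setTimeZone(zone);
        format.setLenient(false);
        try {
            return format.parse(pickTime).getTime();
        } catch (ParseException e) {
            throw new IllegalArgumentException("cannot parse pickTime: " + pickTime, e);
        }
    }

    public static void main(String[] args) {
        TimeZone utc = TimeZone.getTimeZone("UTC");
        System.out.println(toEpochMillis("20210901120000123", utc));
        System.out.println(toEpochMillis("202109011200", utc));
    }
}
```

Passing the zone explicitly keeps the stored timestamps independent of the machine the job happens to run on.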

DefaultConfig

Splitting the stream: tagging data by sampling rate

package com.cloudansys.core.flink.function.rabbitmq;

import com.cloudansys.core.entity.MultiDataEntity;

import org.apache.flink.streaming.api.functions.ProcessFunction;

import org.apache.flink.util.Collector;

import org.apache.flink.util.OutputTag;

import java.util.List;

public class RabbitMQSideOutputProcessFunc extends ProcessFunction<List<MultiDataEntity>, List<MultiDataEntity>> {

// Side-output tags

private OutputTag<List<MultiDataEntity>> tagH;

private OutputTag<List<MultiDataEntity>> tagL;

public RabbitMQSideOutputProcessFunc(OutputTag<List<MultiDataEntity>> tagH, OutputTag<List<MultiDataEntity>> tagL) {

this.tagH = tagH;

this.tagL = tagL;

}

@Override

public void processElement(List<MultiDataEntity> element, Context context, Collector<List<MultiDataEntity>> out) throws Exception {

// Infer the sampling rate from the format of the acquisition time

String pickTime = element.get(0).getPickTime();

// Side outputs: emit only the matching data

if (pickTime.length() == 17) {

context.output(tagH, element);

}

if (pickTime.length() < 17) {

context.output(tagL, element);

}

// Main output: emit all data

// out.collect(element);

}

}
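The routing rule in `processElement` can be stated on its own: a 17-character `pickTime` goes to the high-frequency side output, anything shorter goes to the low-frequency one, and anything longer is not emitted at all. The sketch below isolates that rule from Flink. The enum and class names are hypothetical.

```java
import java.util.Optional;

final class FrequencyRoutingSketch {

    enum Route { HIGH_FREQUENCY, LOW_FREQUENCY }

    /** Mirrors the two if-statements: 17 characters is high frequency, fewer is low frequency. */
    static Optional<Route> routeFor(String pickTime) {
        int length = pickTime.length();
        if (length == 17) {
            return Optional.of(Route.HIGH_FREQUENCY);
        }
        if (length < 17) {
            return Optional.of(Route.LOW_FREQUENCY);
        }
        // Longer strings match neither branch, so the element is dropped
        return Optional.empty();
    }

    public static void main(String[] args) {
        System.out.println(routeFor("20210901120000123")); // Optional[HIGH_FREQUENCY]
        System.out.println(routeFor("20210901120000"));    // Optional[LOW_FREQUENCY]
    }
}
```

Because the main output is commented out in the function above, downstream operators only see data through one of the two side outputs.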

Setting the watermark

package com.cloudansys.core.flink.function.rabbitmq;

import com.cloudansys.core.constant.Const;

import com.cloudansys.core.entity.MultiDataEntity;

import lombok.SneakyThrows;

import org.apache.flink.streaming.api.functions.AssignerWithPeriodicWatermarks;

import org.apache.flink.streaming.api.watermark.Watermark;

import java.text.SimpleDateFormat;

import java.util.List;

public class RabbitMQWatermark implements AssignerWithPeriodicWatermarks<List<MultiDataEntity>> {

private SimpleDateFormat format = new SimpleDateFormat(Const.FORMAT_MILLI_TRIM);

private long currentMaxTimestamp = 0L;

/**

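* Defines how the Watermark is generated

* Called periodically at the auto-watermark interval (200 ms by default once event time is enabled)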

*/

@Override

public Watermark getCurrentWatermark() {

// The maximum allowed out-of-orderness is 10 s

final long maxOutOfOrderness = 10000L;

return new Watermark(currentMaxTimestamp - maxOutOfOrderness);

}

/**

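* Extracts the eventTime

*

* @param element the stream element

* @param previousElementTimestamp the timestamp previously assigned to this element, or a negative value if none has been assigned yet

* @return the eventTime of the current stream element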

*/

@SneakyThrows

@Override

public long extractTimestamp(List<MultiDataEntity> element, long previousElementTimestamp) {

// All entries in the same List share the same pickTime

String pickTime = element.get(0).getPickTime();

long eventTime = format.parse(pickTime).getTime();

currentMaxTimestamp = Math.max(eventTime, currentMaxTimestamp);

return eventTime;

}

}
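The watermark logic above is the usual bounded out-of-orderness scheme: track the largest event time seen so far and report a watermark that lags it by a fixed amount. The sketch below reproduces that arithmetic without Flink so it can be run directly. The class name and the `windowCanFire` helper are illustrative, and the event times are made up.

```java
final class BoundedOutOfOrdernessSketch {

    private final long maxOutOfOrdernessMillis;
    private long currentMaxTimestamp;

    BoundedOutOfOrdernessSketch(long maxOutOfOrdernessMillis) {
        this.maxOutOfOrdernessMillis = maxOutOfOrdernessMillis;
        // Start low enough that the subtraction in currentWatermark() cannot underflow
        this.currentMaxTimestamp = Long.MIN_VALUE + maxOutOfOrdernessMillis;
    }

    /** Records an event time; older events never move the maximum backwards. */
    void onEvent(long eventTimeMillis) {
        currentMaxTimestamp = Math.max(currentMaxTimestamp, eventTimeMillis);
    }

    /** The watermark trails the largest event time by the allowed out-of-orderness. */
    long currentWatermark() {
        return currentMaxTimestamp - maxOutOfOrdernessMillis;
    }

    /** An event-time window [start, end) can fire once the watermark reaches end - 1. */
    boolean windowCanFire(long windowEndExclusive) {
        return currentWatermark() >= windowEndExclusive - 1;
    }

    public static void main(String[] args) {
        BoundedOutOfOrdernessSketch w = new BoundedOutOfOrdernessSketch(10_000L);
        w.onEvent(1_000L);
        w.onEvent(12_000L);
        w.onEvent(11_000L); // arrives out of order and does not lower the maximum
        System.out.println(w.currentWatermark());    // 2000
        System.out.println(w.windowCanFire(2_000L)); // true
        System.out.println(w.windowCanFire(3_000L)); // false
    }
}
```

With a 10 s bound, results for a given second therefore appear roughly 10 s of event time after the data for that second arrived, which is the price paid for tolerating late readings.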

Grouping by project id and sensor type

package com.cloudansys.core.flink.function.rabbitmq;

import com.cloudansys.core.entity.MultiDataEntity;

import org.apache.flink.api.java.functions.KeySelector;

import java.util.List;

public class RabbitMQKeySelector implements KeySelector<List<MultiDataEntity>, String> {

/**

* Groups by project id and sensor type

*/

@Override

public String getKey(List<MultiDataEntity> element) throws Exception {

// All entries in the same List share the same projectId and typeSymbol

String projectId = element.get(0).getProjectId();

String typeSymbol = element.get(0).getTypeSymbol();

return projectId + "_" + typeSymbol;

}

}
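A grouping key built from two strings is only unambiguous if the boundary between the parts can be recovered. The sketch below compares plain concatenation with a delimited key using made-up ids. Whether such collisions can occur in practice depends on the real id format, which this post does not show, and a delimiter only helps if it cannot appear inside the ids themselves.

```java
final class GroupKeySketch {

    /** Plain concatenation: the boundary between the two parts is lost. */
    static String plainKey(String projectId, String typeSymbol) {
        return projectId + typeSymbol;
    }

    /** Delimited key: distinct pairs stay distinct as long as ids contain no underscore. */
    static String delimitedKey(String projectId, String typeSymbol) {
        return projectId + "_" + typeSymbol;
    }

    public static void main(String[] args) {
        // Hypothetical ids chosen to show the ambiguity
        System.out.println(plainKey("1", "12") + " vs " + plainKey("11", "2"));         // 112 vs 112
        System.out.println(delimitedKey("1", "12") + " vs " + delimitedKey("11", "2")); // 1_12 vs 11_2
    }
}
```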

Aggregating the elements in a window: computing per-second means

package com.cloudansys.core.flink.function.rabbitmq;

import com.cloudansys.core.constant.Const;

import com.cloudansys.core.entity.MultiDataEntity;

import lombok.extern.slf4j.Slf4j;

import org.apache.flink.streaming.api.functions.windowing.WindowFunction;

import org.apache.flink.streaming.api.windowing.windows.TimeWindow;

import org.apache.flink.util.Collector;

import java.util.*;

@Slf4j

public class RabbitMQWindowFunc implements WindowFunction<List<MultiDataEntity>, List<MultiDataEntity>, String, TimeWindow> {

@Override

public void apply(String keyBy, TimeWindow timeWindow, Iterable<List<MultiDataEntity>> elements, Collector<List<MultiDataEntity>> out) throws Exception {

int count = 0;

String projectId = null, typeSymbol = null, pickTime = null;

Map<String, List<List<Double>>> sensorValuesMap = new HashMap<>();

for (List<MultiDataEntity> element : elements) {

// Fields shared by all elements are assigned only once

if (count == 0) {

MultiDataEntity multiDataEntity = element.get(0);

projectId = multiDataEntity.getProjectId();

typeSymbol = multiDataEntity.getTypeSymbol();

String milliPickTime = multiDataEntity.getPickTime();

pickTime = milliPickTime.substring(0, milliPickTime.length() - 3);

}

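// Each sensor gets one map entry: the key is the sensor id and the value is a list with one entry per quota;

// the values of a single quota are collected in their own list. Example: Map<String, List<List<Double>>>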

for (MultiDataEntity multiDataEntity : element) {

String serialCode = multiDataEntity.getSerialCode();

Double[] values = multiDataEntity.getValues();

// Check whether sensorValuesMap already holds data for this sensor id

if (!sensorValuesMap.containsKey(serialCode)) {

List<List<Double>> quotasList = new ArrayList<>();

for (Double value : values) {

List<Double> quotaValuesList = new ArrayList<>();

quotaValuesList.add(value);

quotasList.add(quotaValuesList);

}

sensorValuesMap.put(serialCode, quotasList);

} else {

List<List<Double>> quotasList = sensorValuesMap.get(serialCode);

for (int i = 0; i < values.length; i++) {

List<Double> quotaValuesList = quotasList.get(i);

quotaValuesList.add(values[i]);

}

}

}

count++;

}

// log.info("sensorValuesMap: {}", sensorValuesMap);

// Compute the mean of each quota

Map<String, List<Double>> secMeanValue = new HashMap<>();

for (String key : sensorValuesMap.keySet()) {

List<List<Double>> quotasList = sensorValuesMap.get(key);

for (List<Double> quotaValuesList : quotasList) {

OptionalDouble average = quotaValuesList

.stream()

.mapToDouble(value -> value)

.average();

if (average.isPresent()) {

double quotaSecMeanValue = Double.parseDouble(

String.format(Const.FORMAT_DOUBLE, average.getAsDouble()));

if (!secMeanValue.containsKey(key)) {

List<Double> quotaSecMeanValueList = new ArrayList<>();

quotaSecMeanValueList.add(quotaSecMeanValue);

secMeanValue.put(key, quotaSecMeanValueList);

} else {

secMeanValue.get(key).add(quotaSecMeanValue);

}

} else {

log.error("***** failed to calculate mean value *****");

}

}

}

// log.info("secMeanValue: {}", secMeanValue);

// Wrap the results in entity objects

List<MultiDataEntity> multiDataEntities = new ArrayList<>();

for (String key : secMeanValue.keySet()) {

MultiDataEntity multiDataEntity = new MultiDataEntity();

multiDataEntity.setProjectId(projectId);

multiDataEntity.setPartId(Const.PART_ID);

multiDataEntity.setTypeSymbol(typeSymbol);

multiDataEntity.setPickTime(pickTime);

multiDataEntity.setSerialCode(key);

List<Double> secMeans = secMeanValue.get(key);

Double[] values = new Double[secMeans.size()];

for (int i = 0; i < secMeans.size(); i++) {

values[i] = secMeans.get(i);

}

multiDataEntity.setValues(values);

multiDataEntities.add(multiDataEntity);

}

// Emit the result back into the stream

out.collect(multiDataEntities);

}

}
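The window function boils down to two steps: decide which window a reading belongs to, then average each quota per sensor inside that window. The sketch below shows both without Flink. It assumes one-second tumbling windows aligned to the epoch (the window size is not visible in the code above), uses running sums instead of collecting every value into nested lists, assumes every reading of a sensor carries the same number of quotas, and skips the rounding done via `Const.FORMAT_DOUBLE`. All names are hypothetical.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

final class SecondMeanSketch {

    /** One reading: a sensor id plus one value per quota. */
    static final class Reading {
        final String serialCode;
        final double[] values;

        Reading(String serialCode, double... values) {
            this.serialCode = serialCode;
            this.values = values;
        }
    }

    /** Start of the tumbling window that contains the timestamp. */
    static long windowStart(long timestampMillis, long windowSizeMillis) {
        return timestampMillis - Math.floorMod(timestampMillis, windowSizeMillis);
    }

    /** Mean of each quota per sensor, computed from running sums and counts. */
    static Map<String, double[]> meanPerSensor(List<Reading> readings) {
        Map<String, double[]> sums = new LinkedHashMap<>();
        Map<String, Integer> counts = new LinkedHashMap<>();
        for (Reading r : readings) {
            double[] sum = sums.computeIfAbsent(r.serialCode, k -> new double[r.values.length]);
            for (int i = 0; i < r.values.length; i++) {
                sum[i] += r.values[i];
            }
            counts.merge(r.serialCode, 1, Integer::sum);
        }
        Map<String, double[]> means = new LinkedHashMap<>();
        for (Map.Entry<String, double[]> e : sums.entrySet()) {
            int n = counts.get(e.getKey());
            double[] mean = new double[e.getValue().length];
            for (int i = 0; i < mean.length; i++) {
                mean[i] = e.getValue()[i] / n;
            }
            means.put(e.getKey(), mean);
        }
        return means;
    }

    public static void main(String[] args) {
        List<Reading> window = new ArrayList<>();
        window.add(new Reading("S001", 1.0, 10.0));
        window.add(new Reading("S001", 3.0, 20.0));
        window.add(new Reading("S002", 5.0, 5.0));
        System.out.println(meanPerSensor(window).get("S001")[0]); // 2.0
        System.out.println(windowStart(1630497600123L, 1000L));   // 1630497600000
    }
}
```

Running sums keep memory per sensor constant regardless of how many readings land in the window, which matters at millisecond sampling rates.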

Writing to Redis

package com.cloudansys.core.flink.sink;

import com.cloudansys.config.DefaultConfig;

import com.cloudansys.core.constant.Const;

import com.cloudansys.core.entity.MultiDataEntity;

import com.cloudansys.core.util.ElementUtil;

import lombok.extern.slf4j.Slf4j;

import org.apache.flink.configuration.Configuration;

import org.apache.flink.streaming.api.functions.sink.RichSinkFunction;

import redis.clients.jedis.Jedis;

import redis.clients.jedis.Pipeline;

import java.text.SimpleDateFormat;

import java.util.List;

@Slf4j

public class RedisBatchSink extends RichSinkFunction<List<MultiDataEntity>> {

private SimpleDateFormat format = new SimpleDateFormat(Const.FORMAT_SEC_TRIM);

private static final String quota_base = "q";

// Per-instance (not static) so parallel sink subtasks in one JVM do not share connections

private transient Pipeline pipeline;

private transient Jedis jedis;

@Override

public void open(Configuration parameters) throws Exception {

super.open(parameters);

jedis = DefaultConfig.getJedis();

pipeline = DefaultConfig.getPipeline();

}

/**

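* Data is stored in Redis using the List type

* Redis keeps only the most recent five minutes of real-time data, controlled by comparing the timestamp of the incoming value with that of the first value in the list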

*/

@Override

public void invoke(List<MultiDataEntity> element, Context context) throws Exception {

log.info("##### write in redis #####");

for (MultiDataEntity multiDataEntity : element) {

// Unpack the fields of the stream element before storing them in Redis

String projectId = multiDataEntity.getProjectId();

String typeSymbol = multiDataEntity.getTypeSymbol();

String serialCode = multiDataEntity.getSerialCode();

long timestamp = format.parse(multiDataEntity.getPickTime()).getTime();

String partId = multiDataEntity.getPartId();

Double[] values = multiDataEntity.getValues();

for (int i = 0; i < values.length; i++) {

// q1 q2 q3 ...

String quota = quota_base + (i + 1);

// Build the Redis key {projectId_partId_typeSymbol_serialCode_quota}

String key = ElementUtil.stringsToStr(projectId, partId, typeSymbol, serialCode, quota);

// Build the Redis value {timestamp_value}

String value = ElementUtil.stringsToStr(String.valueOf(timestamp), String.valueOf(values[i]));

// If the key does not exist in Redis yet, push the value directly

if (!jedis.exists(key)) {

pipeline.rpush(key, value);

} else {

// Otherwise read the timestamp of the first value to check whether the list already spans five minutes

long firstValueTimestamp = Long.parseLong(jedis.lindex(key, 0).split(Const.BAR_BOTTOM)[0]);

long interval = timestamp - firstValueTimestamp;

// Less than five minutes: just append

if (interval < 5 * 60 * 1000) {

pipeline.rpush(key, value);

} else {

// Five minutes are already stored: remove the first value, then append

jedis.lpop(key);

pipeline.rpush(key, value);

}

}

}

}

// Send the queued RPUSH commands so that later exists/lindex checks see them

pipeline.sync();

}

@Override

public void close() throws Exception {

super.close();

if (pipeline != null) {

pipeline.close();

}

}

}
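The five-minute retention rule in the Redis sink can be tried out with an in-memory deque standing in for the Redis list. The sketch below mirrors the sink's behaviour (remove at most one head entry per append) and, for comparison, a variant that removes every expired head entry. The variant is my addition, not something the sink does, and all names are hypothetical.

```java
import java.util.ArrayDeque;
import java.util.Deque;

final class FiveMinuteListSketch {

    static final long RETENTION_MILLIS = 5 * 60 * 1000L;

    /** Mirrors the sink: if the head is five minutes old or more, drop one entry, then append. */
    static void appendDroppingOne(Deque<Long> list, long timestampMillis) {
        Long first = list.peekFirst();
        if (first != null && timestampMillis - first >= RETENTION_MILLIS) {
            list.pollFirst();
        }
        list.addLast(timestampMillis);
    }

    /** Variant: drop every head entry that is five minutes old or more, then append. */
    static void appendDroppingAllExpired(Deque<Long> list, long timestampMillis) {
        while (!list.isEmpty() && timestampMillis - list.peekFirst() >= RETENTION_MILLIS) {
            list.pollFirst();
        }
        list.addLast(timestampMillis);
    }

    public static void main(String[] args) {
        Deque<Long> steady = new ArrayDeque<>();
        for (long t = 0; t < 400_000L; t += 1_000L) {
            appendDroppingOne(steady, t); // one value per second for 400 s
        }
        System.out.println(steady.size()); // 300

        Deque<Long> afterGap = new ArrayDeque<>();
        for (long t = 0; t < 10_000L; t += 1_000L) {
            appendDroppingOne(afterGap, t);
        }
        appendDroppingOne(afterGap, 1_000_000L); // sensor resumes after a long pause
        System.out.println(afterGap.size());     // 10
    }
}
```

At a steady rate both strategies keep exactly five minutes of data. They differ after a pause in the data: removing one entry per append leaves stale values in the list until enough new values have arrived to push them out.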