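k8s Study Notes

k8s Container Orchestration

[TOC]

1: Installing a k8s cluster

1.1 k8s architecture

Besides the core components, there are also some recommended add-ons: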

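| Component | Description |
| --- | --- |
| kube-dns | Provides DNS for the whole cluster |
| Ingress Controller | Provides an external entry point for services |
| Heapster | Provides resource monitoring |
| Dashboard | Provides a GUI |
| Federation | Provides clusters that span availability zones |
| Fluentd-elasticsearch | Provides cluster log collection, storage and querying |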

1.2: Set the IP addresses, hostnames and hosts resolution

10.0.0.11 k8s-master

10.0.0.12 k8s-node-1

10.0.0.13 k8s-node-2

Every node needs these hosts entries.
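
The hosts entries above can be added idempotently with a small helper. This is only a sketch: the function name `add_hosts` is hypothetical, and it assumes the three address and name pairs listed above.

```shell
# add_hosts FILE: append the three cluster entries to FILE, skipping any
# hostname that is already present, so it is safe to run more than once.
add_hosts() {
  hosts_file="$1"
  touch "$hosts_file"
  while read -r ip name; do
    # -w matches the hostname as a whole word, -F treats it as a fixed string
    grep -qwF -- "$name" "$hosts_file" || printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
  done <<'EOF'
10.0.0.11 k8s-master
10.0.0.12 k8s-node-1
10.0.0.13 k8s-node-2
EOF
}
```

On each node it would be called as root with `add_hosts /etc/hosts`.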

1.3: Install etcd on the master node

yum install etcd -y

vim /etc/etcd/etcd.conf

Line 6: ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"

Line 21: ETCD_ADVERTISE_CLIENT_URLS="http://10.0.0.11:2379"

systemctl start etcd.service

systemctl enable etcd.service

etcdctl set testdir/testkey0 0

etcdctl get testdir/testkey0

etcdctl -C http://10.0.0.11:2379 cluster-health

## etcd natively supports clustering.

## Exercise 1: install and deploy an etcd cluster with three nodes

1.4: Install kubernetes on the master node

yum install kubernetes-master.x86_64 -y

vim /etc/kubernetes/apiserver

Line 8: KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"

Line 11: KUBE_API_PORT="--port=8080"

Line 17: KUBE_ETCD_SERVERS="--etcd-servers=http://10.0.0.11:2379"

Line 23: KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ResourceQuota"

vim /etc/kubernetes/config

Line 22: KUBE_MASTER="--master=http://10.0.0.11:8080"

systemctl enable kube-apiserver.service

systemctl restart kube-apiserver.service

systemctl enable kube-controller-manager.service

systemctl restart kube-controller-manager.service

systemctl enable kube-scheduler.service

systemctl restart kube-scheduler.service

# Check that the services are installed and healthy

[root@k8s-master ~]# kubectl get componentstatus

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health":"true"}

1.5: Install kubernetes on the node machines

yum install kubernetes-node.x86_64 -y

vim /etc/kubernetes/config

Line 22: KUBE_MASTER="--master=http://10.0.0.11:8080"

vim /etc/kubernetes/kubelet

Line 5: KUBELET_ADDRESS="--address=0.0.0.0"

Line 8: KUBELET_PORT="--port=10250"

Line 11: KUBELET_HOSTNAME="--hostname-override=10.0.0.12" (use each node's own IP, e.g. 10.0.0.13 on k8s-node-2)

Line 14: KUBELET_API_SERVER="--api-servers=http://10.0.0.11:8080"

systemctl enable kubelet.service

systemctl start kubelet.service

systemctl enable kube-proxy.service

systemctl start kube-proxy.service

## Check from the master node

[root@k8s-master ~]# kubectl get nodes

NAME STATUS AGE

10.0.0.12 Ready 6m

10.0.0.13 Ready 3s

1.6: Configure the flannel network on all nodes

yum install flannel -y

sed -i 's#http://127.0.0.1:2379#http://10.0.0.11:2379#g' /etc/sysconfig/flanneld

## On the master node:

etcdctl mk /atomic.io/network/config '{ "Network": "172.16.0.0/16" }'

yum install docker -y

systemctl enable flanneld.service

systemctl restart flanneld.service

service docker restart

systemctl restart kube-apiserver.service

systemctl restart kube-controller-manager.service

systemctl restart kube-scheduler.service

## On the node machines:

systemctl enable flanneld.service

systemctl restart flanneld.service

service docker restart

systemctl restart kubelet.service

systemctl restart kube-proxy.service

1.7: Configure the master as an image registry

# On all nodes

vim /etc/sysconfig/docker

OPTIONS='--selinux-enabled --log-driver=journald --signature-verification=false --registry-mirror=https://registry.docker-cn.com --insecure-registry=10.0.0.11:5000'

systemctl restart docker

# On the master node

docker run -d -p 5000:5000 --restart=always --name registry -v /opt/myregistry:/var/lib/registry registry

# Verify the registry

docker load -i docker_nginx1.15.tar.gz

docker tag docker.io/nginx:latest 10.0.0.11:5000/nginx:1.15

docker push 10.0.0.11:5000/nginx:1.15

# Change the image download address on every node

vim /etc/kubernetes/kubelet

KUBELET_API_SERVER="--api-servers=http://10.0.0.11:8080"

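2: What is k8s, and what can it do?

k8s is a tool for managing a cluster of docker hosts.

2.1 Core features of k8s

Self-healing: restarts containers that fail; when a node becomes unavailable, replaces and reschedules the containers that ran on it; kills containers that do not respond to user-defined health checks; and does not advertise a container to clients until it is ready to serve.

Autoscaling: monitors the CPU load of the containers. If the average is above 80%, the number of containers is increased; if the average is below 10%, the number of containers is reduced.

Service discovery and load balancing: you do not need to modify your application to use an unfamiliar service discovery mechanism. Kubernetes gives containers their own IP addresses and a single DNS name for a set of containers, and can load-balance across them.

Rolling upgrade and one-step rollback: Kubernetes rolls out changes to an application or its configuration gradually while monitoring application health, so that it never kills all instances at the same time. If something goes wrong, Kubernetes rolls the change back for you. You can also draw on a growing ecosystem of deployment solutions.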

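2.2 History of k8s

2014: the project was started as a docker container orchestration tool

July 2015: kubernetes 1.0 was released and the project joined the CNCF

2016: kubernetes beat its two rivals, docker swarm and mesos; version 1.2

2017

2018: k8s graduated from the CNCF

2019: 1.13, 1.14, 1.15

CNCF: Cloud Native Computing Foundation

kubernetes (k8s): Greek for "helmsman" or "pilot"; it works in the container orchestration space.

It builds on Google's 16 years of experience running containers on its borg container management platform; borg was re-implemented in golang as kubernetes.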

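2.3 Ways to install k8s

yum install: version 1.5; the easiest to get working and the best for learning

Compile from source: the hardest; can install the latest version

Binary install: many tedious steps; can install the latest version; can be automated with shell, ansible or saltstack

kubeadm: the easiest install; needs network access; can install the latest version

minikube: suited to developers who want to try k8s; needs network access

2.4 Use cases for k8s

k8s is best suited to running microservice projects!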

3: Common k8s resources

3.1 Creating a pod resource

Main parts of a k8s yaml file

apiVersion: v1   # API version
kind: Pod        # resource type
metadata:        # attributes
spec:            # details

k8s_pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: nginx
  labels:
    app: web
spec:
  containers:
  - name: nginx
    image: 10.0.0.11:5000/nginx:1.13
    ports:
    - containerPort: 80

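A pod consists of at least two containers: the pod infrastructure container and the business container(s).

Pod config file 2:

apiVersion: v1
kind: Pod
metadata:
  name: test
  labels:
    app: web
spec:
  containers:
  - name: nginx
    image: 10.0.0.11:5000/nginx:1.13
    ports:
    - containerPort: 80
  - name: busybox
    image: 10.0.0.11:5000/busybox:latest
    command: ["sleep","10000"]

The pod is the smallest resource unit in k8s.

After editing the yaml you can delete the old resource and create it again, or apply the change directly:

kubectl delete -f xxx.yaml

or

kubectl apply -f xxx.yaml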

3.2 ReplicationController resource

rc: keeps the specified number of pods alive at all times; an rc finds its pods through a label selector.

Common operations on k8s resources:

kubectl create -f xxx.yaml

kubectl get pod|rc

kubectl get pod -o wide

kubectl describe pod nginx

kubectl delete pod nginx, or kubectl delete -f xxx.yaml

kubectl edit pod nginx

Create an rc

apiVersion: v1
kind: ReplicationController
metadata:
  name: nginx
spec:
  replicas: 5
  selector:
    app: myweb
  template:
    metadata:
      labels:
        app: myweb
    spec:
      containers:
      - name: myweb
        image: 10.0.0.11:5000/nginx:1.13
        ports:
        - containerPort: 80

Rolling upgrade of an rc

Create a new file nginx-rc1.15.yaml

Upgrade

kubectl rolling-update nginx -f nginx-rc1.15.yaml --update-period=10s

Roll back

kubectl rolling-update nginx2 -f nginx-rc.yaml --update-period=1s
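
The notes do not show the contents of nginx-rc1.15.yaml. The sketch below writes one possible version with a shell heredoc. The rc name nginx2 is taken from the rollback command above; the label `app: myweb2` and the 1.15 image tag are assumptions. `kubectl rolling-update` expects the new rc to have a different name and at least one changed selector label, which is why both differ from the original rc.

```shell
# Write a hypothetical nginx-rc1.15.yaml for the rolling update.
cat > nginx-rc1.15.yaml <<'EOF'
apiVersion: v1
kind: ReplicationController
metadata:
  name: nginx2            # assumed: must differ from the old rc name (nginx)
spec:
  replicas: 5
  selector:
    app: myweb2           # assumed: at least one selector label must change
  template:
    metadata:
      labels:
        app: myweb2
    spec:
      containers:
      - name: myweb
        image: 10.0.0.11:5000/nginx:1.15
        ports:
        - containerPort: 80
EOF
```

Because the pod label changes from myweb to myweb2, a service that still selects the old label stops matching the pods after the upgrade.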

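3.3 service resource

rc + svc together provide high availability and access from outside the cluster.

A service exposes ports on behalf of pods.

Create a service

apiVersion: v1
kind: Service
metadata:
  name: myweb
spec:
  type: NodePort        # or ClusterIP
  ports:
  - port: 80            # port on the cluster IP (VIP)
    nodePort: 30000     # port on the node
    targetPort: 80      # port on the pod
  selector:
    app: myweb2

If type is omitted, the service is of type ClusterIP and cannot be reached from outside.

View all resources

kubectl get all -o wide

kubectl describe svc myweb

kubectl edit svc myweb

Change app: myweb2 to app: myweb

Change the nodePort range

vim /etc/kubernetes/apiserver

KUBE_API_ARGS="--service-node-port-range=3000-50000"

By default a service uses iptables for load balancing; from k8s 1.8 onwards, lvs (layer-4 load balancing) is recommended.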

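3.4 deployment resource

A higher-level resource than rc, which it has replaced.

After a rolling upgrade of an rc the labels no longer match, which interrupts access to the service; k8s introduced the deployment resource to solve this.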

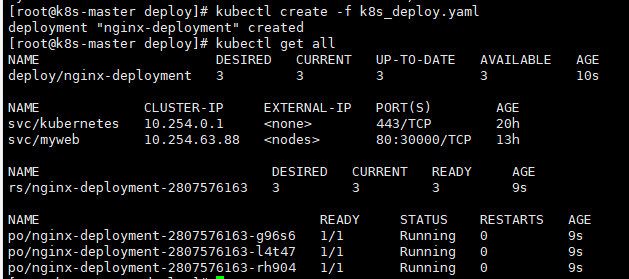

Create a deployment

kubectl create -f k8s_deploy.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 3
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: 10.0.0.11:5000/nginx:1.13
        ports:
        - containerPort: 80
        resources:
          limits:
            cpu: 100m
          requests:
            cpu: 100m

rs (ReplicaSet) shares 90% of its functionality with rc.

Upgrading and rolling back a deployment

Upgrade: edit the image version directly

kubectl edit deployment nginx-deployment

Roll back:

View the revision history

[root@k8s-master deploy]# kubectl rollout history deployment nginx-deployment

deployments "nginx-deployment"

REVISION CHANGE-CAUSE

1

2

Method 1: roll back to the previous revision

[root@k8s-master deploy]# kubectl rollout undo deployment nginx-deployment

deployment "nginx-deployment" rolled back

[root@k8s-master deploy]# kubectl rollout history deployment nginx-deployment

deployments "nginx-deployment"

REVISION CHANGE-CAUSE

2

3

Method 2: roll back to a specific revision

[root@k8s-master deploy]# kubectl rollout undo deployment nginx-deployment --to-revision=2

deployment "nginx-deployment" rolled back

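Creating and upgrading with the commands below records the change information for each revision.

Create a deployment from the command line

kubectl run nginx --image=10.0.0.11:5000/nginx:1.13 --replicas=3 --record

Upgrade the version from the command line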

kubectl set image deploy nginx nginx=10.0.0.11:5000/nginx:1.15

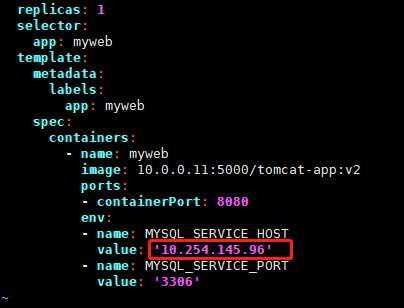

3.5 tomcat+mysql exercise

In k8s, containers reach each other through the VIP address!

[root@k8s-master tomcat-rc]# kubectl delete node 10.0.0.12

node "10.0.0.12" deleted

[root@k8s-node-2 ~]# docker load -i docker-mysql-5.7.tar.gz

[root@k8s-node-2 ~]# docker load -i tomcat-app-v2.tar.gz

[root@k8s-node-2 ~]# docker tag docker.io/mysql:5.7 10.0.0.11:5000/mysql:5.7

[root@k8s-node-2 ~]# docker tag docker.io/kubeguide/tomcat-app:v2 10.0.0.11:5000/tomcat-app:v2

[root@k8s-master tomcat-rc]# kubectl create -f mysql-rc.yml

[root@k8s-master tomcat-rc]# kubectl create -f mysql-svc.yml

[root@k8s-master tomcat-rc]# vim tomcat-rc.yml

[root@k8s-master tomcat-rc]# kubectl delete -f tomcat-svc.yml

service "myweb" deleted

[root@k8s-master tomcat-rc]# kubectl create -f tomcat-svc.yml

4: k8s add-ons

4.1 dns service

Purpose: resolve service names to their CLUSTER-IP

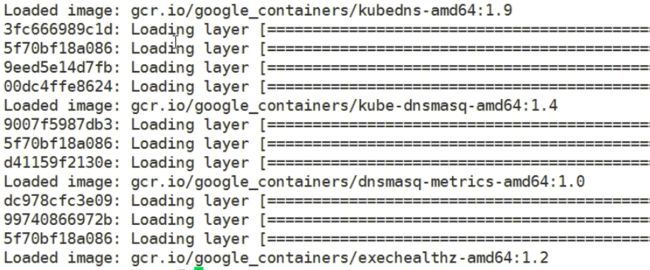

Install the dns service

1: Download the dns docker image bundle

wget http://192.168.12.201/docker_image/docker_k8s_dns.tar.gz

2: Import the dns docker image bundle (on node2)

3: Edit skydns-rc.yaml

Later versions of k8s use coredns.

spec:
  nodeSelector:
    kubernetes.io/hostname: 10.0.0.13
  containers:

4: Create the dns service

kubectl create -f skydns-rc.yaml

This starts four containers.

5: Check

kubectl get all --namespace=kube-system

6: Edit the kubelet config file on every node

vim /etc/kubernetes/kubelet

KUBELET_ARGS="--cluster_dns=10.254.230.254 --cluster_domain=cluster.local"

systemctl restart kubelet
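
With the cluster domain set to cluster.local as above, a service is normally resolvable inside the cluster as `<service>.<namespace>.svc.cluster.local`. The helper below only builds that name; `svc_fqdn` is a hypothetical function name and `mysql` is an example service.

```shell
# svc_fqdn SERVICE [NAMESPACE] [CLUSTER_DOMAIN]
# Prints the DNS name under which the cluster DNS publishes a service.
svc_fqdn() {
  printf '%s.%s.svc.%s\n' "$1" "${2:-default}" "${3:-cluster.local}"
}

svc_fqdn mysql   # prints mysql.default.svc.cluster.local
```

Pods in the same namespace can usually use the short name (here `mysql`), because the kubelet adds the namespace and cluster domain to the pod's DNS search list.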

4.2 Namespaces

Namespaces isolate resources.

Create a namespace

[root@k8s-master tomcat-rc]# kubectl create namespace spj

namespace "spj" created

[root@k8s-master tomcat-rc]# kubectl get namespace

NAME STATUS AGE

default Active 22h

kube-system Active 22h

spj Active 3s

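4.3 Health checks

4.3.1 Types of probe

livenessProbe: health check; periodically checks whether the service is alive, and restarts the container if the check fails

readinessProbe: availability check; periodically checks whether the service is ready, and removes the pod from the service's endpoints if it is not

4.3.2 Probe check methods

- exec: run a command

- httpGet: check the status code returned by an http request

- tcpSocket: test whether a port accepts connections

4.3.3 Using the exec liveness probe

vi nginx_pod_exec.yaml

apiVersion: v1
kind: Pod
metadata:
  name: exec
spec:
  containers:
  - name: nginx
    image: 10.0.0.11:5000/nginx:1.13
    ports:
    - containerPort: 80
    args:
    - /bin/sh
    - -c
    - touch /tmp/healthy; sleep 30; rm -rf /tmp/healthy; sleep 600
    livenessProbe:
      exec:
        command:
        - cat
        - /tmp/healthy
      initialDelaySeconds: 5   # delay before the first check
      periodSeconds: 5         # interval between checks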

4.3.4 Using the httpGet liveness probe

vi nginx_pod_httpGet.yaml

apiVersion: v1
kind: Pod
metadata:
  name: httpget
spec:
  containers:
  - name: nginx
    image: 10.0.0.11:5000/nginx:1.13
    ports:
    - containerPort: 80
    livenessProbe:
      httpGet:
        path: /index.html
        port: 80
      initialDelaySeconds: 3
      periodSeconds: 3

4.3.5 Using the tcpSocket liveness probe

vi nginx_pod_tcpSocket.yaml

apiVersion: v1
kind: Pod
metadata:
  name: tcpsocket   # pod names must be lowercase
spec:
  containers:
  - name: nginx
    image: 10.0.0.11:5000/nginx:1.13
    ports:
    - containerPort: 80
    livenessProbe:
      tcpSocket:
        port: 80
      initialDelaySeconds: 3
      periodSeconds: 3

4.3.6 Using the httpGet readiness probe

vi nginx-rc-httpGet.yaml

apiVersion: v1
kind: ReplicationController
metadata:
  name: readiness
spec:
  replicas: 2
  selector:
    app: readiness
  template:
    metadata:
      labels:
        app: readiness
    spec:
      containers:
      - name: readiness
        image: 10.0.0.11:5000/nginx:1.13
        ports:
        - containerPort: 80
        readinessProbe:
          httpGet:
            path: /qiangge.html
            port: 80
          initialDelaySeconds: 3
          periodSeconds: 3

4.4 dashboard web UI

1: Upload and import the image, then tag it

kubernetes-dashboard-amd64_v1.4.1.tar.gz

2: Create the dashboard deployment and service

dashboard-deploy.yaml dashboard-svc.yaml

3: Open http://10.0.0.11:8080/ui/

4.5 Accessing a service through the apiserver reverse proxy

proxy

namespaces=kube-system

services=kubernetes-dashboard

Option 1: NodePort type

type: NodePort
ports:
- port: 80
  targetPort: 80
  nodePort: 30008

Option 2: ClusterIP type

type: ClusterIP
ports:
- port: 80
  targetPort: 80
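
The proxy, namespaces and services values above combine into a URL on the apiserver. The sketch below builds it using the legacy path layout, which is assumed to match the k8s 1.5 installed in these notes; `proxy_url` is a hypothetical helper name.

```shell
# proxy_url APISERVER NAMESPACE SERVICE
# Builds the apiserver reverse-proxy URL for a service (legacy layout,
# assumed for old releases such as 1.5).
proxy_url() {
  printf 'http://%s/api/v1/proxy/namespaces/%s/services/%s/\n' "$1" "$2" "$3"
}

proxy_url 10.0.0.11:8080 kube-system kubernetes-dashboard
```

Newer releases moved the proxy segment to the end of the path: `/api/v1/namespaces/<namespace>/services/<service>/proxy/`.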

5: k8s autoscaling

Autoscaling in k8s requires the heapster monitoring add-on.

5.1 Install heapster monitoring

kubectl get all -n kube-system -o wide

1: Upload and import the images, then tag them

ls *.tar.gz

for n in *.tar.gz; do docker load -i "$n"; done

docker tag docker.io/kubernetes/heapster_grafana:v2.6.0 10.0.0.11:5000/heapster_grafana:v2.6.0

docker tag docker.io/kubernetes/heapster_influxdb:v0.5 10.0.0.11:5000/heapster_influxdb:v0.5

docker tag docker.io/kubernetes/heapster:canary 10.0.0.11:5000/heapster:canary
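
The three tag commands above follow one pattern: keep the final `name:tag` part of the image and put the private registry in front. A sketch of that pattern follows; `registry_tag` is a hypothetical helper, and the loop only prints the commands (a dry run) instead of running docker.

```shell
REGISTRY=10.0.0.11:5000

# registry_tag IMAGE: print IMAGE re-homed under $REGISTRY.
registry_tag() {
  # ${1##*/} strips everything up to the last "/", leaving name:tag
  printf '%s/%s\n' "$REGISTRY" "${1##*/}"
}

for img in \
  docker.io/kubernetes/heapster_grafana:v2.6.0 \
  docker.io/kubernetes/heapster_influxdb:v0.5 \
  docker.io/kubernetes/heapster:canary
do
  # Dry run: remove "echo" to actually tag (and add a docker push if needed).
  echo docker tag "$img" "$(registry_tag "$img")"
done
```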

2: Upload the config files

kubectl create -f .

3: Open the dashboard to verify

5.2 Autoscaling

1: Edit the rc config file

containers:
- name: myweb
  image: 10.0.0.11:5000/nginx:1.13
  ports:
  - containerPort: 80
  resources:
    limits:
      cpu: 100m
    requests:
      cpu: 100m

2: Create the autoscaling rule

kubectl autoscale -n qiangge replicationcontroller myweb --max=8 --min=1 --cpu-percent=8
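
To see roughly how `--min`, `--max` and `--cpu-percent` interact, the sketch below applies the commonly documented scaling rule, desired = ceil(current replicas × current CPU% / target CPU%), clamped to the min and max. It is a simplification: the real controller also applies a tolerance and waits between scaling steps, and behaviour may differ in detail on old releases. `desired_replicas` is a hypothetical name.

```shell
# desired_replicas CURRENT CPU_PERCENT TARGET_PERCENT MIN MAX
desired_replicas() {
  # Integer ceiling of CURRENT * CPU_PERCENT / TARGET_PERCENT
  want=$(( ($1 * $2 + $3 - 1) / $3 ))
  if [ "$want" -lt "$4" ]; then want=$4; fi
  if [ "$want" -gt "$5" ]; then want=$5; fi
  echo "$want"
}

desired_replicas 1 40 8 1 8   # 1 pod at 40% CPU, target 8%: prints 5
desired_replicas 2 40 8 1 8   # would need 10 pods, capped by --max: prints 8
```

With a target as low as 8%, even light load from ab is enough to trigger scale-up, which makes the effect easy to observe in a test.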

3: Test

ab -n 1000000 -c 40 http://172.16.28.6/index.html

Scale-up (screenshot)

Scale-down:

6: Persistent storage

pv: persistent volume, a cluster-wide resource (like pv and node)

pvc: persistent volume claim, a namespaced resource (like pod, rc and svc)

6.1: Install the nfs server (10.0.0.11)

yum install nfs-utils.x86_64 -y

mkdir /data

vim /etc/exports

/data 10.0.0.0/24(rw,async,no_root_squash,no_all_squash)

systemctl start rpcbind

systemctl start nfs

6.2: Install the nfs client on the node machines

yum install nfs-utils.x86_64 -y

showmount -e 10.0.0.11

6.3: Create the pv and pvc

Upload the yaml config files and create the pv and pvc.
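
The pv and pvc files themselves are not shown in these notes. The sketch below writes one possible pair for the nfs export from 6.1. The file names, the pv name, the `type: nfs` label and the 10Gi size are all assumptions; the claim name tomcat-mysql is chosen to match the claimName used by the mysql rc in 6.4.

```shell
# Hypothetical pv backed by the nfs export /data on 10.0.0.11.
cat > mysql-pv.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolume
metadata:
  name: tomcat-mysql        # assumed name
  labels:
    type: nfs               # assumed label
spec:
  capacity:
    storage: 10Gi           # assumed size
  accessModes:
  - ReadWriteMany
  nfs:
    path: /data
    server: 10.0.0.11
EOF

# Hypothetical pvc that binds to a pv with enough capacity.
cat > mysql-pvc.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: tomcat-mysql
spec:
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 10Gi
EOF
```

After `kubectl create -f mysql-pv.yaml -f mysql-pvc.yaml`, `kubectl get pv,pvc` should show both as Bound if the sizes and access modes are compatible.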

6.4: Create mysql-rc, using the volume in the pod template

    volumeMounts:
    - name: mysql
      mountPath: /var/lib/mysql
  volumes:
  - name: mysql
    persistentVolumeClaim:
      claimName: tomcat-mysql

6.5: Verify persistence

Check 1: delete the mysql pod; the database data is not lost

kubectl delete pod mysql-gt054

Check 2: look on the nfs server for the mysql data files

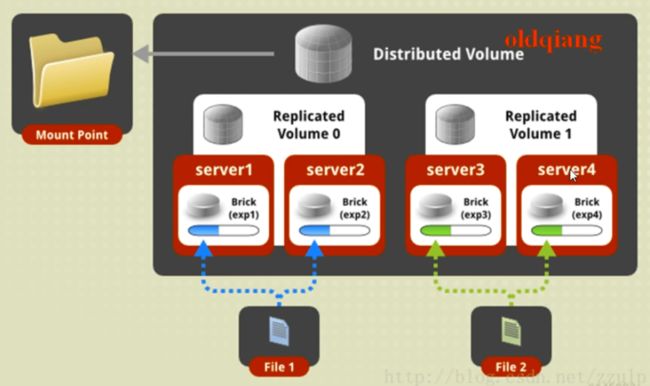

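6.6: Distributed storage with glusterfs

a: What is glusterfs

Glusterfs is an open-source distributed file system with strong scale-out capability. It can support several PB of storage and thousands of clients, joined over the network into one parallel network file system. It offers scalability, high performance and high availability.

b: Install glusterfs

On all nodes:

yum install centos-release-gluster -y

yum install glusterfs-server -y

systemctl start glusterd.service

systemctl enable glusterd.service

mkdir -p /gfs/test1

mkdir -p /gfs/test2

Add storage: attach a disk to each node and mount it

c: Add nodes to the storage pool

On the master node:

gluster pool list

gluster peer probe k8s-node-1

gluster peer probe k8s-node-2

gluster pool list

d: glusterfs volume management

Create a distributed replicated volume

gluster volume create qiangge replica 2 k8s-master:/gfs/test1 k8s-master:/gfs/test2 k8s-node-1:/gfs/test1 k8s-node-1:/gfs/test2 force

Start the volume

gluster volume start qiangge

View the volume

gluster volume info qiangge

Mount the volume

mount -t glusterfs 10.0.0.11:/qiangge /mnt

e: How a distributed replicated volume works

f: Expanding a distributed replicated volume

Check the capacity before expanding:

df -h

Expand:

gluster volume add-brick qiangge k8s-node-2:/gfs/test1 k8s-node-2:/gfs/test2 force

Check the capacity after expanding:

df -h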

6.7 Connecting k8s to glusterfs storage

a: Create the endpoints

vi glusterfs-ep.yaml

apiVersion: v1
kind: Endpoints
metadata:
  name: glusterfs
  namespace: default
subsets:
- addresses:
  - ip: 10.0.0.11
  - ip: 10.0.0.12
  - ip: 10.0.0.13
  ports:
  - port: 49152
    protocol: TCP

b: Create the service

vi glusterfs-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: glusterfs
  namespace: default
spec:
  ports:
  - port: 49152
    protocol: TCP
    targetPort: 49152
  sessionAffinity: None
  type: ClusterIP

c: Create a gluster-type pv

apiVersion: v1
kind: PersistentVolume
metadata:
  name: gluster
  labels:
    type: glusterfs
spec:
  capacity:
    storage: 50Gi
  accessModes:
  - ReadWriteMany
  glusterfs:
    endpoints: "glusterfs"
    path: "qiangge"
    readOnly: false

d: Create the pvc

(omitted)

e: Use gluster in a pod

vi nginx_pod.yaml

……

    volumeMounts:
    - name: nfs-vol2
      mountPath: /usr/share/nginx/html
  volumes:
  - name: nfs-vol2
    persistentVolumeClaim:
      claimName: gluster

7: CI/CD with jenkins

7.1: Install gitlab and upload the code

10.0.0.15

# a: Install

wget https://mirrors.tuna.tsinghua.edu.cn/gitlab-ce/yum/el7/gitlab-ce-11.9.11-ce.0.el7.x86_64.rpm

yum localinstall gitlab-ce-11.9.11-ce.0.el7.x86_64.rpm -y

# b: Configure

vim /etc/gitlab/gitlab.rb

external_url 'http://10.0.0.15'

prometheus_monitoring['enable'] = false

# c: Apply the configuration and start the services

gitlab-ctl reconfigure

# Open http://10.0.0.15 in a browser, change the root password and create a project

# Upload the code to the git repository

cd /srv/

rz -E

unzip xiaoniaofeifei.zip

rm -fr xiaoniaofeifei.zip

git config --global user.name "Administrator"

git config --global user.email "[email protected]"

git init

git remote add origin http://10.0.0.15/root/xiaoniao.git

git add .

git commit -m "Initial commit"

git push -u origin master

yum install docker -y

systemctl start docker

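7.2 Install jenkins and build docker images automatically

1: Install jenkins

10.0.0.14

cd /opt/

rz -E

rpm -ivh jdk-8u102-linux-x64.rpm

mkdir /app

tar xf apache-tomcat-8.0.27.tar.gz -C /app

rm -fr /app/apache-tomcat-8.0.27/webapps/*

mv jenkins.war /app/apache-tomcat-8.0.27/webapps/ROOT.war

tar xf jenkin-data.tar.gz -C /root

/app/apache-tomcat-8.0.27/bin/startup.sh

netstat -lntup

2: Access jenkins

Open http://10.0.0.14:8080/; the default account and password are admin:123456

3: Configure the credentials jenkins uses to pull code from gitlab

a: Generate a key pair on the jenkins host

ssh-keygen -t rsa (press Enter at every prompt)

b: Copy the public key and paste it into gitlab

c: Create a global credential in jenkins

4: Test pulling the code

Create a freestyle job

5: Write the dockerfile and test it

# vim dockerfile

FROM 10.0.0.11:5000/nginx:1.13

ADD . /usr/share/nginx/html

# List the files that docker build should not add to the image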

vim .dockerignore

dockerfile

docker build -t xiaoniao:v1 .

docker run -d -p 88:80 xiaoniao:v1

Open a browser and test access to the xiaoniaofeifei project.

6: Push the dockerfile and .dockerignore to the private git repository

git add dockerfile .dockerignore

git commit -m "first commit"

git push -u origin master

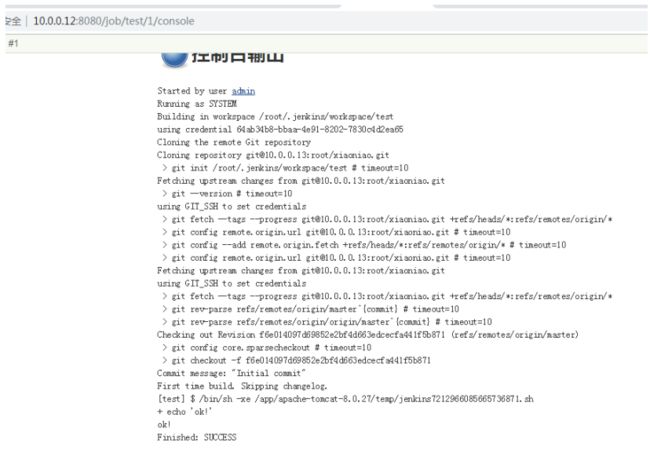

7: Click "Build Now" in jenkins to build the docker image automatically and push it to the private registry

Edit the jenkins job configuration

docker build -t 10.0.0.11:5000/test:v$BUILD_ID .

docker push 10.0.0.11:5000/test:v$BUILD_ID

7.3 Deploy the application to k8s automatically from jenkins

kubectl -s 10.0.0.11:8080 get nodes

if [ -f /tmp/xiaoniao.lock ]; then
    # Already deployed once: build, push, then update the image
    docker build -t 10.0.0.11:5000/xiaoniao:v$BUILD_ID .
    docker push 10.0.0.11:5000/xiaoniao:v$BUILD_ID
    kubectl -s 10.0.0.11:8080 set image -n xiaoniao deploy xiaoniao xiaoniao=10.0.0.11:5000/xiaoniao:v$BUILD_ID
    echo "Update succeeded"
else
    # First run: build, push, then create the namespace, deployment and service
    docker build -t 10.0.0.11:5000/xiaoniao:v$BUILD_ID .
    docker push 10.0.0.11:5000/xiaoniao:v$BUILD_ID
    kubectl -s 10.0.0.11:8080 create namespace xiaoniao
    kubectl -s 10.0.0.11:8080 run xiaoniao -n xiaoniao --image=10.0.0.11:5000/xiaoniao:v$BUILD_ID --replicas=3 --record
    kubectl -s 10.0.0.11:8080 expose -n xiaoniao deployment xiaoniao --port=80 --type=NodePort
    port=`kubectl -s 10.0.0.11:8080 get svc -n xiaoniao|grep -oP '(?<=80:)\d+'`
    echo "Your project is available at http://10.0.0.13:$port"
    touch /tmp/xiaoniao.lock
fi
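
The script finds the NodePort by taking the digits that follow `80:` in the `kubectl get svc` output. The sketch below shows the same extraction on a made-up sample line, using sed instead of `grep -oP` so it also works where PCRE support is missing. `nodeport_for` and the sample line are hypothetical.

```shell
# nodeport_for PORT: read `kubectl get svc` output on stdin and print the
# NodePort mapped to PORT, i.e. the digits that follow "PORT:".
nodeport_for() {
  # [^0-9] before the port keeps 8080:... from matching when PORT is 80
  sed -n "s/.*[^0-9]$1:\([0-9][0-9]*\).*/\1/p"
}

# Made-up sample of one service line; real output may have other columns.
sample='xiaoniao   10.254.12.34   <nodes>   80:31234/TCP   1m'
echo "$sample" | nodeport_for 80   # prints 31234
```

In the job it would replace the grep stage: `port=$(kubectl -s 10.0.0.11:8080 get svc -n xiaoniao | nodeport_for 80)`.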

One-step rollback from jenkins

kubectl -s 10.0.0.11:8080 rollout undo -n xiaoniao deployment xiaoniao