《神经网络与深度学习》习题解答(至第七章)

部分题目的个人解答,参考了github上的习题解答分享与知乎解答。题目是自己做的,部分解答可能出错,有问题的解题部分欢迎指正。原文挂在自己的自建博客上。

第二章

2-1

直观上,对特定的分类问题,平方差的损失有上限(所有标签都错,损失值是一个有效值),但交叉熵则可以用整个非负域来反映优化程度的程度。

从本质上看,平方差的意义和交叉熵的意义不一样。概率理解上,平方损失函数意味着模型的输出是以预测值为均值的高斯分布,损失函数是在这个预测分布下真实值的似然度,softmax损失意味着真实标签的似然度。

分类问题中的标签,是没有连续的概念的。1-hot作为标签的一种表达方式,每个标签之间的距离也是没有实际意义的,所以预测值和标签两个向量之间的平方差这个值不能反应分类这个问题的优化程度。

大部分分类问题并非服从高斯分布

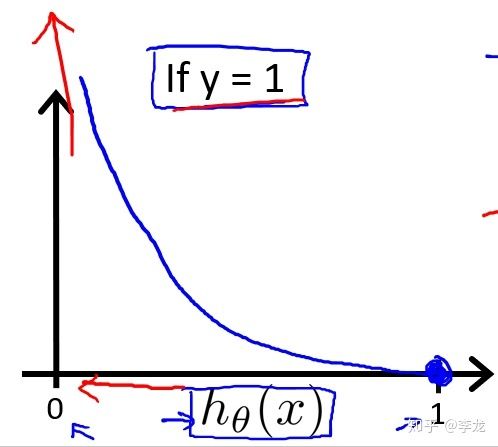

根据吴恩达机器学习视频: J ( θ ) = 1 m ∑ i = 1 m 1 2 ( h θ ( x ( i ) ) − y ( i ) ) 2 J(\theta)=\frac{1}{m}\sum^m_{i=1}\frac{1}{2}(h_{\theta}(x^{(i)})-y^{(i)})^2 J(θ)=m1∑i=1m21(hθ(x(i))−y(i))2,h表示的是你的预测结果,y表示对应的标签,J就可以理解为用二范数的方式将预测和标签的差距表示出来,模型学习的过程就是优化权重参数,使得J达到近似最小值,理论上这个损失函数是很有效果的,但是在实践中却又些问题,它这个h是激活函数激活后的结果,激活函数通常是非线性函数,例如sigmoid之类的,这就使得这个J的曲线变得很复杂,并不是凸函数,不利于优化,很容易陷入到局部最优解的情况。吴恩达说当激活函数是sigmoid的时候,J的曲线就如下图所示,可以看到这个曲线是很难求出全局最小值的,稍不留神就是局部最小值。

交叉熵的公式为: C o s t ( h θ ( x ) , y ) = − y ⋅ l o g ( h θ ( x ) ) + ( y − 1 ) ⋅ l o g ( 1 − h θ ( x ) ) Cost(h_{\theta}(x),y)=-y\cdot log(h_{\theta}(x))+(y-1)\cdot log(1-h_{\theta}(x)) Cost(hθ(x),y)=−y⋅log(hθ(x))+(y−1)⋅log(1−hθ(x))

使用交叉熵的时候就变成:

2-2

最优参数

令 [ r ‾ ( n ) ] 2 = r ( n ) [\overline{r}^{(n)}]^2 = r^{(n)} [r(n)]2=r(n),则:

R ( w ) = 1 2 ∑ n = 1 N [ r ‾ ( n ) ] 2 ( y ( n ) − w ⊤ x ( n ) ) 2 = 1 2 ∑ n = 1 N ( ( ‾ r ) ( n ) ( y ( n ) − w ⊤ x ( n ) ) ) 2 = 1 2 ∣ ∣ r ‾ ⊤ ( y − X ‾ w ) ∣ ∣ 2 \begin{aligned} R(w) &=\frac{1}{2}\sum^N_{n=1}[\overline{r}^{(n)}]^2(y^{(n)}-w^\top x^{(n)})^2 \\ & =\frac{1}{2}\sum^N_{n=1}(\overline(r)^{(n)}(y^{(n)}-w^\top x^{(n)}))^2 \\ & =\frac{1}{2}||\overline{r}^\top(y-\overline{X}w)||^2 \end{aligned} R(w)=21n=1∑N[r(n)]2(y(n)−w⊤x(n))2=21n=1∑N((r)(n)(y(n)−w⊤x(n)))2=21∣∣r⊤(y−Xw)∣∣2

损失函数对参数 w w w求导:

∂ R ( w ) ∂ w = 1 2 ∣ ∣ r ‾ ⊤ ( y − X ‾ w ) ∣ ∣ 2 ∂ w = − X r ‾ r ‾ ⊤ ( y − X ⊤ w ) = 0 \begin{aligned} \frac{\partial R(w)}{\partial w}&= \frac{\frac{1}{2}||\overline{r}^\top(y-\overline{X}w)||^2}{\partial w} \\ &= -X\overline{r}\overline{r}^\top (y-X^\top w) \\ &= 0 \end{aligned} ∂w∂R(w)=∂w21∣∣r⊤(y−Xw)∣∣2=−Xrr⊤(y−X⊤w)=0

于是有: w ∗ = ( X r ‾ r ‾ ⊤ X ⊤ ) − 1 X r ‾ r ‾ ⊤ y w^* =(X\overline{r}\overline{r}^\top X^\top)^{-1}X\overline{r}\overline{r}^\top y w∗=(Xrr⊤X⊤)−1Xrr⊤y

参数 r ( n ) r^{(n)} r(n)

这个参数是为了对不同的数据进行加权,相当于不同数据对结果的影响程度会不同,如果某个数据比较重要,希望对其高度重视,那么就可以设置相对较大的权重,反之则设置小一点。

2-3

已知定理: A 、 B A、B A、B分别为 n × m , m × s n \times m,m\times s n×m,m×s的矩阵,则 r a n k ( A B ) ≤ m i n { r a n k ( A ) , r a n k ( B ) } rank(AB)\leq min\{rank(A),rank(B)\} rank(AB)≤min{rank(A),rank(B)}

X ∈ R ( d + 1 ) × N , X T ∈ R N × ( d + 1 ) X\in \mathbb{R}^{(d+1)\times N},X^T \in \mathbb{R}^{N\times (d+1)} X∈R(d+1)×N,XT∈RN×(d+1)

r a n k ( X ) = r a n k ( X ⊤ ) = m i n ( ( d + 1 ) , N ) , N < d + 1 rank(X)=rank(X^\top)=min((d+1),N),N

r a n k ( X X ⊤ ) ≤ N , N = N rank(XX^\top)\leq{N,N}=N rank(XX⊤)≤N,N=N

2-4

R ( w ) = 1 2 ∣ ∣ y − X ⊤ w ∣ ∣ 2 + 1 2 λ ∣ ∣ w ∣ ∣ 2 R(w)=\frac{1}{2}||y-X^\top w||^2+\frac{1}{2}\lambda||w||^2 R(w)=21∣∣y−X⊤w∣∣2+21λ∣∣w∣∣2, w ∗ = ( X X ⊤ + λ I ) − 1 X y w^* = (XX^\top+\lambda I)^{-1}Xy w∗=(XX⊤+λI)−1Xy

可得:

∂ R ( w ) ∂ w = 1 2 ∂ ∣ ∣ y − X ⊤ w ∣ ∣ 2 + λ ∣ ∣ w ∣ ∣ 2 ∂ w = − X ( y − X ⊤ w ) + λ w = 0 \begin{aligned} \frac{\partial R(w)}{\partial w} &=\frac{1}{2}\frac{\partial ||y-X^\top w||^2+\lambda||w||^2}{\partial w} \\ &=-X(y-X^\top w)+\lambda w \\ &= 0 \end{aligned} ∂w∂R(w)=21∂w∂∣∣y−X⊤w∣∣2+λ∣∣w∣∣2=−X(y−X⊤w)+λw=0

因此有:

− X Y + X X ⊤ w + λ w = 0 ( X X ⊤ + λ I ) w = X Y w ∗ = ( X X ⊤ + λ I ) − 1 X y -XY+XX^\top w+\lambda w = 0 \\ (XX^\top+\lambda I)w =XY \\ w^* = (XX^\top+\lambda I)^{-1}Xy −XY+XX⊤w+λw=0(XX⊤+λI)w=XYw∗=(XX⊤+λI)−1Xy

2-5

根据题意,有: log p ( y ∣ X ; w , σ ) = ∑ n = 1 N log N ( y ( n ) w ⊤ x ( n ) , σ 2 ) \log p(y|X;w,\sigma) =\sum^N_{n=1}\log N(y^{(n)}w^\top x^{(n)},\sigma^2) logp(y∣X;w,σ)=∑n=1NlogN(y(n)w⊤x(n),σ2)

令 ∂ log p ( y ∣ X ; w , σ ) ∂ w = 0 \frac{\partial \log p(y|X;w,\sigma)}{\partial w} = 0 ∂w∂logp(y∣X;w,σ)=0,因此有:

∂ ( ∑ n = 1 N − ( y ( n ) − w ⊤ x ( n ) ) 2 2 β ) ∂ w = 0 ∂ 1 2 ∣ ∣ y − X ⊤ w ∣ ∣ 2 ∂ w = 0 − X ( y − X ⊤ w ) = 0 \frac{\partial(\sum^N_{n=1}-\frac{(y^{(n)}-w^\top x^{(n)})^2}{2\beta})}{\partial w}=0 \\ \frac{\partial \frac{1}{2}||y-X^\top w||^2}{\partial w} =0 \\ -X(y-X^\top w) = 0 ∂w∂(∑n=1N−2β(y(n)−w⊤x(n))2)=0∂w∂21∣∣y−X⊤w∣∣2=0−X(y−X⊤w)=0

因此有: w M L = ( X X ⊤ ) − 1 X y w^{ML}=(XX^\top)^{-1}Xy wML=(XX⊤)−1Xy

2-6

样本均值

参数 μ \mu μ在样本上的似然函数为: p ( x ∣ μ , σ 2 ) = ∑ n = 1 N ( x ( n ) ; μ , σ 2 ) p(x|\mu,\sigma^2)=\sum^N_{n=1}(x^{(n)};\mu,\sigma^2) p(x∣μ,σ2)=∑n=1N(x(n);μ,σ2)

对数似然函数为: log p ( x ; μ , σ 2 ) = ∑ n = 1 N log p ( x ( n ) ; μ , σ 2 ) = ∑ n = 1 N ( log 1 2 π σ − ( x ( n ) − μ ) 2 2 σ 2 ) \log p(x;\mu,\sigma^2)=\sum^N_{n=1}\log p(x^{(n)};\mu,\sigma^2)=\sum^N_{n=1}(\log \frac{1}{\sqrt{2\pi}\sigma}-\frac{(x^{(n)}-\mu)^2}{2\sigma^2}) logp(x;μ,σ2)=∑n=1Nlogp(x(n);μ,σ2)=∑n=1N(log2πσ1−2σ2(x(n)−μ)2)

我们的目标是找到参数 μ \mu μ的一个估计使得似然函数最大,等价于对数自然函数最大。

令 ∂ log p ( x ; μ , σ 2 ) ∂ μ = 1 σ 2 ∑ n = 1 N ( x ( n ) − μ ) = 0 \frac{\partial \log p(x;\mu,\sigma^2)}{\partial \mu}=\frac{1}{\sigma^2}\sum^N_{n=1}(x^{(n)}-\mu)=0 ∂μ∂logp(x;μ,σ2)=σ21∑n=1N(x(n)−μ)=0,得到: μ M L = 1 N ∑ n = 1 N x ( n ) \mu^{ML}=\frac{1}{N}\sum^N_{n=1}x^{(n)} μML=N1∑n=1Nx(n),即样本均值

MAP证明

参数 μ \mu μ的后验分布: p ( μ ∣ x ; μ 0 , σ 0 2 ) ∝ p ( x ∣ μ ; σ 2 ) p ( μ ; μ 0 , σ 0 2 ) p(\mu|x;\mu_0,\sigma_0^2)\propto p(x|\mu;\sigma^2)p(\mu;\mu_0,\sigma_0^2) p(μ∣x;μ0,σ02)∝p(x∣μ;σ2)p(μ;μ0,σ02)

令似然函数 p ( x ∣ μ ; σ 2 ) p(x|\mu;\sigma^2) p(x∣μ;σ2)为高斯密度函数,后验分布的对数为:

log p ( μ ∣ x ; μ 0 , σ 0 2 ) ∝ log p ( x ∣ μ ; σ 2 ) + log p ( μ ; μ 0 , σ 2 ) ∝ − 1 σ 2 ∑ n = 1 N ( x ( n ) − μ ) 2 − 1 σ 2 ( μ − μ 0 ) 2 \begin{aligned} \log p(\mu|x;\mu_0,\sigma_0^2)&\propto\log p(x|\mu;\sigma^2)+\log p(\mu;\mu_0,\sigma^2) \\ &\propto -\frac{1}{\sigma^2}\sum^N_{n=1}(x^{(n)}-\mu)^2-\frac{1}{\sigma^2}(\mu-\mu_0)^2 \end{aligned} logp(μ∣x;μ0,σ02)∝logp(x∣μ;σ2)+logp(μ;μ0,σ2)∝−σ21n=1∑N(x(n)−μ)2−σ21(μ−μ0)2

令 ∂ log p ( μ ∣ x ; μ 0 , σ 0 2 ) ∂ μ = 0 \frac{\partial \log p(\mu|x;\mu_0,\sigma_0^2)}{\partial \mu}=0 ∂μ∂logp(μ∣x;μ0,σ02)=0,得到: μ M A P = ( 1 σ 2 ∑ n = 1 N x ( n ) + μ 0 σ 0 2 ) / ( N σ 2 + 1 σ 0 2 ) \mu^{MAP}=(\frac{1}{\sigma^2}\sum^N_{n=1}x^{(n)}+\frac{\mu_0}{\sigma_0^2})/(\frac{N}{\sigma^2}+\frac{1}{\sigma_0^2}) μMAP=(σ21∑n=1Nx(n)+σ02μ0)/(σ2N+σ021)

证明完毕

2-7

σ → ∞ \sigma\rightarrow \infty σ→∞, μ M A P = 1 N ∑ n = 1 N x ( n ) \mu^{MAP}=\frac{1}{N}\sum^N_{n=1}x^{(n)} μMAP=N1∑n=1Nx(n)

2-8

因为 f f f可测量,故 σ \sigma σ可测量,又 f f f有界,有: E y [ f 2 ( x ) ∣ x ] = f 2 ( x ) , E y [ y f ( x ) ∣ x ] = f ( x ) ⋅ E y ( y ∣ x ) \mathbb{E}_y[f^2(x)|x]=f^2(x),\ \mathbb{E}_y[yf(x)|x]=f(x)\cdot \mathbb{E}_y(y|x) Ey[f2(x)∣x]=f2(x), Ey[yf(x)∣x]=f(x)⋅Ey(y∣x)

R ( f ) = E x [ E y [ ( y − f ( x ) ) 2 ∣ x ] ] = E x [ E y [ y 2 ∣ x ] + E y [ f 2 ( x ) ∣ x ] − 2 E y [ y f ( x ) ∣ x ] ] R(f)=\mathbb{E}_x[\mathbb{E}_y[(y-f(x))^2|x]]=\mathbb{E}_x[\mathbb{E}_y[y^2|x]+\mathbb{E}_y[f^2(x)|x]-2\mathbb{E}_y[yf(x)|x]] R(f)=Ex[Ey[(y−f(x))2∣x]]=Ex[Ey[y2∣x]+Ey[f2(x)∣x]−2Ey[yf(x)∣x]]

R ( f ) = E x [ E y [ y 2 ∣ x ] + f 2 ( x ) − 2 f ( x ) E y [ y ∣ x ] ] R(f)=\mathbb{E}_x[\mathbb{E}_y[y^2|x]+f^2(x)-2f(x)\mathbb{E}_y[y|x]] R(f)=Ex[Ey[y2∣x]+f2(x)−2f(x)Ey[y∣x]]

由Jensen不等式: E y [ y 2 ∣ x ] ≥ E y [ y ∣ x ] 2 \mathbb{E}_y[y^2|x]\geq \mathbb{E}_y[y|x]^2 Ey[y2∣x]≥Ey[y∣x]2

故: R ( f ) ≥ E x [ E y [ f ( x ) − E y [ y ∣ x ] ] ] 2 R(f)\geq \mathbb{E}_x[\mathbb{E}_y[f(x)-\mathbb{E}_y[y|x]]]^2 R(f)≥Ex[Ey[f(x)−Ey[y∣x]]]2

故: f ∗ ( x ) = E y ∼ p r ( y ∣ x ) [ y ] f^*(x)=\mathbb{E}_{y\sim p_r(y|x)}[y] f∗(x)=Ey∼pr(y∣x)[y]

2-9

高偏差原因:数据特征过少;模型复杂度太低;正则化系数 λ \lambda λ太大;

高方差原因:数据样例过少;模型复杂度过高;正则化系数 λ \lambda λ太小;没有使用交叉验证

2-10

对于单个样本 E D E_D ED, f ∗ ( x ) f^*(x) f∗(x)是常数,因此: E D [ f ∗ ( x ) ] = f ∗ ( x ) E_D[f^*(x)]=f^*(x) ED[f∗(x)]=f∗(x)

E D [ ( f D ( x ) − f ∗ ( x ) ) 2 ] = E D [ ( f D ( x ) − E D [ f D ( x ) ] + E D [ f D ( x ) ] − f ∗ ( x ) ) 2 ] = E D [ ( f D ( x ) − E D [ f D ( x ) ] ) 2 + ( E D [ f D ( x ) ] − f ∗ ( x ) ) 2 + 2 ( f D ( x ) − E D [ f D ( x ) ] ) ( E D [ f D ( x ) ] − f ∗ ( x ) ) ] = E D [ ( f D ( x ) − E D [ f D ( x ) ] ) 2 ] + E D [ E D [ f D ( x ) ] 2 + ( f ∗ ( x ) ) 2 − 2 E D [ f D ( x ) ] f ∗ ( x ) ] + 2 E D [ ( f D ( x ) − E D [ f D ( x ) ] ) ( E D [ f D ( x ) ] − f ∗ ( x ) ) ] = E D [ ( f D ( x ) − E D [ f D ( x ) ] ) 2 ] + E D [ f D ( x ) ] 2 + ( f ∗ ( x ) ) 2 − 2 E D [ f D ( x ) + 2 E D [ ( f D ( x ) − E D [ f D ( x ) ] ) ( E D [ f D ( x ) ] − f ∗ ( x ) ) ] = E D [ ( f D ( x ) − E D [ f D ( x ) ] ) 2 ] + ( E D [ f D ( x ) ] − f ∗ ( x ) ) 2 + 2 E D [ ( f D ( x ) − E D [ f D ( x ) ] ) ( E D [ f D ( x ) ] − f ∗ ( x ) ) ] = E D [ ( f D ( x ) − E D [ f D ( x ) ] ) 2 ] + ( E D [ f D ( x ) ] − f ∗ ( x ) ) 2 + 2 ( E D [ f D ( x ) ] ) 2 − 2 E D [ f D ( x ) f ∗ ( x ) ] − 2 ( E D [ f D ( x ) ] ) 2 + 2 E D [ f D ( x ) f ∗ ( x ) ] = E D [ ( f D ( x ) − E D [ f D ( x ) ] ) 2 ] + ( E D [ f D ( x ) ] − f ∗ ( x ) ) 2 \begin{aligned} E_D[(f_D(x)-f^*(x))^2] &= E_D[(f_D(x)-E_D[f_D(x)]+E_D[f_D(x)]-f*(x))^2] \\ &=E_D[(f_D(x)-E_D[f_D(x)])^2+(E_D[f_D(x)]-f^*(x))^2+2(f_D(x)-E_D[f_D(x)])(E_D[f_D(x)]-f^*(x))] \\ &=E_D[(f_D(x)-E_D[f_D(x)])^2]+E_D[E_D[f_D(x)]^2+(f^*(x))^2-2E_D[f_D(x)]f^*(x)]+2E_D[(f_D(x)-E_D[f_D(x)])(E_D[f_D(x)]-f^*(x))] \\ &=E_D[(f_D(x)-E_D[f_D(x)])^2]+E_D[f_D(x)]^2+(f^*(x))^2-2E_D[f_D(x)+2E_D[(f_D(x)-E_D[f_D(x)])(E_D[f_D(x)]-f^*(x))] \\ &=E_D[(f_D(x)-E_D[f_D(x)])^2]+(E_D[f_D(x)]-f^*(x))^2+2E_D[(f_D(x)-E_D[f_D(x)])(E_D[f_D(x)]-f^*(x))] \\ &=E_D[(f_D(x)-E_D[f_D(x)])^2]+(E_D[f_D(x)]-f^*(x))^2+2(E_D[f_D(x)])^2-2E_D[f_D(x)f^*(x)]-2(E_D[f_D(x)])^2+2E_D[f_D(x)f^*(x)] \\ &=E_D[(f_D(x)-E_D[f_D(x)])^2]+(E_D[f_D(x)]-f^*(x))^2 \end{aligned} ED[(fD(x)−f∗(x))2]=ED[(fD(x)−ED[fD(x)]+ED[fD(x)]−f∗(x))2]=ED[(fD(x)−ED[fD(x)])2+(ED[fD(x)]−f∗(x))2+2(fD(x)−ED[fD(x)])(ED[fD(x)]−f∗(x))]=ED[(fD(x)−ED[fD(x)])2]+ED[ED[fD(x)]2+(f∗(x))2−2ED[fD(x)]f∗(x)]+2ED[(fD(x)−ED[fD(x)])(ED[fD(x)]−f∗(x))]=ED[(fD(x)−ED[fD(x)])2]+ED[fD(x)]2+(f∗(x))2−2ED[fD(x)+2ED[(fD(x)−ED[fD(x)])(ED[fD(x)]−f∗(x))]=ED[(fD(x)−ED[fD(x)])2]+(ED[fD(x)]−f∗(x))2+2ED[(fD(x)−ED[fD(x)])(ED[fD(x)]−f∗(x))]=ED[(fD(x)−ED[fD(x)])2]+(ED[fD(x)]−f∗(x))2+2(ED[fD(x)])2−2ED[fD(x)f∗(x)]−2(ED[fD(x)])2+2ED[fD(x)f∗(x)]=ED[(fD(x)−ED[fD(x)])2]+(ED[fD(x)]−f∗(x))2

2-11

2-12

用笔算一下就OK,9

第三章

3-1

设任意 α \alpha α为超平面上的向量,取两点 α 1 , α 2 ∈ a \alpha_1,\alpha_2 \in a α1,α2∈a,则满足:

{ ω ⊤ α 1 + b = 0 ω ⊤ α 2 + b = 0 \begin{cases} \omega^\top\alpha_1+b=0 \\\\ \omega^\top\alpha_2+b=0 \\\\ \end{cases} ⎩⎪⎪⎪⎨⎪⎪⎪⎧ω⊤α1+b=0ω⊤α2+b=0

两式相减,得到: ω T ( α 1 − α 2 ) = 0 \omega^T(\alpha_1-\alpha_2)=0 ωT(α1−α2)=0,由 α 1 − α 2 \alpha_1-\alpha_2 α1−α2平行于 a a a,故 ω ⊥ α \omega \perp \alpha ω⊥α,即 ω \omega ω垂直于决策边界。

3-2

设 x x x投影到平面 f ( x , ω ) = ω ⊤ x + b = 0 f(x,\omega)=\omega^\top x+b=0 f(x,ω)=ω⊤x+b=0的点为 x ′ x' x′,则:可知 x − x ′ x-x' x−x′垂直于 f ( x , ω ) f(x,\omega) f(x,ω),由3-1有 x − x ′ x-x' x−x′平行于 ω \omega ω

于是有: δ = ∣ ∣ x − x ′ ∣ ∣ = k ∣ ∣ ω ∣ ∣ \delta=||x-x'||=k||\omega|| δ=∣∣x−x′∣∣=k∣∣ω∣∣,又:

{ f ( x , ω ) = ω ⊤ x + b ω ⊤ x 2 + b = 0 \begin{cases} f(x,\omega)=\omega^\top x+b \\\\ \omega^\top x_2+b=0 \end{cases} ⎩⎪⎨⎪⎧f(x,ω)=ω⊤x+bω⊤x2+b=0

故有: w T ( x − x ′ ) = f ( x , ω ) w^T(x-x')=f(x,\omega) wT(x−x′)=f(x,ω),带入 x − x ′ = k ω x-x'=k\omega x−x′=kω有: k ∣ ∣ ω ∣ ∣ 2 = f ( x , ω ) k||\omega||^2=f(x,\omega) k∣∣ω∣∣2=f(x,ω)

故: δ = ∣ f ( x , ω ) ∣ ∣ ∣ ω ∣ ∣ \delta=\frac{|f(x,\omega)|}{||\omega||} δ=∣∣ω∣∣∣f(x,ω)∣

3-3

由多线性可分定义

可知: ω c x 1 > ω c ~ x 1 \omega_cx_1>\omega_{\tilde{c}}x_1 ωcx1>ωc~x1, ω c x 2 > ω c ~ x 2 \omega_cx_2>\omega_{\tilde{c}}x_2 ωcx2>ωc~x2,又 ρ ∈ [ 0 , 1 ] \rho \in[0,1] ρ∈[0,1],故: ρ > 0 , 1 − ρ > 0 \rho>0,1-\rho>0 ρ>0,1−ρ>0

线性组合即有: ρ ω c x 1 + ( 1 − ρ ) ω c x 2 > ρ ω a ~ x 1 + ( 1 − ρ ) ω c ~ x 2 \rho\omega_cx_1+(1-\rho)\omega_cx_2>\rho\omega_{\tilde{a}}x_1+(1-\rho)\omega_{\tilde{c}}x_2 ρωcx1+(1−ρ)ωcx2>ρωa~x1+(1−ρ)ωc~x2

3-4

对于每个类别 c c c,他们的分类函数为 f c ( x ; ω c ) = ω c T x + b c , c ∈ { 1 , ⋯ , C } f_c(x;\omega_c)=\omega^T_cx+b_c,c\in \{1,\cdots,C\} fc(x;ωc)=ωcTx+bc,c∈{1,⋯,C}

因为每个类都与除它本身以外的类线性可分,所以: ω c ⊤ x ( n ) > ω c ~ ⊤ x ( n ) \omega_c^\top x^ {(n)}>\omega_{\tilde{c}}^\top x^{(n)} ωc⊤x(n)>ωc~⊤x(n)

因此有: ∑ n = 1 N ( ω c ⊤ x ( n ) − ω c ~ ⊤ x ( n ) ) > 0 \sum^N_{n=1}(\omega^\top_cx^{(n)}-\omega^\top_{\tilde{c}}x^{(n)})>0 ∑n=1N(ωc⊤x(n)−ωc~⊤x(n))>0,即: X T ω c − X T ω c ~ > 0 X^T\omega_c-X^T\omega_{\tilde{c}}>0 XTωc−XTωc~>0,故整个数据集线性可分。

3-5

从理论上来说,平方损失函数也可以用于分类问题,但不适合。首先,最小化平方损失函数本质上等同于在误差服从高斯分布的假设下的极大似然估计,然而大部分分类问题的误差并不服从高斯分布。而且在实际应用中,交叉熵在和Softmax激活函数的配合下,能够使得损失值越大导数越大,损失值越小导数越小,这就能加快学习速率。然而若使用平方损失函数,则损失越大导数反而越小,学习速率很慢。

Logistic回归的平方损失函数是非凸的: L = 1 2 ∑ t ( y ^ − y ) 2 L=\frac{1}{2}\sum_t(\hat{y}-y)^2 L=21∑t(y^−y)2, y ^ = σ ( ω T x ) \hat{y}=\sigma(\omega^Tx) y^=σ(ωTx)

∂ L ∂ ω = ∑ t ( y ^ − y i ) ∂ y ^ ∂ ω = ∑ i ( y ^ − y i ) y ^ ( 1 − y ^ ) x i = ∑ i ( − y ^ 3 + ( y i + 1 ) y ^ 2 − y i y ^ ) x i \frac{\partial L}{\partial \omega} =\sum_t(\hat{y}-y_i)\frac{\partial \hat{y}}{\partial \omega}=\sum_i(\hat{y}-y_i)\hat{y}(1-\hat{y})x_i=\sum_i(-\hat{y}^3+(y_i+1)\hat{y}^2-y_i\hat{y})x_i ∂ω∂L=∑t(y^−yi)∂ω∂y^=∑i(y^−yi)y^(1−y^)xi=∑i(−y^3+(yi+1)y^2−yiy^)xi

进一步地: ∂ 2 L ∂ ω 2 = ∑ ( − 3 y ^ 2 + 2 ( y i + 1 ) y ^ − y i ) ∂ y ^ ∂ ω x i = ∑ [ − 3 y ^ 2 + 2 ( y i + 1 ) y ^ − y i ] y ^ ( 1 − y ^ ) x i 2 \frac{\partial^2 L}{\partial \omega^2}=\sum(-3\hat{y}^2+2(y_i+1)\hat{y}-y_i)\frac{\partial \hat{y}}{\partial \omega}x_i=\sum[-3\hat{y}^2+2(y_i+1)\hat{y}-y_i]\hat{y}(1-\hat{y})x_i^2 ∂ω2∂2L=∑(−3y^2+2(yi+1)y^−yi)∂ω∂y^xi=∑[−3y^2+2(yi+1)y^−yi]y^(1−y^)xi2

又 y ^ ∈ [ 0 , 1 ] , y ∈ 0 , 1 \hat{y}\in[0,1],y\in{0,1} y^∈[0,1],y∈0,1,二阶导数不一定大于零。

3-6

不加入正则化项限制权重向量的大小,可能造成权重向量过大,产生上溢。

3-7

原式中: ω ‾ = 1 T ∑ k = 1 K c k ω k \overline{\omega}=\frac{1}{T}\sum^K_{k=1}c_k\omega_k ω=T1∑k=1Kckωk,又 c k = t k + 1 − t k c_k=t_{k+1}-t_k ck=tk+1−tk

即: ω ‾ = 1 T ∑ k = 1 K ( t k + 1 − t k ) ω k \overline{\omega}=\frac{1}{T}\sum^K_{k=1}(t_{k+1}-t_k)\omega_k ω=T1∑k=1K(tk+1−tk)ωk,即只需要证明算法和该式等价。

根据算法第8、9行有: ω k = y ( n ) x ( n ) \omega_k=y^{(n)}x^{(n)} ωk=y(n)x(n),故原算法中: ω ‾ = ω T − 1 T u = ∑ k = 1 K ω k − 1 T u \overline{\omega}=\omega_T-\frac{1}{T}u=\sum^K_{k=1}\omega_k-\frac{1}{T}u ω=ωT−T1u=∑k=1Kωk−T1u

又有: u = ∑ k = 1 K t k ω k u=\sum^K_{k=1}t_{k}\omega_k u=∑k=1Ktkωk

故算法3.2能得到: ω ‾ = ∑ k = 1 K ( 1 − 1 T t k ω k ) \overline{\omega}=\sum^K_{k=1}(1-\frac{1}{T}t_k\omega_k) ω=∑k=1K(1−T1tkωk),由算法第12行可知: t k + 1 = T t_{k+1}=T tk+1=T并带入可得到:

ω ‾ = 1 T ∑ k = 1 K ( T − t k ) ω k = 1 T ∑ k = 1 K ( t k + 1 − t k ) ω k \overline{\omega}=\frac{1}{T}\sum^K_{k=1}(T-t_k)\omega_k=\frac{1}{T}\sum^K_{k=1}(t_{k+1}-t_k)\omega_k ω=T1∑k=1K(T−tk)ωk=T1∑k=1K(tk+1−tk)ωk,证毕。

3-8

ω k = ω k − 1 + ϕ ( x ( k ) , y ( k ) ) − ϕ ( x ( k ) , z ) \omega_k=\omega_{k-1}+\phi(x^{(k)},y^{(k)})-\phi(x^{(k)},z) ωk=ωk−1+ϕ(x(k),y(k))−ϕ(x(k),z)

因此可知: ∣ ∣ ω K ∣ ∣ 2 = ∣ ∣ ω K − 1 + ϕ ( x ( k ) , y ( k ) ) − ϕ ( x ( k ) , z ) ∣ ∣ 2 ||\omega_K||^2=||\omega_{K-1}+\phi(x^{(k)},y^{(k)})-\phi(x^{(k)},z)||^2 ∣∣ωK∣∣2=∣∣ωK−1+ϕ(x(k),y(k))−ϕ(x(k),z)∣∣2

即 ∣ ∣ ω K ∣ ∣ 2 = ∣ ∣ ω K − 1 ∣ ∣ 2 + ∣ ∣ ϕ ( x ( k ) , y ( k ) ) − ϕ ( x ( k ) , z ) ∣ ∣ 2 + 2 ω K − 1 ⋅ ( ϕ ( x ( n ) , y ( n ) ) − ϕ ( x ( n ) , z ) ) ||\omega_K||^2=||\omega_{K-1}||^2+||\phi(x^{(k)},y^{(k)})-\phi(x^{(k)},z)||^2+2\omega_{K-1}\cdot(\phi(x^{(n)},y^{(n)})-\phi(x^{(n)},z)) ∣∣ωK∣∣2=∣∣ωK−1∣∣2+∣∣ϕ(x(k),y(k))−ϕ(x(k),z)∣∣2+2ωK−1⋅(ϕ(x(n),y(n))−ϕ(x(n),z))

因为 z z z为 ω K − 1 \omega_{K-1} ωK−1的最倾向的候选项,因此 2 ω K − 1 ⋅ ( ϕ ( x ( n ) , y ( n ) ) − ϕ ( x ( n ) , z ) ) 2\omega_{K-1}\cdot(\phi(x^{(n)},y^{(n)})-\phi(x^{(n)},z)) 2ωK−1⋅(ϕ(x(n),y(n))−ϕ(x(n),z))将小于0。

故: ∣ ∣ ω K ∣ ∣ 2 ≤ ∣ ∣ ω K − 1 ∣ ∣ 2 + R 2 ||\omega_K||^2\leq||\omega_{K-1}||^2+R^2 ∣∣ωK∣∣2≤∣∣ωK−1∣∣2+R2

迭代到最后有: ∣ ∣ ω K ∣ ∣ 2 ≤ K R 2 ||\omega_K||^2\leq KR^2 ∣∣ωK∣∣2≤KR2,找到了上界。

再寻找下界: ∣ ∣ ω K ∣ ∣ 2 = ∣ ∣ ω ∗ ∣ ∣ 2 ⋅ ∣ ∣ ω K ∣ ∣ 2 ≥ ∣ ∣ ω ∗ ⊤ ω K ∣ ∣ 2 ||\omega_K||^2=||\omega^*||^2\cdot||\omega_K||^2\geq||\omega^{*\top}\omega_K||^2 ∣∣ωK∣∣2=∣∣ω∗∣∣2⋅∣∣ωK∣∣2≥∣∣ω∗⊤ωK∣∣2

带入 ω K \omega_K ωK有: ∣ ∣ ω K ∣ ∣ 2 ≥ ∣ ∣ ω ∗ ⊤ ∑ k = 1 K ( ϕ ( x ( n ) , y ( n ) ) − ϕ ( x ( n ) . z ) ) ∣ ∣ ||\omega_K||^2\geq ||\omega^{*\top}\sum^K_{k=1}(\phi(x^{(n)},y^{(n)})-\phi(x^{(n)}.z))|| ∣∣ωK∣∣2≥∣∣ω∗⊤∑k=1K(ϕ(x(n),y(n))−ϕ(x(n).z))∣∣

即: ∣ ∣ ω K ∣ ∣ 2 ≥ [ ∑ k = 1 K ⟨ ω ∗ , ( ϕ ( x ( n ) , y ( n ) ) − ϕ ( x ( n ) . z ) ) ⟩ ] 2 ||\omega_K||^2\geq [\sum^K_{k=1}\langle\omega^*,(\phi(x^{(n)},y^{(n)})-\phi(x^{(n)}.z))\rangle]^2 ∣∣ωK∣∣2≥[∑k=1K⟨ω∗,(ϕ(x(n),y(n))−ϕ(x(n).z))⟩]2

根据广义线性可分有: ⟨ ω ∗ , ϕ ( x ( k ) , y ( k ) ) ⟩ − ⟨ ω ∗ , ϕ ( x ( k ) , z ) ⟩ ≥ γ \langle\omega^*,\phi(x^{(k)},y^{(k)})\rangle-\langle\omega^*,\phi(x^{(k)},z)\rangle\geq\gamma ⟨ω∗,ϕ(x(k),y(k))⟩−⟨ω∗,ϕ(x(k),z)⟩≥γ

因此: ∣ ∣ ω K ∣ ∣ 2 ≥ K 2 γ 2 ||\omega_K||^2\geq K^2\gamma^2 ∣∣ωK∣∣2≥K2γ2

因此结合起来就得到了: K 2 γ 2 ≤ K R 2 K^2\gamma^2\leq KR^2 K2γ2≤KR2,即 K ≤ R 2 γ 2 K\leq\frac{R^2}{\gamma^2} K≤γ2R2,证毕

3-9

存在性证明:

因为数据集线性可分,因此该最优化问题存在可行解,又根据线性可分的定义可知目标函数一定有下界,所以最优化问题一定有解,记作: ( ω ∗ , b ∗ ) (\omega^*,b^*) (ω∗,b∗)

因为 y ∈ { 1 , − 1 } y\in \{1,-1\} y∈{1,−1},因此 ( ω ∗ , b ∗ ) ≠ ( 0 , b ∗ ) (\omega^*,b^*)\not=(0,b^*) (ω∗,b∗)=(0,b∗),即 ω ∗ ≠ O \omega^*\not=\mathbb{O} ω∗=O,故分离的超平面一定存在。

唯一性证明(反证法):

假定存在两个最优的超平面分别为 ω 1 ∗ x + b = 0 \omega_1^*x+b=0 ω1∗x+b=0和 ω 2 ∗ x + b = 0 \omega_2^*x+b=0 ω2∗x+b=0

因为为最优,故有: ∣ ∣ ω 1 ∗ ∣ ∣ = ∣ ∣ ω 2 ∗ ∣ ∣ = C ||\omega_1^*||=||\omega_2^*||=C ∣∣ω1∗∣∣=∣∣ω2∗∣∣=C,其中C为一个常数。

于是令: ω = ω 1 ∗ + ω 2 ∗ 2 \omega=\frac{\omega_1^*+\omega_2^*}{2} ω=2ω1∗+ω2∗, b = b 1 ∗ + b 2 ∗ 2 b=\frac{b_1^*+b_2^*}{2} b=2b1∗+b2∗,可知该解也为可行解。

于是有: C ≤ ∣ ∣ ω ∣ ∣ C\leq||\omega|| C≤∣∣ω∣∣,又根据范数的三角不等式性质: ∥ ∣ ω ∣ ∣ ≤ ∣ ∣ ω 1 ∗ ∣ ∣ 2 + ∣ ∣ ω 2 ∗ ∣ ∣ 2 = C \||\omega||\leq\frac{||\omega_1^*||}{2}+\frac{||\omega_2^*||}{2}=C ∥∣ω∣∣≤2∣∣ω1∗∣∣+2∣∣ω2∗∣∣=C

因此: 2 ∣ ∣ ω ∣ ∣ = ∣ ∣ ω 1 ∗ ∣ ∣ + ∣ ∣ ω 2 ∗ ∣ ∣ 2||\omega||=||\omega_1^*||+||\omega^*_2|| 2∣∣ω∣∣=∣∣ω1∗∣∣+∣∣ω2∗∣∣

又根据不等式取等号的条件可以得到: ω 1 ∗ = λ ω 2 ∗ \omega_1^*=\lambda\omega_2^* ω1∗=λω2∗

代入可知: λ = 1 \lambda=1 λ=1 (-1的解舍去,会导致 ω = 0 \omega=0 ω=0)

因此不存在两个超平面最优,故该超平面惟一。

证毕

3-10

ϕ ( x ) = [ 1 , 2 x 1 , 2 x 2 , 2 x 1 x 2 , x 1 2 , x 2 2 ] ⊤ \phi(x)=[1,\sqrt{2}x_1,\sqrt{2}x_2,\sqrt{2}x_1x_2,x_1^2,x_2^2]^{\top} ϕ(x)=[1,2x1,2x2,2x1x2,x12,x22]⊤, ϕ ( z ) = [ 1 , 2 z 1 , 2 z 2 , 2 z 1 z 2 , z 1 2 , z 2 2 ] ⊤ \phi(z)=[1,\sqrt{2}z_1,\sqrt{2}z_2,\sqrt{2}z_1z_2,z_1^2,z_2^2]^{\top} ϕ(z)=[1,2z1,2z2,2z1z2,z12,z22]⊤

故: ϕ ( x ) ⊤ ϕ ( z ) = 1 + 2 x 1 z 1 + 2 x 2 z 2 + 2 x 1 x 2 z 1 z 2 + x 1 2 z 1 2 + x 2 2 z 2 2 = ( 1 + ( x 1 x 2 ) ( z 1 z 2 ) ⊤ ) 2 \phi(x)^\top\phi(z)=1+2x_1z_1+2x_2z_2+2x_1x_2z_1z_2+x_1^2z_1^2+x_2^2z_2^2=(1+(x_1 \ x_2)(z_1 \ z_2)^\top)^2 ϕ(x)⊤ϕ(z)=1+2x1z1+2x2z2+2x1x2z1z2+x12z12+x22z22=(1+(x1 x2)(z1 z2)⊤)2

即: ϕ ( x ) ⊤ ϕ ( z ) = ( 1 + x ⊤ z ) 2 = k ( x , z ) \phi(x)^\top\phi(z)=(1+x^\top z)^2=k(x,z) ϕ(x)⊤ϕ(z)=(1+x⊤z)2=k(x,z),证毕

3-11

原问题:

m i n 1 2 ∣ ∣ w ∣ ∣ 2 + C ∑ n = 1 N ξ n s . t . 1 − y n ( w ⊤ x n + b ) − ξ n ≤ 0 , ∀ n ∈ { 1 , ⋯ , N } ξ n ≥ 0 , ∀ n ∈ { 1 , ⋯ , N } \begin{array}{c} min\frac{1}{2}||w||^2+C\sum^N_{n=1}\xi_n \\\\ s.t. 1-y_n(w^\top x_n+b)-\xi_n\leq 0,\forall n\in\{1,\cdots,N\} \\\\ \xi_n\geq0,\forall n\in\{1,\cdots,N\} \end{array} min21∣∣w∣∣2+C∑n=1Nξns.t.1−yn(w⊤xn+b)−ξn≤0,∀n∈{1,⋯,N}ξn≥0,∀n∈{1,⋯,N}

使用拉格朗日乘子法,可得:

L ( w , b , ξ , λ , μ ) = 1 2 ∣ ∣ w ∣ ∣ 2 + C ∑ i = 1 N ξ i + ∑ i = 1 N λ i ( 1 − y i ( w ⊤ x i + b ) − ξ i ) − ∑ i = 1 N μ i ξ i L(w,b,\xi,\lambda,\mu)=\frac{1}{2}||w||^2+C\sum^N_{i=1}\xi_i+\sum^N_{i=1}\lambda_i(1-y_i(w^\top x_i+b)-\xi_i)-\sum^N_{i=1}\mu_i\xi_i L(w,b,ξ,λ,μ)=21∣∣w∣∣2+Ci=1∑Nξi+i=1∑Nλi(1−yi(w⊤xi+b)−ξi)−i=1∑Nμiξi

将其转化为最小最大问题:

min w , b , ξ max λ , μ L ( w , b , ξ , λ , μ ) s . t . λ i ≥ 0 , ∀ n ∈ { 1 , ⋯ , N } \begin{array}{c} \min\limits_{w,b,\xi} \ \max\limits_{\lambda,\mu} \ L(w,b,\xi,\lambda,\mu) \\\\ s. t. \lambda_i\geq0,\forall n\in\{1,\cdots,N\} \end{array} w,b,ξmin λ,μmax L(w,b,ξ,λ,μ)s.t.λi≥0,∀n∈{1,⋯,N}

转化为对偶问题:

max λ , μ min w , b , ξ L ( w , b , ξ , λ , μ ) s . t . λ i ≥ 0 , ∀ n ∈ { 1 , ⋯ , N } \begin{array}{c} \max\limits_{\lambda,\mu} \ \min\limits_{w,b,\xi} \ L(w,b,\xi,\lambda,\mu) \\\\ s. t. \lambda_i\geq0,\forall n\in\{1,\cdots,N\} \end{array} λ,μmax w,b,ξmin L(w,b,ξ,λ,μ)s.t.λi≥0,∀n∈{1,⋯,N}

求解 min w , b , ξ L ( w , b , ξ , λ , μ ) \min\limits_{w,b,\xi}L(w,b,\xi,\lambda,\mu) w,b,ξminL(w,b,ξ,λ,μ)如下:

令 ∂ L ∂ b = 0 \frac{\partial L}{\partial b}=0 ∂b∂L=0,得到 ∑ i = 1 N λ i y i = 0 \sum^N\limits_{i=1}\lambda_iy_i=0 i=1∑Nλiyi=0,带入 L L L中,有:

L ( w , b , ξ , λ , μ ) = 1 2 ∣ ∣ w ∣ ∣ 2 + C ∑ i = 1 N ξ i + ∑ i = 1 N λ i − ∑ i = 1 N λ i y i w ⊤ x i − ∑ i = 1 N λ i ξ i − ∑ i = 1 N μ i ξ i L(w,b,\xi,\lambda,\mu)=\frac{1}{2}||w||^2+C\sum^N\limits_{i=1}\xi_i+\sum^N\limits_{i=1}\lambda_i-\sum^N\limits_{i=1}\lambda_iy_iw^\top x_i-\sum^N\limits_{i=1}\lambda_i\xi_i-\sum^N\limits_{i=1}\mu_i\xi_i L(w,b,ξ,λ,μ)=21∣∣w∣∣2+Ci=1∑Nξi+i=1∑Nλi−i=1∑Nλiyiw⊤xi−i=1∑Nλiξi−i=1∑Nμiξi

令 ∂ L ∂ w = 0 \frac{\partial L}{\partial w}=0 ∂w∂L=0,可得: w − ∑ i = 1 N λ i y i x i = 0 w-\sum^N\limits_{i=1}\lambda_iy_ix_i=0 w−i=1∑Nλiyixi=0,因此: w = ∑ i = 1 N λ i y i x i w=\sum^N\limits_{i=1}\lambda_iy_ix_i w=i=1∑Nλiyixi

带入 L L L得到:

L ( w , b , ξ , λ , μ ) = 1 2 ∑ i = 1 N ∑ i = 1 N λ i λ j y i y j x i ⊤ x j + C ∑ i = 1 N ξ i + ∑ i = 1 N λ i − ∑ i = 1 N ∑ j = 1 N λ i λ j y i y j x i ⊤ x j − ∑ i = 1 N λ i ξ i − ∑ i = 1 N μ i ξ i = − 1 2 ∑ i = 1 N ∑ i = 1 N λ i λ j y i y j x i ⊤ x j + ∑ i = 1 N ( C − λ i − μ i ) ξ i + ∑ i = 1 N λ i \begin{aligned} L(w,b,\xi,\lambda,\mu) &=\frac{1}{2}\sum^N\limits_{i=1}\sum^N\limits_{i=1}\lambda_i\lambda_jy_iy_jx^\top_ix_j+C\sum^N\limits_{i=1}\xi_i+\sum^N\limits_{i=1}\lambda_i-\sum^N\limits_{i=1}\sum^N\limits_{j=1}\lambda_i\lambda_jy_iy_jx_i^\top x_j-\sum^N_{i=1}\lambda_i\xi_i-\sum^N_{i=1}\mu_i\xi_i \\\\ &=-\frac{1}{2}\sum^N\limits_{i=1}\sum^N\limits_{i=1}\lambda_i\lambda_jy_iy_jx^\top_ix_j+\sum^N_{i=1}(C-\lambda_i-\mu_i)\xi_i+\sum^N_{i=1}\lambda_i \end{aligned} L(w,b,ξ,λ,μ)=21i=1∑Ni=1∑Nλiλjyiyjxi⊤xj+Ci=1∑Nξi+i=1∑Nλi−i=1∑Nj=1∑Nλiλjyiyjxi⊤xj−i=1∑Nλiξi−i=1∑Nμiξi=−21i=1∑Ni=1∑Nλiλjyiyjxi⊤xj+i=1∑N(C−λi−μi)ξi+i=1∑Nλi

令 ∂ L ∂ ξ i = 0 \frac{\partial L}{\partial \xi_i}=0 ∂ξi∂L=0,可得 C − λ i − μ i = 0 C-\lambda_i-\mu_i=0 C−λi−μi=0

带入 L L L再次有: L ( w , b , ξ , λ , μ ) = − 1 2 ∑ i = 1 N ∑ j = 1 N λ i λ j y i y j x i ⊤ x j + ∑ i = 1 N λ i L(w,b,\xi,\lambda,\mu)=-\frac{1}{2}\sum^N\limits_{i=1}\sum^N\limits_{j=1}\lambda_i\lambda_jy_iy_jx^\top_ix_j+\sum^N\limits_{i=1}\lambda_i L(w,b,ξ,λ,μ)=−21i=1∑Nj=1∑Nλiλjyiyjxi⊤xj+i=1∑Nλi

因此对偶问题可以为:

max λ − 1 2 ∑ i = 1 N ∑ j = 1 N λ i λ j y i y j x i ⊤ x j + ∑ i = 1 N λ i s . t . ∑ i = 1 N λ i y i = 0 , ∀ i ∈ { 1 , ⋯ , N } C − λ i − μ i = 0 , ∀ i ∈ { 1 , ⋯ , N } λ i ≥ 0 , ∀ i ∈ { 1 , ⋯ , N } μ i ≥ 0 , ∀ i ∈ { 1 , ⋯ , N } \begin{array}{c} \max\limits_{\lambda}-\frac{1}{2}\sum^N\limits_{i=1}\sum^N\limits_{j=1}\lambda_i\lambda_jy_iy_jx^\top_ix_j+\sum^N\limits_{i=1}\lambda_i \\ s. t. \sum^N\limits_{i=1}\lambda_iy_i=0,\forall i\in\{1,\cdots,N\} \\ C-\lambda_i-\mu_i=0,\forall i\in\{1,\cdots,N\} \\ \lambda_i\geq 0,\forall i \in\{1,\cdots,N\} \\ \mu_i\geq 0,\forall i \in\{1,\cdots,N\} \end{array} λmax−21i=1∑Nj=1∑Nλiλjyiyjxi⊤xj+i=1∑Nλis.t.i=1∑Nλiyi=0,∀i∈{1,⋯,N}C−λi−μi=0,∀i∈{1,⋯,N}λi≥0,∀i∈{1,⋯,N}μi≥0,∀i∈{1,⋯,N}

化简得到:

max λ − 1 2 ∑ i = 1 N ∑ j = 1 N λ i λ j y i y j x i ⊤ x j + ∑ i = 1 N λ i s . t . ∑ i = 1 N λ i y i = 0 , ∀ i ∈ { 1 , ⋯ , N } 0 ≤ λ i ≤ C , ∀ i ∈ { 1 , ⋯ , N } \begin{array}{c} \max\limits_{\lambda}-\frac{1}{2}\sum^N\limits_{i=1}\sum^N\limits_{j=1}\lambda_i\lambda_jy_iy_jx^\top_ix_j+\sum^N\limits_{i=1}\lambda_i \\ s. t. \sum^N\limits_{i=1}\lambda_iy_i=0,\forall i\in\{1,\cdots,N\} \\ 0\leq \lambda_i \leq C,\forall i\in\{1,\cdots,N\} \end{array} λmax−21i=1∑Nj=1∑Nλiλjyiyjxi⊤xj+i=1∑Nλis.t.i=1∑Nλiyi=0,∀i∈{1,⋯,N}0≤λi≤C,∀i∈{1,⋯,N}

因此其KKT条件如下:

{ ∇ w L = w − ∑ i = 1 N λ i y i x i = 0 ∇ b L = − ∑ i = 1 N λ i y i x i = 0 ∇ ξ L = C − λ − μ = 0 λ i ( 1 − y n ( w ⊤ x n + b ) − ξ i ) = 0 1 − y n ( w ⊤ x n + b ) − ξ n ≤ 0 ξ i ≥ 0 λ i ≥ 0 μ i ≥ 0 \begin{cases} \nabla_w L=w-\sum^N\limits_{i=1}\lambda_iy_ix_i=0 \\ \nabla_b L=-\sum^N\limits_{i=1}\lambda_iy_ix_i=0 \\ \nabla_{\xi}L=C-\lambda-\mu=0 \\ \lambda_i(1-y_n(w^\top x_n+b)-\xi_i)=0 \\ 1-y_n(w^\top x_n+b)-\xi_n\leq 0 \\ \xi_i\geq 0 \\ \lambda_i\geq 0 \\ \mu_i \geq 0 \end{cases} ⎩⎪⎪⎪⎪⎪⎪⎪⎪⎪⎪⎪⎪⎪⎪⎪⎪⎨⎪⎪⎪⎪⎪⎪⎪⎪⎪⎪⎪⎪⎪⎪⎪⎪⎧∇wL=w−i=1∑Nλiyixi=0∇bL=−i=1∑Nλiyixi=0∇ξL=C−λ−μ=0λi(1−yn(w⊤xn+b)−ξi)=01−yn(w⊤xn+b)−ξn≤0ξi≥0λi≥0μi≥0

第四章

4-1

零均值化的输入,使得神经元在0附近,sigmoid函数在零点处的导数最大,所有收敛速度最快。非零中心化的输入将导致 ω \omega ω的梯度全大于0或全小于0,使权重更新发生抖动,影响梯度下降的速度。形象一点而言,就是零中心化的输入就如同走较为直的路,而非零时七拐八拐才到终点。

4-2

题目要求有两个隐藏神经元和一个输出神经元,那么网络应该有 W ( 1 ) W^{(1)} W(1)和 w ( 2 ) w^{(2)} w(2)两个权重,取:

W ( 1 ) = [ 1 1 1 1 ] , b ( 1 ) = [ 0 1 ] w ( 2 ) = [ 1 − 2 ] , b ( 2 ) = 0 W^{(1)}=\left[\begin{array}{c}1 & 1\\ 1& 1\end{array}\right],b^{(1)}=\left[\begin{array}{c}0 \\ 1\end{array}\right] \\ w^{(2)}=\left[\begin{array}{c}1 \\ -2\end{array}\right],b^{(2)}=0 W(1)=[1111],b(1)=[01]w(2)=[1−2],b(2)=0

带入得到:

X = [ 0 0 1 1 0 1 0 1 ] X=\left[\begin{array}{c}0 & 0 & 1 & 1 \\ 0 & 1 & 0 & 1\end{array}\right] X=[00011011]

神经元的输入与输出:

| x 1 x_1 x1 | x 2 x_2 x2 | y y y |

|---|---|---|

| 0 | 0 | 0 |

| 1 | 0 | 1 |

| 0 | 1 | 1 |

| 1 | 1 | 0 |

实验代码(需要tensorflow2.3):

import numpy as np

import tensorflow.compat.v1 as tf

tf.disable_v2_behavior()

#input and output

X=np.array([[0,0],[0,1],[1,0],[1,1]])

Y=np.array([[0],[1],[1],[0]])

x=tf.placeholder(dtype=tf.float32,shape=[None,2])

y=tf.placeholder(dtype=tf.float32,shape=[None,1])

#weight

w1=tf.Variable(tf.random_normal([2,2]))

w2=tf.Variable(tf.random_normal([2,1]))

#bias

b1=tf.Variable([0.1,0.1])

b2=tf.Variable(0.1)

#relu activation function

h=tf.nn.relu(tf.matmul(x,w1)+b1)

output=tf.matmul(h,w2)+b2

#loss and Adam optimizer

loss=tf.reduce_mean(tf.square(output-y))

train=tf.train.AdamOptimizer(0.05).minimize(loss)

with tf.Session() as session:

session.run(tf.global_variables_initializer())

for i in range(2000):

session.run(train,feed_dict={x:X,y:Y})

loss_=session.run(loss,feed_dict={x:X,y:Y})

if i%50 == 0:

print("step:%d,loss:%.3f"%(i,loss_))

print("X:%r"%X)

print("Pred:%r"%session.run(output,feed_dict={x:X}))

4-3

二分类的例子

二分类交叉熵损失函数为: L ( y , y ^ ) = − ( y log y ^ + ( 1 − y ) log ( 1 − y ^ ) ) L(y,\hat{y})=-(y\log\hat{y}+(1-y)\log(1-\hat{y})) L(y,y^)=−(ylogy^+(1−y)log(1−y^))

不同取值损失函数如表所示:

| y y y | y ^ \hat{y} y^ | L ( y , y ^ ) L(y,\hat{y}) L(y,y^) |

|---|---|---|

| 0 | 0 | 0 |

| 1 | 0 | + ∞ +\infty +∞ |

| 0 | 1 | − ∞ -\infty −∞ |

| 1 | 1 | 0 |

如果我们要损失函数尽可能小的时候, y y y为1的时候 y ^ \hat{y} y^尽可能要大, y y y为0的时候 y ^ \hat{y} y^尽可能要小。而在后一种情况下需要 ω \omega ω尽可能小,因此如果更新过多,会导致样本的所有输出全部为负数,因而梯度会为0,造成权重无法更新,因而成死亡结点。

解决方法

使用Leaky ReLU、PReLU、ELU或者SoftPlus函数当作激活函数。

ReLU死亡问题数学推导

向前传播公式: { z = ω ⋅ x a = R e L U ( z ) \begin{cases}z=\omega\cdot x\\ a=ReLU(z)\end{cases} {z=ω⋅xa=ReLU(z)

损失函数为 L L L,反向传播公式为: { ∂ L ∂ z = ∂ L ∂ a ⋅ ∂ a ∂ z ∂ L ∂ W = ∂ L ∂ z ⋅ x ⊤ ∂ L ∂ x = ω ⊤ ⋅ ∂ L ∂ z \begin{cases}\frac{\partial L}{\partial z}=\frac{\partial L}{\partial a}\cdot\frac{\partial a}{\partial z}\\\\ \frac{\partial L}{\partial W}=\frac{\partial L}{\partial z}\cdot x^\top\\\\ \frac{\partial L}{\partial x}=\omega^\top \cdot \frac{\partial L}{\partial z}\end{cases} ⎩⎪⎪⎪⎪⎪⎪⎨⎪⎪⎪⎪⎪⎪⎧∂z∂L=∂a∂L⋅∂z∂a∂W∂L=