DStream实例——实现网站热词排序

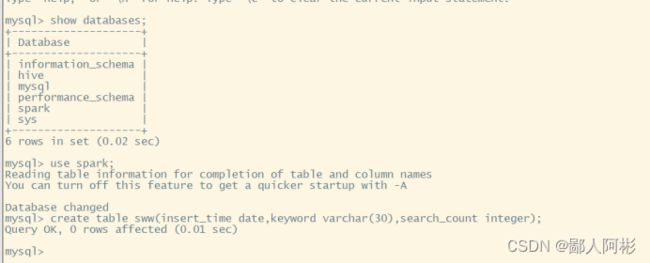

1、进入数据库:mysql -uroot -pPassword123$

创建数据库名字为spark:create database spark

创建数据表sww :create table sww(insert_time date,keyword varchar(30),search_count integer)

2、在pom.xml文件添加mysql数据库的依赖,添加的内容如下:

mysql

mysql-connector-java

5.1.38

3、在spark03项目的/src/main/scala/itcast目录下创建一个名为HotWordBySort的scala类,编写以下内容:

import java.sql.{DriverManager, Statement}

import org.apache.spark.streaming.dstream.{DStream, ReceiverInputDStream}

import org.apache.spark.streaming.{Seconds, StreamingContext}

import org.apache.spark.{SparkConf, SparkContext}

//import org.scalatest.time.Second

object HotWordBySort {

def main(args: Array[String]): Unit = {

//创建SparkConf对象

val sparkConf: SparkConf = new SparkConf()

.setAppName("HotWordBySort").setMaster("local[2]")

//创建SparkContext对象

val sc : SparkContext = new SparkContext(sparkConf)

//设置日志打印级别

sc.setLogLevel("WARN")

//创建StreamingContetxt,需要两个参数

val ssc:StreamingContext = new StreamingContext(sc,Seconds(5))

//连接socket服务

val dstream:ReceiverInputDStream[String] = ssc.socketTextStream("192.168.196.101",9999)

//逗号分隔

val itemPairs:DStream[(String,Int)] = dstream.map(line => (line.split(",")(0),1))

//调用操作

val itemCount:DStream[(String,Int)] = itemPairs.reduceByKeyAndWindow((v1:Int,v2:Int)=>v1+v2,Seconds(60),Seconds(10))

//Dstream没有sortByKey操作

val hotWord = itemCount.transform(itemRDD=>{

val top3:Array[(String,Int)]=itemRDD.map(pair =>(pair._2,pair._1)).sortByKey(false).map(pair=>(pair._2,pair._1)).take(3)

//本地集合转成RDD

ssc.sparkContext.makeRDD(top3)

})

//调用foreachRDD操作

hotWord.foreachRDD(rdd=>{

val url = "jdbc:mysql://192.168.196.101:3306/spark"

val user = "root"

val password = "Password123$"

Class.forName("com.mysql.jdbc.Driver")

val conn1 = DriverManager.getConnection(url,user,password)

conn1.prepareStatement("delete from searchKeyWord where 1=1").executeUpdate()

conn1.close()

rdd.foreachPartition(partitionOfRecords=>{

val url = "jdbc:mysql://192.168.196.101:3306/spark"

val user = "root"

val password = "Password123$"

Class.forName("com.mysql.jdbc.Driver")

val conn2 = DriverManager.getConnection(url,user,password)

conn2.setAutoCommit(false)

val stat:Statement = conn2.createStatement()

partitionOfRecords.foreach(record=>{

stat.addBatch("insert into searchKeyWord(insert_time,keyword,search_count) values (now(),'"+record._1+"','"+record._2+"')")

})

stat.executeBatch()

conn2.commit()

conn2.close()

})

})

ssc.start()

ssc.awaitTermination()

ssc.stop()

}

}4、运行HotWordBySort代码,并在master 9999端口输入以下数据:

nc -lk 9999

Hadoop,111

Spark,222

Hadoop,222

Hadoop,222

Hive,333

Hive,222

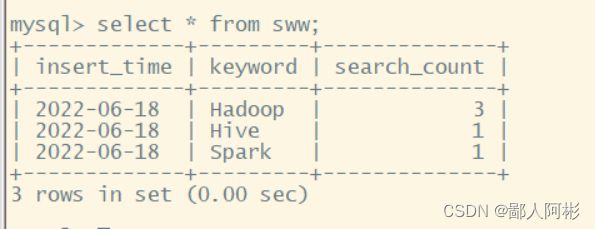

在mysql中查看数据表是否插入数据:

由上面结果可以看出刚刚创建的sww数据表是已经添加数据成功的。