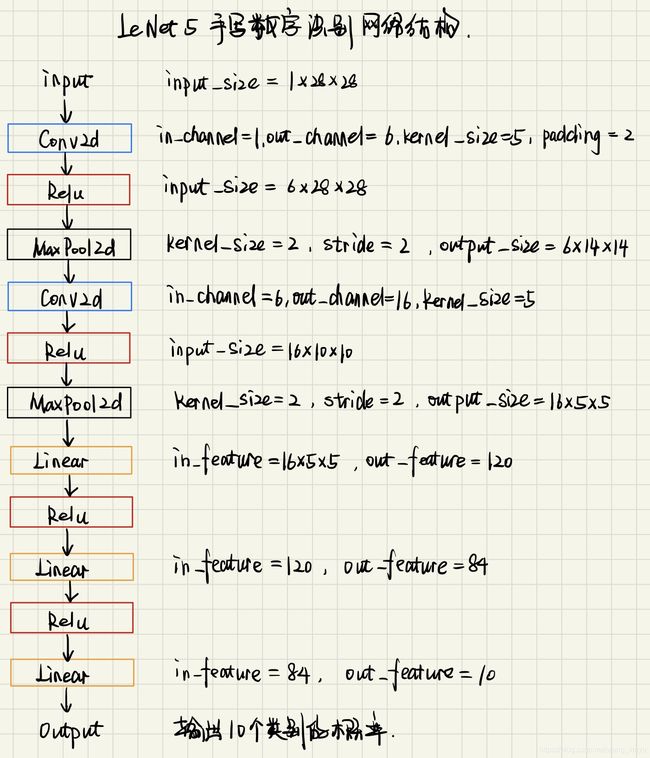

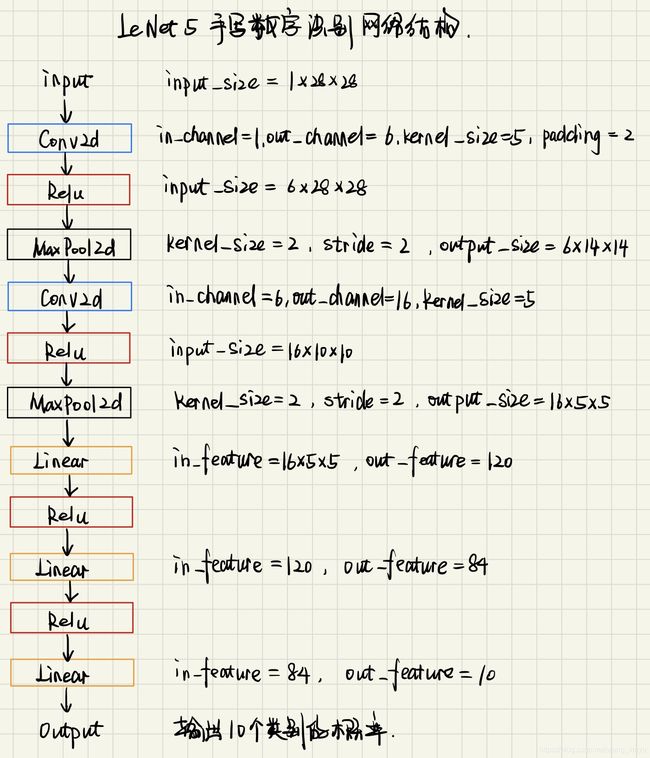

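- LeNet5 network structure

LeNet-5 has 7 layers, not counting the input, and every layer contains trainable parameters. Each layer has multiple feature maps; each feature map extracts one kind of feature from its input through one convolution filter, and each feature map contains multiple neurons.

The program below follows the LeNet5 structure from the paper. The paper's dataset uses 32x32 images while MNIST images are 28x28, so only INPUT changes (the first convolution pads by 2 to compensate). The layer sizes otherwise follow LeNet5, although the code here uses ReLU activations and max pooling.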
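The size bookkeeping behind the input change can be checked with the standard output-size formula for convolution and pooling, out = floor((in + 2 * padding - kernel) / stride) + 1. The sketch below is plain Python and only mirrors the layer settings used in the model code in this post; the helper name `out_size` is made up for illustration.

```python
def out_size(size, kernel, stride=1, padding=0):
    """Spatial output size of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1

sizes = []
size = 28                                              # MNIST input is 28x28
size = out_size(size, kernel=5, stride=1, padding=2)   # conv1 keeps 28x28
sizes.append(size)
size = out_size(size, kernel=2, stride=2)              # first max pool
sizes.append(size)
size = out_size(size, kernel=5)                        # conv2, no padding
sizes.append(size)
size = out_size(size, kernel=2, stride=2)              # second max pool
sizes.append(size)

flat_features = 16 * size * size                       # 16 channels after conv2
print(sizes, flat_features)
```

The final value is the `16 * 5 * 5` that appears as the input width of the first fully connected layer.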

- Network model

class LeNet(nn.Module):
    def __init__(self):
        super(LeNet, self).__init__()
        # 1x28x28 -> 6x28x28 (kernel 5, stride 1, padding 2) -> 6x14x14
        self.conv1 = nn.Sequential(
            nn.Conv2d(1, 6, 5, 1, 2),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2, stride=2),
        )
        # 6x14x14 -> 16x10x10 -> 16x5x5
        self.conv2 = nn.Sequential(
            nn.Conv2d(6, 16, 5),
            nn.ReLU(),
            nn.MaxPool2d(2, 2)
        )
        # Flattened 16 * 5 * 5 = 400 features -> 120
        self.fc1 = nn.Sequential(
            nn.Linear(16 * 5 * 5, 120),
            nn.ReLU()
        )
        # 120 -> 84
        self.fc2 = nn.Sequential(
            nn.Linear(120, 84),
            nn.ReLU()
        )
        # 84 -> 10 class scores (logits)
        self.fc3 = nn.Linear(84, 10)

    def forward(self, x):
        x = self.conv1(x)
        x = self.conv2(x)
        # Flatten to (batch, 400) before the fully connected layers
        x = x.view(x.size(0), -1)
        x = self.fc1(x)
        x = self.fc2(x)
        x = self.fc3(x)
        return x
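As a quick sanity check on the model definition, the number of trainable parameters can be counted by hand: a convolution has in_channels * out_channels * k * k weights plus one bias per output channel, and a linear layer has in_features * out_features weights plus one bias per output. The plain-Python sketch below applies this to the layer sizes above; the helper names are made up for illustration.

```python
def conv_params(in_channels, out_channels, kernel):
    """Weights plus biases of a square-kernel convolution."""
    return in_channels * out_channels * kernel * kernel + out_channels

def linear_params(in_features, out_features):
    """Weights plus biases of a fully connected layer."""
    return in_features * out_features + out_features

counts = {
    "conv1": conv_params(1, 6, 5),
    "conv2": conv_params(6, 16, 5),
    "fc1": linear_params(16 * 5 * 5, 120),
    "fc2": linear_params(120, 84),
    "fc3": linear_params(84, 10),
}
total = sum(counts.values())

for name, n in counts.items():
    print(name, n)
print("total", total)
```

The pooling and ReLU layers contribute nothing, and most of the parameters sit in `fc1`.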

- Training code

def train(epoch):
    model.train()  # switch to training mode
    for batch_idx, (data, target) in enumerate(train_loader):
        # Move the batch to the same device as the model
        data = data.to(device)
        target = target.to(device)
        optimizer.zero_grad()  # clear gradients left over from the previous step
        output = model(data)
        loss = F.cross_entropy(output, target)
        loss.backward()
        optimizer.step()
        # Log the loss every log_interval batches
        if batch_idx % log_interval == 0:
            print('Train Epoch: {} [{}/{} ({:.0f}%)]\tLoss: {:.6f}'.format(
                epoch, batch_idx * len(data), len(train_loader.dataset),
                100. * batch_idx / len(train_loader), loss.item()))
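`F.cross_entropy` takes the raw scores from `fc3` (logits) and combines a log-softmax with the negative log-likelihood, which is why the model has no softmax layer of its own. As a rough illustration for a single sample, the same quantity can be computed in plain Python; the logits and the helper name `cross_entropy` below are made up for the example.

```python
import math

def cross_entropy(logits, target):
    """Negative log of the softmax probability assigned to the target class."""
    m = max(logits)  # subtract the maximum for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]

logits = [2.0, 1.0, 0.1]           # hypothetical scores for 3 classes
loss = cross_entropy(logits, target=0)
print(loss)
```

The loss shrinks as the score of the correct class grows relative to the others, and it equals log(number of classes) when all scores are equal.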

- Test code

def test():
    model.eval()  # switch to evaluation mode
    test_loss = 0
    correct = 0
    with torch.no_grad():  # gradients are not needed for evaluation
        for data, target in test_loader:
            data = data.to(device)
            target = target.to(device)
            output = model(data)
            # Sum the per-sample losses; the average is taken over the whole test set below
            test_loss += F.cross_entropy(output, target, reduction='sum').item()
            # The index of the highest score is the predicted class
            pred = output.argmax(dim=1, keepdim=True)
            correct += pred.eq(target.view_as(pred)).sum().item()
    test_loss /= len(test_loader.dataset)
    print('\nTest set: Average loss: {:.4f}, Accuracy: {}/{} ({:.0f}%)\n'.format(
        test_loss, correct, len(test_loader.dataset),
        100. * correct / len(test_loader.dataset)))
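The test loop sums the loss per batch and divides by the dataset size once at the end. Averaging the per-batch means instead would give a smaller final batch too much weight. The plain-Python sketch below uses made-up per-sample losses to show the difference; none of the numbers come from a real run.

```python
per_sample_losses = [0.2, 0.4, 0.6, 0.8, 3.0]  # hypothetical values
batch_size = 2
batches = [per_sample_losses[i:i + batch_size]
           for i in range(0, len(per_sample_losses), batch_size)]

# Approach used in test(): accumulate sums, divide by the dataset size
total = 0.0
for batch in batches:
    total += sum(batch)
avg_from_sums = total / len(per_sample_losses)

# Alternative: average the per-batch means (the last batch has only 1 sample)
batch_means = [sum(batch) / len(batch) for batch in batches]
avg_of_means = sum(batch_means) / len(batch_means)

print(avg_from_sums, avg_of_means)
```

With equal-sized batches the two numbers agree; the summed form stays correct for any batch size.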

- Complete code of LeNet.py

import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
from torchvision import datasets, transforms

# Hyperparameters
lr = 0.01
momentum = 0.5
log_interval = 10  # print the training loss every 10 batches
epochs = 10
batch_size = 64
test_batch_size = 1000


class LeNet(nn.Module):
    def __init__(self):
        super(LeNet, self).__init__()
        # 1x28x28 -> 6x28x28 (kernel 5, stride 1, padding 2) -> 6x14x14
        self.conv1 = nn.Sequential(
            nn.Conv2d(1, 6, 5, 1, 2),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2, stride=2),
        )
        # 6x14x14 -> 16x10x10 -> 16x5x5
        self.conv2 = nn.Sequential(
            nn.Conv2d(6, 16, 5),
            nn.ReLU(),
            nn.MaxPool2d(2, 2)
        )
        self.fc1 = nn.Sequential(
            nn.Linear(16 * 5 * 5, 120),
            nn.ReLU()
        )
        self.fc2 = nn.Sequential(
            nn.Linear(120, 84),
            nn.ReLU()
        )
        self.fc3 = nn.Linear(84, 10)

    def forward(self, x):
        x = self.conv1(x)
        x = self.conv2(x)
        # Flatten to (batch, 400) before the fully connected layers
        x = x.view(x.size(0), -1)
        x = self.fc1(x)
        x = self.fc2(x)
        x = self.fc3(x)
        return x


def train(epoch):
    model.train()
    for batch_idx, (data, target) in enumerate(train_loader):
        data = data.to(device)
        target = target.to(device)
        optimizer.zero_grad()
        output = model(data)
        loss = F.cross_entropy(output, target)
        loss.backward()
        optimizer.step()
        if batch_idx % log_interval == 0:
            print('Train Epoch: {} [{}/{} ({:.0f}%)]\tLoss: {:.6f}'.format(
                epoch, batch_idx * len(data), len(train_loader.dataset),
                100. * batch_idx / len(train_loader), loss.item()))


def test():
    model.eval()
    test_loss = 0
    correct = 0
    with torch.no_grad():  # gradients are not needed for evaluation
        for data, target in test_loader:
            data = data.to(device)
            target = target.to(device)
            output = model(data)
            # Sum the per-sample losses; the average is taken over the whole test set below
            test_loss += F.cross_entropy(output, target, reduction='sum').item()
            pred = output.argmax(dim=1, keepdim=True)
            correct += pred.eq(target.view_as(pred)).sum().item()
    test_loss /= len(test_loader.dataset)
    print('\nTest set: Average loss: {:.4f}, Accuracy: {}/{} ({:.0f}%)\n'.format(
        test_loss, correct, len(test_loader.dataset),
        100. * correct / len(test_loader.dataset)))


if __name__ == '__main__':
    device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

    # MNIST training set, normalized with the dataset mean and standard deviation
    train_loader = torch.utils.data.DataLoader(
        datasets.MNIST('../data', train=True, download=True,
                       transform=transforms.Compose([
                           transforms.ToTensor(),
                           transforms.Normalize((0.1307,), (0.3081,))
                       ])),
        batch_size=batch_size, shuffle=True)

    # MNIST test set with the same preprocessing
    test_loader = torch.utils.data.DataLoader(
        datasets.MNIST('../data', train=False, transform=transforms.Compose([
            transforms.ToTensor(),
            transforms.Normalize((0.1307,), (0.3081,))
        ])),
        batch_size=test_batch_size, shuffle=True)

    model = LeNet()
    model = model.to(device)
    optimizer = optim.SGD(model.parameters(), lr=lr, momentum=momentum)

    for epoch in range(1, epochs + 1):
        train(epoch)
        test()

    # Save the whole model object (structure and weights) for the prediction script
    torch.save(model, 'model.pth')
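`optim.SGD` with `momentum` keeps a running velocity per parameter: in PyTorch's formulation the buffer is updated as v = momentum * v + grad and the parameter as p = p - lr * v. The plain-Python sketch below applies that rule to a toy one-dimensional problem with the `lr` and `momentum` values from the script; the objective f(p) = p^2 and the helper name `sgd_momentum_step` are assumptions for illustration only.

```python
def sgd_momentum_step(p, v, grad, lr=0.01, momentum=0.5):
    """One SGD-with-momentum update for a single scalar parameter."""
    v = momentum * v + grad   # accumulate the velocity
    p = p - lr * v            # move against the accumulated direction
    return p, v

p, v = 1.0, 0.0               # start at p = 1 with zero velocity
for _ in range(100):
    grad = 2.0 * p            # derivative of the toy objective f(p) = p^2
    p, v = sgd_momentum_step(p, v, grad)

print(p)
```

With momentum 0.5 the steady-state step is roughly twice what plain SGD with the same `lr` would take, so `p` approaches the minimum at 0 faster.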

- Intermediate-layer feature extraction code

class FeatureExtractor(nn.Module):
    def __init__(self, submodule, extracted_layers):
        super(FeatureExtractor, self).__init__()
        self.submodule = submodule                # the network to inspect
        self.extracted_layers = extracted_layers  # names of the layers whose outputs are kept

    def forward(self, x):
        outputs = []
        # Run the child modules in registration order: conv1, conv2, fc1, fc2, fc3
        for name, module in self.submodule.named_children():
            print(name)
            if "fc" in name:
                # Fully connected layers expect a flattened (batch, features) input
                x = x.view(x.size(0), -1)
            x = module(x)
            if name in self.extracted_layers:
                outputs.append(x)
        return outputs

- Complete code of LeNet5Pre.py

import torch
import torch.nn as nn
import cv2
import torch.nn.functional as F
# torch.load needs the LeNet class to unpickle the saved model;
# this import expects the training script to be saved as LeNet5.py
from LeNet5 import LeNet
from torchvision import transforms
import numpy as np
import matplotlib.pyplot as plt


class FeatureExtractor(nn.Module):
    def __init__(self, submodule, extracted_layers):
        super(FeatureExtractor, self).__init__()
        self.submodule = submodule                # the network to inspect
        self.extracted_layers = extracted_layers  # names of the layers whose outputs are kept

    def forward(self, x):
        outputs = []
        # Run the child modules in registration order: conv1, conv2, fc1, fc2, fc3
        for name, module in self.submodule.named_children():
            print(name)
            if "fc" in name:
                # Fully connected layers expect a flattened (batch, features) input
                x = x.view(x.size(0), -1)
            x = module(x)
            if name in self.extracted_layers:
                outputs.append(x)
        return outputs


if __name__ == '__main__':
    device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

    # The whole model object was pickled by torch.save, so recent PyTorch versions
    # need weights_only=False (drop the argument on versions that do not accept it)
    model = torch.load('model.pth', map_location=device, weights_only=False)
    model = model.to(device)
    model.eval()

    # Read the image, convert it to grayscale and apply the training-time normalization
    img = cv2.imread("8.jpg")
    trans = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.1307,), (0.3081,))])
    img = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    img = trans(img)
    img = img.to(device)
    img = img.unsqueeze(0)  # add the batch dimension: (1, 1, 28, 28)

    # Predict the digit; no_grad lets the result be converted to numpy directly
    with torch.no_grad():
        output = model(img)
        prob = F.softmax(output, dim=1)
    prob = prob.cpu().numpy()
    print(prob)
    pred = np.argmax(prob)
    print(pred.item())

    # Extract feature maps from the trained model rather than a freshly initialized LeNet
    exact_list = ["conv1", "conv2"]
    myexactor = FeatureExtractor(model, exact_list)
    with torch.no_grad():
        x = myexactor(img)

    # Show the 6 feature maps produced by conv1
    for i in range(6):
        ax = plt.subplot(1, 6, i + 1)
        ax.set_title('Feature {}'.format(i))
        ax.axis('off')
        plt.imshow(x[0].cpu()[0, i, :, :], cmap='jet')
    plt.show()

- LeNet5Pre.py input image

The input image is a 28*28-pixel handwritten digit, drawn in white on a black background.
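The polarity matters because MNIST digits are bright strokes on a dark background, and `ToTensor` followed by `Normalize((0.1307,), (0.3081,))` maps each 8-bit pixel p to (p / 255 - 0.1307) / 0.3081. A dark-on-light image would therefore need inverting first. The sketch below works on plain Python lists; the helper names and the mean-brightness heuristic for detecting a light background are assumptions for illustration, not part of the original script.

```python
MEAN, STD = 0.1307, 0.3081  # normalization constants used in the scripts

def normalize_pixel(p):
    """Map an 8-bit pixel to the value the network sees."""
    return (p / 255.0 - MEAN) / STD

def to_mnist_polarity(pixels):
    """Invert the image if its background looks light (hypothetical heuristic)."""
    flat = [p for row in pixels for p in row]
    if sum(flat) / len(flat) > 127:
        return [[255 - p for p in row] for row in pixels]
    return pixels

# Tiny 3x3 stand-in for a dark digit on a white background
dark_on_light = [[255, 255, 255],
                 [255,   0, 255],
                 [255, 255, 255]]

fixed = to_mnist_polarity(dark_on_light)
normalized = [[normalize_pixel(p) for p in row] for row in fixed]
print(fixed)
print(normalized[1][1], normalized[0][0])  # stroke pixel, background pixel
```

After normalization a black background pixel is about -0.42 and a full-white stroke pixel is about 2.82, which is the range the network was trained on.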

- Results

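- References:

pytorch用LeNet5识别Mnist手写体数据集(训练+预测单张输入图片代码) (Recognizing the MNIST handwritten digit dataset with LeNet5 in PyTorch: training code and single-image prediction code)

基于Pytorch的特征图提取 (Feature map extraction with PyTorch)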