Several Ways to Upsample Images in PyTorch


- 1. torch.nn.Upsample()

- 2. torch.nn.ConvTranspose2d()

- 3. torch.nn.functional.interpolate()

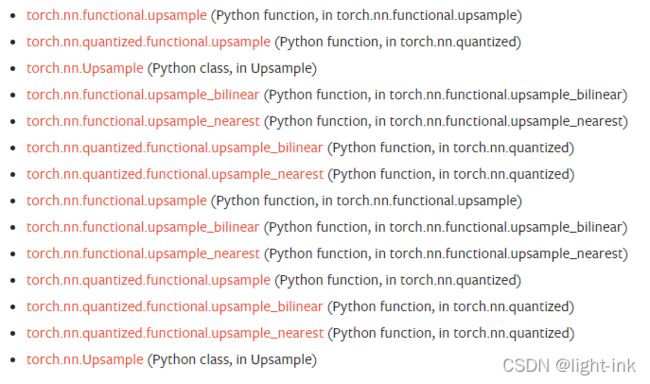

- For other APIs, see:

1. torch.nn.Upsample()

torch.nn.Upsample(size=None, scale_factor=None, mode='nearest', align_corners=None)

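Parameters:

- size (int or Tuple[int] or Tuple[int, int] or Tuple[int, int, int], optional) – output spatial size; its form depends on the dimensionality of the input

- scale_factor (float or Tuple[float] or Tuple[float, float] or Tuple[float, float, float], optional) – multiplier for the spatial size. If it is given as a tuple, it must have one entry per spatial dimension of the input

- mode (str, optional) – the upsampling algorithm: nearest, linear, bilinear, bicubic, or trilinear. Default: nearest

- align_corners (bool, optional) – if True, the corner pixels of the input and output tensors are aligned, so the values at those pixels are preserved. This only has an effect when mode is linear, bilinear, bicubic, or trilinear. Default: False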

Examples:

>>> import torch

>>> import torch.nn as nn

>>> input = torch.arange(1, 5, dtype=torch.float32).view(1, 1, 2, 2)

>>> input

tensor([[[[ 1., 2.],

[ 3., 4.]]]])

>>> m = nn.Upsample(scale_factor=2, mode='nearest')

>>> m(input)

tensor([[[[ 1., 1., 2., 2.],

[ 1., 1., 2., 2.],

[ 3., 3., 4., 4.],

[ 3., 3., 4., 4.]]]])

>>> m = nn.Upsample(scale_factor=2, mode='bilinear') # align_corners=False

>>> m(input)

tensor([[[[ 1.0000, 1.2500, 1.7500, 2.0000],

[ 1.5000, 1.7500, 2.2500, 2.5000],

[ 2.5000, 2.7500, 3.2500, 3.5000],

[ 3.0000, 3.2500, 3.7500, 4.0000]]]])

>>> m = nn.Upsample(scale_factor=2, mode='bilinear', align_corners=True)

>>> m(input)

tensor([[[[ 1.0000, 1.3333, 1.6667, 2.0000],

[ 1.6667, 2.0000, 2.3333, 2.6667],

[ 2.3333, 2.6667, 3.0000, 3.3333],

[ 3.0000, 3.3333, 3.6667, 4.0000]]]])

>>> # Try scaling the same data in a larger tensor

>>>

>>> input_3x3 = torch.zeros(3, 3).view(1, 1, 3, 3)

>>> input_3x3[:, :, :2, :2].copy_(input)

tensor([[[[ 1., 2.],

[ 3., 4.]]]])

>>> input_3x3

tensor([[[[ 1., 2., 0.],

[ 3., 4., 0.],

[ 0., 0., 0.]]]])

>>> m = nn.Upsample(scale_factor=2, mode='bilinear') # align_corners=False

>>> # Notice that values in top left corner are the same with the small input (except at boundary)

>>> m(input_3x3)

tensor([[[[ 1.0000, 1.2500, 1.7500, 1.5000, 0.5000, 0.0000],

[ 1.5000, 1.7500, 2.2500, 1.8750, 0.6250, 0.0000],

[ 2.5000, 2.7500, 3.2500, 2.6250, 0.8750, 0.0000],

[ 2.2500, 2.4375, 2.8125, 2.2500, 0.7500, 0.0000],

[ 0.7500, 0.8125, 0.9375, 0.7500, 0.2500, 0.0000],

[ 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000]]]])

>>> m = nn.Upsample(scale_factor=2, mode='bilinear', align_corners=True)

>>> # Notice that values in top left corner are now changed

>>> m(input_3x3)

tensor([[[[ 1.0000, 1.4000, 1.8000, 1.6000, 0.8000, 0.0000],

[ 1.8000, 2.2000, 2.6000, 2.2400, 1.1200, 0.0000],

[ 2.6000, 3.0000, 3.4000, 2.8800, 1.4400, 0.0000],

[ 2.4000, 2.7200, 3.0400, 2.5600, 1.2800, 0.0000],

[ 1.2000, 1.3600, 1.5200, 1.2800, 0.6400, 0.0000],

[ 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000]]]])
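The difference between the two align_corners settings comes down to how an output index is mapped back to a source coordinate. The following is a minimal pure-Python sketch of 1D linear interpolation, written only to reproduce the first row of the 2x2 examples above. The helper names source_coordinate and linear_upsample_1d are hypothetical, and this is a simplified re-implementation under the usual coordinate conventions, not PyTorch's actual kernel.

```python
def source_coordinate(i, in_size, out_size, align_corners):
    """Map output index i to a (fractional) position in the input."""
    if align_corners:
        # Corner pixel centres of input and output coincide.
        return i * (in_size - 1) / (out_size - 1) if out_size > 1 else 0.0
    # Pixels are treated as squares; align the outer edges instead,
    # then clamp so out-of-range positions reuse the edge value.
    x = (i + 0.5) * in_size / out_size - 0.5
    return min(max(x, 0.0), in_size - 1)


def linear_upsample_1d(values, out_size, align_corners):
    """Linearly interpolate a list of floats to out_size samples."""
    n = len(values)
    out = []
    for i in range(out_size):
        x = source_coordinate(i, n, out_size, align_corners)
        lo = int(x)                # left neighbour
        hi = min(lo + 1, n - 1)    # right neighbour, clamped at the edge
        t = x - lo                 # fractional distance from the left neighbour
        out.append((1 - t) * values[lo] + t * values[hi])
    return out


row = [1.0, 2.0]
print([round(v, 4) for v in linear_upsample_1d(row, 4, align_corners=False)])
# [1.0, 1.25, 1.75, 2.0]
print([round(v, 4) for v in linear_upsample_1d(row, 4, align_corners=True)])
# [1.0, 1.3333, 1.6667, 2.0]
```

With align_corners=False the sample positions depend only on the scale factor, which is why the top-left values of the 3x3 example match the 2x2 one away from the boundary. With align_corners=True the spacing depends on the input size, so those values change.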

2. torch.nn.ConvTranspose2d()

torch.nn.ConvTranspose2d(in_channels, out_channels, kernel_size, stride=1, padding=0, output_padding=0, groups=1, bias=True, dilation=1)

Args:

- in_channels (int): Number of channels in the input image

- out_channels (int): Number of channels produced by the convolution

- kernel_size (int or tuple): Size of the convolving kernel

- stride (int or tuple, optional): Stride of the convolution. Default: 1

- padding (int or tuple, optional): dilation * (kernel_size - 1) - padding zero-padding will be added to both sides of each dimension in the input. Default: 0

- output_padding (int or tuple, optional): Additional size added to one side of each dimension in the output shape. Default: 0

- groups (int, optional): Number of blocked connections from input channels to output channels. Default: 1

- bias (bool, optional): If True, adds a learnable bias to the output. Default: True

- dilation (int or tuple, optional): Spacing between kernel elements. Default: 1

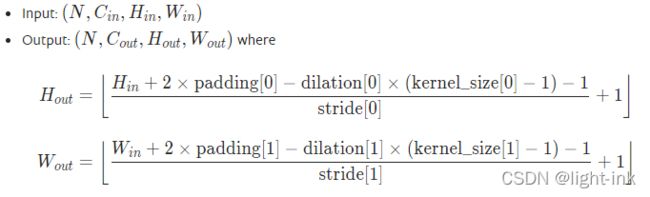

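Output-size formula for 2D convolution

- The output height of a 2D convolution is given below; the width $W_{out}$ is analogous, using index 1:

$$H_{out} = \left\lfloor \frac{H_{in} + 2 \times padding[0] - dilation[0] \times (kernel\_size[0] - 1) - 1}{stride[0]} + 1 \right\rfloor$$

- $dilation[0]$ defaults to 1 in nn.Conv2d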

Output-size formula for transposed convolution

- Input: $(N, C_{in}, H_{in}, W_{in})$

- Output: $(N, C_{out}, H_{out}, W_{out})$

- Intuitively, a transposed convolution recovers the spatial size ($H_{in}$ and $W_{in}$) that the corresponding convolution started from:

$$H_{out} = (H_{in} - 1) \times stride[0] - 2 \times padding[0] + dilation[0] \times (kernel\_size[0] - 1) + output\_padding[0] + 1$$

$$W_{out} = (W_{in} - 1) \times stride[1] - 2 \times padding[1] + dilation[1] \times (kernel\_size[1] - 1) + output\_padding[1] + 1$$
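To make the two size formulas concrete, here is a small pure-Python sketch that evaluates them along one spatial dimension. The function names conv2d_out_size and conv_transpose2d_out_size are hypothetical helpers written for this article, not part of PyTorch. The sketch also shows why output_padding exists: with stride 2, two different input sizes collapse to the same convolution output, so the transposed convolution needs a hint to pick which one to restore.

```python
def conv2d_out_size(in_size, kernel_size, stride=1, padding=0, dilation=1):
    """Output size of a convolution along one spatial dimension."""
    return (in_size + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


def conv_transpose2d_out_size(in_size, kernel_size, stride=1, padding=0,
                              output_padding=0, dilation=1):
    """Output size of a transposed convolution along one spatial dimension."""
    return ((in_size - 1) * stride - 2 * padding
            + dilation * (kernel_size - 1) + output_padding + 1)


# Both 11 and 12 shrink to 6 under a 3x3 convolution with stride 2, padding 1.
print(conv2d_out_size(11, 3, stride=2, padding=1))  # 6
print(conv2d_out_size(12, 3, stride=2, padding=1))  # 6

# Going back from 6, the default gives 11; output_padding=1 gives 12.
print(conv_transpose2d_out_size(6, 3, stride=2, padding=1))                    # 11
print(conv_transpose2d_out_size(6, 3, stride=2, padding=1, output_padding=1))  # 12
```

Passing output_size to the module's forward call, as in the example further down, lets PyTorch choose the matching output_padding for you.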

Examples:

>>> import torch

>>> import torch.nn as nn

>>> # With square kernels and equal stride

>>> m = nn.ConvTranspose2d(16, 33, 3, stride=2)

>>> # non-square kernels and unequal stride and with padding

>>> m = nn.ConvTranspose2d(16, 33, (3, 5), stride=(2, 1), padding=(4, 2))

>>> input = torch.randn(20, 16, 50, 100)

>>> output = m(input)

>>> # exact output size can be also specified as an argument

>>> input = torch.randn(1, 16, 12, 12)

>>> downsample = nn.Conv2d(16, 16, 3, stride=2, padding=1)

>>> upsample = nn.ConvTranspose2d(16, 16, 3, stride=2, padding=1)

>>> h = downsample(input)

>>> h.size()

torch.Size([1, 16, 6, 6]) # (12-3+2*1)//2+1 = 6

>>> output = upsample(h, output_size=input.size())

>>> output.size()

torch.Size([1, 16, 12, 12]) # a transposed convolution inverts the convolution's shape formula, but it is not a true inverse of the operation
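For plain 2x learnable upsampling, two parameter choices are commonly seen. The sketch below is an illustration only: the channel counts and the variable names up_k2 and up_k4 are arbitrary assumptions. It checks that both choices double the spatial size, as the size formula predicts.

```python
import torch
import torch.nn as nn

x = torch.randn(1, 16, 12, 12)

# kernel_size == stride: every input pixel expands to its own 2x2 patch.
# (12 - 1) * 2 + (2 - 1) + 1 = 24
up_k2 = nn.ConvTranspose2d(16, 8, kernel_size=2, stride=2)

# kernel_size=4, stride=2, padding=1: patches overlap, output size is the same.
# (12 - 1) * 2 - 2 * 1 + (4 - 1) + 1 = 24
up_k4 = nn.ConvTranspose2d(16, 8, kernel_size=4, stride=2, padding=1)

print(up_k2(x).shape)  # torch.Size([1, 8, 24, 24])
print(up_k4(x).shape)  # torch.Size([1, 8, 24, 24])
```

Unlike nn.Upsample, both layers carry trainable weights, so the upsampling filter is learned rather than fixed.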

3. torch.nn.functional.interpolate()

torch.nn.functional.interpolate(input, size=None, scale_factor=None, mode='nearest', align_corners=None, recompute_scale_factor=None)

This function upsamples or downsamples the input, either to a given size or by a given scale_factor. The interpolation algorithm is selected by the mode parameter.

Parameters

- input (Tensor) – the input tensor

- size (int or Tuple[int] or Tuple[int, int] or Tuple[int, int, int]) – output spatial size.

- scale_factor (float or Tuple[float]) – multiplier for spatial size. If scale_factor is a tuple, its length has to match the number of spatial dimensions, i.e. input.dim() - 2.

- mode (str) – algorithm used for upsampling: nearest | linear | bilinear | bicubic | trilinear | area. Default: nearest

- align_corners (bool, optional) – Geometrically, we consider the pixels of the input and output as squares rather than points. If set to True, the input and output tensors are aligned by the center points of their corner pixels, preserving the values at the corner pixels. If set to False, the input and output tensors are aligned by the corner points of their corner pixels, and the interpolation uses edge value padding for out-of-boundary values, making this operation independent of input size when scale_factor is kept the same. This only has an effect when mode is linear, bilinear, bicubic or trilinear. Default: False

- recompute_scale_factor (bool, optional) – recompute the scale_factor for use in the interpolation calculation. If recompute_scale_factor is True, then scale_factor must be passed in and scale_factor is used to compute the output size. The computed output size will be used to infer new scales for the interpolation. Note that when scale_factor is floating-point, it may differ from the recomputed scale_factor due to rounding and precision issues. If recompute_scale_factor is False, then size or scale_factor will be used directly for interpolation.

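Note: with mode='bicubic' the interpolation can overshoot, so for image data the result may contain negative values or values above 255. Clamp it with result.clamp(min=0, max=255) if the valid range matters.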
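The overshoot is easy to provoke with a hard edge. The following sketch uses a made-up 4x4 single-channel image whose left half is 0 and right half is 255; the image and the variable names are assumptions for illustration only.

```python
import torch
import torch.nn.functional as F

# Hypothetical 4x4 "image" with a hard vertical edge: columns 0, 0, 255, 255.
img = torch.tensor([0.0, 0.0, 255.0, 255.0]).repeat(4, 1).view(1, 1, 4, 4)

up = F.interpolate(img, scale_factor=2, mode='bicubic', align_corners=False)

# The cubic kernel has negative lobes, so values next to the edge overshoot.
print(up.min().item() < 0, up.max().item() > 255)  # True True

# Clamp back into the valid 8-bit range.
clamped = up.clamp(min=0, max=255)
print(clamped.min().item() >= 0, clamped.max().item() <= 255)  # True True
```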

Examples:

import torch

import torch.nn.functional as F

input = torch.randn(2, 3, 256, 256)

down = F.interpolate(input, scale_factor=0.5)

print(down.shape) # torch.Size([2, 3, 128, 128])

up = F.interpolate(input, scale_factor=2)

print(up.shape) # torch.Size([2, 3, 512, 512])
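Since nn.Upsample and F.interpolate take the same size, scale_factor, mode, and align_corners arguments, the two can be compared directly. The sketch below, with an arbitrary input shape chosen for illustration, checks that they agree and also shows an explicit target size in place of a scale factor.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

x = torch.randn(2, 3, 64, 48)

# Module form and functional form with identical arguments.
m = nn.Upsample(scale_factor=2, mode='bilinear', align_corners=False)
y_module = m(x)
y_func = F.interpolate(x, scale_factor=2, mode='bilinear', align_corners=False)
print(y_module.shape)                    # torch.Size([2, 3, 128, 96])
print(torch.allclose(y_module, y_func))  # True

# An explicit output size instead of a scale factor; the aspect ratio may change.
y_sized = F.interpolate(x, size=(100, 100), mode='nearest')
print(y_sized.shape)                     # torch.Size([2, 3, 100, 100])
```

The module form is convenient inside nn.Sequential; the functional form is handy when the target size is only known at run time.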