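Convolutional Neural Networks - Car Detection

Reference: https://blog.csdn.net/u013733326/article/details/80341740

Autonomous Driving - Car Detection

In this exercise we will use the YOLO algorithm for object detection. We start by importing the packages: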

%load_ext autoreload

%autoreload 2

import argparse

import os

import matplotlib.pyplot as plt

from matplotlib.pyplot import imshow

import imageio

import numpy as np

import pandas as pd

import PIL

import h5py

import tensorflow.compat.v1 as tf

tf.disable_v2_behavior()

from keras import backend as K

from keras.layers import Input, Lambda, Conv2D

from keras.models import load_model, Model

from yad2k.models.keras_yolo import yolo_head, yolo_boxes_to_corners, preprocess_true_boxes, yolo_loss, yolo_body

import yolo_utils

%matplotlib inline

Note: as you can see, we import the Keras backend under the name K, which means we will be using the Keras framework

1 - Problem Statement

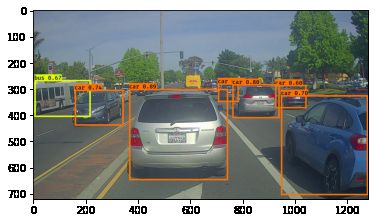

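Suppose you are working on a self-driving car and decide to start by building a car detection system. To collect data, you have mounted a camera on the hood of the car, and it takes a picture of the road ahead every few seconds while you drive

If you want YOLO to recognize 80 classes, you can represent the class label $c$ either as an integer from 1 to 80 or as an 80-dimensional vector (80 numbers) that holds a 1 at the position of the class and 0 everywhere else. The video lectures use the latter representation. Because a YOLO model takes a long time to train, we will load pre-trained weights instead of training one ourselves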
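The two label representations can be compared with a small sketch. The helper name `one_hot` is hypothetical (it is not part of this notebook or of `yolo_utils`), and it uses 0-based indices, whereas the text counts classes from 1.

```python
def one_hot(class_index, num_classes=80):
    """Return a list of length num_classes with a single 1 at class_index (0-based)."""
    if not 0 <= class_index < num_classes:
        raise ValueError("class_index out of range")
    vector = [0] * num_classes
    vector[class_index] = 1
    return vector


label = 3                 # integer form of the class label
encoded = one_hot(label)  # 80-dimensional vector form of the same label
print(len(encoded), sum(encoded), encoded.index(1))
```

The vector form is the one that makes each bounding box 85 numbers long: 5 box values plus 80 class entries.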

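2 - YOLO

YOLO ("you only look once") is a popular algorithm because it achieves high accuracy while running in real time. It "only looks once" at the image in the sense that a single forward pass through the network is enough to make predictions. After non-max suppression, it outputs the recognized objects together with their bounding boxes

2.1 - Model Details

The first things to know are:

- The input is a batch of images of shape (m, 608, 608, 3)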

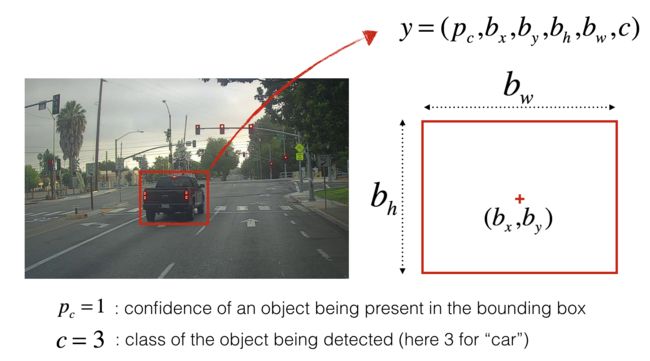

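- The output is a list of bounding boxes together with the recognized classes. Each bounding box is represented by 6 numbers $(p_c, b_x, b_y, b_h, b_w, c)$. If you expand $c$ into an 80-dimensional vector, each bounding box is represented by 85 numbers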

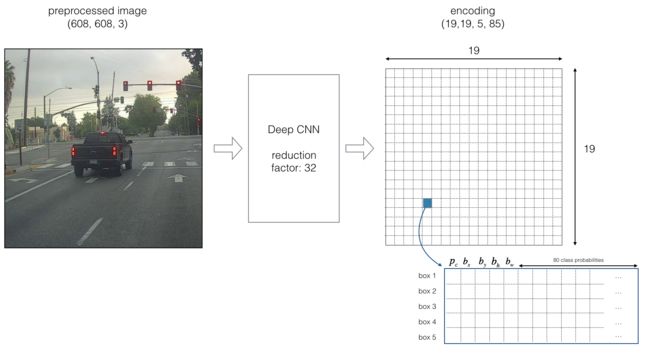

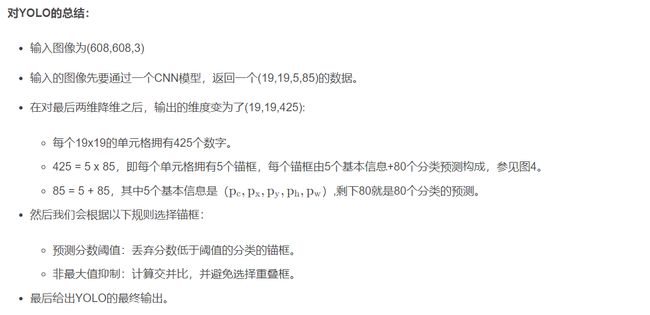

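We will use 5 anchor boxes, so the overall flow is: image (m, 608, 608, 3) ⇒ DEEP CNN ⇒ encoding (m, 19, 19, 5, 85)

If the center (midpoint) of an object falls inside a grid cell, that cell is responsible for detecting the object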
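A minimal sketch of where the 19x19 grid comes from and which cell "owns" an object. It assumes the five 2x2 max-pooling layers listed in the model summary later in this post, each of which halves the spatial size; `responsible_cell` is a hypothetical helper that takes pixel coordinates in the 608x608 network input.

```python
INPUT_SIZE = 608
NUM_POOLS = 5  # five max-pooling layers, each halving height and width

size = INPUT_SIZE
for _ in range(NUM_POOLS):
    size //= 2          # 608 -> 304 -> 152 -> 76 -> 38 -> 19

GRID = size                   # number of cells per side
CELL = INPUT_SIZE // GRID     # side length of one cell in input pixels


def responsible_cell(x_center, y_center):
    """Return (row, col) of the grid cell containing the object's center."""
    col = min(int(x_center // CELL), GRID - 1)
    row = min(int(y_center // CELL), GRID - 1)
    return row, col


print(GRID, CELL, responsible_cell(100, 300))
```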

- Since we use 5 anchor boxes on a 19x19 grid, each cell encodes information about 5 boxes. Each box encoding contains $p_c, b_x, b_y, b_h, b_w$ followed by the 80 class probabilities

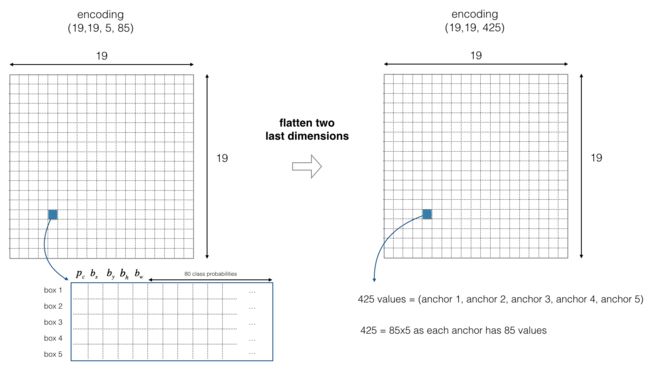

- For convenience, we flatten the last two dimensions, so the final encoding changes from (m, 19, 19, 5, 85) to (m, 19, 19, 425)

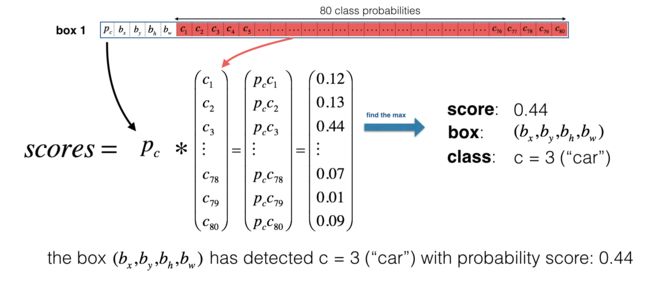
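The flattening of the last two dimensions can be sketched as plain index arithmetic. This assumes a row-major flatten (what a standard reshape does) and 0-based indices; `flat_index` and `unflatten` are hypothetical names used only for illustration.

```python
NUM_ANCHORS = 5
BOX_LEN = 85  # 5 box values + 80 class probabilities


def flat_index(anchor, k):
    """Position in the 425-vector of value k of the given anchor box."""
    return anchor * BOX_LEN + k


def unflatten(index):
    """Inverse mapping: position in the 425-vector -> (anchor, k)."""
    return divmod(index, BOX_LEN)


print(NUM_ANCHORS * BOX_LEN)   # length of the flattened vector
print(flat_index(4, 84))       # last value of the last anchor box
print(unflatten(170))          # first value of the third anchor box
```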

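For each anchor box of each cell, we multiply the box confidence $p_c$ element-wise by the class probabilities $c_1, \dots, c_{80}$ to obtain the probability that the box contains each class

Here is one way to visualize what YOLO predicts on an image:

- For each of the 19x19 grid cells, find the maximum probability score, taking the maximum across both the 5 anchor boxes and the different classes

- Color each grid cell according to the object that the cell considers most likely

Note that this visualization is not a core part of how the YOLO algorithm makes predictions; it is just a convenient way of looking at an intermediate result. Another way to visualize YOLO's output is to plot the bounding boxes that it outputs

Even when we plot only the boxes to which the model assigns a high probability, there are still too many of them. We would like to filter the algorithm's output down to a much smaller number of detected objects. To do this, we use non-max suppression. Specifically, we carry out the following steps:

- Discard boxes with a low score (that is, the cell is not confident about the detection)

- When several boxes overlap and detect the same object, keep only one of them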

2.2 - Filtering with a Threshold on Class Scores

We first filter by thresholding: we discard every box whose class score is below a chosen threshold. The model outputs 19 x 19 x 5 x 85 numbers in total, with each box described by 85 numbers (80 class probabilities plus $p_c, b_x, b_y, b_h, b_w$). Rearranging the (19, 19, 5, 85) (or (19, 19, 425)) tensor into the following variables makes the next steps easier:

- box_confidence: tensor of shape (19x19, 5, 1) containing $p_c$ (the confidence that some object is present) for each of the 5 boxes predicted in each of the 19x19 cells

- boxes: tensor of shape (19x19, 5, 4) containing $(b_x, b_y, b_h, b_w)$ for every box

- box_class_probs: tensor of shape (19x19, 5, 80) containing the detection probabilities $(c_1, c_2, c_3, \dots, c_{80})$ of all classes for every box in every cell
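As a rough illustration of this split, the sketch below cuts one 85-number box encoding into the three parts. The layout (confidence first, then the 4 box values, then the 80 class probabilities) follows the order used in the text and is an assumption here; the actual memory layout used by the library code may differ. `split_box` is a hypothetical helper.

```python
def split_box(encoding):
    """Split one 85-number box encoding into (p_c, box, class_probs)."""
    if len(encoding) != 85:
        raise ValueError("expected 85 numbers per box")
    p_c = encoding[0]           # confidence that some object is present
    box = encoding[1:5]         # (b_x, b_y, b_h, b_w)
    class_probs = encoding[5:]  # c_1 ... c_80
    return p_c, box, class_probs


example = [0.9, 0.5, 0.5, 0.2, 0.3] + [0.0] * 80
example[5 + 2] = 0.7            # probability of the third class

p_c, box, class_probs = split_box(example)
print(p_c, box, len(class_probs), class_probs[2])
```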

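We now implement the function yolo_filter_boxes(). The steps are:

- Compute the box scores with the element-wise product described in Figure 4:

a = np.random.randn(19 * 19, 5, 1)   # p_c
b = np.random.randn(19 * 19, 5, 80)  # c_1 ~ c_80
c = a * b  # broadcasting gives shape (19 * 19, 5, 80)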

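- For each box, find:

- the index of the class with the highest box score (see K.argmax); pay attention to the axis you choose, axis=-1 is a good candidate

- the corresponding highest box score (see K.max); again pay attention to the axis, axis=-1 is a good candidate

- Create a mask from the threshold. For example, [0.9, 0.3, 0.4, 0.5, 0.1] < 0.4 returns [False, True, False, False, True]. The mask must be True (or 1) for the boxes we want to keep

- Use TensorFlow to apply the mask to box_class_scores, boxes and box_classes so that only the boxes we want remain

To call a Keras backend function, write K.function(...)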
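The steps above can be traced on a toy example in plain Python before writing the tensor version. This is only a sketch: it uses 3 classes instead of 80 and made-up numbers, and `filter_boxes` is a hypothetical helper, not the function implemented below.

```python
def filter_boxes(confidences, class_probs, threshold=0.6):
    """Return (box_index, best_class, best_score) for every box that passes the threshold."""
    kept = []
    for i, (p_c, probs) in enumerate(zip(confidences, class_probs)):
        scores = [p_c * p for p in probs]                              # step 1: box scores
        best_class = max(range(len(scores)), key=scores.__getitem__)  # step 2: argmax
        best_score = scores[best_class]                                # step 2: max
        if best_score >= threshold:                                    # step 3: mask
            kept.append((i, best_class, best_score))
    return kept


confidences = [0.9, 0.3, 0.8]             # p_c for three boxes
class_probs = [[0.1, 0.8, 0.1],           # class probabilities per box
               [0.6, 0.2, 0.2],
               [0.5, 0.25, 0.25]]

kept = filter_boxes(confidences, class_probs)
print(kept)  # only the first box survives, with class index 1
```

The second and third boxes are dropped because their best scores (0.3 x 0.6 and 0.8 x 0.5) fall below the 0.6 threshold.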

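def yolo_filter_boxes(box_confidence, boxes, box_class_probs, threshold=0.6):
    """
    Filter YOLO boxes by thresholding on object and class confidence.

    Arguments:
        box_confidence - tensor of shape (19, 19, 5, 1) containing p_c (the confidence that some object is present) for each of the 5 boxes predicted in each of the 19x19 cells.
        boxes - tensor of shape (19, 19, 5, 4) containing (b_x, b_y, b_h, b_w) for every box.
        box_class_probs - tensor of shape (19, 19, 5, 80) containing the class probabilities (c_1, c_2, ..., c_80) for every box.
        threshold - real number; a box is kept only if its highest class score is at least this value.

    Returns:
        scores - tensor of shape (None,), the class score of each kept box.
        boxes - tensor of shape (None, 4), the (b_x, b_y, b_h, b_w) of each kept box.
        classes - tensor of shape (None,), the index of the class detected by each kept box.

    Note: "None" appears because the exact number of selected boxes is not known in advance; it depends on the threshold.
    For example, if 10 boxes are kept, the actual shape of scores is (10,).
    """
    # Step 1: compute the box scores
    box_scores = box_confidence * box_class_probs

    # Step 2: find the class with the highest score and the score itself
    box_classes = K.argmax(box_scores, axis=-1)
    box_class_scores = K.max(box_scores, axis=-1)

    # Step 3: create a mask from the threshold
    filtering_mask = (box_class_scores >= threshold)

    # Step 4: apply the mask to scores, boxes and classes
    scores = tf.boolean_mask(box_class_scores, filtering_mask)
    boxes = tf.boolean_mask(boxes, filtering_mask)
    classes = tf.boolean_mask(box_classes, filtering_mask)

    return scores, boxes, classes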

with tf.Session() as test_a:
    box_confidence = tf.random_normal([19, 19, 5, 1], mean=1, stddev=4, seed=1)
    boxes = tf.random_normal([19, 19, 5, 4], mean=1, stddev=4, seed=1)
    box_class_probs = tf.random_normal([19, 19, 5, 80], mean=1, stddev=4, seed=1)
    scores, boxes, classes = yolo_filter_boxes(box_confidence, boxes, box_class_probs, threshold=0.5)

    print("scores[2] = " + str(scores[2].eval()))
    print("boxes[2] = " + str(boxes[2].eval()))
    print("classes[2] = " + str(classes[2].eval()))
    print("scores.shape = " + str(scores.shape))
    print("boxes.shape = " + str(boxes.shape))
    print("classes.shape = " + str(classes.shape))

    test_a.close()

scores[2] = 10.750582

boxes[2] = [ 8.426533 3.2713668 -0.5313436 -4.9413733]

classes[2] = 7

scores.shape = (?,)

boxes.shape = (?, 4)

classes.shape = (?,)

2.3 - Non-Max Suppression

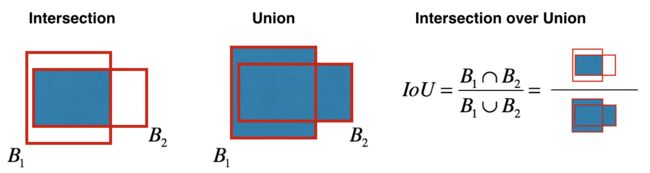

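Even after filtering out low-scoring classes with the threshold, many boxes remain. A second filter, which turns the left-hand picture in the figure into the right-hand one, is called non-max suppression (NMS)

Non-max suppression relies on a very important function called Intersection over Union (IoU)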

def iou(box1, box2):
    """
    Compute the intersection over union (IoU) of two boxes.

    Arguments:
        box1 - first box, a tuple (x1, y1, x2, y2)
        box2 - second box, a tuple (x1, y1, x2, y2)

    Returns:
        iou - real number, the intersection over union.
    """
    # Corners of the intersection rectangle
    xi1 = np.maximum(box1[0], box2[0])
    yi1 = np.maximum(box1[1], box2[1])
    xi2 = np.minimum(box1[2], box2[2])
    yi2 = np.minimum(box1[3], box2[3])

    # Clamp width and height at 0 so that boxes that do not overlap give an area of 0
    inter_area = max(xi2 - xi1, 0) * max(yi2 - yi1, 0)

    # Union: Union(A, B) = A + B - Inter(A, B)
    box1_area = (box1[2] - box1[0]) * (box1[3] - box1[1])
    box2_area = (box2[2] - box2[0]) * (box2[3] - box2[1])
    union_area = box1_area + box2_area - inter_area

    # Intersection over union
    iou = inter_area / union_area

    return iou

box1 = (2,1,4,3)

box2 = (1,2,3,4)

print("iou = " + str(iou(box1, box2)))

iou = 0.14285714285714285
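The printed value can be checked by hand. For box1 = (2, 1, 4, 3) and box2 = (1, 2, 3, 4), the intersection is the unit square with corners (2, 2) and (3, 3), and each box has area 4, so:

```latex
\mathrm{IoU}(A, B)
  = \frac{|A \cap B|}{|A| + |B| - |A \cap B|}
  = \frac{1}{4 + 4 - 1}
  = \frac{1}{7} \approx 0.1429
```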

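We can now implement non-max suppression. The key steps are:

- Select the box with the highest score

- Compute its overlap with all the other boxes, and remove the boxes whose overlap with it exceeds iou_threshold

- Go back to step 1 and repeat until there are no more boxes with a lower score than the currently selected box

This removes every box that overlaps strongly with a selected box, so only the highest-scoring boxes remain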
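The loop described above can be sketched in plain Python. This is an illustrative greedy variant under simple assumptions (boxes given as (x1, y1, x2, y2) tuples, a box suppressed when its IoU with an already kept box exceeds the threshold); it is not the TensorFlow implementation used in the rest of this post, and both function names are hypothetical.

```python
def box_iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(min(a[2], b[2]) - max(a[0], b[0]), 0)
    inter_h = max(min(a[3], b[3]) - max(a[1], b[1]), 0)
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes, scores, max_boxes=10, iou_threshold=0.5):
    """Return the indices of the boxes kept by greedy non-max suppression."""
    # Visit boxes from the highest score to the lowest
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if len(keep) == max_boxes:
            break
        # Keep the box only if it does not overlap too much with any kept box
        if all(box_iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(greedy_nms(boxes, scores))  # the second box overlaps the first and is dropped
```

Here the first two boxes have an IoU of 81 / 119, about 0.68, so the lower-scoring one is suppressed, while the third box does not overlap anything and is kept.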

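The function we will implement is called yolo_non_max_suppression() and is written with TensorFlow. TensorFlow has two built-in functions that are used to implement non-max suppression (so you do not actually need your own iou() implementation):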

https://www.tensorflow.org/api_docs/python/tf/image/non_max_suppression

https://www.tensorflow.org/api_docs/python/tf/gather

def yolo_non_max_suppression(scores, boxes, classes, max_boxes=10, iou_threshold=0.5):
    """
    Apply non-max suppression (NMS) to a set of boxes.

    Arguments:
        scores - tensor of shape (None,), output of yolo_filter_boxes()
        boxes - tensor of shape (None, 4), output of yolo_filter_boxes(), already scaled to the image size (see below)
        classes - tensor of shape (None,), output of yolo_filter_boxes()
        max_boxes - integer, maximum number of predicted boxes to keep
        iou_threshold - real number, IoU threshold used by NMS.

    Returns:
        scores - tensor of shape (None,), predicted score of each kept box
        boxes - tensor of shape (None, 4), coordinates of each kept box
        classes - tensor of shape (None,), predicted class of each kept box

    Note: the "None" dimension of the outputs is at most max_boxes.
    """
    max_boxes_tensor = K.variable(max_boxes, dtype="int32")  # for tf.image.non_max_suppression()
    K.get_session().run(tf.variables_initializer([max_boxes_tensor]))  # initialize max_boxes_tensor

    # Use tf.image.non_max_suppression() to get the indices of the boxes we keep
    nms_indices = tf.image.non_max_suppression(boxes, scores, max_boxes, iou_threshold)

    # Use K.gather() to select the kept boxes
    scores = K.gather(scores, nms_indices)
    boxes = K.gather(boxes, nms_indices)
    classes = K.gather(classes, nms_indices)

    return scores, boxes, classes

with tf.Session() as test_b:
    scores = tf.random_normal([54,], mean=1, stddev=4, seed=1)
    boxes = tf.random_normal([54, 4], mean=1, stddev=4, seed=1)
    classes = tf.random_normal([54,], mean=1, stddev=4, seed=1)
    scores, boxes, classes = yolo_non_max_suppression(scores, boxes, classes)

    print("scores[2] = " + str(scores[2].eval()))
    print("boxes[2] = " + str(boxes[2].eval()))
    print("classes[2] = " + str(classes[2].eval()))
    print("scores.shape = " + str(scores.eval().shape))
    print("boxes.shape = " + str(boxes.eval().shape))
    print("classes.shape = " + str(classes.eval().shape))

    test_b.close()

scores[2] = 6.938395

boxes[2] = [-5.299932 3.1379814 4.450367 0.95942086]

classes[2] = -2.2452729

scores.shape = (10,)

boxes.shape = (10, 4)

classes.shape = (10,)

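2.4 - Filtering All the Boxes

We now implement a function that takes the output of the deep CNN (the 19x19x5x85 encoding) and filters all the boxes with the functions we have just written

The function is called yolo_eval(). It takes the output of the YOLO encoding and filters the boxes using score thresholding and NMS. There is one last implementation detail you need to know. A box can be represented in several ways, for example by its corners or by its midpoint and height/width. YOLO converts between these formats at different times, using the following functions (which are provided):

- boxes = yolo_boxes_to_corners(box_xy, box_wh)

This converts the YOLO box coordinates (x, y, w, h) into corner coordinates (x1, y1, x2, y2) to match the input expected by yolo_filter_boxes()

- boxes = yolo_utils.scale_boxes(boxes, image_shape)

YOLO's network was trained to run on 608x608 images. If you test it on images of a different size (for example, the car detection dataset has 720x1280 images), this step rescales the boxes so that they can be drawn on the original 720x1280 image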
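A minimal sketch of what these two conversions do, under stated assumptions: box coordinates are taken to be normalized to the range [0, 1], and corners are returned in the (x1, y1, x2, y2) order used in the text. The helper names are hypothetical, and the provided library functions may order or scale the coordinates differently.

```python
def center_to_corners(x, y, w, h):
    """Convert a box from (center x, center y, width, height) to (x1, y1, x2, y2)."""
    return (x - w / 2, y - h / 2, x + w / 2, y + h / 2)


def scale_to_image(box, image_height, image_width):
    """Rescale a normalized (x1, y1, x2, y2) box to pixel coordinates."""
    x1, y1, x2, y2 = box
    return (x1 * image_width, y1 * image_height,
            x2 * image_width, y2 * image_height)


corners = center_to_corners(0.5, 0.5, 0.25, 0.5)
pixels = scale_to_image(corners, image_height=720, image_width=1280)
print(corners)  # (0.375, 0.25, 0.625, 0.75)
print(pixels)   # (480.0, 180.0, 800.0, 540.0)
```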

def yolo_eval(yolo_outputs, image_shape=(720., 1280.),
              max_boxes=10, score_threshold=0.6, iou_threshold=0.5):
    """
    Convert the output of the YOLO encoding (a lot of boxes) into predicted boxes with their scores, coordinates and classes.

    Arguments:
        yolo_outputs - output of the encoding model (for images of shape (608, 608, 3)), containing 4 tensors:
            box_confidence : tensor of shape (None, 19, 19, 5, 1)
            box_xy : tensor of shape (None, 19, 19, 5, 2)
            box_wh : tensor of shape (None, 19, 19, 5, 2)
            box_class_probs: tensor of shape (None, 19, 19, 5, 80)
        image_shape - tensor of shape (2,) containing the dimensions of the original image as floats, (720., 1280.) by default
        max_boxes - integer, maximum number of predicted boxes to keep
        score_threshold - real number, score threshold.
        iou_threshold - real number, IoU threshold used by NMS.

    Returns:
        scores - tensor of shape (None,), predicted score of each box
        boxes - tensor of shape (None, 4), coordinates of each predicted box
        classes - tensor of shape (None,), predicted class of each box
    """
    # Retrieve the outputs of the YOLO model
    box_confidence, box_xy, box_wh, box_class_probs = yolo_outputs

    # Convert midpoint/size boxes to corner coordinates
    boxes = yolo_boxes_to_corners(box_xy, box_wh)

    # Filter by score threshold
    scores, boxes, classes = yolo_filter_boxes(box_confidence, boxes, box_class_probs, score_threshold)

    # Scale the boxes back to the original image size
    boxes = yolo_utils.scale_boxes(boxes, image_shape)

    # Apply non-max suppression
    scores, boxes, classes = yolo_non_max_suppression(scores, boxes, classes, max_boxes, iou_threshold)

    return scores, boxes, classes

with tf.Session() as test_c:
    yolo_outputs = (tf.random_normal([19, 19, 5, 1], mean=1, stddev=4, seed=1),
                    tf.random_normal([19, 19, 5, 2], mean=1, stddev=4, seed=1),
                    tf.random_normal([19, 19, 5, 2], mean=1, stddev=4, seed=1),
                    tf.random_normal([19, 19, 5, 80], mean=1, stddev=4, seed=1))
    scores, boxes, classes = yolo_eval(yolo_outputs)

    print("scores[2] = " + str(scores[2].eval()))
    print("boxes[2] = " + str(boxes[2].eval()))
    print("classes[2] = " + str(classes[2].eval()))
    print("scores.shape = " + str(scores.eval().shape))
    print("boxes.shape = " + str(boxes.eval().shape))
    print("classes.shape = " + str(classes.eval().shape))

    test_c.close()

scores[2] = 138.79124

boxes[2] = [1292.3297 -278.52167 3876.9893 -835.56494]

classes[2] = 54

scores.shape = (10,)

boxes.shape = (10, 4)

classes.shape = (10,)

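3 - Testing a Pre-trained YOLO Model

In this part we take a pre-trained model and test it on the car detection dataset. As usual, we start by creating a session to run the graph: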

sess = K.get_session()

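3.1 - Defining Classes, Anchors and Image Shape

Recall that we are trying to detect 80 classes using 5 anchor boxes. The information about the 80 classes and the 5 anchor boxes is stored in two files, "coco_classes.txt" and "yolo_anchors.txt". We load these values into the model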

class_names = yolo_utils.read_classes("model_data/coco_classes.txt")

anchors = yolo_utils.read_anchors("model_data/yolo_anchors.txt")

image_shape = (720.,1280.)

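3.2 - Loading a Pre-trained Model

Training a YOLO model takes a very long time and requires a fairly large dataset of labelled bounding boxes covering a wide range of target classes. We will instead load an existing pre-trained Keras YOLO model stored in "yolov2.h5". (These weights come from the official YOLO website and were converted with a function written by Allan Zelener. Technically, these are the parameters of the "YOLOv2" model.)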

yolo_model = load_model("model_data/yolov2.h5")

WARNING:tensorflow:From C:\Users\20919\anaconda3\lib\site-packages\tensorflow\python\ops\init_ops.py:93: calling GlorotUniform.__init__ (from tensorflow.python.ops.init_ops) with dtype is deprecated and will be removed in a future version.

Instructions for updating:

Call initializer instance with the dtype argument instead of passing it to the constructor

WARNING:tensorflow:From C:\Users\20919\anaconda3\lib\site-packages\tensorflow\python\ops\init_ops.py:93: calling Zeros.__init__ (from tensorflow.python.ops.init_ops) with dtype is deprecated and will be removed in a future version.

Instructions for updating:

Call initializer instance with the dtype argument instead of passing it to the constructor

WARNING:tensorflow:From C:\Users\20919\anaconda3\lib\site-packages\tensorflow\python\ops\init_ops.py:93: calling Ones.__init__ (from tensorflow.python.ops.init_ops) with dtype is deprecated and will be removed in a future version.

Instructions for updating:

Call initializer instance with the dtype argument instead of passing it to the constructor

WARNING:tensorflow:From C:\Users\20919\anaconda3\lib\site-packages\keras\layers\normalization\batch_normalization.py:514: _colocate_with (from tensorflow.python.framework.ops) is deprecated and will be removed in a future version.

Instructions for updating:

Colocations handled automatically by placer.

WARNING:tensorflow:No training configuration found in the save file, so the model was *not* compiled. Compile it manually.

yolo_model.summary()

Model: "model"
__________________________________________________________________________________________________
Layer (type)                                 Output Shape           Param #   Connected to
==================================================================================================
input_1 (InputLayer)                         [(None, 608, 608, 3)]  0         []
conv2d (Conv2D)                              (None, 608, 608, 32)   864       ['input_1[0][0]']
batch_normalization (BatchNormalization)     (None, 608, 608, 32)   128       ['conv2d[0][0]']
leaky_re_lu (LeakyReLU)                      (None, 608, 608, 32)   0         ['batch_normalization[0][0]']
max_pooling2d (MaxPooling2D)                 (None, 304, 304, 32)   0         ['leaky_re_lu[0][0]']
conv2d_1 (Conv2D)                            (None, 304, 304, 64)   18432     ['max_pooling2d[0][0]']
batch_normalization_1 (BatchNormalization)   (None, 304, 304, 64)   256       ['conv2d_1[0][0]']
leaky_re_lu_1 (LeakyReLU)                    (None, 304, 304, 64)   0         ['batch_normalization_1[0][0]']
max_pooling2d_1 (MaxPooling2D)               (None, 152, 152, 64)   0         ['leaky_re_lu_1[0][0]']
conv2d_2 (Conv2D)                            (None, 152, 152, 128)  73728     ['max_pooling2d_1[0][0]']
batch_normalization_2 (BatchNormalization)   (None, 152, 152, 128)  512       ['conv2d_2[0][0]']
leaky_re_lu_2 (LeakyReLU)                    (None, 152, 152, 128)  0         ['batch_normalization_2[0][0]']
conv2d_3 (Conv2D)                            (None, 152, 152, 64)   8192      ['leaky_re_lu_2[0][0]']
batch_normalization_3 (BatchNormalization)   (None, 152, 152, 64)   256       ['conv2d_3[0][0]']
leaky_re_lu_3 (LeakyReLU)                    (None, 152, 152, 64)   0         ['batch_normalization_3[0][0]']
conv2d_4 (Conv2D)                            (None, 152, 152, 128)  73728     ['leaky_re_lu_3[0][0]']
batch_normalization_4 (BatchNormalization)   (None, 152, 152, 128)  512       ['conv2d_4[0][0]']
leaky_re_lu_4 (LeakyReLU)                    (None, 152, 152, 128)  0         ['batch_normalization_4[0][0]']
max_pooling2d_2 (MaxPooling2D)               (None, 76, 76, 128)    0         ['leaky_re_lu_4[0][0]']
conv2d_5 (Conv2D)                            (None, 76, 76, 256)    294912    ['max_pooling2d_2[0][0]']
batch_normalization_5 (BatchNormalization)   (None, 76, 76, 256)    1024      ['conv2d_5[0][0]']
leaky_re_lu_5 (LeakyReLU)                    (None, 76, 76, 256)    0         ['batch_normalization_5[0][0]']
conv2d_6 (Conv2D)                            (None, 76, 76, 128)    32768     ['leaky_re_lu_5[0][0]']
batch_normalization_6 (BatchNormalization)   (None, 76, 76, 128)    512       ['conv2d_6[0][0]']
leaky_re_lu_6 (LeakyReLU)                    (None, 76, 76, 128)    0         ['batch_normalization_6[0][0]']
conv2d_7 (Conv2D)                            (None, 76, 76, 256)    294912    ['leaky_re_lu_6[0][0]']
batch_normalization_7 (BatchNormalization)   (None, 76, 76, 256)    1024      ['conv2d_7[0][0]']
leaky_re_lu_7 (LeakyReLU)                    (None, 76, 76, 256)    0         ['batch_normalization_7[0][0]']
max_pooling2d_3 (MaxPooling2D)               (None, 38, 38, 256)    0         ['leaky_re_lu_7[0][0]']
conv2d_8 (Conv2D)                            (None, 38, 38, 512)    1179648   ['max_pooling2d_3[0][0]']
batch_normalization_8 (BatchNormalization)   (None, 38, 38, 512)    2048      ['conv2d_8[0][0]']
leaky_re_lu_8 (LeakyReLU)                    (None, 38, 38, 512)    0         ['batch_normalization_8[0][0]']
conv2d_9 (Conv2D)                            (None, 38, 38, 256)    131072    ['leaky_re_lu_8[0][0]']
batch_normalization_9 (BatchNormalization)   (None, 38, 38, 256)    1024      ['conv2d_9[0][0]']
leaky_re_lu_9 (LeakyReLU)                    (None, 38, 38, 256)    0         ['batch_normalization_9[0][0]']
conv2d_10 (Conv2D)                           (None, 38, 38, 512)    1179648   ['leaky_re_lu_9[0][0]']
batch_normalization_10 (BatchNormalization)  (None, 38, 38, 512)    2048      ['conv2d_10[0][0]']
leaky_re_lu_10 (LeakyReLU)                   (None, 38, 38, 512)    0         ['batch_normalization_10[0][0]']
conv2d_11 (Conv2D)                           (None, 38, 38, 256)    131072    ['leaky_re_lu_10[0][0]']
batch_normalization_11 (BatchNormalization)  (None, 38, 38, 256)    1024      ['conv2d_11[0][0]']
leaky_re_lu_11 (LeakyReLU)                   (None, 38, 38, 256)    0         ['batch_normalization_11[0][0]']
conv2d_12 (Conv2D)                           (None, 38, 38, 512)    1179648   ['leaky_re_lu_11[0][0]']
batch_normalization_12 (BatchNormalization)  (None, 38, 38, 512)    2048      ['conv2d_12[0][0]']
leaky_re_lu_12 (LeakyReLU)                   (None, 38, 38, 512)    0         ['batch_normalization_12[0][0]']
max_pooling2d_4 (MaxPooling2D)               (None, 19, 19, 512)    0         ['leaky_re_lu_12[0][0]']
conv2d_13 (Conv2D)                           (None, 19, 19, 1024)   4718592   ['max_pooling2d_4[0][0]']
batch_normalization_13 (BatchNormalization)  (None, 19, 19, 1024)   4096      ['conv2d_13[0][0]']
leaky_re_lu_13 (LeakyReLU)                   (None, 19, 19, 1024)   0         ['batch_normalization_13[0][0]']
conv2d_14 (Conv2D)                           (None, 19, 19, 512)    524288    ['leaky_re_lu_13[0][0]']
batch_normalization_14 (BatchNormalization)  (None, 19, 19, 512)    2048      ['conv2d_14[0][0]']
leaky_re_lu_14 (LeakyReLU)                   (None, 19, 19, 512)    0         ['batch_normalization_14[0][0]']
conv2d_15 (Conv2D)                           (None, 19, 19, 1024)   4718592   ['leaky_re_lu_14[0][0]']
batch_normalization_15 (BatchNormalization)  (None, 19, 19, 1024)   4096      ['conv2d_15[0][0]']
leaky_re_lu_15 (LeakyReLU)                   (None, 19, 19, 1024)   0         ['batch_normalization_15[0][0]']
conv2d_16 (Conv2D)                           (None, 19, 19, 512)    524288    ['leaky_re_lu_15[0][0]']
batch_normalization_16 (BatchNormalization)  (None, 19, 19, 512)    2048      ['conv2d_16[0][0]']
leaky_re_lu_16 (LeakyReLU)                   (None, 19, 19, 512)    0         ['batch_normalization_16[0][0]']
conv2d_17 (Conv2D)                           (None, 19, 19, 1024)   4718592   ['leaky_re_lu_16[0][0]']
batch_normalization_17 (BatchNormalization)  (None, 19, 19, 1024)   4096      ['conv2d_17[0][0]']
leaky_re_lu_17 (LeakyReLU)                   (None, 19, 19, 1024)   0         ['batch_normalization_17[0][0]']
conv2d_18 (Conv2D)                           (None, 19, 19, 1024)   9437184   ['leaky_re_lu_17[0][0]']
batch_normalization_18 (BatchNormalization)  (None, 19, 19, 1024)   4096      ['conv2d_18[0][0]']
conv2d_20 (Conv2D)                           (None, 38, 38, 64)     32768     ['leaky_re_lu_12[0][0]']
leaky_re_lu_18 (LeakyReLU)                   (None, 19, 19, 1024)   0         ['batch_normalization_18[0][0]']
batch_normalization_20 (BatchNormalization)  (None, 38, 38, 64)     256       ['conv2d_20[0][0]']
conv2d_19 (Conv2D)                           (None, 19, 19, 1024)   9437184   ['leaky_re_lu_18[0][0]']
leaky_re_lu_20 (LeakyReLU)                   (None, 38, 38, 64)     0         ['batch_normalization_20[0][0]']
batch_normalization_19 (BatchNormalization)  (None, 19, 19, 1024)   4096      ['conv2d_19[0][0]']
space_to_depth_x2 (Lambda)                   (None, 19, 19, 256)    0         ['leaky_re_lu_20[0][0]']
leaky_re_lu_19 (LeakyReLU)                   (None, 19, 19, 1024)   0         ['batch_normalization_19[0][0]']
concatenate (Concatenate)                    (None, 19, 19, 1280)   0         ['space_to_depth_x2[0][0]', 'leaky_re_lu_19[0][0]']
conv2d_21 (Conv2D)                           (None, 19, 19, 1024)   11796480  ['concatenate[0][0]']
batch_normalization_21 (BatchNormalization)  (None, 19, 19, 1024)   4096      ['conv2d_21[0][0]']
leaky_re_lu_21 (LeakyReLU)                   (None, 19, 19, 1024)   0         ['batch_normalization_21[0][0]']
conv2d_22 (Conv2D)                           (None, 19, 19, 425)    435625    ['leaky_re_lu_21[0][0]']
==================================================================================================
Total params: 50,983,561
Trainable params: 50,962,889
Non-trainable params: 20,672
__________________________________________________________________________________________________

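**Note:** on some machines you may see warning messages from Keras. Don't worry, they are harmless.

**Hint:** as shown in Figure 2, the model converts a preprocessed batch of input images (shape (m, 608, 608, 3)) into a tensor of shape (m, 19, 19, 5, 85), which the last layer of the summary stores flattened as (m, 19, 19, 425)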
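The numbers in the last row of the summary can be cross-checked with a little arithmetic. The sketch below assumes that the final layer is a 1x1 convolution with a bias term over 1024 input channels; the summary does not state the kernel size, but the parameter count is consistent with that assumption.

```python
GRID, ANCHORS, CLASSES = 19, 5, 80

values_per_box = 5 + CLASSES                # p_c, 4 box values, 80 class probabilities
channels = ANCHORS * values_per_box         # channels of the last layer
boxes_per_image = GRID * GRID * ANCHORS     # boxes predicted for one image

in_channels = 1024                          # channels entering the last layer
params = in_channels * channels + channels  # 1x1 kernel weights plus biases (assumed)

print(values_per_box, channels, boxes_per_image, params)
```

The values 425 and 435625 match the output shape and parameter count of the last convolution in the summary.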

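3.3 - Converting the Model Output to Bounding Boxes

The output of yolo_model is a tensor of shape (m, 19, 19, 425), the flattened form of the (m, 19, 19, 5, 85) encoding, and it still needs to be processed and converted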

yolo_outputs = yolo_head(yolo_model.output, anchors, len(class_names))

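yolo_outputs has now been added to the graph, and its 4 tensors are ready to be used as input to the yolo_eval function

3.4 - Filtering Boxes

yolo_outputs gives us all the boxes predicted by yolo_model in the correct format, so we are ready to filter them and keep only the best boxes. Let us call yolo_eval(), which we implemented earlier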

scores, boxes, classes = yolo_eval(yolo_outputs, image_shape)

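3.5 - Running the Graph on a Real Image

We created the session sess earlier. Here is a recap:

- yolo_model.input is the input of yolo_model, and yolo_model.output is its output

- yolo_model.output is processed by yolo_head, which returns yolo_outputs

- yolo_outputs goes through the filtering function yolo_eval, which outputs the predictions: scores, boxes, classes

We now implement predict(), which runs the graph on an image. It needs to run the TensorFlow session so that scores, boxes and classes are computed on the graph. The following line preprocesses the image:

`image, image_data = yolo_utils.preprocess_image("images/" + image_file, model_image_size = (608, 608))`

- image: a Python (PIL) representation of the image, used for drawing boxes. You do not need to work with it directly here

- image_data: a numpy array representing the image. This is the input to the CNN

**Note:** when a model uses BatchNorm (as is the case in YOLO), you need to pass an additional placeholder in the feed_dict: {K.learning_phase(): 0}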

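def predict(sess, image_file, is_show_info=True, is_plot=True):
    """
    Run the graph stored in sess to predict the boxes for image_file, then print and plot the predictions.

    Arguments:
        sess - the TensorFlow/Keras session containing the YOLO graph.
        image_file - name of an image stored in the "images" folder.
        is_show_info - whether to print the number of boxes found.
        is_plot - whether to display the image with the boxes drawn on it.

    Returns:
        out_scores - numpy array of shape (None,), scores of the predicted boxes.
        out_boxes - numpy array of shape (None, 4), coordinates of the predicted boxes.
        out_classes - numpy array of shape (None,), class indices of the predicted boxes.
    """
    # Preprocess the image
    image, image_data = yolo_utils.preprocess_image("images/" + image_file, model_image_size=(608, 608))

    # Run the session with the correct placeholders in feed_dict
    out_scores, out_boxes, out_classes = sess.run([scores, boxes, classes], feed_dict={yolo_model.input: image_data, K.learning_phase(): 0})

    # Print the prediction info (message reads: "found N boxes in <image_file>")
    if is_show_info:
        print("在" + str(image_file) + "中找到了" + str(len(out_boxes)) + "个锚框。")

    # Generate the colors used for drawing the bounding boxes
    colors = yolo_utils.generate_colors(class_names)

    # Draw the bounding boxes on the image
    yolo_utils.draw_boxes(image, out_scores, out_boxes, out_classes, class_names, colors)

    # Save the image with the bounding boxes drawn on it
    image.save(os.path.join("out", image_file), quality=100)

    # Display the image with the bounding boxes drawn on it
    if is_plot:
        output_image = imageio.imread(os.path.join("out", image_file))
        plt.imshow(output_image)

    return out_scores, out_boxes, out_classes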

out_scores, out_boxes, out_classes = predict(sess, "test.jpg")

在test.jpg中找到了7个锚框。

car 0.60 (925, 285) (1045, 374)

car 0.66 (706, 279) (786, 350)

bus 0.67 (5, 266) (220, 407)

car 0.70 (947, 324) (1280, 705)

car 0.74 (159, 303) (346, 440)

car 0.80 (761, 282) (942, 412)

car 0.89 (367, 300) (745, 648)

3.6 - Processing the Images in Batch

The images folder contains images named "0001.jpg" through "0120.jpg". We now run the detector on all of them
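The zero-padded file names can be generated in a single expression. This is a small sketch using Python's format specification, equivalent to calling `str(i).zfill(4)`; the variable name `filenames` is introduced here only for illustration.

```python
# "04d" pads the integer with leading zeros to a total width of 4 characters
filenames = [f"{i:04d}.jpg" for i in range(1, 121)]

print(filenames[0], filenames[-1], len(filenames))
```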

for i in range(1, 121):
    # zfill takes the total width of the result, so 4 gives "0001" ... "0120"
    filename = str(i).zfill(4) + ".jpg"
    print("当前文件:" + str(filename))  # message reads: "current file: <filename>"

    # Run the prediction without printing the summary line and without plotting
    out_scores, out_boxes, out_classes = predict(sess, filename, is_show_info=False, is_plot=False)

print("绘制完成!")  # message reads: "drawing finished!"

当前文件:0001.jpg

当前文件:0002.jpg

当前文件:0003.jpg

car 0.69 (347, 289) (445, 321)

car 0.70 (230, 307) (317, 354)

car 0.73 (671, 284) (770, 315)

当前文件:0004.jpg

car 0.63 (400, 285) (515, 327)

car 0.66 (95, 297) (227, 342)

car 0.68 (1, 321) (121, 410)

car 0.72 (539, 277) (658, 318)

当前文件:0005.jpg

car 0.64 (207, 297) (338, 340)

car 0.65 (741, 266) (918, 313)

car 0.67 (15, 313) (128, 362)

car 0.72 (883, 260) (1026, 303)

car 0.75 (517, 282) (689, 336)

当前文件:0006.jpg

car 0.72 (470, 286) (686, 343)

car 0.72 (72, 320) (220, 367)

当前文件:0007.jpg

car 0.67 (1086, 243) (1225, 312)

car 0.78 (468, 292) (685, 353)

当前文件:0008.jpg

truck 0.63 (852, 252) (1083, 330)

car 0.78 (1082, 275) (1275, 340)

当前文件:0009.jpg

当前文件:0010.jpg

truck 0.66 (736, 266) (1054, 368)

当前文件:0011.jpg

truck 0.73 (727, 269) (1054, 376)

car 0.85 (6, 336) (212, 457)

当前文件:0012.jpg

car 0.77 (792, 279) (1163, 408)

car 0.87 (539, 330) (998, 459)

当前文件:0013.jpg

truck 0.65 (718, 276) (1053, 385)

当前文件:0014.jpg

truck 0.64 (715, 274) (1056, 385)

当前文件:0015.jpg

truck 0.72 (713, 275) (1086, 386)

当前文件:0016.jpg

truck 0.63 (708, 276) (1106, 388)

当前文件:0017.jpg

truck 0.64 (666, 274) (1063, 392)

car 0.79 (1103, 300) (1271, 356)

car 0.82 (1, 358) (183, 427)

当前文件:0018.jpg

car 0.76 (71, 362) (242, 419)

car 0.77 (340, 339) (553, 401)

当前文件:0019.jpg

truck 0.64 (685, 275) (1050, 396)

car 0.85 (16, 377) (450, 559)

当前文件:0020.jpg

truck 0.75 (538, 286) (926, 413)

当前文件:0021.jpg

car 0.62 (691, 292) (914, 403)

truck 0.72 (88, 317) (493, 450)

当前文件:0022.jpg

car 0.65 (894, 302) (980, 348)

car 0.79 (751, 318) (879, 370)

当前文件:0023.jpg

当前文件:0024.jpg

当前文件:0025.jpg

car 0.65 (664, 296) (705, 321)

当前文件:0026.jpg

当前文件:0027.jpg

当前文件:0028.jpg

car 0.72 (711, 303) (792, 368)

当前文件:0029.jpg

truck 0.65 (698, 282) (781, 336)

当前文件:0030.jpg

当前文件:0031.jpg

car 0.67 (187, 316) (313, 409)

当前文件:0032.jpg

当前文件:0033.jpg

car 0.62 (899, 279) (964, 306)

当前文件:0034.jpg

traffic light 0.61 (200, 107) (228, 170)

car 0.70 (179, 326) (312, 424)

当前文件:0035.jpg

car 0.62 (1084, 278) (1194, 319)

当前文件:0036.jpg

car 0.65 (211, 313) (349, 402)

car 0.73 (1014, 274) (1201, 338)

当前文件:0037.jpg

car 0.63 (326, 302) (419, 365)

当前文件:0038.jpg

当前文件:0039.jpg

car 0.67 (312, 301) (398, 364)

当前文件:0040.jpg

car 0.61 (330, 299) (415, 363)

当前文件:0041.jpg

car 0.65 (341, 294) (415, 367)

当前文件:0042.jpg

当前文件:0043.jpg

car 0.76 (118, 312) (237, 384)

当前文件:0044.jpg

car 0.61 (551, 283) (624, 329)

当前文件:0045.jpg

traffic light 0.70 (383, 40) (416, 101)

traffic light 0.73 (569, 33) (604, 102)

当前文件:0046.jpg

当前文件:0047.jpg

当前文件:0048.jpg

当前文件:0049.jpg

当前文件:0050.jpg

当前文件:0051.jpg

car 0.68 (151, 323) (247, 379)

traffic light 0.72 (500, 79) (532, 138)

当前文件:0052.jpg

当前文件:0053.jpg

当前文件:0054.jpg

car 0.63 (726, 293) (800, 353)

car 0.72 (786, 292) (941, 410)

当前文件:0055.jpg

car 0.73 (758, 277) (904, 389)

当前文件:0056.jpg

当前文件:0057.jpg

当前文件:0058.jpg

当前文件:0059.jpg

car 0.77 (0, 307) (257, 464)

car 0.82 (570, 277) (864, 417)

car 0.86 (86, 319) (527, 493)

当前文件:0060.jpg

当前文件:0061.jpg

当前文件:0062.jpg

当前文件:0063.jpg

当前文件:0064.jpg

当前文件:0065.jpg

car 0.69 (380, 270) (462, 324)

当前文件:0066.jpg

traffic light 0.62 (532, 68) (564, 113)

car 0.77 (372, 281) (454, 333)

当前文件:0067.jpg

traffic light 0.65 (535, 60) (570, 105)

car 0.70 (369, 280) (454, 345)

当前文件:0068.jpg

traffic light 0.64 (378, 87) (405, 146)

traffic light 0.64 (536, 60) (572, 108)

car 0.66 (367, 288) (450, 348)

当前文件:0069.jpg

traffic light 0.60 (537, 62) (577, 109)

car 0.62 (367, 289) (450, 346)

traffic light 0.63 (379, 87) (407, 147)

当前文件:0070.jpg

car 0.65 (369, 291) (452, 354)

当前文件:0071.jpg

truck 0.70 (87, 287) (569, 450)

当前文件:0072.jpg

traffic light 0.61 (535, 65) (572, 111)

traffic light 0.62 (378, 91) (406, 148)

car 0.62 (291, 301) (357, 351)

truck 0.64 (1049, 263) (1280, 399)

car 0.64 (0, 331) (84, 449)

car 0.66 (368, 292) (450, 357)

当前文件:0073.jpg

car 0.74 (145, 313) (248, 374)

car 0.85 (503, 299) (858, 421)

当前文件:0074.jpg

traffic light 0.60 (380, 91) (407, 147)

car 0.61 (365, 294) (450, 346)

car 0.87 (31, 319) (424, 485)

当前文件:0075.jpg

car 0.75 (151, 315) (246, 372)

当前文件:0076.jpg

traffic light 0.62 (381, 93) (407, 146)

car 0.66 (246, 298) (336, 366)

car 0.70 (369, 292) (451, 357)

car 0.75 (150, 313) (245, 375)

当前文件:0077.jpg

traffic light 0.60 (536, 65) (571, 112)

traffic light 0.60 (380, 92) (407, 147)

car 0.70 (243, 296) (345, 368)

car 0.71 (368, 292) (450, 356)

car 0.75 (150, 313) (245, 374)

当前文件:0078.jpg

traffic light 0.62 (380, 92) (407, 146)

car 0.65 (367, 293) (453, 353)

car 0.70 (242, 295) (339, 367)

car 0.75 (151, 313) (245, 373)

当前文件:0079.jpg

traffic light 0.61 (535, 65) (571, 111)

traffic light 0.61 (378, 92) (406, 148)

car 0.72 (235, 298) (327, 367)

car 0.76 (151, 314) (243, 373)

当前文件:0080.jpg

traffic light 0.61 (379, 92) (407, 147)

car 0.64 (5, 309) (188, 416)

car 0.71 (237, 298) (324, 366)

car 0.79 (714, 282) (916, 362)

当前文件:0081.jpg

car 0.68 (187, 309) (301, 381)

car 0.76 (612, 293) (722, 353)

car 0.79 (25, 328) (141, 398)

当前文件:0082.jpg

traffic light 0.61 (380, 92) (408, 147)

car 0.62 (585, 287) (660, 335)

car 0.70 (410, 282) (609, 388)

当前文件:0083.jpg

traffic light 0.63 (380, 91) (407, 148)

car 0.64 (0, 328) (82, 447)

car 0.72 (609, 280) (888, 397)

当前文件:0084.jpg

traffic light 0.62 (378, 91) (406, 150)

car 0.70 (990, 272) (1270, 381)

当前文件:0085.jpg

traffic light 0.61 (535, 64) (571, 114)

traffic light 0.61 (378, 91) (406, 150)

当前文件:0086.jpg

traffic light 0.60 (378, 92) (407, 150)

traffic light 0.61 (536, 65) (572, 113)

当前文件:0087.jpg

truck 0.60 (0, 315) (93, 404)

traffic light 0.60 (536, 66) (572, 112)

traffic light 0.61 (382, 92) (410, 149)

当前文件:0088.jpg

traffic light 0.61 (377, 92) (406, 150)

当前文件:0089.jpg

当前文件:0090.jpg

当前文件:0091.jpg

traffic light 0.71 (300, 76) (333, 159)

当前文件:0092.jpg

traffic light 0.62 (232, 25) (266, 95)

当前文件:0093.jpg

car 0.60 (361, 313) (414, 341)

当前文件:0094.jpg

当前文件:0095.jpg

当前文件:0096.jpg

car 0.68 (202, 319) (301, 369)

当前文件:0097.jpg

car 0.69 (74, 330) (188, 395)

car 0.69 (235, 315) (336, 375)

当前文件:0098.jpg

car 0.60 (747, 289) (811, 356)

car 0.63 (836, 291) (968, 405)

car 0.81 (898, 315) (1113, 452)

当前文件:0099.jpg

car 0.75 (1046, 352) (1279, 608)

car 0.78 (859, 316) (972, 427)

car 0.86 (921, 336) (1120, 476)

当前文件:0100.jpg

当前文件:0101.jpg

当前文件:0102.jpg

bus 0.79 (180, 259) (304, 362)

当前文件:0103.jpg

bus 0.74 (0, 286) (203, 420)

当前文件:0104.jpg

traffic light 0.62 (241, 24) (273, 97)

truck 0.82 (0, 223) (291, 421)

当前文件:0105.jpg

当前文件:0106.jpg

car 0.62 (1200, 287) (1276, 432)

当前文件:0107.jpg

car 0.60 (376, 309) (421, 335)

当前文件:0108.jpg

当前文件:0109.jpg

当前文件:0110.jpg

当前文件:0111.jpg

car 0.61 (97, 322) (180, 366)

fire hydrant 0.63 (1177, 374) (1237, 483)

当前文件:0112.jpg

当前文件:0113.jpg

当前文件:0114.jpg

当前文件:0115.jpg

当前文件:0116.jpg

traffic light 0.63 (522, 76) (543, 113)

car 0.80 (5, 271) (241, 672)

当前文件:0117.jpg

当前文件:0118.jpg

当前文件:0119.jpg

traffic light 0.61 (1056, 0) (1138, 131)

当前文件:0120.jpg

绘制完成!