365-Day Deep Learning Training Camp, Week 5: Sneaker Brand Recognition

Load packages

from tensorflow import keras

from tensorflow.keras import layers,models

import os, PIL, pathlib

import matplotlib.pyplot as plt

import tensorflow as tf

Import the data

cd 'week 5'/

/home/test/Modelwhale/deep learning/week 5

%ls

46-data/

data_dir = "./46-data/"
data_dir = pathlib.Path(data_dir)

# Images are stored as 46-data/<split>/<class>/*.jpg
image_count = len(list(data_dir.glob('*/*/*.jpg')))
print("Total number of images:", image_count)

Total number of images: 578
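The pattern '*/*/*.jpg' only matches files exactly two directory levels below data_dir. That implies a layout of 46-data/<split>/<class>/*.jpg: train/ and test/, each containing adidas/ and nike/. The minimal sketch below assumes that layout and breaks the total down per split and class. count_images is a hypothetical helper, and the block builds a small throwaway directory tree so it runs on its own.

```python
import pathlib
import tempfile
from collections import Counter

def count_images(data_dir: pathlib.Path) -> Counter:
    """Count .jpg files per (split, class), assuming data_dir/<split>/<class>/*.jpg."""
    counts = Counter()
    for path in data_dir.glob('*/*/*.jpg'):
        split, cls = path.parent.parent.name, path.parent.name
        counts[(split, cls)] += 1
    return counts

# Build a tiny, hypothetical tree that mirrors the 46-data layout.
with tempfile.TemporaryDirectory() as tmp:
    root = pathlib.Path(tmp)
    layout = {('train', 'adidas'): 3, ('train', 'nike'): 2, ('test', 'nike'): 1}
    for (split, cls), n in layout.items():
        folder = root / split / cls
        folder.mkdir(parents=True)
        for i in range(n):
            (folder / f'{i}.jpg').touch()
    counts = count_images(root)

print(sorted(counts.items()))
print('total:', sum(counts.values()))
```

On the real data, count_images(data_dir) should produce per-folder counts that add up to 578.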

# Display one sample image from the nike training folder
import PIL.Image
nike_images = list(data_dir.glob('train/nike/*.jpg'))
PIL.Image.open(str(nike_images[1]))

Load the dataset images

Use keras.preprocessing to load these images from disk.

Define some parameters for loading the images: batch size, image height, and image width.

batch_size = 32

img_height = 224

img_width = 224

The data has already been split into train/ and test/ folders. Load the training set:

"""
For a detailed introduction to image_dataset_from_directory(), see: https://mtyjkh.blog.csdn.net/article/details/117018789
"""

train_ds = tf.keras.preprocessing.image_dataset_from_directory(

"./46-data/train/",

seed=123,

image_size=(img_height, img_width),

batch_size=batch_size)

Found 502 files belonging to 2 classes.

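(Optional) Split the training folder 80/20 with validation_split

# Note: subset="validation" returns the 20% validation split (100 images here).
# Use subset="training" with the same seed to get the other 80%.
# Assigning to a separate name keeps the full train_ds above intact. The training log
# below (16 steps of 32 images per epoch) shows that all 502 training images were used.
val_split_ds = tf.keras.preprocessing.image_dataset_from_directory(
    "./46-data/train/",
    validation_split=0.2,
    subset="validation",  # "training" -> 80% of the images, "validation" -> 20%
    seed=123,
    image_size=(img_height, img_width),
    batch_size=batch_size)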

Found 502 files belonging to 2 classes.

Using 100 files for validation.
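validation_split and subset work together. The file list is shuffled with seed, and a fixed fraction of it is held out for validation. The sketch below illustrates the arithmetic: int(0.2 * 502) = 100 files go to validation, leaving 402 for training, which agrees with the "Using 100 files for validation" line above. split_files is a hypothetical helper, and it uses Python's random module rather than Keras's internal RNG, so the exact files chosen will differ. Passing the same seed for both subsets is what keeps them from overlapping.

```python
import random

def split_files(files, validation_split, subset, seed):
    """Illustrative seeded split: shuffle, then hold out the last
    int(validation_split * n) files for validation."""
    files = list(files)
    random.Random(seed).shuffle(files)
    num_val = int(validation_split * len(files))
    if subset == 'training':
        return files[:-num_val] if num_val else files
    if subset == 'validation':
        return files[-num_val:] if num_val else []
    raise ValueError("subset must be 'training' or 'validation'")

files = [f'img_{i}.jpg' for i in range(502)]  # 502 training files, as in the output above
train_part = split_files(files, 0.2, 'training', seed=123)
val_part = split_files(files, 0.2, 'validation', seed=123)
print(len(train_part), len(val_part))
```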

Validation data (the separate test/ folder is used as the validation set)

val_ds = tf.keras.preprocessing.image_dataset_from_directory(
    "./46-data/test/",
    seed=123,
    image_size=(img_height, img_width),
    batch_size=batch_size)

Found 76 files belonging to 2 classes.

Print the shoe class names in the dataset

# class_names holds the dataset labels; they correspond to the subdirectory names in alphabetical order.

class_names = train_ds.class_names

print(class_names)

['adidas', 'nike']
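The integer labels come from the alphabetical order of the class folder names, so adidas is label 0 and nike is label 1. Below is a small sketch of that mapping. infer_class_names is a hypothetical helper, not a Keras function.

```python
def infer_class_names(subdirs):
    """Map class folder names to integer labels in alphabetical order."""
    class_names = sorted(subdirs)
    class_to_index = {name: i for i, name in enumerate(class_names)}
    return class_names, class_to_index

names, mapping = infer_class_names(['nike', 'adidas'])
print(names)    # ['adidas', 'nike']
print(mapping)  # {'adidas': 0, 'nike': 1}
```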

Visualize the data

Show 20 images from the training set:

plt.figure(figsize=(20, 5))
for images, labels in train_ds.take(1):
    for i in range(20):
        ax = plt.subplot(2, 10, i + 1)
        plt.imshow(images[i].numpy().astype("uint8"))
        plt.title(class_names[labels[i]])
        plt.axis("off")

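Check the data again

These datasets can be passed directly to model.fit for training. You can also iterate over a dataset manually to retrieve batches of images and check their shapes:

for image_batch, labels_batch in train_ds:
    print(image_batch.shape)
    print(labels_batch.shape)
    break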

(32, 224, 224, 3)

(32,)

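image_batch is a tensor of shape (32, 224, 224, 3). The dimensions are:

32: the batch size (32 images form one batch).

224 and 224: the image height and width.

3: the number of RGB color channels.

labels_batch is a tensor of shape (32,) that holds the labels of those 32 images.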
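The number of batches per epoch follows from the dataset size and batch_size. The minimal sketch below assumes the last, partial batch is kept rather than dropped. Under that assumption, 502 training images give 16 batches, the last holding 22 images, which matches the 16/16 steps per epoch in the training log. The 76 test images give 3 batches. batch_sizes is a hypothetical helper.

```python
import math

def batch_sizes(num_samples, batch_size):
    """Sizes of the batches when num_samples items are grouped into batches of
    batch_size, keeping the final partial batch (assumption: no drop_remainder)."""
    if num_samples == 0:
        return []
    num_batches = math.ceil(num_samples / batch_size)
    sizes = [batch_size] * (num_batches - 1)
    sizes.append(num_samples - batch_size * (num_batches - 1))
    return sizes

train_batches = batch_sizes(502, 32)
val_batches = batch_sizes(76, 32)
print(len(train_batches), train_batches[-1])  # 16 22
print(len(val_batches), val_batches[-1])      # 3 12
```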

Data preprocessing

Pixel normalization

Scale the pixel values into the range 0 to 1:

normalization_layer = layers.experimental.preprocessing.Rescaling(1./255)

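Why divide by 255? Pixel values lie in the range 0 to 255, so dividing by 255 maps them into the range 0 to 1. Every image, whether in the training or test set, must be scaled the same way. In this notebook, the Rescaling layer at the start of the model defined below does that scaling.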
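The Rescaling layer computes inputs * scale + offset. The plain-Python sketch below does the same arithmetic with scale = 1/255 and offset = 0. rescale is a hypothetical helper that works on a list instead of a tensor.

```python
def rescale(pixels, scale=1.0 / 255, offset=0.0):
    """Mimics the Rescaling layer's formula: output = input * scale + offset."""
    return [p * scale + offset for p in pixels]

print(rescale([0, 51, 255]))                          # values close to 0, 0.2 and 1
print(rescale([0, 255], scale=1 / 127.5, offset=-1))  # values close to -1 and 1
```

With scale=1/127.5 and offset=-1, the same formula maps pixels to [-1, 1] instead of [0, 1].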

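Normalize the data by calling map to apply the layer to the dataset. This step only checks the value range; the model below does its own rescaling.

import numpy as np

normalized_ds = train_ds.map(lambda x, y: (normalization_layer(x), y))
image_batch, labels_batch = next(iter(normalized_ds))
first_image = image_batch[0]
# Notice the pixel values are now in `[0,1]`.
print(np.min(first_image), np.max(first_image))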

0.0 1.0

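Configure the dataset

shuffle(): shuffles the data. For details, see https://zhuanlan.zhihu.com/p/42417456

prefetch(): prepares the next batches while the current one is being processed, which speeds up training.

cache(): caches the dataset in memory after the first pass, which speeds up later epochs.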

AUTOTUNE = tf.data.AUTOTUNE

train_ds = train_ds.cache().shuffle(1000).prefetch(buffer_size=AUTOTUNE)

val_ds = val_ds.cache().prefetch(buffer_size=AUTOTUNE)
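image_dataset_from_directory already returns batches, so shuffle(1000) here reorders whole batches (16 per epoch for the training set), and a buffer of 1000 easily holds them all. The sketch below illustrates the buffer-based shuffling that the tf.data documentation describes for shuffle(): keep up to buffer_size elements, emit a random one, and refill from the input. buffered_shuffle is a hypothetical pure-Python helper, not the TensorFlow implementation.

```python
import random

def buffered_shuffle(items, buffer_size, seed=None):
    """Yield items in a shuffled order using a bounded buffer."""
    if buffer_size < 1:
        raise ValueError("buffer_size must be at least 1")
    rng = random.Random(seed)
    buffer = []
    for item in items:
        if len(buffer) < buffer_size:
            buffer.append(item)  # still filling the buffer
            continue
        idx = rng.randrange(buffer_size)
        yield buffer[idx]   # emit a random buffered element...
        buffer[idx] = item  # ...and replace it with the next input
    rng.shuffle(buffer)     # input exhausted: drain the rest in random order
    yield from buffer

batches = list(range(16))  # stand-ins for the 16 training batches
print(list(buffered_shuffle(batches, 1000, seed=0)))
print(list(buffered_shuffle(batches, 1, seed=0)))  # buffer of 1: order unchanged
```

A buffer of size 1 leaves the order unchanged, and a buffer at least as large as the dataset gives a full shuffle. Placing cache() before shuffle() means the cached data is reshuffled every epoch, instead of the first shuffled order being frozen in the cache.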

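Build the CNN

A typical convolutional neural network (CNN) consists mainly of convolutional layers (Conv2D) and pooling layers (MaxPooling2D; this model uses AveragePooling2D). Stacked convolution and pooling layers extract features from the input, and fully connected (Dense) layers at the end perform the classification.

Feature extraction: convolutional and pooling layers

Classification: fully connected layers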
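A pooling layer summarizes each small window of a feature map. The minimal sketch below applies 2x2 pooling with stride 2 and no padding (Keras's default 'valid' padding) to a plain 2D list. It contrasts average pooling, which this model uses, with max pooling. An odd trailing row or column is simply dropped, which is why a 111x111 feature map becomes 55x55 in the model summary. pool_2x2 and average are hypothetical helpers.

```python
def pool_2x2(grid, reduce):
    """2x2 pooling with stride 2 and 'valid' padding on a 2D list.
    An odd trailing row/column is dropped (e.g. 111 -> 55)."""
    rows, cols = len(grid) // 2, len(grid[0]) // 2
    return [
        [
            reduce([grid[2 * r][2 * c], grid[2 * r][2 * c + 1],
                    grid[2 * r + 1][2 * c], grid[2 * r + 1][2 * c + 1]])
            for c in range(cols)
        ]
        for r in range(rows)
    ]

def average(values):
    return sum(values) / len(values)

feature_map = [  # a made-up 5x5 feature map
    [1, 3, 5, 7, 9],
    [1, 3, 5, 7, 9],
    [2, 2, 2, 2, 2],
    [4, 4, 4, 4, 4],
    [0, 0, 0, 0, 0],
]
print(pool_2x2(feature_map, average))  # [[2.0, 6.0], [3.0, 3.0]]
print(pool_2x2(feature_map, max))      # [[3, 7], [4, 4]]
```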

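A CNN takes as input a tensor of shape (image_height, image_width, color_channels), which encodes the image's height, width, and color information. image_height and image_width are the image's height and width in pixels. For RGB images, color_channels is 3, one channel each for R, G, and B.

After loading, the shoe images have shape (224, 224, 3), that is, (img_height, img_width, 3). We pass this shape to input_shape when declaring the first layer. The output layer has len(class_names) = 2 units, one per brand.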

model = models.Sequential([
    layers.experimental.preprocessing.Rescaling(1./255, input_shape=(img_height, img_width, 3)),  # scale pixels to [0, 1]
    layers.Conv2D(32, (3, 3), activation='relu'),    # conv layer 1, 3x3 kernels
    layers.AveragePooling2D((2, 2)),                 # pooling layer 1, 2x2 window
    # layers.Conv2D(64, (3, 3), activation='relu'),  # conv layer 2 (disabled), 3x3 kernels
    layers.Dropout(0.4),                             # randomly drops units during training to reduce overfitting and improve generalization
    layers.AveragePooling2D((2, 2)),                 # pooling layer 2, 2x2 window
    layers.Conv2D(64, (3, 3), activation='relu'),    # conv layer 3, 3x3 kernels
    layers.Dropout(0.4),
    layers.Flatten(),                                # flattens the feature maps to connect the conv layers to the dense layers
    layers.Dense(240, activation='relu'),            # fully connected layer for further feature extraction
    layers.Dense(len(class_names))                   # output layer: one logit per class
])
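Keras's Dropout layer is active only during training. It zeroes each input with probability rate and scales the remaining inputs by 1 / (1 - rate), so the expected sum stays unchanged; at inference, it passes inputs through unchanged. The pure-Python sketch below mimics that behavior. dropout is a hypothetical helper, and the real layer operates on tensors.

```python
import random

def dropout(values, rate, training, seed=None):
    """Inverted dropout on a list of floats (sketch of Keras Dropout's behavior)."""
    if not 0 <= rate < 1:
        raise ValueError("rate must be in [0, 1)")
    if not training:
        return list(values)  # inference: pass inputs through unchanged
    rng = random.Random(seed)
    scale = 1.0 / (1.0 - rate)  # keeps the expected sum of the inputs unchanged
    return [0.0 if rng.random() < rate else v * scale for v in values]

x = [1.0] * 10
print(dropout(x, 0.4, training=False))
print(dropout(x, 0.4, training=True, seed=0))  # a mix of 0.0 and 1/0.6
```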

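Compile

Before training, the model needs a few more settings, which are added in the compile step:

Loss function (loss): measures how far the model's predictions are from the true labels during training. The optimizer tries to minimize it.

Optimizer (optimizer): determines how the model is updated based on the data it sees and its loss function.

Metrics (metrics): used to monitor the training and test steps. The example below uses accuracy, the fraction of images that are classified correctly.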

# Set up the optimizer
opt = tf.keras.optimizers.Adam(learning_rate=1e-4)

model.compile(optimizer=opt,
              # from_logits=True because the last Dense layer has no softmax
              loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
              metrics=['accuracy'])
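To show what the optimizer does, the sketch below performs one Adam update on a single scalar parameter. It follows the update rule from the original Adam paper, using learning_rate=1e-4 as above and Keras's default beta_1=0.9, beta_2=0.999, and epsilon=1e-7. adam_step is a hypothetical helper, and Keras's internal implementation may differ in small details, such as where epsilon enters.

```python
import math

def adam_step(param, grad, m, v, t, lr=1e-4, beta1=0.9, beta2=0.999, eps=1e-7):
    """One Adam update for a scalar; returns the new parameter and moment estimates."""
    m = beta1 * m + (1 - beta1) * grad          # running mean of gradients
    v = beta2 * v + (1 - beta2) * grad * grad   # running mean of squared gradients
    m_hat = m / (1 - beta1 ** t)                # bias corrections for step t
    v_hat = v / (1 - beta2 ** t)
    param = param - lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v

p, m, v = adam_step(param=1.0, grad=2.0, m=0.0, v=0.0, t=1)
print(p)
```

At the first step, the bias-corrected ratio m_hat / sqrt(v_hat) is about +1 or -1, so the parameter moves by roughly the learning rate against the gradient's sign, whatever the gradient's magnitude.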

# Inspect the model

model.summary()

Model: "sequential_6"
_________________________________________________________________
Layer (type)                             Output Shape            Param #
=================================================================
rescaling_9 (Rescaling)                  (None, 224, 224, 3)     0
conv2d_13 (Conv2D)                       (None, 222, 222, 32)    896
average_pooling2d_10 (AveragePooling2D)  (None, 111, 111, 32)    0
dropout_11 (Dropout)                     (None, 111, 111, 32)    0
average_pooling2d_11 (AveragePooling2D)  (None, 55, 55, 32)      0
conv2d_14 (Conv2D)                       (None, 53, 53, 64)      18496
dropout_12 (Dropout)                     (None, 53, 53, 64)      0
flatten_6 (Flatten)                      (None, 179776)          0
dense_11 (Dense)                         (None, 240)             43146480
dense_12 (Dense)                         (None, 2)               482
=================================================================
Total params: 43,166,354
Trainable params: 43,166,354
Non-trainable params: 0
_________________________________________________________________
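The shapes and parameter counts in the summary can be checked by hand, and the sketch below does that arithmetic. The helper names are hypothetical. The rules it applies are:

A 3x3 'valid' convolution with stride 1 shrinks each side by 2.

Each 2x2 pooling layer halves each side, rounding down.

A Conv2D layer has (3*3*in_channels + 1) * filters parameters.

A Dense layer has (inputs + 1) * units parameters.

```python
def conv2d_valid(size, kernel=3):
    """Output side length of a stride-1 'valid' convolution."""
    return size - kernel + 1

def pool(size, pool_size=2):
    """Output side length of 'valid' pooling with stride == pool_size."""
    return size // pool_size

def conv_params(kernel, in_ch, filters):
    return (kernel * kernel * in_ch + 1) * filters  # weights + one bias per filter

def dense_params(in_units, out_units):
    return (in_units + 1) * out_units  # weights + one bias per unit

s = conv2d_valid(224)   # 222
s = pool(s)             # 111
s = pool(s)             # 55
s = conv2d_valid(s)     # 53
flat = s * s * 64       # 179776
params = [
    conv_params(3, 3, 32),     # 896
    conv_params(3, 32, 64),    # 18496
    dense_params(flat, 240),   # 43146480
    dense_params(240, 2),      # 482
]
print(s, flat, params, sum(params))  # sum is 43166354
```

The first Dense layer alone holds 43,146,480 of the 43,166,354 parameters (about 99.95%), a very large number for a training set of about 500 images.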

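Train the model

Here we pass in the prepared training set (images and their labels) and the test set as validation data (images and their labels), and train the model for 50 epochs.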

epochs = 50

history = model.fit(

train_ds,

validation_data=val_ds,

epochs=epochs

)

Epoch 1/50

16/16 [==============================] - 6s 363ms/step - loss: 1.1402 - accuracy: 0.5060 - val_loss: 0.6908 - val_accuracy: 0.5395

Epoch 2/50

16/16 [==============================] - 6s 379ms/step - loss: 0.7293 - accuracy: 0.5259 - val_loss: 0.6890 - val_accuracy: 0.5000

Epoch 3/50

16/16 [==============================] - 6s 367ms/step - loss: 0.6907 - accuracy: 0.5418 - val_loss: 0.7166 - val_accuracy: 0.5263

Epoch 4/50

16/16 [==============================] - 6s 358ms/step - loss: 0.6560 - accuracy: 0.6056 - val_loss: 0.6345 - val_accuracy: 0.6842

Epoch 5/50

16/16 [==============================] - 6s 390ms/step - loss: 0.6332 - accuracy: 0.6235 - val_loss: 0.6147 - val_accuracy: 0.6974

Epoch 6/50

16/16 [==============================] - 6s 362ms/step - loss: 0.6318 - accuracy: 0.6255 - val_loss: 0.6538 - val_accuracy: 0.6316

Epoch 7/50

16/16 [==============================] - 6s 358ms/step - loss: 0.6034 - accuracy: 0.6853 - val_loss: 0.6752 - val_accuracy: 0.6184

Epoch 8/50

16/16 [==============================] - 6s 390ms/step - loss: 0.5652 - accuracy: 0.7410 - val_loss: 0.6619 - val_accuracy: 0.6579

Epoch 9/50

16/16 [==============================] - 6s 377ms/step - loss: 0.5435 - accuracy: 0.7590 - val_loss: 0.6664 - val_accuracy: 0.6447

Epoch 10/50

16/16 [==============================] - 6s 379ms/step - loss: 0.5294 - accuracy: 0.7749 - val_loss: 0.5913 - val_accuracy: 0.7105

Epoch 11/50

16/16 [==============================] - 6s 386ms/step - loss: 0.5008 - accuracy: 0.7749 - val_loss: 0.5973 - val_accuracy: 0.6842

Epoch 12/50

16/16 [==============================] - 6s 379ms/step - loss: 0.4997 - accuracy: 0.7809 - val_loss: 0.7795 - val_accuracy: 0.6053

Epoch 13/50

16/16 [==============================] - 6s 378ms/step - loss: 0.4849 - accuracy: 0.7610 - val_loss: 0.5082 - val_accuracy: 0.7500

Epoch 14/50

16/16 [==============================] - 6s 376ms/step - loss: 0.4875 - accuracy: 0.7789 - val_loss: 0.4860 - val_accuracy: 0.7632

Epoch 15/50

16/16 [==============================] - 6s 376ms/step - loss: 0.4394 - accuracy: 0.8167 - val_loss: 0.5199 - val_accuracy: 0.7237

Epoch 16/50

16/16 [==============================] - 6s 402ms/step - loss: 0.4182 - accuracy: 0.8267 - val_loss: 0.4844 - val_accuracy: 0.7500

Epoch 17/50

16/16 [==============================] - 6s 379ms/step - loss: 0.3856 - accuracy: 0.8506 - val_loss: 0.5402 - val_accuracy: 0.7237

Epoch 18/50

16/16 [==============================] - 6s 351ms/step - loss: 0.3959 - accuracy: 0.8367 - val_loss: 0.4774 - val_accuracy: 0.7500

Epoch 19/50

16/16 [==============================] - 6s 367ms/step - loss: 0.3617 - accuracy: 0.8805 - val_loss: 0.5969 - val_accuracy: 0.7237

Epoch 20/50

16/16 [==============================] - 6s 359ms/step - loss: 0.4321 - accuracy: 0.7749 - val_loss: 0.5671 - val_accuracy: 0.7237

Epoch 21/50

16/16 [==============================] - 6s 357ms/step - loss: 0.3595 - accuracy: 0.8625 - val_loss: 0.4786 - val_accuracy: 0.7500

Epoch 22/50

16/16 [==============================] - 6s 380ms/step - loss: 0.3567 - accuracy: 0.8705 - val_loss: 0.4366 - val_accuracy: 0.8158

Epoch 23/50

16/16 [==============================] - 6s 381ms/step - loss: 0.3046 - accuracy: 0.9084 - val_loss: 0.4577 - val_accuracy: 0.7632

Epoch 24/50

16/16 [==============================] - 6s 346ms/step - loss: 0.2881 - accuracy: 0.9064 - val_loss: 0.4394 - val_accuracy: 0.7895

Epoch 25/50

16/16 [==============================] - 6s 375ms/step - loss: 0.2985 - accuracy: 0.9104 - val_loss: 0.4532 - val_accuracy: 0.7763

Epoch 26/50

16/16 [==============================] - 6s 374ms/step - loss: 0.2740 - accuracy: 0.9163 - val_loss: 0.5409 - val_accuracy: 0.7500

Epoch 27/50

16/16 [==============================] - 6s 396ms/step - loss: 0.2610 - accuracy: 0.9143 - val_loss: 0.5458 - val_accuracy: 0.7368

Epoch 28/50

16/16 [==============================] - 6s 348ms/step - loss: 0.2700 - accuracy: 0.8964 - val_loss: 0.4511 - val_accuracy: 0.7895

Epoch 29/50

16/16 [==============================] - 6s 365ms/step - loss: 0.2473 - accuracy: 0.9263 - val_loss: 0.4392 - val_accuracy: 0.7895

Epoch 30/50

16/16 [==============================] - 6s 382ms/step - loss: 0.2387 - accuracy: 0.9124 - val_loss: 0.5414 - val_accuracy: 0.7500

Epoch 31/50

16/16 [==============================] - 6s 381ms/step - loss: 0.2331 - accuracy: 0.9363 - val_loss: 0.4227 - val_accuracy: 0.8026

Epoch 32/50

16/16 [==============================] - 6s 362ms/step - loss: 0.2423 - accuracy: 0.9183 - val_loss: 0.4048 - val_accuracy: 0.8289

Epoch 33/50

16/16 [==============================] - 6s 376ms/step - loss: 0.2874 - accuracy: 0.8825 - val_loss: 0.4788 - val_accuracy: 0.7763

Epoch 34/50

16/16 [==============================] - 6s 385ms/step - loss: 0.2401 - accuracy: 0.9243 - val_loss: 0.4193 - val_accuracy: 0.7895

Epoch 35/50

16/16 [==============================] - 6s 399ms/step - loss: 0.1868 - accuracy: 0.9582 - val_loss: 0.3914 - val_accuracy: 0.8553

Epoch 36/50

16/16 [==============================] - 6s 367ms/step - loss: 0.2207 - accuracy: 0.9283 - val_loss: 0.4541 - val_accuracy: 0.7763

Epoch 37/50

16/16 [==============================] - 7s 408ms/step - loss: 0.2007 - accuracy: 0.9402 - val_loss: 0.3839 - val_accuracy: 0.8158

Epoch 38/50

16/16 [==============================] - 6s 397ms/step - loss: 0.1836 - accuracy: 0.9482 - val_loss: 0.3863 - val_accuracy: 0.8158

Epoch 39/50

16/16 [==============================] - 6s 379ms/step - loss: 0.1795 - accuracy: 0.9482 - val_loss: 0.4069 - val_accuracy: 0.8158

Epoch 40/50

16/16 [==============================] - 6s 389ms/step - loss: 0.1735 - accuracy: 0.9542 - val_loss: 0.4048 - val_accuracy: 0.8158

Epoch 41/50

16/16 [==============================] - 6s 378ms/step - loss: 0.1685 - accuracy: 0.9462 - val_loss: 0.3930 - val_accuracy: 0.8289

Epoch 42/50

16/16 [==============================] - 6s 383ms/step - loss: 0.1740 - accuracy: 0.9562 - val_loss: 0.4742 - val_accuracy: 0.7763

Epoch 43/50

16/16 [==============================] - 6s 366ms/step - loss: 0.1767 - accuracy: 0.9382 - val_loss: 0.4110 - val_accuracy: 0.8026

Epoch 44/50

16/16 [==============================] - 6s 361ms/step - loss: 0.1620 - accuracy: 0.9462 - val_loss: 0.3925 - val_accuracy: 0.8158

Epoch 45/50

16/16 [==============================] - 6s 392ms/step - loss: 0.1323 - accuracy: 0.9661 - val_loss: 0.3901 - val_accuracy: 0.8289

Epoch 46/50

16/16 [==============================] - 6s 386ms/step - loss: 0.1262 - accuracy: 0.9721 - val_loss: 0.3915 - val_accuracy: 0.8421

Epoch 47/50

16/16 [==============================] - 6s 401ms/step - loss: 0.1431 - accuracy: 0.9542 - val_loss: 0.3868 - val_accuracy: 0.8421

Epoch 48/50

16/16 [==============================] - 6s 376ms/step - loss: 0.1394 - accuracy: 0.9622 - val_loss: 0.4206 - val_accuracy: 0.8158

Epoch 49/50

16/16 [==============================] - 6s 402ms/step - loss: 0.1225 - accuracy: 0.9721 - val_loss: 0.4001 - val_accuracy: 0.8289

Epoch 50/50

16/16 [==============================] - 7s 415ms/step - loss: 0.1260 - accuracy: 0.9622 - val_loss: 0.4035 - val_accuracy: 0.8289

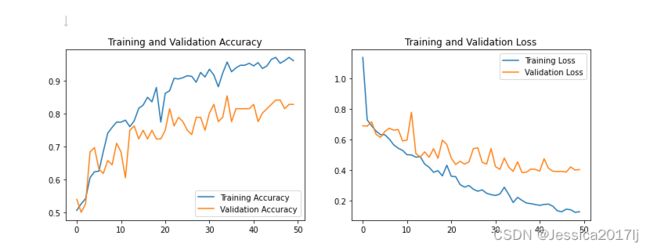

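In general, the smaller the loss, the better. So what is the loss?

Put simply, the loss measures how far the model's predictions are from the true values. It is not literally "prediction minus true value", though. This model uses sparse categorical cross-entropy: for each image, the loss is the negative logarithm of the probability the model assigns to the correct class, averaged over the batch.

In general, the higher the accuracy, the better the model. To evaluate the model, we plot the loss and accuracy on the training and validation sets.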
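The sketch below computes the loss and accuracy by hand. With from_logits=True, the model's raw outputs go through softmax, and the loss for one image is -log(p[correct class]). Accuracy counts how often the highest-scoring class equals the label. The helper names and logit values are made up for illustration.

```python
import math

def softmax(logits):
    shift = max(logits)  # subtract the max logit for numerical stability
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sparse_ce_from_logits(logits, label):
    """Cross-entropy for one example: -log of the softmax probability of the true class."""
    return -math.log(softmax(logits)[label])

def accuracy(batch_logits, labels):
    """Fraction of examples whose highest-scoring class equals the label."""
    correct = sum(
        max(range(len(z)), key=lambda i: z[i]) == y
        for z, y in zip(batch_logits, labels)
    )
    return correct / len(labels)

# Class 0 = adidas, class 1 = nike; the logits are made up.
print(sparse_ce_from_logits([0.0, 0.0], 0))  # ln 2, the cost of a 50/50 guess

batch_logits = [[2.0, -1.0], [0.5, 1.5], [1.0, 0.2]]
labels = [0, 1, 1]
mean_loss = sum(sparse_ce_from_logits(z, y) for z, y in zip(batch_logits, labels)) / len(labels)
print(mean_loss)
print(accuracy(batch_logits, labels))  # 2 of 3 correct
```

A 50/50 prediction costs ln 2 ≈ 0.693. That is close to the val_loss of 0.6908 in the first epoch, when the model is still near chance.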

Model evaluation

acc = history.history['accuracy']

val_acc = history.history['val_accuracy']

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs_range = range(epochs)

plt.figure(figsize=(12, 4))

plt.subplot(1, 2, 1)

plt.plot(epochs_range, acc, label='Training Accuracy')

plt.plot(epochs_range, val_acc, label='Validation Accuracy')

plt.legend(loc='lower right')

plt.title('Training and Validation Accuracy')

plt.subplot(1, 2, 2)

plt.plot(epochs_range, loss, label='Training Loss')

plt.plot(epochs_range, val_loss, label='Validation Loss')

plt.legend(loc='upper right')

plt.title('Training and Validation Loss')

plt.show()

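The plots show a clear gap between training and validation accuracy. By the last epoch, the model reaches about 96% accuracy on the training set but only about 83% on the validation set. The best validation accuracy, about 85.5%, occurs at epoch 35.

Training accuracy keeps rising over time, while validation accuracy levels off at around 80% in the second half of training. Over the same period, validation loss stays around 0.4 while training loss falls to about 0.13.

This gap between training and validation accuracy is a sign of overfitting.

A likely cause: there are few training examples (here only 502 training images). With so few examples, the model can learn from noise or irrelevant details in the training set, which hurts its performance on new examples.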
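Instead of reading the curves by eye, you can pull the best epoch and the final gap from the history dict. The sketch below uses the accuracy values copied from the 50-epoch log above; in the notebook, you would pass history.history directly. summarize_history is a hypothetical helper.

```python
def summarize_history(history):
    """Return (best epoch, best val_accuracy, final train-minus-val accuracy gap)
    for a dict shaped like Keras's History.history."""
    val_acc = history['val_accuracy']
    best = max(range(len(val_acc)), key=lambda i: val_acc[i])
    gap = history['accuracy'][-1] - val_acc[-1]
    return best + 1, val_acc[best], gap  # epochs are numbered from 1 in the log

hist = {
    'accuracy': [
        0.5060, 0.5259, 0.5418, 0.6056, 0.6235, 0.6255, 0.6853, 0.7410, 0.7590, 0.7749,
        0.7749, 0.7809, 0.7610, 0.7789, 0.8167, 0.8267, 0.8506, 0.8367, 0.8805, 0.7749,
        0.8625, 0.8705, 0.9084, 0.9064, 0.9104, 0.9163, 0.9143, 0.8964, 0.9263, 0.9124,
        0.9363, 0.9183, 0.8825, 0.9243, 0.9582, 0.9283, 0.9402, 0.9482, 0.9482, 0.9542,
        0.9462, 0.9562, 0.9382, 0.9462, 0.9661, 0.9721, 0.9542, 0.9622, 0.9721, 0.9622,
    ],
    'val_accuracy': [
        0.5395, 0.5000, 0.5263, 0.6842, 0.6974, 0.6316, 0.6184, 0.6579, 0.6447, 0.7105,
        0.6842, 0.6053, 0.7500, 0.7632, 0.7237, 0.7500, 0.7237, 0.7500, 0.7237, 0.7237,
        0.7500, 0.8158, 0.7632, 0.7895, 0.7763, 0.7500, 0.7368, 0.7895, 0.7895, 0.7500,
        0.8026, 0.8289, 0.7763, 0.7895, 0.8553, 0.7763, 0.8158, 0.8158, 0.8158, 0.8158,
        0.8289, 0.7763, 0.8026, 0.8158, 0.8289, 0.8421, 0.8421, 0.8158, 0.8289, 0.8289,
    ],
}
print(summarize_history(hist))
```

For this run, it reports epoch 35 (val_accuracy 0.8553) and a final train/validation accuracy gap of about 0.13.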