基于卷积神经网络的猫狗识别

这里写目录标题

-

-

- 任务要求

- 猫狗识别

- 开始训练

- 过拟合和数据增强

- 参考链接

-

任务要求

- 按照 https://github.com/fchollet/deep-learning-with-python-notebooks/blob/master/5.2-using-convnets-with-small-datasets.ipynb, 利用TensorFlow和Keras,自己搭建卷积神经网络完成狗猫数据集的分类实验;将关键步骤用汉语注释出来。解释什么是overfit(过拟合)?什么是数据增强?如果单独只做数据增强,精确率提高了多少?然后再添加的dropout层,是什么实际效果?

- 用Vgg19网络模型完成狗猫分类,写出实验结果;

猫狗识别

1.准备数据集

从kaggle网站的数据集下载猫狗数据集,解压后如图所示:

在trian文件夹中有许多猫狗的照片:

2.正式进行猫狗识别

图片分类并打印出结果,实现代码如下:

import os, shutil

# The path to the directory where the original

# dataset was uncompressed(原始数据集路径)

original_dataset_dir = 'C:/Users/23226/Desktop/kaggle_Dog&Cat/train/train'

# The directory where we will

# store our smaller dataset(目标存储路径)

base_dir = 'C:/Users/23226/Desktop/kaggle_Dog&Cat/result'

os.mkdir(base_dir)

# Directories for our training,

# validation and test splits

train_dir = os.path.join(base_dir, 'train')

os.mkdir(train_dir)

validation_dir = os.path.join(base_dir, 'validation')

os.mkdir(validation_dir)

test_dir = os.path.join(base_dir, 'test')

os.mkdir(test_dir)

# Directory with our training cat pictures

train_cats_dir = os.path.join(train_dir, 'cats')

os.mkdir(train_cats_dir)

# Directory with our training dog pictures

train_dogs_dir = os.path.join(train_dir, 'dogs')

os.mkdir(train_dogs_dir)

# Directory with our validation cat pictures

validation_cats_dir = os.path.join(validation_dir, 'cats')

os.mkdir(validation_cats_dir)

# Directory with our validation dog pictures

validation_dogs_dir = os.path.join(validation_dir, 'dogs')

os.mkdir(validation_dogs_dir)

# Directory with our validation cat pictures

test_cats_dir = os.path.join(test_dir, 'cats')

os.mkdir(test_cats_dir)

# Directory with our validation dog pictures

test_dogs_dir = os.path.join(test_dir, 'dogs')

os.mkdir(test_dogs_dir)

# Copy first 1000 cat images to train_cats_dir

fnames = ['cat.{}.jpg'.format(i) for i in range(1000)]

for fname in fnames:

src = os.path.join(original_dataset_dir, fname)

dst = os.path.join(train_cats_dir, fname)

shutil.copyfile(src, dst)

# Copy next 500 cat images to validation_cats_dir

fnames = ['cat.{}.jpg'.format(i) for i in range(1000, 1500)]

for fname in fnames:

src = os.path.join(original_dataset_dir, fname)

dst = os.path.join(validation_cats_dir, fname)

shutil.copyfile(src, dst)

# Copy next 500 cat images to test_cats_dir

fnames = ['cat.{}.jpg'.format(i) for i in range(1500, 2000)]

for fname in fnames:

src = os.path.join(original_dataset_dir, fname)

dst = os.path.join(test_cats_dir, fname)

shutil.copyfile(src, dst)

# Copy first 1000 dog images to train_dogs_dir

fnames = ['dog.{}.jpg'.format(i) for i in range(1000)]

for fname in fnames:

src = os.path.join(original_dataset_dir, fname)

dst = os.path.join(train_dogs_dir, fname)

shutil.copyfile(src, dst)

# Copy next 500 dog images to validation_dogs_dir

fnames = ['dog.{}.jpg'.format(i) for i in range(1000, 1500)]

for fname in fnames:

src = os.path.join(original_dataset_dir, fname)

dst = os.path.join(validation_dogs_dir, fname)

shutil.copyfile(src, dst)

# Copy next 500 dog images to test_dogs_dir

fnames = ['dog.{}.jpg'.format(i) for i in range(1500, 2000)]

for fname in fnames:

src = os.path.join(original_dataset_dir, fname)

dst = os.path.join(test_dogs_dir, fname)

shutil.copyfile(src, dst)

print('total training cat images:', len(os.listdir(train_cats_dir)))

print('total training dog images:', len(os.listdir(train_dogs_dir)))

print('total validation cat images:', len(os.listdir(validation_cats_dir)))

print('total validation dog images:', len(os.listdir(validation_dogs_dir)))

print('total test cat images:', len(os.listdir(test_cats_dir)))

print('total test dog images:', len(os.listdir(test_dogs_dir)))

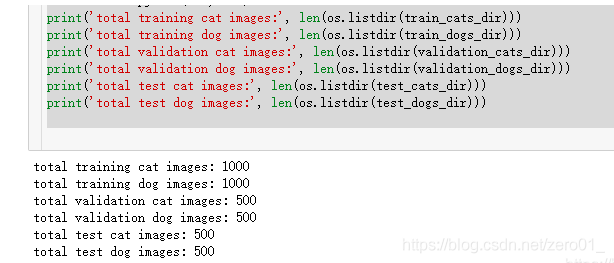

结果显示如下:

可以看出:

猫狗训练图片各1000张,验证图片各500张,测试图片各500张。

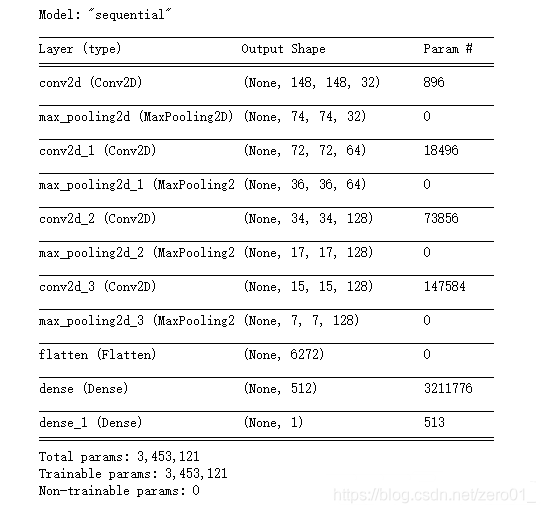

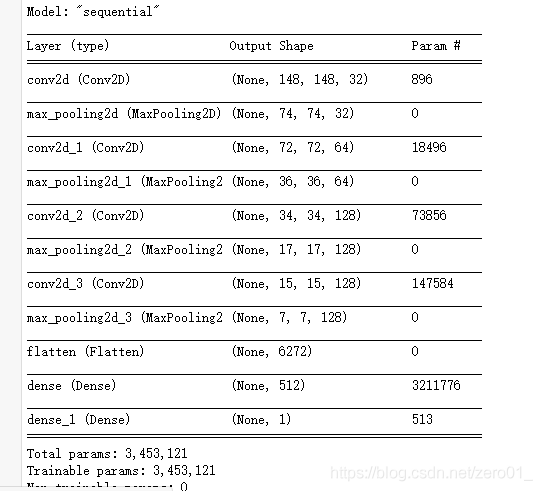

3.卷积神经网络CNN

==model.summary()==函数输出模型各层的参数状况:

from keras import layers

from keras import models

model = models.Sequential()

model.add(layers.Conv2D(32, (3, 3), activation='relu',

input_shape=(150, 150, 3)))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Conv2D(64, (3, 3), activation='relu'))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Conv2D(128, (3, 3), activation='relu'))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Conv2D(128, (3, 3), activation='relu'))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Flatten())

model.add(layers.Dense(512, activation='relu'))

model.add(layers.Dense(1, activation='sigmoid'))

model.summary()

结果如图:

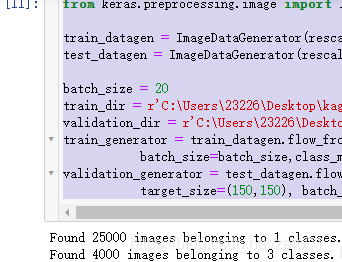

然后从图像生成器读取文件中数据,其中注意两个函数:

-

1.model.compile()优化器(loss:计算损失,这里用的是交叉熵损失,metrics: 列表,包含评估模型在训练和测试时的性能的指标)

-

2.ImageDataGenerator就像一个把文件中图像转换成所需格式的转接头,通常先定制一个转接头train_datagen,它可以根据需要对图像进行各种变换,然后再把它怼到文件中(flow方法是怼到array中),约定好出来数据的格式。

-

实现代码如下:

-from keras import optimizers model.compile(loss="binary_crossentropy", optimizer=optimizers.RMSprop(lr=1e-4), metrics=['acc'])

from keras.preprocessing.image import ImageDataGenerator

train_datagen = ImageDataGenerator(rescale=1./255) #在这里可进行图像增强

test_datagen = ImageDataGenerator(rescale=1./255) #其中验证集不可用图像增强

batch_size = 20

train_dir = r'C:\Users\23226\Desktop\kaggle_Dog&Cat\train'

validation_dir = r'C:\Users\23226\Desktop\kaggle_Dog&Cat\result'

train_generator = train_datagen.flow_from_directory(train_dir, target_size=(150,150),

batch_size=batch_size,class_mode='binary')

validation_generator = test_datagen.flow_from_directory(validation_dir,

target_size=(150,150), batch_size=batch_size, class_mode='binary')

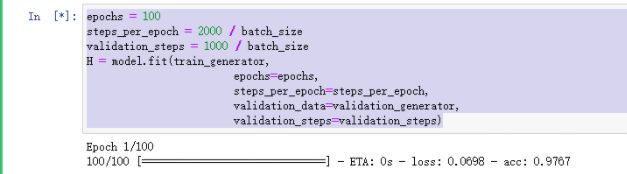

开始训练

epochs = 100

steps_per_epoch = 2000 / batch_size

validation_steps = 1000 / batch_size

H = model.fit(train_generator,

epochs=epochs,

steps_per_epoch=steps_per_epoch,

validation_data=validation_generator,

validation_steps=validation_steps)

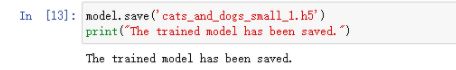

model.save('cats_and_dogs_small_1.h5')

print("The trained model has been saved.")

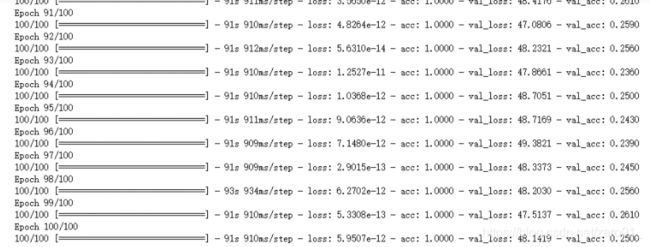

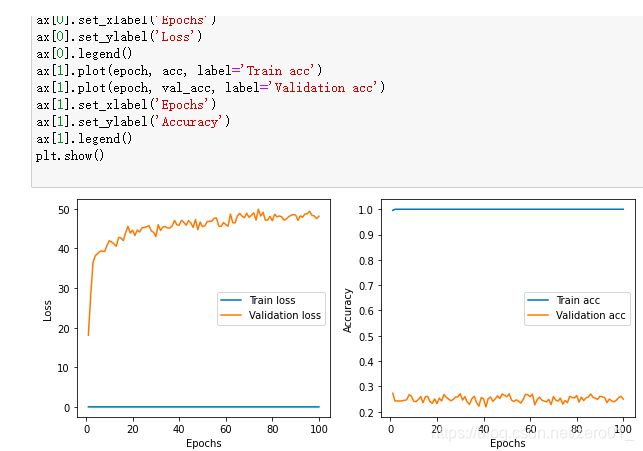

训练结果如下图所示,很明显模型上来就过拟合了,主要原因是数据不够,或者说相对于数据量,模型过复杂)。

import matplotlib.pyplot as plt

loss = H.history['loss']

acc = H.history['acc']

val_loss = H.history['val_loss']

val_acc = H.history['val_acc']

epoch = range(1, len(loss)+1)

fig, ax = plt.subplots(1, 2, figsize=(10,4))

ax[0].plot(epoch, loss, label='Train loss')

ax[0].plot(epoch, val_loss, label='Validation loss')

ax[0].set_xlabel('Epochs')

ax[0].set_ylabel('Loss')

ax[0].legend()

ax[1].plot(epoch, acc, label='Train acc')

ax[1].plot(epoch, val_acc, label='Validation acc')

ax[1].set_xlabel('Epochs')

ax[1].set_ylabel('Accuracy')

ax[1].legend()

plt.show()

4.Keras图像增强方法

在Keras中,可以利用图像生成器很方便地定义一些常见的图像变换。

from keras.preprocessing import image

import numpy as np

#定义一个图像生成器

datagen = ImageDataGenerator(

rotation_range=40,

width_shift_range=0.2,

height_shift_range=0.2,

shear_range=0.2,

zoom_range=0.2,

horizontal_flip=True,

fill_mode='nearest')

#生成猫图的路径列表

train_cats_dir = os.path.join(train_dir, 'cats')

fnames = [os.path.join(train_cats_dir, fname) for fname in os.listdir(train_cats_dir)]

#选一张图片,打包成(batches, 150, 150, 3)格式

img_path = fnames[1]

img = image.load_img(img_path, target_size=(150,150)) #读入一张图像

x_aug = image.img_to_array(img) #将图像格式转为array格式

x_aug = np.expand_dims(x_aug, axis=0) #(1, 150, 150, 3)

#对图片进行增强,查看效果

fig = plt.figure(figsize=(8,8))

k = 1

for batch in datagen.flow(x_aug, batch_size=1): #注意生成器的使用方式

ax = fig.add_subplot(3, 3, k)

ax.imshow(image.array_to_img(batch[0]))

k += 1

if k > 9:

break

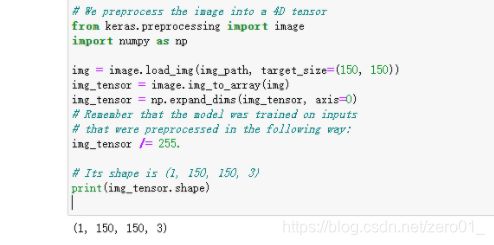

from keras.models import load_model

model = load_model('cats_and_dogs_small_1.h5')

model.summary() # As a reminder.

img_path = 'C:/Users/23226/Desktop/kaggle_Dog&Cat/result/test/cats/cat.1502.jpg'

# We preprocess the image into a 4D tensor

from keras.preprocessing import image

import numpy as np

img = image.load_img(img_path, target_size=(150, 150))

img_tensor = image.img_to_array(img)

img_tensor = np.expand_dims(img_tensor, axis=0)

# Remember that the model was trained on inputs

# that were preprocessed in the following way:

img_tensor /= 255.

# Its shape is (1, 150, 150, 3)

print(img_tensor.shape)

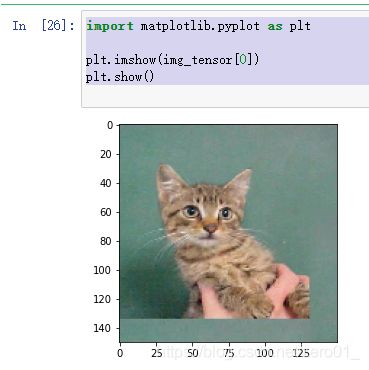

import matplotlib.pyplot as plt

plt.imshow(img_tensor[0])

plt.show()

过拟合和数据增强

过拟合:1)简单理解就是训练样本的得到的输出和期望输出基本一致,但是测试样本输出和测试样本的期望输出相差却很大 。2)为了得到一致假设而使假设变得过度复杂称为过拟合。想像某种学习算法产生了一个过拟合的分类器,这个分类器能够百分之百的正确分类样本数据(即再拿样本中的文档来给它,它绝对不会分错),但也就为了能够对样本完全正确的分类,使得它的构造如此精细复杂,规则如此严格,以至于任何与样本数据稍有不同的文档它全都认为不属于这个类别!

如果数据本身呈现二次型,故用一条二次曲线拟合会更好。但普通的PLS程序只提供线性方程供拟合之用。这就产生拟合不足即“欠拟合”现象,从而在预报时要造成偏差。如果我们用人工神经网络拟合,则因为三层人工神经网络拟合能力极强,有能力拟合任何函数。如果拟合彻底,就会连实验数据点分布不均匀,实验数据的误差等等“噪声”都按最小二乘判据拟合进数学模型。这当然也会造成预报的偏差。这就是“过拟合”的一个实例了。

数据增强主要用来防止过拟合,用于dataset较小的时候。

参考链接

https://blog.csdn.net/weixin_42807063/article/details/90230340?utm_medium=distribute.pc_relevant.none-task-blog-baidujs_title-3&spm=1001.2101.3001.4242