大数据数仓项目实战

一、数仓项目需求及架构设计

数据仓库是为企业所有级别的决策制定过程,提供所有类型数据支持的战略集合。

数据仓库是出于分析报告和决策支持目的而创建的,为需要业务智能的企业,提供指导业务流程改进、监控时间、成本、质量以及控制。

1、项目需求分析

- 数据采集平台搭建;

- 实现数据仓库分层的搭建;

- 实现数据清洗、聚合、计算等操作;

- 统计各指标,如统计通过各地址跳转注册的用户人数、统计各平台的用户人数、统计支付金额topN的用户;

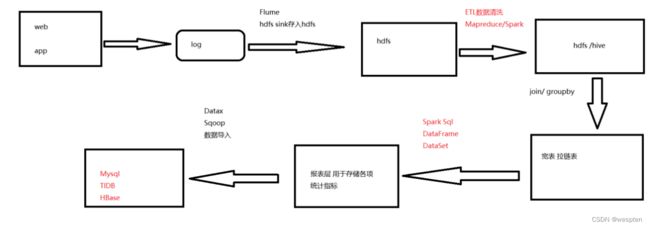

2、项目框架

1)技术选型

- 数据存储:Hdfs

- 数据处理:Hive、Spark

- 任务调度:Azkaban

2)流程设计

框架版本选型:

如何选择Apache/CDH/HDP版本?

Apache∶运维麻烦,组件间兼容性需要自己调研。(一般大厂使用,技术实力雄厚,有专业的运维人员)。

CDH∶国内使用最多的版本,但CM不开源,但其实对中、小公司使用来说没有影响(建议使用)。

HDP∶开源,可以进行二次开发,但是没有CDH稳定,国内使用较少。

二、用户注册模块需求

1、原始数据格式及字段含义

baseadlog 广告基础表原始json数据:

{

"adid": "0", //基础广告表广告id

"adname": "注册弹窗广告0", //广告详情名称

"dn": "webA" //网站分区

}basewebsitelog 网站基础表原始json数据:

{

"createtime": "2000-01-01",

"creator": "admin",

"delete": "0",

"dn": "webC", //网站分区

"siteid": "2", //网站id

"sitename": "114", //网站名称

"siteurl": "www.114.com/webC" //网站地址

}memberRegtype 用户跳转地址注册表:

{

"appkey": "-",

"appregurl": "http:www.webA.com/product/register/index.html", //注册时跳转地址

"bdp_uuid": "-",

"createtime": "2015-05-11",

"dt":"20190722", //日期分区

"dn": "webA", //网站分区

"domain": "-",

"isranreg": "-",

"regsource": "4", //所属平台 1.PC 2.MOBILE 3.APP 4.WECHAT

"uid": "0", //用户id

"websiteid": "0" //对应basewebsitelog 下的siteid网站

}pcentermempaymoneylog 用户支付金额表:

{

"dn": "webA", //网站分区

"paymoney": "162.54", //支付金额

"siteid": "1", //网站id对应 对应basewebsitelog 下的siteid网站

"dt":"20190722", //日期分区

"uid": "4376695", //用户id

"vip_id": "0" //对应pcentermemviplevellog vip_id

}pcentermemviplevellog用户vip等级基础表:

{

"discountval": "-",

"dn": "webA", //网站分区

"end_time": "2019-01-01", //vip结束时间

"last_modify_time": "2019-01-01",

"max_free": "-",

"min_free": "-",

"next_level": "-",

"operator": "update",

"start_time": "2015-02-07", //vip开始时间

"vip_id": "2", //vip id

"vip_level": "银卡" //vip级别名称

}memberlog 用户基本信息表:

{

"ad_id": "0", //广告id

"birthday": "1981-08-14", //出生日期

"dt":"20190722", //日期分区

"dn": "webA", //网站分区

"email": "[email protected]",

"fullname": "王69239", //用户姓名

"iconurl": "-",

"lastlogin": "-",

"mailaddr": "-",

"memberlevel": "6", //用户级别

"password": "123456", //密码

"paymoney": "-",

"phone": "13711235451", //手机号

"qq": "10000",

"register": "2016-08-15", //注册时间

"regupdatetime": "-",

"uid": "69239", //用户id

"unitname": "-",

"userip": "123.235.75.48", //ip地址

"zipcode": "-"

}其余字段为非统计项 直接使用默认值“-”存储即可。

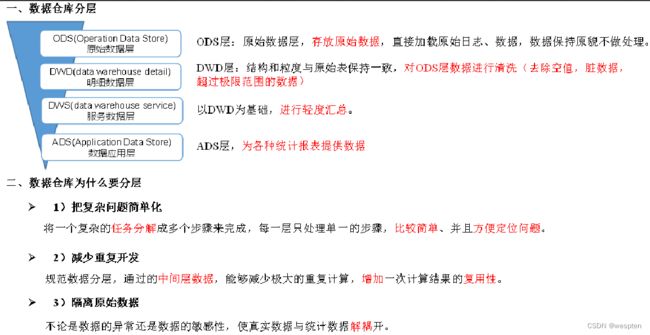

2、数据分层

在hadoop集群上创建 ods目录:

hadoop dfs -mkdir -p /user/yyds/ods在hive里分别建立三个库,dwd、dws、ads 分别用于存储etl清洗后的数据、宽表和拉链表数据、各报表层统计指标数据。

create database dwd;

create database dws;

create database ads; 各层级:

- ods:存放原始数据

- dwd:结构与原始表结构保持一致,对ods层数据进行清洗

- dws:以dwd为基础进行轻度汇总

- ads:报表层,为各种统计报表提供数据

各层建表语句:

用户模块:

create external table `dwd`.`dwd_member`(

uid int,

ad_id int,

birthday string,

email string,

fullname string,

iconurl string,

lastlogin string,

mailaddr string,

memberlevel string,

password string,

paymoney string,

phone string,

qq string,

register string,

regupdatetime string,

unitname string,

userip string,

zipcode string)

partitioned by(

dt string,

dn string

)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

STORED AS PARQUET TBLPROPERTIES('parquet.compression'='SNAPPY');

create external table `dwd`.`dwd_member_regtype`(

uid int,

appkey string,

appregurl string,

bdp_uuid string,

createtime timestamp,

isranreg string,

regsource string,

regsourcename string,

websiteid int)

partitioned by(

dt string,

dn string)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

STORED AS PARQUET TBLPROPERTIES('parquet.compression'='SNAPPY');

create external table `dwd`.`dwd_base_ad`(

adid int,

adname string)

partitioned by (

dn string)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

STORED AS PARQUET TBLPROPERTIES('parquet.compression'='SNAPPY');

create external table `dwd`.`dwd_base_website`(

siteid int,

sitename string,

siteurl string,

`delete` int,

createtime timestamp,

creator string)

partitioned by (

dn string)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

STORED AS PARQUET TBLPROPERTIES('parquet.compression'='SNAPPY');

create external table `dwd`.`dwd_pcentermempaymoney`(

uid int,

paymoney string,

siteid int,

vip_id int

)

partitioned by(

dt string,

dn string

)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

STORED AS PARQUET TBLPROPERTIES('parquet.compression'='SNAPPY');

create external table `dwd`.`dwd_vip_level`(

vip_id int,

vip_level string,

start_time timestamp,

end_time timestamp,

last_modify_time timestamp,

max_free string,

min_free string,

next_level string,

operator string

)partitioned by(

dn string

)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

STORED AS PARQUET TBLPROPERTIES('parquet.compression'='SNAPPY');

create external table `dws`.`dws_member`(

uid int,

ad_id int,

fullname string,

iconurl string,

lastlogin string,

mailaddr string,

memberlevel string,

password string,

paymoney string,

phone string,

qq string,

register string,

regupdatetime string,

unitname string,

userip string,

zipcode string,

appkey string,

appregurl string,

bdp_uuid string,

reg_createtime timestamp,

isranreg string,

regsource string,

regsourcename string,

adname string,

siteid int,

sitename string,

siteurl string,

site_delete string,

site_createtime string,

site_creator string,

vip_id int,

vip_level string,

vip_start_time timestamp,

vip_end_time timestamp,

vip_last_modify_time timestamp,

vip_max_free string,

vip_min_free string,

vip_next_level string,

vip_operator string

)partitioned by(

dt string,

dn string

)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

STORED AS PARQUET TBLPROPERTIES('parquet.compression'='SNAPPY');

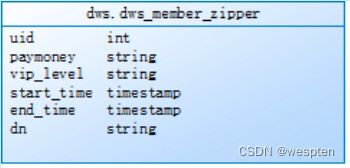

create external table `dws`.`dws_member_zipper`(

uid int,

paymoney string,

vip_level string,

start_time timestamp,

end_time timestamp

)partitioned by(

dn string

)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

STORED AS PARQUET TBLPROPERTIES('parquet.compression'='SNAPPY');

create external table `ads`.`ads_register_appregurlnum`(

appregurl string,

num int

)partitioned by(

dt string,

dn string

)ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t';

create external table `ads`.`ads_register_sitenamenum`(

sitename string,

num int

)partitioned by(

dt string,

dn string

)ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t';

create external table `ads`.`ads_register_regsourcenamenum`(

regsourcename string,

num int

)partitioned by(

dt string,

dn string

) ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t';

create external table `ads`.`ads_register_adnamenum`(

adname string,

num int

)partitioned by(

dt string,

dn string

)ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t';

create external table `ads`.`ads_register_memberlevelnum`(

memberlevel string,

num int

)partitioned by(

dt string,

dn string

)ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t';

create external table `ads`.`ads_register_viplevelnum`(

vip_level string,

num int

)partitioned by(

dt string,

dn string

)ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t';

create external table `ads`.`ads_register_top3memberpay`(

uid int,

memberlevel string,

register string,

appregurl string,

regsourcename string,

adname string,

sitename string,

vip_level string,

paymoney decimal(10,4),

rownum int

)partitioned by(

dt string,

dn string

)ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t';

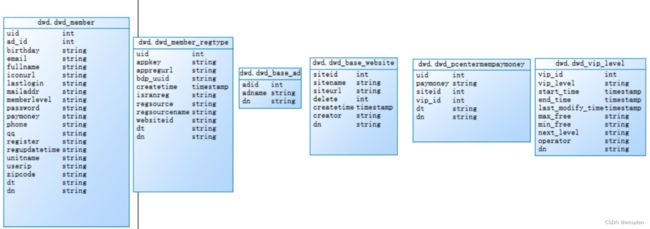

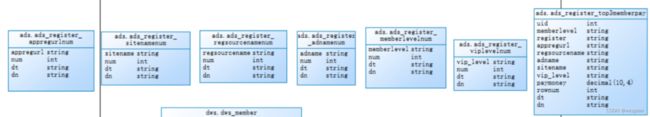

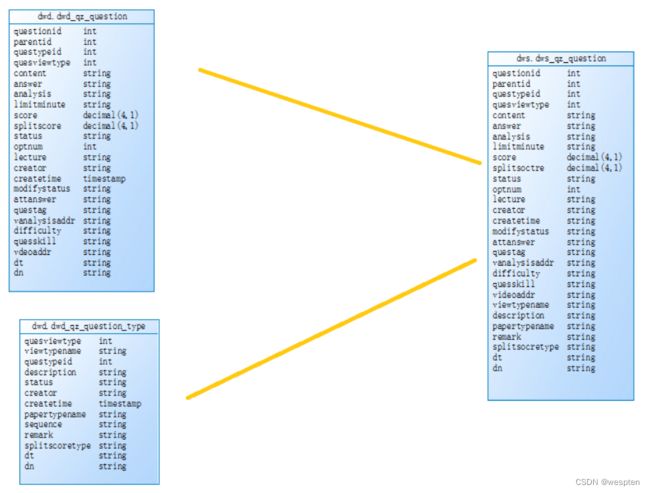

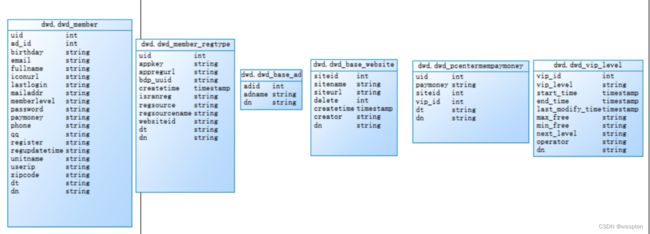

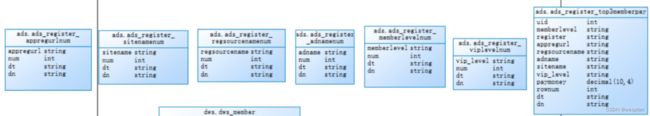

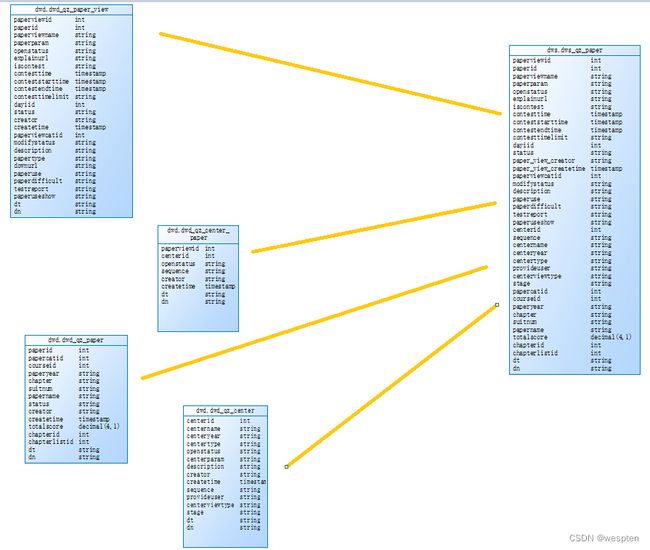

dwd层 6张基础表:

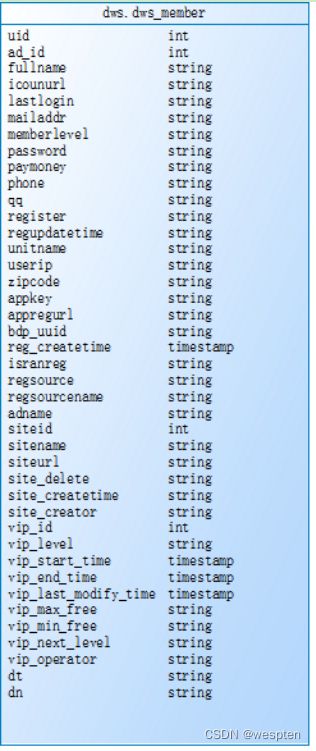

dws层宽表和拉链表:

宽表:

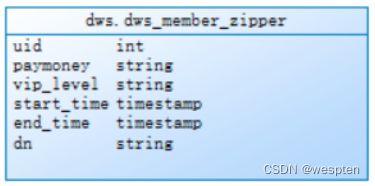

拉链表:

报表层各统计表:

3、模拟数据采集上传数据

模拟数据采集 将日志文件数据直接上传到hadoop集群上:

hadoop dfs -put baseadlog.log /user/yyds/ods/

hadoop dfs -put memberRegtype.log /user/yyds/ods/

hadoop dfs -put baswewebsite.log /user/yyds/ods/

hadoop dfs -put pcentermempaymoney.log /user/yyds/ods/

hadoop dfs -put pcenterMemViplevel.log /user/yyds/ods/

hadoop dfs -put member.log /user/yyds/ods/

4、ETL数据清洗

需求1:必须使用Spark进行数据清洗,对用户名、手机号、密码进行脱敏处理,并使用Spark将数据导入到dwd层hive表中

清洗规则 用户名:王XX 手机号:137*****789 密码直接替换成******

5、基于dwd层表合成dws层的宽表

需求2:对dwd层的6张表进行合并,生成一张宽表,先使用Spark Sql实现。有时间的同学需要使用DataFrame api实现功能,并对join进行优化。

6、拉链表

需求3:针对dws层宽表的支付金额(paymoney)和vip等级(vip_level)这两个会变动的字段生成一张拉链表,需要一天进行一次更新。

7、报表层各指标统计

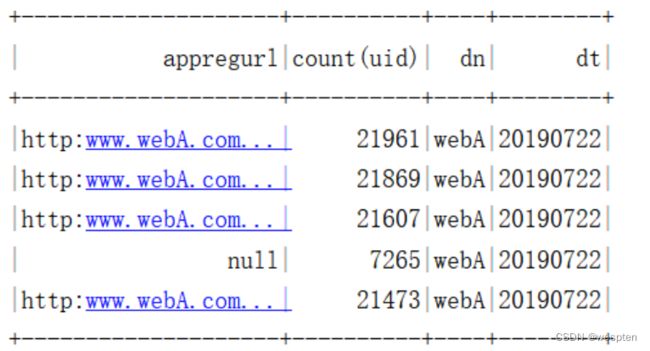

需求4:使用Spark DataFrame Api统计通过各注册跳转地址(appregurl)进行注册的用户数。

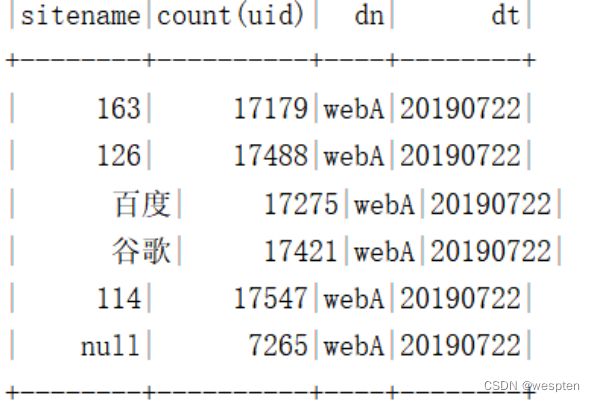

需求5:使用Spark DataFrame Api统计各所属网站(sitename)的用户数。

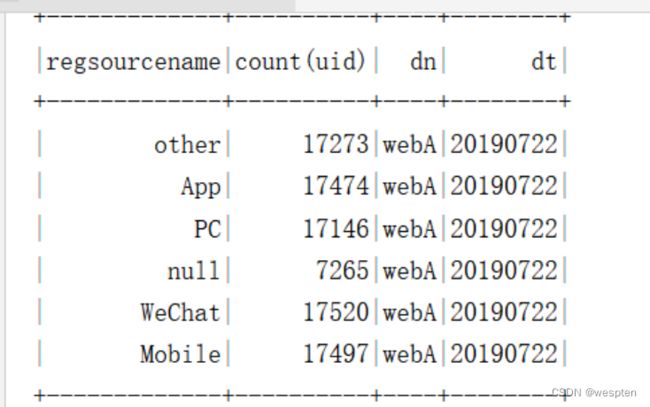

需求6:使用Spark DataFrame Api统计各所属平台的(regsourcename)用户数。

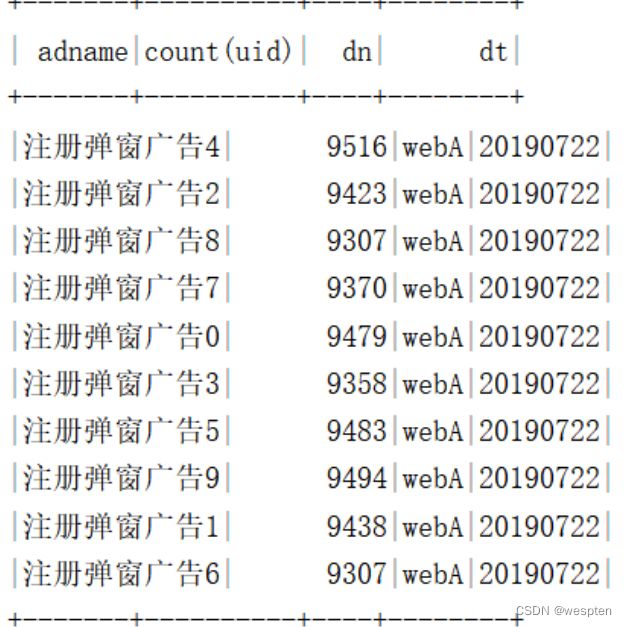

需求7:使用Spark DataFrame Api统计通过各广告跳转(adname)的用户数。

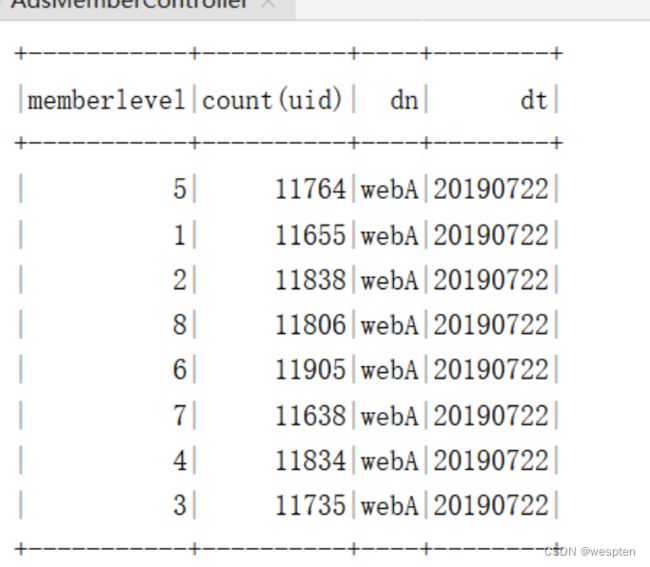

需求8:使用Spark DataFrame Api统计各用户级别(memberlevel)的用户数。

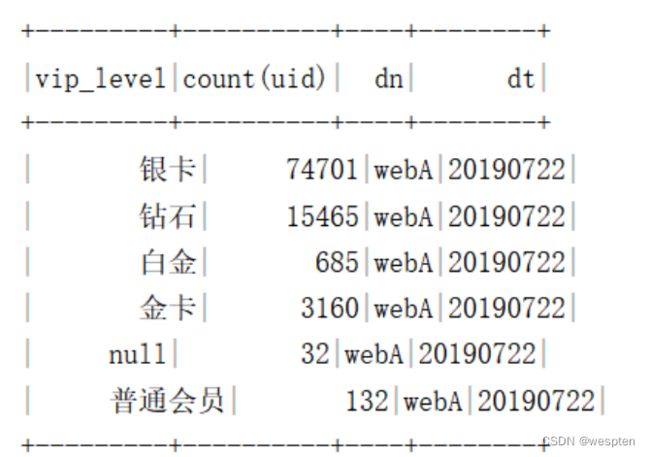

需求9:使用Spark DataFrame Api统计各vip等级人数。

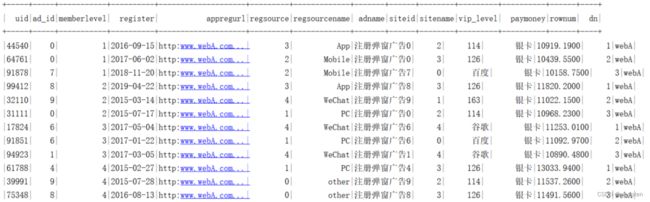

需求10:使用Spark DataFrame Api统计各分区网站、用户级别下(website、memberlevel)的top3用户。

三、用户做题需求模块

1、原始数据格式及字段含义

QzWebsite.log 做题网站日志数据:

{

"createtime": "2019-07-22 11:47:18", //创建时间

"creator": "admin", //创建者

"dn": "webA", //网站分区

"domain": "-",

"dt": "20190722", //日期分区

"multicastgateway": "-",

"multicastport": "-",

"multicastserver": "-",

"sequence": "-",

"siteid": 0, //网站id

"sitename": "sitename0", //网站名称

"status": "-",

"templateserver": "-"

}QzSiteCourse.log 网站课程日志数据:

{

"boardid": 64, //课程模板id

"coursechapter": "-",

"courseid": 66, //课程id

"createtime": "2019-07-22 11:43:32", //创建时间

"creator": "admin", //创建者

"dn": "webA", //网站分区

"dt": "20190722", //日期分区

"helpparperstatus": "-",

"sequence": "-",

"servertype": "-",

"showstatus": "-",

"sitecourseid": 2, //网站课程id

"sitecoursename": "sitecoursename2", //网站课程名称

"siteid": 77, //网站id

"status": "-"

}QzQuestionType.log 题目类型数据:

{

"createtime": "2019-07-22 10:42:47", //创建时间

"creator": "admin", //创建者

"description": "-",

"dn": "webA", //网站分区

"dt": "20190722", //日期分区

"papertypename": "-",

"questypeid": 0, //做题类型id

"quesviewtype": 0,

"remark": "-",

"sequence": "-",

"splitscoretype": "-",

"status": "-",

"viewtypename": "viewtypename0"

}QzQuestion.log 做题日志数据:

{

"analysis": "-",

"answer": "-",

"attanswer": "-",

"content": "-",

"createtime": "2019-07-22 11:33:46", //创建时间

"creator": "admin", //创建者

"difficulty": "-",

"dn": "webA", //网站分区

"dt": "20190722", //日期分区

"lecture": "-",

"limitminute": "-",

"modifystatus": "-",

"optnum": 8,

"parentid": 57,

"quesskill": "-",

"questag": "-",

"questionid": 0, //题id

"questypeid": 57, //题目类型id

"quesviewtype": 44,

"score": 24.124501582742543, //题的分数

"splitscore": 0.0,

"status": "-",

"vanalysisaddr": "-",

"vdeoaddr": "-"

}QzPointQuestion.log 做题知识点关联数据:

{

"createtime": "2019-07-22 09:16:46", //创建时间

"creator": "admin", //创建者

"dn": "webA", //网站分区

"dt": "20190722", //日期分区

"pointid": 0, //知识点id

"questionid": 0, //题id

"questype": 0

}QzPoint.log 知识点数据日志:

{

"chapter": "-", //所属章节

"chapterid": 0, //章节id

"courseid": 0, //课程id

"createtime": "2019-07-22 09:08:52", //创建时间

"creator": "admin", //创建者

"dn": "webA", //网站分区

"dt": "20190722", //日期分区

"excisenum": 73,

"modifystatus": "-",

"pointdescribe": "-",

"pointid": 0, //知识点id

"pointlevel": "9", //知识点级别

"pointlist": "-",

"pointlistid": 82, //知识点列表id

"pointname": "pointname0", //知识点名称

"pointnamelist": "-",

"pointyear": "2019", //知识点所属年份

"remid": "-",

"score": 83.86880766562163, //知识点分数

"sequece": "-",

"status": "-",

"thought": "-",

"typelist": "-"

}QzPaperView.log 试卷视图数据:

{

"contesttime": "2019-07-22 19:02:19",

"contesttimelimit": "-",

"createtime": "2019-07-22 19:02:19", //创建时间

"creator": "admin", //创建者

"dayiid": 94,

"description": "-",

"dn": "webA", //网站分区

"downurl": "-",

"dt": "20190722", //日期分区

"explainurl": "-",

"iscontest": "-",

"modifystatus": "-",

"openstatus": "-",

"paperdifficult": "-",

"paperid": 83, //试卷id

"paperparam": "-",

"papertype": "-",

"paperuse": "-",

"paperuseshow": "-",

"paperviewcatid": 1,

"paperviewid": 0, //试卷视图id

"paperviewname": "paperviewname0", //试卷视图名称

"testreport": "-"

}QzPaper.log 做题试卷日志数据:

{

"chapter": "-", //章节

"chapterid": 33, //章节id

"chapterlistid": 69, //所属章节列表id

"courseid": 72, //课程id

"createtime": "2019-07-22 19:14:27", //创建时间

"creator": "admin", //创建者

"dn": "webA", //网站分区

"dt": "20190722", //日期分区

"papercatid": 92,

"paperid": 0, //试卷id

"papername": "papername0", //试卷名称

"paperyear": "2019", //试卷所属年份

"status": "-",

"suitnum": "-",

"totalscore": 93.16710017696484 //试卷总分

}QzMemberPaperQuestion.log 学员做题详情数据:

{

"chapterid": 33, //章节id

"dn": "webA", //网站分区

"dt": "20190722", //日期分区

"istrue": "-",

"lasttime": "2019-07-22 11:02:30",

"majorid": 77, //主修id

"opertype": "-",

"paperid": 91,//试卷id

"paperviewid": 37, //试卷视图id

"question_answer": 1, //做题结果(0错误 1正确)

"questionid": 94, //题id

"score": 76.6941793631127, //学员成绩分数

"sitecourseid": 1, //网站课程id

"spendtime": 4823, //所用时间单位(秒)

"useranswer": "-",

"userid": 0 //用户id

}QzMajor.log 主修数据:

{

"businessid": 41, //主修行业id

"columm_sitetype": "-",

"createtime": "2019-07-22 11:10:20", //创建时间

"creator": "admin", //创建者

"dn": "webA", //网站分区

"dt": "20190722", //日期分区

"majorid": 1, //主修id

"majorname": "majorname1", //主修名称

"sequence": "-",

"shortname": "-",

"siteid": 24, //网站id

"status": "-"

}QzCourseEduSubject.log 课程辅导数据:

{

"courseeduid": 0, //课程辅导id

"courseid": 0, //课程id

"createtime": "2019-07-22 11:14:43", //创建时间

"creator": "admin", //创建者

"dn": "webA", //网站分区

"dt": "20190722", //日期分区

"edusubjectid": 44, //辅导科目id

"majorid": 38 //主修id

}QzCourse.log 题库课程数据:

{

"chapterlistid": 45, //章节列表id

"courseid": 0, //课程id

"coursename": "coursename0", //课程名称

"createtime": "2019-07-22 11:08:15", //创建时间

"creator": "admin", //创建者

"dn": "webA", //网站分区

"dt": "20190722", //日期分区

"isadvc": "-",

"majorid": 39, //主修id

"pointlistid": 92, //知识点列表id

"sequence": "8128f2c6-2430-42c7-9cb4-787e52da2d98",

"status": "-"

}QzChapterList.log 章节列表数据:

{

"chapterallnum": 0, //章节总个数

"chapterlistid": 0, //章节列表id

"chapterlistname": "chapterlistname0", //章节列表名称

"courseid": 71, //课程id

"createtime": "2019-07-22 16:22:19", //创建时间

"creator": "admin", //创建者

"dn": "webA", //网站分区

"dt": "20190722", //日期分区

"status": "-"

}QzChapter.log 章节数据:

{

"chapterid": 0, //章节id

"chapterlistid": 0, //所属章节列表id

"chaptername": "chaptername0", //章节名称

"chapternum": 10, //章节个数

"courseid": 61, //课程id

"createtime": "2019-07-22 16:37:24", //创建时间

"creator": "admin", //创建者

"dn": "webA", //网站分区

"dt": "20190722", //日期分区

"outchapterid": 0,

"sequence": "-",

"showstatus": "-",

"status": "-"

}QzCenterPaper.log 试卷主题关联数据:

{

"centerid": 55, //主题id

"createtime": "2019-07-22 10:48:30", //创建时间

"creator": "admin", //创建者

"dn": "webA", //网站分区

"dt": "20190722", //日期分区

"openstatus": "-",

"paperviewid": 2, //视图id

"sequence": "-"

}QzCenter.log 主题数据:

{

"centerid": 0, //主题id

"centername": "centername0", //主题名称

"centerparam": "-",

"centertype": "3", //主题类型

"centerviewtype": "-",

"centeryear": "2019", //主题年份

"createtime": "2019-07-22 19:13:09", //创建时间

"creator": "-",

"description": "-",

"dn": "webA",

"dt": "20190722", //日期分区

"openstatus": "1",

"provideuser": "-",

"sequence": "-",

"stage": "-"

}

Centerid:主题id centername:主题名称 centertype:主题类型 centeryear:主题年份

createtime:创建时间 dn:网站分区 dt:日期分区 QzBusiness.log 所属行业数据:

{

"businessid": 0, //行业id

"businessname": "bsname0", //行业名称

"createtime": "2019-07-22 10:40:54", //创建时间

"creator": "admin", //创建者

"dn": "webA", //网站分区

"dt": "20190722", //日期分区

"sequence": "-",

"siteid": 1, //所属网站id

"status": "-"

}2、模拟数据采集上传数据

日志上传命令:

hadoop dfs -put QzBusiness.log /user/yyds/ods/

hadoop dfs -put QzCenter.log /user/yyds/ods/

hadoop dfs -put QzCenterPaper.log /user/yyds/ods/

hadoop dfs -put QzChapter.log /user/yyds/ods/

hadoop dfs -put QzChapterList.log /user/yyds/ods/

hadoop dfs -put QzCourse.log /user/yyds/ods/

hadoop dfs -put QzCourseEduSubject.log /user/yyds/ods/

hadoop dfs -put QzMajor.log /user/yyds/ods/

hadoop dfs -put QzMemberPaperQuestion.log /user/yyds/ods/

hadoop dfs -put QzPaper.log /user/yyds/ods/

hadoop dfs -put QzPaperView.log /user/yyds/ods/

hadoop dfs -put QzPoint.log /user/yyds/ods/

hadoop dfs -put QzPointQuestion.log /user/yyds/ods/

hadoop dfs -put QzQuestion.log /user/yyds/ods/

hadoop dfs -put QzQuestionType.log /user/yyds/ods/

hadoop dfs -put QzSiteCourse.log /user/yyds/ods/

hadoop dfs -put QzWebsite.log /user/yyds/ods/

做题表建表语句:

create external table `dwd`.`dwd_qz_chapter`(

chapterid int ,

chapterlistid int ,

chaptername string ,

sequence string ,

showstatus string ,

creator string ,

createtime timestamp,

courseid int ,

chapternum int,

outchapterid int)

partitioned by(

dt string,

dn string)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

STORED AS PARQUET TBLPROPERTIES('parquet.compression'='SNAPPY');

create external table `dwd`.`dwd_qz_chapter_list`(

chapterlistid int ,

chapterlistname string ,

courseid int ,

chapterallnum int ,

sequence string,

status string,

creator string ,

createtime timestamp

)

partitioned by(

dt string,

dn string)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

STORED AS PARQUET TBLPROPERTIES('parquet.compression'='SNAPPY');

create external table `dwd`.`dwd_qz_point`(

pointid int ,

courseid int ,

pointname string ,

pointyear string ,

chapter string ,

creator string,

createtme timestamp,

status string,

modifystatus string,

excisenum int,

pointlistid int ,

chapterid int ,

sequece string,

pointdescribe string,

pointlevel string ,

typelist string,

score decimal(4,1),

thought string,

remid string,

pointnamelist string,

typelistids string,

pointlist string)

partitioned by(

dt string,

dn string)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

STORED AS PARQUET TBLPROPERTIES('parquet.compression'='SNAPPY');

create external table `dwd`.`dwd_qz_point_question`(

pointid int,

questionid int ,

questype int ,

creator string,

createtime string)

partitioned by(

dt string,

dn string)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

STORED AS PARQUET TBLPROPERTIES('parquet.compression'='SNAPPY');

create external table `dwd`.`dwd_qz_site_course`(

sitecourseid int,

siteid int ,

courseid int ,

sitecoursename string ,

coursechapter string ,

sequence string,

status string,

creator string,

createtime timestamp,

helppaperstatus string,

servertype string,

boardid int,

showstatus string)

partitioned by(

dt string,

dn string)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

STORED AS PARQUET TBLPROPERTIES('parquet.compression'='SNAPPY');

create external table `dwd`.`dwd_qz_course`(

courseid int ,

majorid int ,

coursename string ,

coursechapter string ,

sequence string,

isadvc string,

creator string,

createtime timestamp,

status string,

chapterlistid int,

pointlistid int

)

partitioned by(

dt string,

dn string)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

STORED AS PARQUET TBLPROPERTIES('parquet.compression'='SNAPPY');

create external table `dwd`.`dwd_qz_course_edusubject`(

courseeduid int ,

edusubjectid int ,

courseid int ,

creator string,

createtime timestamp,

majorid int

)

partitioned by(

dt string,

dn string)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

STORED AS PARQUET TBLPROPERTIES('parquet.compression'='SNAPPY');

create external table `dwd`.`dwd_qz_website`(

siteid int ,

sitename string ,

domain string,

sequence string,

multicastserver string,

templateserver string,

status string,

creator string,

createtime timestamp,

multicastgateway string,

multicastport string

)

partitioned by(

dt string,

dn string)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

STORED AS PARQUET TBLPROPERTIES('parquet.compression'='SNAPPY');

create external table `dwd`.`dwd_qz_major`(

majorid int ,

businessid int ,

siteid int ,

majorname string ,

shortname string ,

status string,

sequence string,

creator string,

createtime timestamp,

column_sitetype string

)

partitioned by(

dt string,

dn string)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

STORED AS PARQUET TBLPROPERTIES('parquet.compression'='SNAPPY');

create external table `dwd`.`dwd_qz_business`(

businessid int ,

businessname string,

sequence string,

status string,

creator string,

createtime timestamp,

siteid int

)

partitioned by(

dt string,

dn string)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

STORED AS PARQUET TBLPROPERTIES('parquet.compression'='SNAPPY');

create external table `dwd`.`dwd_qz_paper_view`(

paperviewid int ,

paperid int ,

paperviewname string,

paperparam string ,

openstatus string,

explainurl string,

iscontest string ,

contesttime timestamp,

conteststarttime timestamp ,

contestendtime timestamp ,

contesttimelimit string ,

dayiid int,

status string,

creator string,

createtime timestamp,

paperviewcatid int,

modifystatus string,

description string,

papertype string ,

downurl string ,

paperuse string,

paperdifficult string ,

testreport string,

paperuseshow string)

partitioned by(

dt string,

dn string)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

STORED AS PARQUET TBLPROPERTIES('parquet.compression'='SNAPPY');

create external table `dwd`.`dwd_qz_center_paper`(

paperviewid int,

centerid int,

openstatus string,

sequence string,

creator string,

createtime timestamp)

partitioned by(

dt string,

dn string)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

STORED AS PARQUET TBLPROPERTIES('parquet.compression'='SNAPPY');

create external table `dwd`.`dwd_qz_paper`(

paperid int,

papercatid int,

courseid int,

paperyear string,

chapter string,

suitnum string,

papername string,

status string,

creator string,

createtime timestamp,

totalscore decimal(4,1),

chapterid int,

chapterlistid int)

partitioned by(

dt string,

dn string)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

STORED AS PARQUET TBLPROPERTIES('parquet.compression'='SNAPPY');

create external table `dwd`.`dwd_qz_center`(

centerid int,

centername string,

centeryear string,

centertype string,

openstatus string,

centerparam string,

description string,

creator string,

createtime timestamp,

sequence string,

provideuser string,

centerviewtype string,

stage string)

partitioned by(

dt string,

dn string)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

STORED AS PARQUET TBLPROPERTIES('parquet.compression'='SNAPPY');

create external table `dwd`.`dwd_qz_question`(

questionid int,

parentid int,

questypeid int,

quesviewtype int,

content string,

answer string,

analysis string,

limitminute string,

score decimal(4,1),

splitscore decimal(4,1),

status string,

optnum int,

lecture string,

creator string,

createtime string,

modifystatus string,

attanswer string,

questag string,

vanalysisaddr string,

difficulty string,

quesskill string,

vdeoaddr string)

partitioned by(

dt string,

dn string)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

STORED AS PARQUET TBLPROPERTIES('parquet.compression'='SNAPPY');

create external table `dwd`.`dwd_qz_question_type`(

quesviewtype int,

viewtypename string,

questypeid int,

description string,

status string,

creator string,

createtime timestamp,

papertypename string,

sequence string,

remark string,

splitscoretype string

)

partitioned by(

dt string,

dn string)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

STORED AS PARQUET TBLPROPERTIES('parquet.compression'='SNAPPY');

create external table `dwd`.`dwd_qz_member_paper_question`(

userid int,

paperviewid int,

chapterid int,

sitecourseid int,

questionid int,

majorid int,

useranswer string,

istrue string,

lasttime timestamp,

opertype string,

paperid int,

spendtime int,

score decimal(4,1),

question_answer int

)

partitioned by(

dt string,

dn string)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

STORED AS PARQUET TBLPROPERTIES('parquet.compression'='SNAPPY');

create external table `dws`.`dws_qz_chapter`(

chapterid int,

chapterlistid int,

chaptername string,

sequence string,

showstatus string,

status string,

chapter_creator string,

chapter_createtime string,

chapter_courseid int,

chapternum int,

chapterallnum int,

outchapterid int,

chapterlistname string,

pointid int,

questionid int,

questype int,

pointname string,

pointyear string,

chapter string,

excisenum int,

pointlistid int,

pointdescribe string,

pointlevel string,

typelist string,

point_score decimal(4,1),

thought string,

remid string,

pointnamelist string,

typelistids string,

pointlist string)

partitioned by(

dt string,

dn string)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

STORED AS PARQUET TBLPROPERTIES('parquet.compression'='SNAPPY');

create external table `dws`.`dws_qz_course`(

sitecourseid int,

siteid int,

courseid int,

sitecoursename string,

coursechapter string,

sequence string,

status string,

sitecourse_creator string,

sitecourse_createtime string,

helppaperstatus string,

servertype string,

boardid int,

showstatus string,

majorid int,

coursename string,

isadvc string,

chapterlistid int,

pointlistid int,

courseeduid int,

edusubjectid int

)

partitioned by(

dt string,

dn string)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

STORED AS PARQUET TBLPROPERTIES('parquet.compression'='SNAPPY');

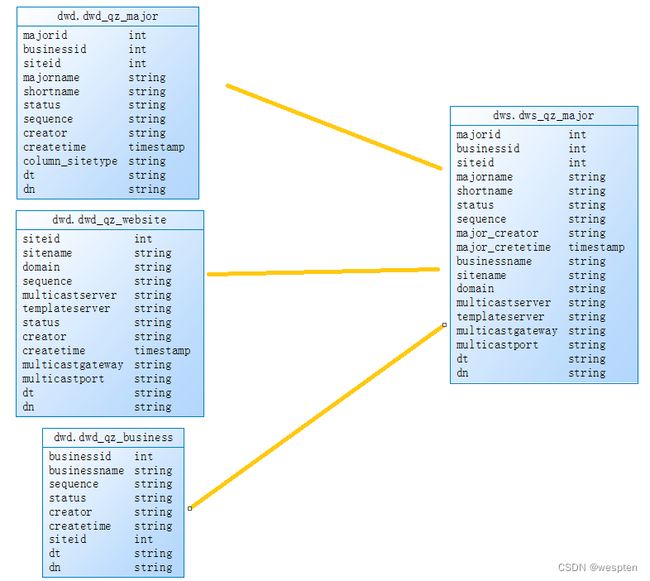

create external table `dws`.`dws_qz_major`(

majorid int,

businessid int,

siteid int,

majorname string,

shortname string,

status string,

sequence string,

major_creator string,

major_createtime timestamp,

businessname string,

sitename string,

domain string,

multicastserver string,

templateserver string,

multicastgateway string,

multicastport string)

partitioned by(

dt string,

dn string)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

STORED AS PARQUET TBLPROPERTIES('parquet.compression'='SNAPPY');

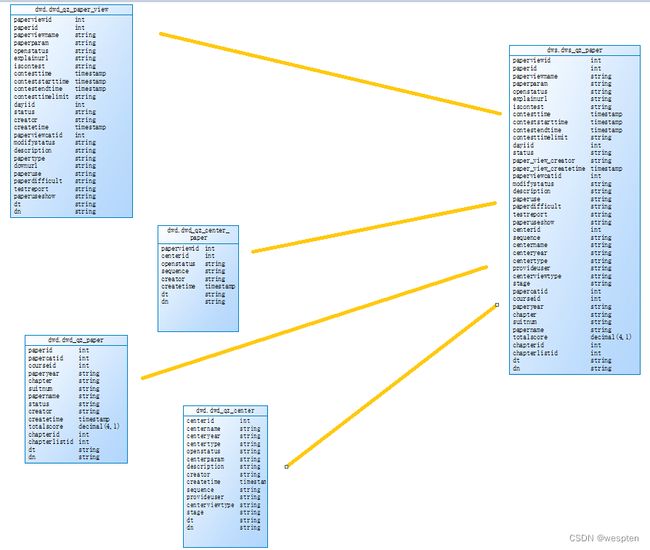

create external table `dws`.`dws_qz_paper`(

paperviewid int,

paperid int,

paperviewname string,

paperparam string,

openstatus string,

explainurl string,

iscontest string,

contesttime timestamp,

conteststarttime timestamp,

contestendtime timestamp,

contesttimelimit string,

dayiid int,

status string,

paper_view_creator string,

paper_view_createtime timestamp,

paperviewcatid int,

modifystatus string,

description string,

paperuse string,

paperdifficult string,

testreport string,

paperuseshow string,

centerid int,

sequence string,

centername string,

centeryear string,

centertype string,

provideuser string,

centerviewtype string,

stage string,

papercatid int,

courseid int,

paperyear string,

suitnum string,

papername string,

totalscore decimal(4,1),

chapterid int,

chapterlistid int)

partitioned by(

dt string,

dn string)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

STORED AS PARQUET TBLPROPERTIES('parquet.compression'='SNAPPY');

create external table `dws`.`dws_qz_question`(

questionid int,

parentid int,

questypeid int,

quesviewtype int,

content string,

answer string,

analysis string,

limitminute string,

score decimal(4,1),

splitscore decimal(4,1),

status string,

optnum int,

lecture string,

creator string,

createtime timestamp,

modifystatus string,

attanswer string,

questag string,

vanalysisaddr string,

difficulty string,

quesskill string,

vdeoaddr string,

viewtypename string,

description string,

papertypename string,

splitscoretype string)

partitioned by(

dt string,

dn string)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

STORED AS PARQUET TBLPROPERTIES('parquet.compression'='SNAPPY');

create table `dws`.`dws_user_paper_detail`(

`userid` int,

`courseid` int,

`questionid` int,

`useranswer` string,

`istrue` string,

`lasttime` string,

`opertype` string,

`paperid` int,

`spendtime` int,

`chapterid` int,

`chaptername` string,

`chapternum` int,

`chapterallnum` int,

`outchapterid` int,

`chapterlistname` string,

`pointid` int,

`questype` int,

`pointyear` string,

`chapter` string,

`pointname` string,

`excisenum` int,

`pointdescribe` string,

`pointlevel` string,

`typelist` string,

`point_score` decimal(4,1),

`thought` string,

`remid` string,

`pointnamelist` string,

`typelistids` string,

`pointlist` string,

`sitecourseid` int,

`siteid` int,

`sitecoursename` string,

`coursechapter` string,

`course_sequence` string,

`course_stauts` string,

`course_creator` string,

`course_createtime` timestamp,

`servertype` string,

`helppaperstatus` string,

`boardid` int,

`showstatus` string,

`majorid` int,

`coursename` string,

`isadvc` string,

`chapterlistid` int,

`pointlistid` int,

`courseeduid` int,

`edusubjectid` int,

`businessid` int,

`majorname` string,

`shortname` string,

`major_status` string,

`major_sequence` string,

`major_creator` string,

`major_createtime` timestamp,

`businessname` string,

`sitename` string,

`domain` string,

`multicastserver` string,

`templateserver` string,

`multicastgatway` string,

`multicastport` string,

`paperviewid` int,

`paperviewname` string,

`paperparam` string,

`openstatus` string,

`explainurl` string,

`iscontest` string,

`contesttime` timestamp,

`conteststarttime` timestamp,

`contestendtime` timestamp,

`contesttimelimit` string,

`dayiid` int,

`paper_status` string,

`paper_view_creator` string,

`paper_view_createtime` timestamp,

`paperviewcatid` int,

`modifystatus` string,

`description` string,

`paperuse` string,

`testreport` string,

`centerid` int,

`paper_sequence` string,

`centername` string,

`centeryear` string,

`centertype` string,

`provideuser` string,

`centerviewtype` string,

`paper_stage` string,

`papercatid` int,

`paperyear` string,

`suitnum` string,

`papername` string,

`totalscore` decimal(4,1),

`question_parentid` int,

`questypeid` int,

`quesviewtype` int,

`question_content` string,

`question_answer` string,

`question_analysis` string,

`question_limitminute` string,

`score` decimal(4,1),

`splitscore` decimal(4,1),

`lecture` string,

`question_creator` string,

`question_createtime` timestamp,

`question_modifystatus` string,

`question_attanswer` string,

`question_questag` string,

`question_vanalysisaddr` string,

`question_difficulty` string,

`quesskill` string,

`vdeoaddr` string,

`question_description` string,

`question_splitscoretype` string,

`user_question_answer` int

)

partitioned by(

dt string,

dn string)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

STORED AS PARQUET TBLPROPERTIES('parquet.compression'='SNAPPY');

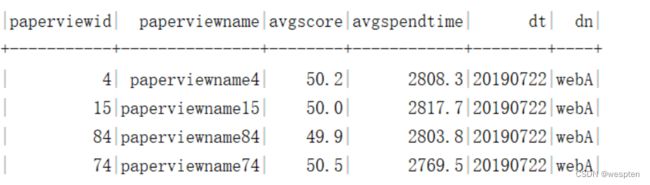

create external table ads.ads_paper_avgtimeandscore(

paperviewid int,

paperviewname string,

avgscore decimal(4,1),

avgspendtime decimal(10,1))

partitioned by(

dt string,

dn string)

row format delimited fields terminated by '\t';

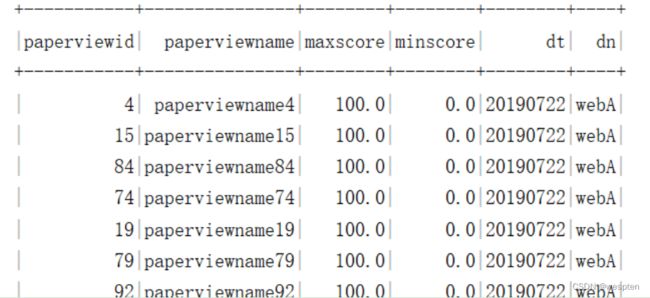

create external table ads.ads_paper_maxdetail(

paperviewid int,

paperviewname string,

maxscore decimal(4,1),

minscore decimal(4,1))

partitioned by(

dt string,

dn string)

row format delimited fields terminated by '\t';

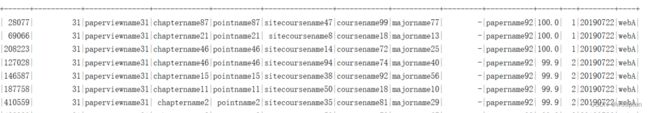

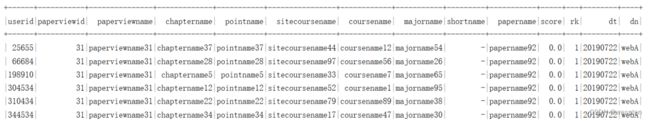

create external table ads.ads_top3_userdetail(

userid int,

paperviewid int,

paperviewname string,

chaptername string,

pointname string,

sitecoursename string,

coursename string,

majorname string,

shortname string,

papername string,

score decimal(4,1),

rk int)

partitioned by(

dt string,

dn string)

row format delimited fields terminated by '\t';

create external table ads.ads_low3_userdetail(

userid int,

paperviewid int,

paperviewname string,

chaptername string,

pointname string,

sitecoursename string,

coursename string,

majorname string,

shortname string,

papername string,

score decimal(4,1),

rk int)

partitioned by(

dt string,

dn string)

row format delimited fields terminated by '\t';

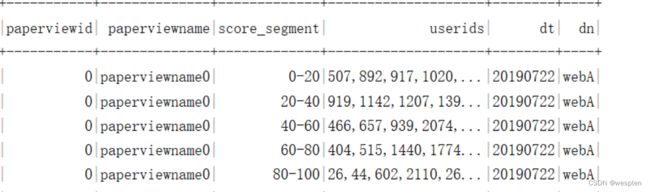

create external table ads.ads_paper_scoresegment_user(

paperviewid int,

paperviewname string,

score_segment string,

userids string)

partitioned by(

dt string,

dn string)

row format delimited fields terminated by '\t';

create external table ads.ads_user_paper_detail(

paperviewid int,

paperviewname string,

unpasscount int,

passcount int,

rate decimal(4,2))

partitioned by(

dt string,

dn string)

row format delimited fields terminated by '\t';

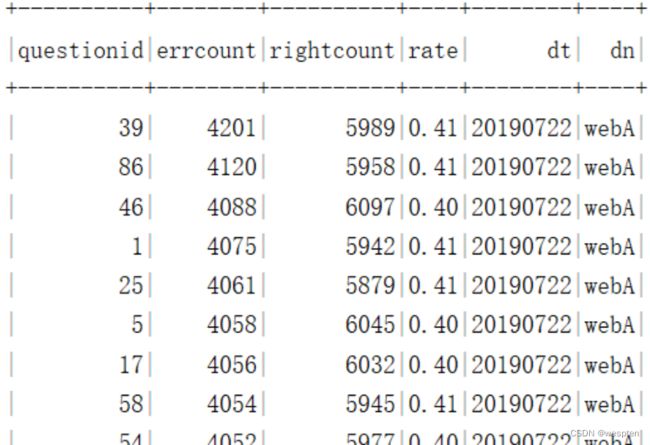

create external table ads.ads_user_question_detail(

questionid int,

errcount int,

rightcount int,

rate decimal(4,2))

partitioned by(

dt string,

dn string)

row format delimited fields terminated by '\t';3、解析数据

需求1:使用spark解析ods层数据,将数据存入到对应的hive表中,要求对所有score 分数字段进行保留1位小数并且四舍五入。

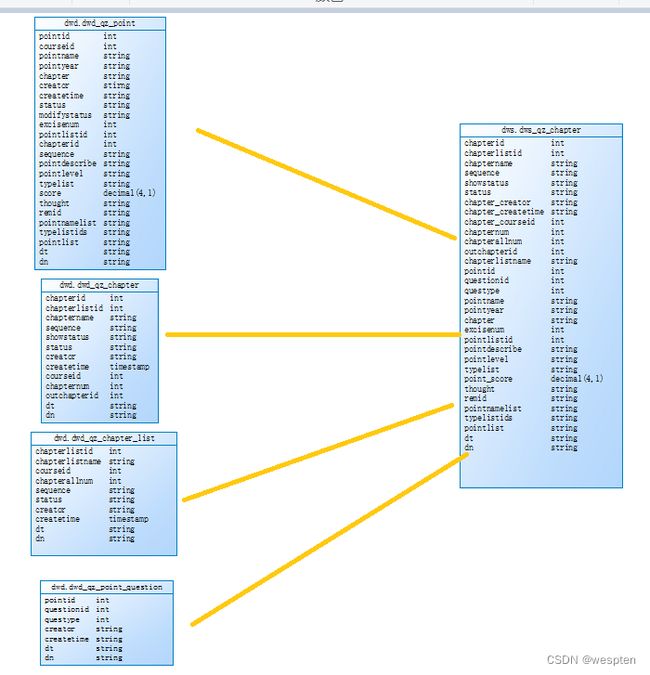

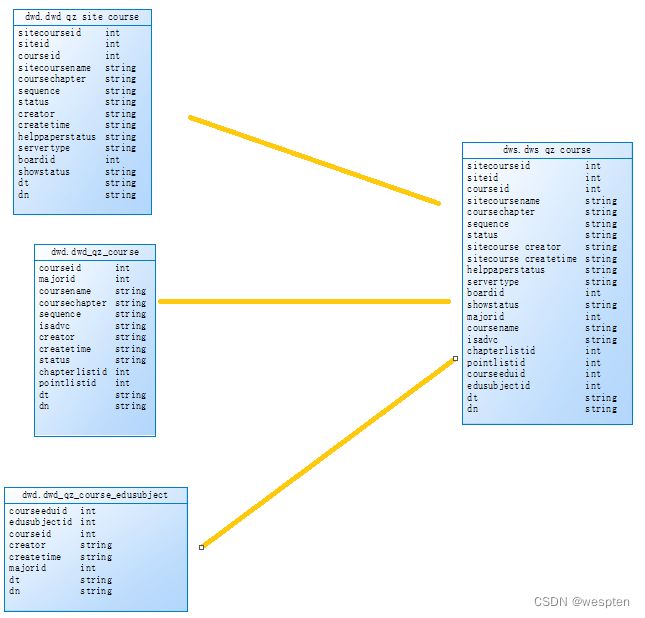

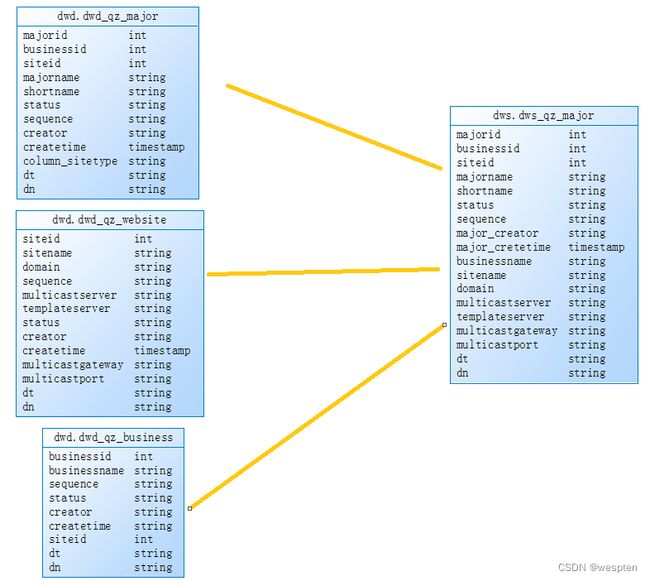

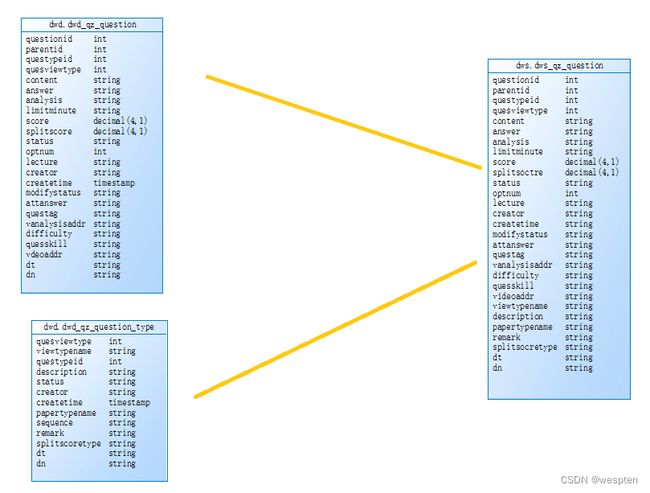

4、维度退化

需求2:基于dwd层基础表数据,需要对表进行维度退化进行表聚合,聚合成dws.dws_qz_chapter(章节维度表),dws.dws_qz_course(课程维度表),dws.dws_qz_major(主修维度表),dws.dws_qz_paper(试卷维度表),dws.dws_qz_question(题目维度表),使用spark sql和dataframe api操作

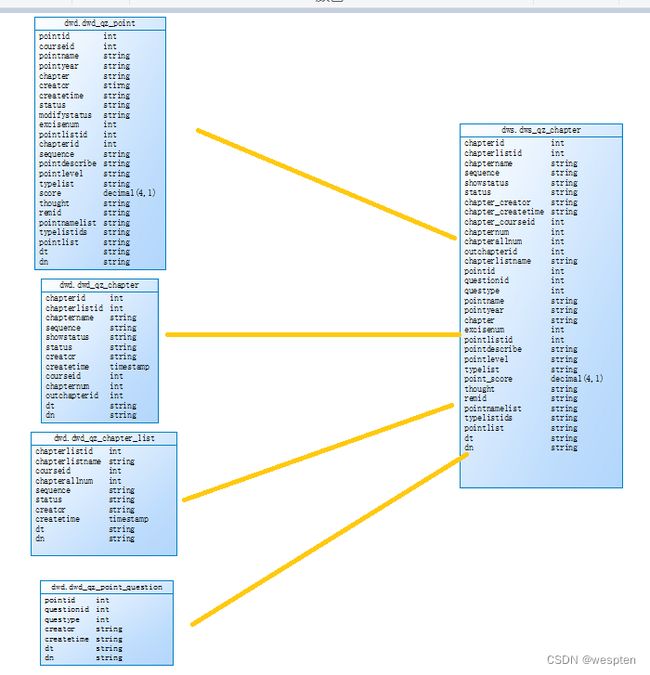

dws.dws_qz_chapte : 4张表join dwd.dwd_qz_chapter inner join dwd.qz_chapter_list join条件:chapterlistid和dn ,inner join dwd.dwd_qz_point join条件:chapterid和dn, inner join dwd.dwd_qz_point_question join条件:pointid和dn

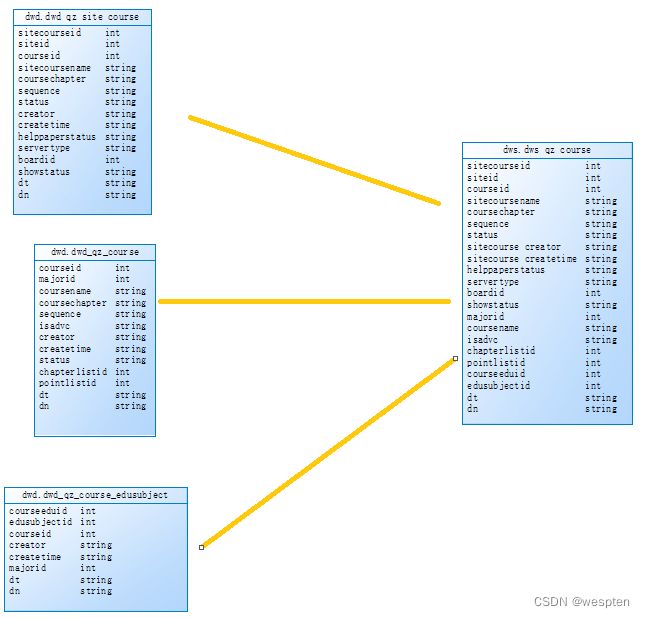

dws.dws_qz_course:3张表join dwd.dwd_qz_site_course inner join dwd.qz_course join条件:courseid和dn , inner join dwd.qz_course_edusubject join条件:courseid和dn

dws.dws_qz_major:3张表join dwd.dwd_qz_major inner join dwd.dwd_qz_website join条件:siteid和dn , inner join dwd.dwd_qz_business join条件:businessid和dn

dws.dws_qz_paper: 4张表join qz_paperview left join qz_center join 条件:paperviewid和dn,

left join qz_center join 条件:centerid和dn, inner join qz_paper join条件:paperid和dn

dws.dws_qz_question:2表join qz_quesiton inner join qz_questiontype join条件:

questypeid 和dn

5、宽表合成

需求3:基于dws.dws_qz_chapter、dws.dws_qz_course、dws.dws_qz_major、dws.dws_qz_paper、dws.dws_qz_question、dwd.dwd_qz_member_paper_question 合成宽表dw.user_paper_detail,使用spark sql和dataframe api操作

dws.user_paper_detail:dwd_qz_member_paper_question inner join dws_qz_chapter join条件:chapterid 和dn ,inner join dws_qz_course join条件:sitecourseid和dn , inner join dws_qz_major join条件majorid和dn, inner join dws_qz_paper 条件paperviewid和dn , inner join dws_qz_question 条件questionid和

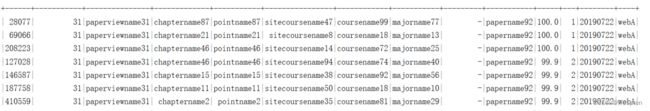

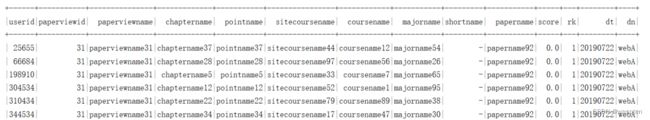

6、报表层各指标统计

需求4:基于宽表统计各试卷平均耗时、平均分,先使用Spark Sql 完成指标统计,再使用Spark DataFrame Api。

需求5:统计各试卷最高分、最低分,先使用Spark Sql 完成指标统计,再使用Spark DataFrame Api。

需求6:按试卷分组统计每份试卷的用Spa前三用户详情,先使rk Sql 完成指标统计,再使用Spark DataFrame Api。

需求7:按试卷分组统计每份试卷的倒数前三的用户详情,先使用Spark Sql 完成指标统计,再使用Spark DataFrame Api。

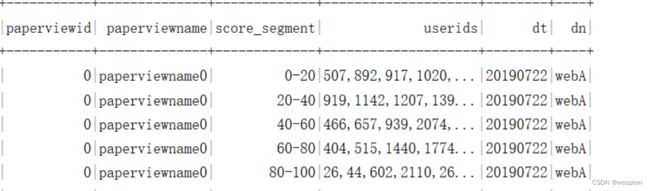

需求8:统计各试卷各分段的用户id,分段有0-20,20-40,40-60,60-80,80-100。

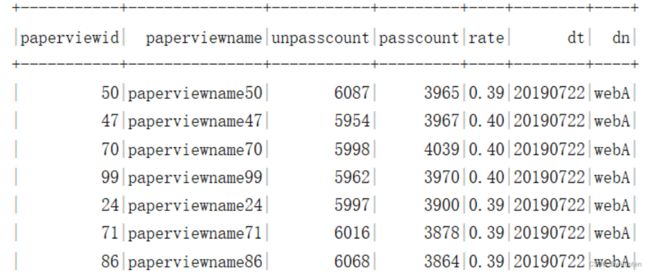

需求9:统计试卷未及格的人数,及格的人数,试卷的及格率 及格分数60。

![]()

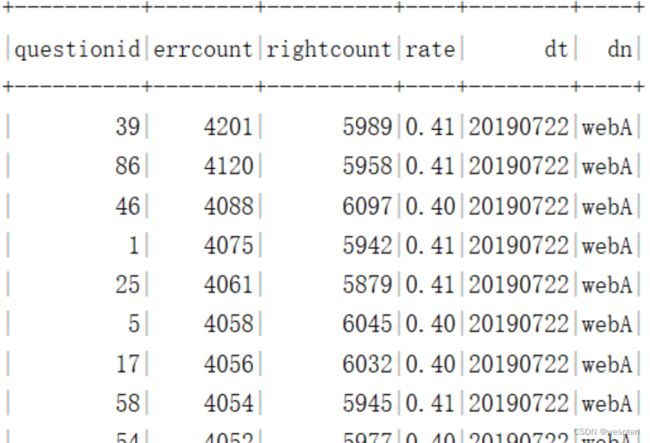

需求10:统计各题的错误数,正确数,错题率。

7、将数据导入mysql

需求11:统计指标数据导入到ads层后,通过datax将ads层数据导入到mysql中。

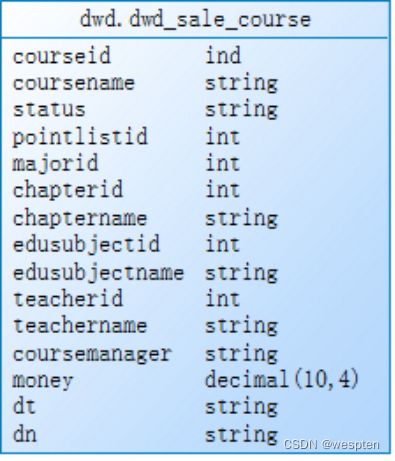

四、售课模块

1、原始数据格式及字段含义

salecourse.log 售课基本数据:

{

"chapterid": 2, //章节id

"chaptername": "chaptername2", //章节名称

"courseid": 0, //课程id

"coursemanager": "admin", //课程管理员

"coursename": "coursename0", //课程名称

"dn": "webA", //网站分区

"dt": "20190722", //日期分区

"edusubjectid": 7, //辅导科目id

"edusubjectname": "edusubjectname7", //辅导科目名称

"majorid": 9, //主修id

"majorname": "majorname9", //主修名称

"money": "100", //课程价格

"pointlistid": 9, //知识点列表id

"status": "-", //状态

"teacherid": 8, //老师id

"teachername": "teachername8" //老师名称

}courseshoppingcart.log 课程购物车信息:

{

"courseid": 9830, //课程id

"coursename": "coursename9830", //课程名称

"createtime": "2019-07-22 00:00:00", //创建时间

"discount": "8", //折扣

"dn": "webA", //网站分区

"dt": "20190722", //日期分区

"orderid": "odid-0", //订单id

"sellmoney": "80" //购物车金额

}coursepay.log 课程支付订单信息:

{

"createitme": "2019-07-22 00:00:00", //创建时间

"discount": "8", //支付折扣

"dn": "webA", //网站分区

"dt": "20190722", //日期分区

"orderid": "odid-0", //订单id

"paymoney": "80" //支付金额

}2、模拟数据采集上传数据

Hadoop dfs -put salecourse.log /user/yyds/ods

Hadoop dfs -put coursepay.log /user/yyds/ods

Hadoop dfs -put courseshoppingcart.log /user/yyds/ods3、解析数据导入到对应hive表中

![]()

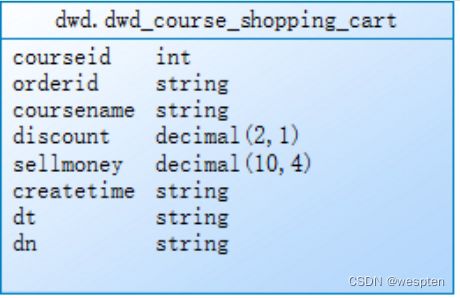

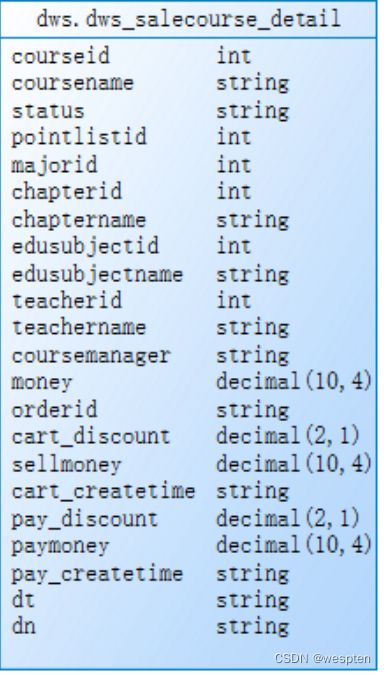

4、关联join聚合表

dwd.dwd_sale_course 与dwd.dwd_course_shopping_cart join条件:courseid、dn、dt

dwd.dwd_course_shopping_cart 与dwd.dwd_course_pay join条件:orderid、dn、dt

不允许丢数据,关联不上的字段为null,join之后导入dws层的表。

def etlBaseWebSiteLog(ssc: SparkContext, sparkSession: SparkSession) = {

import sparkSession.implicits._ //隐式转换

ssc.textFile("/user/yyds/ods/baswewebsite.log").mapPartitions(partition => {

partition.map(item => {

val jsonObject = ParseJsonData.getJsonData(item)

val siteid = jsonObject.getIntValue("siteid")

val sitename = jsonObject.getString("sitename")

val siteurl = jsonObject.getString("siteurl")

val delete = jsonObject.getIntValue("delete")

val createtime = jsonObject.getString("createtime")

val creator = jsonObject.getString("creator")

val dn = jsonObject.getString("dn")

(siteid, sitename, siteurl, delete, createtime, creator, dn)

})

}).toDF().coalesce(1).write.mode(SaveMode.Overwrite).insertInto("dwd.dwd_base_website")

}五、数仓环境准备

1、为什么要分层

2、数仓命名规范

- ODS层命名为ods

- DWD层命名为dwd

- DWS层命名为dws

- ADS层命名为ads

- 临时表数据库命名为xxx_tmp

- 备份数据数据库命名为xxx_bak

3、Hive&MySQL安装

1)Hive安装部署

(1)把apache-hive-1.2.1-bin.tar.gz上传到linux的/opt/software目录下

(2)解压apache-hive-1.2.1-bin.tar.gz到/opt/module/目录下面

[yyds@hadoop102 software]$ tar -zxvf apache-hive-1.2.1-bin.tar.gz -C /opt/module/(3)修改apache-hive-1.2.1-bin.tar.gz的名称为hive

[yyds@hadoop102 module]$ mv apache-hive-1.2.1-bin/ hive(4)修改/opt/module/hive/conf目录下的hive-env.sh.template名称为hive-env.sh

[yyds@hadoop102 conf]$ mv hive-env.sh.template hive-env.sh(5)配置hive-env.sh文件

- 配置HADOOP_HOME路径

export HADOOP_HOME=/opt/module/hadoop-2.7.2- 配置HIVE_CONF_DIR路径

export HIVE_CONF_DIR=/opt/module/hive/conf2)Hadoop集群配置

(1)必须启动HDFS和YARN

[yyds@hadoop102 hadoop-2.7.2]$ sbin/start-dfs.sh

[yyds@hadoop103 hadoop-2.7.2]$ sbin/start-yarn.sh(2)在HDFS上创建/tmp和/user/hive/warehouse两个目录并修改他们的同组权限可写

[yyds@hadoop102 hadoop-2.7.2]$ bin/hadoop fs -mkdir /tmp

[yyds@hadoop102 hadoop-2.7.2]$ bin/hadoop fs -mkdir -p /user/hive/warehouse

[yyds@hadoop102 hadoop-2.7.2]$ bin/hadoop fs -chmod g+w /tmp

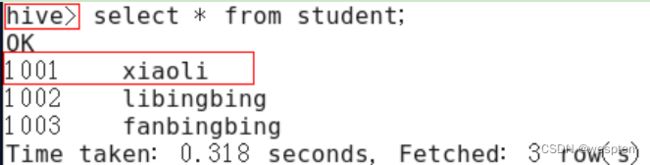

[yyds@hadoop102 hadoop-2.7.2]$ bin/hadoop fs -chmod g+w /user/hive/warehouse3)Hive基本操作

(1)启动hive

[yyds@hadoop102 hive]$ bin/hive(2)查看数据库

hive> show databases;(3)打开默认数据库

hive> use default;(4)显示default数据库中的表

hive> show tables;(5)创建一张表

hive> create table student(id int, name string);(6)显示数据库中有几张表

hive> show tables;(7)查看表的结构

hive> desc student;(8)向表中插入数据

hive> insert into student values(1000,"ss");(9)查询表中数据

hive> select * from student;(10)退出hive

hive> quit;4)MySql安装包准备

查看mysql是否安装,如果安装了,卸载mysql:

(1)查看

[root@hadoop102 桌面]# rpm -qa|grep mysql

mysql-libs-5.1.73-7.el6.x86_64(2)卸载

[root@hadoop102 桌面]# rpm -e --nodeps mysql-libs-5.1.73-7.el6.x86_64解压mysql-libs.zip文件到当前目录:

[root@hadoop102 software]# unzip mysql-libs.zip

[root@hadoop102 software]# ls

mysql-libs.zip

mysql-libs进入到mysql-libs文件夹下:

[root@hadoop102 mysql-libs]# ll

总用量 76048

-rw-r--r--. 1 root root 18509960 3月 26 2015 MySQL-client-5.6.24-1.el6.x86_64.rpm

-rw-r--r--. 1 root root 3575135 12月 1 2013 mysql-connector-java-5.1.27.tar.gz

-rw-r--r--. 1 root root 55782196 3月 26 2015 MySQL-server-5.6.24-1.el6.x86_64.rpm5)安装MySql服务器

安装mysql服务端:

[root@hadoop102 mysql-libs]# rpm -ivh MySQL-server-5.6.24-1.el6.x86_64.rpm查看产生的随机密码:

[root@hadoop102 mysql-libs]# cat /root/.mysql_secret

OEXaQuS8IWkG19Xs查看mysql状态:

[root@hadoop102 mysql-libs]# service mysql status启动mysql:

[root@hadoop102 mysql-libs]# service mysql start6)安装MySql客户端

安装mysql客户端:

[root@hadoop102 mysql-libs]# rpm -ivh MySQL-client-5.6.24-1.el6.x86_64.rpm链接mysql:

[root@hadoop102 mysql-libs]# mysql -uroot -pOEXaQuS8IWkG19Xs修改密码:

mysql>SET PASSWORD=PASSWORD('000000');退出mysql:

mysql>exit7)MySql中user表中主机配置

配置只要是root用户+密码,在任何主机上都能登录MySQL数据库。

进入mysql:

[root@hadoop102 mysql-libs]# mysql -uroot -p000000显示数据库:

mysql>show databases;使用mysql数据库:

mysql>use mysql;展示mysql数据库中的所有表:

mysql>show tables;展示user表的结构:

mysql>desc user;查询user表:

mysql>select User, Host, Password from user;修改user表,把Host表内容修改为%:

mysql>update user set host='%' where host='localhost';删除root用户的其他host:

mysql>

delete from user where Host='hadoop102';

delete from user where Host='127.0.0.1';

delete from user where Host='::1';刷新:

mysql>flush privileges;退出:

mysql>quit;4、Hive元数据配置到MySql

驱动拷贝:

在/opt/software/mysql-libs目录下解压mysql-connector-java-5.1.27.tar.gz驱动包。

[root@hadoop102 mysql-libs]# tar -zxvf mysql-connector-java-5.1.27.tar.gz拷贝/opt/software/mysql-libs/mysql-connector-java-5.1.27目录下的mysql-connector-java-5.1.27-bin.jar到/opt/module/hive/lib/:

[root@hadoop102 mysql-connector-java-5.1.27]# cp mysql-connector-java-5.1.27-bin.jar

/opt/module/hive/lib/配置Metastore到MySql:

在/opt/module/hive/conf目录下创建一个hive-site.xml。

[yyds@hadoop102 conf]$ vi hive-site.xml根据官方文档配置参数,拷贝数据到hive-site.xml文件中:

https://cwiki.apache.org/confluence/display/Hive/AdminManual+MetastoreAdmin

javax.jdo.option.ConnectionURL

jdbc:mysql://hadoop102:3306/metastore?createDatabaseIfNotExist=true

JDBC connect string for a JDBC metastore

javax.jdo.option.ConnectionDriverName

com.mysql.jdbc.Driver

Driver class name for a JDBC metastore

javax.jdo.option.ConnectionUserName

root

username to use against metastore database

javax.jdo.option.ConnectionPassword

000000

password to use against metastore database

配置完毕后,如果启动hive异常,可以重新启动虚拟机。(重启后,别忘了启动hadoop集群)。

5、Hive常见属性配置

1)查询后信息显示配置

在hive-site.xml文件中添加如下配置信息,就可以实现显示当前数据库,以及查询表的头信息配置。

hive.cli.print.header

true

hive.cli.print.current.db

true

重新启动hive,对比配置前后差异。

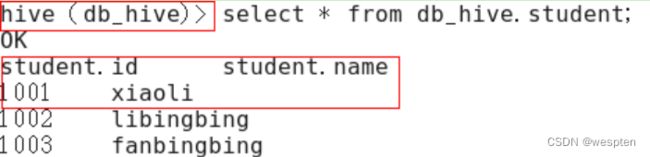

(1)配置前,如图所示:

配置后,如图所示:

2)Hive运行日志信息配置

1.Hive的log默认存放在/tmp/yyds/hive.log目录下(当前用户名下)

2.修改hive的log存放日志到/opt/module/hive/logs

(1)修改/opt/module/hive/conf/hive-log4j.properties.template文件名称为

hive-log4j.properties:

[yyds@hadoop102 conf]$ pwd

/opt/module/hive/conf

[yyds@hadoop102 conf]$ mv hive-log4j.properties.template hive-log4j.properties在hive-log4j.properties文件中修改log存放位置:

hive.log.dir=/opt/module/hive/logs3)关闭元数据检查

[yyds@hadoop102 conf]$ pwd

/opt/module/hive/conf

[yyds@hadoop102 conf]$ vim hive-site.xml增加如下配置:

hive.metastore.schema.verification

false

6、Spark集群安装

机器准备:

准备三台Linux服务器,安装好JDK1.8。

下载Spark安装包:

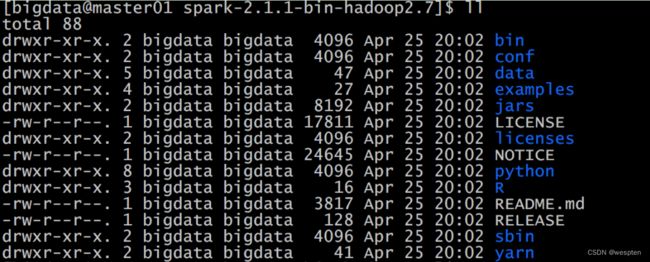

上传解压安装包:

上传spark-2.1.1-bin-hadoop2.7.tgz安装包到Linux上。

解压安装包到指定位置:

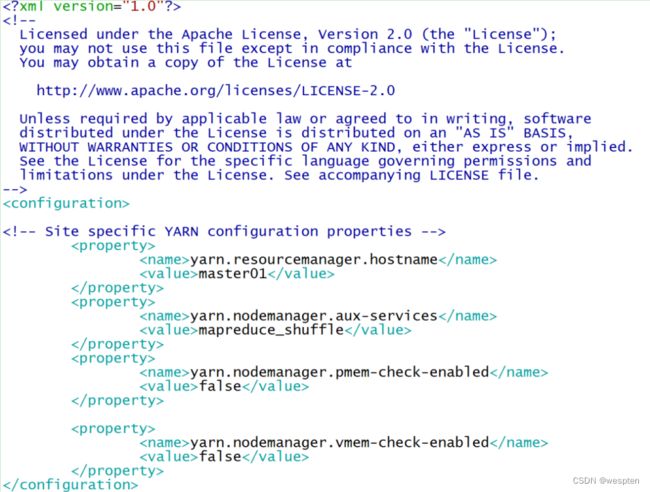

tar -xf spark-2.1.1-bin-hadoop2.7.tgz -C /home/bigdata/Hadoop配置Spark【Yarn】:

修改Hadoop配置下的yarn-site.xml。

yarn.resourcemanager.hostname

master01

yarn.nodemanager.aux-services

mapreduce_shuffle

yarn.nodemanager.pmem-check-enabled

false

yarn.nodemanager.vmem-check-enabled

false

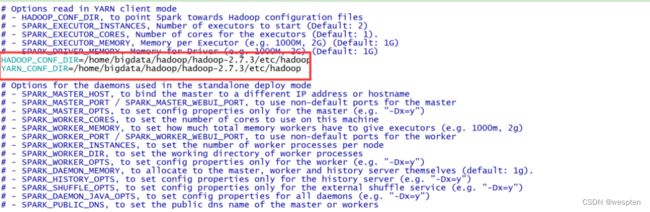

修改Spark-env.sh 添加:

让Spark能够发现Hadoop配置文件:

HADOOP_CONF_DIR=/home/bigdata/hadoop/hadoop-2.7.3/etc/hadoop

YARN_CONF_DIR=/home/bigdata/hadoop/hadoop-2.7.3/etc/hadoop让Spark能够发现Hadoop配置文件。

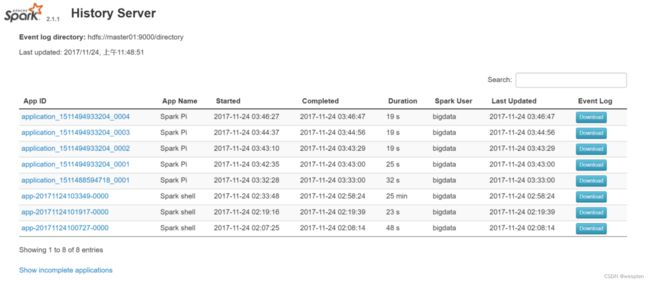

启动spark history server:

可以查看日志。

六、Maven项目创建

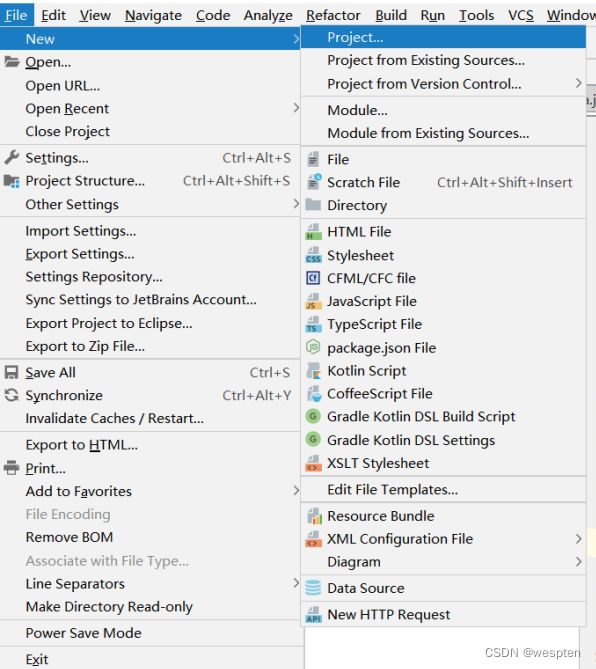

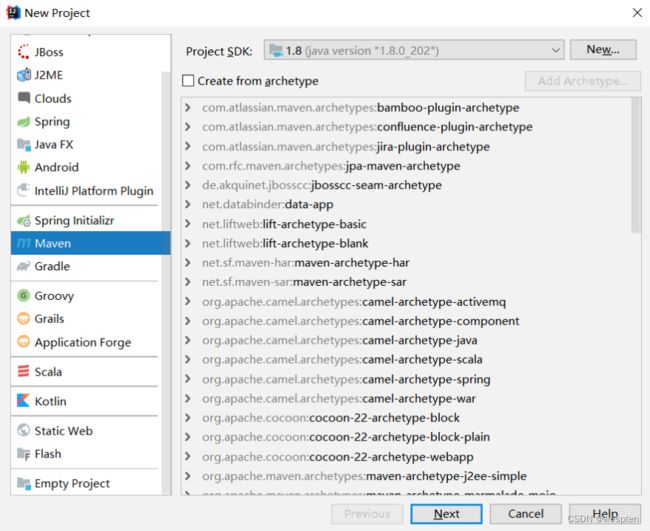

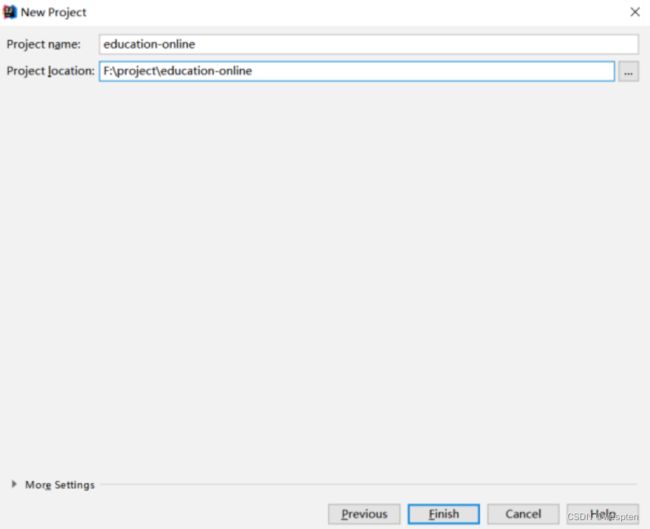

新建Maven项目:

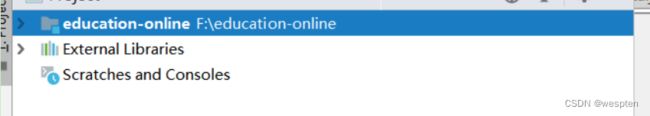

创建子项目:

配置主目录pom.xml:

4.0.0

com.yyds

education-online

pom

1.0-SNAPSHOT

com_yyds_warehouse

2.1.1

2.11.8

1.2.17

1.7.22

org.slf4j

jcl-over-slf4j

${slf4j.version}

org.slf4j

slf4j-api

${slf4j.version}

org.slf4j

slf4j-log4j12

${slf4j.version}

log4j

log4j

${log4j.version}

org.apache.spark

spark-core_2.11

${spark.version}

org.apache.spark

spark-sql_2.11

${spark.version}

org.apache.spark

spark-streaming_2.11

${spark.version}

org.scala-lang

scala-library

${scala.version}

org.apache.spark

spark-hive_2.11

${spark.version}

org.apache.maven.plugins

maven-compiler-plugin

3.6.1

1.8

1.8

net.alchim31.maven

scala-maven-plugin

3.2.2

compile

testCompile

org.apache.maven.plugins

maven-assembly-plugin

3.0.0

make-assembly

package

single

配置子项目pom.xml:

education-online

com.yyds

1.0-SNAPSHOT

4.0.0

com_yyds_warehouse

org.apache.spark

spark-core_2.11

${spark.version}

provided

org.apache.spark

spark-sql_2.11

${spark.version}

provided

org.apache.spark

spark-hive_2.11

${spark.version}

provided

org.scala-lang

scala-library

provided

com.alibaba

fastjson

1.2.47

org.scala-tools

maven-scala-plugin

2.15.1

compile-scala

add-source

compile

test-compile-scala

add-source

testCompile

org.apache.maven.plugins

maven-assembly-plugin

jar-with-dependencies

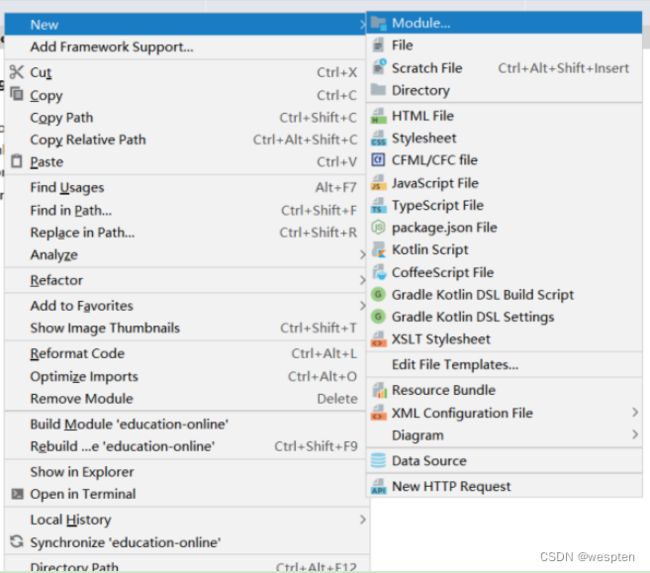

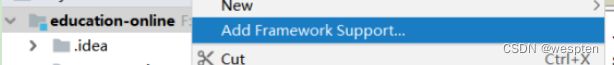

添加scala库支持:

Bean 目录下存放实体类;

Controller 目录下存放程序入口类;

Dao 目录下存放各表sql类;

Service 目录下存放各表业务类;

Util目录下存放工具类;

七、用户注册模块数仓设计与实现

1、用户注册模块数仓设计

1)原始数据格式及字段含义

baseadlog 广告基础表原始json数据:

{

"adid": "0", //基础广告表广告id

"adname": "注册弹窗广告0", //广告详情名称

"dn": "webA" //网站分区

}basewebsitelog 网站基础表原始json数据:

{

"createtime": "2000-01-01",

"creator": "admin",

"delete": "0",

"dn": "webC", //网站分区

"siteid": "2", //网站id

"sitename": "114", //网站名称

"siteurl": "www.114.com/webC" //网站地址

}memberRegtype 用户跳转地址注册表:

{

"appkey": "-",

"appregurl": "http:www.webA.com/product/register/index.html", //注册时跳转地址

"bdp_uuid": "-",

"createtime": "2015-05-11",

"dt":"20190722", //日期分区

"dn": "webA", //网站分区

"domain": "-",

"isranreg": "-",

"regsource": "4", //所属平台 1.PC 2.MOBILE 3.APP 4.WECHAT

"uid": "0", //用户id

"websiteid": "0" //对应basewebsitelog 下的siteid网站

}pcentermempaymoneylog 用户支付金额表:

{

"dn": "webA", //网站分区

"paymoney": "162.54", //支付金额

"siteid": "1", //网站id对应 对应basewebsitelog 下的siteid网站

"dt":"20190722", //日期分区

"uid": "4376695", //用户id

"vip_id": "0" //对应pcentermemviplevellog vip_id

}pcentermemviplevellog用户vip等级基础表:

{

"discountval": "-",

"dn": "webA", //网站分区

"end_time": "2019-01-01", //vip结束时间

"last_modify_time": "2019-01-01",

"max_free": "-",

"min_free": "-",

"next_level": "-",

"operator": "update",

"start_time": "2015-02-07", //vip开始时间

"vip_id": "2", //vip id

"vip_level": "银卡" //vip级别名称

}memberlog 用户基本信息表:

{

"ad_id": "0", //广告id

"birthday": "1981-08-14", //出生日期

"dt":"20190722", //日期分区

"dn": "webA", //网站分区

"email": "[email protected]",

"fullname": "王69239", //用户姓名

"iconurl": "-",

"lastlogin": "-",

"mailaddr": "-",

"memberlevel": "6", //用户级别

"password": "123456", //密码

"paymoney": "-",

"phone": "13711235451", //手机号

"qq": "10000",

"register": "2016-08-15", //注册时间

"regupdatetime": "-",

"uid": "69239", //用户id

"unitname": "-",

"userip": "123.235.75.48", //ip地址

"zipcode": "-"

}其余字段为非统计项 直接使用默认值“-”存储即可。

2)数据分层

在hadoop集群上创建 ods目录:

hadoop dfs -mkdir /user/yyds/ods在hive里分别建立三个库,dwd、dws、ads 分别用于存储etl清洗后的数据、宽表和拉链表数据、各报表层统计指标数据:

create database dwd;

create database dws;

create database ads;各层级:

- ods 存放原始数据

- dwd 结构与原始表结构保持一致,对ods层数据进行清洗

- dws 以dwd为基础进行轻度汇总

- ads 报表层,为各种统计报表提供数据

用户注册模块各层建表语句:

create external table `dwd`.`dwd_member`(

uid int,

ad_id int,

birthday string,

email string,

fullname string,

iconurl string,

lastlogin string,

mailaddr string,

memberlevel string,

password string,

paymoney string,

phone string,

qq string,

register string,

regupdatetime string,

unitname string,

userip string,

zipcode string)

partitioned by(

dt string,

dn string

)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

STORED AS PARQUET TBLPROPERTIES('parquet.compression'='SNAPPY');

create external table `dwd`.`dwd_member_regtype`(

uid int,

appkey string,

appregurl string,

bdp_uuid string,

createtime timestamp,

isranreg string,

regsource string,

regsourcename string,

websiteid int)

partitioned by(

dt string,

dn string)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

STORED AS PARQUET TBLPROPERTIES('parquet.compression'='SNAPPY');

create external table `dwd`.`dwd_base_ad`(

adid int,

adname string)

partitioned by (

dn string)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

STORED AS PARQUET TBLPROPERTIES('parquet.compression'='SNAPPY');

create external table `dwd`.`dwd_base_website`(

siteid int,

sitename string,

siteurl string,

`delete` int,

createtime timestamp,

creator string)

partitioned by (

dn string)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

STORED AS PARQUET TBLPROPERTIES('parquet.compression'='SNAPPY');

create external table `dwd`.`dwd_pcentermempaymoney`(

uid int,

paymoney string,

siteid int,

vip_id int

)

partitioned by(

dt string,

dn string

)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

STORED AS PARQUET TBLPROPERTIES('parquet.compression'='SNAPPY');

create external table `dwd`.`dwd_vip_level`(

vip_id int,

vip_level string,

start_time timestamp,

end_time timestamp,

last_modify_time timestamp,

max_free string,

min_free string,

next_level string,

operator string

)partitioned by(

dn string

)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

STORED AS PARQUET TBLPROPERTIES('parquet.compression'='SNAPPY');

create external table `dws`.`dws_member`(

uid int,

ad_id int,

fullname string,

iconurl string,

lastlogin string,

mailaddr string,

memberlevel string,

password string,

paymoney string,

phone string,

qq string,

register string,

regupdatetime string,

unitname string,

userip string,

zipcode string,

appkey string,

appregurl string,

bdp_uuid string,

reg_createtime timestamp,

isranreg string,

regsource string,

regsourcename string,

adname string,

siteid int,

sitename string,

siteurl string,

site_delete string,

site_createtime string,

site_creator string,

vip_id int,

vip_level string,

vip_start_time timestamp,

vip_end_time timestamp,

vip_last_modify_time timestamp,

vip_max_free string,

vip_min_free string,

vip_next_level string,

vip_operator string

)partitioned by(

dt string,

dn string

)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

STORED AS PARQUET TBLPROPERTIES('parquet.compression'='SNAPPY');

create external table `dws`.`dws_member_zipper`(

uid int,

paymoney string,

vip_level string,

start_time timestamp,

end_time timestamp

)partitioned by(

dn string

)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

STORED AS PARQUET TBLPROPERTIES('parquet.compression'='SNAPPY');

create external table `ads`.`ads_register_appregurlnum`(

appregurl string,

num int

)partitioned by(

dt string,

dn string

)ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t';

create external table `ads`.`ads_register_sitenamenum`(

sitename string,

num int

)partitioned by(

dt string,

dn string

)ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t';

create external table `ads`.`ads_register_regsourcenamenum`(

regsourcename string,

num int

)partitioned by(

dt string,

dn string

) ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t';

create external table `ads`.`ads_register_adnamenum`(

adname string,

num int

)partitioned by(

dt string,

dn string

)ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t';

create external table `ads`.`ads_register_memberlevelnum`(

memberlevel string,

num int

)partitioned by(

dt string,

dn string

)ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t';

create external table `ads`.`ads_register_viplevelnum`(

vip_level string,

num int

)partitioned by(

dt string,

dn string

)ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t';

create external table `ads`.`ads_register_top3memberpay`(

uid int,

memberlevel string,

register string,

appregurl string,

regsourcename string,

adname string,

sitename string,

vip_level string,

paymoney decimal(10,4),

rownum int

)partitioned by(

dt string,

dn string

)ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t';

表模型:

dwd层 6张基础表。

dws层 宽表和拉链表:

宽表:

拉链表:

报表层各统计表:

3)模拟数据采集上传数据

模拟数据采集 将日志文件数据直接上传到hadoop集群上:

hadoop dfs -put baseadlog.log /user/yyds/ods/

hadoop dfs -put memberRegtype.log /user/yyds/ods/

hadoop dfs -put baswewebsite.log /user/yyds/ods/

hadoop dfs -put pcentermempaymoney.log /user/yyds/ods/

hadoop dfs -put pcenterMemViplevel.log /user/yyds/ods/

hadoop dfs -put member.log /user/yyds/ods/

4)ETL数据清洗

需求1:必须使用Spark进行数据清洗,对用户名、手机号、密码进行脱敏处理,并使用Spark将数据导入到dwd层hive表中

清洗规则 用户名:王XX 手机号:137*****789 密码直接替换成*****

5)基于dwd层表合成dws层的宽表

需求2:对dwd层的6张表进行合并,生成一张宽表,先使用Spark Sql实现。有时间的同学需要使用DataFrame api实现功能,并对join进行优化。

6)拉链表

需求3:针对dws层宽表的支付金额(paymoney)和vip等级(vip_level)这两个会变动的字段生成一张拉链表,需要一天进行一次更新。

7)报表层各指标统计

需求4:使用Spark DataFrame Api统计通过各注册跳转地址(appregurl)进行注册的用户数,有时间的再写Spark Sql;

需求5:使用Spark DataFrame Api统计各所属网站(sitename)的用户数,有时间的再写Spark Sql;

需求6:使用Spark DataFrame Api统计各所属平台的(regsourcename)用户数,有时间的再写Spark Sql;

需求7:使用Spark DataFrame Api统计通过各广告跳转(adname)的用户数,有时间的再写Spark Sql;

需求8:使用Spark DataFrame Api统计各用户级别(memberlevel)的用户数,有时间的再写Spark Sql;

需求9:使用Spark DataFrame Api统计各分区网站、用户级别下(dn、memberlevel)的top3用户,有时间的再写Spark Sql;

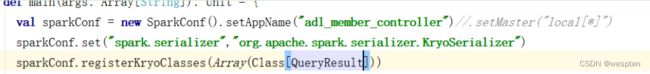

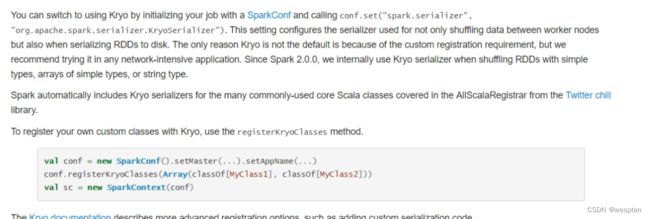

2、用户注册模块代码实现

1)准备样例类

package com.yyds.member.bean

case class MemberZipper(

uid: Int,

var paymoney: String,

vip_level: String,

start_time: String,

var end_time: String,

dn: String

)

case class MemberZipperResult(list: List[MemberZipper])

case class QueryResult(

uid: Int,

ad_id: Int,

memberlevel: String,

register: String,

appregurl: String, //注册来源url

regsource: String,

regsourcename: String,

adname: String,

siteid: String,

sitename: String,

vip_level: String,

paymoney: BigDecimal,

dt: String,

dn: String

)

case class DwsMember(

uid: Int,

ad_id: Int,

fullname: String,

iconurl: String,

lastlogin: String,

mailaddr: String,

memberlevel: String,

password: String,

paymoney: BigDecimal,

phone: String,

qq: String,

register: String,

regupdatetime: String,

unitname: String,

userip: String,

zipcode: String,

appkey: String,

appregurl: String,

bdp_uuid: String,

reg_createtime: String,

isranreg: String,

regsource: String,

regsourcename: String,

adname: String,

siteid: String,

sitename: String,

siteurl: String,

site_delete: String,

site_createtime: String,

site_creator: String,

vip_id: String,

vip_level: String,

vip_start_time: String,

vip_end_time: String,

vip_last_modify_time: String,

vip_max_free: String,

vip_min_free: String,

vip_next_level: String,

vip_operator: String,

dt: String,

dn: String

)

case class DwsMember_Result(

uid: Int,

ad_id: Int,

fullname: String,

icounurl: String,

lastlogin: String,

mailaddr: String,

memberlevel: String,

password: String,

paymoney: String,

phone: String,

qq: String,

register: String,

regupdatetime: String,

unitname: String,

userip: String,

zipcode: String,

appkey: String,

appregurl: String,

bdp_uuid: String,

reg_createtime: String,

isranreg: String,

regsource: String,

regsourcename: String,

adname: String,

siteid: String,

sitename: String,

siteurl: String,

site_delete: String,

site_createtime: String,

site_creator: String,

vip_id: String,

vip_level: String,

vip_start_time: String,

vip_end_time: String,

vip_last_modify_time: String,

vip_max_free: String,

vip_min_free: String,

vip_next_level: String,

vip_operator: String,

dt: String,

dn: String

)2)创建工具类

解析json使用fastjson,在util下创建ParseJosnData工具类:

package com.yyds.util;

import com.alibaba.fastjson.JSONObject;

public class ParseJsonData {

public static JSONObject getJsonData(String data) {

try {

return JSONObject.parseObject(data);

} catch (Exception e) {

return null;

}

}

}在util包下创建Hive工具HiveUtil类:

package com.yyds.util

import org.apache.spark.sql.SparkSession

object HiveUtil {

/**

* 调大最大分区个数

* @param spark

* @return

*/

def setMaxpartitions(spark: SparkSession)={

spark.sql("set hive.exec.dynamic.partition=true")

spark.sql("set hive.exec.dynamic.partition.mode=nonstrict")

spark.sql("set hive.exec.max.dynamic.partitions=100000")

spark.sql("set hive.exec.max.dynamic.partitions.pernode=100000")

spark.sql("set hive.exec.max.created.files=100000")

}

/**

* 开启压缩

*

* @param spark

* @return

*/

def openCompression(spark: SparkSession) = {

spark.sql("set mapred.output.compress=true")

spark.sql("set hive.exec.compress.output=true")

}

/**

* 开启动态分区,非严格模式

*

* @param spark

*/

def openDynamicPartition(spark: SparkSession) = {

spark.sql("set hive.exec.dynamic.partition=true")

spark.sql("set hive.exec.dynamic.partition.mode=nonstrict")

}

/**

* 使用lzo压缩

*

* @param spark

*/

def useLzoCompression(spark: SparkSession) = {

spark.sql("set io.compression.codec.lzo.class=com.hadoop.compression.lzo.LzoCodec")

spark.sql("set mapred.output.compression.codec=com.hadoop.compression.lzo.LzopCodec")

}

/**

* 使用snappy压缩

* @param spark

*/

def useSnappyCompression(spark:SparkSession)={

spark.sql("set mapreduce.map.output.compress.codec=org.apache.hadoop.io.compress.SnappyCodec");

spark.sql("set mapreduce.output.fileoutputformat.compress=true")

spark.sql("set mapreduce.output.fileoutputformat.compress.codec=org.apache.hadoop.io.compress.SnappyCodec")

}

}3)对日志进行数据清洗导入

收集日志原始数据后 我们需要对日志原始数据进行清洗 将清洗后的数据存入dwd层表

创建EtlDatService清洗类,使用该类读取hdfs上的原始日志数据,对原始日志进行清洗处理,对敏感字段姓名、电话做脱敏处理。filter对不能正常转换json数据的日志数据进行过滤,mappartiton针对每个分区去做数据循环map操作组装成对应表需要的字段,重组完之后coalesce缩小分区(减少文件个数)刷新到目标表中。

package com.yyds.member.service

import com.alibaba.fastjson.JSONObject

import com.yyds.util.ParseJsonData

import org.apache.spark.SparkContext

import org.apache.spark.sql.{SaveMode, SparkSession}

object EtlDataService {

/**

* etl用户注册信息

*

* @param ssc

* @param sparkSession

*/

def etlMemberRegtypeLog(ssc: SparkContext, sparkSession: SparkSession) = {

import sparkSession.implicits._ //隐式转换

ssc.textFile("/user/yyds/ods/memberRegtype.log")

.filter(item => {

val obj = ParseJsonData.getJsonData(item)

obj.isInstanceOf[JSONObject]

}).mapPartitions(partitoin => {

partitoin.map(item => {

val jsonObject = ParseJsonData.getJsonData(item)

val appkey = jsonObject.getString("appkey")

val appregurl = jsonObject.getString("appregurl")

val bdp_uuid = jsonObject.getString("bdp_uuid")

val createtime = jsonObject.getString("createtime")

val isranreg = jsonObject.getString("isranreg")

val regsource = jsonObject.getString("regsource")

val regsourceName = regsource match {

case "1" => "PC"

case "2" => "Mobile"

case "3" => "App"

case "4" => "WeChat"

case _ => "other"

}

val uid = jsonObject.getIntValue("uid")

val websiteid = jsonObject.getIntValue("websiteid")

val dt = jsonObject.getString("dt")

val dn = jsonObject.getString("dn")

(uid, appkey, appregurl, bdp_uuid, createtime, isranreg, regsource, regsourceName, websiteid, dt, dn)

})

}).toDF().coalesce(1).write.mode(SaveMode.Append).insertInto("dwd.dwd_member_regtype")

}

/**

* etl用户表数据

*

* @param ssc

* @param sparkSession

*/

def etlMemberLog(ssc: SparkContext, sparkSession: SparkSession) = {

import sparkSession.implicits._ //隐式转换

ssc.textFile("/user/yyds/ods/member.log").filter(item => {

val obj = ParseJsonData.getJsonData(item)

obj.isInstanceOf[JSONObject]

}).mapPartitions(partition => {

partition.map(item => {

val jsonObject = ParseJsonData.getJsonData(item)

val ad_id = jsonObject.getIntValue("ad_id")

val birthday = jsonObject.getString("birthday")

val email = jsonObject.getString("email")

val fullname = jsonObject.getString("fullname").substring(0, 1) + "xx"

val iconurl = jsonObject.getString("iconurl")

val lastlogin = jsonObject.getString("lastlogin")

val mailaddr = jsonObject.getString("mailaddr")

val memberlevel = jsonObject.getString("memberlevel")

val password = "******"

val paymoney = jsonObject.getString("paymoney")

val phone = jsonObject.getString("phone")

val newphone = phone.substring(0, 3) + "*****" + phone.substring(7, 11)

val qq = jsonObject.getString("qq")

val register = jsonObject.getString("register")

val regupdatetime = jsonObject.getString("regupdatetime")

val uid = jsonObject.getIntValue("uid")

val unitname = jsonObject.getString("unitname")

val userip = jsonObject.getString("userip")

val zipcode = jsonObject.getString("zipcode")

val dt = jsonObject.getString("dt")

val dn = jsonObject.getString("dn")

(uid, ad_id, birthday, email, fullname, iconurl, lastlogin, mailaddr, memberlevel, password, paymoney, newphone, qq,

register, regupdatetime, unitname, userip, zipcode, dt, dn)

})

}).toDF().coalesce(2).write.mode(SaveMode.Append).insertInto("dwd.dwd_member")

}

/**

* 导入广告表基础数据

*

* @param ssc

* @param sparkSession

*/

def etlBaseAdLog(ssc: SparkContext, sparkSession: SparkSession) = {

import sparkSession.implicits._ //隐式转换

val result = ssc.textFile("/user/yyds/ods/baseadlog.log").filter(item => {

val obj = ParseJsonData.getJsonData(item)

obj.isInstanceOf[JSONObject]

}).mapPartitions(partition => {

partition.map(item => {

val jsonObject = ParseJsonData.getJsonData(item)

val adid = jsonObject.getIntValue("adid")

val adname = jsonObject.getString("adname")

val dn = jsonObject.getString("dn")

(adid, adname, dn)

})

}).toDF().coalesce(1).write.mode(SaveMode.Overwrite).insertInto("dwd.dwd_base_ad")

}

/**

* 导入网站表基础数据

*

* @param ssc

* @param sparkSession

*/

def etlBaseWebSiteLog(ssc: SparkContext, sparkSession: SparkSession) = {

import sparkSession.implicits._ //隐式转换

ssc.textFile("/user/yyds/ods/baswewebsite.log").filter(item => {

val obj = ParseJsonData.getJsonDat a(item)

obj.isInstanceOf[JSONObject]

}).mapPartitions(partition => {

partition.map(item => {

val jsonObject = ParseJsonData.getJsonData(item)

val siteid = jsonObject.getIntValue("siteid")

val sitename = jsonObject.getString("sitename")

val siteurl = jsonObject.getString("siteurl")

val delete = jsonObject.getIntValue("delete")

val createtime = jsonObject.getString("createtime")

val creator = jsonObject.getString("creator")

val dn = jsonObject.getString("dn")

(siteid, sitename, siteurl, delete, createtime, creator, dn)

})

}).toDF().coalesce(1).write.mode(SaveMode.Overwrite).insertInto("dwd.dwd_base_website")

}

/**

* 导入用户付款信息

*

* @param ssc

* @param sparkSession

*/

def etlMemPayMoneyLog(ssc: SparkContext, sparkSession: SparkSession) = {

import sparkSession.implicits._ //隐式转换

ssc.textFile("/user/yyds/ods/pcentermempaymoney.log").filter(item => {

val obj = ParseJsonData.getJsonData(item)

obj.isInstanceOf[JSONObject]

}).mapPartitions(partition => {

partition.map(item => {

val jSONObject = ParseJsonData.getJsonData(item)

val paymoney = jSONObject.getString("paymoney")

val uid = jSONObject.getIntValue("uid")

val vip_id = jSONObject.getIntValue("vip_id")

val site_id = jSONObject.getIntValue("siteid")

val dt = jSONObject.getString("dt")

val dn = jSONObject.getString("dn")

(uid, paymoney, site_id, vip_id, dt, dn)

})

}).toDF().coalesce(1).write.mode(SaveMode.Append).insertInto("dwd.dwd_pcentermempaymoney")

}

/**

* 导入用户vip基础数据

*

* @param ssc

* @param sparkSession

*/

def etlMemVipLevelLog(ssc: SparkContext, sparkSession: SparkSession) = {

import sparkSession.implicits._ //隐式转换

ssc.textFile("/user/yyds/ods/pcenterMemViplevel.log").filter(item => {

val obj = ParseJsonData.getJsonData(item)

obj.isInstanceOf[JSONObject]

}).mapPartitions(partition => {

partition.map(item => {

val jSONObject = ParseJsonData.getJsonData(item)

val discountval = jSONObject.getString("discountval")

val end_time = jSONObject.getString("end_time")

val last_modify_time = jSONObject.getString("last_modify_time")

val max_free = jSONObject.getString("max_free")

val min_free = jSONObject.getString("min_free")

val next_level = jSONObject.getString("next_level")

val operator = jSONObject.getString("operator")

val start_time = jSONObject.getString("start_time")

val vip_id = jSONObject.getIntValue("vip_id")

val vip_level = jSONObject.getString("vip_level")

val dn = jSONObject.getString("dn")

(vip_id, vip_level, start_time, end_time, last_modify_time, max_free, min_free, next_level, operator, dn)

})

}).toDF().coalesce(1).write.mode(SaveMode.Overwrite).insertInto("dwd.dwd_vip_level")

}

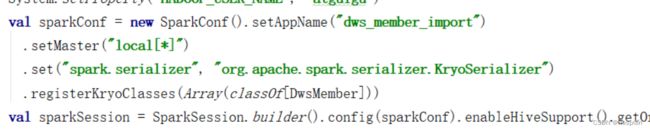

}4)创建DwdMemberController

package com.yyds.member.controller

import com.yyds.member.service.EtlDataService

import com.yyds.util.HiveUtil

import org.apache.spark.SparkConf

import org.apache.spark.sql.SparkSession

object DwdMemberController {

def main(args: Array[String]): Unit = {

System.setProperty("HADOOP_USER_NAME", "yyds")

val sparkConf = new SparkConf().setAppName("dwd_member_import").setMaster("local[*]")

val sparkSession = SparkSession.builder().config(sparkConf).enableHiveSupport().getOrCreate()

val ssc = sparkSession.sparkContext

HiveUtil.openDynamicPartition(sparkSession) //开启动态分区

HiveUtil.openCompression(sparkSession) //开启压缩

HiveUtil.useSnappyCompression(sparkSession) //使用snappy压缩

//对用户原始数据进行数据清洗 存入bdl层表中

EtlDataService.etlBaseAdLog(ssc, sparkSession) //导入基础广告表数据

EtlDataService.etlBaseWebSiteLog(ssc, sparkSession) //导入基础网站表数据

EtlDataService.etlMemberLog(ssc, sparkSession) //清洗用户数据

EtlDataService.etlMemberRegtypeLog(ssc, sparkSession) //清洗用户注册数据

EtlDataService.etlMemPayMoneyLog(ssc, sparkSession) //导入用户支付情况记录

EtlDataService.etlMemVipLevelLog(ssc, sparkSession) //导入vip基础数据

}

}5)创建DwdMemberDao

package com.yyds.member.dao

import org.apache.spark.sql.SparkSession

object DwdMemberDao {

def getDwdMember(sparkSession: SparkSession) = {

sparkSession.sql("select uid,ad_id,email,fullname,iconurl,lastlogin,mailaddr,memberlevel," +

"password,phone,qq,register,regupdatetime,unitname,userip,zipcode,dt,dn from dwd.dwd_member")

}

def getDwdMemberRegType(sparkSession: SparkSession) = {

sparkSession.sql("select uid,appkey,appregurl,bdp_uuid,createtime as reg_createtime,domain,isranreg," +

"regsource,regsourcename,websiteid as siteid,dn from dwd.dwd_member_regtype ")

}

def getDwdBaseAd(sparkSession: SparkSession) = {

sparkSession.sql("select adid as ad_id,adname,dn from dwd.dwd_base_ad")

}

def getDwdBaseWebSite(sparkSession: SparkSession) = {

sparkSession.sql("select siteid,sitename,siteurl,delete as site_delete," +

"createtime as site_createtime,creator as site_creator,dn from dwd.dwd_base_website")

}

def getDwdVipLevel(sparkSession: SparkSession) = {

sparkSession.sql("select vip_id,vip_level,start_time as vip_start_time,end_time as vip_end_time," +

"last_modify_time as vip_last_modify_time,max_free as vip_max_free,min_free as vip_min_free," +

"next_level as vip_next_level,operator as vip_operator,dn from dwd.dwd_vip_level")

}

def getDwdPcentermemPayMoney(sparkSession: SparkSession) = {

sparkSession.sql("select uid,cast(paymoney as decimal(10,4)) as paymoney,vip_id,dn from dwd.dwd_pcentermempaymoney")

}

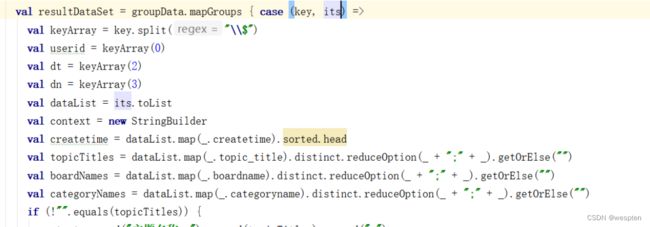

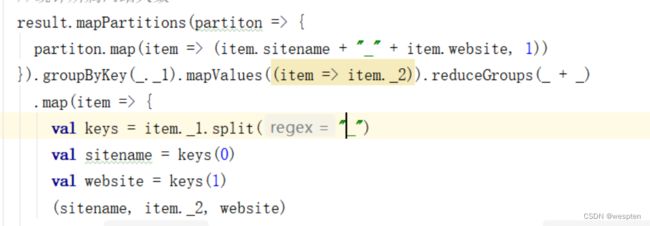

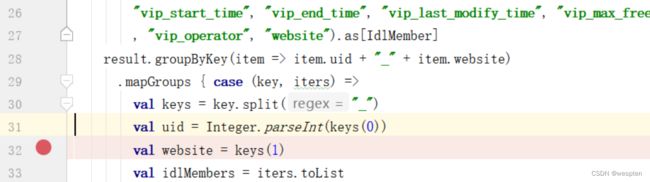

}6)基于dwd层表合成dws层的宽表和拉链表

宽表两种方式实现:

一种查询各单表基于单表dataframe使用 join算子得到结果,再使用groupbykey算子去重和取最大最小值等操作得到最终结果。

一种使用spark sql直接实现。

package com.yyds.member.service

import com.yyds.member.bean.{DwsMember, DwsMember_Result, MemberZipper, MemberZipperResult}

import com.yyds.member.dao.DwdMemberDao

import org.apache.spark.sql.{SaveMode, SparkSession}

object DwsMemberService {

def importMemberUseApi(sparkSession: SparkSession, dt: String) = {

import sparkSession.implicits._ //隐式转换

val dwdMember = DwdMemberDao.getDwdMember(sparkSession).where(s"dt='${dt}'") //主表用户表

val dwdMemberRegtype = DwdMemberDao.getDwdMemberRegType(sparkSession)

val dwdBaseAd = DwdMemberDao.getDwdBaseAd(sparkSession)

val dwdBaseWebsite = DwdMemberDao.getDwdBaseWebSite(sparkSession)

val dwdPcentermemPaymoney = DwdMemberDao.getDwdPcentermemPayMoney(sparkSession)

val dwdVipLevel = DwdMemberDao.getDwdVipLevel(sparkSession)

import org.apache.spark.sql.functions.broadcast

val result = dwdMember.join(dwdMemberRegtype, Seq("uid", "dn"), "left_outer")

.join(broadcast(dwdBaseAd), Seq("ad_id", "dn"), "left_outer")

.join(broadcast(dwdBaseWebsite), Seq("siteid", "dn"), "left_outer")

.join(broadcast(dwdPcentermemPaymoney), Seq("uid", "dn"), "left_outer")

.join(broadcast(dwdVipLevel), Seq("vip_id", "dn"), "left_outer")

.select("uid", "ad_id", "fullname", "iconurl", "lastlogin", "mailaddr", "memberlevel", "password"

, "paymoney", "phone", "qq", "register", "regupdatetime", "unitname", "userip", "zipcode", "appkey"

, "appregurl", "bdp_uuid", "reg_createtime", "domain", "isranreg", "regsource", "regsourcename", "adname"

, "siteid", "sitename", "siteurl", "site_delete", "site_createtime", "site_creator", "vip_id", "vip_level",

"vip_start_time", "vip_end_time", "vip_last_modify_time", "vip_max_free", "vip_min_free", "vip_next_level"

, "vip_operator", "dt", "dn").as[DwsMember]

result.groupByKey(item => item.uid + "_" + item.dn)

.mapGroups { case (key, iters) =>

val keys = key.split("_")