Graph Neural Networks (Part 3): Node Classification

The node classification problem

Dataset: Cora

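Cora contains academic papers from seven classes, linked by citation relationships (papers cite and are cited by one another). Each paper is a node, each citation is an edge, and the task is to predict the class of every paper.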
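In PyG, the graph structure is stored as an `edge_index` of shape `[2, num_edges]` in COO format: row 0 holds source nodes, row 1 holds target nodes. The plain-Python sketch below turns a hypothetical list of four-paper citations (toy data, not Cora) into such a two-row edge list. Because the citation graph is treated as undirected, each citation is stored once in each direction; the helper name `to_undirected_edge_index` is illustrative only.

```python
# Hypothetical toy citations: (citing paper, cited paper). NOT real Cora data.
citations = [(0, 1), (0, 2), (3, 2)]

def to_undirected_edge_index(pairs):
    """Build a 2-row COO edge list that stores every edge in both directions."""
    edges = set()
    for src, dst in pairs:
        edges.add((src, dst))
        edges.add((dst, src))
    edges = sorted(edges)
    # Row 0: source nodes, row 1: target nodes (same layout as PyG's edge_index).
    return [[s for s, _ in edges], [d for _, d in edges]]

edge_index = to_undirected_edge_index(citations)
print(edge_index)  # [[0, 0, 1, 2, 2, 3], [1, 2, 0, 0, 3, 2]]
```

The same convention explains Cora's `edge_index=[2, 10556]`: its 5,278 citation links are each counted twice.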

Loading the dataset

from torch_geometric.datasets import Planetoid
from torch_geometric.transforms import NormalizeFeatures

# Download Cora into ./dataset; NormalizeFeatures row-normalizes the node features
dataset = Planetoid(root='dataset', name='Cora', transform=NormalizeFeatures())
data = dataset[0]  # Cora consists of a single graph
print(data)

Output:

Data(edge_index=[2, 10556], test_mask=[2708], train_mask=[2708], val_mask=[2708], x=[2708, 1433], y=[2708])
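`NormalizeFeatures()` rescales each node's feature vector so that its entries sum to one. Cora's 1433 features are binary word-occurrence indicators, so after normalization each nonzero entry becomes 1 divided by the number of words the paper contains. The sketch below shows that idea in plain Python on a hypothetical 3×4 feature matrix; it is a simplified illustration, not PyG's exact code, and the all-zero row is left unchanged to avoid dividing by zero.

```python
def row_normalize(features):
    """Scale each row so its entries sum to 1 (simplified idea behind NormalizeFeatures)."""
    normalized = []
    for row in features:
        total = sum(row)
        # All-zero rows are kept as they are instead of dividing by zero.
        normalized.append([v / total for v in row] if total > 0 else list(row))
    return normalized

# Hypothetical binary bag-of-words rows (1 = the word occurs in the paper).
x = [[1, 0, 1, 0],
     [1, 1, 1, 1],
     [0, 0, 0, 0]]
print(row_normalize(x))  # [[0.5, 0.0, 0.5, 0.0], [0.25, 0.25, 0.25, 0.25], [0, 0, 0, 0]]
```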

Printing dataset information

print()
print(f'Dataset: {dataset}:')
print('======================')
print(f'Number of graphs: {len(dataset)}')
print(f'Number of features: {dataset.num_features}')
print(f'Number of classes: {dataset.num_classes}')

data = dataset[0]  # Get the first graph object.

print()
print(data)
print('======================')

# Gather some statistics about the graph.
print(f'Number of nodes: {data.num_nodes}')
print(f'Number of edges: {data.num_edges}')
print(f'Average node degree: {data.num_edges / data.num_nodes:.2f}')
print(f'Number of training nodes: {data.train_mask.sum()}')
print(f'Training node label rate: {int(data.train_mask.sum()) / data.num_nodes:.2f}')
# PyG 2.x method names; older releases used contains_isolated_nodes() / contains_self_loops()
print(f'Contains isolated nodes: {data.has_isolated_nodes()}')
print(f'Contains self-loops: {data.has_self_loops()}')
print(f'Is undirected: {data.is_undirected()}')

Output:

Dataset: Cora():
Number of graphs: 1
Number of features: 1433
Number of classes: 7

Data(edge_index=[2, 10556], test_mask=[2708], train_mask=[2708], val_mask=[2708], x=[2708, 1433], y=[2708])
Number of nodes: 2708
Number of edges: 10556
Average node degree: 3.90
Number of training nodes: 140
Training node label rate: 0.05
Contains isolated nodes: False
Contains self-loops: False
Is undirected: True
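The statistics above can be reproduced on any edge list. The plain-Python sketch below uses a hypothetical helper `graph_stats` and a toy five-node graph (not Cora) to show what the average-degree formula and the isolated-node, self-loop and undirectedness checks compute. It is a simplified illustration rather than PyG's implementation.

```python
def graph_stats(num_nodes, edge_index):
    """Simple statistics for a graph stored as a 2-row COO edge list."""
    src, dst = edge_index
    edges = set(zip(src, dst))
    touched = set(src) | set(dst)  # nodes that appear in at least one edge
    return {
        # Same formula as the tutorial: directed edge count / node count.
        "avg_degree": len(src) / num_nodes,
        "has_isolated_nodes": len(touched) < num_nodes,
        "has_self_loops": any(s == d for s, d in edges),
        # Undirected means every (s, d) also appears as (d, s).
        "is_undirected": all((d, s) in edges for s, d in edges),
    }

# Toy graph with 5 nodes; node 4 has no edges, so it is isolated.
edge_index = [[0, 0, 1, 2, 2, 3], [1, 2, 0, 0, 3, 2]]
print(graph_stats(5, edge_index))
```

For Cora the same average-degree formula gives 10556 / 2708 ≈ 3.90, and the training label rate is 140 / 2708 ≈ 0.05.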

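Visualizing node representations

The helper below projects each node's output vector to two dimensions with t-SNE and colors each point by its class label.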

import matplotlib.pyplot as plt
from sklearn.manifold import TSNE

def visualize(h, color):
    # Project the node representations h to 2D with t-SNE
    z = TSNE(n_components=2).fit_transform(h.detach().cpu().numpy())
    plt.figure(figsize=(10, 10))
    plt.xticks([])
    plt.yticks([])
    # One point per node, colored by its class label
    plt.scatter(z[:, 0], z[:, 1], s=70, c=color, cmap="Set2")
    plt.show()

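MLP classification

As a baseline, the MLP classifies each paper from its own features alone and ignores the citation edges.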

import torch
import torch.nn.functional as F
from torch.nn import Linear

class MLP(torch.nn.Module):
    def __init__(self, hidden_channels):
        super().__init__()
        torch.manual_seed(12345)  # fix the random seed (CPU and CUDA) for reproducible initialization
        self.lin1 = Linear(dataset.num_features, hidden_channels)
        self.lin2 = Linear(hidden_channels, dataset.num_classes)

    def forward(self, x):
        x = self.lin1(x)
        x = x.relu()
        x = F.dropout(x, p=0.5, training=self.training)  # dropout is active only in training mode
        x = self.lin2(x)
        return x

model = MLP(hidden_channels=16)
print(model)
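`F.dropout(x, p=0.5, training=self.training)` only has an effect during training: it zeroes each element with probability `p` and scales the remaining elements by `1/(1-p)` so the expected value is unchanged, while after `model.eval()` it passes the input through. The plain-Python sketch below mimics that inverted-dropout behaviour on a list of floats; the `dropout` function is hypothetical and is not PyTorch's implementation.

```python
import random

def dropout(values, p=0.5, training=True, rng=random):
    """Inverted dropout on a list of floats (illustrative, not torch's implementation)."""
    if not training or p == 0.0:
        return list(values)  # evaluation mode: values pass through unchanged
    if p >= 1.0:
        return [0.0] * len(values)  # every value is dropped
    scale = 1.0 / (1.0 - p)
    # Each value is zeroed with probability p; survivors are scaled by 1/(1-p).
    return [0.0 if rng.random() < p else v * scale for v in values]

h = [1.0, 2.0, 3.0, 4.0]
print(dropout(h, training=False))  # [1.0, 2.0, 3.0, 4.0]
print(dropout(h, p=0.5, rng=random.Random(0)))  # each entry is either 0.0 or doubled
```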

Training and testing

criterion = torch.nn.CrossEntropyLoss()  # cross-entropy loss
optimizer = torch.optim.Adam(model.parameters(), lr=0.01, weight_decay=5e-4)  # optimizer

def train():
    model.train()
    optimizer.zero_grad()  # clear the gradients
    out = model(data.x)  # the node features x are the only input
    loss = criterion(out[data.train_mask], data.y[data.train_mask])  # loss on training nodes only
    loss.backward()  # Derive gradients.
    optimizer.step()  # Update parameters based on gradients.
    return loss

for epoch in range(1, 201):
    loss = train()
    print(f'Epoch: {epoch:03d}, Loss: {loss:.4f}')
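Indexing with `data.train_mask` means the loss only sees the 140 labelled training nodes, even though the model produces logits for all 2708 nodes. `CrossEntropyLoss` applies log-softmax to the logits and averages the negative log-probability of the true class. The plain-Python sketch below reproduces that masked loss on hypothetical logits for four nodes, two of which are in the mask; `masked_cross_entropy` is an illustrative name, not a PyTorch function.

```python
import math

def masked_cross_entropy(logits, labels, mask):
    """Mean of -log softmax(logits)[label] over the nodes where mask is True."""
    losses = []
    for row, y, keep in zip(logits, labels, mask):
        if not keep:
            continue  # nodes outside the mask do not contribute to the loss
        m = max(row)  # subtract the max before exp for numerical stability
        log_sum_exp = m + math.log(sum(math.exp(v - m) for v in row))
        losses.append(log_sum_exp - row[y])
    return sum(losses) / len(losses)

# Hypothetical logits for 4 nodes and 2 classes; only nodes 0 and 3 are training nodes.
logits = [[2.0, 0.0], [0.0, 0.0], [0.0, 3.0], [1.0, 1.0]]
labels = [0, 1, 1, 0]
train_mask = [True, False, False, True]
print(masked_cross_entropy(logits, labels, train_mask))
```

Changing the logits of node 1 or node 2 leaves the loss unchanged, which is exactly why only the training labels influence the gradients.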

def test():
    model.eval()
    out = model(data.x)
    pred = out.argmax(dim=1)  # predicted class = index of the highest logit
    test_correct = pred[data.test_mask] == data.y[data.test_mask]
    test_acc = int(test_correct.sum()) / int(data.test_mask.sum())
    return test_acc

test_acc = test()
print(f'Test Accuracy: {test_acc:.4f}')

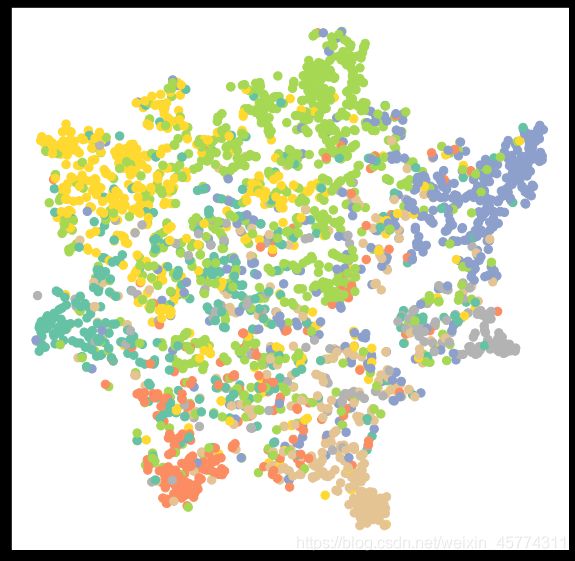

Accuracy: 0.59
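The `test()` function takes the arg-max over the class logits as each node's prediction and reports the fraction of correct predictions among the nodes in `test_mask` (1000 nodes in the standard Planetoid split of Cora). A plain-Python sketch of that computation, using hypothetical logits and a hypothetical helper `masked_accuracy`:

```python
def masked_accuracy(logits, labels, mask):
    """Fraction of masked nodes whose arg-max class equals the true label."""
    correct = total = 0
    for row, y, keep in zip(logits, labels, mask):
        if keep:
            pred = max(range(len(row)), key=row.__getitem__)  # like out.argmax(dim=1)
            correct += int(pred == y)
            total += 1
    return correct / total

logits = [[0.1, 0.9], [0.8, 0.2], [0.3, 0.7], [0.6, 0.4]]
labels = [1, 1, 1, 0]
test_mask = [True, True, True, False]
print(masked_accuracy(logits, labels, test_mask))  # 0.6666666666666666
```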

Visualization

model.eval()
out = model(data.x)
visualize(out, color=data.y)

GCN

from torch_geometric.nn import GCNConv

class GCN(torch.nn.Module):
    def __init__(self, hidden_channels):
        super().__init__()
        # Two graph convolutions: input features -> hidden -> class scores
        self.conv1 = GCNConv(dataset.num_features, hidden_channels)
        self.conv2 = GCNConv(hidden_channels, dataset.num_classes)

    def forward(self, x, edge_index):
        # Unlike the MLP, each layer also aggregates neighbour features via edge_index
        x = self.conv1(x, edge_index)
        x = x.relu()
        x = F.dropout(x, p=0.5, training=self.training)
        x = self.conv2(x, edge_index)
        return x

model = GCN(hidden_channels=16)
print(model)
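With its default settings, each `GCNConv` layer applies the symmetric-normalized propagation rule `X' = D^-1/2 (A + I) D^-1/2 X W` (plus a bias), where `A + I` adds a self-loop to every node and `D` is the degree matrix of `A + I`. The plain-Python sketch below performs only the normalized neighbourhood aggregation on a toy three-node path graph; it deliberately omits the learnable weight `W` and the bias, so it illustrates the aggregation step rather than reproducing `GCNConv`. The helper name `gcn_aggregate` is hypothetical.

```python
import math

def gcn_aggregate(num_nodes, edge_index, x):
    """One GCN-style aggregation D^-1/2 (A + I) D^-1/2 X, without weights or bias."""
    # Incoming neighbour sets, including a self-loop for every node (A + I).
    neighbours = [{i} for i in range(num_nodes)]
    for s, d in zip(*edge_index):
        neighbours[d].add(s)  # information flows from source s to target d
    deg = [len(n) for n in neighbours]  # degree in A + I
    out = []
    for i in range(num_nodes):
        row = [0.0] * len(x[0])
        for j in neighbours[i]:
            w = 1.0 / math.sqrt(deg[i] * deg[j])  # symmetric normalization
            row = [r + w * v for r, v in zip(row, x[j])]
        out.append(row)
    return out

# Toy undirected path graph 0 - 1 - 2 with one scalar feature per node.
edge_index = [[0, 1, 1, 2], [1, 0, 2, 1]]
x = [[1.0], [2.0], [3.0]]
print(gcn_aggregate(3, edge_index, x))
```

Each output mixes a node's own features with its neighbours' features, weighted down for high-degree nodes; this use of the citation structure is what separates the GCN from the MLP above.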

Training and testing

criterion = torch.nn.CrossEntropyLoss()  # cross-entropy loss
# A new optimizer is needed because the model (and therefore its parameters) changed
optimizer = torch.optim.Adam(model.parameters(), lr=0.01, weight_decay=5e-4)

def train():
    model.train()
    x, edge_index = data.x, data.edge_index
    optimizer.zero_grad()
    out = model(x, edge_index)  # the GCN also takes the graph structure as input
    loss = criterion(out[data.train_mask], data.y[data.train_mask])
    loss.backward()
    optimizer.step()
    return loss

for epoch in range(1, 201):
    loss = train()
    print(f'Epoch: {epoch:03d}, Loss: {loss:.4f}')

def test():
    model.eval()
    out = model(data.x, data.edge_index)
    pred = out.argmax(dim=1)  # Use the class with highest probability.
    test_correct = pred[data.test_mask] == data.y[data.test_mask]  # Check against ground-truth labels.
    test_acc = int(test_correct.sum()) / int(data.test_mask.sum())  # Derive ratio of correct predictions.
    return test_acc

test_acc = test()
print(f'Test Accuracy: {test_acc:.4f}')

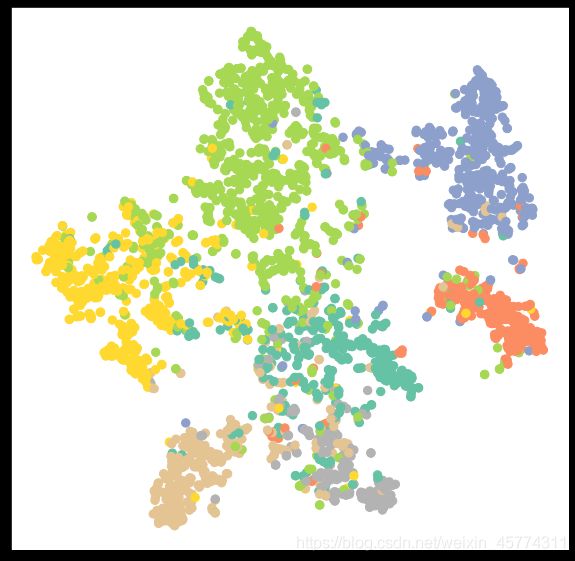

Accuracy: 0.826

Visualization

model.eval()
out = model(data.x, data.edge_index)
visualize(out, color=data.y)

GAT

from torch_geometric.nn import GATConv

class GAT(torch.nn.Module):
    def __init__(self, hidden_channels):
        super().__init__()
        # GATConv uses a single attention head by default, so the layer sizes match the GCN
        self.conv1 = GATConv(dataset.num_features, hidden_channels)
        self.conv2 = GATConv(hidden_channels, dataset.num_classes)

    def forward(self, x, edge_index):
        x = self.conv1(x, edge_index)
        x = x.relu()
        x = F.dropout(x, p=0.5, training=self.training)
        x = self.conv2(x, edge_index)
        return x

model = GAT(hidden_channels=16)
print(model)
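Instead of GCN's fixed degree-based weights, `GATConv` learns attention coefficients. For a node `i` and each neighbour `j` (including `i` itself, since self-loops are added by default), it scores `e_ij = LeakyReLU(a^T [W h_i || W h_j])` with a default negative slope of 0.2 and normalizes the scores with a softmax over `j`. The plain-Python sketch below computes these coefficients for one node and a single head; the transformed features `wh` (standing in for `W h`) and the attention vector `a` are hypothetical fixed numbers rather than learned parameters.

```python
import math

def leaky_relu(v, negative_slope=0.2):
    return v if v > 0 else negative_slope * v

def gat_attention(i, neighbours, wh, a):
    """Softmax-normalized attention of node i over its neighbours (single head)."""
    # Split a into the part applied to W h_i and the part applied to W h_j.
    half = len(a) // 2
    a_self, a_nbr = a[:half], a[half:]
    score_i = sum(p * q for p, q in zip(a_self, wh[i]))
    scores = {j: leaky_relu(score_i + sum(p * q for p, q in zip(a_nbr, wh[j])))
              for j in neighbours}
    m = max(scores.values())  # subtract the max for numerical stability
    exp_scores = {j: math.exp(s - m) for j, s in scores.items()}
    total = sum(exp_scores.values())
    return {j: v / total for j, v in exp_scores.items()}

# Hypothetical transformed features W h for three nodes and attention vector a.
wh = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
a = [0.5, 0.5, 1.0, -1.0]
alpha = gat_attention(0, [0, 1, 2], wh, a)
print({j: round(v, 3) for j, v in alpha.items()})
```

The resulting weights then scale the neighbours' transformed features in the aggregation, so different neighbours can contribute unequally.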

Training and testing

criterion = torch.nn.CrossEntropyLoss()  # cross-entropy loss
# A new optimizer is needed because the model (and therefore its parameters) changed
optimizer = torch.optim.Adam(model.parameters(), lr=0.01, weight_decay=5e-4)

def train():
    model.train()
    x, edge_index = data.x, data.edge_index
    optimizer.zero_grad()
    out = model(x, edge_index)
    loss = criterion(out[data.train_mask], data.y[data.train_mask])
    loss.backward()
    optimizer.step()
    return loss

for epoch in range(1, 201):
    loss = train()
    print(f'Epoch: {epoch:03d}, Loss: {loss:.4f}')

def test():
    model.eval()
    out = model(data.x, data.edge_index)
    pred = out.argmax(dim=1)  # Use the class with highest probability.
    test_correct = pred[data.test_mask] == data.y[data.test_mask]  # Check against ground-truth labels.
    test_acc = int(test_correct.sum()) / int(data.test_mask.sum())  # Derive ratio of correct predictions.
    return test_acc

test_acc = test()
print(f'Test Accuracy: {test_acc:.4f}')

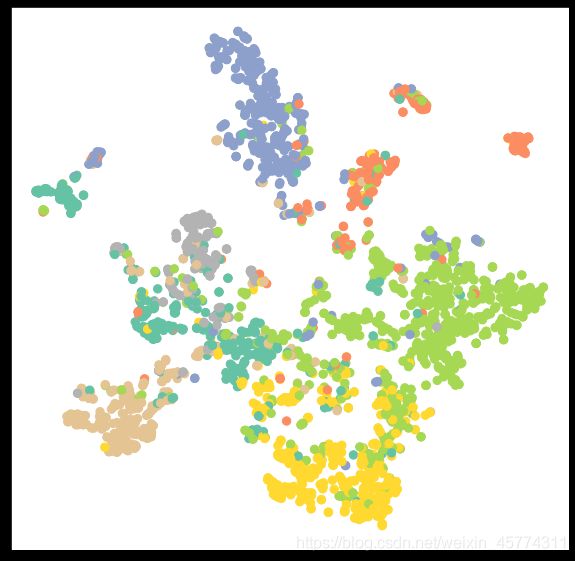

Accuracy: 0.755

Visualization

model.eval()
out = model(data.x, data.edge_index)
visualize(out, color=data.y)

Summary

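This post mainly covered how to use some of the graph network layers built into PyG. To understand how they work in depth, however, you need to study the source code and build your own graph networks with the message-passing mechanism.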
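As a starting point for building your own layer, one round of message passing can be sketched in plain Python as three steps: compute a message along every edge, aggregate the incoming messages at each target node, and update each node's state. PyG's `MessagePassing` base class organizes a layer around analogous `message()`, `aggregate()` and `update()` steps; the specific choices below (copy the neighbour's features, sum them, add the sum to the node's own features) and the function name `message_passing` are illustrative assumptions, not PyG code.

```python
def message_passing(num_nodes, edge_index, x,
                    message=lambda x_j: x_j,
                    update=lambda x_i, agg: [a + b for a, b in zip(x_i, agg)]):
    """One round of sum-aggregation message passing over a 2-row COO edge list."""
    dim = len(x[0])
    aggregated = [[0.0] * dim for _ in range(num_nodes)]
    for src, dst in zip(*edge_index):
        msg = message(x[src])  # message sent from node src to node dst
        aggregated[dst] = [a + m for a, m in zip(aggregated[dst], msg)]  # sum aggregation
    return [update(x[i], aggregated[i]) for i in range(num_nodes)]

edge_index = [[0, 1, 1, 2], [1, 0, 2, 1]]  # undirected path 0 - 1 - 2
x = [[1.0], [2.0], [3.0]]
print(message_passing(3, edge_index, x))  # [[3.0], [6.0], [5.0]]
```

Swapping in a different `message` (for example a normalized or attention-weighted one) or a different `update` is how layers such as GCN and GAT differ within the same framework.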