7. A simple implementation of training a neural network on the MNIST dataset

1. Build the fully connected neural network (from Section 3)

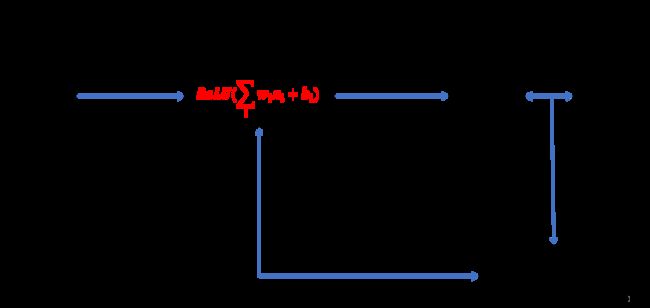

Starting from the simple neural network framework, add a ReLU activation function.
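To make the role of ReLU concrete, here is a minimal sketch that applies it to a small hand-written tensor. The input values are illustrative assumptions, not taken from the tutorial. ReLU replaces negative entries with zero and leaves non-negative entries unchanged.

```python
import torch
import torch.nn.functional as F

# Illustrative input values (an assumption for this sketch)
x = torch.tensor([-2.0, -0.5, 0.0, 1.5, 3.0])

# ReLU(x) = max(0, x), applied element-wise
y = F.relu(x)

print(y.tolist())  # [0.0, 0.0, 0.0, 1.5, 3.0]
```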

```python
import torch.nn as nn

########################## Step 1: build the fully connected network ##########################
class NeuralNet(nn.Module):
    def __init__(self, in_features, hidden_features, out_features):
        super().__init__()
        # input layer -> hidden layer
        self.layer1 = nn.Linear(in_features, hidden_features)
        # hidden layer -> output layer
        self.layer2 = nn.Linear(hidden_features, out_features)

    def forward(self, x):
        y = self.layer1(x)         # pass through the first linear layer
        y = nn.functional.relu(y)  # ReLU activation
        y = self.layer2(y)         # pass through the second linear layer
        return y
```

2. Download the dataset (from Section 6)

```python
from torchvision.transforms import ToTensor
from torchvision.datasets import MNIST

############################ Step 2: download the dataset ############################
trainData = MNIST(root="./",
                  train=True,
                  transform=ToTensor(),
                  download=True)

testData = MNIST(root="./",
                 train=False,
                 transform=ToTensor(),
                 download=True)
```

3. Load the dataset (from Section 6)

```python
from torch.utils.data import DataLoader

############################ Step 3: load the dataset ############################
batch_size = 64

trainData_loader = DataLoader(dataset=trainData,
                              batch_size=batch_size,
                              shuffle=True)

testData_loader = DataLoader(dataset=testData,
                             batch_size=batch_size,
                             shuffle=True)
```

4. One-hot encoding of the sample labels

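```python
import torch

####################### Step 4: one-hot encoding ################################
def one_hot(label, depth=10):
    # label: 1-D tensor of class indices, shape [batch_size]
    out = torch.zeros(label.size(0), depth)
    idx = label.long().view(-1, 1)            # column of indices, shape [batch_size, 1]
    out.scatter_(dim=1, index=idx, value=1)   # write a 1 at each label's position
    return out
```

In this example the problem is treated as digit classification, not as data fitting (regression). With one-hot encoding, each scalar label from 0 to 9 is encoded as a 10-dimensional vector.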
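As a small illustration of this encoding, the sketch below one-hot encodes three example labels and checks the result against PyTorch's built-in `torch.nn.functional.one_hot`. The label values are made up for the example. The helper is restated (using `label.long()`) so that the block runs on its own.

```python
import torch
import torch.nn.functional as F

def one_hot(label, depth=10):
    # Same idea as the tutorial's helper: a 1 at the label's index, 0 elsewhere
    out = torch.zeros(label.size(0), depth)
    idx = label.long().view(-1, 1)
    out.scatter_(dim=1, index=idx, value=1)
    return out

# Example labels (an assumption for this sketch)
labels = torch.tensor([3, 0, 9])
encoded = one_hot(labels)

print(encoded.shape)        # torch.Size([3, 10])
print(encoded[0].tolist())  # [0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]

# The built-in returns integers, so cast to float before comparing
builtin = F.one_hot(labels, num_classes=10).float()
print(torch.equal(encoded, builtin))  # True
```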

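5. Train on the samples using the network built above

Basic idea: each 28×28 image tensor is flattened and passed through the simple fully connected network, which learns to classify it and outputs a 10-dimensional vector corresponding to the digits 0 to 9.

In practice: each step takes a batch of 64 sample images and runs a forward pass through NeuralNet to obtain the output y_pred. The output is compared with the true labels y to compute the loss, backpropagation computes the gradients, and stochastic gradient descent (SGD) then updates the weights of the network.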
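The flow just described can be sketched as a single step on random data, which stands in for one MNIST batch so that nothing needs to be downloaded. The `nn.Sequential` model, the hidden size of 256, and the random inputs are assumptions made for this sketch. It also checks that `nn.CrossEntropyLoss` gives the same value for class-index targets and for one-hot targets. One-hot (probability) targets need a reasonably recent PyTorch release.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)

# Stand-in for one MNIST batch: 64 random "images" with random labels
data = torch.rand(64, 1, 28, 28)
label = torch.randint(0, 10, (64,))

# Two-layer network with the same layout as NeuralNet (hidden size is an assumption)
model = nn.Sequential(nn.Linear(28 * 28, 256), nn.ReLU(), nn.Linear(256, 10))
loss_fn = nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

# Flatten: [64, 1, 28, 28] -> [64, 784], then forward pass -> [64, 10]
x = data.view(data.size(0), 28 * 28)
y_pred = model(x)
print(x.shape, y_pred.shape)

# Class-index targets and one-hot targets yield the same loss value
loss_idx = loss_fn(y_pred, label)
loss_onehot = loss_fn(y_pred, F.one_hot(label, num_classes=10).float())
print(torch.allclose(loss_idx, loss_onehot))

# One optimisation step: clear old gradients, backpropagate, update weights
optimizer.zero_grad()
loss_idx.backward()
optimizer.step()
```

Because both target formats give the same loss, the one-hot step is optional with this loss function; passing `label` directly is also valid.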

in_features = 28*28

hidden_features = 1000

out_features = 10

NN_model = NeuralNet(in_features, hidden_features, out_features) # 定义网络具体参数

L = nn.CrossEntropyLoss() # 交叉熵损失函数

from torch.optim.sgd import SGD

optimizer = SGD(NN_model.parameters(),lr=0.1) # 最小梯度优化算法

progress = []

loss_degree = []

####################### Step 5: 机器学习 ################################

for idx,(data,label) in enumerate(trainData_loader):

# Size([batch_size,1,28,28]) -> Size([batch_size,28*28])

data = data.view(data.size(0),28*28)

optimizer.zero_grad() #梯度清零

y_pred = NN_model(data) #正向学习

y = one_hot(label)

loss = L(y_pred,y) #计算损失

loss.backward() #反向传播,计算参数

optimizer.step() #参数更新

progress.append(100. * idx / len(trainData_loader))

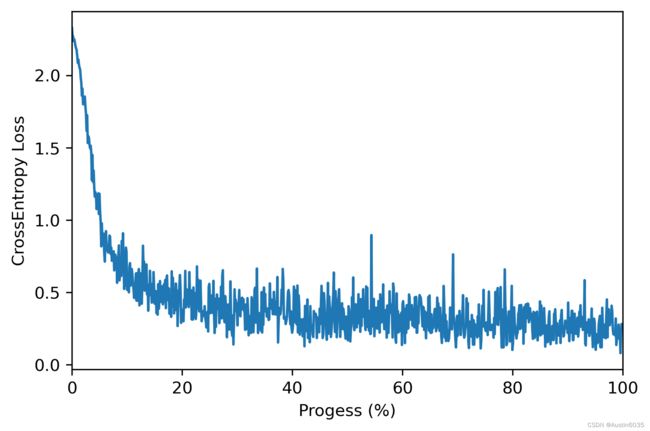

loss_degree.append(loss.item())6. 训练过程中数据的可视化(方便理解)

import matplotlib.pyplot as plt

plt.figure(dpi=300)

plt.plot(progress,loss_degree)

plt.xlabel("Progess (%)")

plt.ylabel("CrossEntropy Loss")

plt.xlim([0,100])

plt.show()6. 将NN_model用于预测测试集数据

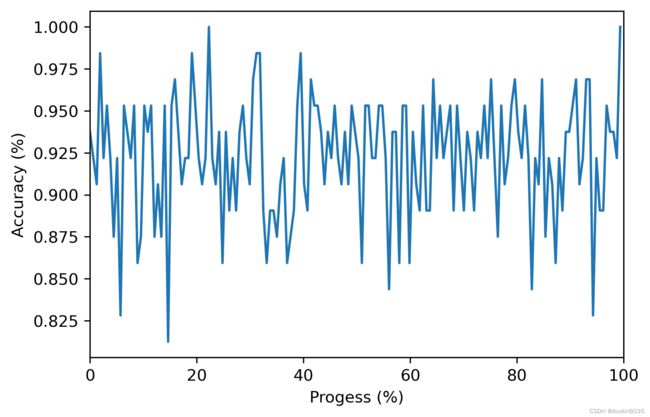

progress = []

accuracy = []

####################### Step 5: 模型评估 ################################

for idx,(data,label) in enumerate(testData_loader):

data = data.view(data.size(0),28*28)

y_pred = NN_model(data)

_, y_pred = torch.max(y_pred, dim=1) # dim = 1 列是第0个维度,行是第1个维度

progress.append(100. * idx / len(testData_loader))

accuracy.append( ((y_pred == label).sum().item())/ data.size(0) )

plt.figure(dpi=300)

plt.plot(progress,accuracy)

plt.xlabel("Progess (%)")

plt.ylabel("Accuracy (%)")

plt.xlim([0,100])

plt.show()7. 训练模型的保存和加载

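```python
torch.save(NN_model, "./NN_model.pth")  # save the model: torch.save(net, PATH)

# load the model: net = torch.load(PATH)
# Note: PyTorch 2.6 and later default to weights_only=True, so loading a fully
# pickled model needs weights_only=False (only do this for files you trust).
NN_model = torch.load("./NN_model.pth", weights_only=False)
```

This creates the file NN_model.pth in the current directory. It contains the trained model and can be loaded later.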
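The calls above save and load the whole model object. A commonly recommended alternative is to save only the parameters with `state_dict()`, then rebuild the network and load the parameters into it. The sketch below illustrates that approach under some assumptions: the file name `NN_model_state.pth` and the temporary directory are hypothetical, and a freshly initialised network stands in for the trained one.

```python
import os
import tempfile

import torch
import torch.nn as nn

class NeuralNet(nn.Module):
    def __init__(self, in_features, hidden_features, out_features):
        super().__init__()
        self.layer1 = nn.Linear(in_features, hidden_features)
        self.layer2 = nn.Linear(hidden_features, out_features)

    def forward(self, x):
        return self.layer2(nn.functional.relu(self.layer1(x)))

# Stand-in for the trained model (untrained here; an assumption for the sketch)
model = NeuralNet(28 * 28, 256, 10)

# Hypothetical file name, written to a temporary directory
path = os.path.join(tempfile.mkdtemp(), "NN_model_state.pth")
torch.save(model.state_dict(), path)  # save parameters only

# Rebuild the same architecture, then load the saved parameters into it
restored = NeuralNet(28 * 28, 256, 10)
restored.load_state_dict(torch.load(path))
restored.eval()

# Both networks should now produce identical outputs for the same input
x = torch.rand(4, 28 * 28)
with torch.no_grad():
    same = torch.equal(model(x), restored(x))
print(same)  # True
```

With this approach the class definition must be available when loading, but the saved file does not depend on how the model object was pickled.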

Appendix: complete code

```python
import torch
import torch.nn as nn
from torch.optim import SGD
from torchvision.transforms import ToTensor
from torchvision.datasets import MNIST
from torch.utils.data import DataLoader
import matplotlib.pyplot as plt

########################## Step 0: hyperparameters ################################
batch_size = 64
in_features = 28*28
hidden_features = 256
out_features = 10

########################## Step 1: build the fully connected network #############
class NeuralNet(nn.Module):
    def __init__(self, in_features, hidden_features, out_features):
        super().__init__()
        self.layer1 = nn.Linear(in_features, hidden_features)
        self.layer2 = nn.Linear(hidden_features, out_features)

    def forward(self, x):
        y = self.layer1(x)
        y = nn.functional.relu(y)
        y = self.layer2(y)
        return y

############################ Step 2: download the dataset #########################
trainData = MNIST(root="./",
                  train=True,
                  transform=ToTensor(),
                  download=True)

testData = MNIST(root="./",
                 train=False,
                 transform=ToTensor(),
                 download=True)

############################ Step 3: load the dataset #############################
trainData_loader = DataLoader(dataset=trainData,
                              batch_size=batch_size,
                              shuffle=True)

testData_loader = DataLoader(dataset=testData,
                             batch_size=batch_size,
                             shuffle=True)

####################### Step 4: label encoding ####################################
def one_hot(label, depth=10):
    out = torch.zeros(label.size(0), depth)
    idx = label.long().view(-1, 1)
    out.scatter_(dim=1, index=idx, value=1)
    return out

###############################################################################
NN_model = NeuralNet(in_features, hidden_features, out_features)  # instantiate the network with concrete sizes
L = nn.CrossEntropyLoss()                                         # cross-entropy loss
optimizer = SGD(NN_model.parameters(), lr=0.1)                    # stochastic gradient descent optimizer

progress = []
loss_degree = []

####################### Step 5: training ################################
for idx, (data, label) in enumerate(trainData_loader):
    # Size([batch_size,1,28,28]) -> Size([batch_size,28*28])
    data = data.view(data.size(0), 28*28)

    optimizer.zero_grad()    # reset gradients
    y_pred = NN_model(data)  # forward pass
    y = one_hot(label)
    loss = L(y_pred, y)      # compute the loss
    loss.backward()          # backpropagation: compute gradients
    optimizer.step()         # update parameters

    progress.append(100. * idx / len(trainData_loader))
    loss_degree.append(loss.item())

plt.figure(dpi=300)
plt.plot(progress, loss_degree)
plt.xlabel("Progress (%)")
plt.ylabel("CrossEntropy Loss")
plt.xlim([0, 100])
plt.show()

progress = []
accuracy = []

####################### Step 6: model evaluation ################################
with torch.no_grad():  # gradients are not needed for evaluation
    for idx, (data, label) in enumerate(testData_loader):
        data = data.view(data.size(0), 28*28)
        y_pred = NN_model(data)
        # dim=1: take the largest of the 10 class scores in each row (one row per sample)
        _, y_pred = torch.max(y_pred, dim=1)

        progress.append(100. * idx / len(testData_loader))
        # fraction of correct predictions in this batch (between 0 and 1)
        accuracy.append((y_pred == label).sum().item() / data.size(0))

plt.figure(dpi=300)
plt.plot(progress, accuracy)
plt.xlabel("Progress (%)")
plt.ylabel("Accuracy")
plt.xlim([0, 100])
plt.show()
```
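The evaluation loop records one accuracy value per batch. A single overall test accuracy can be obtained by counting correct predictions across all batches, which also weights a smaller final batch correctly. The sketch below is one way to do that. The function name `evaluate` is hypothetical, and random tensors wrapped in a `TensorDataset` stand in for the MNIST test set so the block runs without a download.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset

def evaluate(model, loader):
    """Return the fraction of correctly classified samples over a whole loader."""
    model.eval()
    correct, total = 0, 0
    with torch.no_grad():  # no gradients needed for evaluation
        for data, label in loader:
            data = data.view(data.size(0), -1)  # flatten each image
            pred = model(data).argmax(dim=1)    # predicted class per sample
            correct += (pred == label).sum().item()
            total += label.size(0)
    return correct / total

torch.manual_seed(0)

# Stand-in data: 200 random "images" and labels (an assumption for this sketch)
fake_images = torch.rand(200, 1, 28, 28)
fake_labels = torch.randint(0, 10, (200,))
loader = DataLoader(TensorDataset(fake_images, fake_labels), batch_size=64)

# Untrained network with the same layout as NeuralNet
model = nn.Sequential(nn.Linear(28 * 28, 256), nn.ReLU(), nn.Linear(256, 10))

acc = evaluate(model, loader)
print(f"overall accuracy: {acc:.3f}")
```

With the objects from the complete code, the call would be `evaluate(NN_model, testData_loader)`.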