Detailed TensorRT deployment tutorial for YOLOv5 v6.1 on Windows 10, with bug fixes


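Table of contents

- Preface
- I. What to prepare before you start
- II. Installation
  - 1. Install VS2019
  - 2. Install CUDA 11.1 and cuDNN (for CUDA 11.x)
    - 1) Install CUDA
    - 2) Install cuDNN
    - 3) Verify the installation
  - 2. Install OpenCV
  - 3. Install Anaconda and create a virtual environment
  - 4. Install CMake
- III. Download and run the YOLOv5 code
  - 1. Download the YOLOv5 code. GitHub link: [yolov5](https://github.com/ultralytics/yolov5/tags)
  - 2. Download the model weights yolov5s.pt
  - 3. Install the dependencies
  - 4. Run a test
- III. Install TensorRT
  - 1. [Download version 8.2.5.1](https://developer.nvidia.com/tensorrt)
  - 2. Environment setup
  - 3. Test run
  - 2. PGM files
- TensorRT C++ and Python deployment
  - 1. Download tensorrtx ([github](https://github.com/wang-xinyu/tensorrtx/tags))
  - 2. Download dirent.h
  - 3. Generate yolov5s.wts
  - 4. Modify CMakeLists.txt ⭐
  - 5. Run the tensorrtx acceleration commands
- Summary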

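Preface

What is TensorRT?

TensorRT is a C++ library for high-performance inference on NVIDIA graphics processing units (GPUs). It applies deep optimizations to a network's inference pass and can reach very high inference speeds, which makes it an excellent way to speed up a model with little or no loss of accuracy.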

I. What to prepare before you start

- CUDA and cuDNN: I use CUDA 11.1 with a cuDNN build for CUDA 11.x

- OpenCV: the version does not need to be very recent; I use 4.2

- PyTorch: pytorch and torchvision

- PyCharm and Anaconda: download both

- tensorrtx: the open-source deployment code

- VS2019 (Visual Studio 2019)

- CMake 3.19.4

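II. Installation

1. Install VS2019

Download the installer, double-click it to start the installation, and check the following options.

It is best to install to the default location.

2. Install CUDA 11.1 and cuDNN (for CUDA 11.x)

1) Install CUDA

First update the graphics driver (I used 鲁大师 (Lu Dashi) for this), then download the CUDA installer and the cuDNN archive.

The installation may fail.

If the CUDA installer reports every component as not installed or failed, choose Custom installation and uncheck these two options.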

2) Install cuDNN

Copy the files in the bin, include, and lib folders of the cuDNN archive into the matching folders under C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.1.
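After copying, a quick check that the cuDNN files landed where expected can save debugging time later. The sketch below is one way to do that, under some assumptions: the glob patterns (`cudnn*.h`, `cudnn*.dll`, `cudnn*.lib`) and the helper name `check_cudnn` are mine, and the exact file names vary between cuDNN releases.

```python
from pathlib import Path

# Glob patterns expected under the CUDA root after the copy (assumed names;
# adjust them if your cuDNN release uses different file names).
EXPECTED = {
    "include": "cudnn*.h",
    "bin": "cudnn*.dll",
    "lib": "**/cudnn*.lib",  # .lib files usually sit in lib\x64
}


def check_cudnn(cuda_root):
    """Return {subfolder: [matching file names]} for each expected location."""
    root = Path(cuda_root)
    found = {}
    for subdir, pattern in EXPECTED.items():
        folder = root / subdir
        if folder.is_dir():
            found[subdir] = sorted(p.name for p in folder.glob(pattern))
        else:
            found[subdir] = []
    return found


if __name__ == "__main__":
    cuda_root = r"C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.1"
    for subdir, names in check_cudnn(cuda_root).items():
        print(subdir, "OK" if names else "MISSING", names)
```

An empty list for any of the three folders suggests that part of the copy was skipped.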

3) Verify the installation

Open cmd and run:

nvcc -V
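If you want to check the reported version from a script rather than by eye, the sketch below parses the `release X.Y` part of the `nvcc -V` output. The sample line is illustrative (your build number will differ), and the function names are my own.

```python
import re
import subprocess


def parse_nvcc_release(text):
    """Extract (major, minor) from the 'release X.Y' part of `nvcc -V` output."""
    match = re.search(r"release\s+(\d+)\.(\d+)", text)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def installed_cuda_release():
    """Run `nvcc -V` and parse it; returns None if nvcc is not on the PATH."""
    try:
        result = subprocess.run(
            ["nvcc", "-V"], capture_output=True, text=True, check=True
        )
    except (OSError, subprocess.CalledProcessError):
        return None
    return parse_nvcc_release(result.stdout)


if __name__ == "__main__":
    # Illustrative sample of the last line nvcc prints.
    sample = "Cuda compilation tools, release 11.1, V11.1.105"
    print(parse_nvcc_release(sample))
    print(installed_cuda_release())
```

For the setup in this article the parsed release should be 11.1; a `None` result usually means the CUDA bin folder is not on the PATH.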

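2. Install OpenCV

3. Install Anaconda and create a virtual environment

Download the installer from the official website.

Run the installer and click Next through each step, choosing the install path along the way. In this article it is installed to the default location on the C drive, inside the hidden ProgramData folder. Then wait for the installation to finish.

For PyTorch 1.8.1, open cmd and create the virtual environment: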

conda create -n your_name python==3.7

activate your_name

pip install torch==1.8.1+cu111 torchvision==0.9.1+cu111 torchaudio==0.8.1 -f https://download.pytorch.org/whl/torch_stable.html
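Once the wheels are installed, it is worth confirming that PyTorch actually sees the GPU before going further. A minimal sketch, run inside the new environment (the function name `torch_cuda_report` is my own; the `torch` attributes it reads are standard ones):

```python
def torch_cuda_report():
    """Summarize the PyTorch install; also works when torch is not installed."""
    try:
        import torch
    except ImportError:
        return {"torch": None, "cuda_build": None, "gpu_available": False}
    return {
        "torch": torch.__version__,        # version string of the installed build
        "cuda_build": torch.version.cuda,  # CUDA version torch was built against
        "gpu_available": torch.cuda.is_available(),
    }


if __name__ == "__main__":
    print(torch_cuda_report())
```

With the command above you would expect a `+cu111` build and `gpu_available` to be `True`; if it is `False`, the driver or the CUDA install is the first thing to recheck.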

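4. Install CMake

Download the installer.

After the download finishes, keep clicking Next until you reach the following screen.

After the installation, restart the computer, open Anaconda Prompt (Anaconda3), and run the following command to confirm that CMake is on the PATH:

cmake --version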
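Before moving on, it can help to confirm in one go that every command-line tool installed so far is reachable. The sketch below only looks the tools up on the PATH; the tool list is my assumption of what this tutorial needs.

```python
import shutil

# Tools this tutorial relies on (assumed list; edit to taste).
TOOLS = ["nvcc", "cmake", "conda", "python"]


def locate_tools(names=TOOLS):
    """Map each tool name to its resolved path, or None if it is not on the PATH."""
    return {name: shutil.which(name) for name in names}


def missing_tools(names=TOOLS):
    """Return the tool names that could not be found."""
    return [name for name, path in locate_tools(names).items() if path is None]


if __name__ == "__main__":
    for name, path in locate_tools().items():
        print(f"{name:8s} {path or 'NOT FOUND'}")
```

Anything reported as not found usually means the installer did not add its folder to the PATH, or that the terminal was opened before the installation finished.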

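III. Download and run the YOLOv5 code

1. Download the YOLOv5 code. GitHub link: [yolov5](https://github.com/ultralytics/yolov5/tags)

Either v6.0 or the latest release works.

2. Download the model weights yolov5s.pt

3. Install the dependencies

Activate the environment created earlier, then run:

d:

cd D:\YOLOv5_TensorRT\yolov5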

pip install -r requirements.txt -i https://pypi.tuna.tsinghua.edu.cn/simple

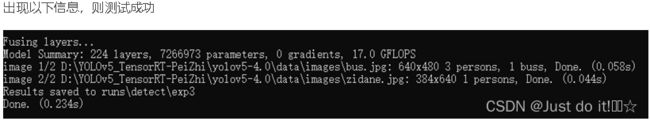

4. Run a test

python detect.py --source ./data/images/ --weights weights/yolov5s.pt

三、Tensorrt安装

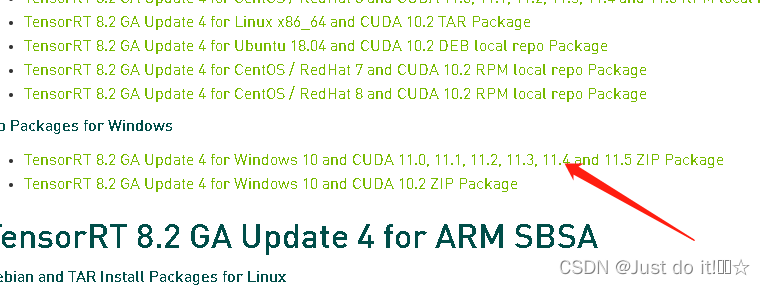

1. 下载版本8.2.5.1

2. 环境配置

跟cudnn一样,将include下的文件复制到cuda的include文件内

lib下的dll文件复制到cuda 的bin文件夹内

lib下的lib文件复制到cuda 的lib/x64文件夹内
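The three copy rules above are easy to get wrong by hand, so here is a small sketch that builds the copy plan first and only copies when asked. The function names and the dry-run default are my own choices, not part of TensorRT.

```python
import shutil
from pathlib import Path


def plan_tensorrt_copy(trt_root, cuda_root):
    """Return (source, destination) pairs following the three rules above."""
    trt, cuda = Path(trt_root), Path(cuda_root)
    plan = []
    # Rule 1: everything under include goes to CUDA's include folder.
    for header in sorted((trt / "include").glob("*")):
        if header.is_file():
            plan.append((header, cuda / "include" / header.name))
    # Rules 2 and 3: .dll files go to bin, .lib files go to lib/x64.
    for lib_file in sorted((trt / "lib").glob("*")):
        suffix = lib_file.suffix.lower()
        if suffix == ".dll":
            plan.append((lib_file, cuda / "bin" / lib_file.name))
        elif suffix == ".lib":
            plan.append((lib_file, cuda / "lib" / "x64" / lib_file.name))
    return plan


def apply_plan(plan, dry_run=True):
    """Print every planned copy; perform it only when dry_run is False."""
    for src, dst in plan:
        print(f"{src} -> {dst}")
        if not dry_run:
            dst.parent.mkdir(parents=True, exist_ok=True)
            shutil.copy2(src, dst)
```

Running `apply_plan(plan_tensorrt_copy(trt_root, cuda_root))` with the default `dry_run=True` prints what would be copied. Writing into C:\Program Files normally needs an elevated prompt.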

3. 测试运行

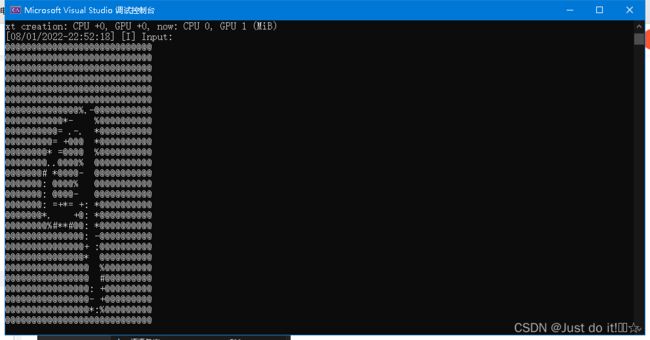

进入到TensorRT-8.2.5.1\samples\sampleMNIST文件夹内,vs2019打开![]()

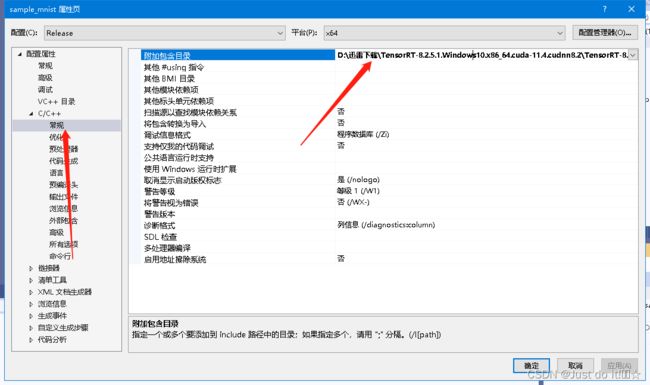

- 此处对sampleMNIST示例进行测试,vs2019打开sample_mnist.sln

- 然后依次点击 项目—>属性—>VC++目录

- 将路径D:\tensorrt_tar\TensorRT-7.0.0.11\lib分别加入可执行文件目录及库目录里

- 将D:\tensorrt_tar\TensorRT-7.0.0.11\include加入C/C++ —> 常规 —> 附加包含目录

- 将nvinfer.lib、nvinfer_plugin.lib、nvonnxparser.lib和nvparsers.lib加入链接器–>输入–>附加依赖项

- 将C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.1\lib加入链接器->常规->附加库目录

2. PGM文件

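TensorRT C++ and Python deployment

1. Download tensorrtx ([github](https://github.com/wang-xinyu/tensorrtx/tags))

Pick the latest version; it supports YOLOv5 v6.0 and v6.1.

2. Download dirent.h

Dirent is a C/C++ programming interface for retrieving information about files and directories; this project provides it for Visual Studio. GitHub link: https://github.com/tronkko/dirent

After downloading, copy its dirent.h into the include folder of tensorrtx.

3. Generate yolov5s.wts

Copy tensorrtx's gen_wts.py into the downloaded YOLOv5 source tree and run it to get the .wts file.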
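To make the .wts file less of a black box, here is a sketch of the text layout as I understand it from tensorrtx's gen_wts.py: the first line is the number of tensors, then one line per tensor with its name, element count, and each float32 value as big-endian hex. Treat gen_wts.py in your tensorrtx tag as the authoritative definition; the helpers below are illustrative and work on plain Python lists rather than a real checkpoint. Recent tensorrtx READMEs give the actual command as `python gen_wts.py -w yolov5s.pt -o yolov5s.wts`, but check the README of the tag you downloaded.

```python
import struct


def write_wts(path, tensors):
    """tensors: dict mapping a layer name to a flat list of floats."""
    with open(path, "w") as f:
        f.write(f"{len(tensors)}\n")
        for name, values in tensors.items():
            # Each value is stored as the hex form of a big-endian float32.
            hex_words = " ".join(struct.pack(">f", float(v)).hex() for v in values)
            f.write(f"{name} {len(values)} {hex_words}\n")


def read_wts(path):
    """Read the file back into a dict of name -> list of floats."""
    tensors = {}
    with open(path) as f:
        count = int(f.readline())
        for _ in range(count):
            parts = f.readline().split()
            name, size = parts[0], int(parts[1])
            words = parts[2:2 + size]
            tensors[name] = [struct.unpack(">f", bytes.fromhex(w))[0] for w in words]
    return tensors
```

Opening a generated yolov5s.wts in a text editor and comparing its first lines against this layout is a quick sanity check that the export finished.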

4. Modify CMakeLists.txt ⭐

Change the user-specific paths and the CUDA version:

cmake_minimum_required(VERSION 3.2)

if(NOT DEFINED CMAKE_CUDA_ARCHITECTURES)

set(CMAKE_CUDA_ARCHITECTURES 50) # change to match your GPU's compute capability

endif()

set(CudaToolkitDir "C:\\Program Files\\NVIDIA GPU Computing Toolkit\\CUDA\\v11.1") # change to your CUDA install path

project(yolov5)

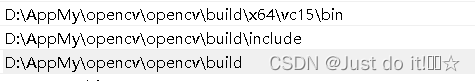

set(OpenCV_DIR "D:\\AppMy\\opencv\\opencv\\build") #1

set(TRT_DIR "D:\\Myproject\\TensorRT-8.2.5.1.Windows10.x86_64.cuda-11.4.cudnn8.2\\TensorRT-8.2.5.1") #2

set(OpenCV_INCLUDE_DIRS "D:\\AppMy\\opencv\\opencv\\build\\include") #3

set(OpenCV_LIBS "D:\\AppMy\\opencv\\opencv\\build\\x64\\vc15\\lib\\opencv_world340.lib") #4, the file name must match your installed OpenCV version

add_definitions(-std=c++11)

add_definitions(-DAPI_EXPORTS)

option(CUDA_USE_STATIC_CUDA_RUNTIME OFF)

set(CMAKE_CXX_STANDARD 11)

set(CMAKE_BUILD_TYPE Debug)

set(THREADS_PREFER_PTHREAD_FLAG ON)

find_package(Threads)

# setup CUDA

find_package(CUDA REQUIRED)

message(STATUS " libraries: ${CUDA_LIBRARIES}")

message(STATUS " include path: ${CUDA_INCLUDE_DIRS}")

include_directories(${CUDA_INCLUDE_DIRS})

####

enable_language(CUDA) # add this line, then no need to setup cuda path in vs

####

include_directories(${PROJECT_SOURCE_DIR}/include)

include_directories(${TRT_DIR}\\include)

include_directories(D:\\yanyi\\project_process\\Completed_projects\\yolo-deploy\\tensorrtx-master\\yolov5) # 5

##### find package(opencv)

include_directories(${OpenCV_INCLUDE_DIRS})

include_directories(${OpenCV_INCLUDE_DIRS}\\opencv2) #6

# -D_MWAITXINTRIN_H_INCLUDED for solving error: identifier "__builtin_ia32_mwaitx" is undefined

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=c++11 -Wall -Ofast -D_MWAITXINTRIN_H_INCLUDED")

# setup opencv

find_package(OpenCV QUIET

NO_MODULE

NO_DEFAULT_PATH

NO_CMAKE_PATH

NO_CMAKE_ENVIRONMENT_PATH

NO_SYSTEM_ENVIRONMENT_PATH

NO_CMAKE_PACKAGE_REGISTRY

NO_CMAKE_BUILDS_PATH

NO_CMAKE_SYSTEM_PATH

NO_CMAKE_SYSTEM_PACKAGE_REGISTRY

)

message(STATUS "OpenCV library status:")

message(STATUS " version: ${OpenCV_VERSION}")

message(STATUS " libraries: ${OpenCV_LIBS}")

message(STATUS " include path: ${OpenCV_INCLUDE_DIRS}")

include_directories(${OpenCV_INCLUDE_DIRS})

link_directories(${TRT_DIR}\\lib) #7

link_directories(${OpenCV_DIR}\\x64\\vc15\\lib) #8

#add_executable(yolov5 ${PROJECT_SOURCE_DIR}/calibrator.cpp ${PROJECT_SOURCE_DIR}/yolov5.cpp ${PROJECT_SOURCE_DIR}/yololayer.cu ${PROJECT_SOURCE_DIR}/yololayer.h ${PROJECT_SOURCE_DIR}/preprocess.cu ${PROJECT_SOURCE_DIR}/preprocess.h)

add_executable(yolov5 ${PROJECT_SOURCE_DIR}/yolov5.cpp ${PROJECT_SOURCE_DIR}/common.hpp ${PROJECT_SOURCE_DIR}/yololayer.cu ${PROJECT_SOURCE_DIR}/yololayer.h ${PROJECT_SOURCE_DIR}/preprocess.cu ${PROJECT_SOURCE_DIR}/preprocess.h) #17

target_link_libraries(yolov5 "nvinfer" "nvinfer_plugin") #5

target_link_libraries(yolov5 ${OpenCV_LIBS}) #6

target_link_libraries(yolov5 ${CUDA_LIBRARIES}) #7

target_link_libraries(yolov5 Threads::Threads) #8
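One detail in the listing is easy to miss: it links opencv_world340.lib, while the preparation section mentions OpenCV 4.2, so the file name needs to match whatever version you actually installed. The sketch below derives the expected name from a version string, assuming the naming used by the prebuilt Windows packages (version digits concatenated, `d` suffix for debug builds). The helper name is my own.

```python
def opencv_world_lib(version, debug=False):
    """Build the opencv_world import library name for a version like '4.2.0'."""
    # Pad missing components so that '4.2' is treated as '4.2.0'.
    major, minor, patch = (version.split(".") + ["0", "0"])[:3]
    suffix = "d" if debug else ""
    return f"opencv_world{major}{minor}{patch}{suffix}.lib"


if __name__ == "__main__":
    print(opencv_world_lib("4.2.0"))
    print(opencv_world_lib("3.4.0"))
```

Compare the result with the files in your OpenCV build\x64\vc15\lib folder and set OpenCV_LIBS accordingly.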

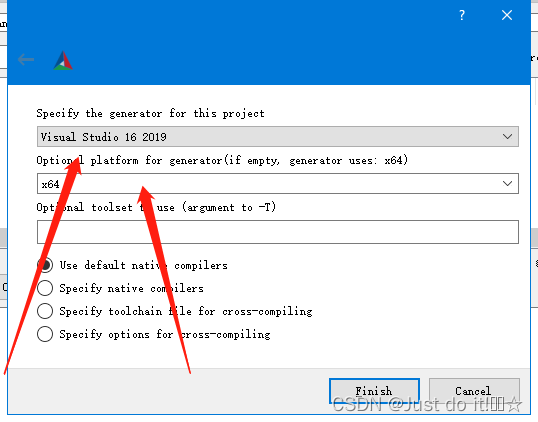

然后打开CMake软件,输入tensorrtx下的yolov5文件路径和build路径,会自动创建文件夹

选择vs2019和x64

之后点击Configure ->Generate->open project,打开VS

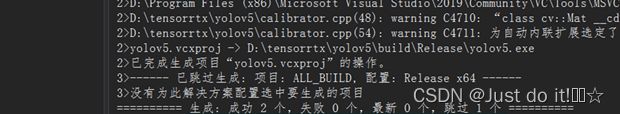

选择Realse和x64,点击生成解决方案

编译成功后会显示成功,只要没有失败即可,在yolov5-build-Realse文件中生成exe文件
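The GUI steps above can also be scripted, which is handy when you rebuild often. The sketch below builds the two equivalent CMake command lines: configure with the "Visual Studio 16 2019" generator for x64, then build the Release configuration. The directory arguments are placeholders for your own paths, and the `-S`/`-B` options need CMake 3.13 or newer.

```python
import subprocess


def cmake_commands(source_dir, build_dir, config="Release"):
    """Return the configure and build command lines as argument lists."""
    configure = [
        "cmake", "-S", source_dir, "-B", build_dir,
        "-G", "Visual Studio 16 2019", "-A", "x64",
    ]
    build = ["cmake", "--build", build_dir, "--config", config]
    return [configure, build]


def run_all(commands):
    """Run each command in order and stop at the first failure."""
    for cmd in commands:
        print(" ".join(cmd))
        subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Placeholder paths: point these at your tensorrtx checkout.
    for cmd in cmake_commands("tensorrtx/yolov5", "tensorrtx/yolov5/build"):
        print(" ".join(cmd))
```

Call `run_all(cmake_commands(...))` from the environment where `cmake --version` works.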

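5. Run the tensorrtx acceleration commands

Copy the .wts file generated in step 3 into the yolov5\build\Release folder, then run in cmd:

yolov5.exe -s yolov5s.wts yolov5s.engine s

This generates a yolov5s.engine file in the current directory.

Then run the inference command:

yolov5.exe -d yolov5s.engine ../samples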
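Since serializing the engine is the slow step, a small wrapper can skip it when an up-to-date engine already exists. The sketch below only assembles the two command lines shown above and decides whether the `-s` step is needed. The helper names and the list of model sizes are my assumptions; check the tensorrtx README for the sizes your tag accepts.

```python
import os

# Assumed subset of model-size arguments; extend it for your tensorrtx tag.
MODEL_SIZES = ("n", "s", "m", "l", "x")


def serialize_cmd(exe, wts, engine, size="s"):
    """Command that converts a .wts file into a serialized engine."""
    if size not in MODEL_SIZES:
        raise ValueError(f"unknown model size: {size!r}")
    return [exe, "-s", wts, engine, size]


def detect_cmd(exe, engine, image_dir):
    """Command that runs detection on every image in image_dir."""
    return [exe, "-d", engine, image_dir]


def needs_rebuild(wts, engine):
    """True if the engine is missing or older than the .wts file."""
    if not os.path.exists(engine):
        return True
    return os.path.getmtime(engine) < os.path.getmtime(wts)


def plan_run(exe, wts, engine, image_dir, size="s"):
    """Return the commands to run, skipping serialization when possible."""
    steps = []
    if needs_rebuild(wts, engine):
        steps.append(serialize_cmd(exe, wts, engine, size))
    steps.append(detect_cmd(exe, engine, image_dir))
    return steps
```

Pass each returned list to `subprocess.run(cmd, check=True)` from the Release folder. A serialized engine is tied to the GPU and TensorRT version it was built with, so delete it and rebuild after changing either.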

加速后的检测结果

总结

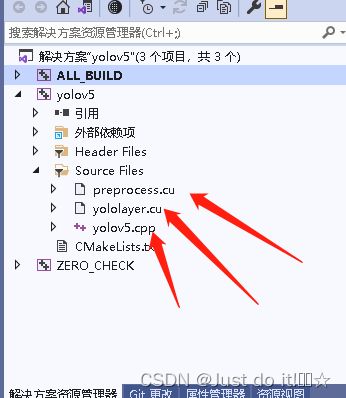

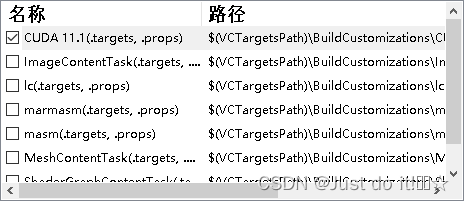

bug:如果编译时出现20多个错误的情况,找不到各种函数的解决办法

点击项目-生成自定义选择cuda11.1

右键这三个文件的属性选择cuda即可