Classic Convolutional Neural Network Models - InceptionNet

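Table of Contents

- Classic CNN Models - InceptionNet
  - Overview and Key Innovations
  - Model Architecture
    - The Inception Module
    - GoogLeNet -- A Concrete Implementation of InceptionNet
  - Model Reproduction
    - Reproducing the Submodule That Makes Up the Inception Module
    - Reproducing the Inception Module
    - Building the InceptionNet Model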

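Classic CNN Models - InceptionNet

Overview and Key Innovations

The arrival of LeNet marked a milestone in the development of convolutional neural networks. Later models such as AlexNet and VGGNet improved prediction accuracy by stacking more convolution and pooling layers. LeNet, AlexNet and VGGNet all buy accuracy with extra computation. They also share a second trait: each convolutional layer uses a single kernel size, which makes it hard to capture coarse, global features and fine details at the same time. InceptionNet was proposed to address this. It applies several kernel sizes in parallel so that features at multiple scales are extracted together.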

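【Key innovations】:

- Introduces the Inception structure, which fuses feature information from different scales.

- Uses 1×1 convolution kernels for dimensionality reduction and channel mapping.

- Adds two auxiliary classifiers to help training. They give the hidden units and intermediate layers a direct training signal, so those layers also learn useful features. In the Inception network this acts as a form of regularization and helps prevent overfitting.

- Replaces the large fully connected layers at the end of the network with average pooling, followed by a single linear classification layer. This greatly reduces the number of model parameters.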
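The parameter savings claimed in the last two points can be checked with simple arithmetic. The sketch below counts weights only and ignores biases. The layer sizes are illustrative assumptions, not values taken from this article:

- 192 input channels are reduced to 16 before a 5×5 convolution with 32 filters.
- A 7×7×1024 final feature map feeds 1000 classes.

```python
# Back-of-the-envelope weight counts (biases ignored).
# All layer sizes below are illustrative assumptions.

def conv_weights(kernel_size: int, in_channels: int, out_channels: int) -> int:
    """Number of weights in a kernel_size x kernel_size convolution."""
    return kernel_size * kernel_size * in_channels * out_channels


def dense_weights(in_features: int, out_features: int) -> int:
    """Number of weights in a fully connected layer."""
    return in_features * out_features


# 1) A 5x5 convolution applied directly vs. after a 1x1 channel reduction
direct_5x5 = conv_weights(5, 192, 32)
reduced_5x5 = conv_weights(1, 192, 16) + conv_weights(5, 16, 32)

# 2) Flatten + fully connected head vs. global average pooling + fully connected head
flatten_fc = dense_weights(7 * 7 * 1024, 1000)
gap_fc = dense_weights(1024, 1000)  # the pooling layer itself has no weights

if __name__ == "__main__":
    print(f"5x5 direct: {direct_5x5:,}   with 1x1 reduction: {reduced_5x5:,}")
    print(f"flatten + FC: {flatten_fc:,}   GAP + FC: {gap_fc:,}")
```

With these numbers, the 1×1 reduction shrinks the 5×5 branch by roughly 10×. The pooling head is smaller by a factor of exactly 49, the number of spatial positions in the 7×7 map.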

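Model Architecture

The Inception Module

【Design idea】:

- The paper introduces the Inception module. Its goal is to extract features from coarse to fine by using convolution kernels of different sizes, and then to fuse this multi-scale information. The two figures below show the two basic internal structures proposed for the Inception module. The essential difference between them is whether a 1×1 convolution is applied first. That 1×1 convolution reduces the number of channels, which cuts the parameter count and model complexity and helps prevent overfitting.

- The same input is fed into several parallel feature-extraction branches, and each branch produces its own feature maps. The output height and width of every branch must be the same. The resulting feature maps are then concatenated along the depth (channel) dimension.

- From a training perspective, separate feature-extraction branches lend themselves to parallel computation, which helps speed up training.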
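The concatenation step can be illustrated without any framework, including the reason every branch must produce the same height and width. This minimal sketch stores a feature map as a nested Python list of shape [H][W][C]. That is channels last, matching TensorFlow's default layout where `tf.concat(..., axis=3)` joins channels. `concat_channels` and the helper `constant_map` are hypothetical names used only for illustration.

```python
# Minimal, dependency-free sketch of channel-wise concatenation.
# A feature map is a nested list indexed as fmap[h][w][c] (channels last).

def shape(fmap):
    """Return (H, W, C) of a nested-list feature map."""
    return len(fmap), len(fmap[0]), len(fmap[0][0])


def concat_channels(branches):
    """Join branch outputs along the channel axis; spatial sizes must match."""
    h, w, _ = shape(branches[0])
    for b in branches:
        bh, bw, _ = shape(b)
        if (bh, bw) != (h, w):
            raise ValueError(f"branch has spatial size {(bh, bw)}, expected {(h, w)}")
    # For every pixel, append the channel vectors of all branches in order
    return [[sum((b[i][j] for b in branches), []) for j in range(w)] for i in range(h)]


def constant_map(h, w, c, value):
    """Hypothetical helper: an H x W x C map filled with a single value."""
    return [[[value] * c for _ in range(w)] for _ in range(h)]


if __name__ == "__main__":
    out = concat_channels([constant_map(4, 4, 2, 1.0),
                           constant_map(4, 4, 3, 2.0),
                           constant_map(4, 4, 1, 3.0)])
    print(shape(out))  # (4, 4, 6)
    print(out[0][0])   # [1.0, 1.0, 2.0, 2.0, 2.0, 3.0]
```

Concatenation only adds channels, so a branch whose height or width differs has no pixel to line up with and must be rejected.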

GoogLeNet – A Concrete Implementation of InceptionNet

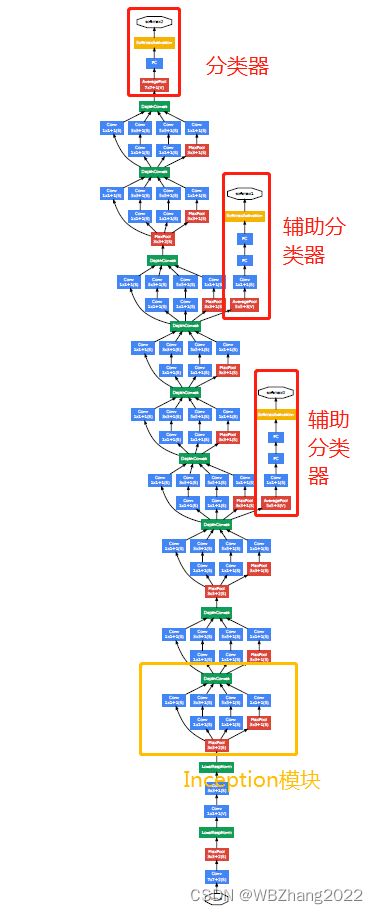

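GoogLeNet is built by stacking multiple Inception modules. Note that the model in the figure has two extra side branches. These are the auxiliary classifiers, which are added to regularize training and help prevent overfitting.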
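During training, GoogLeNet adds the auxiliary classifiers' losses to the main loss. The original GoogLeNet paper weights each auxiliary loss by 0.3 and discards the auxiliary branches at inference time. The sketch below shows only that loss combination, in plain Python with made-up softmax outputs. The function names are hypothetical, and a real implementation would compute these losses inside the framework.

```python
import math

AUX_WEIGHT = 0.3  # weight applied to each auxiliary loss during training


def cross_entropy(probs, label):
    """Cross-entropy of one softmax output `probs` against an integer class label."""
    return -math.log(probs[label])


def googlenet_training_loss(main_probs, aux_probs_list, label, aux_weight=AUX_WEIGHT):
    """Main classifier loss plus the weighted auxiliary losses (training only)."""
    loss = cross_entropy(main_probs, label)
    for aux_probs in aux_probs_list:
        loss += aux_weight * cross_entropy(aux_probs, label)
    return loss


if __name__ == "__main__":
    # Made-up softmax outputs for a 3-class problem; the true class is 0.
    main = [0.7, 0.2, 0.1]
    aux1 = [0.5, 0.3, 0.2]
    aux2 = [0.6, 0.3, 0.1]
    print(googlenet_training_loss(main, [aux1, aux2], label=0))
    # At inference only the main classifier's output is used.
```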

Model Reproduction

Below we reproduce a simple InceptionNet with TensorFlow 2.0 to deepen our understanding of how this network works.

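Reproducing the Submodule That Makes Up the Inception Module

```python
## An Inception module is built from convolutions with several different kernel sizes.
## ConvBNRelu is the basic unit used by every branch: Conv2D -> BatchNormalization -> ReLU.
import tensorflow as tf
from tensorflow.keras import Model
from tensorflow.keras.layers import Activation, BatchNormalization, Conv2D


class ConvBNRelu(Model):
    def __init__(self, channels, kernel_size=3, strides=1, padding='same'):
        super(ConvBNRelu, self).__init__()
        self.model = tf.keras.models.Sequential([
            Conv2D(channels, kernel_size, strides=strides, padding=padding),
            BatchNormalization(),
            Activation('relu')
        ])

    def call(self, x):
        x = self.model(x)
        return x
```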
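ConvBNRelu chains a convolution, batch normalization and a ReLU. The plain-Python sketch below roughly illustrates what the last two steps do to one channel's activations within a mini-batch. It shows training-mode batch normalization followed by ReLU. The defaults gamma=1, beta=0 and eps=1e-3 are assumed to match Keras's initial values; treat them as assumptions. The sketch also ignores the moving averages that BatchNormalization keeps for inference.

```python
import math


def batch_norm(values, gamma=1.0, beta=0.0, eps=1e-3):
    """Training-mode batch normalization of one channel over a mini-batch."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)  # biased batch variance
    return [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in values]


def relu(values):
    """Element-wise ReLU: negative values become 0."""
    return [max(0.0, v) for v in values]


if __name__ == "__main__":
    conv_outputs = [2.0, 4.0, 6.0, 8.0]  # made-up activations of one channel
    normalized = batch_norm(conv_outputs)
    print([round(v, 3) for v in normalized])
    print([round(v, 3) for v in relu(normalized)])
```

Normalizing first centres each channel around zero, so the following ReLU keeps roughly the above-average responses and zeroes the rest.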

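Reproducing the Inception Module

```python
## Combine different kernel sizes (separate feature-extraction branches) into an Inception module
import tensorflow as tf
from tensorflow.keras import Model
from tensorflow.keras.layers import MaxPool2D


class InceptionBlock(Model):
    def __init__(self, channels, strides=1):
        super(InceptionBlock, self).__init__()
        self.channels = channels
        self.strides = strides
        ## Branch 1: 1x1 convolution
        self.c1 = ConvBNRelu(channels, kernel_size=1, strides=strides)
        ## Branch 2: 1x1 convolution, then 3x3 convolution
        self.c2_1 = ConvBNRelu(channels, kernel_size=1, strides=strides)
        self.c2_2 = ConvBNRelu(channels, kernel_size=3, strides=1)
        ## Branch 3: 1x1 convolution, then 5x5 convolution
        self.c3_1 = ConvBNRelu(channels, kernel_size=1, strides=strides)
        self.c3_2 = ConvBNRelu(channels, kernel_size=5, strides=1)
        ## Branch 4: 3x3 max pooling, then 1x1 convolution
        self.p4_1 = MaxPool2D(3, strides=1, padding='same')
        self.c4_2 = ConvBNRelu(channels, kernel_size=1, strides=strides)

    def call(self, x):
        x1 = self.c1(x)
        x2_1 = self.c2_1(x)
        x2_2 = self.c2_2(x2_1)
        x3_1 = self.c3_1(x)
        x3_2 = self.c3_2(x3_1)
        x4_1 = self.p4_1(x)
        x4_2 = self.c4_2(x4_1)
        # Concatenate the four branches along the depth (channel) axis.
        # 'same' padding and a shared stride give every branch the same height and width.
        x = tf.concat([x1, x2_2, x3_2, x4_2], axis=3)
        return x
```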

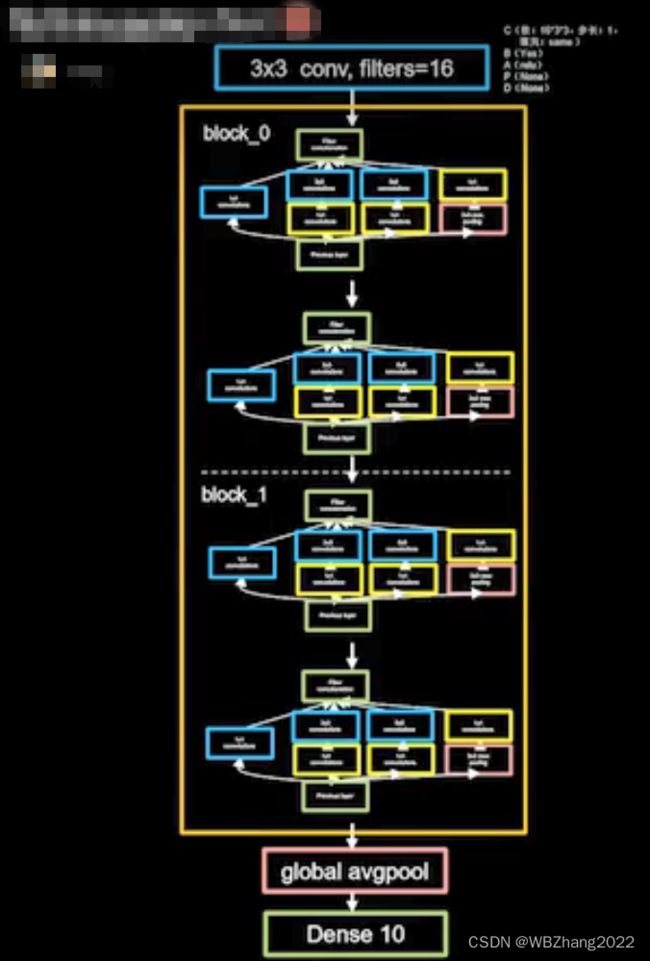
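The shape bookkeeping inside InceptionBlock can be checked by hand. With padding='same', a layer with stride s turns an H×W map into ceil(H/s)×ceil(W/s). Each of the four branches ends in `channels` filters, so the concatenated output has 4 × channels. The plain-Python sketch below encodes that rule. The function names are hypothetical, and the sketch assumes the 'same' padding used in the code above.

```python
import math


def same_padding_size(size, stride):
    """Output length along one axis of a 'same'-padded conv/pool with this stride."""
    return math.ceil(size / stride)


def trace_branch(size, strides_in_order):
    """Apply the strides of a branch's layers one after another."""
    for stride in strides_in_order:
        size = same_padding_size(size, stride)
    return size


def inception_block_output_shape(h, w, channels, strides=1):
    """(H, W, C) produced by InceptionBlock(channels, strides) for an H x W input."""
    # Strides of the layers in each branch, in the order used by InceptionBlock
    branch_strides = [
        [strides],     # branch 1: 1x1 conv
        [strides, 1],  # branch 2: 1x1 conv -> 3x3 conv
        [strides, 1],  # branch 3: 1x1 conv -> 5x5 conv
        [1, strides],  # branch 4: 3x3 max pool -> 1x1 conv
    ]
    sizes = {(trace_branch(h, s), trace_branch(w, s)) for s in branch_strides}
    if len(sizes) != 1:
        raise ValueError(f"branches disagree on spatial size: {sizes}")
    out_h, out_w = sizes.pop()
    # Every branch ends with `channels` filters, so concatenation gives 4 * channels
    return out_h, out_w, channels * len(branch_strides)


if __name__ == "__main__":
    print(inception_block_output_shape(32, 32, 16, strides=2))  # (16, 16, 64)
    print(inception_block_output_shape(16, 16, 16, strides=1))  # (16, 16, 64)
```

Every layer uses padding='same', and the stride is applied exactly once per branch. The spatial sizes therefore always agree, which is what makes the `tf.concat` call valid.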

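Building the InceptionNet Model

```python
## Wrap the whole model in a class
import tensorflow as tf
from tensorflow.keras import Model
from tensorflow.keras.layers import Dense, GlobalAveragePooling2D


class InceptionNet(Model):
    def __init__(self, num_blocks, num_classes, init_channels=16, **kwargs):
        super(InceptionNet, self).__init__(**kwargs)
        self.in_channels = init_channels
        self.out_channels = init_channels
        self.num_blocks = num_blocks
        self.init_channels = init_channels
        ## A stem convolution followed by a stack of Inception modules
        self.c1 = ConvBNRelu(init_channels)
        self.blocks = tf.keras.models.Sequential()
        for block_id in range(num_blocks):
            for layer_id in range(2):
                if layer_id == 0:
                    # The first module of each block uses stride 2 to halve height and width
                    block = InceptionBlock(self.out_channels, strides=2)
                else:
                    block = InceptionBlock(self.out_channels, strides=1)
                self.blocks.add(block)
            # Each Inception module outputs 4 x out_channels (four concatenated branches).
            # Double the per-branch channels for the next block, a common choice
            # when the spatial size is halved.
            self.out_channels *= 2
        # Global average pooling instead of large fully connected layers
        self.p1 = GlobalAveragePooling2D()
        self.f1 = Dense(num_classes, activation='softmax')

    def call(self, x):
        x = self.c1(x)
        x = self.blocks(x)
        x = self.p1(x)
        y = self.f1(x)
        return y
```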
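The plain-Python sketch below shows how shapes evolve through the InceptionNet class. It replays the same loops and records (H, W, C) after each stage. The 32×32 input and the call `InceptionNet(num_blocks=2, num_classes=10)` are illustrative assumptions, for example a CIFAR-10-sized image. `trace_inception_net` is a hypothetical helper, not part of TensorFlow. It assumes the 'same' padding used throughout the code, so a stride-2 module maps H to ceil(H/2).

```python
import math


def trace_inception_net(h, w, num_blocks, num_classes, init_channels=16):
    """List (stage name, shape) after each stage of the InceptionNet class above."""
    shapes = [("c1 (3x3 ConvBNRelu)", (h, w, init_channels))]
    out_channels = init_channels
    for block_id in range(num_blocks):
        for layer_id in range(2):
            stride = 2 if layer_id == 0 else 1
            h, w = math.ceil(h / stride), math.ceil(w / stride)
            # Four concatenated branches, each with out_channels filters
            shapes.append((f"block {block_id}, module {layer_id}", (h, w, 4 * out_channels)))
        out_channels *= 2  # same doubling as in InceptionNet.__init__
    last_channels = shapes[-1][1][2]
    shapes.append(("global pooling", (last_channels,)))
    shapes.append(("dense + softmax", (num_classes,)))
    return shapes


if __name__ == "__main__":
    for name, shape in trace_inception_net(32, 32, num_blocks=2, num_classes=10):
        print(f"{name:24s} {shape}")
```

Under these assumptions the pooled vector has 128 features. The final Dense layer therefore needs only 128 × 10 weights plus 10 biases.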