Review of Algorithm (HITSZ) 含22年真题回忆

Review of Algorithm (HITSZ)含22年真题回忆

-

- 1. Time Analysis

-

- 1.1 Basic

- 1.2 Master Method

- 1.3 Recurrence Problems

- 2. Sorting Algorithm

-

- 2.1 Comparing Sort

-

- 2.1.1 Insertion Sort

- 2.1.2 Merge Sort

- 2.1.3 Shell Sort

- 2.1.4 Lower boundary of comparison sort

- 2.2 Linear Sort

-

- 2.2.1 Counting Sort (Stable)

- 2.2.2 Radix Sort (Stable)

- 2.2.3 Bucket Sort

- 3. Hash Table

-

- 3.1 Hash method

- 3.2 Collision

- 4. BST

-

- 4.1 Normal BST

- 4.2 Treap

- 5. RB Tree

- 6. B Tree

- 7. Augmenting Data Structures

- 8. Skip Lists

- 9. Amortized Analysis

-

- 9.1 Basic Concept

- 9.2 Examples

-

- 9.2.1 Dynamic tables

- 9.2.2 Binary Counter

- 10. Dynamic Programming

- 11. Greedy Algorithm

-

- 11.1 Basic

- 11.2 Examples

- 12. Linear Programming

- 13. FFT

- 14. NP

-

- 14.1 P, NP, NPC, NPH

- 14.2 Solution for NPC problems

- 15. 2022年真题回忆

-

- 15.1 选择题

- 15.2 大题

1. Time Analysis

1.1 Basic

NOTE:

- l o g a b = l o g c b l o g c a log_ab=\frac{log_cb}{log_ca} logab=logcalogcb

- l g n = l o g 2 n lgn=log_2n lgn=log2n

-

p ( n ) = O ( f ( n ) ) p(n)=O(f(n)) p(n)=O(f(n))

p ( n ) ≤ c f ( n ) p(n)\le cf(n) p(n)≤cf(n).

-

p ( n ) = Ω ( f ( n ) ) p(n)=\Omega(f(n)) p(n)=Ω(f(n))

p ( n ) ≥ c f ( n ) p(n)\ge cf(n) p(n)≥cf(n)

-

p ( n ) = Θ ( f ( n ) ) p(n)=\Theta(f(n)) p(n)=Θ(f(n))

c 1 f ( n ) ≤ p ( n ) ≤ c 2 f ( n ) c_1f(n)\le p(n)\le c_2f(n) c1f(n)≤p(n)≤c2f(n)

-

p ( n ) = o ( f ( n ) ) p(n)=o(f(n)) p(n)=o(f(n))

p ( n ) < c f ( n ) p(n)< cf(n) p(n)<cf(n)

-

p ( n ) = ω ( f ( n ) ) p(n)=\omega(f(n)) p(n)=ω(f(n))

p ( n ) > c f ( n ) p(n)> cf(n) p(n)>cf(n)

Solution: Calculating l i m n → ∞ p ( n ) f ( n ) lim_{n\rightarrow\infty}\frac{p(n)}{f(n)} limn→∞f(n)p(n).

- If l i m n → ∞ p ( n ) f ( n ) = a ≠ 0 lim_{n\rightarrow\infty}\frac{p(n)}{f(n)}=a\ne0 limn→∞f(n)p(n)=a=0, p ( n ) = Θ ( f ( n ) ) p(n)=\Theta(f(n)) p(n)=Θ(f(n))

- If l i m n → ∞ p ( n ) f ( n ) = t ≤ a lim_{n\rightarrow\infty}\frac{p(n)}{f(n)}=t\le a limn→∞f(n)p(n)=t≤a, p ( n ) = O ( f ( n ) ) p(n)=O(f(n)) p(n)=O(f(n)), since p ( n ) f ( n ) < δ + t ≤ δ + a \frac{p(n)}{f(n)}<\delta+t\le\delta+a f(n)p(n)<δ+t≤δ+a.

- If l i m n → ∞ p ( n ) f ( n ) = t ≥ a lim_{n\rightarrow\infty}\frac{p(n)}{f(n)}=t\ge a limn→∞f(n)p(n)=t≥a, p ( n ) = Ω ( f ( n ) ) p(n)=\Omega(f(n)) p(n)=Ω(f(n)), since p ( n ) f ( n ) > t − δ ≥ a − δ \frac{p(n)}{f(n)}>t-\delta\ge a-\delta f(n)p(n)>t−δ≥a−δ

- If l i m n → ∞ p ( n ) f ( n ) = 0 lim_{n\rightarrow\infty}\frac{p(n)}{f(n)}= 0 limn→∞f(n)p(n)=0, p ( n ) = o ( f ( n ) ) p(n)=o(f(n)) p(n)=o(f(n)), since p ( n ) f ( n ) < δ \frac{p(n)}{f(n)}<\delta f(n)p(n)<δ.

- If l i m n → ∞ p ( n ) f ( n ) = ∞ lim_{n\rightarrow\infty}\frac{p(n)}{f(n)}= \infty limn→∞f(n)p(n)=∞, p ( n ) = ω ( f ( n ) ) p(n)=\omega(f(n)) p(n)=ω(f(n)), since p ( n ) f ( n ) > N 0 \frac{p(n)}{f(n)}>N_0 f(n)p(n)>N0.

1.2 Master Method

Given equation like T ( n ) = a T ( n b ) + f ( n ) T(n)=aT(\frac{n}{b})+f(n) T(n)=aT(bn)+f(n)

- If f ( n ) = O ( n l o g b ( a ) − ϵ ) f(n)=O(n^{log_b(a)-\epsilon}) f(n)=O(nlogb(a)−ϵ), T ( n ) = Θ ( n l o g b ( a ) ) T(n)=\Theta(n^{log_b(a)}) T(n)=Θ(nlogb(a))

- If f ( n ) = Θ ( n l o g b ( a ) ) f(n)=\Theta(n^{log_b(a)}) f(n)=Θ(nlogb(a)), T ( n ) = Θ ( n l o g b ( a ) ∗ l g n ) T(n)=\Theta(n^{log_b(a)}*lgn) T(n)=Θ(nlogb(a)∗lgn)

- If f ( n ) = Ω ( n l o g b ( a ) + ϵ ) f(n)=\Omega(n^{log_b(a)+\epsilon}) f(n)=Ω(nlogb(a)+ϵ), and a f ( n / b ) ≤ c f ( n ) , ∃ c < 1 af(n/b)\le cf(n), \exist c<1 af(n/b)≤cf(n),∃c<1, then T ( n ) = Θ ( f ( n ) ) T(n)=\Theta(f(n)) T(n)=Θ(f(n))

1.3 Recurrence Problems

-

Big Integer Multiplication

X Y = a c 2 n + ( a b + c d ) 2 n / 2 + b d XY=ac2^n+(ab+cd)2^{n/2}+bd XY=ac2n+(ab+cd)2n/2+bd

Time complexity:

T ( n ) = 4 T ( n / 2 ) + O ( n ) T(n)=4T(n/2)+O(n) T(n)=4T(n/2)+O(n)Divide and conquer:

X Y = a c 2 n + ( ( a + c ) ( b + d ) − a c − b d ) 2 n / 2 + b d XY=ac2^n+((a+c)(b+d)-ac-bd)2^{n/2}+bd XY=ac2n+((a+c)(b+d)−ac−bd)2n/2+bd

Time complexity:

T ( n ) = 3 T ( n / 2 ) + O ( n ) T(n)=3T(n/2)+O(n) T(n)=3T(n/2)+O(n) -

FFT

-

Chess Board Cover

Put the “L” into center.

-

Binary Search

2. Sorting Algorithm

2.1 Comparing Sort

2.1.1 Insertion Sort

Insert a number into a sorted array. Need to move the element.

Time complexity:

T ( n ) = ∑ i = 1 i = n ( i − 1 ) = O ( n 2 ) T(n)=\sum_{i=1}^{i=n} (i-1) = O(n^2) T(n)=i=1∑i=n(i−1)=O(n2)

2.1.2 Merge Sort

Divide the array into two parts, sort them separately and merge them

Time complexity:

T ( n ) = 2 T ( n 2 ) + O ( n ) T(n)=2T(\frac{n}{2})+O(n) T(n)=2T(2n)+O(n)

According to master method: a = 2 , b = 2 , f ( n ) = c n a=2,b=2,f(n)=cn a=2,b=2,f(n)=cn. Thus n l o g b a = n = Θ ( n ) n^{log_ba}=n=\Theta(n) nlogba=n=Θ(n). So that

T ( n ) = Θ ( n l g n ) T(n)=\Theta(nlgn) T(n)=Θ(nlgn)

2.1.3 Shell Sort

希尔排序动图_哔哩哔哩_bilibili

Time complexity:

≈ O ( N 1.25 ) \approx O(N^{1.25}) ≈O(N1.25)

2.1.4 Lower boundary of comparison sort

Θ ( n l g n ) \Theta(nlgn) Θ(nlgn)

2.2 Linear Sort

2.2.1 Counting Sort (Stable)

-

Flow:

- Input array A A A.

- Assigning 0 to array C C C. ( O ( k ) O(k) O(k))

- Counting each element in an array A A A, recording in C C C. ( O ( n ) O(n) O(n))

- Accumulating array C C C. ( O ( k ) O(k) O(k))

- Sorting in array B B B. ( O ( n ) O(n) O(n))

-

Time complexity: O ( n + k ) O(n + k) O(n+k), where n n n is the input number, k k k is equivalent to value of the maximal number.

2.2.2 Radix Sort (Stable)

- Run Counting Sort in group

- Time complexity: O ( b r ( n + 2 r ) ) = O ( n ) O(\frac{b}{r}(n+2^r))=O(n) O(rb(n+2r))=O(n). Where b b b is the bits number of the compared numbers, r r r is the bits number of per group number. Thus there are b r \frac{b}{r} rb groups. We do not want r > l g n r>lgn r>lgn.

2.2.3 Bucket Sort

- Divide input array into several buckets, running insert sort in each bucket and concatenate them together.

- Expected time: Θ ( n ) \Theta(n) Θ(n)

- Worst-case performance: O ( n 2 ) O(n^2) O(n2)

- How to improve? Change insert sort to other efficient sort. Like, merge sort.

3. Hash Table

3.1 Hash method

- Division method: h ( k ) = k m o d m h(k)=k\mod m h(k)=kmodm. Do not choose m = 2 r m=2^r m=2r, this not uses all of bits in k k k.

- Multiplication method: h ( k ) = ( A k m o d 2 r ) r s h ( w − r ) h(k)=(Ak\mod 2^r) rsh(w-r) h(k)=(Akmod2r)rsh(w−r). A A A is an odd integer in the range 2 w − 1 ≤ A ≤ 2 w 2^{w-1}\le A\le2^w 2w−1≤A≤2w

- An unsuccessful search requires: 1 + α 1+\alpha 1+α, where α = m / n \alpha=m/n α=m/n, refer to load factor.

3.2 Collision

NOTE:

- A m o d B = A − ( A ÷ B ) ∗ B A \mod B = A - (A \div B)*B AmodB=A−(A÷B)∗B

- ( A + B ) m o d N = ( A m o d N + B m o d N ) m o d N (A+B)\mod N=(A\mod N + B\mod N) \mod N (A+B)modN=(AmodN+BmodN)modN

- Assuming h ( k ) = k m o d m h(k)=k\mod m h(k)=kmodm.

- Learning probing: h ′ ( k , i ) = ( k m o d m + i ) m o d m h'(k,i)=(k \mod m+i) \mod m h′(k,i)=(kmodm+i)modm

- Quadratic probing: h ′ ( k , i ) = ( k m o d m + c 1 i + c 2 i 2 ) m o d m h'(k,i)=(k \mod m+c_1i+c_2i^2) \mod m h′(k,i)=(kmodm+c1i+c2i2)modm

- Double hash probing: h ′ ( k , i ) = ( k m o d m + i ∗ h 2 ( k ) ) m o d m h'(k,i)=(k \mod m+i*h_2(k)) \mod m h′(k,i)=(kmodm+i∗h2(k))modm

- Theorem: Open-addressed hash table with load factor α \alpha α, the expected number of probes in an unsuccessful search is at most 1 / ( 1 − α ) 1/(1-\alpha) 1/(1−α) . That is to say:

- If the table is half full, then the expected number of probes is 1 / ( 1 – 0.5 ) = 2 1/(1–0.5) = 2 1/(1–0.5)=2.

- If the table is 90% full, then the expected number of probes is 1 / ( 1 – 0.9 ) = 10 1/(1–0.9) = 10 1/(1–0.9)=10.

4. BST

4.1 Normal BST

-

Left subtree is less than root

-

Right subtree is large than root

-

Deletion:

- No children: Delete directly.

- One child: Delete and make the child as the parent’s child.

- Two child: Replace with the successor. Apply 1 or 2 to delete it.

-

Randomly built BST has O ( l g n ) O(lgn) O(lgn) search time complexity.

4.2 Treap

Treap is the BST with priority. This helps build a randomly BST.

5. RB Tree

- Node is either red or black.

- Root is always black.

- Black height has only one.

- Terminate with two black nodes.

- Red node cannot connect with red node.

- Theorem: A RB tree with n n n internal nodes has height h ≤ 2 l g ( n + 1 ) h\le2lg(n+1) h≤2lg(n+1). h = O ( l g n ) h=O(lgn) h=O(lgn).

- In my opinion, N ≤ n + n − 1 N\le n+n-1 N≤n+n−1, so that h ≤ l g ( 2 n − 1 ) = O ( l g n ) h\le lg(2n-1)=O(lgn) h≤lg(2n−1)=O(lgn)

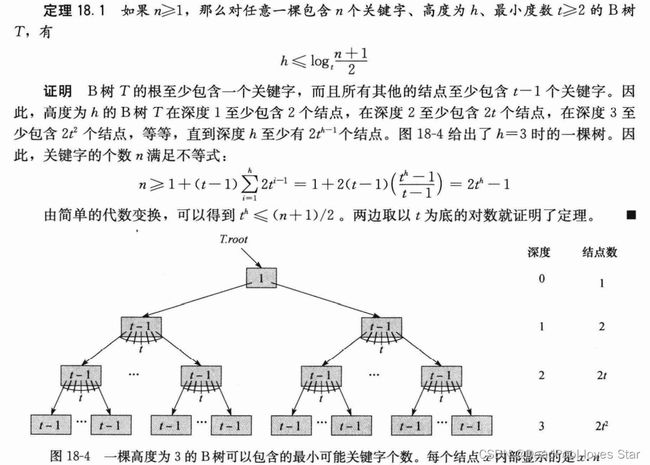

6. B Tree

-

Degree n n n (out degree): allows at least n − 1 n-1 n−1 keys, at most 2 n − 1 2n-1 2n−1 keys.

-

Split when insertion.

-

h ≤ l o g t ( ( n + 1 ) 2 ) h\le log_t(\frac{(n+1)}{2}) h≤logt(2(n+1)). See proof below.

- Deletion: Make sure each node has at least t − 1 t-1 t−1 keys and merge those nodes can be merged. i.e., both t − 1 t-1 t−1.

7. Augmenting Data Structures

- Order-Statistic Tree (OS-Tree): Recording the number of elements of subtree.

- Interval Tree: Each node represents an interval [ a , b ] [a,b] [a,b], and maintains a m a x max max field, which satisfies:

m a x = m a x ( { n o d e . h i g h n o d e . l e f t . m a x n o d e . r i g h t . m a x ) max=max(\left\{ \begin{aligned} node.high \\ node.left.max \\ node.right.max \end{aligned} \right.) max=max(⎩⎪⎨⎪⎧node.highnode.left.maxnode.right.max)

- Search interval

iin the Interval Tree:- Check if

rootis overlapped. If it is overlapped, then returnroot. - Check whether

root.left.max≥i.left, go to left. Recursively run process. - Otherwise, go to right. Recursively run process.

- Check if

8. Skip Lists

- Each node is promoted with 1 / 2 1/2 1/2 probability.

- Multiple indexing

- Idea: Skip list with l g n lgn lgn linked lists is like a binary tree (B+ tree).

- With high probability, every search in an n-element skip list costs O ( l g n ) O(lg n) O(lgn)

9. Amortized Analysis

9.1 Basic Concept

-

Aggregated:

T = ∑ i = 1 n u n i t n T=\frac {\sum_{i=1}^n unit}{n} T=n∑i=1nunit -

Accounting: Store c c c for each normal case operation, and each normal case operation spends m m m. Thus, you store c − m c-m c−m to the bank for each normal case operation. When the cost operation is coming, you spend all of your money in the bank. Make sure that the bank always has positive money.

T = ∑ i = 1 n c n = c T=\frac{\sum_{i=1}^n c}{n}=c T=n∑i=1nc=c -

Potential: Design a potential function ϕ ( D i ) \phi(D_i) ϕ(Di), s.t., c i ′ = c i + ϕ ( D i ) − ϕ ( D i − 1 ) c'_i=c_i+\phi(D_i)-\phi(D_{i-1}) ci′=ci+ϕ(Di)−ϕ(Di−1). Make sure that ϕ ( D 0 ) = 0 \phi(D_0)=0 ϕ(D0)=0, and ϕ ( D i ) ≥ 0 \phi(D_i)\ge 0 ϕ(Di)≥0

9.2 Examples

9.2.1 Dynamic tables

-

Aggregated

c i = { i , i − 1 = 2 n 1 , o t h e r w i s e c_i=\left\{ \begin{aligned} i, i-1=2^n \\ 1, otherwise \\ \end{aligned} \right. ci={i,i−1=2n1,otherwise∑ i = 1 n c i ≤ n + ∑ j = 1 l g ( n − 1 ) 2 j ≤ 3 n = Θ ( n ) \sum_{i=1}^nc_i\le n+\sum_{j=1}^{lg(n-1)} 2^j\le3n=\Theta(n) i=1∑nci≤n+j=1∑lg(n−1)2j≤3n=Θ(n)

Thus,

c i ′ = Θ ( n ) / n = Θ ( 1 ) c_i'=\Theta(n)/n= \Theta(1) ci′=Θ(n)/n=Θ(1) -

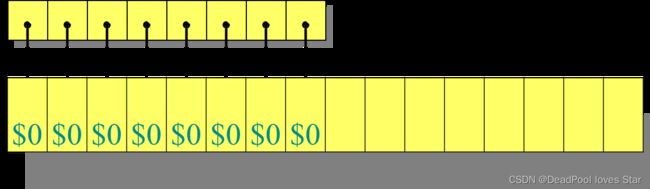

Accounting

Charge c i ′ = 3 c_i'=3 ci′=3 for each operation, so that 1 1 1 for insertion, 2 2 2 is stored for extending. When extending the table, 1 1 1 for inserting old item. 1 1 1 for inserting new item. See the figure below

-

Potential

Define

ϕ ( D i ) = 2 i − 2 ⌈ l g i ⌉ \phi(D_i)=2i-2^{\lceil lgi\rceil } ϕ(Di)=2i−2⌈lgi⌉

So that

c i ′ = c i + ϕ ( D i ) − ϕ ( D i − 1 ) c_i'=c_i+\phi(D_i)-\phi(D_{i-1}) ci′=ci+ϕ(Di)−ϕ(Di−1)

Thus, we have c i ′ = 3 c_i'=3 ci′=3.

9.2.2 Binary Counter

-

Aggregated

The summation of flip frequency of each bit.

n 2 + n 4 + n 8 + . . . + n 2 n = 2 n \frac{n}{2}+\frac{n}{4}+\frac{n}{8}+...+\frac{n}{2^n}=2n 2n+4n+8n+...+2nn=2n

So that c i ′ = 2 c_i'=2 ci′=2. -

Accounting

Operation 0 → 1 0\rightarrow 1 0→1 store 2 2 2, and pay for 1 1 1. Operation 1 → 0 1\rightarrow 0 1→0 pay for 1 1 1 store 0. Thus, for n n n operations, we must store 2 n 2n 2n fee. Thus c i ′ = 2 c_i'=2 ci′=2.

-

Potential

Define

ϕ ( D i ) = n u m b e r o f 1 i n c o u n t e r \phi(D_i)= number\ of\ 1\ in \ counter ϕ(Di)=number of 1 in counter

Thus,

c i ′ = c i + ϕ ( D i ) − ϕ ( D i − 1 ) c_i'=c_i+\phi(D_i)-\phi(D_{i-1}) ci′=ci+ϕ(Di)−ϕ(Di−1)

Let operation i i i changes t i t_i ti 1 1 1 to 0 0 0. And there only one 0 0 0 is changed to 1 1 1 (But be careful to the situation that the counter is overflow). Thus

ϕ ( D i ) ≤ ϕ ( D i − 1 ) − t i + 1 \phi(D_i)\le\phi(D_{i-1})-t_i+1 ϕ(Di)≤ϕ(Di−1)−ti+1

So that

c i ′ ≤ t i + 1 + 1 − t i = 2 c_i'\le t_i+1 +1-t_i=2 ci′≤ti+1+1−ti=2

10. Dynamic Programming

-

Matrix-chain Multiplication

The minimal multiplication times of A 1 ∗ A 2 ∗ . . . ∗ A n A_1*A_2*...*A_n A1∗A2∗...∗An

Let M i n [ i , j ] Min[i,j] Min[i,j] denote the minimal multiplication times of A i ∗ . . . ∗ A j A_i*...*A_j Ai∗...∗Aj

M i n [ i , j ] = { 0 i = j m i n i ≤ k ≤ j { M i n [ i , j ] , M i n [ i , k ] + M i n [ k + 1 , j ] + p i ∗ p k ∗ p j } i ≠ j Min[i,j]= \begin{cases} 0 & \text{ $ i=j $ } \\ min_{i\le k\le j}\{Min[i,j], Min[i,k]+Min[k+1,j]+p_i*p_k*p_j\} & \text{ $ i\ne j $ } \end{cases} Min[i,j]={0mini≤k≤j{Min[i,j],Min[i,k]+Min[k+1,j]+pi∗pk∗pj} i=j i=j -

LCS problem

The longest common sub sequence of X = x 1 x 2 . . . x m X=x_1x_2...x_m X=x1x2...xm and Y = y 1 y 2 . . . y n Y=y_1y_2...y_n Y=y1y2...yn

Let L C S [ i , j ] LCS[i,j] LCS[i,j] denote the length of the longest common sequence of X i = x 1... x i X_i=x1...x_i Xi=x1...xi and Y j = y 1 . . . y j Y_j=y_1...y_j Yj=y1...yj

Thus,

L C S [ i , j ] = { 0 i = 0 o r j = 0 L C S [ i − 1 , j − 1 ] + 1 X i = Y j m a x { L C S [ i − 1 , j ] , L C S [ i , j − 1 ] } X i ≠ Y j LCS[i,j]= \begin{cases} 0 & \text{ $ i =0 \ or\ j=0 $} \\ LCS[i-1,j-1]+1 & \text{ $ X_i=Y_j $ } \\ max\{ LCS[i-1,j],LCS[i,j-1]\} & \text{ $ X_i\ne Y_j $ } \end{cases} LCS[i,j]=⎩⎪⎨⎪⎧0LCS[i−1,j−1]+1max{LCS[i−1,j],LCS[i,j−1]} i=0 or j=0 Xi=Yj Xi=Yj -

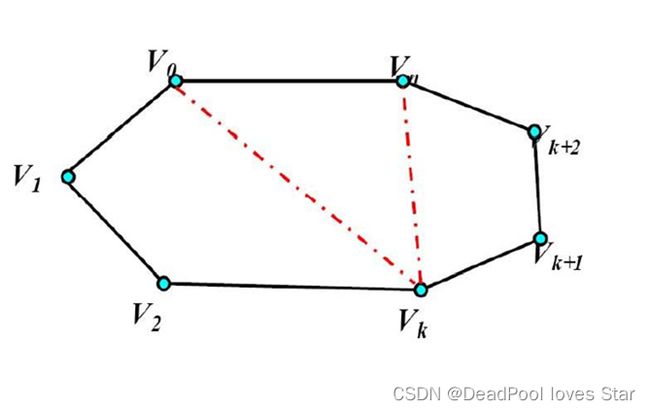

Triangle Decomposition of Convex Polygon

Find the minimal summation of triangle decomposition.

Let M i n [ i , j ] Min[i,j] Min[i,j] denote the minimal summation triangle decomposition of vertex from i i i to j j j.

Thus,

M i n [ i , j ] = { 0 i = j m i n i < k < j { M i n [ i , k ] + M i n [ k , j ] + w ( v i v j v k ) } i ≠ j Min[i,j]= \begin{cases} 0 & \text{ $ i=j $ } \\ min_{i< k< j}\{Min[i,k]+Min[k,j]+w(v_i v_j v_k)\} & \text{ $ i\ne j $ } \end{cases} Min[i,j]={0mini<k<j{Min[i,k]+Min[k,j]+w(vivjvk)} i=j i=j

11. Greedy Algorithm

11.1 Basic

To prove a greedy algorithm is workable, you should:

- First, show the optimal structure: Given an optimal solution of the problem, prove that it’s subproblem is also optimal. i.e., applying the recursive function like DP problem.

- Second, show that the greedy selection must be in the final optimal solution.

11.2 Examples

- Activities arrangement

- 0-1 Knapsack

12. Linear Programming

- Simplex Algorithm

- Build the slack form, where each variable is constrained by ≥ 0 \ge 0 ≥0.

- Find the most constrained variable, replacing it with the slack variable. Where slack variable can reach to 0 0 0.

- Recursive to find the anwser.

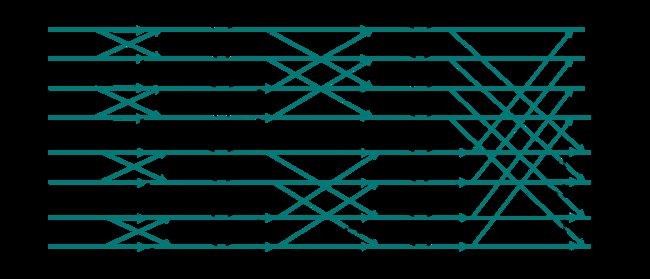

13. FFT

To solve a FFT problem, for example:

Compute DFT of input ( x ( 0 ) , x ( 1 ) , x ( 2 ) , x ( 3 ) , x ( 4 ) , x ( 5 ) , x ( 6 ) , x ( 7 ) ) (x(0),x(1),x(2),x(3),x(4),x(5),x(6),x(7)) (x(0),x(1),x(2),x(3),x(4),x(5),x(6),x(7)) by using FFT

-

First, determine leaves. In this example, leaves are

( x ( 0 ) , x ( 4 ) ) (x(0),x(4)) (x(0),x(4)), ( x ( 2 ) , x ( 6 ) ) (x(2),x(6)) (x(2),x(6)), ( x ( 1 ) , x ( 5 ) ) (x(1),x(5)) (x(1),x(5)), ( x ( 3 ) , x ( 7 ) ) (x(3),x(7)) (x(3),x(7))

-

Second, draw a butterfly. i.e,

There are two things you should note:

- Recursively. W 8 0 = W 4 0 W_8^0=W_4^0 W80=W40, W 8 2 = W 4 1 W_8^2=W_4^1 W82=W41, and 1 = W 2 0 1=W_2^0 1=W20

- Positive for even number and Negative for odd number.

- Third, compute from leaves, for example

X ( 0 ) = A ( 0 ) + W 8 0 B ( 0 ) = ( C ( 0 ) + D ( 0 ) ) + W 8 0 ( E ( 0 ) + F ( 0 ) ) = ( x ( 0 ) + x ( 4 ) + x ( 2 ) + x ( 6 ) ) + W 8 0 ( x ( 1 ) + x ( 5 ) + x ( 3 ) + x ( 7 ) ) = ∑ i = 0 i = 7 x ( i ) \begin{aligned} X(0) &=A(0)+W_8^0B(0)\\ &=(C(0)+D(0))+W_8^0(E(0)+F(0))\\ &=(x(0)+x(4)+x(2)+x(6))+W_8^0(x(1)+x(5)+x(3)+x(7))\\ &=\sum_{i=0}^{i=7} x(i) \end{aligned} X(0)=A(0)+W80B(0)=(C(0)+D(0))+W80(E(0)+F(0))=(x(0)+x(4)+x(2)+x(6))+W80(x(1)+x(5)+x(3)+x(7))=i=0∑i=7x(i)

14. NP

14.1 P, NP, NPC, NPH

-

P: Solve and Verify in polynomial time.

-

NP: Verify in polynomial time.

-

NP-hard: All NP problems can be reduce to the problem.

-

NPC: ① It is a NP problem ② Any NP problem can be reduced to the problem.

-

The relations

- If N P ≠ P NP\ne P NP=P: N P H NPH NPH can be reduced to either N P NP NP problems or other n o n − P non-P non−P problems.

- If N P = P NP=P NP=P: N P H NPH NPH can be reduce to N P = P NP=P NP=P problems or other problems. Thus if one problem is N P NP NP, it is a N P H NPH NPH problem. Thus, it is a N P C NPC NPC problem.

14.2 Solution for NPC problems

-

Exponential solution

- Backtracking (DFS + prune)

- Dynamic programming

-

Approximate algorithm

-

Approximate ratio

Assume the best solution is c ∗ c^* c∗, and the approximate solution is c c c. The approximate ratio is:

m a x { c ∗ c , c c ∗ } ≤ ρ ( n ) max\{\frac{c^*}{c},\frac{c}{c^*}\} \le \rho(n) max{cc∗,c∗c}≤ρ(n)

ρ ( n ) \rho(n) ρ(n) is approximate ratio. -

Relative error ϵ ( n ) \epsilon(n) ϵ(n)

∣ c − c ∗ c ∗ ∣ ≤ ϵ ( n ) |\frac{c-c^*}{c^*}|\le \epsilon(n) ∣c∗c−c∗∣≤ϵ(n)

-

15. 2022年真题回忆

选择 + 大题

15.1 选择题

共5题

- FFT时间复杂度 ( O l g n Olgn Olgn)

- 红黑树插入时间复杂度( l g n lgn lgn)

- B树度为t, 判断关键字数、儿子数

- 哪种形式是hash的线性探测

- 忘记了

15.2 大题

- 树堆(Treap)插入

- 用FFT计算DFT

- 递归树计算 T ( n ) = 3 T ( n / 4 ) + c n 2 T(n)=3T(n/4)+cn^2 T(n)=3T(n/4)+cn2

- 线性规划,求解 18 x + 12.5 y 18x+12.5y 18x+12.5y的最大值

- 证明活动选择问题,选择最晚开始的活动

- BST与红黑树问题:给一个树形结构填值,使之符合BST;红黑树黑高度为k,最大、最少内部节点数;BST旋转一下变成红黑树

- B树+Augumenting

- 动规问题,合并石堆

总的来说不算难,但是第7题的证明题是真难想,希望有分~~