MMLAB Series: Validating and Testing MMCLS Training Results

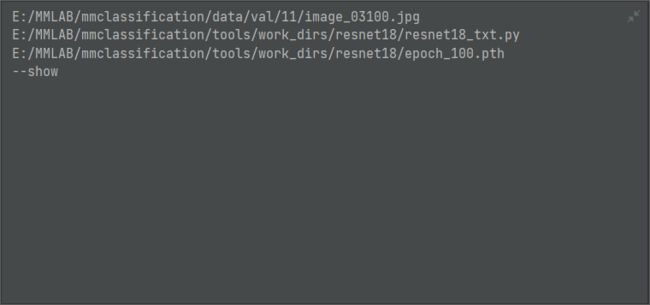

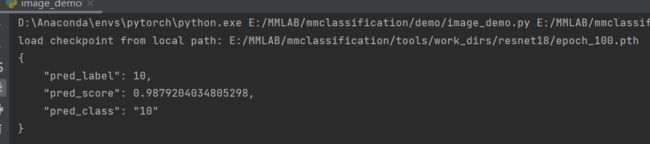

1. Inference

Run demo/image_demo.py, passing the model arguments (the image, the config file, and the checkpoint).

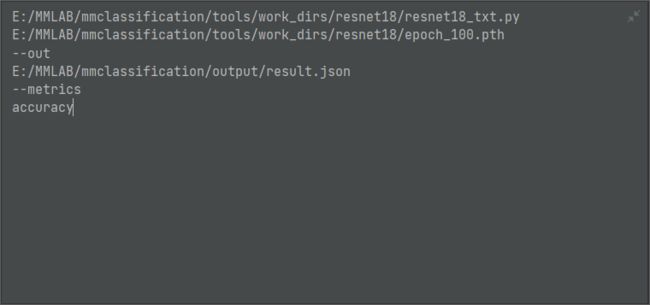
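The inference step can also be scripted. The sketch below only builds the command line for demo/image_demo.py, assuming the MMCLS 0.x argument order (image, config, checkpoint, then an optional --device flag). The image, config, and checkpoint paths are hypothetical placeholders; check python demo/image_demo.py -h in your own checkout.

```python
import shlex


def image_demo_cmd(img, config, checkpoint, device="cuda:0"):
    """Build the argv for MMCLS demo/image_demo.py (run from the repo root)."""
    return ["python", "demo/image_demo.py", img, config, checkpoint,
            "--device", device]


if __name__ == "__main__":
    # Hypothetical paths: replace with your own image, config, and checkpoint.
    cmd = image_demo_cmd("data/flowers/test.jpg",
                         "configs/my_resnet18_flowers.py",
                         "work_dirs/my_resnet18_flowers/latest.pth",
                         device="cpu")
    print(shlex.join(cmd))
    # To actually run it: subprocess.run(cmd, check=True)
```

Building an argv list rather than a single string avoids shell-quoting problems when paths contain spaces.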

2. Testing

Run tools/test.py, passing the model arguments (the config file and the checkpoint).
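A minimal sketch of the test command, assuming the MMCLS 0.x tools/test.py interface (positional config and checkpoint, --metrics for the evaluation metric, --out to save the results to a file). The paths are hypothetical, and flag names can differ between versions, so confirm with python tools/test.py -h.

```python
import shlex


def build_test_cmd(config, checkpoint, metrics=("accuracy",), out=None):
    """Build the argv for MMCLS 0.x tools/test.py on a single GPU."""
    cmd = ["python", "tools/test.py", config, checkpoint, "--metrics", *metrics]
    if out is not None:
        cmd += ["--out", out]  # additionally save the results to this file
    return cmd


if __name__ == "__main__":
    # Hypothetical config/checkpoint paths
    print(shlex.join(build_test_cmd("configs/my_resnet18_flowers.py",
                                    "work_dirs/my_resnet18_flowers/latest.pth",
                                    out="results.json")))
```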

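3. Modifying the model

If you need to write your own module, write the code, add it to the corresponding package initialization file so that it gets imported, and then modify the model config file, changing the relevant section to use your own class.

In short, the config file is the core of how a model is defined.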

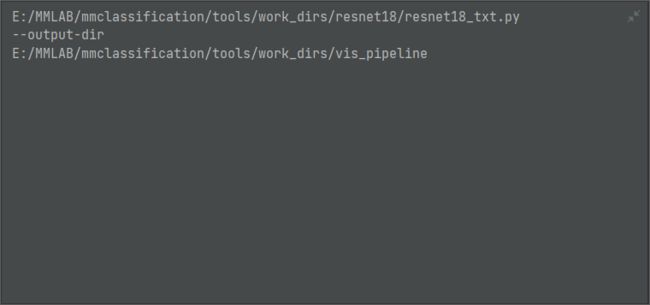
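Why the new module must be added to an initialization file: MMCLS builds each component by looking up the "type" string from the config in a registry, and a class is only registered when the file that defines it is imported. The code below is a simplified, self-contained stand-in for that mechanism, not mmcv's actual Registry; MyBackbone and its depth argument are hypothetical. In MMCLS 0.x the real decorator is, as far as I know, @BACKBONES.register_module() with BACKBONES imported from mmcls.models.builder.

```python
class Registry:
    """Minimal stand-in for a config-driven registry (NOT mmcv's implementation)."""

    def __init__(self, name):
        self.name = name
        self._modules = {}

    def register_module(self):
        """Decorator: record the class under its own name when its file is imported."""
        def _register(cls):
            if cls.__name__ in self._modules:
                raise KeyError(f"{cls.__name__} is already registered in {self.name}")
            self._modules[cls.__name__] = cls
            return cls
        return _register

    def build(self, cfg):
        """Instantiate the class named by cfg['type'], passing the other keys as kwargs."""
        args = dict(cfg)
        cls_name = args.pop("type")
        if cls_name not in self._modules:
            raise KeyError(f"{cls_name} is not registered in {self.name}; was its file imported?")
        return self._modules[cls_name](**args)


BACKBONES = Registry("backbone")


@BACKBONES.register_module()
class MyBackbone:  # hypothetical custom backbone
    def __init__(self, depth, out_channels=512):
        self.depth = depth
        self.out_channels = out_channels


# Config-file style: only the "type" string ties the config entry to the class.
model = dict(backbone=dict(type="MyBackbone", depth=18))
backbone = BACKBONES.build(model["backbone"])
print(type(backbone).__name__, backbone.depth, backbone.out_channels)
```

If the file defining MyBackbone were never imported, the decorator would never run and build() would fail with "not registered", which is the usual symptom of forgetting the initialization file.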

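4. Visualizing the data augmentation pipeline

tools/visualizations/vis_pipeline.py is the script for visualizing the data augmentation pipeline.

Arguments:

config: the model config file

--skip-type: the pipeline steps (transform types) to skip

--output-dir: the directory where the output images are saved

--phase: which split to visualize: the training, test, or validation set

--number: the number of images to visualize

--mode: transformed shows the final transformed image, pipeline shows the intermediate image after each transform, and concat shows the input and output images side by side

Set the arguments and run: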
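A minimal sketch of such a run, assuming the MMCLS 0.x vis_pipeline.py flags listed above (--skip-type takes one or more transform names). The check only allows the three modes described above and the train/val/test phases; the config path and output directory are hypothetical.

```python
import shlex

MODES = ("transformed", "pipeline", "concat")  # the modes described above
PHASES = ("train", "val", "test")


def vis_pipeline_cmd(config, output_dir, phase="train", number=10,
                     mode="concat", skip_types=None):
    """Build the argv for tools/visualizations/vis_pipeline.py."""
    if phase not in PHASES:
        raise ValueError(f"phase must be one of {PHASES}, got {phase!r}")
    if mode not in MODES:
        raise ValueError(f"mode must be one of {MODES}, got {mode!r}")
    cmd = ["python", "tools/visualizations/vis_pipeline.py", config,
           "--output-dir", output_dir, "--phase", phase,
           "--number", str(number), "--mode", mode]
    if skip_types:
        cmd += ["--skip-type", *skip_types]
    return cmd


if __name__ == "__main__":
    # Hypothetical config and output directory
    print(shlex.join(vis_pipeline_cmd("configs/my_resnet18_flowers.py",
                                      "vis_out", number=5,
                                      skip_types=["ToTensor", "Normalize"])))
```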

5. MMCLS visualization module

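tools/visualizations/vis_cam.py is a class activation map (CAM) visualization script: it shows which regions a given layer of the model focuses on when making its decision.

A third-party package is required: pip install grad-cam

Important arguments:

img: the input image

config: the model config file

checkpoint: the weights (checkpoint) file

--target-layers: the layer(s) whose attention you want to inspect

--preview-model: print the model structure (useful for finding layer names)

--method: the CAM method (e.g. GradCAM)

--target-category: the class to visualize

Then modify the run configuration.

Note that --save-path is the path of the output image file, not a directory.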
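A sketch that checks whether grad-cam is installed (the pip package grad-cam is imported as pytorch_grad_cam) and builds the vis_cam.py command, rejecting a --save-path that does not look like an image file. The argument names follow the list above; the allowed suffix set, the file paths, and the default layer name are my own assumptions. "backbone.layer4.1" refers to the last BasicBlock of the ResNet-18 shown in the get_flops printout later in this post; use --preview-model to find the names for your own model.

```python
import importlib.util
import shlex
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp"}  # assumed set of output formats


def grad_cam_installed():
    """True if the 'grad-cam' package (module pytorch_grad_cam) is importable."""
    return importlib.util.find_spec("pytorch_grad_cam") is not None


def vis_cam_cmd(img, config, checkpoint, save_path,
                target_layers=("backbone.layer4.1",), method="GradCAM",
                target_category=None):
    """Build the argv for tools/visualizations/vis_cam.py."""
    if Path(save_path).suffix.lower() not in IMAGE_SUFFIXES:
        # --save-path is an output image file, not a directory
        raise ValueError(f"--save-path should be an image file, got {save_path!r}")
    cmd = ["python", "tools/visualizations/vis_cam.py", img, config, checkpoint,
           "--target-layers", *target_layers, "--method", method,
           "--save-path", save_path]
    if target_category is not None:
        cmd += ["--target-category", str(target_category)]
    return cmd


if __name__ == "__main__":
    print("grad-cam installed:", grad_cam_installed())
    # Hypothetical paths
    print(shlex.join(vis_cam_cmd("demo/flower.jpg", "configs/my_resnet18_flowers.py",
                                 "work_dirs/my_resnet18_flowers/latest.pth",
                                 "cam_result.jpg")))
```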

The result is as follows:

6. Other tools

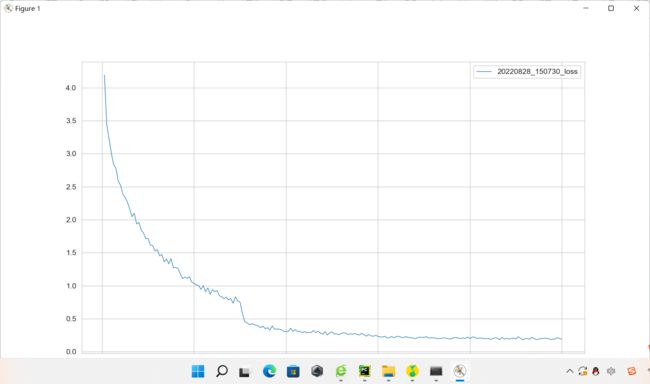

tools/analysis_tools/analyze_logs.py plots curves (such as the training loss) from the information in a training log file.

Modify the model config file:
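analyze_logs.py reads the .log.json file that MMCLS writes to the work directory during training. To show roughly what it extracts, here is a small reader that collects one metric from such a file. It assumes the usual MMCV JSON log layout (one JSON object per line, with "mode", "epoch", "iter" and metric keys such as "loss"); read_log_metric is my own helper, not part of MMCLS. In MMCLS 0.x the script itself is, as far as I know, called through its plot_curve subcommand, e.g. python tools/analysis_tools/analyze_logs.py plot_curve path/to/xxx.log.json --keys loss --legend loss --out loss.png.

```python
import json
import sys


def read_log_metric(lines, key="loss", mode="train"):
    """Collect (epoch, iter, value) tuples for `key` from MMCV-style .log.json lines.

    Lines without a matching "mode" (e.g. a header line) or without `key` are skipped.
    """
    points = []
    for line in lines:
        line = line.strip()
        if not line:
            continue
        record = json.loads(line)
        if record.get("mode") != mode or key not in record:
            continue
        points.append((record.get("epoch"), record.get("iter"), record[key]))
    return points


if __name__ == "__main__" and len(sys.argv) > 1:
    # Hypothetical usage: python read_log.py work_dirs/my_exp/xxx.log.json
    with open(sys.argv[1]) as f:
        for epoch, it, value in read_log_metric(f):
            print(epoch, it, value)
```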

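Result:

tools/analysis_tools/get_flops.py computes the model's parameter count and computational cost (FLOPs), and also reports the parameters and FLOPs of each layer.

Modify the model config file, then run the script. The output is as follows: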

```
ImageClassifier(
  11.229 M, 100.000% Params, 1.822 GFLOPs, 100.000% FLOPs,
  (backbone): ResNet(
    11.177 M, 99.534% Params, 1.822 GFLOPs, 99.999% FLOPs,
    (conv1): Conv2d(0.009 M, 0.084% Params, 0.118 GFLOPs, 6.478% FLOPs, 3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3), bias=False)
    (bn1): BatchNorm2d(0.0 M, 0.001% Params, 0.002 GFLOPs, 0.088% FLOPs, 64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (relu): ReLU(0.0 M, 0.000% Params, 0.001 GFLOPs, 0.044% FLOPs, inplace=True)
    (maxpool): MaxPool2d(0.0 M, 0.000% Params, 0.001 GFLOPs, 0.044% FLOPs, kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)
    (layer1): ResLayer(
      0.148 M, 1.318% Params, 0.465 GFLOPs, 25.517% FLOPs,
      (0): BasicBlock(
        0.074 M, 0.659% Params, 0.232 GFLOPs, 12.758% FLOPs,
        (conv1): Conv2d(0.037 M, 0.328% Params, 0.116 GFLOPs, 6.346% FLOPs, 64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn1): BatchNorm2d(0.0 M, 0.001% Params, 0.0 GFLOPs, 0.022% FLOPs, 64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (conv2): Conv2d(0.037 M, 0.328% Params, 0.116 GFLOPs, 6.346% FLOPs, 64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn2): BatchNorm2d(0.0 M, 0.001% Params, 0.0 GFLOPs, 0.022% FLOPs, 64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (relu): ReLU(0.0 M, 0.000% Params, 0.0 GFLOPs, 0.022% FLOPs, inplace=True)
        (drop_path): Identity(0.0 M, 0.000% Params, 0.0 GFLOPs, 0.000% FLOPs, )
      )
      (1): BasicBlock(
        0.074 M, 0.659% Params, 0.232 GFLOPs, 12.758% FLOPs,
        (conv1): Conv2d(0.037 M, 0.328% Params, 0.116 GFLOPs, 6.346% FLOPs, 64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn1): BatchNorm2d(0.0 M, 0.001% Params, 0.0 GFLOPs, 0.022% FLOPs, 64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (conv2): Conv2d(0.037 M, 0.328% Params, 0.116 GFLOPs, 6.346% FLOPs, 64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn2): BatchNorm2d(0.0 M, 0.001% Params, 0.0 GFLOPs, 0.022% FLOPs, 64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (relu): ReLU(0.0 M, 0.000% Params, 0.0 GFLOPs, 0.022% FLOPs, inplace=True)
        (drop_path): Identity(0.0 M, 0.000% Params, 0.0 GFLOPs, 0.000% FLOPs, )
      )
    )
    (layer2): ResLayer(
      0.526 M, 4.681% Params, 0.412 GFLOPs, 22.641% FLOPs,
      (0): BasicBlock(
        0.23 M, 2.050% Params, 0.181 GFLOPs, 9.916% FLOPs,
        (conv1): Conv2d(0.074 M, 0.657% Params, 0.058 GFLOPs, 3.173% FLOPs, 64, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)
        (bn1): BatchNorm2d(0.0 M, 0.002% Params, 0.0 GFLOPs, 0.011% FLOPs, 128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (conv2): Conv2d(0.147 M, 1.313% Params, 0.116 GFLOPs, 6.346% FLOPs, 128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn2): BatchNorm2d(0.0 M, 0.002% Params, 0.0 GFLOPs, 0.011% FLOPs, 128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (relu): ReLU(0.0 M, 0.000% Params, 0.0 GFLOPs, 0.011% FLOPs, inplace=True)
        (downsample): Sequential(
          0.008 M, 0.075% Params, 0.007 GFLOPs, 0.364% FLOPs,
          (0): Conv2d(0.008 M, 0.073% Params, 0.006 GFLOPs, 0.353% FLOPs, 64, 128, kernel_size=(1, 1), stride=(2, 2), bias=False)
          (1): BatchNorm2d(0.0 M, 0.002% Params, 0.0 GFLOPs, 0.011% FLOPs, 128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        )
        (drop_path): Identity(0.0 M, 0.000% Params, 0.0 GFLOPs, 0.000% FLOPs, )
      )
      (1): BasicBlock(
        0.295 M, 2.631% Params, 0.232 GFLOPs, 12.725% FLOPs,
        (conv1): Conv2d(0.147 M, 1.313% Params, 0.116 GFLOPs, 6.346% FLOPs, 128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn1): BatchNorm2d(0.0 M, 0.002% Params, 0.0 GFLOPs, 0.011% FLOPs, 128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (conv2): Conv2d(0.147 M, 1.313% Params, 0.116 GFLOPs, 6.346% FLOPs, 128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn2): BatchNorm2d(0.0 M, 0.002% Params, 0.0 GFLOPs, 0.011% FLOPs, 128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (relu): ReLU(0.0 M, 0.000% Params, 0.0 GFLOPs, 0.011% FLOPs, inplace=True)
        (drop_path): Identity(0.0 M, 0.000% Params, 0.0 GFLOPs, 0.000% FLOPs, )
      )
    )
    (layer3): ResLayer(
      2.1 M, 18.699% Params, 0.412 GFLOPs, 22.603% FLOPs,
      (0): BasicBlock(
        0.919 M, 8.185% Params, 0.18 GFLOPs, 9.894% FLOPs,
        (conv1): Conv2d(0.295 M, 2.626% Params, 0.058 GFLOPs, 3.173% FLOPs, 128, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)
        (bn1): BatchNorm2d(0.001 M, 0.005% Params, 0.0 GFLOPs, 0.006% FLOPs, 256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (conv2): Conv2d(0.59 M, 5.253% Params, 0.116 GFLOPs, 6.346% FLOPs, 256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn2): BatchNorm2d(0.001 M, 0.005% Params, 0.0 GFLOPs, 0.006% FLOPs, 256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (relu): ReLU(0.0 M, 0.000% Params, 0.0 GFLOPs, 0.006% FLOPs, inplace=True)
        (downsample): Sequential(
          0.033 M, 0.296% Params, 0.007 GFLOPs, 0.358% FLOPs,
          (0): Conv2d(0.033 M, 0.292% Params, 0.006 GFLOPs, 0.353% FLOPs, 128, 256, kernel_size=(1, 1), stride=(2, 2), bias=False)
          (1): BatchNorm2d(0.001 M, 0.005% Params, 0.0 GFLOPs, 0.006% FLOPs, 256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        )
        (drop_path): Identity(0.0 M, 0.000% Params, 0.0 GFLOPs, 0.000% FLOPs, )
      )
      (1): BasicBlock(
        1.181 M, 10.515% Params, 0.232 GFLOPs, 12.709% FLOPs,
        (conv1): Conv2d(0.59 M, 5.253% Params, 0.116 GFLOPs, 6.346% FLOPs, 256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn1): BatchNorm2d(0.001 M, 0.005% Params, 0.0 GFLOPs, 0.006% FLOPs, 256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (conv2): Conv2d(0.59 M, 5.253% Params, 0.116 GFLOPs, 6.346% FLOPs, 256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn2): BatchNorm2d(0.001 M, 0.005% Params, 0.0 GFLOPs, 0.006% FLOPs, 256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (relu): ReLU(0.0 M, 0.000% Params, 0.0 GFLOPs, 0.006% FLOPs, inplace=True)
        (drop_path): Identity(0.0 M, 0.000% Params, 0.0 GFLOPs, 0.000% FLOPs, )
      )
    )
    (layer4): ResLayer(
      8.394 M, 74.752% Params, 0.411 GFLOPs, 22.583% FLOPs,
      (0): BasicBlock(
        3.673 M, 32.711% Params, 0.18 GFLOPs, 9.883% FLOPs,
        (conv1): Conv2d(1.18 M, 10.506% Params, 0.058 GFLOPs, 3.173% FLOPs, 256, 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)
        (bn1): BatchNorm2d(0.001 M, 0.009% Params, 0.0 GFLOPs, 0.003% FLOPs, 512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (conv2): Conv2d(2.359 M, 21.011% Params, 0.116 GFLOPs, 6.346% FLOPs, 512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn2): BatchNorm2d(0.001 M, 0.009% Params, 0.0 GFLOPs, 0.003% FLOPs, 512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (relu): ReLU(0.0 M, 0.000% Params, 0.0 GFLOPs, 0.003% FLOPs, inplace=True)
        (downsample): Sequential(
          0.132 M, 1.176% Params, 0.006 GFLOPs, 0.355% FLOPs,
          (0): Conv2d(0.131 M, 1.167% Params, 0.006 GFLOPs, 0.353% FLOPs, 256, 512, kernel_size=(1, 1), stride=(2, 2), bias=False)
          (1): BatchNorm2d(0.001 M, 0.009% Params, 0.0 GFLOPs, 0.003% FLOPs, 512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        )
        (drop_path): Identity(0.0 M, 0.000% Params, 0.0 GFLOPs, 0.000% FLOPs, )
      )
      (1): BasicBlock(
        4.721 M, 42.040% Params, 0.231 GFLOPs, 12.701% FLOPs,
        (conv1): Conv2d(2.359 M, 21.011% Params, 0.116 GFLOPs, 6.346% FLOPs, 512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn1): BatchNorm2d(0.001 M, 0.009% Params, 0.0 GFLOPs, 0.003% FLOPs, 512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (conv2): Conv2d(2.359 M, 21.011% Params, 0.116 GFLOPs, 6.346% FLOPs, 512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn2): BatchNorm2d(0.001 M, 0.009% Params, 0.0 GFLOPs, 0.003% FLOPs, 512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (relu): ReLU(0.0 M, 0.000% Params, 0.0 GFLOPs, 0.003% FLOPs, inplace=True)
        (drop_path): Identity(0.0 M, 0.000% Params, 0.0 GFLOPs, 0.000% FLOPs, )
      )
    )
  )
  init_cfg=[{'type': 'Kaiming', 'layer': ['Conv2d']}, {'type': 'Constant', 'val': 1, 'layer': ['_BatchNorm', 'GroupNorm']}]
  (neck): GlobalAveragePooling(
    0.0 M, 0.000% Params, 0.0 GFLOPs, 0.001% FLOPs,
    (gap): AdaptiveAvgPool2d(0.0 M, 0.000% Params, 0.0 GFLOPs, 0.001% FLOPs, output_size=(1, 1))
  )
  (head): LinearClsHead(
    0.052 M, 0.466% Params, 0.0 GFLOPs, 0.000% FLOPs,
    (compute_loss): CrossEntropyLoss(0.0 M, 0.000% Params, 0.0 GFLOPs, 0.000% FLOPs, )
    (compute_accuracy): Accuracy(0.0 M, 0.000% Params, 0.0 GFLOPs, 0.000% FLOPs, )
    (fc): Linear(0.052 M, 0.466% Params, 0.0 GFLOPs, 0.000% FLOPs, in_features=512, out_features=102, bias=True)
  )
  init_cfg={'type': 'Normal', 'layer': 'Linear', 'std': 0.01}
)
==============================
Input shape: (3, 224, 224)
Flops: 1.82 GFLOPs
Params: 11.23 M
==============================
```
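As a sanity check, the convolution rows of this table can be reproduced by hand. The printed values are consistent with counting one multiply-accumulate per kernel weight per output pixel as one FLOP: the stem conv1 has 3 × 64 × 7 × 7 = 9,408 weights applied at 112 × 112 positions, about 0.118 G. The helper names below are my own; get_flops.py itself relies on mmcv's complexity counter, and its --shape argument defaults to 224 224, matching the Input shape line above.

```python
def conv_out(size, k, stride, pad):
    """Output height/width of a Conv2d or MaxPool2d with dilation 1."""
    return (size + 2 * pad - k) // stride + 1


def conv_params(c_in, c_out, k, bias=False):
    """Weight count of a k x k Conv2d with groups=1."""
    return c_in * c_out * k * k + (c_out if bias else 0)


def conv_macs(c_in, c_out, k, out_hw, bias=False):
    """Multiply-accumulates: every weight (and bias) is used once per output pixel."""
    return conv_params(c_in, c_out, k, bias) * out_hw * out_hw


# Stem: 7x7 stride-2 conv on a 224x224 input, then a 3x3 stride-2 max pool.
hw = conv_out(224, 7, 2, 3)                    # 112
stem_params = conv_params(3, 64, 7)            # 9408
stem_macs = conv_macs(3, 64, 7, hw)            # 118013952
hw = conv_out(hw, 3, 2, 1)                     # 56 after maxpool

# conv2 of each stage (3x3, stride 1, so it keeps the stage's spatial size).
stage_conv2 = {}
for stage, channels in enumerate((64, 128, 256, 512), start=1):
    if stage > 1:
        hw = conv_out(hw, 3, 2, 1)             # stride-2 conv1 of block 0 halves H and W
    stage_conv2[stage] = (conv_params(channels, channels, 3),
                          conv_macs(channels, channels, 3, hw))

fc_params = 512 * 102 + 102                    # Linear(512 -> 102) with bias

print(f"stem conv1: {stem_params / 1e6:.3f} M params, {stem_macs / 1e9:.3f} GFLOPs")
for stage, (p, m) in stage_conv2.items():
    print(f"layer{stage} conv2: {p / 1e6:.3f} M params, {m / 1e9:.3f} GFLOPs")
print(f"head fc: {fc_params / 1e6:.3f} M params")
```

This also explains why every 3×3 conv2 in the table reports the same 0.116 GFLOPs (6.346%): each stage doubles the channels, which quadruples the weights, while halving height and width, which quarters the number of output positions.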