Multimodal Machine Learning:A Survey and Taxonomy

Abstract

aim: build models that can process and relate information from multiple modalities

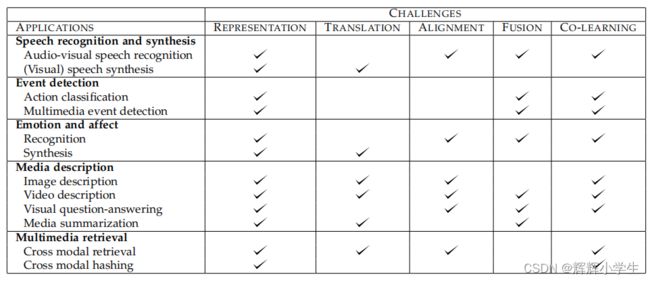

new taxonomy:representation, translation, alignment, fusion, and co-learning.

INTRODUCTION

three modalities:

(nlp)natural language which can be both written or spoken;

(cv) visual signals which are often represented with images or videos;

(sr) vocal signals which encode sounds and para-verbal information such as prosody and vocal expressions

challenges:

representation: the complementarity and redundancy

translation:translate one modality to another( open-ended or subjective)

alignment: measure similarity between different modalities and deal with possible long

range dependencies and ambiguities

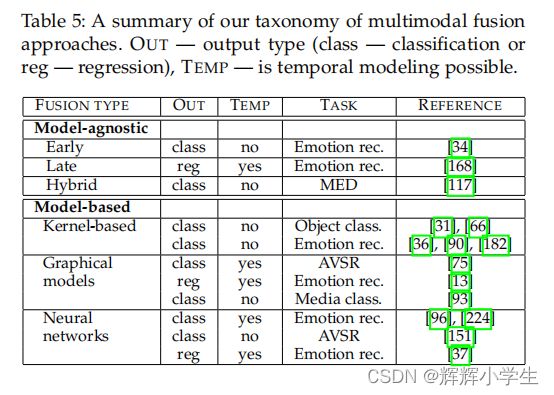

fusion: join information from two or more modalities to perform a prediction

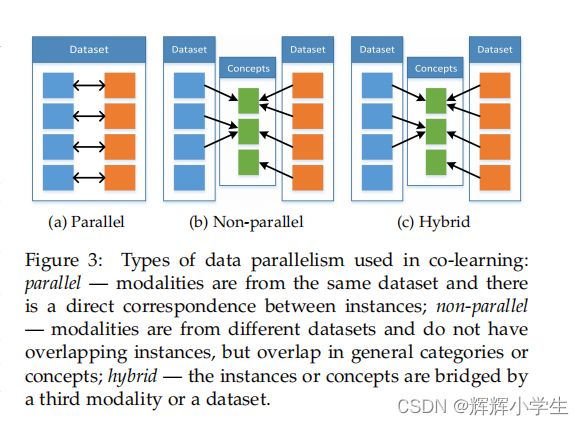

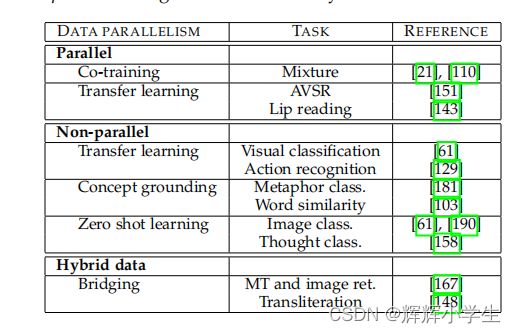

co-learning: co -training, conceptual grounding, and zero shot learning

Table 1: A summary of applications enabled by multimodal machine learning. For each application area we identify the core technical challenges that need to be addressed in order to tackle it.

2 A PPLICATIONS : A HISTORICAL PERSPECTIVE 略

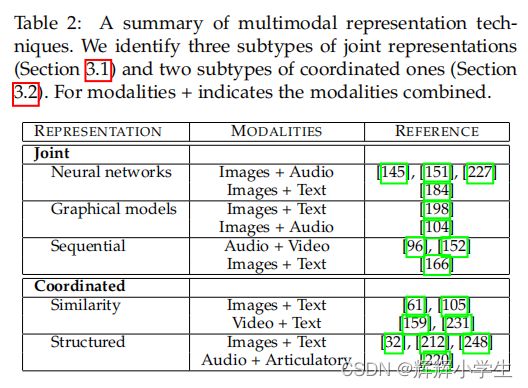

3 M ULTIMODAL R EPRESENTATIONS

Representing multiple modalities poses many diffificulties:

how to combine the data from heterogeneous sources;

how to deal with different levels of noise;

and how to deal with missing data.

a number of properties for good representations:

smoothness(类比nlp的平滑), temporal and spatial coherence, sparsity, and natural clustering amongst others

additional desirable properties for multimodal representations:

similarity in the representation space should reflect the similarity of the corresponding concepts,

the representation should be easy to obtain even in the absence of some modalities

it should be possible to fifill-in missing modalities given the observed ones.

3.1 Joint Representations( project unimodal representations together into a multimodal

space )

space )

Mathematically, the joint representation is expressed as:

xm = f(x1, . . . , xn) (1)

Neural networks

To construct a multimodal representation using neural networks each modality starts with several individual neural layers fol lowed by a hidden layer that projects the modalities into a joint space.The joint multimodal representation is then be passed through multiple hidden layers itself or used directly for prediction.(usually be trained end to end)

advantages:

neural network based joint rep resentations comes from their often superior performance

and the ability to pre-train the representations in an unsu pervised manner. The performance gain is, however, depen dent on the amount of data available for training.

disadvantages:

model not being able to handle missing data naturally deep networks are often diffificult to train

Probabilistic graphical models

advantages:

generative nature, which allows for an easy way to deal with missing data (

even if a whole modality is missing, the model has a natural way to cope)

generate samples of one modality in the presence of the other one, or both modalities from the representation s

the representation can be trained in an unsupervised manner enabling the use of unlabeled data

disadvantages:

the diffificulty of training them( high computational cost, and the need to use approximate

variational training methods )

Sequential Representation(varying length sequences) 略

3.2 Coordinated Representations ( learn separate representations for each modality but coordinate them through a constraint )

While coordinated representation is as follows:

f ( x 1 ) ∼ g ( x 2 ) (2)

Similarity models( minimize the distance between modal ities in the coordinated space )

structured coordinated space( Structured coordinated spaces are commonly used in cross-modal hashing )

Hashing enforces certain constraints on the resulting multimodal space:

1) it has to be an N -dimensionalHamming space — a binary representation with controllable

number of bits;

2) the same object from different modalities has to have a similar hash code;

3) the space has to be similarity-preserving.

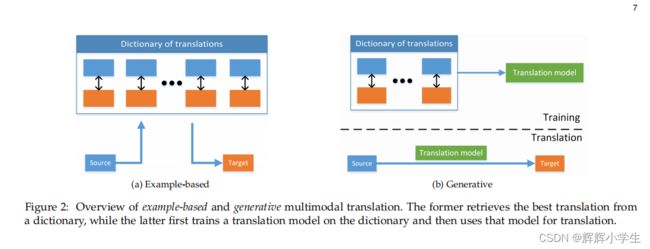

4 T RANSLATION

Given an entity in one modality the task is to generate the same entity in a different modality.

4.1 Example-based

Retrieval-based models ( directly use the retrieved translation without modifying it )

Combination-based models ( rely on more complex rules to create translations based on a number of retrieved instances )

4.2 Generative approaches

Grammar-based models

Encoder-decoder models

Continuous generation models

5 A LIGNMENT

5.1 Explicit alignment

Unsupervised

Supervised