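Contents

- I. Getting Started with OpenCV

- II. Getting Started with TensorFlow

- 1 - TensorFlow Constants and Variables

- 2 - How TensorFlow Computation Works

- 3 - TensorFlow Arithmetic Operations

- 4 - TensorFlow Matrix Basics

- 5 - NumPy Matrices

- 6 - Plotting with matplotlib

- III. Approximating Stock Closing Prices with a Neural Network (Case Study)

- 1 - Plotting a 15-Day Stock Candlestick Bar Chart

- 2 - Introduction to Artificial Neural Networks

- 3 - Implementation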

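I. Getting Started with OpenCV

1 - Basic Image Operations

- Reading and displaying an image: the second argument of imread selects the color mode, where 0 loads the image as grayscale and 1 loads it in color

import cv2
img = cv2.imread('image0.jpg', 1)  # 1 = load as a color image
cv2.imshow('image', img)           # show it in a window titled 'image'
cv2.waitKey(0)                     # wait for a key press so the window stays open

import cv2
img = cv2.imread('image0.jpg', 1)
cv2.imwrite('image1.jpg', img)     # write the image back to disk under a new name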

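- Lossy compression:

cv2.IMWRITE_JPEG_QUALITY ranges from 0 to 100; the higher the value, the better the image quality (and the larger the file)

import cv2
img = cv2.imread('image0.jpg', 1)
cv2.imwrite('imageTest.jpg', img, [cv2.IMWRITE_JPEG_QUALITY, 50])  # save as JPEG at quality 50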

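- PNG compression:

cv2.IMWRITE_PNG_COMPRESSION ranges from 0 to 9; a lower value means less compression and therefore a larger file. Image quality is identical at every level

- Feature 1: PNG compression is lossless

- Feature 2: PNG supports transparency, while JPG has no transparency attribute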

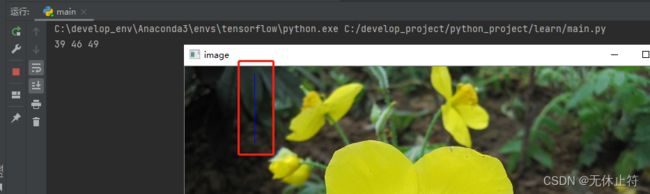

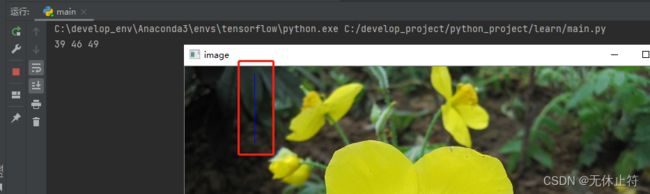
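PNG stores its pixel data with DEFLATE (zlib) compression, which is why the compression level changes the file size but never the image content. The sketch below illustrates that property with Python's standard zlib module on a synthetic byte buffer rather than on a real PNG file; the buffer and all variable names are made up for illustration.

```python
import zlib

# Synthetic stand-in for raw pixel bytes: a repeating 3-byte blue BGR pixel.
# This is not a real PNG file, only compressible data of a similar kind.
raw = bytes([255, 0, 0] * 64) * 100

sizes = {}
for level in (0, 1, 9):
    packed = zlib.compress(raw, level)
    # Lossless: decompressing always returns the exact original bytes.
    assert zlib.decompress(packed) == raw
    sizes[level] = len(packed)

print(len(raw), sizes)
```

Level 0 stores the data without compressing it, so it gives the largest output, while higher levels trade more CPU time for a smaller result.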

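2 - Pixel Operations

- RGB: every color (pixel) is made up of three components: red, green and blue

- Color depth: with an 8-bit color depth, each component takes a value from 0 to 255, so a pixel can represent 256 × 256 × 256 colors

- Image width and height: for example, w × h = 640 × 480 means 640 pixels horizontally and 480 pixels vertically

- Image size: for an image 720 pixels wide and 547 pixels high, the uncompressed size is 720 × 547 × 3 × 8 bit / 8 = 1,181,520 B ≈ 1.13 MB

- Here 720 is the width, 547 is the height, 3 is the number of RGB components, the first 8 is the 8-bit depth, and the second 8 converts bits to bytes

- Alpha channel: the image's transparency information; as noted above, PNG is lossless and carries transparency information

- BGR: a channel order; besides RGB there is also BGR

- OpenCV returns pixels in BGR order when it reads an image

- BGR differs from RGB in that the first value of a pixel is B (blue)

- Each of B, G and R is called a component, and each component is also called a color channel

- Example: reading and writing pixels. Note that when writing a pixel, the first value is B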

import cv2
img = cv2.imread('image0.jpg', 1)
(b, g, r) = img[100, 100]  # pixel at row 100, column 100, in BGR order
print(b, g, r)
for i in range(1, 100):
    img[10 + i, 100] = (255, 0, 0)  # paint rows 11 to 109 of column 100 blue
cv2.imshow('image', img)
cv2.waitKey(0)

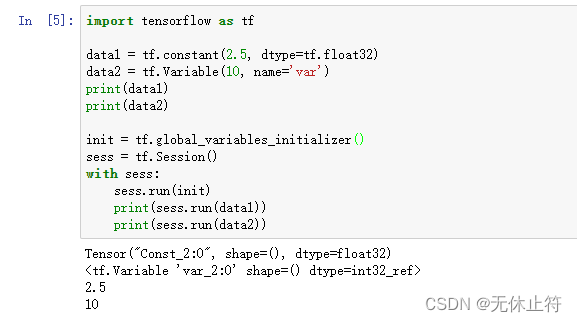
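The size arithmetic and the BGR ordering described in this section can be checked with a few lines of plain Python. This is a minimal sketch; the helper names raw_image_bytes and bgr_to_rgb are hypothetical and are not part of OpenCV.

```python
def raw_image_bytes(width, height, channels=3, bits_per_channel=8):
    """Uncompressed size in bytes of a width x height image."""
    return width * height * channels * bits_per_channel // 8


def bgr_to_rgb(pixel):
    """Reorder a (b, g, r) tuple into (r, g, b)."""
    b, g, r = pixel
    return (r, g, b)


size = raw_image_bytes(720, 547)
print(size, "bytes, about", round(size / 1024 / 1024, 2), "MB")
print(256 ** 3, "colors representable with 8 bits per channel")
print(bgr_to_rgb((255, 0, 0)))  # the blue pixel written in the example, in RGB order
```

The result is the raw in-memory size; a JPG or PNG file on disk is usually smaller because both formats compress the pixel data.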

II. Getting Started with TensorFlow

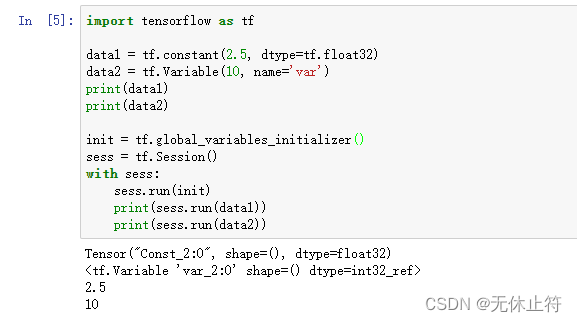

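1 - TensorFlow Constants and Variables

- Note on variables: before a variable is used in a session, it must be initialized with sess.run(init)

import tensorflow as tf  # TensorFlow 1.x graph/session API
data1 = tf.constant(2, dtype=tf.int32)
data2 = tf.Variable(10, name='var')
print(data1)  # prints the tensor description, not its value
print(data2)
init = tf.global_variables_initializer()
sess = tf.Session()
with sess:
    sess.run(init)  # initialize all variables before reading them
    print(sess.run(data1))
    print(sess.run(data2))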

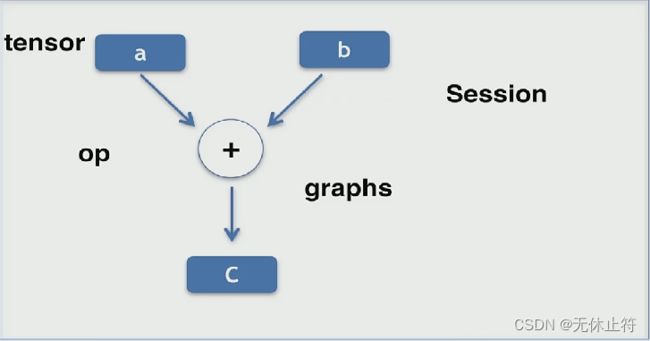

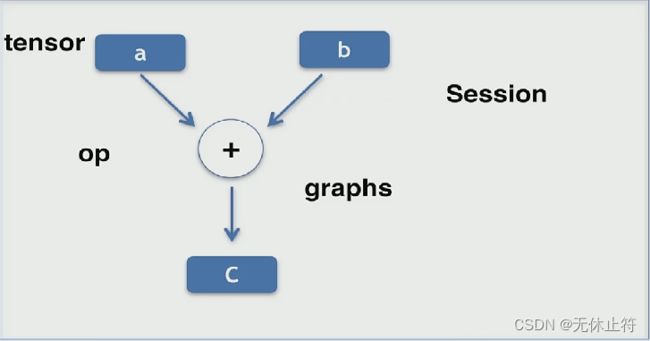

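2 - How TensorFlow Computation Works

- The essence of TensorFlow: tensors + computation graphs

- tf = tensor + graphs

- tensor: the data

- op: an operation, which can be an assignment or an arithmetic operation

- graphs: the process of operating on the data

- Session: in tf, every graph must be executed inside a Session, which can be thought of as the interactive environment in which computations run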

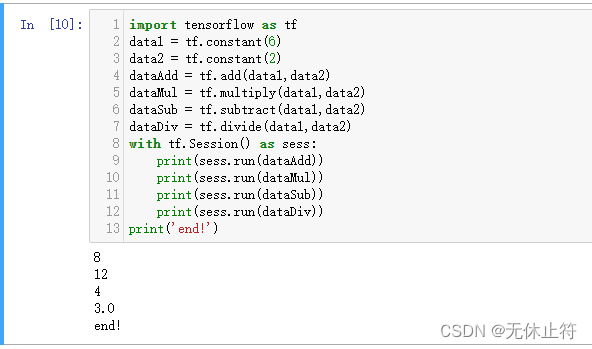

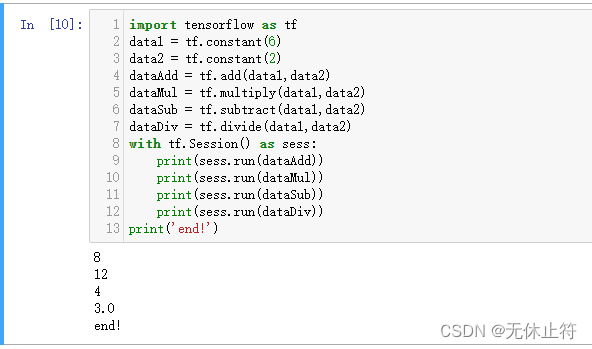
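The split between describing a computation and executing it can be imitated in plain Python. The toy classes below (Constant, Operation, ToySession) are hypothetical and only illustrate the idea of deferred evaluation; they are not how TensorFlow is implemented.

```python
import operator


class Constant:
    """A graph node that holds a fixed value."""

    def __init__(self, value):
        self.value = value

    def evaluate(self):
        return self.value


class Operation:
    """A graph node that applies a function to the results of its input nodes."""

    def __init__(self, fn, *inputs):
        self.fn = fn
        self.inputs = inputs

    def evaluate(self):
        return self.fn(*(node.evaluate() for node in self.inputs))


class ToySession:
    """Stand-in for a session: nothing is computed until run() is called."""

    def run(self, node):
        return node.evaluate()


# Building the graph only records its structure; no arithmetic happens yet.
data1 = Constant(6)
data2 = Constant(2)
data_add = Operation(operator.add, data1, data2)
data_mul = Operation(operator.mul, data_add, data2)

sess = ToySession()
print(sess.run(data_add))
print(sess.run(data_mul))
```

Printing data_add directly would show an object description rather than a number, which mirrors what print(data1) does for a tensor before it is run in a session.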

3 - TensorFlow Arithmetic Operations

import tensorflow as tf
data1 = tf.constant(6)
data2 = tf.constant(2)
dataAdd = tf.add(data1, data2)
dataMul = tf.multiply(data1, data2)
dataSub = tf.subtract(data1, data2)
dataDiv = tf.divide(data1, data2)
with tf.Session() as sess:
    print(sess.run(dataAdd))
    print(sess.run(dataMul))
    print(sess.run(dataSub))
    print(sess.run(dataDiv))
print('end!')

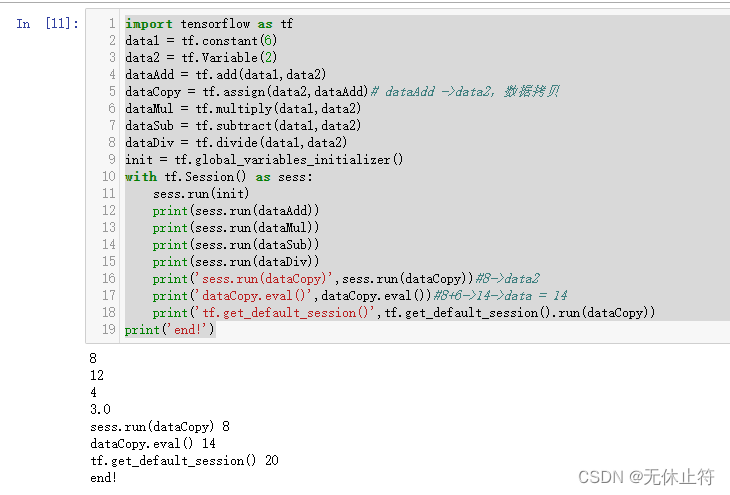

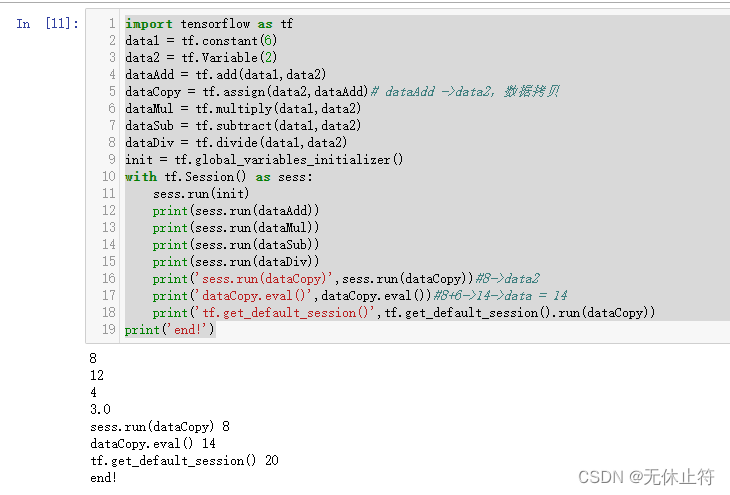

import tensorflow as tf
data1 = tf.constant(6)
data2 = tf.Variable(2)
dataAdd = tf.add(data1, data2)
dataCopy = tf.assign(data2, dataAdd)  # op that stores data1 + data2 back into data2
dataMul = tf.multiply(data1, data2)
dataSub = tf.subtract(data1, data2)
dataDiv = tf.divide(data1, data2)
init = tf.global_variables_initializer()
with tf.Session() as sess:
    sess.run(init)
    print(sess.run(dataAdd))
    print(sess.run(dataMul))
    print(sess.run(dataSub))
    print(sess.run(dataDiv))
    # Each of the next three calls executes the assignment again, so data2 keeps growing.
    print('sess.run(dataCopy)', sess.run(dataCopy))
    print('dataCopy.eval()', dataCopy.eval())  # eval() runs in the default session
    print('tf.get_default_session()', tf.get_default_session().run(dataCopy))
print('end!')

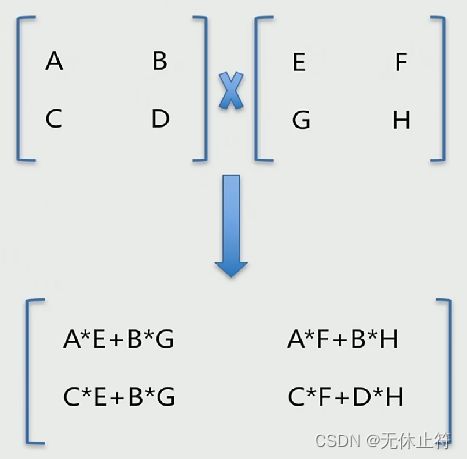

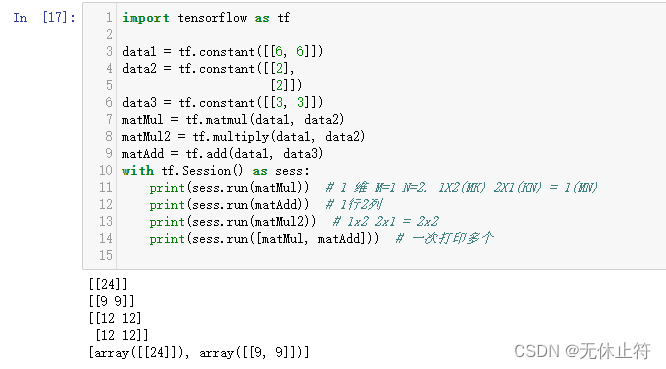
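In the second listing above, each of the last three print calls executes dataCopy again, and every execution adds data1 to the current value of data2 and stores the result back in data2. The plain-Python trace below mimics that update under this reading of the listing; ToyVariable and run_data_copy are hypothetical names and no TensorFlow is involved.

```python
class ToyVariable:
    """Mutable value standing in for a graph variable."""

    def __init__(self, initial):
        self.value = initial


def run_data_copy(data1, data2):
    """Mimic one execution of assign(data2, add(data1, data2))."""
    data2.value = data1 + data2.value
    return data2.value


data1 = 6
data2 = ToyVariable(2)
trace = [run_data_copy(data1, data2) for _ in range(3)]
print(trace)
```

The earlier add, multiply, subtract and divide runs only read data2, so they do not affect this sequence.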

4 - TensorFlow Matrix Basics

import tensorflow as tf
data1 = tf.placeholder(tf.float32)  # placeholders receive their values at run time
data2 = tf.placeholder(tf.float32)
dataAdd = tf.add(data1, data2)
with tf.Session() as sess:
    print(sess.run(dataAdd, feed_dict={data1: 6, data2: 2}))
print('end!')

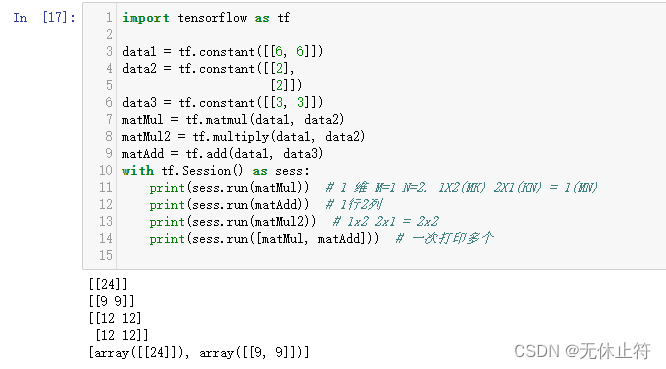

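import tensorflow as tf
data1 = tf.constant([[6, 6]])  # shape 1 x 2
data2 = tf.constant([[2],
                     [2]])     # shape 2 x 1
data3 = tf.constant([[3, 3]])  # shape 1 x 2
matMul = tf.matmul(data1, data2)     # matrix product, shape 1 x 1
matMul2 = tf.multiply(data1, data2)  # element-wise product with broadcasting, shape 2 x 2
matAdd = tf.add(data1, data3)        # element-wise sum, shape 1 x 2
with tf.Session() as sess:
    print(sess.run(matMul))
    print(sess.run(matAdd))
    print(sess.run(matMul2))
    print(sess.run([matMul, matAdd]))  # fetch several tensors in one call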

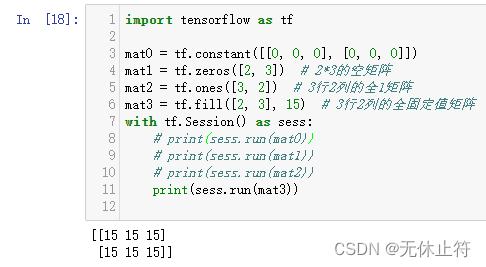

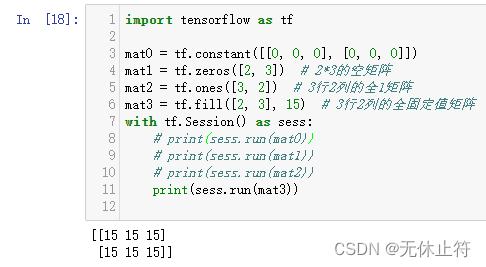
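The listing above uses two different products: tf.matmul is the matrix product, while tf.multiply multiplies element by element and broadcasts the 1 × 2 row against the 2 × 1 column. The difference can be reproduced with nested lists in plain Python; the two helper functions below are illustrative and are not TensorFlow APIs.

```python
def matmul(a, b):
    """Matrix product of a (m x k) and b (k x n), given as nested lists."""
    inner = len(b)
    if any(len(row) != inner for row in a):
        raise ValueError("columns of a must equal rows of b")
    return [[sum(row[k] * b[k][j] for k in range(inner))
             for j in range(len(b[0]))]
            for row in a]


def broadcast_multiply(row_vector, column_vector):
    """Element-wise product of a 1 x n row and an m x 1 column, broadcast to m x n."""
    return [[col[0] * value for value in row_vector[0]]
            for col in column_vector]


data1 = [[6, 6]]    # 1 x 2
data2 = [[2], [2]]  # 2 x 1
print(matmul(data1, data2))
print(broadcast_multiply(data1, data2))
```

The matrix product collapses the shared dimension into a single 1 × 1 value, whereas the broadcast product expands both operands to 2 × 2.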

- Filling matrices:

tf.zeros, tf.ones, tf.fill

import tensorflow as tf
mat0 = tf.constant([[0, 0, 0], [0, 0, 0]])
mat1 = tf.zeros([2, 3])     # 2 x 3 matrix of zeros
mat2 = tf.ones([3, 2])      # 3 x 2 matrix of ones
mat3 = tf.fill([2, 3], 15)  # 2 x 3 matrix filled with 15
with tf.Session() as sess:
    print(sess.run(mat3))

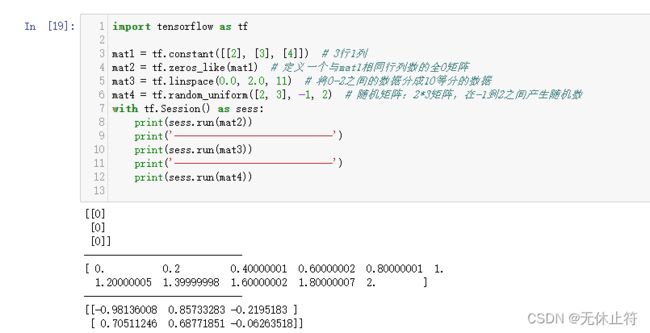

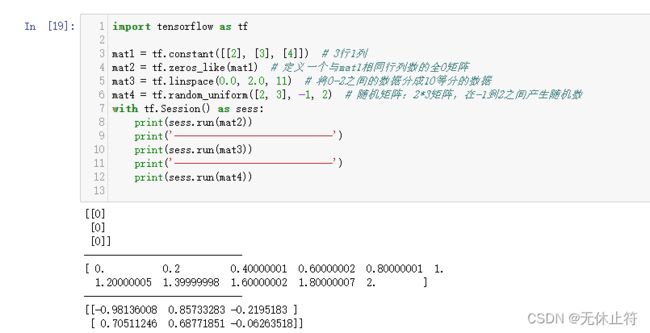

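- Other matrix operations

tf.zeros_like: creates an all-zero matrix with the same shape as the given one

tf.linspace: generates an array of evenly spaced values

tf.random_uniform: creates a matrix of uniformly distributed random values

import tensorflow as tf
mat1 = tf.constant([[2], [3], [4]])
mat2 = tf.zeros_like(mat1)               # zeros with the shape of mat1 (3 x 1)
mat3 = tf.linspace(0.0, 2.0, 11)         # 11 evenly spaced values from 0.0 to 2.0
mat4 = tf.random_uniform([2, 3], -1, 2)  # 2 x 3 random values between -1 and 2
with tf.Session() as sess:
    print(sess.run(mat2))
    print('----------------------------')
    print(sess.run(mat3))
    print('----------------------------')
    print(sess.run(mat4))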

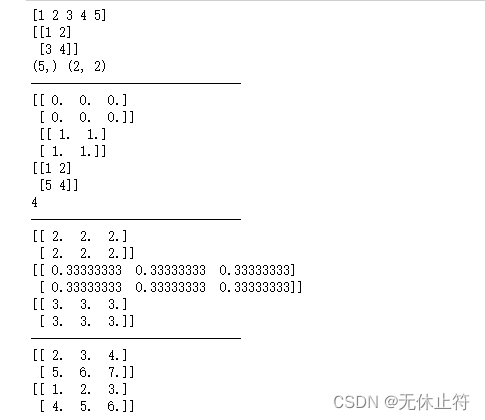

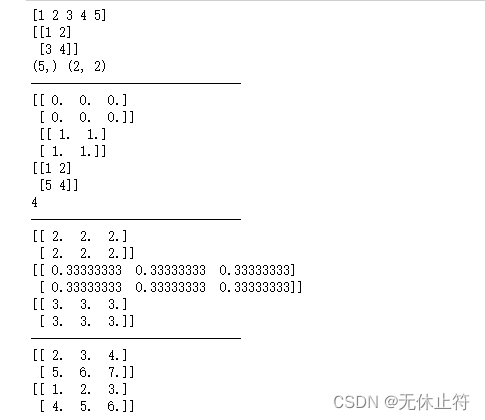
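To make the meaning of "evenly spaced" and "uniformly distributed" concrete, here is a plain-Python sketch of what the linspace and random_uniform calls above produce. The two functions are simplified stand-ins written for this note, not the TensorFlow implementations.

```python
import random


def linspace(start, stop, num):
    """Return num evenly spaced values from start to stop, both included."""
    if num == 1:
        return [start]
    step = (stop - start) / (num - 1)
    return [start + i * step for i in range(num)]


def random_uniform(rows, cols, low, high):
    """Return a rows x cols nested list of floats drawn uniformly between low and high."""
    return [[random.uniform(low, high) for _ in range(cols)]
            for _ in range(rows)]


points = linspace(0.0, 2.0, 11)
print([round(p, 2) for p in points])

matrix = random_uniform(2, 3, -1, 2)
print(matrix)
```

With 11 points over the interval from 0.0 to 2.0 there are 10 gaps, so consecutive values differ by 0.2.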

5 - NumPy Matrices

import numpy as np
data1 = np.array([1, 2, 3, 4, 5])
print(data1)
data2 = np.array([[1, 2],
                  [3, 4]])
print(data2)
print(data1.shape, data2.shape)  # array dimensions
print('------------------------------')
print(np.zeros([2, 3]), '\n', np.ones([2, 2]))
data2[1, 0] = 5     # write the element at row 1, column 0
print(data2)
print(data2[1, 1])  # read the element at row 1, column 1
print('------------------------------')
data3 = np.ones([2, 3])
print(data3 * 2)  # arithmetic with a scalar applies to every element
print(data3 / 3)
print(data3 + 2)
print('------------------------------')
data4 = np.array([[1, 2, 3], [4, 5, 6]])
print(data3 + data4)  # element-wise sum
print(data3 * data4)  # element-wise product, not a matrix product

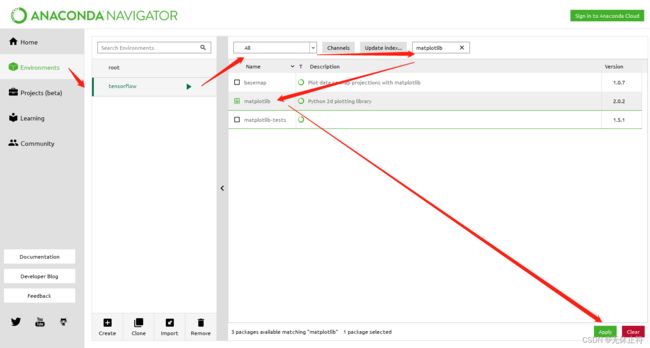

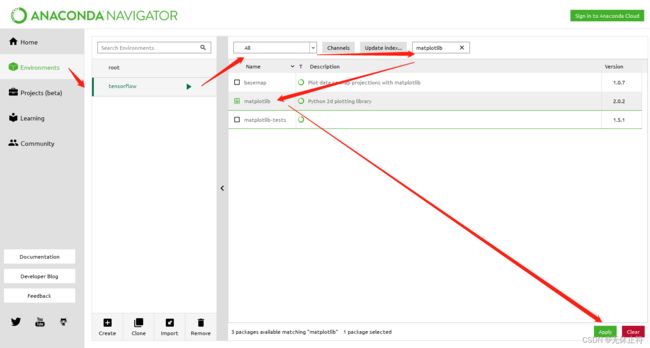

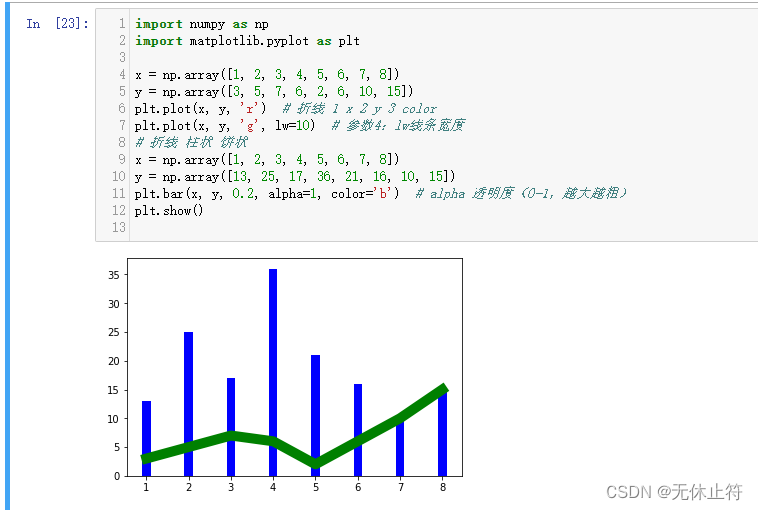

6 - Plotting with matplotlib

- matplotlib can be installed directly in Anaconda

- Plotting with matplotlib

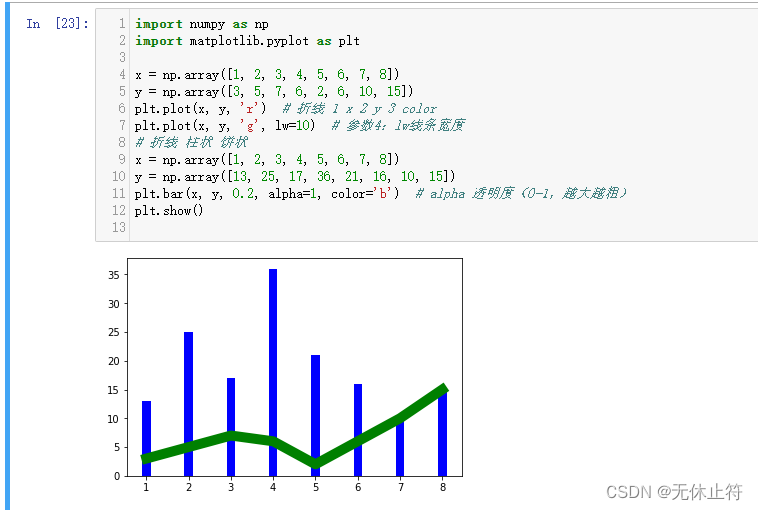

plt.plot: draws a line chart

plt.bar: draws a bar chart

import numpy as np
import matplotlib.pyplot as plt
x = np.array([1, 2, 3, 4, 5, 6, 7, 8])
y = np.array([3, 5, 7, 6, 2, 6, 10, 15])
plt.plot(x, y, 'r')         # red line
plt.plot(x, y, 'g', lw=10)  # green line, line width 10
x = np.array([1, 2, 3, 4, 5, 6, 7, 8])
y = np.array([13, 25, 17, 36, 21, 16, 10, 15])
plt.bar(x, y, 0.2, alpha=1, color='b')  # blue bars of width 0.2, fully opaque
plt.show()

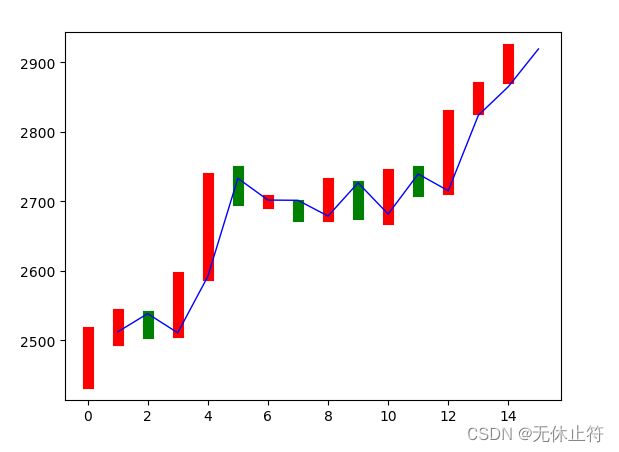

III. Approximating Stock Closing Prices with a Neural Network (Case Study)

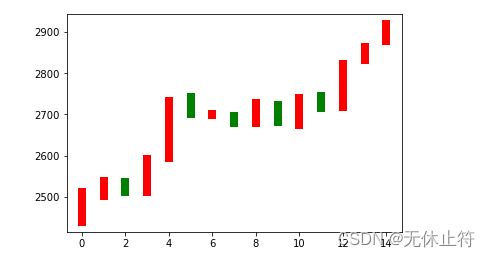

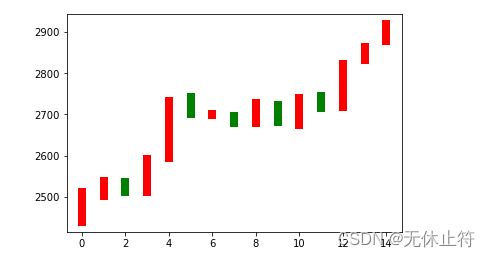

1 - Plotting a 15-Day Stock Candlestick Bar Chart

import numpy as np
import matplotlib.pyplot as plt
date = np.linspace(1, 15, 15)
endPrice = np.array(
    [2511.90, 2538.26, 2510.68, 2591.66, 2732.98, 2701.69, 2701.29, 2678.67, 2726.50, 2681.50, 2739.17, 2715.07,
     2823.58, 2864.90, 2919.08])
beginPrice = np.array(
    [2438.71, 2500.88, 2534.95, 2512.52, 2594.04, 2743.26, 2697.47, 2695.24, 2678.23, 2722.13, 2674.93, 2744.13,
     2717.46, 2832.73, 2877.40])
print(date)
plt.figure()
for i in range(0, 15):
    # One bar per day: a thick vertical segment from the opening price to the closing price.
    dateOne = np.zeros([2])
    dateOne[0] = i
    dateOne[1] = i
    priceOne = np.zeros([2])
    priceOne[0] = beginPrice[i]
    priceOne[1] = endPrice[i]
    if endPrice[i] > beginPrice[i]:
        plt.plot(dateOne, priceOne, 'r', lw=8)  # closed above the open: red
    else:
        plt.plot(dateOne, priceOne, 'g', lw=8)  # otherwise: green
plt.show()

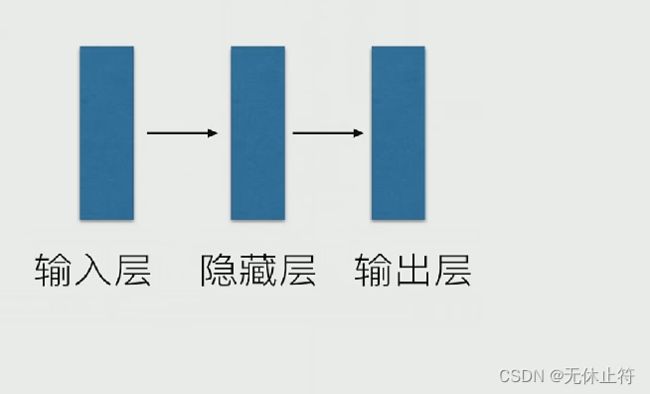

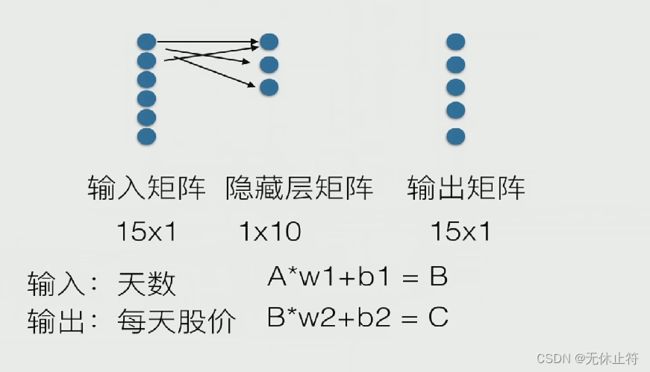

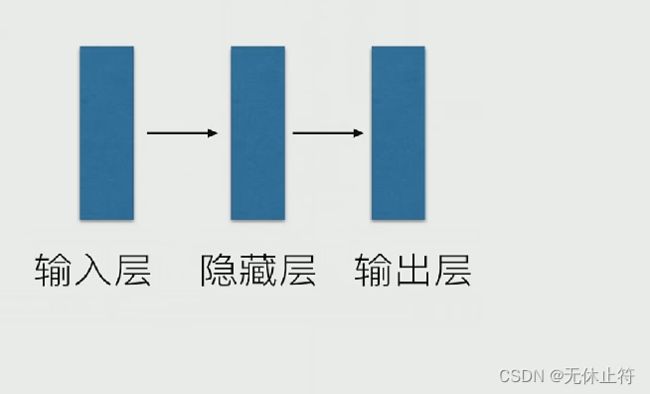
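The coloring rule in the plotting loop can be isolated from matplotlib and checked on the same data. This is a small sketch; candle_color is a hypothetical helper name, and the price lists are copied from the listing above.

```python
end_price = [2511.90, 2538.26, 2510.68, 2591.66, 2732.98, 2701.69, 2701.29, 2678.67,
             2726.50, 2681.50, 2739.17, 2715.07, 2823.58, 2864.90, 2919.08]
begin_price = [2438.71, 2500.88, 2534.95, 2512.52, 2594.04, 2743.26, 2697.47, 2695.24,
               2678.23, 2722.13, 2674.93, 2744.13, 2717.46, 2832.73, 2877.40]


def candle_color(open_price, close_price):
    """Red when the close is above the open, green otherwise (same rule as the loop)."""
    return 'r' if close_price > open_price else 'g'


colors = [candle_color(o, c) for o, c in zip(begin_price, end_price)]
print(colors)
print(colors.count('r'), "rising days,", colors.count('g'), "falling or flat days")
```

Red for a rising day and green for a falling day follows the convention of the listing; many Western charting tools use the opposite colors.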

2 - Introduction to Artificial Neural Networks

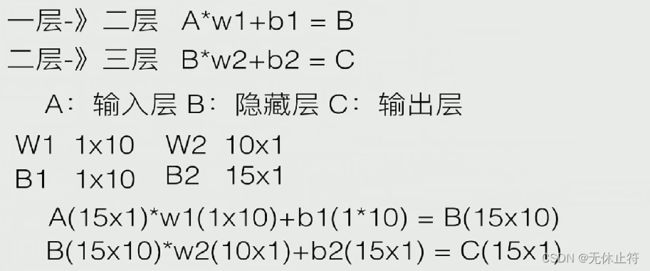

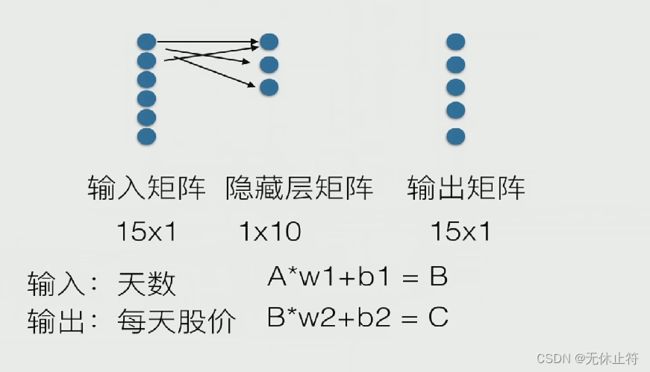

- The three layers of an artificial neural network: input layer, hidden layer (middle layer) and output layer

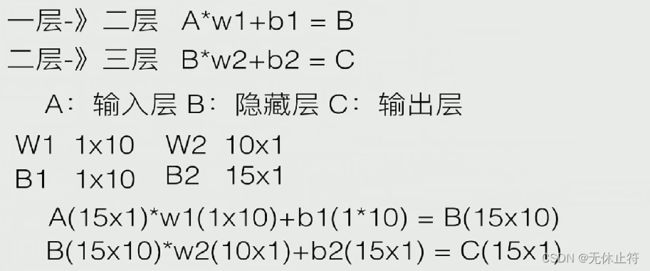

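- Transformation formulas between the three layers

- A is the input matrix, B is the hidden matrix, and C is the output matrix

- w is a weight matrix

- b is a bias matrix

- Matrix dimension analysis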

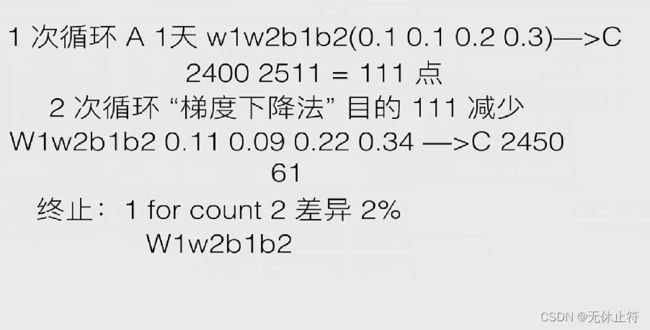

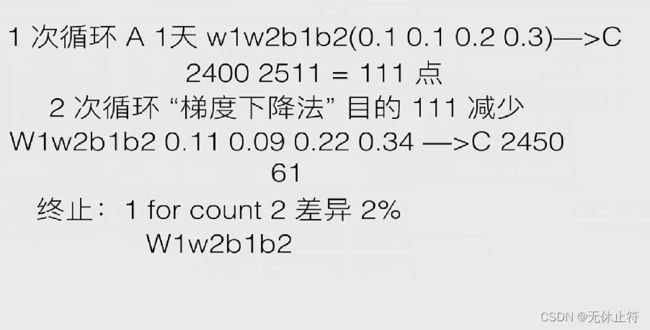
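Assuming the figures describe the same network that the Implementation listing builds (15 samples, 1 input feature, 10 hidden units, 1 output, and a ReLU after each layer), the two transformations and their dimensions can be written as below. This is a reconstruction from the code, not a transcription of the figures.

```latex
\begin{aligned}
B &= \operatorname{relu}(A\,w_1 + b_1), &\quad (15 \times 1)(1 \times 10) + (1 \times 10) &\rightarrow 15 \times 10 \\
C &= \operatorname{relu}(B\,w_2 + b_2), &\quad (15 \times 10)(10 \times 1) + (15 \times 1) &\rightarrow 15 \times 1
\end{aligned}
```

The inner dimensions of each product must match, and the 1 × 10 bias b_1 is added to every one of the 15 rows of A w_1.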

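- Gradient descent: used to find the final values of w1, w2, b1 and b2

- Two stopping conditions: stop after a fixed number of iterations, or stop once the percentage difference from the true values is small enough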
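Both stopping conditions can be seen in a small gradient descent loop. The sketch below fits a straight line y = w·x + b rather than the two-layer network of this case study, and the function name, learning rate, tolerance and toy data are all assumptions chosen for illustration.

```python
def gradient_descent(xs, ys, learning_rate=0.1, max_steps=10000, tolerance=0.01):
    """Fit y = w * x + b by gradient descent on the mean squared error.

    Stops after max_steps iterations, or earlier once the mean relative
    error against the true values drops below tolerance.
    """
    w, b = 0.0, 0.0
    n = len(xs)
    for step in range(1, max_steps + 1):
        preds = [w * x + b for x in xs]
        # Gradients of mean((pred - y)^2) with respect to w and b.
        grad_w = sum(2 * (p - y) * x for p, y, x in zip(preds, ys, xs)) / n
        grad_b = sum(2 * (p - y) for p, y in zip(preds, ys)) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
        rel_error = sum(abs((w * x + b) - y) / abs(y) for x, y in zip(xs, ys)) / n
        if rel_error < tolerance:
            return w, b, step, "tolerance reached"
    return w, b, max_steps, "step limit reached"


xs = [0.0, 0.25, 0.5, 0.75, 1.0]
ys = [1.0, 1.5, 2.0, 2.5, 3.0]  # points on the line y = 2x + 1
w, b, steps, reason = gradient_descent(xs, ys)
print(round(w, 2), round(b, 2), steps, reason)
```

The Implementation listing uses only the first condition: it always runs a fixed 10000 training steps.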

3 - Implementation

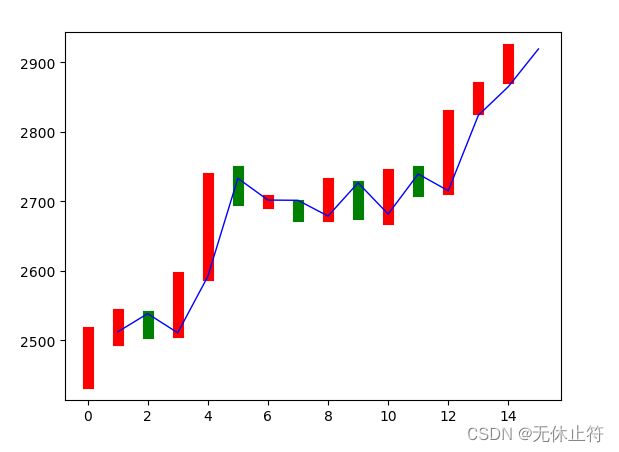

- Result: the blue line is the predicted closing price, which is fairly close to the actual closing price

import tensorflow as tf  # TensorFlow 1.x graph/session API
import numpy as np
import matplotlib.pyplot as plt
date = np.linspace(1, 15, 15)
endPrice = np.array(
    [2511.90, 2538.26, 2510.68, 2591.66, 2732.98, 2701.69, 2701.29, 2678.67, 2726.50, 2681.50, 2739.17, 2715.07,
     2823.58, 2864.90, 2919.08]
)
beginPrice = np.array(
    [2438.71, 2500.88, 2534.95, 2512.52, 2594.04, 2743.26, 2697.47, 2695.24, 2678.23, 2722.13, 2674.93, 2744.13,
     2717.46, 2832.73, 2877.40])
print(date)
plt.figure()
for i in range(0, 15):
    # One bar per day: a thick vertical segment from the opening price to the closing price.
    dateOne = np.zeros([2])
    dateOne[0] = i
    dateOne[1] = i
    priceOne = np.zeros([2])
    priceOne[0] = beginPrice[i]
    priceOne[1] = endPrice[i]
    if endPrice[i] > beginPrice[i]:
        plt.plot(dateOne, priceOne, 'r', lw=8)  # closed above the open: red
    else:
        plt.plot(dateOne, priceOne, 'g', lw=8)  # otherwise: green
dateNormal = np.zeros([15, 1])
priceNormal = np.zeros([15, 1])
for i in range(0, 15):
    dateNormal[i, 0] = i / 14.0               # scale the day index to [0, 1]
    priceNormal[i, 0] = endPrice[i] / 3000.0  # scale the price to below 1
x = tf.placeholder(tf.float32, [None, 1])  # input: normalized day
y = tf.placeholder(tf.float32, [None, 1])  # target: normalized closing price
w1 = tf.Variable(tf.random_uniform([1, 10], 0, 1))  # hidden layer: 1 input, 10 units
b1 = tf.Variable(tf.zeros([1, 10]))
wb1 = tf.matmul(x, w1) + b1
layer1 = tf.nn.relu(wb1)
w2 = tf.Variable(tf.random_uniform([10, 1], 0, 1))  # output layer: 10 units, 1 output
b2 = tf.Variable(tf.zeros([15, 1]))  # one bias per training row, so exactly 15 rows must be fed
wb2 = tf.matmul(layer1, w2) + b2
layer2 = tf.nn.relu(wb2)
loss = tf.reduce_mean(tf.square(y - layer2))  # mean squared error
train_step = tf.train.GradientDescentOptimizer(0.1).minimize(loss)
with tf.Session() as sess:
    sess.run(tf.global_variables_initializer())
    for i in range(0, 10000):
        sess.run(train_step, feed_dict={x: dateNormal, y: priceNormal})
    pred = sess.run(layer2, feed_dict={x: dateNormal})
predPrice = np.zeros([15, 1])
for i in range(0, 15):
    predPrice[i, 0] = (pred * 3000)[i, 0]  # undo the price normalization
plt.plot(date - 1, predPrice, 'b', lw=1)   # shift to the 0-based x positions used by the bars
plt.show()
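The listing scales both inputs and targets before training and multiplies the predictions by 3000 afterwards. A minimal sketch of that round trip in plain Python follows; the constant and function names are hypothetical, and the divisors 14 and 3000 are the ones used in the listing (3000 is larger than every price in the data, so all normalized prices stay below 1).

```python
PRICE_SCALE = 3000.0  # divisor applied to prices in the listing
LAST_INDEX = 14.0     # index of the last of the 15 days


def normalize(day_index, price):
    """Map a day index and a price to the scaled values fed to the network."""
    return day_index / LAST_INDEX, price / PRICE_SCALE


def denormalize_price(normalized_price):
    """Map a network output back to a price."""
    return normalized_price * PRICE_SCALE


end_price = [2511.90, 2538.26, 2510.68]  # first three closing prices from the listing
for i, price in enumerate(end_price):
    x_norm, y_norm = normalize(i, price)
    print(i, round(x_norm, 4), round(y_norm, 4), round(denormalize_price(y_norm), 2))
```

Keeping inputs and targets in a similar small range is a common way to make gradient descent with a fixed learning rate behave more predictably.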