Notes: Using the TensorFlow Object Detection API to detect objects in your own dataset

The previous post covered how to set up the API and run the official example:

https://blog.csdn.net/qq_45128278/article/details/106469761

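Environment:

When I ran the official example earlier, TensorFlow 1.14 kept failing with:

```
TypeError: load() missing 2 required positional arguments: 'tags' and 'export_dir'
```

I did not solve that, so I switched to 1.15, and the example ran. Later in this post, however, training failed because the tensorflow.contrib library was missing, so I downgraded back to 1.14.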
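Because the post switches between 1.14 and 1.15, it is worth confirming which version is actually installed before training. Below is a minimal sketch that assumes you only care about the major.minor series. Versions must be compared numerically: compared as strings, '1.14.0' sorts before '1.4.0'. The helper names are hypothetical.

```python
import re


def version_tuple(version):
    """Turn '1.14.0' or '2.0.0-rc1' into a tuple of ints such as (1, 14, 0)."""
    parts = []
    for piece in version.split('.'):
        match = re.match(r'\d+', piece)
        if match is None:  # stop at the first non-numeric component
            break
        parts.append(int(match.group()))
    return tuple(parts)


def in_series(version, series=(1, 14)):
    """True if `version` belongs to the given major.minor series, e.g. any 1.14.x."""
    return version_tuple(version)[:len(series)] == tuple(series)


if __name__ == '__main__':
    # Hypothetical usage with TensorFlow installed:
    #   import tensorflow as tf; print(tf.__version__, in_series(tf.__version__))
    print('1.14.0' < '1.4.0')                                # string comparison: True (wrong)
    print(version_tuple('1.14.0') < version_tuple('1.4.0'))  # numeric comparison: False
```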

Preparation:

Install the labelImg annotation tool. See:

https://blog.csdn.net/qq_45128278/article/details/106470242

Labeling the data produces a set of XML files, one per image.

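Converting the XML files to .record

Download https://github.com/datitran/raccoon_dataset. Its scripts convert the XML files to CSV. The CSV is then converted to the .record (TFRecord) format with generate_tfrecord.py, which is also included in that repository.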
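For each XML file, xml_to_csv.py reads every labeled object and writes one CSV row per bounding box. The standard-library sketch below mirrors that step. It assumes the usual labelImg (Pascal VOC) layout, with `filename`, `size`, `object/name` and `object/bndbox`, and it uses the column names from the raccoon_dataset CSV. The function name and the sample file name are hypothetical. Tags are looked up by name, so the code does not depend on the order of the child elements.

```python
import xml.etree.ElementTree as ET

# Same columns as the CSV produced by raccoon_dataset's xml_to_csv.py
COLUMNS = ['filename', 'width', 'height', 'class', 'xmin', 'ymin', 'xmax', 'ymax']


def voc_xml_to_rows(xml_text):
    """Return one row (matching COLUMNS) per <object> in a labelImg annotation."""
    root = ET.fromstring(xml_text)
    filename = root.findtext('filename')
    size = root.find('size')
    width, height = int(size.findtext('width')), int(size.findtext('height'))
    rows = []
    for obj in root.findall('object'):
        box = obj.find('bndbox')
        # int(float(...)) also accepts coordinates written as e.g. "12.0"
        coords = [int(float(box.findtext(tag))) for tag in ('xmin', 'ymin', 'xmax', 'ymax')]
        rows.append((filename, width, height, obj.findtext('name'), *coords))
    return rows


SAMPLE = """<annotation>
  <filename>glass_001.jpg</filename>
  <size><width>640</width><height>480</height><depth>3</depth></size>
  <object>
    <name>glass</name>
    <bndbox><xmin>10</xmin><ymin>20</ymin><xmax>110</xmax><ymax>220</ymax></bndbox>
  </object>
</annotation>"""

if __name__ == '__main__':
    print(voc_xml_to_rows(SAMPLE))
    # [('glass_001.jpg', 640, 480, 'glass', 10, 20, 110, 220)]
```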

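After downloading, edit the main function of xml_to_csv.py:

```python
def main():
    # image_path = os.path.join(os.getcwd(), 'annotations')
    image_path = r'C:\Users\83543\Desktop\xml_train'  # folder with the training XML files
    xml_df = xml_to_csv(image_path)
    xml_df.to_csv('glass_train_labels.csv', index=None)
    print('Successfully converted xml to csv.')
```

Run the script. It writes the CSV file into its own directory. Run it again with the test-set XML folder and glass_test_labels.csv to produce the test CSV.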
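Before editing class_text_to_int in the next step, check exactly which class names ended up in the CSV. A label that was misspelled or capitalized differently in labelImg will not match the if-chain. Here is a small standard-library sketch that assumes the CSV has the `class` column written by xml_to_csv.py. The function name is hypothetical.

```python
import csv
import io
from collections import Counter


def count_classes(csv_file):
    """Count bounding boxes per value of the 'class' column."""
    return Counter(row['class'] for row in csv.DictReader(csv_file))


if __name__ == '__main__':
    # With the real file:
    #   with open('glass_train_labels.csv', newline='') as f: print(count_classes(f))
    demo = io.StringIO(
        'filename,width,height,class,xmin,ymin,xmax,ymax\n'
        'a.jpg,640,480,glass,10,20,110,220\n'
        'a.jpg,640,480,glass,300,40,400,200\n'
        'b.jpg,640,480,Glass,5,5,50,50\n')
    print(count_classes(demo))  # Counter({'glass': 2, 'Glass': 1})
```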

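Next, call generate_tfrecord.py from cmd. It is in the same repository; adjust the arguments to your own paths.

Before running it, edit the code around line 30 of generate_tfrecord.py so that it uses your own label names.

For example (IDs start at 1, and the names must match the labels you set in labelImg):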

```python
# TO-DO replace this with label map
def class_text_to_int(row_label):
    if row_label == 'glass':
        return 1
    else:
        return None
```

Example with several labels:

```python
def class_text_to_int(row_label):
    if row_label == 'glass':
        return 1
    elif row_label == 'fabric':
        return 2
    else:
        return None
```
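With many classes the if/elif chain gets long. Returning None for an unknown label also means the problem typically surfaces only later, when the record is written. Below is a sketch of a drop-in replacement with the same name and signature as the function in generate_tfrecord.py. `LABEL_IDS` is a hypothetical dictionary that you would fill with your own labels.

```python
# Hypothetical mapping: ids must start at 1 and match the label map (.pbtxt).
LABEL_IDS = {'glass': 1, 'fabric': 2}


def class_text_to_int(row_label):
    """Map a CSV class name to its integer id, failing loudly on unknown names."""
    try:
        return LABEL_IDS[row_label]
    except KeyError:
        raise ValueError('Unknown label %r: add it to LABEL_IDS' % row_label) from None
```

A dictionary lookup also makes adding a class a one-line change.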

Then run the script once per CSV file. For the test set:

```
python generate_tfrecord.py --csv_input=glass_test_labels.csv --output_path=glass_test.record --image_dir=C:\Users\83543\Desktop\glass_test
```

It failed:

```
flags = tf.app.flags
AttributeError: module 'tensorflow' has no attribute 'app'
```

Change the code to:

```python
# flags = tf.app.flags
flags = tf.compat.v1.app.flags
```

Another error:

```
File "generate_tfrecord.py", line 101, in <module>
    tf.app.run()
AttributeError: module 'tensorflow' has no attribute 'app'
```

Change the code to:

```python
# tf.app.run()
tf.compat.v1.app.run()
```

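And it failed yet again...

In the end I switched the script's TensorFlow import to the 1.x compatibility API. With this change the two edits above are unnecessary:

```python
# import tensorflow as tf
import tensorflow.compat.v1 as tf
```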

Result:

```
Successfully created the TFRecords: C:\Users\83543\Desktop\raccoon_dataset-master\raccoon_dataset-master\glass_train.record
```
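Inside each record, generate_tfrecord.py stores the box corners divided by the image width and height, so every coordinate lies between 0 and 1. The script reads the width and height from the image file itself. Below is a minimal sketch of that step with an added sanity check. The function name and the check are illustrative and are not part of the script. A box that falls outside the image usually means a CSV row and an image file do not belong together.

```python
def normalize_box(xmin, ymin, xmax, ymax, width, height):
    """Scale pixel box corners to the [0, 1] range used in the TFRecord."""
    box = (xmin / width, ymin / height, xmax / width, ymax / height)
    # Illustrative check (not in generate_tfrecord.py): corners inside the image, positive size
    if not all(0.0 <= v <= 1.0 for v in box) or xmin >= xmax or ymin >= ymax:
        raise ValueError('box %r does not fit a %dx%d image'
                         % ((xmin, ymin, xmax, ymax), width, height))
    return box


if __name__ == '__main__':
    print(normalize_box(50, 25, 150, 75, width=200, height=100))  # (0.25, 0.25, 0.75, 0.75)
```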

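Create the label map file glass-rabel-map.pbtxt. This is the file name that label_map_path points to in the training config and the test script below:

```
item {
  id: 1
  name: 'glass'
}
```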
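The IDs in this file must agree with those in class_text_to_int, and that is easy to break when classes are added by hand. One way to keep the two in sync is to generate the .pbtxt from the same {name: id} dictionary. The sketch below assumes that approach and uses a hypothetical function name. It follows the item/id/name layout shown above and rejects id 0, which the API reserves for the background class.

```python
def make_label_map(label_ids):
    """Render {name: id} as label map text; ids must be unique and start at 1."""
    ids = list(label_ids.values())
    if not ids or min(ids) < 1 or len(set(ids)) != len(ids):
        raise ValueError('ids must be unique integers starting at 1 (0 is the background)')
    items = []
    for name, idx in sorted(label_ids.items(), key=lambda kv: kv[1]):
        items.append("item {\n  id: %d\n  name: '%s'\n}\n" % (idx, name))
    return '\n'.join(items)


if __name__ == '__main__':
    # Hypothetical usage: write the result to glass-rabel-map.pbtxt
    print(make_label_map({'glass': 1, 'fabric': 2}))
```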

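A script that strips the file extension and prints the base name of each .jpg image:

```python
import os

pt = "C:/Users/83543/Desktop/glass"
image_name = os.listdir(pt)
for temp in image_name:
    if temp.endswith(".jpg"):
        # Print the file name without its ".jpg" extension
        print(os.path.splitext(temp)[0])
```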
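The same base-name comparison is a quick way to catch images that were never labeled, or XML files whose image was deleted, before you convert to CSV. This sketch assumes the images are .jpg files and each annotation is a labelImg .xml file with the same base name. The function name is hypothetical. It uses os.path.splitext rather than str.replace, so a ".jpg" that occurs elsewhere in a name is left alone.

```python
import os


def unpaired(image_names, xml_names):
    """Return (images without an XML, XMLs without an image), compared by base name."""
    image_stems = {os.path.splitext(n)[0] for n in image_names if n.lower().endswith('.jpg')}
    xml_stems = {os.path.splitext(n)[0] for n in xml_names if n.lower().endswith('.xml')}
    return sorted(image_stems - xml_stems), sorted(xml_stems - image_stems)


if __name__ == '__main__':
    # Hypothetical folders: unpaired(os.listdir(image_dir), os.listdir(xml_dir))
    print(unpaired(['a.jpg', 'b.JPG', 'c.jpg'], ['a.xml', 'c.xml', 'd.xml']))
    # (['b'], ['d'])
```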

Training

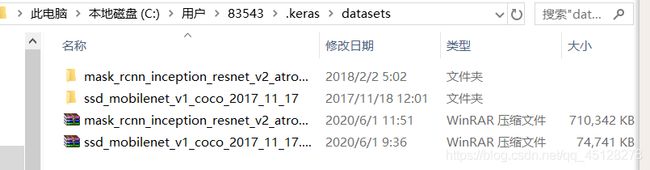

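First download one of the official pretrained models. If you ran the official example before, its last few lines of code already downloaded one. Find where the model is stored before you start. Mine is on drive C, under C:/Users/83543/.keras/datasets/ssd_mobilenet_v1_coco_2017_11_17.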

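1. Copy ssd_mobilenet_v1_coco.config from object_detection/samples/configs to ~/dataset/raccoon_dataset-master/, rename it to ssd_mobilenet_v1_glass.config, and make the following changes. The comments give each setting's line number in the config file.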

```
num_classes: 1  # line 9
fine_tune_checkpoint: "C:/Users/83543/.keras/datasets/ssd_mobilenet_v1_coco_2017_11_17/model.ckpt"  # line 156

# train_input_reader
input_path: "C:/Users/83543/Desktop/raccoon_dataset-master/raccoon_dataset-master/glass_train.record"  # line 175
label_map_path: "C:/Users/83543/Desktop/dataset-glass/glass-rabel-map.pbtxt"  # line 177

# eval_input_reader
input_path: "C:/Users/83543/Desktop/raccoon_dataset-master/raccoon_dataset-master/glass_test.record"  # line 189
label_map_path: "C:/Users/83543/Desktop/dataset-glass/glass-rabel-map.pbtxt"  # line 191
```
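Editing these settings by hand is error-prone because input_path and label_map_path each appear twice, first for training and then for evaluation. Below is a rough sketch that edits the text line by line. It assumes each field sits on its own `key: value` line, as in the sample configs. The function name is hypothetical, and the code is not a protobuf parser; the API's own object_detection/utils/config_util.py, which works on the parsed config, is the more robust route. Use forward slashes in paths, as above, so the quoted strings need no backslash escaping.

```python
import re


def set_config_field(config_text, key, value, occurrence=0):
    """Set the value on the `occurrence`-th (0-based) line that reads `key: ...`."""
    pattern = re.compile(r'^([ \t]*' + re.escape(key) + r':[ \t]*).*$', re.MULTILINE)
    matches = list(pattern.finditer(config_text))
    if occurrence >= len(matches):
        raise ValueError('%s occurs only %d time(s)' % (key, len(matches)))
    match = matches[occurrence]
    # Strings are quoted in the pipeline config; numbers are written bare.
    rendered = '"%s"' % value if isinstance(value, str) else str(value)
    return config_text[:match.start()] + match.group(1) + rendered + config_text[match.end():]


if __name__ == '__main__':
    cfg = 'num_classes: 90\ninput_path: "train"\ninput_path: "eval"\n'
    cfg = set_config_field(cfg, 'num_classes', 1)
    cfg = set_config_field(cfg, 'input_path', 'glass_test.record', occurrence=1)  # eval reader
    print(cfg)
```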

2. Create a ty_train directory under ~/dataset/raccoon_dataset-master/ to hold the training checkpoint files.

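In cmd, change into the object_detection directory and run the command below, adjusting the arguments to your own paths. model_main.py takes the output directory as --model_dir; --train_dir is the flag of the older legacy/train.py.

```
python model_main.py --logtostderr --pipeline_config_path=C:/Users/83543/Desktop/raccoon_dataset-master/raccoon_dataset-master/ssd_mobilenet_v1_glass.config --model_dir=C:/Users/83543/Desktop/raccoon_dataset-master/raccoon_dataset-master/ty_train
```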

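It failed with:

```
import tensorflow.contrib.slim as slim; eval = slim.evaluation.evaluate_once
ModuleNotFoundError: No module named 'tensorflow.contrib'
```

Cause: the versions after 1.15 apparently dropped tensorflow.contrib (it is gone entirely from TensorFlow 2.x), so the library was missing. It only worked after downgrading to 1.14.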

Monitoring with TensorBoard

The tensorboard executable is not in the site-packages folder; it is in D:\120\Python\Python37\Scripts. Change into that directory and run:

```
tensorboard --logdir=C:/Users/83543/Desktop/raccoon_dataset-master/raccoon_dataset-master/ty_train
```

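Testing

The code from the official Jupyter notebook can be reused with a few changes. Here is a script that runs detection on either images or video; just change the paths.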

```python
# -*- coding: utf-8 -*-
import os
import sys
import time

import cv2
import numpy as np
import tensorflow as tf  # TensorFlow 1.x API: tf.Session, tf.GraphDef, tf.gfile
from PIL import Image

# Compare versions numerically: as strings, '1.14.0' < '1.4.0' would be True.
if tuple(int(p) for p in tf.__version__.split('.')[:2]) < (1, 4):
    raise ImportError('Please upgrade your tensorflow installation to v1.4.* or later!')

cv2.namedWindow("frame", cv2.WINDOW_NORMAL)  # resizable window (flag value 0)
cv2.resizeWindow("frame", 640, 480)

# Env setup: work from models/research/object_detection, as the official notebook does,
# and put models/research ("..") on sys.path so the object_detection imports resolve.
os.chdir('D:/120/Python/Python37/Lib/site-packages/tensorflow/models/research/object_detection')
sys.path.append("..")

from object_detection.utils import label_map_util
from object_detection.utils import visualization_utils as vis_util

# Path to the frozen detection graph: the model actually used for detection.
PATH_TO_CKPT = 'C:/Users/83543/Desktop/glass_inference_graph/glass_inference_graph/frozen_inference_graph.pb'
# Label map used to attach the correct class name to each box.
PATH_TO_LABELS = os.path.join('C:/Users/83543/Desktop/dataset-glass/', 'glass-rabel-map.pbtxt')
NUM_CLASSES = 1  # YOUR_CLASS_NUM

# Load the label map.
label_map = label_map_util.load_labelmap(PATH_TO_LABELS)
categories = label_map_util.convert_label_map_to_categories(
    label_map, max_num_classes=NUM_CLASSES, use_display_name=True)
category_index = label_map_util.create_category_index(categories)

# Load the frozen TensorFlow model into memory once, for both images and video.
detection_graph = tf.Graph()
with detection_graph.as_default():
    od_graph_def = tf.GraphDef()
    with tf.gfile.GFile(PATH_TO_CKPT, 'rb') as fid:
        od_graph_def.ParseFromString(fid.read())
    tf.import_graph_def(od_graph_def, name='')


def get_io_tensors(graph):
    """Input and output tensors of the exported detection graph.

    detection_boxes: where each object was detected; detection_scores: the
    confidence for each object, shown on the result image with its class label.
    """
    names = ['image_tensor', 'detection_boxes', 'detection_scores',
             'detection_classes', 'num_detections']
    return [graph.get_tensor_by_name(name + ':0') for name in names]


def run_detection(sess, tensors, image_rgb, **vis_kwargs):
    """Detect objects in an RGB uint8 array and draw the boxes onto it in place."""
    image_tensor, boxes_t, scores_t, classes_t, num_t = tensors
    # The model expects a batch of images with shape [1, None, None, 3].
    boxes, scores, classes, num = sess.run(
        [boxes_t, scores_t, classes_t, num_t],
        feed_dict={image_tensor: np.expand_dims(image_rgb, axis=0)})
    # Visualization of the results of a detection.
    vis_util.visualize_boxes_and_labels_on_image_array(
        image_rgb,
        np.squeeze(boxes),
        np.squeeze(classes).astype(np.int32),
        np.squeeze(scores),
        category_index,
        use_normalized_coordinates=True,
        **vis_kwargs)
    return image_rgb


def detect_and_show(tensors, frame_bgr, sess):
    """Run detection on one BGR video frame and display the result."""
    starttime = time.time()
    image_np = cv2.cvtColor(frame_bgr, cv2.COLOR_BGR2RGB)  # OpenCV frames are BGR; the model wants RGB
    run_detection(sess, tensors, image_np, line_thickness=5, min_score_thresh=.6)
    print("------------use time ====> ", time.time() - starttime)
    # write images: save the detection result here if needed
    cv2.imshow("frame", cv2.cvtColor(image_np, cv2.COLOR_RGB2BGR))


def video_capture(tensors, sess, video_path):
    # 'nopath' means "use the camera"; 0 is the camera index (0 if there is only one).
    cap = cv2.VideoCapture(0 if video_path == "nopath" else video_path)
    i = 1
    try:
        while True:
            ref, frame = cap.read()
            if not ref:
                print("ref == false ")
                break
            i += 1
            if i % 3 == 0:  # run detection on every third frame only
                detect_and_show(tensors, frame, sess)
            else:
                cv2.imshow("frame", frame)
            # Show each frame for 30 ms; press Esc (key code 27) to quit.
            if (cv2.waitKey(30) & 0xff) == 27:
                break
    finally:
        cap.release()


def init_object_detection(video_path):
    with tf.Session(graph=detection_graph) as sess:
        video_capture(get_io_tensors(detection_graph), sess, video_path)


def load_pic(path):
    with tf.Session(graph=detection_graph) as sess:
        tensors = get_io_tensors(detection_graph)
        filelist = os.listdir(path)  # every file in the folder
        print(len(filelist))
        for file in filelist:
            print(file)
            # convert('RGB') so grayscale or RGBA images also yield 3 channels
            image_np = np.array(Image.open(os.path.join(path, file)).convert('RGB'))
            run_detection(sess, tensors, image_np, line_thickness=8)
            # Show the annotated image; press any key for the next one.
            cv2.imshow("pic", cv2.cvtColor(image_np, cv2.COLOR_RGB2BGR))
            cv2.waitKey(0)


if __name__ == '__main__':
    PIC_PATH = "YOUR_PATH"
    VIDEO_PATH = "nopath"  # path of a local video file, or 'nopath' to use the camera

    # Predict on a folder of images
    # load_pic(PIC_PATH)

    # Predict on a video stream
    init_object_detection(VIDEO_PATH)
    cv2.destroyAllWindows()
```
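visualize_boxes_and_labels_on_image_array only draws the results. If you need the detections as data, for example to log or count them, you can read the arrays returned by sess.run directly. In this API each box is [ymin, xmin, ymax, xmax] in normalized coordinates, and category_index maps class ids to {'id', 'name'} dicts. Below is a plain-Python sketch that assumes the squeezed boxes, scores and classes of a single image. The function name is hypothetical, and the 0.6 default simply mirrors the min_score_thresh=.6 the script uses for video.

```python
def detections_to_list(boxes, scores, classes, category_index, width, height, min_score=0.6):
    """Return (name, score, (x1, y1, x2, y2)) in pixels for detections above min_score."""
    results = []
    for (ymin, xmin, ymax, xmax), score, cls in zip(boxes, scores, classes):
        if score < min_score:
            continue
        name = category_index.get(int(cls), {}).get('name', 'N/A')
        pixel_box = (int(round(float(xmin) * width)), int(round(float(ymin) * height)),
                     int(round(float(xmax) * width)), int(round(float(ymax) * height)))
        results.append((name, float(score), pixel_box))
    return results


if __name__ == '__main__':
    demo_index = {1: {'id': 1, 'name': 'glass'}}
    demo_boxes = [[0.1, 0.2, 0.5, 0.6], [0.0, 0.0, 1.0, 1.0]]
    print(detections_to_list(demo_boxes, [0.9, 0.3], [1.0, 1.0], demo_index, width=100, height=200))
    # [('glass', 0.9, (20, 20, 60, 100))]
```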

Finally, here are the download links for the other pretrained models:

https://github.com/tensorflow/models/blob/master/research/object_detection/g3doc/detection_model_zoo.md